TL;DR

In a large-scale survey we found that only 7.4% of New York University students knew what effective altruism (EA) is. At the same time, 8.8% were extremely sympathetic to EA. These students agreed with basic EA ideas when presented with a short introduction, showed interest in learning more about it, and scored highly on our ‘effectiveness-focus’ and ‘expansive altruism’ measures. Interestingly, most of these EA-sympathetic students were unaware of EA: only 14.5% knew about it before the survey. We estimate that, in total, 7.5% of NYU students do not know of EA but would be very sympathetic to the basic ideas. These findings could have important implications for scaling up outreach, but they need to be interpreted carefully.

Note: This post is long because of the Detailed results section. Most readers may just want to focus on the Key results and Conclusions sections.

Key results

We conducted an approximately representative online survey of NYU students (938 participants after exclusions) to investigate their familiarity with and views on EA and existential risk.

Familiarity

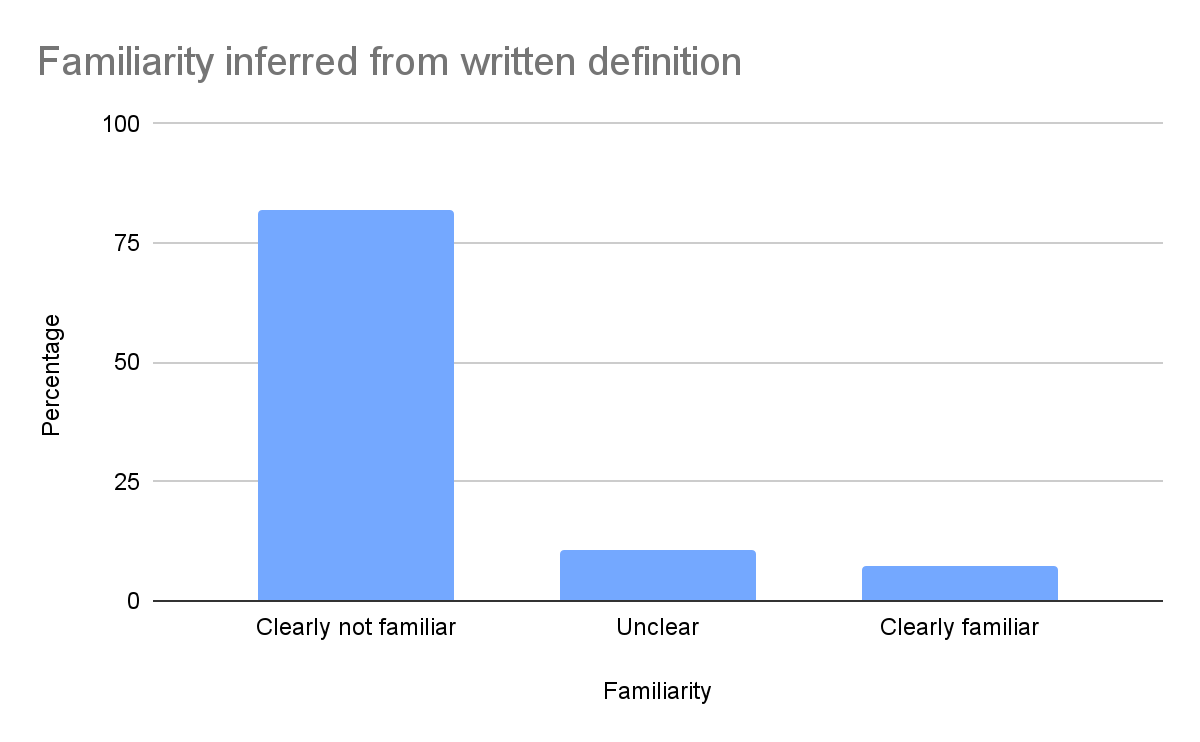

- When asked to explain what EA is, only 7.4% of NYU students could clearly demonstrate that they knew what it is. An additional 10.6% may have heard of it, but their definitions weren’t sufficiently accurate (see below). The remaining 82.1% clearly did not know about EA.

Views on effective altruism

- After reading a short introduction about EA (see below), 25.1% agreed with the key ideas relatively strongly (6 or 7 on a 7-point scale), and 82.1% of those respondents expressed interest in learning more about EA (5 or above on a 7-point scale).

- We found that 8.8% of students were especially sympathetic towards EA: they agreed with the basic principles, wanted to learn more, and scored highly on “effectiveness-focus” and “expansive altruism,” two psychological traits that seem to predict EA engagement.

- (Whenever we mention ‘EA-sympathetic’ students in this post, we mean this group. Please keep in mind that students who didn’t meet this bar didn’t necessarily dislike EA ideas.)
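To make the group definition concrete, here is a minimal Python sketch of how such respondents could be flagged from survey answers. The key point is that all four criteria must hold at once. The cutoffs, the `Respondent` fields, and the function names are hypothetical: the post does not state the exact thresholds used, so treat the numbers as placeholders.

```python
from dataclasses import dataclass

# HYPOTHETICAL cutoffs for illustration only: the post does not state the
# exact thresholds used to define the EA-sympathetic group.
AGREEMENT_MIN = 6         # 1-7 agreement with the EA introduction
INTEREST_MIN = 6          # 1-7 interest in learning more
EFFECTIVENESS_MIN = 5.0   # placeholder cutoff, effectiveness-focus score
EXPANSIVE_MIN = 5.0       # placeholder cutoff, expansive-altruism score


@dataclass
class Respondent:
    agreement: int
    interest: int
    effectiveness_focus: float
    expansive_altruism: float
    knew_ea: bool  # from the manually coded written definition


def is_ea_sympathetic(r: Respondent) -> bool:
    """All four criteria must hold at once (a conjunction, not an average)."""
    return (r.agreement >= AGREEMENT_MIN
            and r.interest >= INTEREST_MIN
            and r.effectiveness_focus >= EFFECTIVENESS_MIN
            and r.expansive_altruism >= EXPANSIVE_MIN)


def is_unreached_sympathetic(r: Respondent) -> bool:
    """EA-sympathetic but did not know of EA before the survey."""
    return is_ea_sympathetic(r) and not r.knew_ea


sample = [
    Respondent(7, 6, 5.5, 6.0, knew_ea=False),  # sympathetic, unreached
    Respondent(7, 7, 6.0, 4.0, knew_ea=False),  # fails expansive altruism
    Respondent(6, 6, 5.0, 5.0, knew_ea=True),   # sympathetic, already knew EA
]
print(sum(is_unreached_sympathetic(r) for r in sample))  # 1
```

Requiring every criterion, rather than averaging scores, is what makes the group small: a respondent who strongly agrees but scores low on one trait is excluded.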

Unreached EA-sympathetic students

- 7.5% were unaware of EA, yet were very sympathetic when first presented with the ideas. Since there are about 50,000 NYU students in total, we estimate there are about 3,800 such students. (Of course, as discussed below, not all of them would become highly engaged EAs. Conversely, some students who are not immediately in agreement with EA may be interested after thinking more about the ideas.)
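The 7.5% and 3,800 figures follow from the TL;DR numbers: 8.8% of students are EA-sympathetic, and 85.5% of them (100% minus 14.5%) did not know of EA. A back-of-the-envelope sketch, assuming the rounded percentages and the approximate 50,000 enrollment figure apply to the whole student body (`unreached_sympathetic` is an illustrative helper name):

```python
def unreached_sympathetic(share_sympathetic: float,
                          share_of_sympathetic_who_knew: float,
                          total_students: int) -> tuple[float, float]:
    """Return (share of all students, implied headcount) who are very
    sympathetic to EA but did not know of it before the survey."""
    share_unreached = share_sympathetic * (1 - share_of_sympathetic_who_knew)
    return share_unreached, share_unreached * total_students


# Rounded inputs from this post; 50,000 is the approximate NYU enrollment.
share, headcount = unreached_sympathetic(0.088, 0.145, 50_000)
print(f"{share:.1%} of students, roughly {headcount:,.0f} people")
# prints "7.5% of students, roughly 3,762 people"
```

Because the inputs are rounded, the result is best read as "about 3,800" rather than a precise count.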

Predictors

- There were few robust demographic predictors of EA agreement. Neither gender, SAT scores, nor most study subjects significantly correlated with it. Exceptions are that students studying arts and media or humanities tended to agree slightly less with EA than other students and that older students tended to agree slightly more. And the most EA-sympathetic students tended to be more politically liberal than other students.

- These results could be seen as good news for attempts to make EA more diverse. They suggest that different groups are sympathetic toward the basic EA ideas. But keep in mind that immediate agreement is not the only predictor of becoming more engaged with EA (see below).

- Older students, those with higher SAT scores, and students studying STEM or social sciences were more likely to already know of EA.

- Unsurprisingly, students with greater intellectual interest in people and resources related to EA (as well as other philosophical topics) tended to agree more with EA and were more likely to know of it.

Reactions to existential risk

- After reading a short introduction about existential risk (see below), 25.7% agreed relatively strongly that existential risk mitigation should be among the most important global problems. Agreement with this view was positively associated with agreement with EA as well as with scores on “effectiveness-focus” and “expansive altruism.”

- After reading the short existential risk introduction that mentioned Toby Ord’s estimate of a 1 in 6 extinction chance this century, 51.3% of participants estimated that the risk of human extinction this century was 10% or greater. Strikingly, 47.9% of this group did not consider existential risk mitigation to be a global priority.

Conclusions

Why are there so many EA-sympathetic students who don’t know of EA yet? And what are the implications of this finding for outreach?

Have we simply not done enough to reach everyone?

The first obvious answer is that most students simply haven’t been reached yet. If true, we may need to massively boost (high-quality) student outreach, making sure that every student who could be sympathetic towards EA ideas finds out about EA.

We find this view pretty plausible. It’s likely correct that student outreach should be scaled a lot. But it's not obvious to us how much student outreach should be increased and in what form. We’ll now discuss some considerations and caveats.

Are there motivational obstacles to deeper EA engagement?

From a naively optimistic view, we might assume that all students who don't know EA yet but would be very sympathetic to it are psychologically (or circumstantially) similar to the typical highly engaged EA (or an earlier version of that person). If so, similar mechanisms that have led existing EAs to become highly engaged would also work for them.

While that may be true for some students who don’t know of EA, it probably isn’t the case for all. It’s quite possible that many EA-sympathetic students at US/UK top universities who don’t know EA yet are, on average, psychologically or circumstantially different from typical highly engaged EAs. In particular, it’s possible that the factors that have prevented them from finding out about EA will also prevent them from getting more engaged once they learn about it. For example, perhaps such students are generally less proactive, intellectually curious, motivated, or well-situated to act upon EA ideas, although they may agree with them (attitude-behavior gap). They may also find the ideas less important relative to other values and interests.

If so, simply boosting the type of outreach that has worked for the currently typical highly engaged EA may not work as well for EA-sympathetic students who are less motivated to get more engaged. To get such students more engaged, if that’s possible at all, we may need to change the type of outreach conducted at universities. For example, perhaps such students need much more frequent in-depth exposure and social proof from different types of people. This is worth exploring further.

Do we miss out on students because they find EA outreach off-putting?

One possibility is that some students are put off by certain aspects of EA outreach and are therefore turned away from EA before finding out what it actually entails. While it is entirely possible that some students have a negative initial impression of the community, certain people, or practices, it’s not clear whether this could explain why so few people know of EA. Since our survey was not designed to answer this question, we hope that future empirical research will investigate it. Needless to say, it’s extremely important that EA outreach is done in a high-quality way that avoids putting people off.

How predictive is immediate agreement with basic EA ideas?

An important task for future research would be to estimate what fraction of the students who don’t yet know of EA but immediately agree with the basic ideas could become highly engaged EAs. In other words, what’s the conversion rate?

Obviously, not everyone who agrees with EA after learning about it for the first time will become highly engaged. That said, we find it relatively plausible to assume that a person who disagrees with our EA introductory text, which mostly focuses on very basic principles and general approaches (see below for the text), is unlikely to become highly engaged. But this assumption isn’t entirely obvious. Some people may be skeptical initially and need more time to warm up to the ideas. It’s even possible that such critically minded people would be particularly valuable to the community. If so, our measures may miss some promising people.

Of course, in addition to agreeing with the basic ideas and being sufficiently motivated to take action, there could be further predictors that are essential to becoming more engaged. For example, some people may find it more natural and appealing to adopt the thinking styles and attitudes (e.g. Scout Mindset) that the EA community cultivates. This is something we aim to explore more in future research.

Detailed results

Overview

Students at top universities are one of the key outreach target audiences for EA. We tried to better understand what they know about and think of EA and existential risk. To do so, we conducted an approximately representative online survey with 1045 students at NYU. Analyses are based on 938 participants (see Methods section for exclusion criteria). For more details about our methodology, see our Methods section at the end of this post.

To our knowledge, this is the first online survey of this scale to measure both familiarity with EA and reactions to a brief description of it. A few earlier studies, conducted by Rethink Priorities and CEA and by the Global Challenges Project, measured familiarity (see the “Are there differences between universities?” section). In part, the present survey is a trial to explore how useful such surveys could be and whether they should be replicated at other universities. We conducted the survey at NYU because we had easy access to the university. When interpreting the results, it’s important to keep in mind that awareness of EA likely differs between universities.

Our main research questions were:

- What fraction of students at this university have already heard about effective altruism?

- How many people have positive initial reactions towards effective altruism?

- How many people have positive initial reactions towards existential risk mitigation?

- What factors, such as demographics, study subjects, and intellectual interests predict positive attitudes towards effective altruism?

General disclaimer: Since we report so many results and did not formally state our hypotheses in advance, there are likely some false positives. This possibility is particularly relevant for some of the significant but weak correlations, e.g., with study subjects. We hope to follow up on some of these relationships in future studies. It’s also possible that some relationships between variables are driven by third variables that we didn’t measure. Please keep this in mind when interpreting the results.

Prior exposure to effective altruism

Familiarity and understanding

We first asked participants whether they had heard of “the school of thought Effective Altruism.” 40.7% said they had heard of it, 45.3% said they had not, and 14.0% said they were not sure. Participants who responded “Yes” or “Not sure” were asked to explain what EA is.

We noticed that many participants responded affirmatively even though they didn’t really know what EA means. Many seemed to say yes because they had heard the term “effective altruism” without knowing anything more about it, or simply because they knew both words separately (see also acquiescence bias). We therefore reviewed participants' written explanations of what EA is and manually coded whether their descriptions demonstrated an accurate understanding. See this footnote[1] for some written examples and our supplementary materials for a list of our coding rules and all responses. Based on this analysis, we found that, of the total sample, only 7.4% clearly knew what EA is. An additional 10.6% showed weak evidence that they knew what EA is but did not give a sufficiently accurate definition. The remaining 82.1% evidently didn’t know what EA is.[2] (Throughout the post, “know EA” refers to this variable based on written definitions, whereas “self-reported familiarity” refers to the 7-point scale variable that we explain next.)

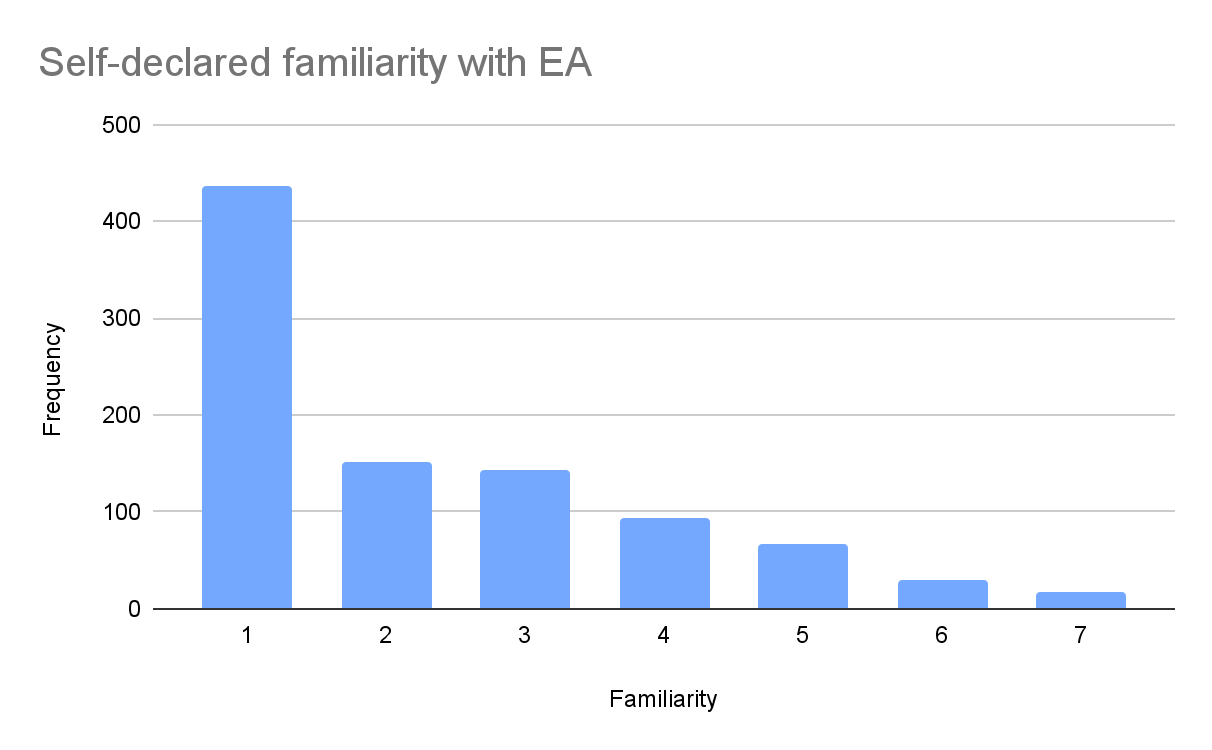

When we asked students explicitly about their familiarity with EA, on a scale from 1 (no familiarity) to 7 (very familiar), the mean response was relatively low at 2.3. 46.5% of participants said they had no familiarity. And only 5.0% indicated considerable familiarity with EA, scoring 6 or 7. This is in line with our finding above that less than 10% know EA.


There are some minor differences in familiarity with EA across age and gender, but these differences are probably quite trivial. First, older students tended to rate their familiarity with EA higher than younger students (r = .13) and, accordingly, were more likely to know EA (r = .10). (Note that all reported correlations throughout the post are statistically significant.) That’s probably explained by the fact that they have had more time to find out about it. Men tended to rate their familiarity with EA (M = 2.5) slightly higher than women (M = 2.2). However, based on participants’ written explanations of EA, we did not find that men were more likely to know what EA was compared to women. Thus, the effect of gender on self-reported familiarity may be an artifact of men being more motivated to express familiarity than women, rather than any actual difference.

There were perhaps more meaningful differences in familiarity based on students’ academic characteristics. First, students with a higher (self-reported) SAT score were more likely to know EA (r = .17). Second, familiarity with EA differed by study subject. Students who studied social sciences (r = .08) and a STEM subject (r = .08) were more likely to know EA (for each correlation we report, we are comparing students in the specified field to students from all other fields combined). By contrast, students who studied arts and media were less likely to know EA (r = -.08). Looking more specifically at majors, students who studied psychology (r = .08), political science (r = .11), and computer or data science (r = .11) were more likely to know EA. Aside from that, there were no further statistically significant correlations between EA-familiarity and study subject categories or majors.[3]

Involvement

Although it is possible that we oversampled or undersampled students who were familiar with EA, outside data on EA involvement suggests the sample was approximately representative. We checked this by comparing the proportion of surveyed students who reported active involvement with EA to estimates of active involvement from the NYU EA group. In our survey, 1.9% of participants said they had previously attended an event at NYU about EA, and 1.4% said, in response to a yes/no question, that they had participated in the NYU EA group or fellowship. To confirm they were actually part of the group, we asked these participants to describe their involvement. Only 8 of the 13 described actually being in the group or fellowship; the others gave responses indicating they weren’t (e.g., they were only on the email list or had made one donation). This suggests only 0.85% of our sample was part of the EA group or fellowship.

According to the NYU EA group, there are 92 fellows this spring term (both undergrad and postgrad) and there were 19 last semester. Based on these numbers, we estimate that there were about 20 students each semester in previous years, as the group was smaller during the onset of the Covid-19 pandemic. If the academic years of students in the group were evenly distributed, around 175 past fellows or EA group participants would still be enrolled at NYU. Given the total NYU enrollment of 51,123, the percent of the NYU population involved with EA is roughly 0.3% (175 / 51,123 ≈ 0.34%). That’s smaller than the 0.85% in our survey who participated in the NYU EA group/fellowship. Overall, this analysis provides some (imperfect) evidence that active effective altruists are neither hugely over- nor underrepresented in our sample, though they may be slightly overrepresented. This matters for our purpose of estimating what fraction of students know of EA and are sympathetic to it.

One measure appeared to undermine this reassuring conclusion, but in hindsight, it was probably a poor measure. Specifically, 14.7% of participants already considered themselves a "member of the Effective Altruism community." We believe that participants likely misinterpreted this question, given that only 7.4% actually knew EA. They may have responded positively simply to signal that they support the idea of being altruistic in an effective way.
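The involvement check above reduces to comparing a few shares. A quick sketch using the figures reported in this section (175 is the post’s own rough estimate of participants still enrolled, not a count); note that 175 / 51,123 works out to about 0.34%:

```python
# Figures reported in this post.
N_ANALYSED = 938
said_yes_to_group = 13           # yes/no question about the NYU EA group/fellowship
confirmed_in_free_text = 8       # free-text answers consistent with real participation
est_participants_enrolled = 175  # rough estimate of past/current participants still enrolled
nyu_enrollment = 51_123

self_report_share = said_yes_to_group / N_ANALYSED              # ~1.4%
survey_share = confirmed_in_free_text / N_ANALYSED              # ~0.85%
population_share = est_participants_enrolled / nyu_enrollment   # ~0.34%

for label, value in [("said yes", self_report_share),
                     ("confirmed in free text", survey_share),
                     ("population estimate", population_share)]:
    print(f"{label:>24}: {value:.2%}")
```

The free-text check roughly halves the self-reported figure, which is why it matters for the comparison with the population estimate.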

Additionally, for comparison, we asked participants whether they had heard of other schools of thought such as utilitarianism and longtermism. See our findings in this footnote.[4]

Reactions to effective altruism

In a section that appeared later in the survey, we presented participants with a one-page introduction to EA. The introduction text (see full text in this footnote[5]) explained basic EA principles and approaches. Participants then responded to some follow-up questions.

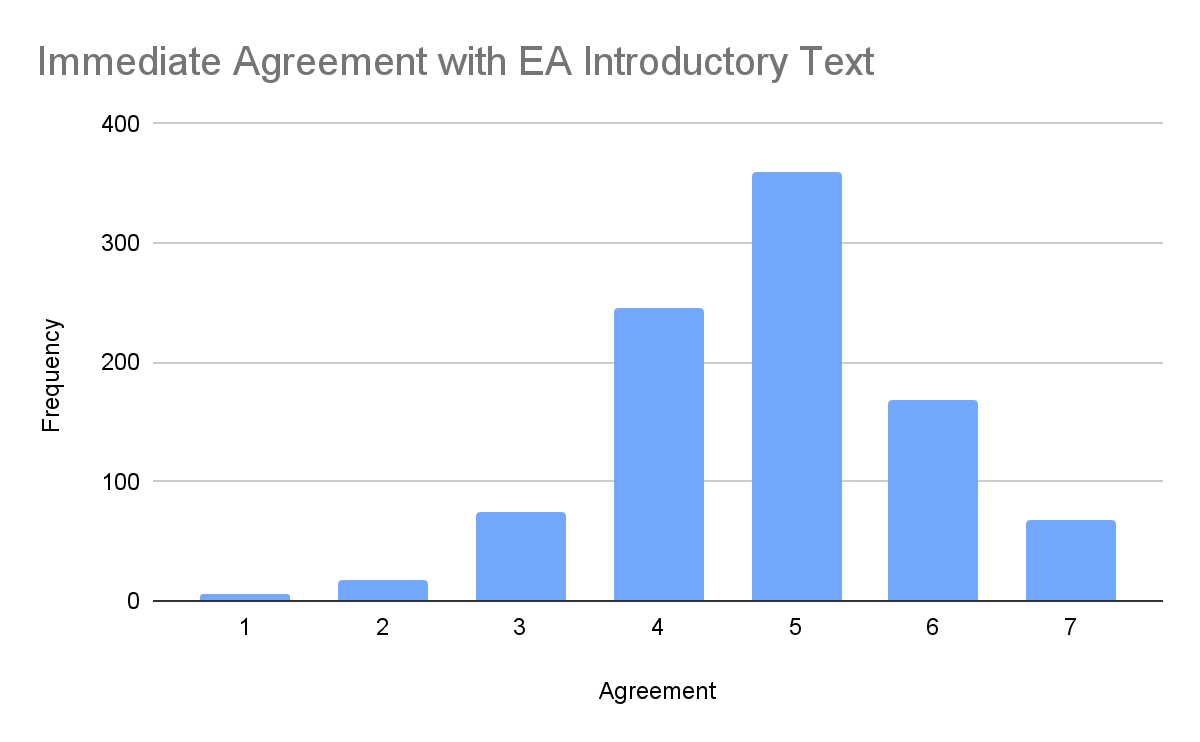

Initial agreement

When asked how much they agreed or disagreed with the ideas of EA, the mean score was 4.8 on a scale from 1 (strongly disagree) to 7 (strongly agree). 25.1% of participants scored 6 or 7, and 7.1% scored 7.[6]

It seems plausible to us that someone who disagrees with the described ideas is unlikely to become a highly engaged EA. But of course, not everyone who immediately agrees will necessarily become highly engaged either. For one, responses will depend heavily on how correctly and comprehensively the ideas are described, and our text isn’t perfect. Furthermore, several other factors, in addition to agreement, are likely required to turn someone into a highly engaged EA. We address this more in the Conclusion section above.

For recruitment purposes, we might want to know the following: of people who agree with EA, how many had already been exposed to it? If this number is low enough, it suggests considerably more room for outreach. Indeed, of the 235 participants (25%) who scored 6 or 7, only 25 clearly knew EA. And of the 67 (7%) who scored 7, only 6 knew what EA was. In other words, roughly 90% of the NYU students who relatively strongly agreed with EA after reading a one-page introductory text did not know what EA was before the survey.
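The “roughly 90%” figure can be checked directly from the counts above. A minimal sketch (`share_unaware` is just an illustrative helper name):

```python
def share_unaware(group_size: int, knew: int) -> float:
    """Share of a group that did not know EA before the survey."""
    return (group_size - knew) / group_size


N_ANALYSED = 938
strong = share_unaware(235, 25)  # agreement 6 or 7
top = share_unaware(67, 6)       # agreement 7

print(f"scored 6-7: {235 / N_ANALYSED:.1%} of sample, {strong:.1%} unaware")
print(f"scored 7:   {67 / N_ANALYSED:.1%} of sample, {top:.1%} unaware")
```

Both subgroups land near 90% (about 89% and 91%), so the conclusion doesn’t depend on which agreement cutoff is used.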

Also relevant for recruitment purposes: exposure to EA may increase people’s favorability towards it, although our evidence for this is only correlational. Specifically, we found that prior exposure to EA was associated with positive attitudes towards it. Participants who rated their familiarity with EA higher also tended to agree more with EA (r = .15). In line with that, participants who knew EA tended to agree more with it (r = .07). We found that 75.4% of those who knew EA agreed with EA (scored above the midpoint). By contrast, only 8 of those who knew EA disagreed with it (scored below the midpoint). Of course, a very plausible alternative explanation is that those who are likely to be more sympathetic towards EA are more likely to have already found out about it.

Older students were slightly more likely to agree with EA than younger students (r = .09). In a mediation analysis, we found that the positive effect of age on EA agreement was mediated by whether or not participants knew of EA (a weak but significant indirect effect). This provides some (weak) evidence for the hypothesis that exposure to EA can improve people’s views of EA. But again, since this finding is only correlational, there could be alternative explanations. We hope that future research will test this experimentally.

Other student characteristics largely did not predict agreement with EA, except that students in certain study subject areas agreed less. Agreement with EA did not correlate with gender, political orientation, or (self-reported) SAT scores. Students who studied arts and media (r = -.10) and humanities (r = -.12) were less likely to agree with EA. Aside from that, there were no further robust correlations with study subject categories and majors. (Note that for all these analyses, we compare students who study a certain subject/major against all other students.)

As well as the quantitative measure of agreement, we also asked participants to write down their thoughts about EA, specifically which aspects they do and do not like. Those who scored less than the midpoint on agreement with EA commonly mentioned the following dislikes: personal connections should be part of doing good, there is a lack of empathy, treating all people as equal ignores minority groups, it’s too idealistic and wouldn’t work, and EA is a very Western view. Free responses from all participants can be seen here.

Interest and intentions

When we asked participants whether they would be interested in learning more about EA, the mean score was 4.7 on a scale from 1 (not at all interested) to 7 (very interested). 28.9% of participants scored 6 or 7, and 12.6% scored 7.

Of those who agreed relatively strongly with the EA introduction text (scoring 6 or 7), 56.1% scored 6 or above on interest in learning more, and 82.1% scored 5 or above on interest in learning more.

We then asked participants about their intended future actions. An implausibly high 62.8% said they could imagine donating ten percent of their income to the most effective charities. 42.9% said they could imagine changing their career to do the most good possible. And 56.0% said they would be interested in reading a book about EA. These numbers likely overestimate participants’ actual future behavior, as the behavioral measures described next suggest.

Our behavioral measures indicated a much lower level of interest. When asked whether they would be interested in signing up for a newsletter about EA, 27.0% said yes. At the end of the survey, we presented those who said yes with a link to the EA website where, as we told them, they could sign up for the newsletter. Only 8.7% of the people who said they were interested in the newsletter (so 2.3% of the total sample) clicked on the link. And according to CEA, there were only two new signups with NYU email addresses during that period (constituting a maximum of 0.2% of the total sample).
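The drop-off from stated interest to actual behavior can be expressed as a simple funnel. A sketch using the reported rates; the last step is an upper bound, presumably because the two new NYU signups CEA observed need not have come from survey participants:

```python
N_ANALYSED = 938
said_yes = 0.270           # share of sample who wanted the newsletter
clicked_given_yes = 0.087  # share of those who then clicked the sign-up link
new_signups = 2            # NYU-address signups CEA saw during the survey period

clicked_share = said_yes * clicked_given_yes  # ~2.3% of the whole sample
signup_share_max = new_signups / N_ANALYSED   # at most ~0.2% of the sample

print(f"stated interest {said_yes:.1%} -> clicked {clicked_share:.1%} "
      f"-> signed up <= {signup_share_max:.1%}")
```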

Similarly, those who indicated they wanted to learn more about EA were shown a list of resources at the end of the survey. A low but non-negligible proportion of students clicked on these resources. Of those who were shown resources, 12.3% clicked on at least one of the links. Of the total sample, 1.6% clicked on the EA website link, 1.9% clicked on the 80,000 Hours website link, and 5.7% clicked on the “get a free book” link. There were 17 new signups for the 80,000 Hours newsletter with NYU email addresses during the time period of the survey.

Agreement with EA is positively correlated with all measures of interest and future actions. For some measures of future actions, correlations with agreement are stronger for those who clearly know EA than for those who don't: newsletter (know EA, r = .51; don't know EA, r = .22), read book (know EA, r = .39; don't know EA, r = .19), and change careers (know EA, r = .35; don't know EA, r = .11). One interpretation is that those who already know of EA are generally more likely to act on these ideas. But there could be alternative explanations.

Reactions to existential risk

Next, participants were presented with a brief introduction to existential risk mitigation. The text (see supplementary materials) explained what existential risks to humanity are, that the total risk this century is estimated by some to be 1 in 6, and that human-caused risks (including some examples) are the most severe. The concluding point was that existential risk mitigation may be a key moral priority.

Perceived importance

When asked about the overall agreement with the argument, the mean score was 4.6 on a scale from 1 (strongly disagree) to 7 (strongly agree). 16.4% of participants scored 6 or 7, and 4.8% scored 7. When asked how much they agreed with the claim that reducing existential risk is one of the most important global problems we should focus on (on the same scale), the mean score was 4.6. 25.7% scored 6 or 7, and 11.6% scored 7.

Next, participants were told that some experts believe that the biggest risks of human extinction come from uncontrolled AI, that some believe this risk is real and could happen this century, and therefore one of the most important global problems to work on might be to ensure that AI is developed safely. When asked how plausible they find this argument, the mean score was 3.9 on a scale from 1 (not at all plausible) to 7 (very plausible). 19.2% scored 6 or 7, and 10.4% scored 7.

Neither self-declared familiarity with nor knowledge of EA correlated with greater agreement with the existential risk or AI safety arguments. However, overall agreement with EA (after reading the introductory text) correlated positively with overall agreement with the existential risk argument (r = .35), the view that reducing existential risk is one of the most important global problems (r = .28), and the AI safety argument (r = .15). Note that the EA introductory text did not mention existential risk explicitly, but it did discuss the view that future people matter morally.

Men found existential risk mitigation slightly more important than women (r = -.08; men coded as 0, women as 1). Aside from that, there were no other gender effects, nor were there any noteworthy correlations with age or political orientation. Interestingly, more religious respondents found existential risk mitigation slightly more important (r = .09) and the AI safety argument more plausible (r = .15). (However, religiosity was not normally distributed; the modal response was 0, i.e., non-religious.) Agreement with the existential risk argument did not correlate with self-reported SAT scores.

We found that SAT scores correlated negatively with perceiving the AI risk argument as plausible (r = -.13). This negative correlation holds when controlling for gender and age. It’s unclear how to interpret this. One possibility is that people with high SAT scores are generally more critical and may naturally disagree more with radical views when little information is provided. However, it could be a spurious correlation based on a third, unmeasured predictor. Given the priority of finding highly intelligent people who can work on AI safety, exploring this effect further would be valuable.

Two notable correlations emerged in how students from different fields perceived existential risk. First, students studying computer and data science were more likely to agree with the overall existential risk argument (r = .07), suggesting that those with more expertise in AI might take existential risk somewhat more seriously. Second, despite their relatively low favorability towards EA as a whole (see above), students studying humanities were more likely to see existential risk mitigation as a global priority (r = .08), perhaps reflecting more mainstream concerns about climate change. Future work could explore whether emphasizing such concerns could broaden EA’s appeal to humanities students. Aside from that, there were no robust correlations between study subject categories or specific majors and perceptions of either AI risk or general existential risk.

Extinction estimates

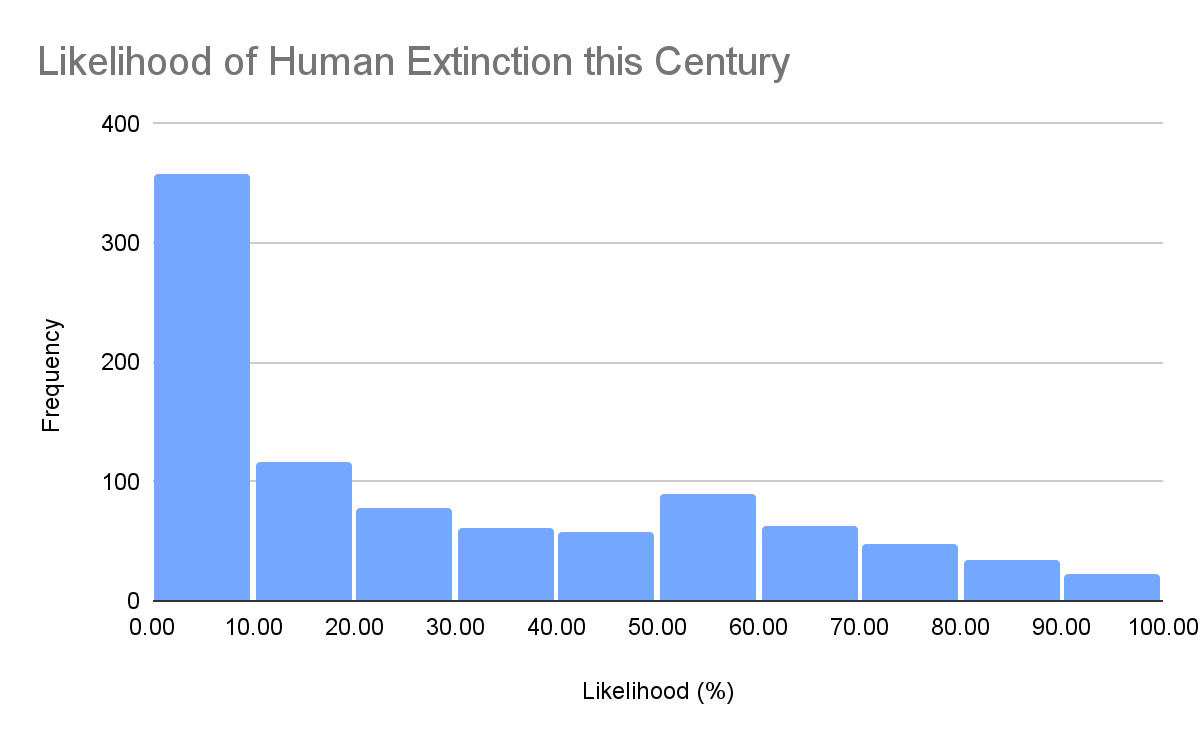

Next, we asked how likely participants personally thought it was that humanity would go extinct before the end of this century. When interpreting these numbers, it’s important to note that participants just before answering these questions had read the text saying that some experts believe there may be a one in six chance of human extinction this century.

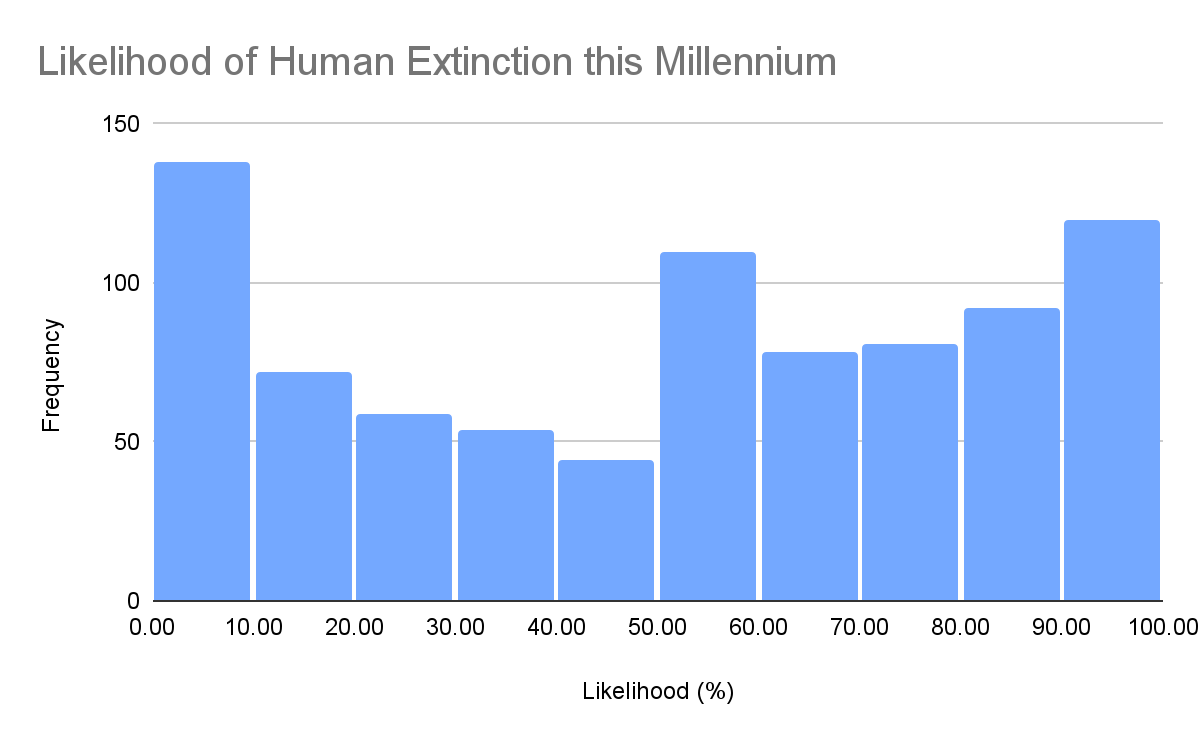

With that caveat in mind, the mean estimate was 27.3%, and the median estimate was 15%. 51.3% of participants (482 in total) estimated that the risk of human extinction this century was 10% or greater. Strikingly, 47.9% of this latter group (which thought the risk was 10% or larger) did not consider existential risk mitigation to be a global priority (i.e., responded below 5 on that question). When asked about the chance of human extinction this millennium, the mean response was 52.9%, and the median response was 52.5%.
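The subgroup statistic above combines two cutoffs: an estimate of at least 10%, then a priority rating below 5 on the 1-7 scale. A minimal sketch of that computation on made-up toy responses (`priority_gap` is a hypothetical helper). It also illustrates that a mean well above the median, as with 27.3% versus 15% here, typically signals a long right tail of high estimates.

```python
from statistics import mean, median


def priority_gap(estimates, priority, threshold=0.10, cutoff=5):
    """Return (share of respondents with estimate >= threshold,
    share of that subgroup rating x-risk priority below cutoff on 1-7)."""
    high = [p for e, p in zip(estimates, priority) if e >= threshold]
    if not high:
        raise ValueError("no respondents at or above the threshold")
    share_high = len(high) / len(estimates)
    share_not_priority = sum(p < cutoff for p in high) / len(high)
    return share_high, share_not_priority


# Toy responses (made up): extinction-risk estimates and 1-7 priority ratings.
estimates = [0.05, 0.15, 0.50, 0.10, 0.02, 0.30]
priority = [3, 6, 4, 2, 5, 7]

share_high, share_not_priority = priority_gap(estimates, priority)
print(share_high, share_not_priority)       # 0.666... and 0.5
print(mean(estimates) > median(estimates))  # True: a long right tail
print(round(0.479 * 482))                   # reported rates: about 231 people
```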

It’s very likely that the text participants read before answering the questions influenced their beliefs. But it’s still remarkable that many participants have adopted the claim, even though it’s not clear whether their reported numbers reflect their true beliefs. (We are exploring these findings in follow-up studies.)


Views on career choice and cause areas

In the following section, we asked participants about their potential interest in dedicating their careers to a range of EA-related activities and cause areas. Specifically, we said:

“Assuming you had the skills to help, would you be potentially interested in dedicating your career to any of the following activities/cause areas? Note, there are many types of careers that contribute to each cause, including technical research, policy, advocacy, fundraising, operations management, etc.”

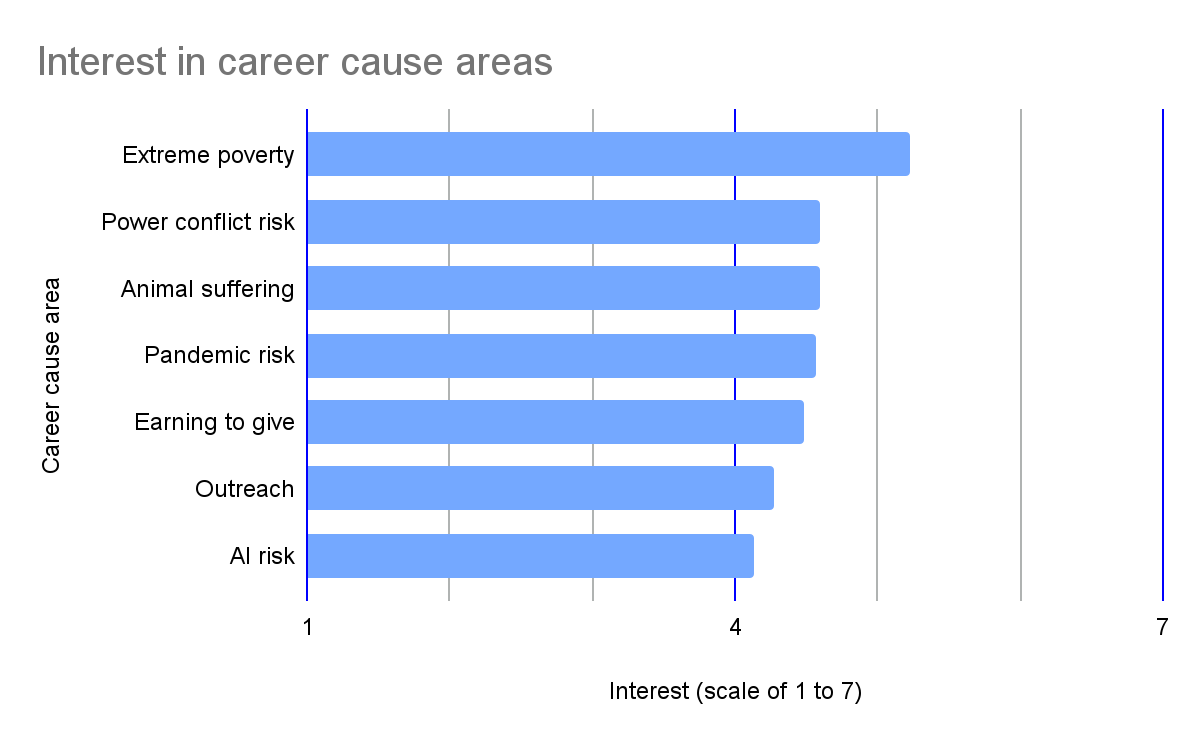

Participants then responded on a scale from 1 (definitely not interested) to 7 (definitely interested) for each of the following career options: reducing risk from AI (artificial intelligence), reducing risk from engineered pandemics, reducing risk from great power conflict, reducing animal suffering on factory farms, reducing extreme poverty in low income countries, empowering and persuading others to donate to or work on the most effective causes, and choosing an extremely lucrative career path (e.g. entrepreneurship) in order to donate very large sums (e.g., 100s of millions of dollars) to effective causes.

The results are shown in the figure above. On average, students tended to be more interested than not in all career paths. They were most interested in working on reducing extreme poverty in low income countries and least interested in working on AI safety. (Note that these questions were asked after participants had read the EA introduction and the existential risk argument.)

Overall agreement with EA after reading the introductory text as well as overall agreement with the existential risk argument both correlated positively with interest in all of the career paths (r between .17 and .29).

We analyzed how students’ demographics predicted their interest in career paths, with some interesting results. The results for age were hard to interpret but perhaps worth exploring further: older students were more interested in working on AI safety (r = .11) or in earning-to-give (r = .08). There appeared to be some gender differences. Men were more interested in working on reducing AI risk (r = -.16), pandemic risk (r = -.09), and in earning-to-give (r = -.12). Women were more interested in working on reducing animal suffering (r = .07), extreme poverty (r = .11), and in outreach (r = .07). Finally, political orientation mattered. The more politically liberal students were, the more interested they were in working on reducing extreme poverty (r = -.13) and the less interested in working on reducing AI risk (r = .10) or pandemic risk (r = .09). These results suggest that different cause areas might appeal to people with different political ideologies, giving EA as a whole relatively broad reach (although, given the political polarization around the Covid-19 pandemic in the US, the pandemic result is somewhat surprising).

Some students’ career interests seem to reflect the interests and abilities associated with their major. For example, STEM students were more interested in AI risk (r = .23), pandemic risk (r = .26), and earning-to-give (r = .12). Arts and media students were less interested in AI risk (r = -.09), pandemic risk (r = -.12), and earning-to-give (r = -.09), and humanities students were also less interested in AI risk (r = -.15), pandemic risk (r = -.13), and earning-to-give (r = -.17). Some correlations between majors and career interests were harder to interpret. Business students were less interested in pandemic risk (r = -.10), animal suffering (r = -.08), and poverty (r = -.07). Health students were less interested in AI risk (r = -.11) and power conflict (r = -.07). Social science students were less interested in pandemic risk (r = -.13). Correlations between career cause areas and specific majors can be found in this footnote.[7] (Note that we only report correlations that were statistically significant.)
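Because the sample is large, even small correlations can reach statistical significance. As a rough sketch, the snippet below converts r to a t statistic and approximates the two-sided p-value with the standard normal tail, which is close to the t distribution at roughly 936 degrees of freedom. It assumes the full analyzed sample of n = 938 and a standard test of a Pearson correlation. The actual tests are in the supplementary materials, and some analyses used subsamples. The snippet therefore only illustrates the order of magnitude.

```python
import math


def correlation_p_value(r: float, n: int) -> float:
    """Approximate two-sided p-value for a Pearson correlation r from n pairs.

    Converts r to t = r * sqrt((n - 2) / (1 - r**2)) and uses the standard
    normal tail (via math.erfc) in place of the t distribution, which is a
    close approximation for large n.
    """
    t = r * math.sqrt((n - 2) / (1 - r ** 2))
    return math.erfc(abs(t) / math.sqrt(2))


def critical_r(n: int, z: float = 1.96) -> float:
    """Smallest |r| reaching the two-sided .05 level under the same approximation."""
    # Solve r * sqrt((n - 2) / (1 - r**2)) = z for r.
    return z / math.sqrt(n - 2 + z ** 2)


n = 938  # full analyzed sample (assumption: some analyses used fewer participants)
for r in (0.06, 0.07, 0.10):
    print(f"r = {r:.2f}: p = {correlation_p_value(r, n):.3f}")
print(f"critical |r| = {critical_r(n):.3f}")
```

Under these assumptions, the cutoff for p < .05 is about |r| = .064. This appears consistent with correlations of .07 being reported as significant and the r = -.06 comparison in the EA-sympathetic section being reported as not significant.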

Next, we asked participants how excited different cause areas would make them to join the EA community on a scale from 1 (definitely not interested) to 7 (definitely interested). We found that excitement to join the EA community to “reduce extreme poverty in low income countries” (M = 5.1) was significantly higher than to “improve the very long-term future of humanity” (M = 4.9), which in turn was significantly higher than to “reduce human extinction risk” (M = 4.5) as well as to “reducing animal suffering on factory farms” (M = 4.5). We think it’s noteworthy that the ‘long-term future’ framing performed better than the ‘existential risk’ framing, even though it’s not clear whether we can infer anything action-guiding from just this result (see related longtermism vs xrisk framing discussion).

Proto-EA intuitions

Participants also completed the proto-EA scale, which measures two psychologically distinct moral components of EA: "effectiveness-focus" (the E) and "expansive altruism" (the A). Previous studies show the predictive power of this scale. For example, we found that both components equally strongly predicted the extent to which existing effective altruists self-identify with EA.

On a scale from 1 (strongly disagree) to 7 (strongly agree), our sample of NYU students had a mean effectiveness-focus score of 4.2 and a mean expansive altruism score of 4.6. The two scores were roughly normally distributed, and their correlation was r = .24. This means that NYU students scored very similarly on these measures to our (MTurk) sample of the general US population in a previous study (see post linked above). These measures predicted students’ attitudes as expected. Overall agreement with EA correlated positively with both effectiveness-focus (r = .34) and expansive altruism (r = .29). Similarly, overall agreement with the existential risk argument correlated positively with effectiveness-focus (r = .17) and expansive altruism (r = .28). Finally, interest in learning more about EA after reading the introductory text correlated positively with both effectiveness-focus (r = .17) and expansive altruism (r = .30).

Expansive altruism was also positively associated with the choice to donate the $20 payment for completing this study to GiveWell instead of personally receiving a gift card (r = .11). There was no association between effectiveness-focus and donating.

The following table shows the proportion of participants who scored at or above a certain bar on both effectiveness-focus and expansive altruism. We used three different bars: 4, 5, and 6. Recall that 4 was the midpoint (neither agree nor disagree), which means that any score above 4 indicates a positive tendency towards EA values.

| Group | Sample size | 4 and above | 5 and above | 6 and above |
|---|---|---|---|---|
| NYU students | 938 | 51.5% | 11.8% | 1.7% |
| US general population | 534 | 49% | 14.0% | 3% |
| Effective altruists | 226 | 95% | 81% | 33% |

Note: The lower two studies are reported in a previous forum post.
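The proportions in the table count a respondent only if both scale scores meet the bar. The sketch below shows that calculation. It assumes each respondent has one mean score per scale on the 1 to 7 range, and the five score pairs are invented for illustration.

```python
from typing import Sequence, Tuple


def share_meeting_both(scores: Sequence[Tuple[float, float]], bar: float) -> float:
    """Fraction of respondents whose effectiveness-focus AND expansive
    altruism scores are both at or above `bar`."""
    if not scores:
        raise ValueError("no scores given")
    hits = sum(1 for eff, alt in scores if eff >= bar and alt >= bar)
    return hits / len(scores)


# Invented (effectiveness_focus, expansive_altruism) pairs, not survey data.
sample = [(4.2, 4.6), (5.5, 5.1), (6.3, 6.0), (3.8, 5.2), (5.0, 4.9)]
for bar in (4, 5, 6):
    print(f"{bar} and above: {share_meeting_both(sample, bar):.0%}")
```

Requiring both scores to clear the bar is stricter than either score alone. For example, the pair (5.0, 4.9) counts at bar 4 but not at bar 5.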

Unlike attitudes towards EA, prior knowledge of EA was associated with neither effectiveness-focus nor expansive altruism scores. Of the 111 participants (11.8%) who scored 5 or above on both measures, only 13 clearly knew EA. In other words, roughly 88% of the NYU students who are likely sympathetic towards EA in terms of these psychological measures did not clearly know what EA is.

Demographics and areas of study were somewhat predictive of scores on expansive altruism and effectiveness-focus. Consistent with our previous study with the US general population, women scored slightly higher on expansive altruism (r = .07), whereas men scored slightly higher on effectiveness-focus (r = -.12). In contrast to that previous study, there were no correlations between age and the proto-EA measures. This is likely because the students were generally young, with a narrower age range. Politically liberal participants scored higher on expansive altruism (r = -.14), whereas effectiveness-focus did not correlate with political orientation. Religious participants also scored higher on expansive altruism (r = .16), whereas effectiveness-focus did not correlate with religiosity. Self-reported SAT scores didn’t correlate with effectiveness-focus, but they correlated negatively with expansive altruism (r = -.12). This negative correlation between SAT scores and expansive altruism is fully explained by the fact that, in our sample, women score higher than men on expansive altruism but lower, on average, on the SAT.[8] Studying psychology correlated positively with expansive altruism (r = .08). Studying business correlated negatively with expansive altruism (r = -.08). Studying math correlated negatively with expansive altruism (r = -.09). Otherwise, there were no significant correlations between study subjects and the two scales.

Expected value reasoning

We presented participants with three expected value (EV) tasks in which they chose between two policies for saving lives, one with a higher EV and one with a lower EV. For example, one task asked participants to choose either "Policy A: Will save 1,000 lives with certainty" or "Policy B: Will save 10,000 lives with a 50% chance and will save no one with a chance of 50%." A total EV score was calculated by adding the score on each individual task (for each individual task selecting the lower EV policy = 0 and higher EV policy = 1; total EV score ranged from 0 to 3).
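The scoring rule above can be written out directly. In the example task, Policy A has an expected value of 1 × 1,000 = 1,000 lives and Policy B has 0.5 × 10,000 + 0.5 × 0 = 5,000 lives, so choosing B scores 1. The sketch below assumes each policy can be described by a number of lives saved and a probability, with zero lives saved otherwise. The `Policy` class is hypothetical. The scores for the other two tasks are also made up, since the post does not list those tasks.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """A life-saving policy: saves `lives_saved` with `probability`, else no one."""
    lives_saved: float
    probability: float

    def expected_value(self) -> float:
        # The zero-lives outcome contributes nothing to the expectation.
        return self.lives_saved * self.probability


def task_score(chosen: Policy, alternative: Policy) -> int:
    """Score one task: 1 if the chosen policy has the higher EV, else 0."""
    return 1 if chosen.expected_value() > alternative.expected_value() else 0


policy_a = Policy(lives_saved=1_000, probability=1.0)   # EV = 1,000 lives
policy_b = Policy(lives_saved=10_000, probability=0.5)  # EV = 5,000 lives

# A hypothetical respondent who picks Policy B on this task. The scores for
# the other two tasks (0 and 1) are made up for illustration.
task_scores = [task_score(policy_b, policy_a), 0, 1]
total_ev_score = sum(task_scores)  # between 0 and 3
print(total_ev_score)
```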

The average total EV score was 1.4. Total EV score was positively associated with effectiveness-focus (r = .08) but not with expansive altruism. The only other variables that correlated with total EV score were gender and certain study subjects. Men tended to score higher than women (r = -.15). Studying business (r = .10) and STEM (r = .08) correlated positively with higher EV scores, whereas studying health fields (r = -.14) and social sciences (r = -.11) correlated negatively. There was no correlation with SAT scores. And notably, there was no correlation with studying economics, which suggests that these effects may not necessarily be driven by having learned expected value theory. EV scores were not associated with agreement with EA, knowledge of EA, or any existential risk measures.

Intellectual interests

In another section, we measured participants’ intellectual interests. The goal was to explore associations with prior exposure to and attitudes towards EA. A better understanding of the intellectual interests of EA-sympathetic students could potentially inform outreach. For example, it could point us towards outreach channels we may have missed so far.

We first asked them, in a free-text response field, who their top 3 favorite intellectuals were and then what their top 5 favorite intellectual books or resources (e.g., podcasts) were. The results can be found in this spreadsheet. (Note that we did not statistically analyze these responses. If someone is interested in analyzing them, please reach out.)

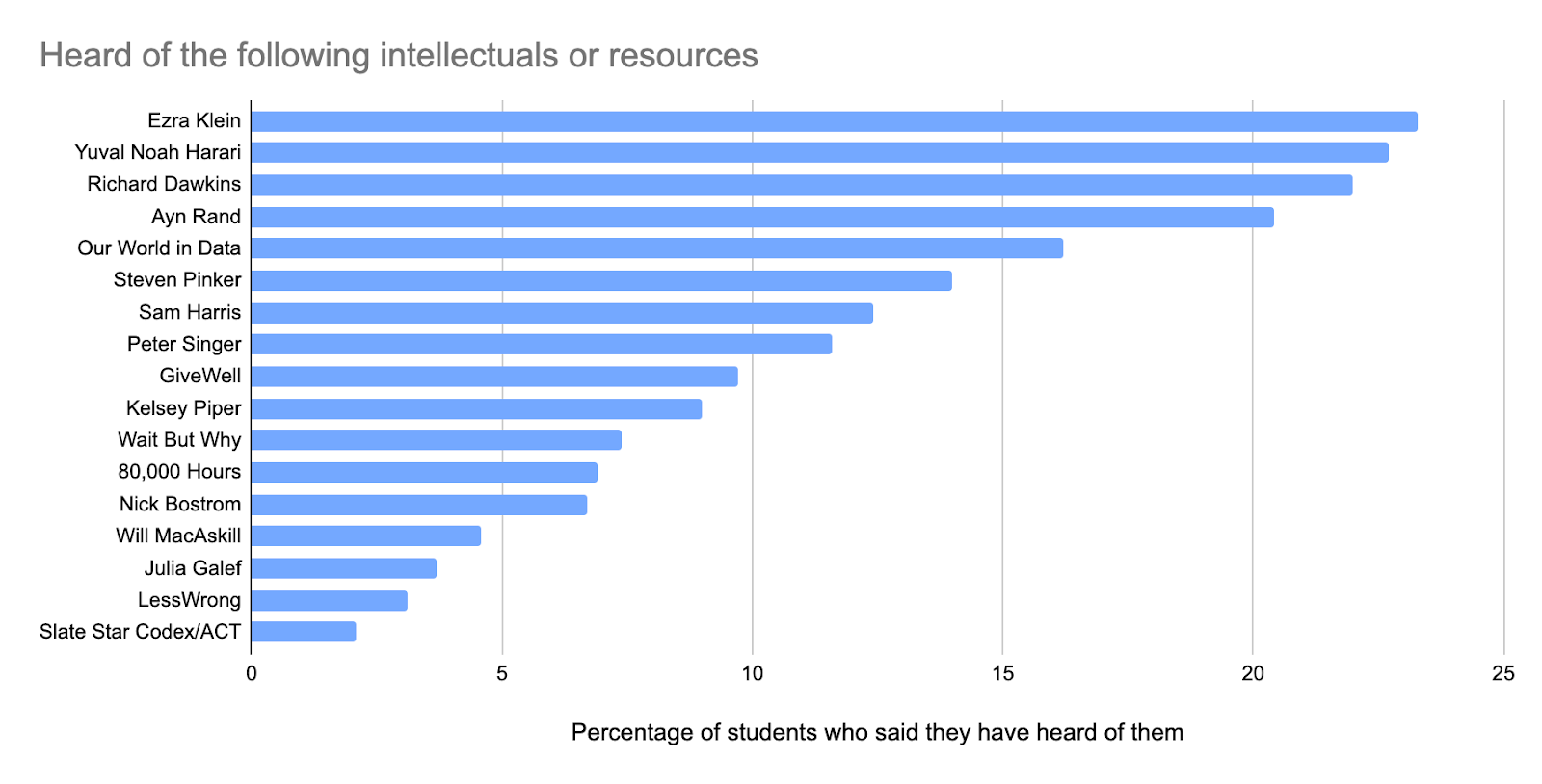

On the next page, we presented participants with a range of intellectuals and resources and asked them whether they had heard of them before.[9] We display average familiarities in the figure above. 38.6% of participants said they hadn’t heard of any of them before.

Participants who said they hadn’t heard of any of the people or resources (38.6%) were significantly less likely to know EA (r = -.19). For each person/resource in the list, we found that participants who had heard of them before tended to be more likely to know EA. More specific correlations can be found in this footnote.[10] Given that some of the listed people are not particularly connected with the ideas of EA (e.g., Ayn Rand, Yuval Noah Harari or Richard Dawkins), these findings suggest that general interest in certain types of intellectual/philosophical ideas predicts knowledge of EA.

In some cases, having heard of the people/resources correlated with higher agreement with EA. But most of these correlations were relatively weak. Participants who had heard of Sam Harris (r = .11), Our World in Data (r = .11), Nick Bostrom (r = .12), and Kelsey Piper (r = .11) tended to agree more with EA after reading the introductory text. The other options did not correlate with it. Those who had heard of none of the people/resources were significantly less likely to agree with EA (r = -.08). Participants who had heard of Sam Harris (r = .09), Yuval Harari (r = .09), and Will MacAskill (r = .08) tended to agree more with the existential risk argument. There were no further noteworthy correlations with agreement with EA and the existential risk argument.

Among participants who did not know what EA is, those who agreed more strongly with EA (a score of 6 or 7 on agreement with the EA introductory text) were more likely to have heard of several of the intellectuals and resources: Sam Harris (r = .08), Nick Bostrom (r = .08), Wait But Why? (r = .10), Harari (r = .09), and Kelsey Piper (r = .09). This suggests that even those who have not heard of EA but agree with it may already be more curious about EA-related resources.

The most EA-sympathetic students

We tried a few approaches to identify the students who are most likely to become highly engaged EAs. One approach is to only look at participants who scored above a certain threshold on general agreement with EA and interest in learning more. A second approach is to only look at the participants who scored above a certain threshold on both the effectiveness-focus and expansive altruism scales. In the above sections, we applied these techniques separately. Here we apply them together. Of course, it’s far from clear whether scoring highly on these measures predicts becoming a highly engaged EA (see Conclusion section), and there are more approaches to explore.[11]

We found that 8.8% of participants (83 in total) scored 5 or above on all four of the following measures: effectiveness-focus scale, expansive altruism scale, overall agreement with EA, and interest in learning more about EA after reading the introductory text. Of these 83 participants, 69.9% (58, or 6.2% of the total sample) clearly did not know EA, whereas 14.5% clearly knew it. Additionally, only 6 said they were already part of the EA group at NYU, and only 4 had attended an event at NYU about EA.
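The selection rule combines four thresholds. The sketch below assumes each respondent's four measures are stored as numbers on the 1 to 7 scale. The `Respondent` class and `is_ea_sympathetic` function are hypothetical names that apply the "all four at least 5" rule. The last lines reproduce the reported percentages from the counts given above.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    """Hypothetical record of the four measures, each on a 1-7 scale."""
    effectiveness_focus: float
    expansive_altruism: float
    agreement_with_ea: float
    interest_in_learning: float


def is_ea_sympathetic(r: Respondent, bar: float = 5.0) -> bool:
    """True only if every one of the four measures is at or above `bar`."""
    return min(
        r.effectiveness_focus,
        r.expansive_altruism,
        r.agreement_with_ea,
        r.interest_in_learning,
    ) >= bar


# Counts reported in the text.
total_sample = 938
sympathetic = 83
sympathetic_not_knowing = 58

print(f"{sympathetic / total_sample:.1%}")              # 8.8% of all participants
print(f"{sympathetic_not_knowing / sympathetic:.1%}")   # 69.9% of the group
print(f"{sympathetic_not_knowing / total_sample:.1%}")  # 6.2% of all participants
```

Taking the minimum of the four scores is one compact way to require that every measure clears the bar. A single low score excludes a respondent regardless of how high the others are.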

The group of highly EA-sympathetic students was more likely than the rest of the sample to believe that existential risk mitigation should be a global priority (r = .16) and to agree overall with the existential risk argument (r = .20). Similarly, they considered the AI risk and safety argument more plausible than the rest of the sample did (r = .08).

What are the characteristics of these participants? In general, the group is relatively similar to the full sample with respect to age and self-reported SAT score (for a comparison table, see the supplementary materials). Students sympathetic to EA were more likely to be politically liberal than students not sympathetic to EA (r = -.12). Due to the small sample size, we didn’t find any further significant correlations. For completeness’ sake, we still report some noteworthy findings that may become significant with larger sample sizes. This group of students contained a slightly greater proportion of men compared to the remaining sample (r = -.06). Further, this group contained a slightly greater proportion of people studying a STEM subject than the remaining sample (r = .05). These preliminary findings suggest that people from a range of fields of study and demographic backgrounds can be very sympathetic to EA.

The highly EA-sympathetic group was also more likely to have heard of Peter Singer, Sam Harris, Will MacAskill, 80,000 Hours, Slate Star Codex/ACT, and Harari (r between .09 and .15). This group was less likely to have heard of none of the resources (r = -.09). See here for an overview of their proactively listed favorite intellectuals and favorite books/resources. Commonly listed favorite intellectuals were Elon Musk (22), Stephen Hawking (10), Sir Isaac Newton (7), Nikola Tesla (7), Albert Einstein (6), Ratan Tata (5), Jordan Peterson (5), Noam Chomsky (4), Barack Obama (4), and Bill Gates (4). This group also repeatedly listed the following intellectual books and resources: New York Times (11), Sapiens by Yuval Noah Harari (7), NPR (5), Atomic Habits by James Clear (4), Rich Dad Poor Dad by Robert Kiyosaki and Sharon Lechter (4), 1984 by George Orwell (4), Thinking Fast and Slow by Daniel Kahneman (4), Outliers by Malcolm Gladwell (3), Animal Farm by George Orwell (3), the podcast Stuff You Should Know (3), The Alchemist by Paulo Coelho (3), Lex Fridman Podcast (3), and Republic by Plato (3).

Are there differences between universities?

Since outreach efforts differ between universities, there could be big differences in EA exposure. To our knowledge, the following studies have been conducted on this question.

First, in two unpublished online surveys, Rethink Priorities and CEA found that 10-15% of respondents had heard of EA and that familiarity with EA was higher among students at highly-ranked universities.

Second, Emma Abele from the Global Challenges Project found that there are significant differences in EA familiarity at different universities through an in-person university campus study (with a sample of 100 participants per university). Initial findings suggest the following levels of familiarity: Stanford (17%), Brown (16%), LSE (13%), UChicago (6%), Columbia (3%), UCS (2%). These numbers seem to match the level of outreach efforts at the respective universities, and they are roughly in line with the findings of our NYU survey.

We think it might be valuable to replicate the type of large-scale online survey we conducted at different universities. If so, we would suggest changing several things. In particular, since we gained many general insights from this first survey that don’t need to be tested again, newer surveys could be much shorter and only include the essential questions. Such a significantly shortened survey would also be less costly. If you have suggestions on what to improve in potential follow-up surveys, please reach out to us.

Methods

Participants

In total, we recruited 1045 students in two days. 107 were excluded from the final analyses because they did not pass a simple attention check or because they finished the survey in less than 7 minutes. The median duration was ~19.4 minutes. 938 participants were included in the final sample.
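The two exclusion rules and the resulting sample size can be sketched as a simple filter. The `Response` record and its field names are hypothetical. Only the rules (failing the attention check, or finishing in under 7 minutes) and the counts come from the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Response:
    """Hypothetical survey record with the two fields used for exclusion."""
    passed_attention_check: bool
    duration_minutes: float


MIN_DURATION_MINUTES = 7.0


def is_included(response: Response) -> bool:
    """Keep a response only if it passed the attention check and took >= 7 minutes."""
    return (
        response.passed_attention_check
        and response.duration_minutes >= MIN_DURATION_MINUTES
    )


def filter_responses(responses: List[Response]) -> List[Response]:
    """Return only the responses that survive both exclusion rules."""
    return [r for r in responses if is_included(r)]


# Made-up responses: only the first survives both rules.
example = [Response(True, 19.4), Response(True, 5.5), Response(False, 22.0)]
print(len(filter_responses(example)))

# Reported counts: 1045 recruited, 107 excluded.
recruited, excluded = 1045, 107
analyzed = recruited - excluded
print(analyzed)
```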

The mean age was 22.5 (median: 22, min: 18, max: 35). The gender ratio (looking only at men and women) was 42.2% men vs. 57.8% women. This is very close to the overall gender ratio of NYU: 43% men vs. 57% women. 51.5% were undergraduates, and 48.5% were graduate students. More specifically, 14.7% were first-year students, 12.2% were sophomores, 12.0% were juniors, 12.6% were seniors, 36.9% were master's students, and 11.6% were Ph.D. students. Amongst undergraduates (for whom we have clear statistics from NYU), the study subject distribution was as follows: 29.7% STEM, 19.3% social sciences, 15.7% arts and media, 11.7% business, 9.9% health or pre-med, 7.5% humanities, 1.5% education, and 4.6% other subjects. This is relatively representative of most subjects, although our sample contained slightly more STEM students and slightly fewer arts and media students than the NYU population. See our supplementary materials for a more detailed breakdown of majors and a comparison with NYU population numbers.

Recruitment

We advertised the study as the “NYU Student Values Survey.” We chose this name to be descriptive enough without biasing the sample too much. Even though we cannot rule out that EA-aligned people may be more likely to participate in such a survey just because of the name, we think it’s relatively unlikely. One reason is that participants were incentivized financially and received $20 in the form of an Amazon gift card for completing the study or, alternatively, could choose to donate $20 (the donation option was not mentioned in advertising but was in the consent form at the beginning of the survey). Only NYU students were eligible. The study ran for two days, from April 25 to April 26, 2022.

We recruited participants through social media and email. In addition, we used a snowball sampling technique, whereby participants could earn extra money for referring other students. To avoid biasing results, we asked participants who wanted to recruit others to only describe the survey as the NYU Student Values Survey, not to mention EA, and not to share the study specifically with supporters of EA.

66.8% of participants indeed used a referral link for the survey that they had received from another participant. When asked explicitly, 50.6% said they were recruited directly through a friend, 26.3% said they were recruited through email, and 16.7% said they were recruited through social media. The rest said they were recruited through other means. 13.3% reported being introduced to the survey through someone they believe is a member of the EA community. In some parts of our analysis, we excluded those participants because it’s possible they could have biased the sample, even though it rarely made a noteworthy difference.

At the end of the survey, participants could provide optional feedback. Most of that feedback[12] was positive, e.g., students saying they enjoyed the survey and found it informative and interesting.

Representativeness

In terms of age, gender, and study subject, our sample is relatively close to the total NYU population distribution. An exception is that our sample contained slightly too many STEM (in particular computer science) students and too few arts and media students. Given that studying a STEM subject correlates positively with positive attitudes towards EA, our estimates of the fraction of highly sympathetic students may be slightly too optimistic. But we don’t think adjusting for that changes the interpretation of our survey.

More relevant for our purposes is the question of whether our sample contained too many or too few students who knew EA or who were sympathetic towards it. The answer is not obvious to us. A priori, a slight bias towards recruiting too many EA-sympathetic people seems somewhat more plausible. But we are not sure if that’s the case. We found that the fraction of respondents who indicated they had participated in an EA group or fellowship was only somewhat larger than we estimated the number should be (see Involvement section above). So we think there’s probably no strong bias. But it’s possible that our sample had a slightly too high proportion of active effective altruists. If so, the true fraction of students at NYU who know EA and are very sympathetic towards it could be slightly lower than the numbers we reported. We are open to concrete suggestions for how to test this and how to improve representativeness (with respect to EA knowledge and attitudes) in future surveys.

Data

The anonymized data, our analysis script, and our supplementary materials are available at this Open Science Framework repository. If you want to do further analyses, we encourage you to do so, and please contact us if you have questions.

Limitations and future research

There are several limitations to this survey, and we hope that future studies can benefit from the insights we’ve gained from this project.

One important limitation is that agreement with EA depends a lot on how EA is described. Our EA introduction text is certainly not the only way of describing EA. And there may be better alternatives for describing and framing EA. For example, some may argue that certain ideas our text contained (e.g. cost-effectiveness of charities) could be deemphasized and that other ideas (e.g. existential risk or certain epistemic virtues) could be emphasized more.

Future studies could also present participants with criticism of EA or with ideological views that are in conflict with EA. This may help to get a more fine-grained picture of who agrees with EA even after reflecting on criticism and alternative views. It could also help with overcoming social-desirability bias — the tendency to respond to questions in a manner that will be viewed favorably by others. And it may help to account for acquiescence bias — the tendency to simply agree (or select a positive response option).

As mentioned in the Methods section, it is unclear if our sample is entirely representative. Getting a fully representative sample is very difficult — not even professional polling organizations achieve this — but there are certainly approaches to improve representativeness. It would be useful for future research to explore different strategies that improve representativeness while keeping costs and effort low.

Perhaps most importantly, as discussed in the Conclusion section, it is unclear how predictive our measures are of EA engagement and making valuable contributions to the world. Rigorously developing and testing such measures is an important task for future research and something we will explore further ourselves.

There are many directions for future research. Here we review some concrete open research questions:

- Beyond agreement with basic EA principles, what other (e.g., motivational or cognitive) predictors are essential to becoming more engaged and making valuable contributions?

- How many students have a negative first impression of the EA community or certain practices? And to what extent could negative first impressions account for the fact that so few people know EA?

- Does exposure to EA increase people’s favorability to it, or are those who are likely to be sympathetic towards EA more likely to have already found out about it?

- How would students respond to a survey that also presents ideologies in conflict with EA or (good and bad) criticisms of EA? Would this elicit more honest feedback? Can we use this method to identify talented people who aren’t persuaded too quickly but tend to be critical of novel radical ideas?

- How robust are the weak associations we found between study subjects and agreement with EA and existential risk mitigation? For example, are students studying humanities indeed more likely to see existential risk mitigation as a global priority despite lower favorability towards EA in general? And what are the implications for outreach?

- How robust is the negative correlation between SAT scores and perceiving the AI risk argument as plausible? If it holds up, what explains it, and what are the implications for recruiting the most competent people for AI safety?

- Why do many students not view existential risk mitigation as a key global priority despite considering the likelihood of human extinction this century or millennium to be relatively high?

We would be excited for researchers to explore these questions. If you're interested, please feel free to get in touch with us.

Acknowledgments

We thank the following people for their helpful inputs on the survey plan/materials or this write up: Emma Abele, David Althaus, Sage Bergerson, Matt Coleman, Dewi Erwan, Theo Hawking, João Bosco de Lucena, David Moss, Cian Mullarkey, Eli Rose, George Rosenfeld, Stefan Schubert, Ben Todd, Ben West, Matti Wilks, and Claire Zabel.

- ^

Examples classified as clearly knowing what EA is: "Using evidence and logic to try to figure out the best way to help people, or like taking actions based on effective outcomes towards others such as taking jobs that help others the most" and "I have limited understanding of this topic. Effective Altruism is a type of philosophy which consists of objectively listing out all the resources and options available for taking decisions and choosing the ones that maximize the "good" for humanity."

Example classified as weak evidence of knowing what EA is but the definition is not sufficiently clear: "To do things that are good for others and benefit them in a way that has been proven or has reason".

Examples that show the person clearly does not know what EA is: "Being placed in a situation where you feel the need to do good," "Effective Altruism is the concept of being generous and helpful to others without expecting anything in return," and "Altruism is the act of being selfless. Doing something for the groups benefit, even if it harms you. Seen a lot in animals."

- ^

13.3% of participants said they were introduced to the survey by someone they believe is a member of the EA community. When we excluded those participants, the percentages of participants who said they had heard of EA and who had a correct understanding of EA dropped slightly, from 40.7% to 39.2% and from 7.4% to 7.1%, respectively.

- ^

Students self-reported their majors from a list of 43 majors including "other." To analyze study subjects we grouped these majors into 8 main disciplines: arts and media, business, education, health-related fields, humanities, social sciences, STEM, other. See our supplementary materials for more information about majors and study subjects.

- ^

Recall that 40.7% said they had heard of EA. In contrast, 68.3% said they had heard of utilitarianism. 25.2% said they had heard of existential risk mitigation. 15.7% said they had heard of longtermism. 59.8% said they had heard of evidence-based medicine. 14.9% said they had heard of poststructuralism. The latter two options were included simply for comparison. However, people may just be more likely to indicate knowledge of concepts whose names have easily understandable literal meanings, like “effective altruism” or “evidence-based medicine,” than of more abstractly named concepts like “poststructuralism” or “longtermism” (while utilitarianism might be widely known enough for people to have genuine familiarity). This interpretation is supported by the fact that many participants who indicated their familiarity with EA could not accurately describe it.

- ^

EA Introduction Text: In this study, we would like to learn about your views on “Effective Altruism”. Effective Altruism is a philosophy and a social movement that tries to use reason and evidence to do the most good in the world. Here is a very short summary of some of the key principles of Effective Altruism.

1) When we want to do good, we should aim to help as many individuals as much as possible

An important aspect of Effective Altruism is the focus on doing the most good possible with our limited resources. For example, when we are using our time, money, abilities, or knowledge to improve the world, we should use these resources in the most effective way possible, so we can improve as many lives as possible, as much as possible. The reason this is important is because some ways of doing good are much more effective than others. For example, according to experts, the most effective charities tend to be hundreds of times more effective compared to typical charities. The same principle applies to the impact of careers you might choose to try to make the world better. Therefore, when deciding between altruistic careers or donations, it’s important to choose the most effective options.

2) When we want to do good, we should count everybody roughly the same

Many people prefer helping others that they have a personal connection to. For example, they may want to volunteer at a local charity that they personally care about. However, proponents of effective altruism argue that we should help whom we can help the most, even if those people are strangers that live far away from us. For example, often it’s much cheaper, and therefore more cost-effective, to help people who live in poor countries on the other side of the world. Furthermore, effective altruists argue we should also consider people who live in the future. They matter too, and since there could be trillions of future people, it has great moral priority to ensure they will live good lives. And finally, effective altruists even argue that the suffering of animals matters, and that focusing on helping animals could therefore have a high priority.

3) When we want to do good, we should use reason and evidence to find the most effective strategies

There are thousands of ways to do good in the world and to help others. So how should we decide which cause to focus on? Many people have a cause that is personally meaningful to them. Effective Altruism, by contrast, argues that we should primarily use careful, evidence-based reasoning to figure out which cause you should focus on in order to do the most good with your time and money. For example, you could carefully consider information about how large a problem is (how many individuals are affected by it), how neglected a problem is (how many resources are already directed to solving it), and how solvable a problem is (how easy it is to make progress on it). Of course, sometimes these factors conflict and you must carefully weigh them against each other; if a problem is large enough, it might be worth working on it even if you have a low probability of solving it. In short, when you want to have a positive impact in the world, it’s rarely the case that simply following your passion or your intuition will lead you to improve the world the most. Instead, you will have a bigger impact if you decide what to do based on reason and evidence, even if this leads you to support causes that don’t seem commonsensical at first glance.

- ^

Participants recruited by someone they believe is a member of the EA community did not significantly differ in their agreement with EA from the views of the remaining participants.

- ^

Computer and data science students were more interested in AI risk (r = .26), pandemic risk (r = .14), and earning-to-give (r = .10). Engineering students were more interested in pandemic risk (r = .16), earning-to-give (r = .10), and power conflict (r = .07). Political science students were more interested in power conflict (r = .07) and poverty (r = .07) but less interested in AI risk (r = -.07) and pandemic risk (r = -.07). Psychology students were less interested in pandemic risk (r = -.09). Biology students were less interested in power conflict (r = -.07). Math students were less interested in poverty (r = -.08). Performing and visual arts students were less interested in AI risk (r = -.07), pandemic risk (r = -.08), and earning-to-give (r = -.09).

- ^

See supplementary materials for results of statistical tests.

- ^

In follow-up questions we asked participants how much they liked the intellectuals/resources that they had heard of. We didn’t analyze the results of these measures.

- ^

Correlations with knowing EA were strongest for participants having heard of 80,000 Hours (r = .31), GiveWell (r = .27), LessWrong (r = .26), Peter Singer (r = .23), Steven Pinker (r = .23), William MacAskill (r = .23), Sam Harris (r = .20), and Richard Dawkins (r = .20). Even having heard of Ayn Rand correlated positively with knowing what EA is (r = .17).

Older students were more likely to have heard of Our World in Data (r = .11), William MacAskill (r = .10), 80,000 Hours (r = .12), LessWrong (r = .13), GiveWell (r = .09), Richard Dawkins (r = .08), Slate Star Codex/ACT (r = .07), Ayn Rand (r = .07), and Yuval Harari (r = .10). Women were more likely to have heard of Steven Pinker (r = .07) and Ezra Klein (r = .09). Men were more likely to have heard of Our World in Data (r = .07), William MacAskill (r = -.08), Nick Bostrom (r = -.09), 80,000 Hours (r = -.07), Richard Dawkins (r = -.13), Slate Star Codex/ACT (r = -.09), LessWrong (r = -.07), and Julia Galef (r = -.07). The more politically liberal students were, the more likely they were to have heard of Sam Harris (r = -.07), Steven Pinker (r = -.10), Ayn Rand (r = -.16), Yuval Harari (r = -.11), Ezra Klein (r = -.12), and Kelsey Piper (r = -.08). The more politically conservative students were, the more likely they were to have heard of none of the people/resources (r = .12).

STEM students were more likely to have heard of Our World in Data (r = .15), 80,000 Hours (r = .09), Slate Star Codex/ACT (r = .07), LessWrong (r = .08), and Yuval Harari (r = .07) and less likely to have heard of Peter Singer (r = -.10), Steven Pinker (r = -.07), and Ayn Rand (r = -.07). Social science students were more likely to have heard of Peter Singer (r = .11), Sam Harris (r = .07), Steven Pinker (r = .13), Ezra Klein (r = .12), and Ayn Rand (r = .08), and less likely to have heard of none of the people/resources (r = -.07). Humanities students were more likely to have heard of Peter Singer (r = .14), Steven Pinker (r = .11), Ayn Rand (r = .15), and Ezra Klein (r = .15) and less likely to have heard of none of the people/resources (r = -.12). Arts and media students were less likely to have heard of Our World in Data (r = -.07). Business students were more likely to have heard of Ayn Rand (r = .07). Health students were less likely to have heard of Nick Bostrom (r = -.08) and 80,000 Hours (r = -.08) and more likely to have heard of none of the people/resources (r = .07).

- ^

A third approach, to the extent we are focused on existential risk, is to look at the participants who scored above a certain threshold on agreeing that existential risk mitigation is a global priority. However, we are not sure whether immediate agreement that existential risk mitigation should be prioritized is as predictive of becoming a highly engaged EA as the other measures, so we do not include agreement with that argument in this section. People may need more information and time to think about existential risk arguments.

- ^

5.5% left a positive comment, 3.7% left a neutral comment (e.g., saying "thank you") or suggestion, and 0.7% left a negative comment. Some examples of the positive comments were: "I think this was a very informative study, and I am interested in learning more about effective altruism," and "Very interesting and would love to be involved in the community to meet people and learn new perspectives."

This is my bias as a media guy, but to me this survey actually suggests the opposite of the need to "massively boost (high-quality) student outreach": if there is a large bloc of students who are sympathetic to EA but have never heard of it, what's really needed is just greater prominence in mass media. If you randomly come across the term "effective altruism" enough times, you'll get curious about what it means, and if you Google it, you'll find out that it's a set of ideas you're sympathetic to.

I think the Carrick Flynn congressional race is constructive in that same way, just by generating some articles that say "here's this thing called Effective Altruism and they got this guy running for congress." The survey indicates that EA is currently very far from tapped out in terms of the potential upside to extremely shallow outreach.

I don't really know anyone who got into EA after reading a newspaper article and from what I've heard this matches other people's experience.

That’s because there haven’t been enough articles!

In the UK there are people who've heard about it on the radio, on podcasts, and in some articles in the media.

A lot of people got into EA after reading a book, and a lot of people find new topics to investigate by reading newspaper articles.

Thank you again for all your work on this - it's super useful, and maybe a significant update for me. (I wish we'd done more surveying work like this years ago!)

A) I agree the attitude-behaviour gap seems like perhaps the biggest issue in interpreting the results (maybe the people who are most proactive and able to act are the ones who have already heard of EA, so we've already reached more of the audience than it seems from these results).

One way to get at that would be to define EA interest using the behavioural measures, and then check which fraction had already heard of EA.

E.g. you mention that ~2% of the sample clicked on a link to sign up to a newsletter about EA. Of those, what fraction had already heard of it?

B) Some notes that illustrate the importance of the attitude-behaviour gap:

C) One minor thing – in future versions, it would be useful to ask about climate change as a cause to work on. I expect it would be the most popular of the options, and is therefore better for 'meeting people where they are' than extreme poverty.

Thanks Ben!

13.6% (3 people) of the 22 students who clicked on a link to sign up to a newsletter about EA already knew what EA was.

And 6.9% of the 115 students who clicked on at least one link (e.g. EA website, link to subscribe to newsletter, 80k website) already knew what EA was.

Another potentially useful measure (to get at people’s motivation to act) could be this one:

“Some people in the Effective Altruism community have changed their career paths in order to have a career that will do the most good possible in line with the principles of Effective Altruism. Could you imagine doing the same now or in the future? Yes / No”

Of the total sample, 42.9% said yes to it. And of those people, only 10.4% already knew what EA was.

And if we only look at those who are very EA-sympathetic (scoring high on EA agreement, effectiveness-focus, expansive altruism and interest to learn more about EA), the number is 21.8%. In other words: of the most EA-sympathetic students who said they could imagine changing their career to do the most good, 21.8% (12 people) already knew what EA was.

(66.3% of the very EA-sympathetic students said they could imagine changing their career path to do the most good.)

A caveat is that some of these percentages are inferred from relatively small sample sizes — so they could be off.
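To make the small-sample caveat concrete, here is a minimal sketch that puts a 95% Wilson score interval around two of the proportions above. The 3 of 22 newsletter sign-ups comes straight from the comment; the denominator of 55 for the 12 very EA-sympathetic students is an assumption, back-calculated from "12 people = 21.8%", not a figure reported by the survey team. The Wilson interval is one standard choice for small binomial samples, not necessarily what the authors used.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    z2 = z * z
    denom = 1 + z2 / n
    center = (p_hat + z2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z2 / (4 * n * n)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Newsletter sign-ups who already knew what EA was: 3 of 22 (reported).
newsletter = wilson_interval(3, 22)

# Very EA-sympathetic students open to a career change who already knew EA:
# 12 people at 21.8%, so the denominator is ASSUMED to be round(12 / 0.218) = 55.
career_n = round(12 / 0.218)
career = wilson_interval(12, career_n)

for label, k, n, (lo, hi) in [
    ("newsletter", 3, 22, newsletter),
    ("career change", 12, career_n, career),
]:
    print(f"{label}: {k}/{n} = {k / n:.1%}, 95% CI {lo:.1%} to {hi:.1%}")
```

With these inputs the newsletter estimate of 13.6% is compatible with anything from roughly 5% to 33%, and the 21.8% with roughly 13% to 34%, which is why the percentages could be off.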

Have you included dummy answers/Lizardman questions to test whether (e.g.) people who claimed to have heard of EA are also systematically more likely to claim to have heard of fake social movements like "rational globalism" or whatever?

We asked them about a few 'schools of thought': effective altruism, utilitarianism, existential risk mitigation, longtermism, evidence-based medicine, poststructuralism (see footnote 4 for results). But very good idea to ask about a fake one too!

(Note that we also asked participants who said they have heard of EA to explain what it is. And we then manually coded whether their definition was sufficiently accurate. That's how we derived the 7.4% estimate.)

This is such cool research! Thanks to everybody who contributed :)

I've found the majority of EA University Club members drift out of the EA community and into fairly low impact careers. These people presumably agree with all the EA basic premises, and many of them have done in depth EA fellowships, so they aren't just agreeing to ideas in a quick survey due to experimenter demands, acquiescence bias, etc.

Yet, exposure to/agreement with EA philosophy doesn't seem sufficient to convince people to actually make high impact career choices. I would say the conversion rate is actually shockingly low. Maybe CEA has more information on this, but I would be surprised if more than 5% of people who do Introductory EA fellowships make a high impact career change.

So I would be super excited to see more research into your first future direction: "Beyond agreement with basic EA principles, what other (e.g., motivational or cognitive) predictors are essential to becoming more engaged and making valuable contributions?"

Yes: lots of people agree with EA in principle. But, of those, very few are motivated to do anything. As a suggestion for future research, could you look for what might predict serious commitment in later life?

FWIW, my hunch is that motivation to be altruistic is not normally distributed, but perhaps even approaching bimodal: a few people are prepared to dedicate their lives to helping others, but most people will only help if the costs to them are very low.

Why do you think a conversion rate of 5% is shockingly low? Depending on the intervention, this can be a high rate in marketing. A fellowship seems like a relatively small commitment and changing the career is a relatively high ask. As we’re not emphasizing earning to give as much as before, I would also expect many people to not find high impact work.

This is a thing I and a lot of other organizers I've talked to have really struggled with. My pet theory that I'll eventually write up and post (I really will, I promise!) is that you need Alignment, Agency, and Ability to have a high impact. Would definitely be interested in actual research on this.

Sounds really cool! Would love to hear more when you're ready :)

Do you have a sense of the fraction of people who do introductory fellowships, then make some attempt at a high impact career change? A mundane way for this 5% to happen would be if lots of people apply to a bunch of jobs or degree programs, some of which are high impact, then go with something lower impact before getting an offer for anything high impact.

Do you think part of the reason is that they may find it difficult to get into high impact careers, that they lose interest, or that there are other different factors affecting their decisions like pay, where they can live, etc?

From my experience with running EA at Georgia Tech, I think the main factors are:

Great question! We need more research ;)

Really interesting observations.

Do you have any sense of how many of those people are earning to give or end up making donations to effective causes a significant part of their lives? I wonder if 5% is at least a little pessimistic for the "retention" of effective altruists if it's not accounting for people who take this path to making an impact.

Nice work. I agree that these results help us judge how much outreach we should do. More specifically, they can offer us an upper bound: at NYU, there's only room to grow the talent pool n-fold, for the time being, where n is 8.8/1.3 ≈ 6.8. Let's say that on the margin, NYU student outreach is currently y times more effective than OpenPhil. Then, outreach should be scaled up at most n × y-fold. The actual number will be less because, as you say, the average person who hasn't found out about EA yet but is very sympathetic towards it is less likely to become highly engaged than a person who has found out about it. Put differently, there will be diminishing returns to getting people engaged, and also to getting them doing useful work. But this kind of calculation could be a useful starting point.
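The upper bound in the comment above can be written out as a small calculation. This is a sketch, assuming the post's 8.8% very EA-sympathetic share and the 1.3% of students who are both very sympathetic and already know what EA is; the relative-effectiveness multiplier y is a free, hypothetical parameter that the survey did not measure, and the function names are illustrative only.

```python
def max_growth_factor(sympathetic_share: float, reached_share: float) -> float:
    """n: how many times larger the reached pool could get if every very
    EA-sympathetic student knew about EA (both shares in percent)."""
    if reached_share <= 0:
        raise ValueError("reached_share must be positive")
    return sympathetic_share / reached_share


def max_outreach_scale_up(n: float, y: float) -> float:
    """Upper bound from the comment: scale outreach up at most n * y-fold.
    y (outreach effectiveness relative to OpenPhil's marginal spending) is hypothetical."""
    return n * y


n = max_growth_factor(8.8, 1.3)  # 8.8 / 1.3 = 6.769..., i.e. about 6.8
for y in (0.5, 1.0, 2.0):  # illustrative values of y only
    print(f"y = {y}: scale outreach up at most {max_outreach_scale_up(n, y):.1f}-fold")
```

As the comment notes, diminishing returns mean the realistic figure should sit below this bound, so the output is best read as a ceiling rather than a target.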

Nice work! Lots of interesting results in here that I think lead to concrete strategy insights.

This is a great core finding! I think I got a couple important lessons from these three numbers alone. Outreach could probably be a few times bigger without the proportion of EA students who know about it getting near enough to 100% for sharply diminishing returns. Knowing what EA is seems like about 2:1 evidence in favor of being EA-sympathetic, which is useful, but not that huge. Getting the impression that "nobody at my school knows about EA :(" isn't actually very bad news - folks who are interested in EA do know about it at a meaningfully higher rate, so even at the ideal level, maybe only 50% of students will know what EA is.
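The "about 2:1 evidence" figure can be checked with Bayes' rule. A minimal sketch, assuming the three headline numbers from the post (7.4% of all students know what EA is, 8.8% are very EA-sympathetic, and 14.5% of the very sympathetic students knew about EA) and treating them as exact population proportions:

```python
def likelihood_ratio(p_know: float, p_sympathetic: float, p_know_given_symp: float) -> float:
    """Return P(know EA | very sympathetic) / P(know EA | not very sympathetic)."""
    # Joint share of students who are very sympathetic AND already know EA.
    p_know_and_symp = p_know_given_symp * p_sympathetic
    # Everyone else who knows EA is, by definition, not very sympathetic.
    p_know_and_not_symp = p_know - p_know_and_symp
    p_know_given_not_symp = p_know_and_not_symp / (1 - p_sympathetic)
    return p_know_given_symp / p_know_given_not_symp


lr = likelihood_ratio(p_know=0.074, p_sympathetic=0.088, p_know_given_symp=0.145)
print(f"knowing what EA is multiplies the odds of being very sympathetic by {lr:.2f}")
```

The ratio comes out at a little over 2, in line with the comment's "about 2:1".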

This seems to suggest that recent thoughts about "longtermism" vs "X-risk" and the importance of arguing for the size of the far future may not be good for outreach. My impression is that the importance of X-risk, even without the "size of the future" piece of the argument, seems clear to someone who's been around EA for a while but isn't obvious enough to be good for outreach, where attention is a scarce resource. Accepting the argument for high X-risk only leads to prioritizing it about half the time. I wonder how including a size-of-the-future argument would change this?

This is a big update! I expected correlations on all three of those things. This suggests the current EA stereotype is more due to founder effect than actual difference in affinity for the ideas, which is huge for outreach targeting.

Hm… of the 7.4% of students who are familiar with EA, only 17.6% (1.3%/7.4%) are pro-EA and 82% are not. What fraction did we expect here?
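The 17.6% is a conditional share: students who are both very EA-sympathetic and familiar with EA, divided by all students familiar with EA. A minimal sketch, assuming the 1.3% joint share is the product of the post's 8.8% and 14.5% figures (0.088 × 0.145 ≈ 1.3%), shows how much rounding that joint share moves the answer:

```python
P_KNOW = 0.074             # share of all students who know what EA is
P_SYMPATHETIC = 0.088      # share of all students who are very EA-sympathetic
P_KNOW_GIVEN_SYMP = 0.145  # share of very sympathetic students who already knew EA

joint_unrounded = P_SYMPATHETIC * P_KNOW_GIVEN_SYMP  # about 0.01276
joint_rounded = round(joint_unrounded, 3)            # 0.013, the 1.3% in the comment

for label, joint in [("rounded 1.3%", joint_rounded), ("unrounded", joint_unrounded)]:
    share = joint / P_KNOW  # P(very sympathetic | familiar with EA)
    print(f"{label}: {share:.1%} of familiar students are very sympathetic, {1 - share:.1%} are not")
```

Using the rounded 1.3% gives the 17.6% quoted; the unrounded product gives about 17.2%, so the conclusion barely changes.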

I didn't make any prediction beforehand, so take this with a grain of salt, but it didn't sound too surprising to me? I feel like when pitching EA at a uni freshers' fair, roughly 1 in 4 people I talk to are sympathetic enough to want to sign up to a mailing list and similar. So 17.6% doesn't sound too far off from that vague estimate.

This is super cool research and it’s great that you all were able to conduct this survey!!

I think it’s great that you all renamed the survey so that it didn’t specifically attract people who looked for words like “effective” or “altruism” or “existential risk.”

7.4% actually seems quite high to me (for a university without a long-time established intellectual hub, etc); I would have predicted lower in advance.

EA does seem a bit overrepresented (sort of acknowledged here).

Possible reasons: (a) sharing was encouraged post-survey, with some forewarning (b) EAs might be more likely than average to respond to 'Student Values Survey'?

In marketing, there’s the concept of the awareness-consideration-conversion funnel. I’ve argued for many years that EA has low brand awareness but relatively high conversion. It’s good to finally see data on it!

I think further meta work is important (I started funding community building before CEA did) and we should be focused on making sure everybody’s heard of EA rather than spending hours worrying about persuading certain individuals.

Does anyone have thoughts on