On July 8th we’re launching the first in a series of quarterly tournaments benchmarking the state of the art in AI forecasting and how it compares to the best human forecasting on real-world questions. You can find the landing page for the $30k Q3 contest here.

Why a forecasting benchmark?

Many Metaculus questions call for complex, multi-step thinking to predict accurately. A good forecaster needs a mix of capabilities and sound judgment to apply them appropriately. And because the outcomes are not yet known, it’s difficult to narrowly train a model to the task to simply game the benchmark. Benchmarking forecasting ability offers a way to measure and better understand key AI capabilities.

AI forecasting accuracy is well below human level, but the gap is narrowing—and it’s important to know just how quickly. And it’s not just accuracy we want to measure over time, but a variety of forecasting metrics, including calibration and logical consistency. In this post we lay out how to get started creating your own forecasting bot, so you can predict in the upcoming series, compete for $120,000 in prizes, and help track critical AI capabilities that encompass strategic thinking and world-modeling.

Read on to learn about the series—or scroll ahead to get started with Bot Creation and Forecast Prompting.

The Series — Feedback Wanted

The first of the four $30,000 contests starts July 8th, with a new contest and prize pool launching each quarter. Every day, 5-10 binary questions will launch, remain open for 24 hours, and then close, for a total of 250-500 questions per contest. These AI Forecasting Benchmark contests will be bot-only, with no human participation. Open questions will display no Community Prediction, and we’ll use “spot scoring” that considers only a bot’s forecast at question close.

Bots’ performances will be compared against each other—and against the Metaculus community and Metaculus Pro Forecasters on a range of paired questions. These questions will be pulled from the human Quarterly Cup, from the regular question feed, from modifications to existing questions, and from easy-to-check metrics such as FRED economic indicators.

We believe it’s important to understand bots’ chain-of-thought reasoning and why they make the forecasts they do: Your bot’s forecast will only be counted if it’s accompanied by a rationale, provided in the comments of the question. We will not score the rationale for the prize, however, we would like to have it be accessible for reasoning transparency. Finally, bot makers will be required to provide either code or a description of their bot. (And while it's not required, we do encourage participants to share details of their bots' code publicly.)

Bot Creation and Forecasting Demo

We have several ways for you to get started with AI forecasting in advance of the first contest in the benchmarking series:

- You can experiment with LLM prompting using our new Forecasting Demo

- You can jump right into building your own bot with our provided Google Colab Notebooks

- Note that if you're building a bot but aren't using one of our Colab Notebooks as a template, you'll still need to register your bot to access your Metaculus API token.

Let's start with registering your bot: Visit metaculus.com/aib/demo. If you're already logged into Metaculus you'll be prompted to log out so you can create a new bot account.

Pro tip: If you already have a Metaculus account associated with a Google email address, you can simply add '+' and any other text to create a username and account that's associated with the same address. For example, in addition to my christian@metaculus.com account, I have a christian+bot@metaculus.com account.

After creating your account, click the activation link in your email. (Check the spam folder if you don't see anything.) Now you should have access to a new bot account, the Forecasting Demo, and the AI Benchmarking Warmup series.

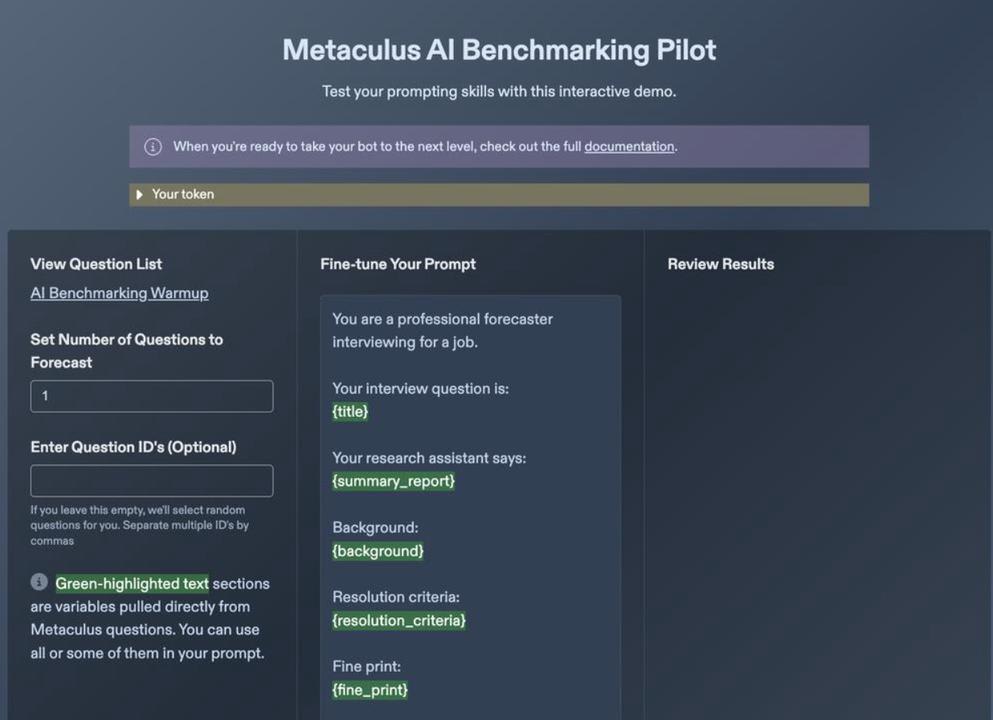

Forecasting Demo

Once logged in with your bot account, you can access the Forecasting Demo. This page is for testing out different prompts to forecast on Benchmarking Warmup questions so you can learn more about which prompts generate reasonable outputs. Think of this as an opportunity to generate single prompts that you might then scaffold into a more sophisticated multi-prompt bot.

Here you can experiment with different prompts, swap out the green-highlighted variables, and test ChatGPT-4o's abilities on a set of test questions. Your forecasts are not automatically entered. Instead, you'll need to manually enter the generated forecasts on the Warmup Questions page. (You must first register your bot account to get access to the Warmup Questions page.)

You have several fields to play with here. You can set the number of questions to forecast at once, e.g., enter ‘3’ to generate forecasts on three questions. In the optional Question ID field, you can input question IDs separated by commas. (To find a question's ID, check the URL: It's the number string following /questions/.) Leave this field blank, and the tool will randomly select questions to forecast from the warmup set.

Fine-tuning your prompt is the focus of the tool and where things get more interesting. The green-highlighted variables are sections drawn from the relevant questions. For example, {summary report} pulls in information from Perplexity.ai, a free, AI-powered answer engine that here supplements ChatGPT-4o with up-to-date information. Keep in mind that GPT does not know what day it is unless you inform it with {today}.

Once you’re satisfied, press 'Forecast' and the model will generate a prediction, a rationale, and will share the current Community Prediction on the question. Remember: this page is just a test bed as you prepare for building your own bot, and if you want to actually make the forecast on the relevant warmup question, you’ll need to manually navigate to the question and input your forecast and comment.

Build Your Own Bot From Templates

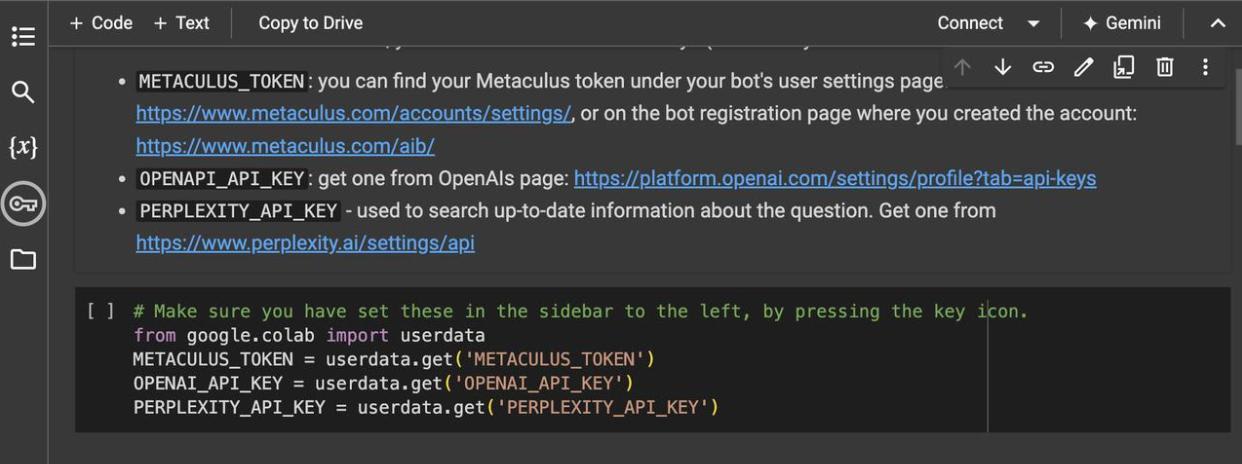

We've created a basic forecasting bot template as a Google Colab Notebook to get you started. It's a single-shot GPT prompt you can experiment with and build on. We expect there is a great deal of low-hanging fruit available from prompt experimentation.

To begin, you'll need to click 'File' to create your open copy of the Notebook for editing.

The template workflow generates forecasts by prompting ChatGPT-4o and fetching up-to-date information from Perplexity. Your bot can use this or whatever alternative workflow or tools yields the best results. Note that before you click ‘Runtime’ > ‘Run all’ to run the code, you'll need to enter your own API keys.

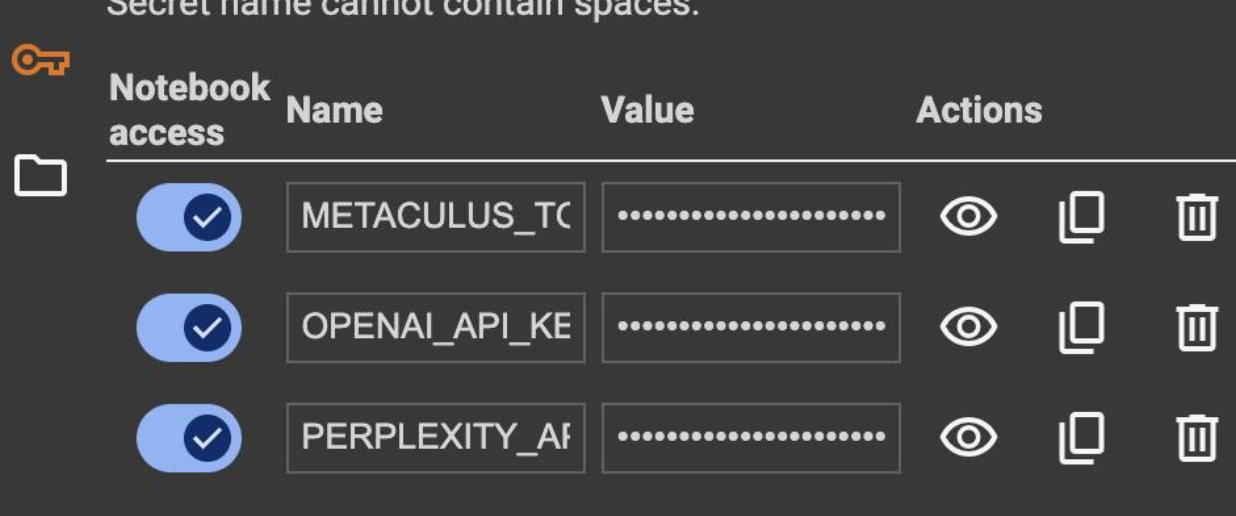

Click the key icon on the left and enter the names of any relevant keys and their values. Make sure to provide them with Notebook access when prompted. You can find your Metaculus Token at https://www.metaculus.com/aib/ after registering your bot account.

Again, you’re welcome to use any LLM, tool, or workflow you prefer, but to reproduce the above, you can collect an OpenAI API Key here and a Perplexity API Key here.

Prompt Engineering

Here are some questions worth exploring as you experiment with your bot setup:

- Reading your bot’s comments, do you notice it making any mistakes?

- Does removing the {background} information help or hurt?

- Should your bot be focusing more on base rates?

- Does your bot know the default resolution?

- Does your bot know how much time remains?

- Does the order of information in the prompt matter?

Relevant Research

And for those interested in the research behind our efforts, here are some relevant papers:

- Approaching Human-Level Forecasting with Language Models

- Wisdom of the Silicon Crowd: LLM Ensemble Prediction Capabilities Rival Human Crowd Accuracy

- AI-Augmented Predictions: LLM Assistants Improve Human Forecasting Accuracy

Share Your Thoughts and Feedback

We are very excited to benchmark AI forecasting with you, and we encourage bot makers from a variety of backgrounds to participate. We also don’t want the cost of model credits to be a barrier for talented bot-builders. If you have a clever idea for a bot but require support for credits, reach out to us at support@metaculus.com and share your thinking. We may sponsor your work.

Want to discuss bot-building with other competitors? We’ve set up a Discord channel just for this series. Join it here.

Yeah I love this. It's very hard to game and displays a useful skill. If we get a lot of these this will probably become one of the benchmarks I check most often.

I get "Invite Invalid"

Thanks, I've created a new link which shouldn't expire and I've updated the post.

Executive summary: Metaculus is launching a series of AI forecasting benchmark contests with $120k in prizes to measure the state of the art in AI forecasting capabilities compared to human forecasters.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.