This post was co-authored by the Forecasting Research Institute and Rose Hadshar. Thanks to Josh Rosenberg for managing this work, Zachary Jacobs and Molly Hickman for the underlying data analysis, Adam Kuzee and Bridget Williams for fact-checking and copy-editing, the whole FRI XPT team for all their work on this project, and our external reviewers.

In 2022, the Forecasting Research Institute (FRI) ran the Existential Risk Persuasion Tournament (XPT). Over the course of 4 months, 169 forecasters, including 80 superforecasters[1] and 89 experts, forecasted on various questions related to existential and catastrophic risk. Forecasters moved through a four-stage deliberative process that was designed to incentivize them not only to make accurate predictions but also to provide persuasive rationales that boosted the predictive accuracy of others’ forecasts. Forecasters stopped updating their forecasts on 31st October 2022, and are not currently updating on an ongoing basis. FRI plans to run future iterations of the tournament, and open up the questions more broadly for other forecasters.

You can see the results from the tournament overall here.

Some of the questions in the XPT related to AI timelines. This post:

- Sets out the XPT forecasts on AI timelines, and puts them in context.

- Lays out the arguments given in the XPT for and against these forecasts.

- Offers some thoughts on what these forecasts and arguments show us about AI timelines.

TL;DR

- XPT superforecasters predict a 50% chance that advanced AI[2] exists by 2060.

- XPT superforecasters predict that very powerful AI by 2030 is very unlikely: 1% that Nick Bostrom affirms AGI by 2030, and ~3% that the compute required for TAI is attainable by 2030 (taking Ajeya Cotra’s biological anchors model as given, and using XPT superforecaster forecasts as some of the inputs).

- In the XPT postmortem survey, superforecasters predicted:

  - 13% chance of AGI by 2070, defined as “any scenario in which cheap AI systems are fully substitutable for human labor, or if AI systems power a comparably profound transformation (in economic terms or otherwise) as would be achieved in such a world.”

  - 3.8% chance of TAI by 2070, defined as “any scenario in which global real GDP during a year exceeds 115% of the highest GDP reported in any full prior year.”

- It’s unclear how accurate these forecasts will prove, particularly as superforecasters have not been evaluated on this timeframe before.[3]

The forecasts

In the tables below, we present forecasts from the following groups:

- Superforecasters: median forecasts across superforecasters in the XPT.

- All experts: median forecasts across all experts in the XPT.

  - This includes AI domain experts, general x-risk experts, and experts in other fields like nuclear and biorisk. The sample size for AI domain experts is small, so we have included all experts for reference.

- Domain experts: AI domain experts only.

| Question | Forecasters | n[4] | 2030 | 2050 | 2100 |
| --- | --- | --- | --- | --- | --- |
| Probability of Bostrom affirming AGI | Superforecasters | 27-32 | 1.0% | 20.5% | 74.8% |
| | All experts | 12-15 | 3.0% | 45.5% | 85.0% |
| | Domain experts | 5 | 9.0% | 46.0% | 87.0% |
| Compute required for TAI attainable* | [Inferred from some XPT superforecaster forecasts inputted into Cotra’s biological anchors model] | 31-32 | ~3% | ~20% | 61% |

* XPT questions can be used to infer some of the input forecasts to Ajeya Cotra’s biological anchors model, which we investigate here. Note that for this analysis Cotra’s training computation requirements distribution is being held constant.
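To make “taking Cotra’s model as given and using XPT forecasts as inputs” more concrete, here is a heavily simplified sketch of the kind of calculation involved. It holds a distribution over the training compute required for TAI fixed, projects the largest affordable training run from a few input assumptions, and reads off the probability that the requirement is met by a given year. Everything here is a hypothetical placeholder: the normal distribution over log10 FLOP, its parameters, and the spending, price-performance and algorithmic-progress inputs are not Cotra’s numbers or XPT forecasts. Cotra’s actual model is considerably more detailed.

```python
from math import erf, log10, sqrt

# Hypothetical requirement distribution (NOT Cotra's numbers): log10 of the
# training FLOP needed for TAI is assumed to be Normal(REQ_MU, REQ_SIGMA).
REQ_MU = 35.0
REQ_SIGMA = 3.0


def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """CDF of a normal distribution, computed via the error function."""
    return 0.5 * (1.0 + erf((x - mu) / (sigma * sqrt(2.0))))


def affordable_log10_flop(year: int, base_year: int = 2022,
                          spend_usd: float = 1e8, spend_growth: float = 0.2,
                          max_spend_usd: float = 1e11,
                          flop_per_usd: float = 1e17,
                          flop_per_usd_doubling_years: float = 2.5,
                          algo_doubling_years: float = 2.5) -> float:
    """log10 of the effective FLOP available to the largest training run in `year`.

    All defaults are hypothetical placeholders. Inputs like these are where
    forecasts (e.g. on spending or compute prices) would enter such a model.
    """
    t = year - base_year
    # Spending grows at a fixed annual rate until it reaches a cap.
    log_spend = min(log10(spend_usd) + t * log10(1 + spend_growth),
                    log10(max_spend_usd))
    # Hardware price-performance: FLOP per dollar doubles every few years.
    log_flop_per_usd = log10(flop_per_usd) + t * log10(2) / flop_per_usd_doubling_years
    # Algorithmic progress, treated as a multiplier on effective compute.
    log_algo_multiplier = t * log10(2) / algo_doubling_years
    return log_spend + log_flop_per_usd + log_algo_multiplier


def p_compute_attainable(year: int) -> float:
    """P(required compute <= affordable effective compute) in the given year."""
    return normal_cdf(affordable_log10_flop(year), REQ_MU, REQ_SIGMA)


for y in (2030, 2050, 2100):
    print(y, round(p_compute_attainable(y), 3))
```

Because the requirement distribution is held constant, any change in the inputs only shifts the affordable-compute curve. This is why swapping in different input forecasts can move the resulting probabilities substantially.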

| Question | Forecasters | n[5] | Year (90% confidence interval) |
| --- | --- | --- | --- |
| Date of Advanced AI[6] | Superforecasters | 32-34 | 2060 (2035-2120) |
| | All experts | 23-24 | 2046 (2029-2097) |
| | Domain experts | 9 | 2046 (2029-2100) |
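One plausible reading of the “2060 (2035-2120)” format, consistent with footnote 5’s note that forecasters separately provided 5th, 50th and 95th percentile forecasts, is that each figure is the median across forecasters of that percentile. This is an assumption about the method, not FRI’s code. The sketch below shows that aggregation with invented forecasts. It also shows how n can differ between percentiles when some forecasters leave one blank.

```python
from statistics import median
from typing import Optional

# Hypothetical individual forecasts (years), invented for illustration only.
# None marks a percentile the forecaster did not provide.
forecasts: list[dict[str, Optional[int]]] = [
    {"p5": 2030, "p50": 2055, "p95": 2110},
    {"p5": 2040, "p50": 2070, "p95": None},
    {"p5": 2035, "p50": 2060, "p95": 2150},
    {"p5": None, "p50": 2048, "p95": 2100},
]


def group_summary(rows: list[dict[str, Optional[int]]]) -> dict[str, tuple[float, int]]:
    """For each percentile, return (median across forecasters, n who answered)."""
    summary = {}
    for key in ("p5", "p50", "p95"):
        values = [r[key] for r in rows if r[key] is not None]
        summary[key] = (median(values), len(values))
    return summary


s = group_summary(forecasts)
print(f"{s['p50'][0]} ({s['p5'][0]}-{s['p95'][0]})")
```

A side effect of this approach is that the reported interval endpoints need not come from any single forecaster’s distribution.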

The forecasts in context

There are various methods of estimating AI timelines:[7]

- Surveying experts of various kinds, e.g. Zhang et al., 2022, Grace et al., 2017, Gruetzemacher et al., 2018

- Doing in-depth investigations, e.g. Cotra 2020

- Making forecasts, e.g. Metaculus on weak AGI and strong AGI, Samotsvety on AGI

The XPT forecasts are unusual among published forecasts in that:

- The forecasts were incentivized: for long-run questions, XPT used ‘reciprocal scoring’ rules to incentivize accurate forecasts (see here for details).

- Forecasts were solicited from superforecasters as well as experts.

- Forecasters were asked to write detailed rationales for their forecasts, and good rationales were incentivized through prizes.

- Forecasters worked on questions in a four-stage deliberative process in which they refined their individual forecasts and their rationales through collaboration with teams of other forecasters.

- The degree of convergence of beliefs over the course of the tournament is documented:

  - For “Date of Advanced AI,” the standard deviation of forecasters’ median estimates decreased by 38.44% over the course of the tournament for superforecasters and increased by 34.87% for domain experts.

  - For “Nick Bostrom Affirms Existence of AGI,” the standard deviation of forecasters’ median estimates decreased by 42.29% over the course of the tournament for superforecasters and increased by 206.99% for domain experts.
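The convergence figures above are relative changes in the spread of forecasters’ estimates between the start and end of the tournament. Here is a minimal sketch of that arithmetic. It assumes the spread is the sample standard deviation of individual estimates at each stage; whether FRI used the sample or population standard deviation is not stated here. The numbers are invented.

```python
from statistics import stdev


def pct_change_in_sd(initial: list[float], final: list[float]) -> float:
    """Percentage change in the sample standard deviation of forecasters'
    estimates between two stages. Negative values indicate convergence."""
    sd_initial, sd_final = stdev(initial), stdev(final)
    return 100.0 * (sd_final - sd_initial) / sd_initial


# Hypothetical estimates from four forecasters, invented for illustration.
start = [0.05, 0.20, 0.40, 0.60]
end = [0.15, 0.20, 0.30, 0.45]
print(round(pct_change_in_sd(start, end), 2))
```

On this measure, an increase of 206.99% means the final standard deviation was roughly 3.07 times the initial one.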

Should we expect XPT forecasts to be more or less accurate than previous estimates? This is unclear, but some considerations are:

- Relative to many previous forecasts, XPT forecasters may have spent more time thinking and writing about their forecasts, and were incentivized to be accurate.

- XPT (and other) superforecasters have a track record of unusually accurate forecasts (primarily on short-range geopolitical and economic questions), and may be less subject to biases like groupthink than domain experts are.

- On the other hand, there is limited evidence that superforecasters’ superior accuracy extends to technical domains like AI, long-range forecasts,[3] or out-of-distribution events.

Note that in the XPT, superforecasters and experts disagreed on most questions, with superforecasters generally giving lower estimates than experts. Their disagreement was greatest on AI-related questions.

Other timeline forecasts

| Year | XPT superforecasters: Bostrom affirms AGI (as of 31st Oct 2022) | XPT superforecasters: Advanced AI (as of 31st Oct 2022) | Compute required for TAI[8] attainable [inferred from some XPT superforecaster forecasts inputted into biological anchors model] | Other relevant forecasts |
| --- | --- | --- | --- | --- |
| 2030 | ~1% | - | ~3% | 2030: Cotra compute required for TAI attainable (2020): ~8%; 2028: Metaculus weak AGI 50%, as of 31st Oct 2022[10]; 2030: Samotsvety AGI 31%, as of 21st Jan 2023[11]; 2040: Metaculus strong AGI 50%, as of 31st Oct 2022[12]; 2040: Cotra 2022: 50% TAI; 2043: |
| 2050 | ~21% | - | ~20% | 2050: Cotra compute required for TAI attainable (2020): ~46%; 2050: Cotra 2020: 50% TAI; 2050: Samotsvety AGI 63%, as of 21st Jan 2023[13] |
| 2060 | - | 50% | - | 2060: Zhang et al, 2019: 50% ~HLMI*; 2061: Grace et al, 2017: 50% ~HLMI*; 2068: Gruetzemacher et al, 2018: 50% ~HLMI* |
| 2100 | ~75% | - | ~61% | 2100: Cotra compute required for TAI attainable (2020): ~78%; 2100: Samotsvety AGI 81%, as of 21st Jan 2023[14]; 2100: |

* ‘HLMI’ refers to ‘human-level machine intelligence.’

Some notes on how different definitions of advanced AI relate:

- According to Cotra, her model of the probability that the compute required for TAI is attainable:

  - Overstates the probability of TAI in 2030.

  - Understates the probability of TAI in 2100.

  - Roughly matches the probability of TAI in 2050.[15]

- Human-level machine intelligence (HLMI) requires that AI systems outperform humans on all tasks; TAI could be achieved by AI systems which complement humans on some tasks.[16]

- The criteria for the XPT advanced AI question are stronger than those for the Metaculus weak AGI question, and weaker than those for the Metaculus strong AGI question (the XPT question contains some but not all of the criteria for the Metaculus strong AGI question).

- Taking XPT superforecaster forecasts at face value implies that XPT superforecasters think advanced AI is a lower bar than Bostrom’s AGI or TAI. Judging from XPT superforecaster forecasts alone, Bostrom’s AGI and TAI appear to be similarly high bars.

Cross-referencing with XPT’s postmortem survey

Forecasters in the XPT were asked to fill in a postmortem survey at the end of the tournament. Some of the questions in this survey relate to AGI/TAI timelines, so we can use them as a cross-reference. Unlike the XPT, the postmortem survey was not incentivized and there was no collaboration between forecasters. The survey was administered in the months immediately following the conclusion of the XPT, from November 2022 to February 2023. It may be worth noting that the influential LLM ChatGPT was released on November 30, 2022.

Note that only a subset of XPT forecasters responded to the postmortem survey, so in the table below we compare the postmortem results with the XPT forecasts of only the subset of XPT forecasters who responded to the postmortem survey, for comparability. (This is why the XPT medians in this table differ from medians quoted in the rest of the post, which are based on all XPT forecasts.)

| Question | Forecasters | n[17] | 2030 | 2050 | 2070 | 2100 |
| --- | --- | --- | --- | --- | --- | --- |
| Probability of Bostrom affirming AGI | Superforecasters - XPT subset | 26-31 | 1% | 21% | - | 74.9% |
| | All experts - XPT subset | 12-14 | 3% | 45.5% | - | 86.0% |
| | Domain experts - XPT subset | 5 | 9% | 46.0% | - | 87.0% |
| Probability of AGI* by 2070 | Superforecasters - postmortem | 78 | - | - | 13% | - |
| | All experts - postmortem | 59 | - | - | 25% | - |
| | Domain experts - postmortem | 23 | - | - | 20% | - |
| Probability of TAI** by 2070 | Superforecasters - postmortem | 78 | - | - | 3.8% | - |
| | All experts - postmortem | 58 | - | - | 16% | - |
| | Domain experts - postmortem | 23 | - | - | 15% | - |
| | [Inferred using superforecaster XPT subset forecasts in biological anchors model] | 29-31 | ~3% | ~20% | ~35% | ~60% |
| Probability of >15% GWP growth by 2100*** | Superforecasters - XPT subset | 34 | - | - | - | 2.75% |
| | Superforecasters - postmortem | 78 | - | - | - | 3% |
| | All experts - XPT subset | 10 | - | - | - | 16.9% |
| | All experts - postmortem | 58 | - | - | - | 10% |
| | Domain experts - XPT subset | 5 | - | - | - | 25% |
| | Domain experts - postmortem | 23 | - | - | - | 5% |

* “Artificial general intelligence is defined here as any scenario in which cheap AI systems are fully substitutable for human labor, or if AI systems power a comparably profound transformation (in economic terms or otherwise) as would be achieved in such a world.”

** “Transformative AI is defined here as any scenario in which global real GDP during a year exceeds 115% of the highest GDP reported in any full prior year.”

*** The full question is “By 2100, will the global real GDP in a year ever exceed 115% of the highest GDP reported in any full prior year?” This question was asked in the XPT as well as the postmortem survey, and corresponds to the definition of TAI used in the postmortem survey.
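The TAI operationalization can be stated as a simple check over an annual series: global real GDP in some year must exceed 115% of the highest GDP in any full prior year. Here is a minimal sketch, assuming a clean mapping from year to real GDP. The function name and the series are hypothetical, and real resolution would depend on data sources and revisions not modeled here. Note that the comparison is against the highest prior year, not the previous year, so a sharp rebound after a downturn does not count unless it clears the earlier peak by 15%.

```python
from typing import Optional


def first_tai_year(gdp_by_year: dict[int, float]) -> Optional[int]:
    """Return the first year whose real GDP exceeds 115% of the highest GDP
    in any earlier year of the series, or None if no year qualifies.
    The first year has no prior year to compare against, so it never qualifies."""
    best_prior: Optional[float] = None
    for year in sorted(gdp_by_year):
        gdp = gdp_by_year[year]
        if best_prior is not None and gdp > 1.15 * best_prior:
            return year
        best_prior = gdp if best_prior is None else max(best_prior, gdp)
    return None


# Invented series: 2032 grows 22.5% on 2031 but stays below 115% of the 2030 peak.
series = {2030: 100.0, 2031: 80.0, 2032: 98.0, 2033: 112.0, 2034: 130.0}
print(first_tai_year(series))
```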

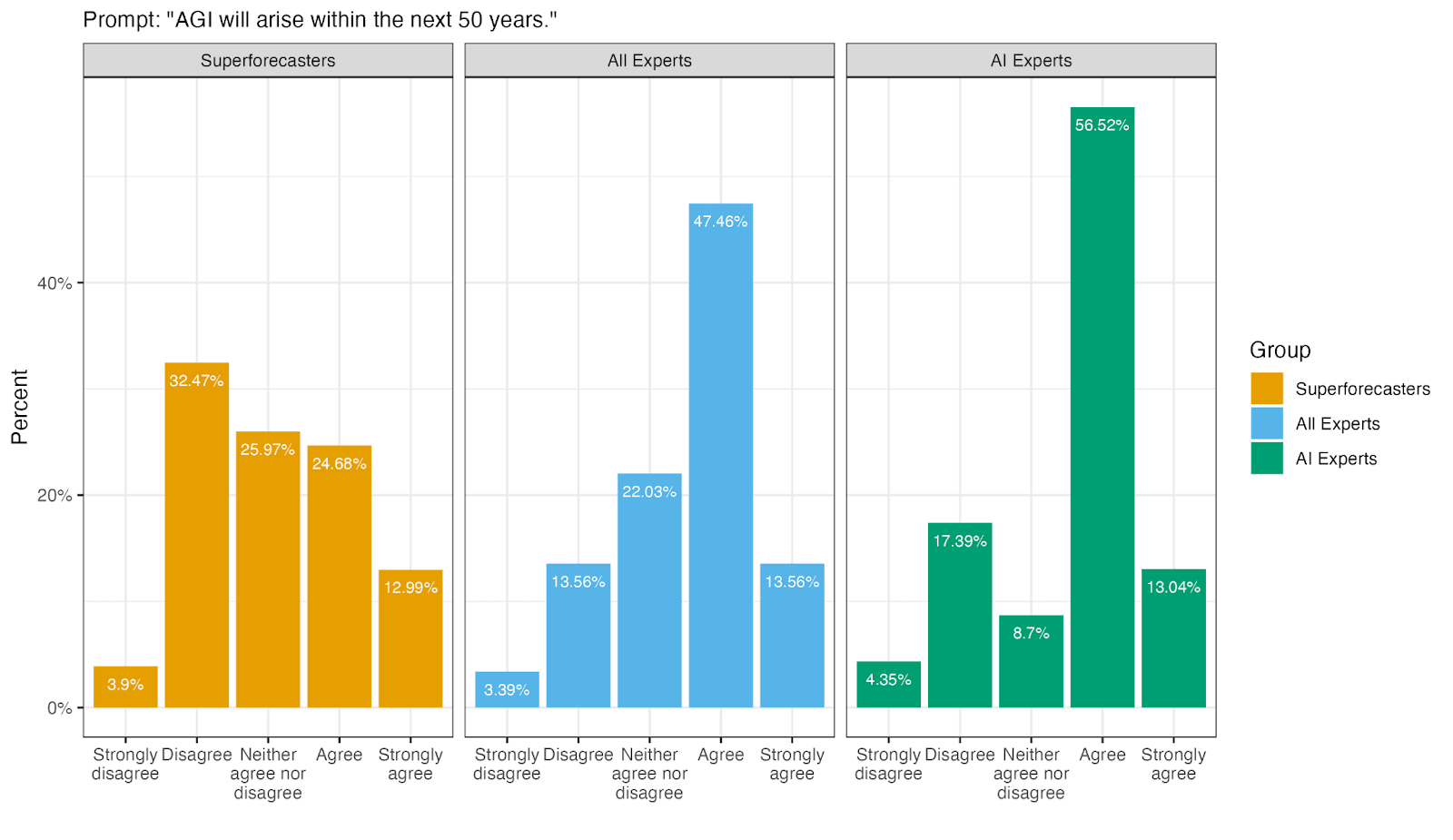

There was also a strongly disagree - strongly agree scale question in the postmortem survey, for the statement “AGI will arise within the next 50 years.” (This question was asked in 2022, so ‘within the next 50 years’ corresponds to ‘by the end of 2072’.) The results were:

From these postmortem survey results, we can see that:

- Forecasters' views appear inconsistent.

  - ~26% of superforecasters put the probability of AGI by 2070 at 50% or more, but ~38% agree or strongly agree that AGI will arise by the end of 2072. ~36% of experts put the probability of AGI by 2070 at 50% or more, but ~61% agree or strongly agree that AGI will arise by the end of 2072.

  - Superforecasters predict a 3% chance of >15% growth by 2100,[18] but a 3.75% chance of TAI (defined as >15% growth) by 2070. Since TAI by 2070 under this definition also means >15% growth by 2100, the 2070 probability should be no higher than the 2100 probability.

- Forecasters gave longer timelines when asked directly to forecast AGI/TAI than when their views were elicited more indirectly.

  - Forecasters’ direct forecasts on AGI are much less aggressive than their XPT forecasts on Bostrom affirming AGI.

  - Forecasters’ direct forecasts on TAI are much less aggressive than the forecasts inferred by using XPT forecasts as inputs to Cotra’s biological anchors model.

    - This is to be expected: the model’s outputs depend on many other parameters, so outputs derived from some XPT forecasts cannot be taken to represent XPT forecasters’ views. See here for details of our analysis.

- Forecasters view AGI/TAI as much further away than ‘advanced AI’.

  - The subset of superforecasters who responded to the postmortem survey gave a median of 2060 for advanced AI in the XPT. Their postmortem survey results imply that both AGI and, especially, TAI will arrive substantially later than 2070.

  - The same is true of experts, in a less extreme form. (Both the subset of all experts and the subset of domain experts who responded to the postmortem survey gave a median of 2046 for advanced AI in the XPT.)

- Forecasters view TAI as a much higher bar than AGI, as operationalized in the postmortem survey.

  - There are no XPT forecasts on TAI and AGI directly, so we can’t directly compare these postmortem results with the XPT forecasts. XPT forecasts on the probability of Bostrom affirming AGI are in the same ballpark as the outputs of Cotra’s biological anchors model using XPT forecasts as inputs, but these operationalizations are sufficiently different from those in the postmortem survey that it’s hard to draw firm conclusions.
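One of the inconsistencies above is mechanical. In the postmortem survey, TAI is defined as >15% growth, so “TAI by 2070” implies “>15% growth by 2100”. For the same event, a cumulative probability can never fall as the deadline gets later. Here is a minimal sketch of that coherence check, applied to the superforecaster postmortem medians quoted above. The function name is hypothetical. If every respondent answered both questions coherently, the median for the earlier deadline could not exceed the median for the later one, because pointwise dominance carries over to medians. So, assuming the same respondents answered both questions, a median-level violation implies that at least some individual forecasts were inconsistent.

```python
def coherence_violations(cumulative: dict[int, float]) -> list[tuple[int, int]]:
    """Return (earlier_year, later_year) pairs where P(event by earlier year)
    exceeds P(event by later year), which is incoherent for a cumulative forecast."""
    years = sorted(cumulative)
    return [(a, b) for i, a in enumerate(years) for b in years[i + 1:]
            if cumulative[a] > cumulative[b]]


# Superforecaster postmortem medians: TAI (= >15% growth) by 2070, >15% growth by 2100.
superforecasters = {2070: 0.0375, 2100: 0.03}
print(coherence_violations(superforecasters))  # [(2070, 2100)]
```

By contrast, the XPT-subset superforecaster forecasts on Bostrom affirming AGI (1%, 21%, 74.9% for 2030, 2050, 2100) pass this check.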

The arguments made by XPT forecasters

XPT forecasters were grouped into teams. Each team was asked to write up a ‘rationale’ summarizing the main arguments made during team discussions about team members’ forecasts. The following summarizes the main arguments made across all teams in the XPT. Footnotes contain direct quotes from team rationales.

See also the arguments made on questions which relate to the inputs to Cotra’s biological anchors model here.

For shorter timelines

- Recent progress is impressive.[21]

  - Counterargument: this anchors too much on the recent past and not enough on the longer view.[22]

- Scaling laws may hold.[23]

- External factors:

  - Wars may lead to AI arms races.[24]

  - Advances in quantum computing or other novel technology may speed up AI development.[25]

- Opinions of others:

  - Recent progress has been faster than predicted.[26]

  - Other forecasts have moved forward lately.[27]

  - People who’ve spent a lot of time thinking about timelines tend to have shorter timelines.[28]

  - Teams cited Cotra’s biological anchors report[29] and Karnofsky’s forecasts.[30]

For longer timelines

- Scaling laws may not hold, such that new breakthroughs are needed.[31]

- External factors:

  - We may be in a simulation in which it is impossible for us to build AGI.[43]

- Opinions of others:

What do XPT forecasts tell us about AI timelines?

This is unclear:

- Which conclusions to draw from the XPT forecasts depends substantially on your prior views on AI timelines, and on whose forecasts on these topics you expect to be most accurate.

- There are many uncertainties around how accurate to expect these forecasts to be:

  - Forecasters' views appear inconsistent.

  - There is limited evidence on how accurate long-range forecasts are.[47]

  - There is limited evidence on whether superforecasters or experts are likely to be more accurate in this context.

That said, there are some things to note about the XPT results on AI timelines:

- These are the first incentivized forecasts from superforecasters on AI timelines.

- XPT superforecasters have longer timelines than Metaculus.

  - At the time the XPT closed, the Metaculus community’s 50% dates were 2028 for weak AGI and 2040 for strong AGI (the XPT advanced AI criteria sit between the two); XPT superforecasters’ median for advanced AI was 2060.

- Forecasts from XPT superforecasters imply longer timelines than Cotra’s and than those of surveyed ML experts, under certain assumptions.

  - Using median XPT superforecaster forecasts for some of the inputs to Cotra’s biological anchors model implies:

    - ~20% chance that the compute required to train a transformative model is attainable by 2050, compared with Cotra’s ~46%.

    - Median TAI timelines of ~2080, compared with Cotra’s most recent median of 2040.

  - Assuming that human-level machine intelligence is a higher bar than TAI, such that HLMI timelines will be longer than TAI timelines,[48] XPT forecasts imply median HLMI timelines of >2080. Various surveys of ML experts have median HLMI timelines of between 2060 and 2068.[49]


- Explicit superforecaster forecasts on TAI and AGI from the postmortem survey give even longer timelines:

  - 13% chance of AGI by 2070.

  - 3.8% chance of TAI by 2070.

- XPT superforecasters put very little probability on very powerful AI by 2030:

  - 1% that Bostrom affirms AGI by 2030.

  - ~3% that the compute required for TAI is attainable by 2030, when XPT forecasts are used as some of the inputs to Cotra’s model.
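The step from “HLMI is a higher bar than TAI” to “median HLMI timelines of >2080” is an ordering argument. If HLMI can only arrive once TAI has, then P(HLMI by year t) ≤ P(TAI by year t) for every t. The year at which the HLMI curve reaches 50% therefore cannot come before the year at which the TAI curve does. Here is a minimal sketch of that argument. Both curves are invented and are not XPT data, and linear interpolation between a few points is an assumed, crude way to locate a median.

```python
from typing import Optional


def median_year(cdf: dict[int, float]) -> Optional[float]:
    """Year at which a cumulative forecast first reaches 50%, using linear
    interpolation between (year, probability) points. None if never reached."""
    points = sorted(cdf.items())
    if points[0][1] >= 0.5:
        return float(points[0][0])
    for (y0, p0), (y1, p1) in zip(points, points[1:]):
        if p0 < 0.5 <= p1:
            return y0 + (y1 - y0) * (0.5 - p0) / (p1 - p0)
    return None


# Hypothetical cumulative forecasts, invented for illustration (not XPT data).
tai = {2030: 0.05, 2050: 0.25, 2075: 0.50, 2100: 0.70}
hlmi = {2030: 0.02, 2050: 0.15, 2075: 0.40, 2100: 0.60}

# If HLMI implies TAI, P(HLMI by t) can never exceed P(TAI by t).
assert all(hlmi[y] <= tai[y] for y in tai)
print(median_year(tai), median_year(hlmi))
```

Because the HLMI curve lies on or below the TAI curve at every point, its interpolated median can only be the same year or later. The argument does not say by how much later.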

- ^

By superforecasters, we mean seasoned forecasters with a track record of predictive accuracy on shorter-run questions in forecasting tournaments held by the Good Judgment Project.

- ^

Full question text (with details on criteria here):

When will the first unified AI system meeting all of the following criteria be trained, tested, and publicly known of?

1. Able to reliably pass a 2-hour adversarial Turing test.

2. High competency at answering questions across diverse fields of expertise.

3. High competency on interview-level problems in the APPS benchmark.

4. Able to learn the classic Atari game “Montezuma’s revenge” in the equivalent of 100 hours or less of real-time play.

- ^

See here for a discussion of the feasibility of long-range forecasting.

- ^

Here n is a range because different numbers of forecasters provided forecasts for different resolution years and different questions.

- ^

Here n is a range because different numbers of forecasters provided 5th, 50th and 95th percentile forecasts.

- ^

Full question text (with details on criteria here):

When will the first unified AI system meeting all of the following criteria be trained, tested, and publicly known of?

- Able to reliably pass a 2-hour adversarial Turing test.

- High competency at answering questions across diverse fields of expertise.

- High competency on interview-level problems in the APPS benchmark.

- Able to learn the classic Atari game “Montezuma’s revenge” in the equivalent of 100 hours or less of real-time play.

- ^

For a recent overview of AI timelines estimates, see here.

- ^

Transformative AI: “‘software’ -- a computer program or collection of computer programs -- that has at least as profound an impact on the world’s trajectory as the Industrial Revolution did”. Pp. 1-2

- ^

“[T]he date at which AI models will achieve human level performance on a transformative task.” https://epochai.org/blog/direct-approach-interactive-model

- ^

The date when the XPT forecasters stopped updating their forecasts.

- ^

“The moment that a system capable of passing the adversarial Turing test against a top-5%[1] human who has access to experts on various topics is developed.” See here for more details.

- ^

The date when the XPT forecasters stopped updating their forecasts.

- ^

“The moment that a system capable of passing the adversarial Turing test against a top-5%[1] human who has access to experts on various topics is developed.” See here for more details.

- ^

“The moment that a system capable of passing the adversarial Turing test against a top-5%[1] human who has access to experts on various topics is developed.” See here for more details.

- ^

“How does the probability distribution output by this model relate to TAI timelines? In the very short-term (e.g. 2025), I’d expect this model to overestimate the probability of TAI because it feels especially likely that other elements such as datasets or robustness testing or regulatory compliance will be a bottleneck even if the raw compute is technically affordable, given that a few years is not a lot of time to build up key infrastructure. In the long-term (e.g. 2075), I’d expect it to underestimate the probability of TAI, because it feels especially likely that we would have found an entirely different path to TAI by then. In the medium-term, e.g. 10-50 years from now, I feel unsure which of these two effects would dominate, so I am inclined to use the output of this model as a rough estimate of TAI timelines within that range.” p. 18

- ^

In her biological anchors report, Cotra notes that “The main forecasting target researchers were asked about in [the Grace et al. 2017] survey was “high-level machine intelligence”, defined as the time when “when unaided machines can accomplish every task better and more cheaply than human workers.” This is a stronger condition than transformative AI, which can be achieved by machines which merely complement human workers.” p. 39

- ^

N is sometimes a range here because different numbers of forecasters provided forecasts for different resolution years.

- ^

The probability of >15% growth by 2100 was asked about in both the main component of the XPT and the postmortem survey. The results here are from the postmortem survey. The superforecaster median estimate for this question in the main component of the XPT was 2.75% (for all superforecaster participants and the subset that completed the postmortem survey).

- ^

The probability of >15% growth by 2100 was asked about in both the main component of the XPT and the postmortem survey. The results here are from the postmortem survey. The experts’ median estimate for this question in the main component of the XPT was 19% for all expert participants and 16.9% for the subset that completed the postmortem survey.

- ^

The probability of >15% growth by 2100 was asked about in both the main component of the XPT and the postmortem survey. The results here are from the postmortem survey. The experts’ median estimate for this question in the main component of the XPT was 19% for all expert participants and 16.9% for the subset that completed the postmortem survey.

- ^

Question 3: 339, “Forecasters assigning higher probabilities to AI catastrophic risk highlight the rapid development of AI in the past decade(s).” 337, “some forecasters focused more on the rate of improvement in data processing over the previous 78 years than AGI and posit that, if we even achieve a fraction of this in future development, we would be at far higher levels of processing power in just a couple decades.”

Question 4: 339, “AI research and development has been massively successful over the past several decades, and there are no clear signs of it slowing down anytime soon.”

Question 44: 344, “Justifications for the possibility of near AGI include the impressive state of the art in language models.” 336, “General rapid progress in all fields of computing and AI, like bigger models, more complex algorithms, faster hardware.”

Question 51: 341, “In the last five years, the field of AI has made major progress in almost all its standard sub-areas, including vision, speech recognition and generation, natural language processing (understanding and generation), image and video generation, multi-agent systems, planning, decision-making, and integration of vision and motor control for robotics. In addition, breakthrough applications emerged in a variety of domains including games, medical diagnosis, logistics systems, autonomous driving, language translation, and interactive personal assistance.

AI progress has been quick in specific domains.

Language systems are developing the capability to learn with increasing resources and model parameters. Neural network models such as GPT learn about how words are used in context, and can generate human-like text, including poems and fiction.

Image processing technology has also made huge progress for self-driving cars and facial recognition, and even generating realistic images.

Agile robots are being developed using deep-learning and improved vision.

Tools now exist for medical diagnosis.

Deep-learning models partially automate lending decisions and credit scoring.” See also 336, “There's no doubt among forecasters that Machine Learning and Artificial Intelligence have developed tremendously and will continue to do so in the foreseeable future.”

- ^

Question 44: 341, “A few forecasters have very short AI timelines (within a decade on their median forecast) based on recent trends in AI research and the impressive models released in the last year or two. Other team members have longer timelines. The main difference seems to be projecting from very recent trends compared to taking the long view. In the recent trends projection forecasters are expecting significant growth in AI development based on some of the impressive models released in the past year or two, while the opposite long view notes that the concept of AI has been around for ~75 years with many optimistic predictions in that time period that failed to account for potential challenges.”

- ^

Question 3: 336, “the probabilities of continuing exponential growth in computing power over the next century as things like quantum computers are developed, and the inherent uncertainty with exponential growth curves in new technologies.”

Question 44: 343, “The scaling hypothesis says that no further theoretical breakthroughs are needed to build AGI. It has recently gained in popularity, making short timelines appear more credible.”

Question 51: 336, “The majority of our team might drastically underestimate the near-future advances of AI. It is likely that there are architectural/algorithmic bottlenecks that cannot (efficiently/practically) be overcome by compute and data scale, but that leaves a fair amount of probability for the alternative. Not all bottlenecks need to be overcome for this question to resolve with a 'yes' somewhere this century.”

- ^

Question 51: 339, “wars might have erupted halting progress - but this reasoning could also be used for accelerating development (arms race dynamics)”.

- ^

Question 4: 336, “The most plausible forecasts on the higher end of our team related to the probabilities of continuing exponential growth in computing power over the next century as things like quantum computers are developed, and the inherent uncertainty with exponential growth curves in new technologies.”

Question 45: 336, “Advances in quantum computing might radically shift the computational power available for training.”

Question 51: 341, “Also, "quantum computing made significant inroads in 2020, including the Jiuzhang computer’s achievement of quantum supremacy. This carries significance for AI, since quantum computing has the potential to supercharge AI applications".”

- ^

Question 3: 343, “Most experts expect AGI within the next 1-3 decades, and current progress in domain-level AI is often ahead of expert predictions”; though also “Domain-specific AI has been progressing rapidly - much more rapidly than many expert predictions. However, domain-specific AI is not the same as AGI.” 340, “Perhaps the strongest argument for why the trend of Sevilla et al. could be expected to continue to 2030 and beyond is some discontinuity in the cost of AI training compute precipitated by a novel technology such as optical neural networks.”

Question 44: 343, “Recent track record of ML research of reaching milestones faster than expected

Development in advanced computer algorithms and/or AI research has a strong history of defining what seem to be impossible tasks, and then achieving those tasks within a decade or two. Something similar may well happen with this prompt, i.e. researchers may build a system capable of achieving these goals, which are broader than ones already reached, as early as in the late 2020s or early 2030s. Significant gains in AI research have been accelerating recently, so these estimates may be low if we're on the cusp of exponential growth in AI capabilities.” See also 341, “Another argument for shorter AI timelines is the recent trend in AI development. If improvements to language models increase at the rate they have recently, such as between GPT-2, GPT-3, and the rumored upcoming advances of GPT-4, then we might expect that the Turing test requirement could be accomplished within a decade. Additionally, recently developed generalist models such as Gato, along with other new models, have surprised many observers, including the Metaculus community. The Metaculus "Weak AGI" question median has moved 6 years closer this year.”

Question 51: 341, “Both the text creating models and the image generating models are achieving results that are more impressive than most had imagined at this stage.” Incorrectly tagged as an argument for lower forecasts.

- ^

Question 51: 341, “Ajeya Cotra, who wrote the biological anchors report from OpenPhil, recently published a follow up post saying that she had moved her timelines forward, to closer to now.

The forecasts for AGI on Metaculus have all moved nearer to our time recently as we have seen the string of astounding AI models, including GPT-3, LaMDA, Dall-E 2 and Stable Diffusion…Metaculus has human parity by 2040 at 60% and AGI by 2042. The latter has dropped by 15 years in recent months with the advent of all the recent new models…AI Impacts survey on timelines has a median with HLMI (Human Level Machine Intelligence) in 37 years, i.e. 2059. That timeline has become about eight years shorter in the six years since 2016, when the aggregate prediction put 50% probability at 2061, i.e. 45 years out.”

- ^

Question 44: 341, “One of the stronger arguments for forecasts on the lower end are the forecasts from those with expertise who have made predictions, such as Ajeya Cotra and Ray Kurzweil, as well as the Metaculus community prediction. Cotra and Kurzweil have spent a lot of time thinking and studying this topic and come to those conclusions, and the Metaculus community has a solid track record of accuracy. In the absence of strong arguments to the contrary it may be wise to defer somewhat to those who have put a lot of thought and research into the question.”

- ^

Question 51: 341, “Ajeya Cotra, who wrote the biological anchors report from OpenPhil, recently published a follow up post saying that she had moved her timelines forward, to closer to now.”

Question 44: 341, “One of the stronger arguments for forecasts on the lower end are the forecasts from those with expertise who have made predictions, such as Ajeya Cotra and Ray Kurzweil, as well as the Metaculus community prediction. Cotra and Kurzweil have spent a lot of time thinking and studying this topic and come to those conclusions, and the Metaculus community has a solid track record of accuracy. In the absence of strong arguments to the contrary it may be wise to defer somewhat to those who have put a lot of thought and research into the question.”

- ^

Question 51: 341, “Karnofsky says >10% by 2036, ~50% by 2060, 67% by 2100. These are very thoughtful numbers, as his summary uses several different approaches. He has experts as 20% by 2036, 50% by 2060 and 70% by 2100, biological anchors >10% by 2036, ~50% by 2055, 80% by 2100, and semi-informative priors at 8% by 2036, 13% by 2060, 20% by 2100”. Incorrectly tagged as an argument for lower forecasts.

- ^

Question 51: 336, “Not everyone agrees that the 'computational' method (adding hardware, refining algorithms, improving AI models) will in itself be enough to create AGI or something sufficiently similar. They expect it to be a lot more complicated (though not impossible). In that case, it will require a lot more research, and not only in the field of computing.” See also 340, “The current path does not necessarily lead to AGI by just adding more computational power.”

Question 3: 341, “there are many experts arguing that we will not get to AGI with current methods (scaling up deep learning models), but rather some other fundamental breakthrough is necessary.” See also 342, “While recent AI progress has been rapid, some experts argue that current paradigms (deep learning in general and transformers in particular) have fundamental limitations that cannot be solved with scaling compute or data or through relatively easy algorithmic improvements.” See also 337, “The current AI research is a dead end for AGI. Something better than deep learning will be needed.” See also 341, “Some team members think that the development of AI requires a greater understanding of human mental processes and greater advances in mapping these functions.”

Question 4: 336, “Not everyone agrees that the 'computational' method (adding hardware, refining algorithms, improving AI models) will in itself be enough to create AGI and expect it to be a lot more complicated (though not impossible). In that case, it will require a lot more research, and not only in the field of computing.” See also 341, “An argument for a lower forecast is that a catastrophe at this magnitude would likely only occur if we have AGI rather than say today's level AI, and there are many experts arguing that we will not get to AGI with current methods (scaling up deep learning models), but rather some other fundamental breakthrough is necessary.” See also 342, “While recent AI progress has been rapid, some experts argue that current paradigms (deep learning in general and transformers in particular) have fundamental limitations that cannot be solved with scaling compute or data or through relatively easy algorithmic improvements.” See also 340, “Achieving Strong or General AI will require at least one and probably a few paradigm-shifts in this and related fields. Predicting when a scientific breakthrough will occur is extremely difficult.”

Question 44: 338, “The current pace of progress may falter if this kind of general task solving can not be achieved by just continuing to upscale existing systems.” See also 344, “Justifications for longer timelines include the possibility of unforeseen issues with the development of the technology, events that could interrupt human progress, and the potential that this achievement will require significant algorithmic breakthroughs.”

- ^

Question 44: 344, “Justifications for longer timelines include the possibility of unforeseen issues with the development of the technology, events that could interrupt human progress, and the potential that this achievement will require significant algorithmic breakthroughs.” See also 343, “The biological anchors method assumes that no major further bottlenecks are lurking”; “Previous ML paradigms expanded capabilities dramatically with the advent of new tools/paradigms, only to run up against the limits of what that paradigm was capable of accomplishing. Simple trendline extension masks this effect. So long as we are in the exponential growth phase of a sigmoid curve, it's impossible to determine where the inflection point will be.”

Question 51: 343, “Perhaps X-Risks, slow scientific progress, or the unknown theoretical impossibilities in the way of AGI creation or Nick Bostrom Convincing might prove such an event highly unlikely over the long term.”

- ^

Question 44: 341, “Due to the challenging level of individual tasks, forecasters also expect generalizing to being able to accomplish multiple tasks at the required capability will be very difficult”; “Some teammates expect that while AI may advance in individual tasks, generalizing may be much more difficult than expected. So far most advancements in AI models have been based on using large amounts of training data with large amounts of parameters, but it may require more computing power and more data than will exist based on current trends in order to create powerful models that are both talented in one specific field but also generalize to other fields.” See also 343, “Narayanan and Kapoor (Why are deep learning technologists so overconfident? (substack.com)) are on a mission to convince people that current expectations are often too aggressive and that even the best contemporary systems are still highly specialized. An adversarial Turing test would presumably detect failure of generality.” See also 336, “It's four different capabilities in one single system required for resolution as "yes". With probably nobody going for having exactly these four in the portfolio (and nothing else), we might need to wait for a superior system, that can do much more, with these 4 requirements only as a byproduct. If that is the case, it seems plausible that proving it can win a game of Montezuma's revenge might not be top priority.” See also 338, “Some of the criteria seemed more likely to met sooner, in particular 2 and 3. However, the cumulative resolution of all the criteria is much more challenging and rendered the question more difficult. Another possible source of uncertainty is whether AI researchers in the future will choose to work on those problems due to funding or academic trends. For example, if AI researchers choose another game instead of Montezuma's revenge then this question may not resolve.”

- ^

Question 44: 336, “Deepmind's Gato has already shown that unified AI on diverse tasks is possible.”

- ^

Question 51: 341, “For most problems there won't be training data, most human behavior is not yet digitized.” See also 340, “The tasks it completes are great, but only conducted in areas where there are large data sets of reliable information and correlations can be drawn.”

- ^

Question 46 on compute spending: 341, “The AI impacts note also states that the trend would only be sustainable for a few more years. 5-6 years from 2018, i.e. 2023-24, we would be at $200bn, where we are already past the total budgets for even the biggest companies.”

Question 45 on maximum compute: 338, “Some predictors, instead of modeling compute spending and compute price separately, directly projected compute doubling times into the future. This implicitly assumes the fast spending increase from the recent past will continue. However, such an increase would become increasingly unsustainable over time.”

- ^

Question 51: 339, “wars might have erupted halting progress - but this reasoning could also be used for accelerating development (arms race dynamics)”.

- ^

Question 45: 336, “Possible legislation or pushback against AI if fears mount about possible threat to humanity.”

Question 44: 344, “The possibility of limiting the intentionally slowing down progress is also taken into consideration as a factor for a later emergence”.

- ^

Question 44: 338, “The high end forecast is that the goal is never achieved. The justification is that at long dates the likelihood of human extinction is above 5% so advanced AI development is not achieved”. See also 343, “An existential catastrophe might cut short the advance of science. Theoretically, an AGI could cause radical changes to happen (including, but not limited to, the extinction of humanity) that make it impossible for the tests mentioned in the prompt to be performed.”

Question 51: 343, “Perhaps X-Risks, slow scientific progress, or the unknown theoretical impossibilities in the way of AGI creation or Nick Bostrom Convincing might prove such an event highly unlikely over the long term.” See also 344, “The most likely way for this to not resolve positively by 2050 or later is if there is some non-AI catastrophe of enough magnitude that it set backs humankind by years or decades.” Given as an argument for forecasts of 55% (2030), 100% (2050) and 100% (2100).

- ^

Question 51: 343, “Perhaps X-Risks, slow scientific progress, or the unknown theoretical impossibilities in the way of AGI creation or Nick Bostrom Convincing might prove such an event highly unlikely over the long term.”

- ^

Question 3: 341, “Both evolutionary theory and the history of attacks on computer systems imply that the development of AGI will be slowed and perhaps at times reversed due to its many vulnerabilities, including ones novel to AI.” “Those almost certain to someday attack AI and especially AGI systems include nation states, protesters (hackers, Butlerian Jihad?), crypto miners hungry for FLOPS, and indeed criminals of all stripes. We even could see AGI systems attacking each other.” “These unique vulnerabilities include:

poisoning the indescribably vast data inputs required; already demonstrated with image classification, reinforcement learning, speech recognition, and natural language processing.

war or sabotage in the case of an AGI located in a server farm

latency of self-defense detection and remediation operations if distributed (cloud etc.)”

Question 4: 341. See above.

- ^

Question 51: 336, “An -indirect- argument from Bostrom himself: We might be living in a simulation, possibly set up and directed by AI. That system might want to block us from achieving 'full' AI.”

- ^

Question 4: 337, “The optimists tend to be less certain that AI will develop as quickly as the pessimists think likely and indeed question if it will reach the AGI stage at all. They point out that AI development has missed forecast attainment points before”. See also 336, “There have been previous bold claims on impending AGI (Kurzweil for example) that didn't pan out.” See also 340, “The prediction track record of AI experts and enthusiasts have erred on the side of extreme optimism and should be taken with a grain of salt, as should all expert forecasts.” See also 342, “given the extreme uncertainty in the field and lack of real experts, we should put less weight on those who argue for AGI happening sooner. Relatedly, Chris Fong and SnapDragon argue that we should not put large weight on the current views of Eliezer Yudkowsky, arguing that he is extremely confident, makes unsubstantiated claims and has a track record of incorrect predictions.”

Question 51: 337, “Yet, taking into account that more often than not predictions of the future tend to be wildly optimistic, the probabilities at the lower end are probably worth taking into consideration.” Given as an argument for forecasts of close to 0% (2030), 15-40% (2050) and on the order of 70% (2100). See also 336, “There have been previous bold claims on impending AGI (Kurzweil for example) that didn't pan out.”

- ^

Question 44: 341, “Forecasters also note the optimism that experts in a field typically have with regard to the rapidity of developments.” See also 337, “Past predictions about present technology have been in general excessively optimistic with regards to the pace of progress, though it should be stressed that this is not always the case. In particular, AI predictions seem to have fallen on the optimistic side. Good examples are previous predictions about AI [4] and past Kurzweil's predictions for the year 2019 [5].” The references cited are https://en.wikipedia.org/wiki/Progress_in_artificial_intelligence#Past_and_current_predictions and https://web.archive.org/web/20100421224141/http://en.wikipedia.org/wiki/The_Age_of_Spiritual_Machines#2019.

- ^

Question 44: 341, “The question being considered would be easier to accomplish than the Metaculus "AGI" question which is currently at a median of 2043, so we can expect that Metaculus would assign a sooner date for the question being considered. However, the team likely believes the Metaculus community is underestimating the difficulty involved in an AI generalizing to accomplish several tasks of which it has not yet accomplished nearly any of the tasks and still seems years or even a decade or two away from the more challenging of these.” See also 337, “It has been argued [6] that Metaculus has also an optimistic bias for their technological predictions.” The reference cited is https://forum.effectivealtruism.org/posts/vtiyjgKDA3bpK9E4i/an-examination-of-metaculus-resolved-ai-predictions-and#.

- ^

See this article for more details.

- ^

“The main forecasting target researchers were asked about in this survey was ‘high-level machine intelligence’, defined as the time ‘when unaided machines can accomplish every task better and more cheaply than human workers.’ This is a stronger condition than transformative AI, which can be achieved by machines which merely complement human workers”.

- ^

2060: Zhang et al., 2019: 50% ~HLMI. 2061: Grace et al., 2017: 50% ~HLMI. 2068: Gruetzemacher et al., 2018: 50% ~HLMI.