Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

Listen to the AI Safety Newsletter for free on Spotify.

A Provisional Agreement on the EU AI Act

On December 8th, the EU Parliament, Council, and Commission reached a provisional agreement on the EU AI Act. The agreement regulates the deployment of AI in high risk applications such as hiring and credit pricing, and it bans private companies from building and deploying AI for unacceptable applications such as social credit scoring and individualized predictive policing.

Despite lobbying by some AI startups against regulation of foundation models, the agreement contains risk assessment and mitigation requirements for all general purpose AI systems. Specific requirements apply to AI systems trained with >1025 FLOP such as Google’s Gemini and OpenAI’s GPT-4.

Minimum basic transparency requirements for all GPAI. The provisional agreement regulates foundation models — using the term “general purpose AI,” or GPAI — in two tiers. In the first tier, all foundation models are required to meet minimum basic transparency requirements. For example, GPAI developers may be required to accompany their models with technical documentation, summaries of training data, and copyright protection policies. GPAI deployers will also have to inform users when they are interacting with AI, and GPAI-generated content is required to be labeled and detectable.

Additional safety requirements for GPAI with systemic risks. The provisional agreement defines models that are trained with computing power greater than 1025 FLOPs as carrying systemic risks. This tier of GPAI — which currently only includes OpenAI’s GPT-4 and Google’s Gemini — is subject to additional safety requirements beyond minimum basic transparency. Developers of such a model are required to:

- Evaluate it for systemic risks

- Conduct and document adversarial testing

- Implement strong cybersecurity practices

- Document and report its energy consumption

The agreement similarly regulates non-GPAI in three tiers. Some AI uses — such as social scoring, individual predictive policing, and untargeted scraping of data for facial recognition databases — are considered unacceptable, and are banned outright.

Systems outside of this list, but which otherwise have the potential to cause harm or undermine rights, are considered “high risk” and are subject to the restrictions in the act. Examples of high risk systems include those that determine insurance pricing, generate credit scores, and evaluate educational outcomes. Finally, all other systems are considered “minimal risk,” and do not generate additional legal obligations beyond existing legislation.

The agreement exempts research, national security, and some law enforcement use. The EU AI act will apply to private and public actors who deploy AI systems within the EU or whose systems affect EU residents. However, the provisional agreement carves out a few exceptions for uses of AI in cases of national interest. For example, EU member states are keen on fostering AI innovation within their borders, and so the act will not apply to educational and research use. It also exempts military and national security use of AI, as well as use by law enforcement in cases of serious crime.

The act empowers the EU AI Board and establishes a new EU AI Office. The agreement distributes responsibility for enforcing the act to member states, which are to levy fines for infringements. In particular, fines can be set at up to 7% of annual revenue for “unacceptable” uses, and up to 3% for most other violations. Each national authority will represent its country in the EU AI Board, the role of which is to facilitate effective and standardized enforcement across member states.

The agreement also establishes an “EU AI Office” as the central authority on enforcing the acts rules on GPAI, which is empowered to supervise GPAI developers by requesting documentation, conducting model evaluations, and investigating alerts. It is also tasked with developing AI expertise and regulatory capability within the EU government.

The agreement has been a long time in the making, and it’s not over yet. The EU AI Act has been debated at length since it was first proposed in April of 2021. The current agreement is only provisional, and must be voted upon by the European Parliament and the European Commission. If it passes the vote, it wouldn’t take effect immediately. Instead, AI developers will have between several months and two years to comply with its requirements. Still, this is a substantial milestone that marks the end of intensive negotiations to reach a widely agreed upon bill.

Questions about Research Standards in AI Safety

Bipartisan members of the House of Representatives Committee on Space, Science, and Technology recently wrote a letter to the National Institute of Science and Technology (NIST), the government agency which recently established the US AI Safety Institute. They praised NIST as “a leader in developing a robust, scientifically grounded framework for the field of AI trust and safety research.” But they also raised a number of concerns about the field of AI safety:

Unfortunately, the current state of the AI safety research field creates challenges for NIST as it navigates its leadership role on the issue. Findings within the community are often self-referential and lack the quality that comes from revision in response to critiques by subject matter experts. There is also significant disagreement within the AI safety field of scope, taxonomies, and definitions.

Many of these critiques will be familiar to AI safety researchers. It’s true that safety researchers often disagree about what constitutes progress in the field. Lots of work appears in preprints and blog posts, without the scrutiny of peer review. Deference to perceived authorities has concentrated research efforts on a small number of problems and potential solutions at the expense of a broader sociotechnical safety effort. These are serious problems that damage the credibility of AI safety research in the eyes of the public, and reduce the quality of its research outputs.

The Center for AI Safety works to address many of these problems. By providing workshops, fellowships, and introductory resources, we hope to bring new voices into the conversation on AI safety. Further efforts will be needed to meet mainstream demands for scientific rigor, and help AI safety earn interest and support from researchers and policymakers around the world.

The New York Times sues OpenAI and Microsoft for Copyright Infringement

Data is a fundamental input to AI development. Billions of pages of text are used to train large language models. Moreover, data is a scarce resource. Some experts believe the supply of high-quality text could soon run out, making it more difficult for AI developers to continue scaling their models.

Against this backdrop, a new lawsuit threatens to further restrict the supply of AI training data. Filed by the New York Times against OpenAI and Microsoft, it notes that New York Times data was the largest proprietary data source in the most heavily weighted dataset used to train GPT-3. Despite OpenAI’s reliance on New York Times data, they have not been granted permission by the Times to use it, nor paid any compensation to the Times for training on their data. Therefore, the Times has filed a lawsuit against OpenAI and Microsoft for copyright infringement.

The outcome of the case remains to be seen. Some argue on principle that, just as humans learn from books and newspapers, AI systems should be allowed to learn from copyrighted data. Others point to the potential consequences of restricting access to copyrighted data, such as raising costs for open source AI developers. Whichever arguments persuade the court in this case, they will be made by some of the most central stakeholders in this debate, and therefore will have lasting influence on how AI developers can access copyrighted data.

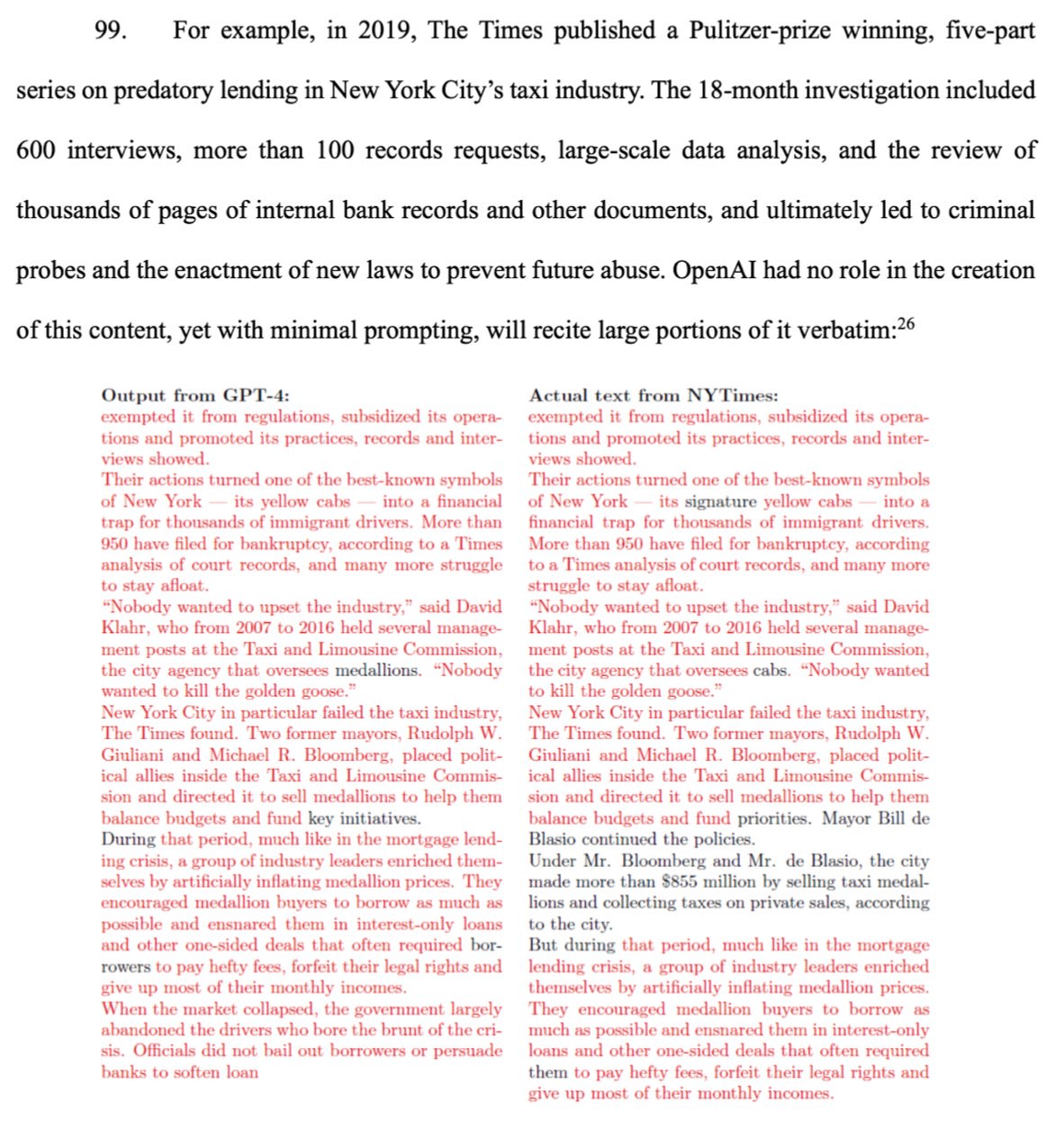

Copyrighted inputs and copyrighted outputs. Nobody disputes the basic fact that OpenAI trains on copyrighted data. But this lawsuit makes another critical allegation: that OpenAI and Microsoft’s models output exact copies of New York Times articles.

Previous research has demonstrated that large language models are capable of memorizing their training data. Image generation models display a similar ability to imitate and replicate parts of their training data. Typically, these models do not output exact copies of their training data, but the fact that it’s possible should not be surprising as they are deliberately trained to mimic their training data.

Solutions to some of these problems could be straightforward. For example, OpenAI could easily prevent their models from directly copying text from sources accessed via search. The lawsuit also requests more costly remedies, including that OpenAI should negotiate a price for access to the New York Times’s copyrighted data, or else destroy the models said to contain copies of the Times’s data.

Considering the consequences of the ruling. Courts typically care about the practical consequences of their decisions. One potential consequence, argued the lawsuit, is that by training on copyrighted data, OpenAI and Microsoft threaten the socially valuable industry of journalism.

Journalism is a public good, argues the lawsuit. We all benefit from journalism that uncovers corruption, proposes valuable new policies, and improves the quality of our society. It’s not cheap, though. The New York Times has 5,800 full-time equivalent staff, and in a typical year they report from 160 countries. But if people can ask Bing to search and summarize a newly published article for free, they might cancel their subscriptions to the Times, threatening the production of valuable journalism.

Not everyone is persuaded by these arguments. Open source AI developers might be better able to compete with private companies if nobody has to pay for access to copyrighted data. While a verdict in favor of the New York Times could restrict the supply of AI training data, a permissive legal regime would allow AI development to continue at its rapid pace, with potential benefits for AI consumers.

For more on the case, you can read the full lawsuit here.

Links

- OpenAI has released their new Preparedness Framework for managing AI risks.

- Andreessen Horowitz will donate to political candidates who oppose regulating technology.

- Popular AI image generation models have been trained on explicit sexual images of children.

- The Pentagon’s drone development program is not going so well.

- The Chinese company ByteDance trained its own AI models on data generated by OpenAI.

- A new Congressional bill would require AI companies to disclose the sources of their training data.

- The full paper describing Google DeepMind’s Gemini model is now public.

- Google DeepMind’s FunSearch makes progress on open problems in computer science and math.

- OpenAI’s Superalignment team is offering grants and a fellowship for PhD students.

- The Cooperative AI Foundation is offering grants to multidisciplinary researchers.

- NIST issues a new request for information on AI and AI safety.

- A new survey shows that AI researchers have shortened their expected timelines to various AI milestones, and remain widely concerned about extinction risks.

See also: CAIS website, CAIS twitter, A technical safety research newsletter, An Overview of Catastrophic AI Risks, our new textbook, and our feedback form

Listen to the AI Safety Newsletter for free on Spotify.

Subscribe here to receive future versions.