Aaron Gertler 🔸

Bio

I ran the Forum for three years. I'm no longer an active moderator, but I still provide advice to the team in some cases.

I'm a Communications Officer at Open Philanthropy. Before that, I worked at CEA, on the Forum and other projects. I also started Yale's student EA group, and I spend a few hours a month advising a small, un-Googleable private foundation that makes EA-adjacent donations.

Outside of EA, I play Magic: The Gathering on a semi-professional level and donate half my winnings (more than $50k in 2020) to charity.

Before my first job in EA, I was a tutor, a freelance writer, a tech support agent, and a music journalist. I blog, and keep a public list of my donations, at aarongertler.net.

Posts 250

Comments 1887

Topic contributions 273

This isn't about your giving per se, but have your views on the moral valence of financial trading changed in any notable ways since you spoke about this on the 80K podcast?

(I have no reason to think your views have changed, but was reading a socialist/anti-finance critique of EA yesterday and thought of your podcast.)

The episode page lacks a transcript, but does include this summary: "There are arguments both that quant trading is socially useful, and that it is socially harmful. Having investigated these, Alex thinks that it is highly likely to be beneficial for the world."

In that section (starts around 43:00), you talk about market-making, selling goods "across time" in the way other businesses sell them across space, and generally helping sellers "communicate" by adjusting prices in sensible ways. At the same time, you acknowledge that market-making might be less useful than in the past and that more finance people on the margin might not provide much extra social value (since markets are so fast/advanced/liquid at this point).

I used to work as a part-time advisor and ops person for a family foundation (no actual staff) that gave away ~$500k annually; they've worked with several other people since then.

Much of my advisory time was spent researching and evaluating fairly small grants (workable for someone in the $20k-100k range), since the foundation's "experimental/non-GiveWell" budget was a small fraction of the total. I think I could have done this work for a group of 10-20 clients of that size at a time if I'd been a full-time advisor.

Again, I’m aware that concrete, impactful projects and people still exist within EA. But in the public sphere accessible to me, their influence and visibility are increasingly diminishing, while indirect high-impact approaches via highly speculative expected value calculations become more prominent and dominant.

This is probably what many people have experienced over the last few years, especially as the rest of the world also started getting into AI.

But I think it's possible to counteract by curating one's own "public sphere" instead.

For example, you could follow all of your favorite charities and altruistic projects on Twitter. This might be a good starting point. For inspiration, you could also check the follow lists of places like Open Phil (my employer; we follow a ton of our grantees) or CEA's "official EA" account. Throw in Dylan Matthews and Kelsey Piper while you're at it; Future Perfect publishes content across many cause areas. And finally, at the risk of sounding biased, I'll note that Alexander Berger has one of the best EA-flavored research feeds I know of.

If you mostly follow concrete, visibly impactful projects, Twitter will start throwing more of those your way. I assume you'll start seeing development economists and YIMBYs working on local policy — at least, that's what happened to me. And maybe some of those people have blogs you want to follow, or respond when you comment on their stuff, and suddenly you find yourself floating peacefully among a bunch of adjacent-to-EA communities focused on things that excite you.

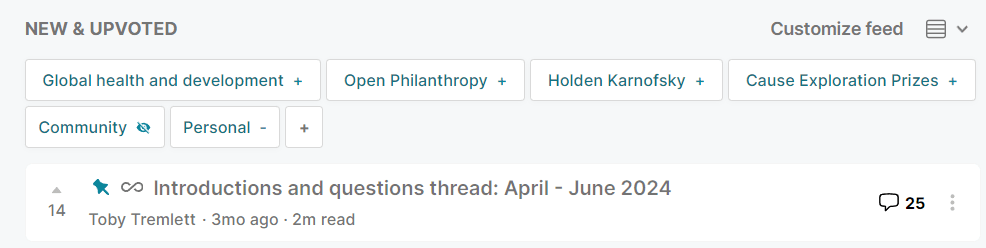

The Forum also lets you filter by topic pretty aggressively, hiding or highlighting whatever tags you want. You just have to click "Customize feed" at the top of the homepage...

...and follow these instructions. (You might be familiar with this, but many Forum users aren't, so I figured I'd mention it.)

Of course, it's not essential for anyone to follow a bunch of "EA content" — your plan of donating to and supporting projects you like is a good one. But if you previously enjoyed reading the Forum, and find it annoying as of late, it may be possible to restore (or improve upon!) your earlier experience and end up with a lot of stuff to read.

I'd have benefited from that kind of nudge myself! I was aware of 80K for years but never even considered coaching.

From a consequentialist perspective, I think you're better off sticking to digital — it takes a lot of time to sell things online, and you could be using that time for some combination of work and fun that would leave everyone better off (unless you place a very high value on physical manga).

Low-confidence idea: It might help to find some small ritual/mantra that you can use when you donate (or invest, etc.) the money you would have spent on physical manga — something along the lines of "I'm making the right decision" or "this is better for everyone".

Open Philanthropy has published a summary of the conflict of interest policy we use. (Adding it as another example despite the age of this thread, since I expect people may still reference the thread in the future to find examples of COI policies.)

I think of EA as a broad movement, similar to environmentalism. It's much smaller, of course, which leads to some natural centralization in terms of e.g. the number of big conferences, but it's still relatively spread-out and heterogeneous in terms of what people think about and work on.

Anything that spans GiveWell, MIRI, and Mercy for Animals already seems broad to me, and that's not accounting for hundreds of university/city meetups around the world (some of which have funding, some of which don't, and which I'm sure host people with a very wide range of views — if my time in such groups is any indication).

That's my way of saying that SMA seems at least EA-flavored, given the people behind it and many of the causes name-checked on the website. At a glance, it seems pretty low on the "measuring impact" scale, but you could say the same of many orgs that are EA-flavored. I'd be totally unsurprised to see people go through an SMA program and end up at EA Global, or to see an SMA alumnus create a charity that Open Phil eventually funds.

(There may be some other factor you're thinking of when you think of breadth — I could see arguments for both sides of the question!)

Thanks!

ETFs do sound like a big win. I suppose someone could look at them as "finance solving a problem that finance created" (if the "problem" is e.g. expensive mutual funds). But even the mutual funds may be better than the "state of nature" (people buying individual stocks based on personal preference?). And expensive funds being outpaced by cheaper, better products sounds like finance working the way any competitive market should.