MvK🔸

Posts 6

Comments 75

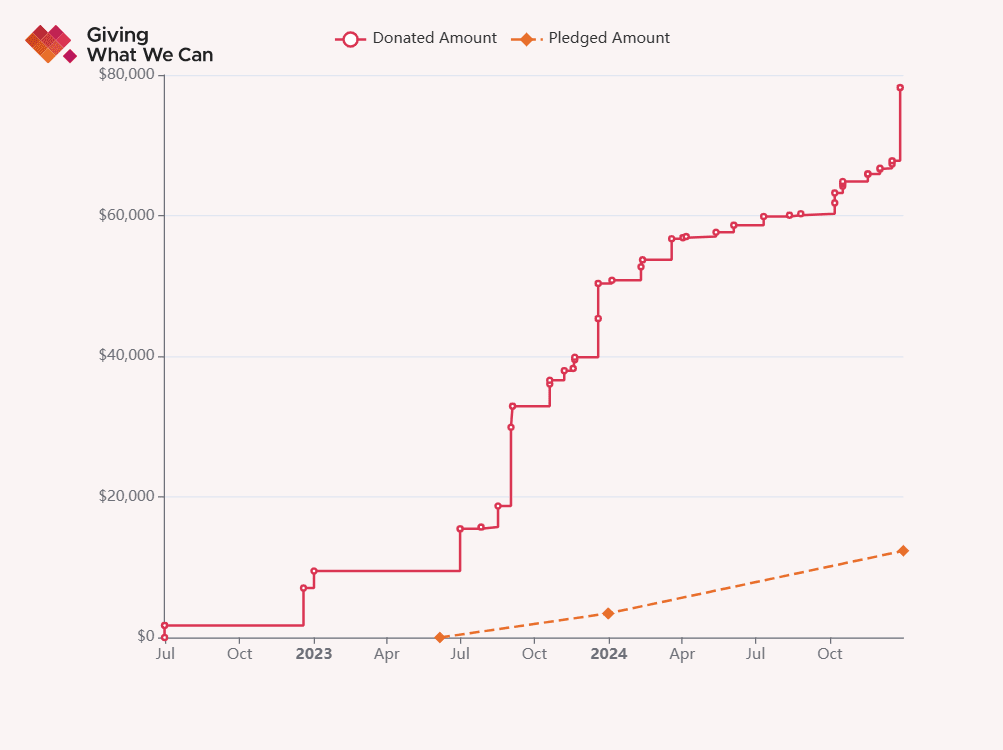

Giving What We Can tells me that I have donated $27,846 this year, which together with my donations from last year brings me to a total of ~78k donated, comfortably ahead of my pledge of 10% (which would amount to ~12k). I donated less than last year due to a move and a few personal reasons but aspire to bring my donations up again to something closer to 50% of my income.

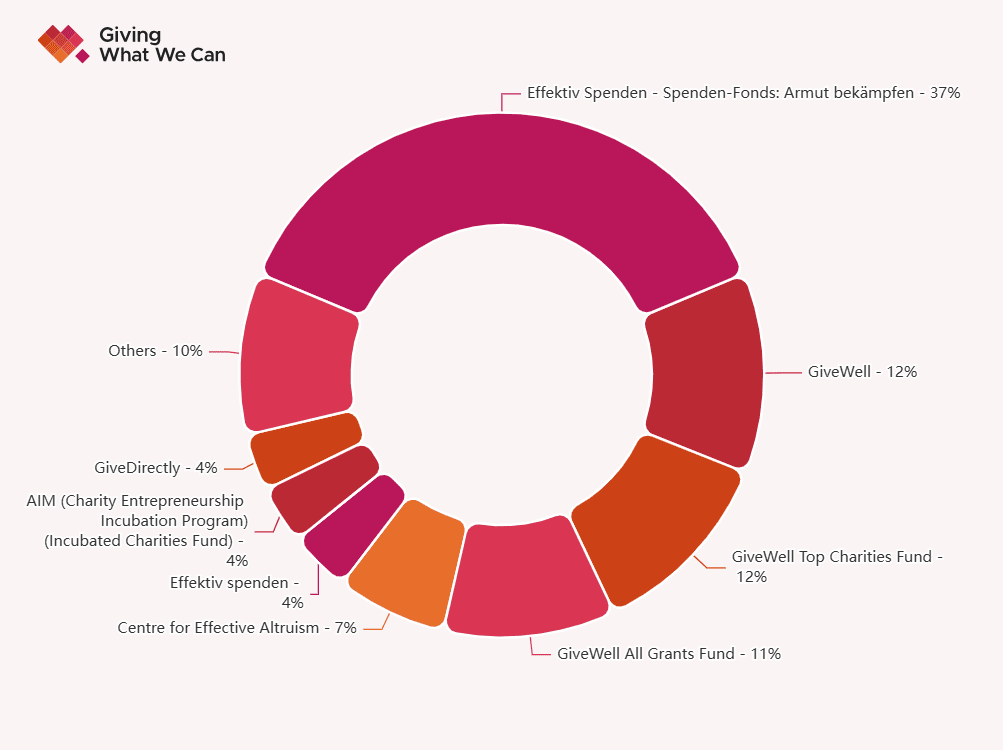

Here's where my donations went[1]:

Shoutout to the team at @Giving What We Can for providing these neat graphics, I've found this a) super motivating and b) very time-saving compared to how I tracked my donations last year!

[1] I work on AI Governance (and support a number of field- and community-building projects), so most of my donations go to GHD. I have since added a recurring donation to the Humane League and am considering adding more animal welfare charities to my donation portfolio. "Others" is mostly AW charities atm.

Is there a reason it's impossible to find out who is involved with this project? Maybe it's on purpose, but through the website I couldn't find out who's on the team, who supports it, or what kind of organisation (nonprofit? for-profit? etc.) you are legally. If this was a deliberate and strategic choice against being transparent because of the nature of the work you expect to be doing, I'd love to hear why you made it!

[My 2 cents: As an org that is focused on advocacy and campaigns, it might be especially important to be transparent to build trust. It's projects like yours where I find myself most interested in who is behind it, so that I can evaluate trustworthiness, conflicting incentives, etc. For all I know (from the website), you could be a competitor of the company you are targeting! I am not saying you need Fish-Welfare-Project-level transparency with open budgets etc., and maybe I am just an overly suspicious website visitor, but I felt it was worth flagging.]

This is a great idea. It's such a good idea that someone else (https://forum.effectivealtruism.org/users/aaronb50) has had it before and has already solved this problem for us:

https://podcasts.apple.com/us/podcast/eag-talks/id1689845820

Have you done some research on the expected demand (e.g. surveying the organisers of the mentioned programs, community builders, maybe Wytham Abbey event organisers)? I can imagine the location and how long it takes to get there (unless you are already based in London, though even then it's quite the trip) could be a deterrent, especially for events <3 days. (Another factor may be "fanciness" - I've worked with orgs and attended/organised events where fancy venues were eschewed, and others where they were deemed indispensable. If that building is anything like the EA Hotel - or the average Blackpool building - my expectation is it would rank low on this. Kinda depends on your target audience/user.)

"It's not common" wouldn't by itself suffice as a reason though - conducting CEAs "isn't common" in GHD, donating 10% "isn't common" in the general population, etc. (cf. Hume, is-and-ought something something).

Obviously, something may be "common" because it reliably protects you from legal exposure, is too much work for too little benefit, etc., but then I'm much more interested in those underlying reasons.

Hey Charlotte! Welcome to the EA Forum. :) Your skillset and interest in consulting work in GHD seem a near-perfect fit for working with one of the charities incubated by Ambitious Impact. As I understand it, they are often looking for people like you! Some even focus on the same problems you mention (STDs, nutritional deficiencies, etc.).

You can find them here: https://www.charityentrepreneurship.com/our-charities

I'm seeing lots of O's and no KR's. Is this intentional?

(My understanding is that one of the main benefits of having KPIs or OKRs is the accountability that comes from having specific and measurable (i.e. quantifiable) outcomes that you can track and evaluate, and I can't see any of those in the document.)