Vasco Grilo🔸

Bio

I am open to work.

How others can help me

You can give me feedback here (anonymous or not).

You are welcome to answer any of the following:

- Do you have any thoughts on the value (or lack thereof) of my posts?

- Do you have any ideas for posts you think I would like to write?

- Are there any opportunities you think would be a good fit for me which are either not listed on 80,000 Hours' job board, or are listed there, but you guess I might be underrating them?

How I can help others

Feel free to check my posts, and see if we can collaborate to contribute to a better world. I am open to part-time volunteering and paid work.

Thanks for the post, Max.

AGI might be controlled by lots of people.

Advanced AI is a general-purpose technology, so I expect it to be widely distributed across society. I would think about it like electricity or the internet. Relatedly, I expect most of AI's value will come from broad automation, not from research and development (R&D). I agree with the view Ege Erdil describes here.

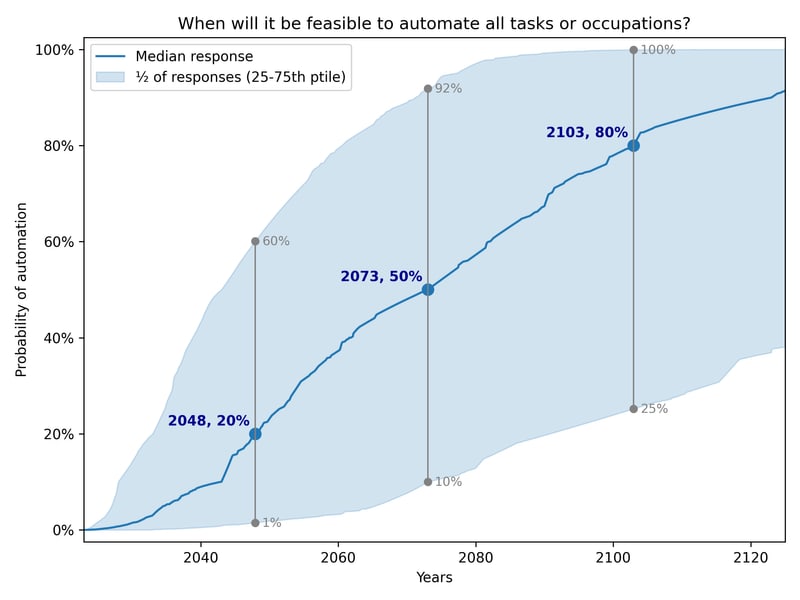

- A 2024 survey of AI researchers put a 50% chance of AGI by 2047, but this is 13 years earlier than predicted in the 2023 version of the survey.

2047 is the median for all tasks being automated, but the median for all occupations being automated was much further away. Both scenarios should be equivalent, so I think it makes sense to combine the predictions for both of them. This results in the median expert having a median date of full automation of 2073.

Regarding prioritization: You can find details on how we allocate funding across programmatic areas in our financial statements. Our funding distribution varies from year to year, and different sources of funding also influence how resources are allocated, not just cost-effectiveness.

I was looking for your thinking on prioritisation, not just the allocation of funds (this results from your thinking, but is not the prioritisation process itself).

As I mentioned earlier, the only portion of our unrestricted funding that supports our diet change work comes from donors who, while choosing to give unrestrictedly, have expressed that this program is their primary motivation for supporting us.

I am not sure I got it. If those donors give unrestrictedly, could you not use their donations to support your cage-free work, which you also think is more cost-effective than your meal replacement work?

Thanks for the follow-up, Carolina!

Regarding your question, we want to emphasize that we have reflected extensively on our prioritization of resources, including by considering your own analysis. This is an area of constant strategic consideration for us.

Is there any public write-up of your thinking on prioritisation you could point me to?

It is important to clarify that our meal replacement (diet-change) program is funded through restricted donations—meaning the funds allocated to this initiative come from donors who would not otherwise contribute to our cage-free or pig welfare campaigns.

Have you considered making the case to such donors that your cage-free work is way more cost-effective? Do you spend any unrestricted funds on the meal replacement program? If yes, the difference in their cost-effectiveness suggests it would be good for you to spend less. If not, I do not understand why Animal Charity Evaluators (ACE) assessed its cost-effectiveness, as donations would not fund it by default.

For instance, in 2023, we secured approximately USD 162K in restricted funding specifically for our meal replacement work, and at least USD 100K in donations were influenced by the program’s objectives and results. These amounts combined exceeded the program’s total expenditures for the year.

Where did the additional donations go to? If the meal replacement program caused additional donations equal to 61.7 % (= 100*10^3/(162*10^3)) of the spending on it, and they all went to the meal replacement program, it would only become 1.62 (= 1 + 0.617) times as cost-effective, or 0.173 % (= 1.62*1.07*10^-3) as cost-effective as your cage-free campaigns. On the other hand, if they all went to your cage-free campaigns, it would become 578 (= 1 + 0.617/(1.07*10^-3)) times as cost-effective, or 61.8 % (= 578*1.07*10^-3) as cost-effective as your cage-free campaigns. In this latter case, 99.8 % (= 1 - 1/578) of the impact of your meal replacement program would come from increasing the funds supporting your cage-free campaigns.
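The arithmetic above can be reproduced with a short Python sketch. The variable names are illustrative assumptions, not terms from the original exchange. Following the calculation above, the USD 162K of restricted funding stands in for the spending on the program, and 1.07*10^-3 is the cost-effectiveness of the meal replacement program relative to the cage-free campaigns.

```python
# Figures quoted above (USD, 2023). Variable names are illustrative assumptions.
restricted_funding = 162e3    # used above as the spending on the meal replacement program
influenced_donations = 100e3  # donations influenced by the program

# Cost-effectiveness of the meal replacement program relative to the
# cage-free campaigns, as used in the calculation above.
relative_ce = 1.07e-3

# Additional donations as a fraction of the spending on the program.
donation_ratio = influenced_donations / restricted_funding

# Scenario A: all additional donations go to the meal replacement program.
multiplier_a = 1 + donation_ratio
relative_ce_a = multiplier_a * relative_ce

# Scenario B: all additional donations go to the cage-free campaigns, so each
# additional dollar is worth 1/relative_ce dollars of meal replacement spending.
multiplier_b = 1 + donation_ratio / relative_ce
relative_ce_b = multiplier_b * relative_ce
share_via_cage_free = 1 - 1 / multiplier_b

print(f"Donation ratio: {donation_ratio:.1%}")
print(f"Scenario A: {multiplier_a:.2f} times, {relative_ce_a:.3%} of cage-free")
print(f"Scenario B: {multiplier_b:.0f} times, {relative_ce_b:.1%} of cage-free")
print(f"Share of impact via cage-free funding: {share_via_cage_free:.1%}")
```

Keeping the intermediate values unrounded gives the same rounded results as the figures quoted above.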

In short, when it comes to impact, we believe that cost effectiveness estimates per dollar do not tell the full story.

I think you mean that increases in welfare do not tell the full story. Even if you have other goals, such as ending factory-farming even if that is not ideal in terms of decreasing suffering, and increasing happiness, you could estimate the cost-effectiveness in terms of decreases in the number of animals, adjusted for their capacity for welfare, per $.

I suspect the crux of the disagreement might be a skepticism about the potential impact of working within the system

I believe there are positions within the system which are more impactful than a random one in ACE's recommended charities. However, I think those are quite senior, and therefore super hard to get, especially for people wanting to go against the system in the sense of prioritising animal welfare much more.

they are often more replaceable in these roles than they would be in an APA position and their impact is limited only to the difference between their skills and the next best candidate which for many roles is not that much.

I guess this also applies to junior positions within the system, whose freedom would be determined to a significant extent by people in senior positions.

Thanks for sharing your views, Lauren!

I disagree quite strongly with this!

I find it hard to be confident considering the lack of detailed quantitative analyses about the counterfactual impact of policy roles.

But I think as discussed during this week it is because you have the need for greater certainty over direct impact and policy in general is a much messier theory of change.

My guesses above refer to the expected counterfactual impact of the roles. They are supposed to be risk neutral with respect to maximising expected total hedonistic welfare, which I strongly endorse. I most likely act as if I prefer averting 1 h of disabling pain with certainty over decreasing by 10^-100 the chance of 10^100 h of disabling pain, but still recognise 1 h of disabling pain is averted in expectation in both scenarios, and therefore think both scenarios are equally good.
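As a quick check of the expected values in the example above, here is a minimal Python sketch. It uses exact fractions to avoid floating-point rounding at these magnitudes, and the variable names are illustrative assumptions.

```python
from fractions import Fraction

# Option 1: avert 1 h of disabling pain with certainty.
certain_hours_averted = Fraction(1)

# Option 2: decrease by 10^-100 the chance of 10^100 h of disabling pain.
probability_reduction = Fraction(1, 10**100)
hours_at_stake = 10**100
expected_hours_averted = probability_reduction * hours_at_stake

# A risk-neutral expected value maximiser is indifferent between the two options.
print(certain_hours_averted == expected_hours_averted)
```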

I also think this missed the point entirely of personal fit, which is a multiplier for every person's impact.

My guesses are about the impact of people in the roles, who have to be a good fit. Otherwise, they would not have been selected.

Therefore offering them opportunities for potential impact and career capital should be compared against no role in the movement at all, not another hypothetical role

I would also consider working outside animal welfare to earn more, and therefore donate more to the best animal welfare organisations. I think this may well be more impactful than working in impact-focussed animal welfare organisations.

Thanks for sharing, Ula and Neil! I guess (I have not seen the report) that is super valuable work.

Due to the sensitive nature of the strategic analysis, this report is available exclusively to:

- Established advocacy organizations with EU policy programs

- Grantmakers supporting farmed animal welfare initiatives in Europe

- Strategic partners with demonstrated commitment to advancing EU animal welfare reforms

I was a little surprised that I was denied access. I have done research on animal welfare, and participated in Impactful Policy Careers, so I was guessing I could fall under the 3rd point above.

Thanks for the remarks, Jan. I also participated in the program.

- I've heard of several people under 30 who have had a relatively large influence on AI and biorisk policy within the European Commission. Perhaps this is because these are “newer” policy areas within the EU, and the same opportunities don’t exist in animal welfare-related roles.

I agree.

- Also, I was curious: was there a particular reason you didn’t mention think tank or NGO work (outside influence) as much? Do you see that as less impactful, or were there other reasons for not focusing on it?

Here are some related guesses.

Thanks for sharing, Joris! I also really liked the program. I can hardly imagine something better for people interested in helping animals by working in the EU's institutions.

Based on what I learned in the program, and my background beliefs, I guess:

- For over 90 % of the positions in the Commission, donating 10 % of one's net income to the Shrimp Welfare Project would imply over 90 % of one's impact coming from those donations. Relatedly, I think donating more and better is the best strategy to maximise impact for the vast majority of people working in impact-focussed organisations.

- The direct (expected counterfactual) impact of working in a random role in Animal Charity Evaluators' (ACE's) recommended charities is larger than that of a random APA, and this is larger than that of a random role in the Commission.

Thanks for putting this together! Looks great.