(This is a cross-post from my blog Cosmic Censorship: https://hansgundlach.substack.com/p/why-have-two-minds-when-you-can-have)

There’s a lot of uncertainty about the potential moral status of future AI systems. This matters because we do not know to what extent our use of AI systems could harm these machines. In light of this uncertainty, I think there are cautionary measures we can take to reduce this unpredictability and prevent a potential moral catastrophe. A central goal of AI design should be creating systems that give accurate and representative self-reports. This means limiting overlapping AIs, mixtures of experts, ensemble minds, intermediate-scale systems, and AIs with complicated subsystem relationships. We should also give careful consideration to the scale, continuity, and running times of our creations. Finally, we should strive for a principle of minimality when creating AI beings: only create two minds if one will not suffice. The rest of this post outlines how we can design AI systems with these principles in mind.

Creating Accurate Self-Reports and Avoiding the Uncanny Valley of Consciousness

It would be good if we could create AIs that do not resemble entities whose moral status we are uncertain about, such as organoids, animals, or subsections of human minds, which cannot reliably report their experience. Therefore, it is advantageous to develop systems that can reliably self-reflect and produce reliable reports of their inner life. Given the option, we should create more introspectively capable and linguistically competent systems.

One way to increase the likelihood that AIs are introspectively capable is to create systems that are cohesively connected. Systems that are reliably internally connected run less risk of containing subagents or minds that are not introspectively accessible to the system as a whole (think ensembles or mixtures of experts). This means prioritizing systems that do not rely on nonlinguistic sub-AIs to do important tasks. These nonagential and nonlinguistic sub-AI systems will not be properly accounted for in the overall system’s self-reports of its experience. If we ask GPT how it's doing, will it be able to say how its image generation system is doing? It may also be possible to build AI systems made of very complicated subsystems. These systems may run into the Hedonic Alignment Question, where the welfare of the whole may not be reflected in its constituents. In this case, we should try to maximize the overlap between the subsystems and the whole (see Roelofs & Sebo 2024).

Interestingly, it has been speculated that this sort of problem also occurs in the human mind and may have significant moral implications. Consider split-brain patients, whose left and right hemispheres have been surgically separated by severing the corpus callosum. Remarkably, when researchers present information to each hemisphere in isolation, they get separate responses, so a self-report produced by one hemisphere may not capture what the other is experiencing.

Increase Intelligence/Capabilities of AIs Rather Than Create More AIs:

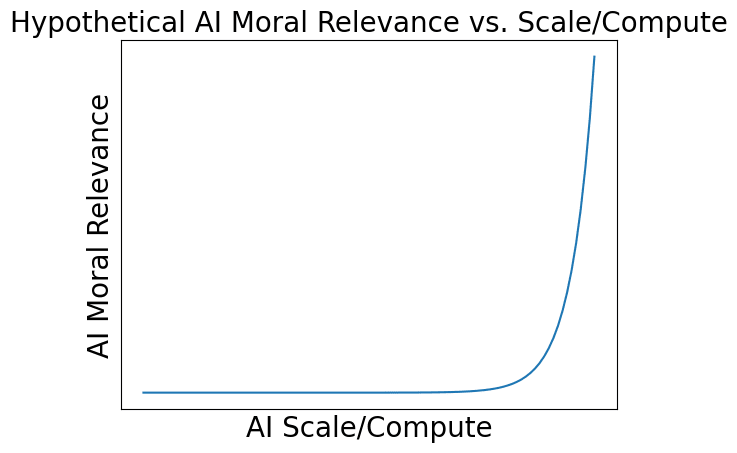

An interesting tradeoff we might face is between creating more capable AI systems and creating more AI systems. I think there are a few reasons to prioritize creating a more intelligent, capable system. First, more generally intelligent AIs are likely to give better self-reports. Second, more intelligent systems may be better placed to satisfy their preferences, and they may also have better ways of coping with negative emotions or pain. Third, the moral weight we give to more intelligent AIs might not be much greater than the weight we give to less intelligent AIs. Therefore, if a highly intelligent machine can do twice the work of one machine with the same effort or pain, we should prefer it over two systems. This holds if moral relevance grows with diminishing returns as AI scale increases, which I analyze below.
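To make the "one big system versus two small ones" comparison concrete, here is a minimal Python sketch. It assumes, purely for illustration, a hypothetical concave moral-weight curve `moral_weight(compute) = log(1 + compute)`, and that a system with twice the compute does the same work as two baseline systems; nothing in this post pins down the real shape of the curve. For any concave curve that starts at zero, one system at twice the compute carries less total weight than two systems at the original compute, while a linear curve would make the two options tie.

```python
import math


def moral_weight(compute: float) -> float:
    """Hypothetical concave moral-weight curve (an assumption, not a measured law)."""
    return math.log1p(compute)


def one_big_vs_two_small(compute: float) -> tuple[float, float]:
    """Compare one system at 2x compute with two systems at 1x compute each."""
    one_big = moral_weight(2 * compute)
    two_small = 2 * moral_weight(compute)
    return one_big, two_small


for c in (1.0, 10.0, 1000.0):
    big, small = one_big_vs_two_small(c)
    print(f"compute={c:>7}: one 2x system={big:.2f}, two 1x systems={small:.2f}")
```

Swapping in a different concave function (for example a square root) leaves the ordering unchanged; only the size of the gap moves.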

Further, it is more likely that a generally intelligent system (i.e., a system trained on general data to do general tasks with a lot of compute) will have concepts similar to ours, or at least more convergent concepts. This is based on research into the convergent representations learned by AI models. These concepts may include negative ideas like pain and loss. However, since we are more familiar with these concepts, we are in a better position to alleviate and possibly remove expressions of these traits. Having representations of these states does not mean a system really “feels” them. Still, it is much better to attribute suffering to a being without those innate feelings than to attribute unconsciousness to a being that suffers.

At the same time, there might be tradeoffs with more intelligent and more integrated minds. Besides the AI safety risks that come with creating highly intelligent systems, larger, more connected AIs might be less interpretable than their more modular counterparts. This could be less of an issue if we have robust interpretability tools.

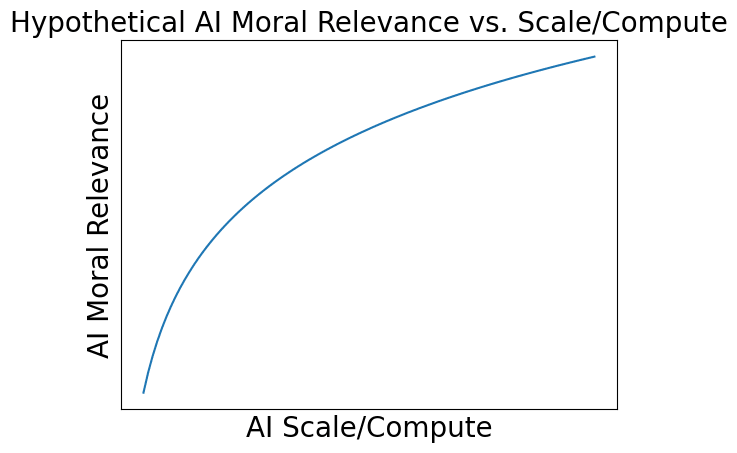

Reasons AI Moral Relevance Might Grow Slowly with AI Scale:

The first motivation for this belief comes from biological intuitions. Most people do not allocate more moral worth to more intelligent human individuals. Further, some animal welfare advocates assign moral worth that is highly nonlinear in brain size or intelligence. For instance, the philosopher Bob Fischer gives bees a welfare range estimate of 0.07 of the average human welfare range. Yet bees have roughly 100,000 times fewer neurons than humans (about one million versus about 86 billion)[1]. This does not mean bees are 100,000 times less intelligent. However, it is questionable whether bees are 7% as intelligent as humans. In this sense, moral worth on these estimates grows far more slowly than neuron count.
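One way to quantify "grows far more slowly" is to ask what power law would connect the bee and human figures. The Python sketch below assumes, hypothetically, that welfare range scales as (neuron count)^alpha and solves for alpha using approximate neuron counts (about 1e6 for a honeybee, about 8.6e10 for a human) and the 0.07 welfare range. The power-law form is an illustrative assumption, not something the welfare range estimates themselves claim.

```python
import math

# Approximate figures; the power-law model below is an illustrative assumption.
BEE_NEURONS = 1.0e6
HUMAN_NEURONS = 8.6e10
BEE_WELFARE_RANGE = 0.07  # relative to an average human


def implied_exponent(neuron_ratio: float, welfare_ratio: float) -> float:
    """Solve welfare_ratio = neuron_ratio ** alpha for alpha."""
    return math.log(welfare_ratio) / math.log(neuron_ratio)


alpha = implied_exponent(BEE_NEURONS / HUMAN_NEURONS, BEE_WELFARE_RANGE)
print(f"implied exponent alpha = {alpha:.2f}")
print(f"welfare multiplier per 10x neurons = {10 ** alpha:.2f}")
```

An exponent of roughly 0.23 means each tenfold increase in neurons raises the welfare range by only about 1.7x, whereas a linear relation (alpha = 1) would put bees at around 0.00001 of a human.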

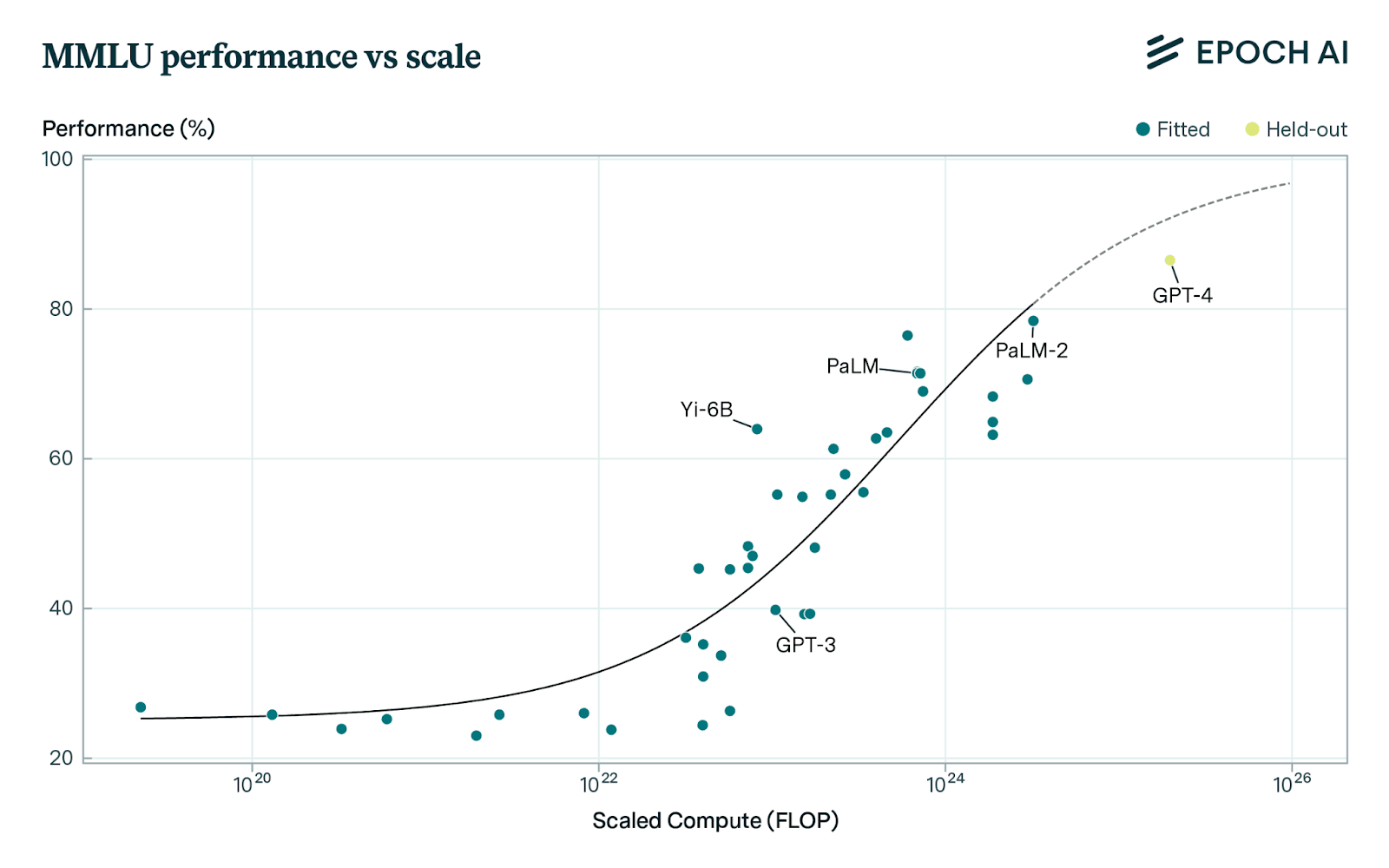

Maybe suffering is based on convergence to some internal representation of sadness. In this case, we might expect the moral worth of AIs to plateau as scaling brings them closer and closer to an accurate conception of sadness. This would also be consistent with treating AI moral worth as a capped capability, like basic language understanding or classification. For these types of tasks, model capabilities scale roughly logarithmically with compute and then level off as they approach the task's ceiling. Many AI tasks have diminishing or logarithmic returns to scale (even those without a fixed capability ceiling). Therefore, I find this relation much more likely than alternatives like a moral takeoff or a phase transition. In this case, we might want to design larger systems, depending on how the capability/moral relevance tradeoff scales. For example, could we design Einstein systems? Einstein was capable of vastly more research output than most people but had equal moral worth to other individuals.
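The "logarithmic until a ceiling" pattern can be written as a one-line model. In this Python sketch the score gains a fixed `b` points per tenfold increase in compute and is clipped at `cap`; the parameter values are arbitrary assumptions chosen for readability, not fitted to any benchmark or to moral worth.

```python
import math


def capped_log_score(compute: float, a: float = 0.0, b: float = 10.0, cap: float = 100.0) -> float:
    """Hypothetical score: +b points per 10x compute, never exceeding cap."""
    return min(cap, a + b * math.log10(compute))


for exponent in range(0, 13, 2):
    score = capped_log_score(10.0 ** exponent)
    print(f"compute=1e{exponent}: score={score:.1f}")
```

Under this shape, doubling compute adds only `b * log10(2)`, about 3 points, and once the cap is reached further scaling adds nothing, which is the plateau the argument relies on.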

Epoch AI Scaling Curves for MMLU benchmark on common language-based tasks.

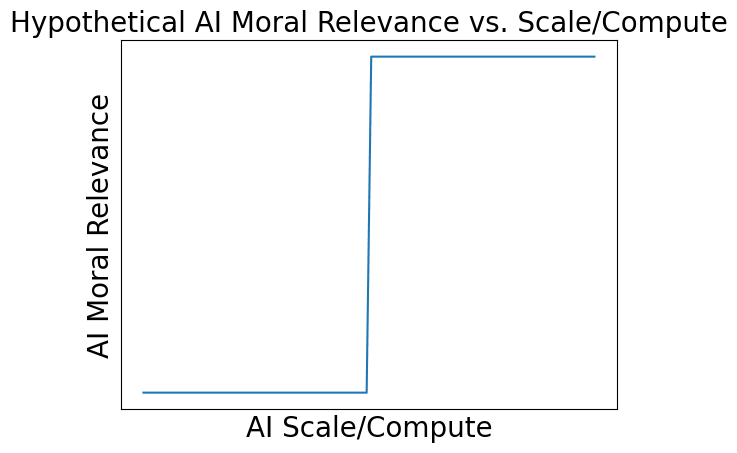

What If There is an AI Consciousness Phase Transition?

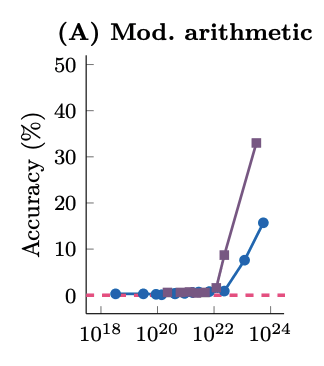

Modified arithmetic ability, from Emergent Abilities of Large Language Models

The relationship between AI intelligence and AI moral relevance could also look more like a phase transition, where systems suddenly become conscious. These systems would abruptly "learn" the ability to be conscious, while further scaling would not enhance this capability. This could happen if moral relevance is a capped ability (like, say, arithmetic) that also depends on many component capabilities arising in tandem (say, understanding of sadness and happiness, metacognition, etc.).
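A toy model shows how smoothly improving components can produce an abrupt-looking property. In this Python sketch each hypothetical component rises along a sigmoid in log-compute, and the capped property requires all `n` components at once, so their levels are multiplied. Identical components and the sigmoid shape are simplifying assumptions for illustration only.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def joint_property(log_compute: float, n_components: int, midpoint: float = 0.0) -> float:
    """Each of n components rises smoothly with scale; the property needs
    all of them at once, so we multiply their levels."""
    return sigmoid(log_compute - midpoint) ** n_components


def transition_width(n_components: int, lo: float = 0.1, hi: float = 0.9) -> float:
    """Width (in log-compute units) over which the joint property climbs from lo to hi."""
    xs = [i / 100 for i in range(-1000, 2001)]
    values = [joint_property(x, n_components) for x in xs]
    x_lo = next(x for x, v in zip(xs, values) if v >= lo)
    x_hi = next(x for x, v in zip(xs, values) if v >= hi)
    return x_hi - x_lo


for n in (1, 3, 10):
    print(
        f"{n} components: 10%-90% width = {transition_width(n):.2f}, "
        f"level where each component is half present = {joint_property(0.0, n):.4f}"
    )
```

With more required components, the joint property stays near zero even when every component is already half present, then rises over a narrower range of scale, which is the signature the phase-transition scenario describes.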

I believe that larger, more intelligent systems are still better in some cases, but my argument here is more nuanced. If we build less intelligent AIs, we may be able to develop models that have no conscious experience. Yet, given our uncertainty about the exact phase transition point, we may instead create a large number of morally ambiguous AI models. If we do develop such models, then it is better to err on the side of training larger systems past the transition point.

In reality, phase transitions in natural science involve a range of visible property changes. For example, boiling water involves water molecules separating from one another, but also the formation of visible bubbles. If we are confident that such a consciousness phase transition exists and we can easily detect it, then we can adjust the development of AI systems accordingly, either by building less intelligent AIs or by building systems that do not undergo such a transition.

What About a Moral Takeoff?

What if more intelligent AI systems are much more conscious (what Nick Bostrom refers to as super-beneficiaries)? These would be models with moral worth vastly exceeding that of humans. Is this a possibility? Theories of consciousness like integrated information theory (IIT), which give quantitative measures of consciousness, leave open the possibility of developing systems with superhuman levels of consciousness. In fact, under such theories, more connected systems might be much more conscious than modular ones (see Scott Aaronson’s take on IIT). In this case, we face pressure to prioritize developing minds with good self-reports while carefully considering whether to develop AIs beyond this point.

Can I Just Run One AI x100 as Fast?

Here we face another tradeoff. Is it better to run a single AI system 10 times or to run 10 systems once? The answer depends a lot on whether the AI has continuity of experience. AI models might undergo radically different levels of self-modification or transformation. If an AI is completely retrained in a short period, we might liken this experience to death. If the AI retains no memory of the past each time it is run, it makes no difference which option we choose. If the longer-running AI has continuity of experience, we could analogize this decision to choosing between creating one AI that will live for 100 years or creating 10 AIs that will each live and die within 10 years. In this case, I would choose to create the 100-year-old AI to stay consistent with the principle of minimality.

However, if the task is painful, we might argue that it could be much worse for the 100-year-old AI. Imagine that you have the option of telling 100 people to walk 1 mile to the grocery store or telling 1 person to walk 100 miles. In this case, I might argue that the second option is less ethical because we are comparing a minor inconvenience to many people with a large pain to one. This rests on what philosophers refer to as the non-convexity of pain (see Andreas Mogensen’s Welfare and Felt Duration). It is based on the intuition that the disvalue (utility loss) of pain grows faster than linearly as the pain stimulus increases. Small inconveniences for many do not outweigh the tragedy of a few.
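The grocery-store comparison reduces to simple arithmetic once we pick a disutility function. This Python sketch assumes, for illustration, that the disvalue of walking `x` miles is `x ** exponent`; an exponent above 1 encodes the intuition that disvalue grows faster than linearly, and the default of 2 is an arbitrary choice.

```python
def disutility(miles: float, exponent: float = 2.0) -> float:
    """Hypothetical disvalue of walking `miles`; exponent > 1 means each
    extra mile hurts more than the one before."""
    return miles ** exponent


def total_disutility(people: int, miles_each: float, exponent: float = 2.0) -> float:
    return people * disutility(miles_each, exponent)


many_short = total_disutility(people=100, miles_each=1, exponent=2.0)
one_long = total_disutility(people=1, miles_each=100, exponent=2.0)
print(many_short, one_long)  # prints: 100.0 10000.0
```

With `exponent=1.0` the two options tie at 100, so the conclusion that the single long walk is worse depends entirely on disvalue growing faster than linearly.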

Generally Intelligent Systems over Expert Systems?

Expert systems seem particularly concerning. Expert systems are AIs that have superhuman capabilities in a particular domain but less general capabilities. These systems may not be able to adequately express their beliefs and preferences, even while being able to solve difficult mathematical or visual tasks. Further, one generally intelligent system might have the capabilities of several expert systems. If each expert system is of similar size to the general system (i.e., has a similar number of parameters and training compute), then we have created several possibly morally relevant systems when we could have created just one.

In addition, expert systems are likely to have more unfamiliar concepts and forms of consciousness than more generally intelligent AIs. Researchers have found that systems that perform well on general tasks tend to have convergent representations of the world.

The Trouble With Ensemble and Mixture of Expert Systems:

Similar arguments hold for choosing between non-ensemble and ensemble systems. Ensemble systems are composed of many AIs whose experiences may not be represented in the self-reports of the system as a whole. On the other hand, ensemble systems have easily identifiable subcomponents, so identifying subagents or trapped morally relevant entities could be easier.

This line of reasoning also applies to mixture-of-experts systems, where the AI is composed of many experts, each of which is activated depending on the input the system receives. Some of the experts may specialize in a particular topic or type of input and be unable to report their experiences. It is unclear to what extent self-reports from these AIs will give accurate information about the mental state of each sub-expert. The increased number of experts also clashes with the principle of minimality.
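For readers less familiar with mixture-of-experts models, here is a minimal Python sketch of one common routing variant: a gate scores every expert for each token, only the top `k` experts are run, and their weights are renormalized with a softmax. The gate scores are random numbers standing in for a learned router, and the setup (8 experts, `k=2`, 1,000 tokens) is an arbitrary assumption. It illustrates that each expert sees only a fraction of the inputs, roughly `k / NUM_EXPERTS` of them, which is one way to see why a system-level self-report may say little about any single expert.

```python
import math
import random


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores: list[float], k: int = 2) -> dict[int, float]:
    """Pick the k highest-scoring experts and renormalize their gate weights.
    Experts outside the top k receive no input at all for this token."""
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])
    return dict(zip(top, weights))


# Hypothetical setup: random gate scores stand in for a learned router.
random.seed(0)
NUM_EXPERTS = 8
usage = [0] * NUM_EXPERTS
for _ in range(1000):
    scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    for expert in route_top_k(scores, k=2):
        usage[expert] += 1

print("tokens seen per expert:", usage)
```

In a trained model the router's scores depend on the token, so experts can end up seeing systematically different kinds of input rather than the uniform random slices shown here.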

It is also difficult to establish the moral relevance of these distributed AI entities. In complicated AI systems, some experiences may be shared across subsystems while others are private to a single subsystem. Should we count the number of AI subjects or the number of AI experiences (Roelofs & Sebo 2024)?

What If We Become Very Certain About AI Consciousness?

In some cases, it makes sense to disregard my suggestions above about limiting the creation of AI systems. If we have a lot of certainty about the states of the systems we are developing, then we will probably also be able to create happy AIs, or AIs with much higher positive welfare than humans. In that case, we might think it is morally wrong not to create as many such AI systems as possible (i.e., if we reject the Asymmetry in population ethics). If we don’t create such a system, then we are depriving the world of a happy life.

Conclusion

In general, we should consider building AIs that are less ambiguous and try to avoid the uncanny valley of consciousness. This means we should build systems that can give reliable self-reports, and strive for a principle of minimality while we remain uncertain about the moral status of our creations.

[1] Note: in this post I do not distinguish much between the number of neurons, compute level, and intelligence. This is based on a Chinchilla scaling paradigm, where neurons and compute are closely coupled while intelligence/capability is a power law of these quantities.
