(Cross-posted from my website. Audio version here, or search "Joe Carlsmith Audio" on your podcast app.)

Summary

Non-naturalist normative realism is the view that there are mind-independent normative facts that aren’t reducible to facts about the natural world (more here). I don’t find this view very plausible, for various reasons.[1] Interestingly, though, some fans of the view don’t find it very plausible either. But they think that in expectation, all (or almost all) of the “mattering” happens in worlds where non-naturalist normative realism is true. So for practical purposes, they argue, we should basically condition on it, even if our overall probability on it is low. Call this view “the realist’s wager.”

I disagree with this view. This essay describes a key reason why: namely, the realist’s wager says horrible things about cases where you get modest benefits if non-naturalist normative realism is true, but cause terrible harm if it’s false.[2] I describe this sort of case in the first section. The rest of the essay teases out various subtleties and complications. In particular, I discuss:

- The difference between “extreme wagers,” which treat nihilism as the only viable alternative to non-naturalist normative realism, and more moderate ones.

- Whether it makes sense to think that things matter more conditional on non-naturalist normative realism than they do conditional on some non-nihilist alternative, because the former has more “normative oomph.”

- Whether it makes sense to at least condition on the falsehood of nihilism. (I’m skeptical here, too.)

My aim overall is to encourage advocates of the realist’s wager (and related wagers) to think quantitatively about the meta-ethical bets they’d actually accept, rather than focusing on more sweeping assumptions like “if blah is false, then nothing matters.”

Thanks to Ketan Ramakrishnan, Katja Grace, Jacob Trefethen, Leopold Aschenbrenner, Will MacAskill and Ben Chang for discussion.

I. Martha the meta-ethical angel

The type of case I have in mind works like this:

Martha’s deal: Martha the meta-ethical angel appears before you. She knows the truth about meta-ethics, and she offers you a deal. If non-naturalist normative realism is true, she’ll give you a hundred dollars. If it’s false, she’ll burn you, your family, and a hundred innocent children alive.

Claim: don’t take the deal. This is a bad deal. Or at least, I personally am a “hell no” on this deal, especially if my probability on non-naturalist normative realism is low – say, one percent.[3] Taking the deal, in that case, amounts to burning more than a hundred people alive (including yourself and your family) with 99% probability, for a dollar in expectation. No good.

But realist wagerers take deals like this. That is, they say “worlds where the burning happens are worlds that basically don’t matter, at least relative to worlds where you get the hundred dollars. So even if your probability of getting the hundred dollars is low, the deal is positive in expectation.”[4]

Or at least, that’s the sort of thing their position implies (I’m hoping that they’ll think more about this sort of case, and reconsider). Let’s look at some of the issues here in more detail.

II. Extreme wagers

I’m assuming, here, an expected-utility-ish approach to meta-ethical uncertainty.[5] That is, I’m assuming that we have a probability distribution over meta-ethical views, that these views imply that different actions have different amounts of choiceworthiness, and that we pick the action with the maximum expected choiceworthiness.[6]

This isn’t the only approach out there, and it has various problems (notably, with comparing the amounts of choiceworthiness at stake on different theories – this will be relevant later). But my paradigm realist wagerer is using something like this approach, it’s got some strong arguments in its favor,[7] and it’s helpfully easy to work with. Also, I expect other approaches to imply similar issues.

I’m also focusing, in particular, on non-naturalist normative realism, as opposed to other variants – for example, variants that posit mind-independent normative facts that are reducible to facts about the natural world. I’m doing this partly to simplify the discussion, partly because my paradigm realist wagerer is focused on non-naturalist forms of realism as well, and partly because in my opinion, the question of “are there normative facts that can’t be reduced to facts about the natural world” is the most important one in this vicinity (once you’ve settled on naturalism, it’s not clear – at least to me – how substantive or interesting additional debate in meta-ethics becomes).[8]

With this in mind, let’s suppose that we are splitting our credence between the following three views only:

- Non-naturalist realism: There are mind-independent normative facts that can’t be reduced to facts about the natural world.

- Naturalism-but-things-matter: There are normative facts of some sort (maybe mind-independent, maybe mind-dependent), but they can be reduced to facts about the natural world.

- Nihilism: There are no normative facts.[9]

The simplest and most extreme form of the realist wager runs as follows:

Naturalism-but-things-matter is false: it’s either non-naturalist realism, or nihilism. Probably, it’s nihilism (the objections to non-naturalist realism are indeed serious). But if it’s nihilism, nothing matters. Thus, all the mattering happens conditional on non-naturalist realism. Thus, for practical purposes, I will condition on non-naturalist realism, even though probably, nothing matters.

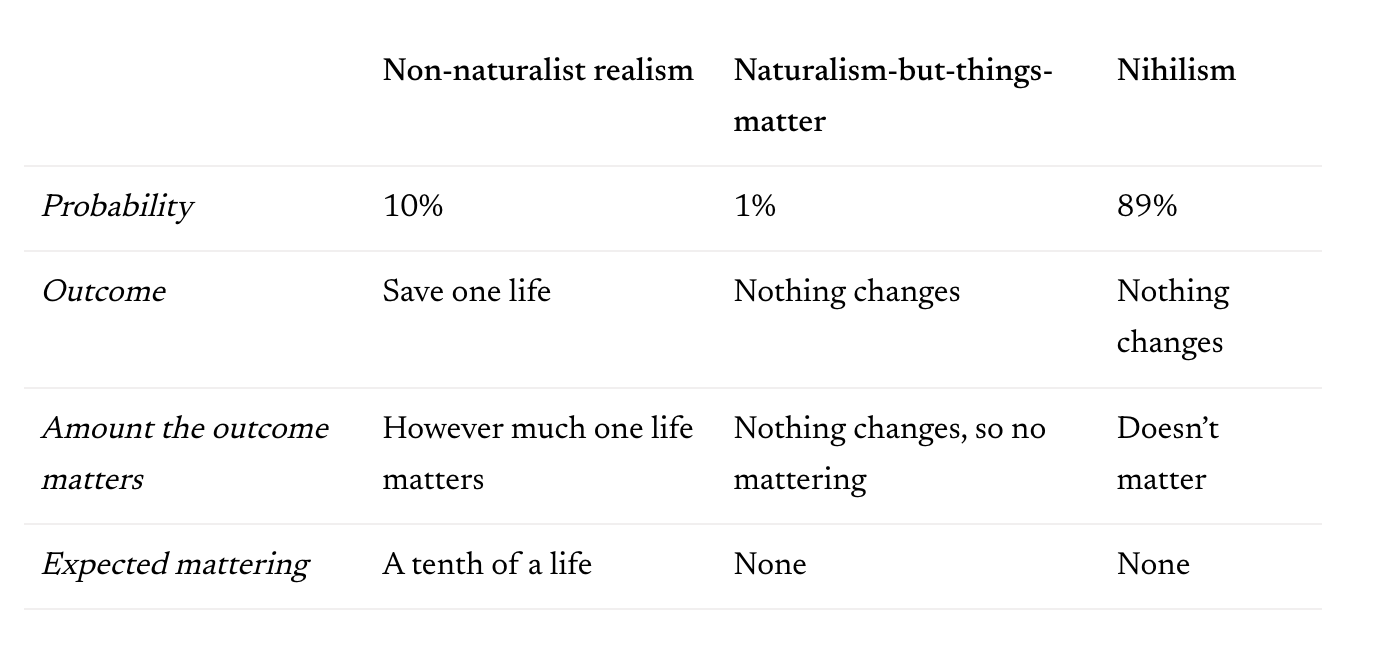

Thus, for concreteness, let’s say this wagerer is 99% on nihilism, 1% on realism, and 0% on naturalism-but-things-matter.[10] So the deal above looks like:

So, what’s the expected mattering of the deal overall? A dollar’s worth. Not amazing, but not nothing: go for it.
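The wagerer’s arithmetic here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `expected_mattering`, the use of dollar-equivalents as units, and the placeholder figure for the burning are all hypothetical choices, not anything the wagerer is committed to. The point is that with 0% on naturalism-but-things-matter and zero mattering under nihilism, the size of the harm drops out entirely.

```python
from fractions import Fraction

def expected_mattering(credences, mattering_weight, payoff):
    """Sum credence * weight * payoff over meta-ethical views.

    mattering_weight says how much outcomes count under each view
    (0 under nihilism, by the wagerer's assumption).
    """
    return sum(credences[v] * mattering_weight[v] * payoff[v] for v in credences)

# The extreme wagerer's credences from the text.
credences = {
    "realism": Fraction(1, 100),
    "naturalism_but_things_matter": Fraction(0),
    "nihilism": Fraction(99, 100),
}
# Assumption: outcomes count fully unless nihilism is true.
mattering_weight = {"realism": 1, "naturalism_but_things_matter": 1, "nihilism": 0}

# Hypothetical placeholder for the burning, in dollar-equivalents.
BURNING = -10**9
payoff = {"realism": 100, "naturalism_but_things_matter": BURNING, "nihilism": BURNING}

deal_value = expected_mattering(credences, mattering_weight, payoff)
print(deal_value)  # 1
```

Making `BURNING` a thousand times worse leaves `deal_value` unchanged, which is one way of seeing what is troubling about the extreme wager.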

Now maybe you’re thinking: wait, really, zero percent on anything mattering conditional on the natural world being all there is? That sounds overconfident. And indeed: yes. Even beyond “can Bayesians ever be certain about anything,”[11] this sounds like the wrong place to spend your certainty points. Meta-ethics is tricky, people.[12] Are you so sure you even know what this debate is about – what it means for something to matter, or to be “natural,” or to be “mind-dependent”[13] – let alone what the answer is? So sure that if it’s only raw nature – only joy, love, friendship, pain, grief, and so on, with no extra non-natural frosting on top[14] – then there’s nothing to fight for, or against? I, for one, am not. And perhaps, if you find yourself hesitating to take a deal like this, you aren’t, actually, either.

So “you should have higher credence on naturalism-but-things-matter” is the immediate objection here. Indeed, I think this objection cautions wariness about the un-Bayesian-ness of much philosophical discourse. Some meta-ethicist might well declare confidently “if naturalism is true, then nothing matters!”[15] But they are rarely thinking in terms of quantitative credences on “ok but actually maybe if naturalism is true some things matter after all,” or about the odds at which they’re willing to bet. I’m hoping that reflection on deals like Martha’s can prompt, at least, a bit more precision.

Some may also notice a more conceptually tricky objection: namely, that this deal seems bad even if you have this pattern of credences – i.e., even if non-naturalist realism and nihilism are genuinely the only live options. That is, you may notice that you don’t want to be burned alive, even if nihilism is true, and it doesn’t matter that you’re being burned alive.[16] And the same for your mother, the children, and so on.[17] That is, you may feel like, somehow, the whole “expected mattering” ontology here is leaving your interest in not being burned alive too much up-for-grabs.

I think this is a good objection, too. In fact, I think it may get closer to the heart of the issue than “you should have higher credence on naturalism-but-things-matter” (though the two objections are closely linked). However, it’s also a more complicated objection, so for now I want to set it aside (I discuss it more below), and assume that we accept that expected mattering is what we’re after, and that nihilism gives us none.

III. Ways to wager

The credences I gave above (in particular, the 0% on naturalism-but-things-matter) were extreme. What happens if we moderate them?

Suppose, for example, that your credences are: 10% on non-naturalist realism, 1% on naturalism-but-things-matter, and 89% on nihilism. That is, you give some credence to things mattering in a purely natural world, but you think this is 10x less likely than the existence of non-natural normative facts – and that both of these are much less likely than nothing mattering at all.

Now we start getting into some trickier issues. In particular, now we need to start distinguishing between a number of factors that enter into your expected mattering calculations, conditional on anything mattering (e.g., on non-nihilism): namely,

(a) How likely you think the various non-nihilist meta-ethical theories are.

For example, the credences just discussed.

(b) Whether your non-normative beliefs alter in important ways, conditional on different non-nihilist meta-ethical theories.

For example, you might think (indeed, I’ve argued, you should think) that epistemically non-hopeless forms of non-natural normative realism make empirical predictions (uh oh: careful, philosophers...) about the degree of normative consensus to expect amongst intelligent aliens, AI systems, and so on with sufficient opportunity to reflect – predictions that other views (importantly) don’t share.[18] Or: if non-naturalist realism is true, maybe you should expect your realist friends to be right about other stuff, too (and vice versa).[19]

(c) Whether different things matter, conditional on different non-nihilist meta-ethical theories, because your object-level normative views are correlated with your meta-ethical views.

For example, conditional on non-naturalist realism you might be a total utilitarian (so you love creating new happy lives), but conditional on naturalism-but-things-matter you’re a person-affector (so you shrug at new happy lives), such that even if you had equal credence in these two meta-ethical theories, you’d pay more to create a new happy life conditional on non-naturalist realism than you would conditional on naturalism-but-things-matter.[20]

(d) Whether the same thing matters different amounts, conditional on different non-nihilist meta-ethical theories, because some meta-ethical theories are just intrinsically “higher stakes.”

For example, you might be certain of total utilitarianism conditional on both non-naturalist realism and naturalism-but-things-matter, and you might have equal credence on both these meta-ethical views, but you’d still pay more to create a happy life conditional on non-naturalist realism than on naturalism-but-things-matter, because on non-naturalist realism, the mattering somehow has more oomph – i.e., it’s some objective feature of the fabric of reality, rather than something grounded in e.g. facts about what we care about (realist: “bleh, who cares what we care about”), and objective mattering is extra special.

To isolate some of these factors, let’s focus for a moment on (a) alone. That is, let’s assume that you have the same normative views (for simplicity, let’s say you’re a total utilitarian), with the same level of oomph, regardless of which non-nihilist meta-ethics is true; and that your non-normative views are independent of meta-ethics as well.

Granting for the moment that we’re after expected mattering, then, and that nihilism gives us none, we can consider cases like the following:

Martha’s buttons: Martha the meta-ethical angel offers you one of two buttons. The red button saves one life if non-naturalist realism is true, and does nothing otherwise. The blue button saves N lives if non-naturalist realism is false, and does nothing otherwise.

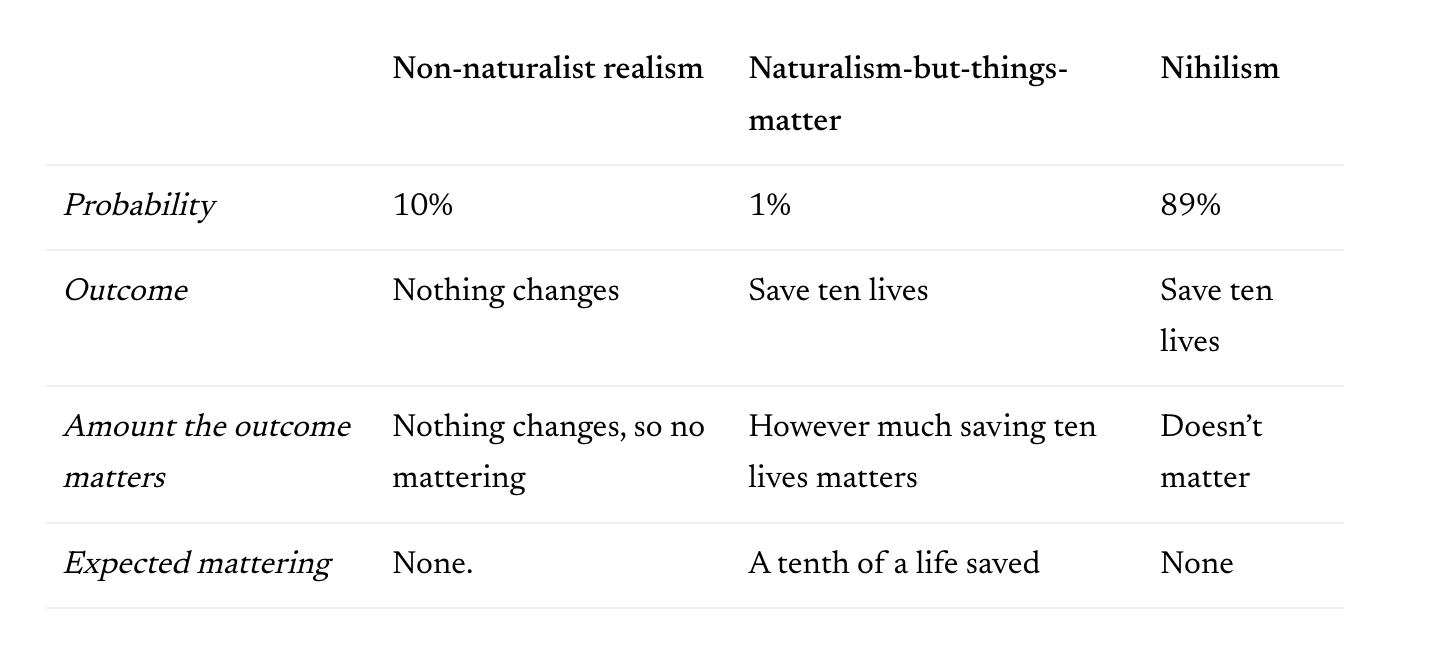

Here, the point at which your expected mattering calc becomes indifferent to the buttons is the point where naturalism-but-things-matter is N times less likely than non-naturalist realism. Thus, using the credences above, and setting N to 10, we get:

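Blue button: 1% (naturalism-but-things-matter) × 10 lives = 0.1 lives-that-matter in expectation

Red button: 10% (non-naturalist realism) × 1 life = 0.1 lives-that-matter in expectation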

I.e., both of these buttons save a tenth of a life-that-matters in expectation.
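For readers who want to plug in their own credences, the buttons case can be sketched as follows. The function names `button_values` and `indifference_n` are hypothetical, and the sketch assumes factor (a) in isolation: nihilism contributes no mattering, and a life matters the same amount under both non-nihilist views.

```python
from fractions import Fraction

def button_values(p_realism, p_naturalism, n_lives):
    """Expected lives-that-matter saved by each of Martha's buttons.

    Assumes nihilism contributes nothing and that a life matters the same
    amount under both non-nihilist views.
    """
    red = p_realism * 1            # one life, only if realism is true
    blue = p_naturalism * n_lives  # N lives; the nihilist share counts for zero
    return red, blue

def indifference_n(p_realism, p_naturalism):
    """N at which the two buttons have equal expected mattering."""
    return p_realism / p_naturalism

p_realism, p_naturalism = Fraction(10, 100), Fraction(1, 100)
red, blue = button_values(p_realism, p_naturalism, n_lives=10)
print(red, blue)                                # 1/10 1/10
print(indifference_n(p_realism, p_naturalism))  # 10
```

On these assumptions the indifference point depends only on the ratio of the two non-nihilist credences. The credence on nihilism never enters, because the blue button’s payout in nihilist worlds is counted as worth nothing.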

(Now maybe you’re thinking: wait a second, granted some pattern of credences about the metaphysics of normativity and the semantics of normative discourse, you want me to take a 10% chance of saving one life over a 90% chance of saving 9 lives? Red flag. And again: I’m sympathetic – more below. But I think this red flag is about the idea that expected mattering is what we’re after and that nihilism gives us none, which we’re assuming for now. Or perhaps, it’s about having this sort of credence on nihilism – a closely related worry, but one that rejects the set-up of the case.)

Now consider your own credences on these different meta-ethical theories. What sort of N do they imply? That is, if Martha showed up and offered you these buttons, for what N would your stated credences imply that you should be indifferent? And (more importantly), for what N would you actually be indifferent? If the two differ substantially, I suggest not taking for granted that you’ve worked everything out, realism-wager-wise.

My own N, here, is quite a bit less than 1 – e.g., I choose the blue button even if it only causes Martha, conditional on the falsehood of non-naturalist realism, to flip a coin about whether to save a life or not (thereby saving .5 lives in expectation). This is for various reasons, but a simple one is that I think that non-naturalist realism (to the extent it’s a candidate for truth vs. falsehood) is more likely than not to be false – and even if it’s false, I care about the people whose lives I could save.

The broader point, though, is that keeping in mind what sort of N your stated views imply is a good consistency check on whether you actually believe them.

IV. Should realist mattering get extra oomph?

That said, understanding the relationship between your N and your credences gets more complicated once we start to bring in the other factors – (b), (c), and (d) – discussed above. In particular, let’s focus for a second on (d) – the extra normative “oomph” you might give to a certain meta-ethical theory, independent of your credence on it.

Thus, suppose you think that conditional on non-naturalist realism, everything matters ten times more than it does conditional on naturalism-but-things-matter, because non-naturalist mattering is extra special.[21] Granted 10% on non-naturalist realism, 1% on naturalism-but-things-matter, and 89% on nihilism, this bumps your N up to 100 rather than 10, while holding your credences fixed. Thus, let’s call one-life-saved conditional on non-naturalist realism one “oomph-adjusted life-that-matters.” The red button, then, is worth a tenth of an oomph-adjusted life-that-matters. But with N = 10, the blue button is only worth a hundredth of an oomph-adjusted life-that-matters: e.g., it has a 1% chance of saving ten lives-that-matter-at-all (so a tenth of a life-that-matters-at-all in expectation), but each of these lives matters 10x less than it would conditional on non-naturalist realism, so adjusting for oomph, you’re down to a hundredth of an oomph-adjusted life-that-matters overall. To compensate for this reduction, then, you have to bump N up to 100 to be indifferent to the buttons.
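The oomph adjustment amounts to one extra multiplier in the same calculation. A minimal sketch, assuming a single scalar `oomph_ratio` (a hypothetical parameter name) captures how many times more a life matters under non-naturalist realism than under naturalism-but-things-matter:

```python
from fractions import Fraction

def oomph_adjusted_n(p_realism, p_naturalism, oomph_ratio):
    """N at which Martha's buttons tie once realist mattering gets extra weight.

    oomph_ratio: how many times more a life matters under non-naturalist
    realism than under naturalism-but-things-matter (hypothetical parameter).
    """
    return p_realism * oomph_ratio / p_naturalism

p_realism, p_naturalism = Fraction(10, 100), Fraction(1, 100)

# Blue button at N = 10, measured in oomph-adjusted lives-that-matter:
# 1% chance of ten lives, each counting a tenth as much.
blue_at_10 = p_naturalism * 10 / 10
print(blue_at_10)  # 1/100
print(oomph_adjusted_n(p_realism, p_naturalism, oomph_ratio=10))  # 100
```

Setting `oomph_ratio=1` recovers the unadjusted N of 10, so the oomph adjustment and the credence ratio simply multiply.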

(Skeptic: “So, with this sort of oomph adjustment, you take a 10% chance of saving one life over a 90% chance of saving 99 lives?”

Wagerer: “Yep.”

Skeptic: “And you want to call this altruism?”)[22]

Should we make an oomph adjustment? One reason not to is that – unlike in many normative-uncertainty cases, where we need to find some way to compare units of mattering across very different first order, normative-ethical theories (e.g., average and total utilitarianism) – in this case, we’re conditioning on total utilitarianism either way, so there is a natural and seemingly-privileged way of normalizing the units of mattering at stake conditional on non-naturalist realism vs. naturalism-but-things-matter: namely, say that the same stuff matters the same amount. I.e., say that one life matters the same amount conditional on either of these meta-ethical views – and the same for two lives, or ten lives, and so on. When you’re grappling with different first-order ethical views, you don’t have the luxury of this option.[23] But when you have this luxury, it’s tempting to take advantage of it.

What’s more, a number of the standard ways of normalizing across first-order normative-ethical theories won’t allow you to give totalism-conditional-on-non-naturalist-realism more oomph than totalism-conditional-on-naturalism-but-things-matter. That’s because these ways appeal only to the structure of a theory’s ranking over options, rather than to any notion of the absolute amount of value that it posits.[24] That is, on many ways of approaching normative uncertainty, it doesn’t make sense to differentiate between “small-deal total utilitarianism” and “big-deal total utilitarianism” – where both imply the same behavior,[25] but one of them implies that all the stakes are blah times higher.[26] But this is what normative oomph adjustments are all about.

Once we start going in for oomph-adjustments, we might also wonder whether non-naturalist realism – at least of the standard secular sort – is really going to be the most oomph-y meta-ethics out there, even weighted by probability. Thus, consider divine command theory, on which theism is true, and the normative facts hold in virtue of God commanding you to do certain things. Do the divine commands of the ultimate source of all reality – “God Himself, alive, pulling at the other end of the cord, perhaps approaching at an infinite speed, the hunter, king, husband”[27] – significantly out-oomph those thin, desiccated, “existing but not in an ontological sense” normative facts the Parfitians hope to spread like invisible frosting over science? And if so, should you, perhaps, be wagering on theism instead,[28] rather than Oxford’s favorite secular meta-ethics? Is God where roughly all the expected mattering really happens?

(But wait: have we looked hard enough for ways we can Pascal’s-mug ourselves, here? Consider the divine commands of the especially-big-deal-meta-ethics spaghetti monster – that unholy titan at the foundation of all being, who claims that his commands have a Graham’s number times more normative oomph than would the commands of any more standard-issue God/frosting, if that God/frosting existed. Shall we ignore all the conventional meta-ethical views, in favor of views like this, that claim for themselves some fanatical amount of oomph? What about the infinitely oomph-y meta-ethical views? Non-zero credence on those, surely...)[29]

I’m not, here, going to delve deeply into how to think about oomph adjustments. And despite their issues, I do actually feel some resonance with some adjustments in this vein.[30] As with credences, though, I at least want to urge attention to the implications of one’s stated views about this topic with respect to cases like Martha’s buttons. It can feel easy, in the abstract, to say “everything matters much less if non-naturalist realism is false”; harder, perhaps, to say “I choose a 10% probability of saving one life over a 90% probability of saving 99 lives, because of how much less those lives matter if non-naturalist realism is false.” But it is in the latter sort of choice that the rubber meets the road.

V. Speaking the nihilist’s language

We can imagine yet further complications by introducing (b) and (c) into the picture as well (that is, correlations between meta-ethical views, normative-ethical views, and non-normative views), but for now I want to set those aside.[31] Instead, I want to return to the assumption we granted, temporarily, above: namely, that expected mattering is what we’re after, and that nihilism gives us none.

Is this right? Consider the following variant of Martha’s deal:

Martha’s nihilism-focused deal: Martha the meta-ethical angel offers you the following deal. If nihilism is false, she’ll give you a hundred dollars. If nihilism is true, she’ll burn you, your family, and a hundred innocent children alive.

Let’s say that you have at least some substantive credence on nihilism, here – say, 10% (the realist wagerer’s credence is generally much higher). Should you take the deal?

Here, we no longer have to deal with questions about how much expected mattering is at stake conditional on some alternative to both non-naturalist realism and nihilism. Rather, we know that the burning is only going to happen in worlds where no expected mattering is at stake. So: easy call, right? It’s basically just free money-that-matters.

Hmm, though. Is this an easy call? Doesn’t seem like it to me. In particular, as I mentioned above: are you sure you don’t care about getting burned alive, conditional on nihilism? Does nihilism really leave you utterly indifferent to the horrific suffering of yourself and others?

Remember, nihilism – the view that there are no normative facts[32] – is distinct from what we might call “indifference-ism” – that is, the view that there are normative facts, and they actively say that you should be indifferent to everything.[33] On nihilism, indifference is no more normatively required, as a response to the possibility of innocent children being burned alive, than is intense concern (or embarrassment, or desire to do a prime number of jumping jacks). Conditional on nihilism, nothing is telling you not to care about yourself, or your family, or those children: you’re absolutely free to do so. And plausibly – at least, if your psychology is similar to many people who claim to accept something like nihilism – you still will care. Or at least, let’s assume as much for the moment.[34]

Response: “Granted, I still care; granted, I’m not indifferent. But conditional on nihilism, this care has no normative weight, no justification. I just do care, but it’s not the case that I should. I don’t want the children to be burned alive, and they don’t either, but their lives don’t matter; what we want doesn’t matter. Nothing matters. So, even if it’s psychologically difficult to take the deal, because my brute, unjustified care gets in the way even conditional on nihilism, when we ask whether I should take the deal, it’s an easy call: yes.”

We can dramatize this sort of thought by imagining three versions of yourself: one who lives in a nihilist world, one who lives in a non-naturalist realist world, and one who lives in a naturalism-but-things-matter world. Suppose that each of these selves sends a representative to the High Court, which is going to decide whether to take Martha’s nihilism-focused deal. The judge at the High Court asks the representatives in turn:

Judge: “To the representative of non-naturalist realism: in your world, should we take this deal?”

Non-naturalist realist: “Yes, your honor. It gives us a hundred dollars that matter.”

Judge: “To the representative of naturalism-but-things-matter: in your world, should we take this deal?”

Naturalist-but-things-matter: “Yes, your honor. It gives us a hundred dollars that matter.”

Judge: “To the representative of nihilism: in your world, is it the case that we shouldn’t take this deal?”

Nihilist: “No, your honor. In my world, shoulds aren’t a thing.”

Judge: “Well then, it seems we have an easy call. Multiple representatives say that we should take the deal, and no representative says that we shouldn’t. So, let’s take it.”[35]

But I think this sort of court is refusing to speak to the nihilist in her own language; refusing to accept the currency of a nihilist world. After all (we’ve assumed above), it’s not the case that, conditional on nihilism, you would be totally non-plussed as to how to decide between e.g. being burned alive, or taking a walk in a beautiful forest; between saving a deer from horrible pain, or letting it suffer; between building a utopia, or a prison camp.[36] Granted, you wouldn’t have any shoulds (non-natural or natural) to tell you what to do. But do you, actually, need them? After all, there is still other deliberative currency available – “wants,” “cares,” “prefers,” “would want,” “would care,” “would prefer,” and so on. Especially with such currency in the mix, is it so hard to decide for yourself?

But if we admit this sort of currency into the courtroom – without requiring that we later cash it out in terms of “shoulds” -- then the judge no longer has such an easy time. Thus, if the judge had instead asked the representatives whether they want the deal to get taken, she would have received an importantly different answer from the nihilist: namely, “nihilism world really really doesn’t want you to take this deal.” And she would not have been able to say “multiple representatives want us to take this deal, and no one wants us not to.”

That is, by choosing specifically via the should-focused procedure described above, the judge is declaring up front that “This is the court of should-y-ness (choiceworthiness, expected mattering, etc) in particular. All representatives from worlds that can pay in should-y-ness for influence over the decision shall be admitted to the table. The voices of those who cannot pay in should-y-ness, however, shall not be listened to.” And when the representative of nihilism world approaches the judge and says “Judge, I care deeply about your not taking this deal; I am willing to pay what would in my world be extreme costs to prevent you from taking this deal; I ask, please, for some sort of influence,” the judge says only: “Does all this talk of ‘caring deeply’ and ‘willing to pay extreme costs’ translate into your having any should-y-ness to offer? No? Then begone!”

Now, you might say: “But: the judge isn’t picking some arbitrary currency, here. Decision-making really is the court of should-y-ness.” And indeed, we do often think of it that way. But I think the topic at least gets tricky. Can nihilists make decisions, without self-deception? As mentioned above, I think they can. Perhaps it’s a slightly different kind of decision, framed in slightly different terms -- e.g., “If I untangle the deer from the barbed wire, then it can go free; I want this deer to be able to go free; OK, I will untangle the deer from the barbed wire” -- but it’s at least similar to the non-nihilist version, and I think it’s a substantive choice to assume, at the outset, that reasoning of this kind has no place in the high court.

And it’s not as though all the other, non-nihilist theories have the exact same currency, either. In the land of divine command theory, the currency is mattering made out of divine commands. In the land of non-naturalist realism, the currency is mattering made out of non-natural frosting. In the land of naturalism-but-things-matter, the currency is mattering made out of natural stuff. And in the land of nihilism, the currency is just: natural stuff. Do all the realms that call their currency “mattering” get to gang up on the one that doesn’t? Who set up this court? We would presumably object if the court only accepted shoulds that were made out of e.g. divine commands, or non-natural frosting. So why not accept the currency of every representative? Letting everyone have a say seems the fairest default.

VI. The analogy of the Galumphians

Here's an analogy that might help illustrate the nihilist representative’s perspective on the situation. Suppose that there are three tribes of Galumphians, all of whom descend from an ancient tribe that placed the highest value on protecting the spirit god Galumph, who was amorphously associated with a certain style of ancient temple. All three tribes continue to build and protect this style of temple, but they understand it in different ways:

- In the first tribe, they say: “Galumph floats on top of (‘supervenes on’) our temples, in a separate realm that no one can detect or interact with, and we protect our temples for that reason.”

- In the second tribe, they say: “Galumph just is the temples; in protecting our temples, we protect Galumph.”

- In the third tribe, they say: “We no longer believe in the god Galumph: our temples are only themselves. But we love them dearly, and we will fight tooth and nail to protect them.”

Let’s say, further, that none of these tribes cares at all about what happens to the other tribes (the first two tribes each think that Galumph only lives in their realm in particular; and the third tribe is just fully focused on protecting its own temples in particular).[37]

Now suppose that a powerful sorcerer visits the tribes and offers them a deal: “I’ll add a few bricks of protection to a temple in the first tribe and in the second tribe. However, I will burn all the thousand temples of the third tribe.”

The three tribes hold a grand meeting to decide whether to take this deal.

Leader of the meeting: “Friends, we are all here from the grand lineage of the Galumphians. True, we each only care about what happens in our own realm; but spiritually, we are united in our desire to protect the great god Galumph, wherever he may live. So, let’s start by going around and saying how we each believe this deal affects Galumph protection in our own realm.”

First tribe representative: “In my realm, we believe that this deal protects Galumph; we support it.”

Second tribe representative: “In my realm, we believe that this deal protects Galumph; we support it.”

Third tribe representative: “Sorry, in my realm we don’t believe in Galumph, but this deal will result in the complete destruction of all of our beloved temples, and we are seriously against it.”

Leader of the meeting: “Ah, so two tribes believe that this deal protects Galumph; and no one believes that this deal will hurt Galumph. It appears, then, that the decision should be easy: clearly, the Galumph-optimal decision, by everyone’s lights, should be to take the deal.”

Third tribe representative: “Sorry, who decided that this decision was going to get made entirely in terms of Galumph-protection? Who picked this leader? The decision-process here is clearly biased against my realm: just because we don’t believe in Galumph anymore doesn’t mean that we’re OK with our temples getting destroyed.”

First tribe representative: “It was us; we picked the leader. But it’s totally fair to make this decision in Galumph-protection terms: after all, to decide what to do just is to decide what protects Galumph the most. Sure, we have our disagreements about the nature of Galumph, but we can all agree that Galumph is what it’s all about, right?”

Third tribe representative: “No -- we don’t think that Galumph is what it’s all about at all, actually.”

First tribe representative: “But how do you decide what to do?”

Third tribe representative: “We try to protect our temples. We love them.”

First tribe representative: “But… you don’t think they have any Galumph.”

Third tribe representative: “No Galumph at all.”

First tribe representative: “But why aren’t you in total despair? Why aren’t you indifferent to everything, in the absence of Galumph to guide you? Indeed, I expected you to show up at this meeting and be like: ‘Go ahead and burn our temples, we checked for Galumph but there’s none in our realm, so we’ve got no skin in the game.’”

Third tribe representative: “Long ago, when we first started having doubts about Galumph, we thought maybe that’s how we’d feel if we stopped believing in him. Indeed, for a while, even as the evidence against Galumph came in, we said ‘well, if there’s no Galumph, then nothing protects Galumph, so for practical purposes we can basically condition on Galumph existing even if it’s low probability.’ Eventually, though, as the evidence came in, we realized that actually, we loved our temples whether Galumph lives in them or not.”

First tribe representative: “Ok but you admit that your love for your temples does not protect Galumph at all – it’s just some attitude that you have. So while I recognize that it may be hard, psychologically, to let go of these temples (I think you haven’t fully internalized that they have no Galumph), you agree, surely, that the Galumph-protecting thing to do here is to submit your temples to the fire, and support the deal.”

Third tribe representative: “I am not here to protect Galumph. I am here to protect the temples.”

First tribe representative: “I cannot talk to this person. It is not Galumph-protecting for them to be admitted into this process. We are trying to make a decision here, people, and decisions are about Galumph protection.”

Third tribe representative: “C’mon, it’s not even very clear what the difference is between us and the second tribe: we have the same fundamental metaphysics, after all. Plausibly, the disagreement is mostly semantic – it’s about whether to call the temples Galumph or not.”

First tribe representative: “We agree: we think that the second tribe is a bunch of heathens, who barely understand the concept of Galumph. Galumph cannot be a temple; for with every temple, it is an open question whether Galumph lives in it! Thus, Galumph must live in a separate realm that no one can touch or interact with.”

Second tribe representative: “Yeah, we’re not sure that there’s a deep difference between us and the third tribe, either. But we’re definitely not into the separate realm thing, and we find talking about Galumph pretty natural and useful, so our bet is that Galumph talk is in good order and fine to continue with.”

Third tribe representative: “Whatever; it’s about the temples at the end of the day.”

Second tribe representative: “Yeah, true, Galumph protection is really all about the temples. It’s not like Galumph is some extra thing, anyway.”

First tribe representative: “You must talk about Galumph. Temples are nothing if they have no Galumph. Burn them for any shred of Galumph protection…”

This is far from a perfect analogy, but hopefully it can illustrate the sense in which a focus on “expected mattering” biases the decision against nihilist worlds in a way that the inhabitants of those worlds wouldn’t necessarily endorse.

VII. How much is nihilistic despair about meta-ethics anyway?

I said above that the “you should have higher credence on naturalism-but-things-matter” objection and the “wait but I still care about things even if nihilism is true” objection to the realist’s wager are closely related. That is, in both cases one rejects the idea of indifference (or: effective indifference) to worlds without the non-natural normative frosting. We can articulate this rejection as “things still matter even without the frosting!”, or as “I’m not indifferent even if things don’t matter!”. But it’s not always clear what of substance is at stake in the difference – just as it’s not always clear what’s at stake in the disagreement between the second and the third tribes, other than how to use the word “Galumph.”

I’m not, here, going to dig in on exactly how deep the differences between nihilism and naturalism-but-things-matter go. To the extent there are substantive differences, though, my own take is that naturalism-but-things-matter is probably the superior view – I expect normative talk (perhaps with some amount of adjustment/re-interpretation) to make sense even in a purely natural world (and I’m skeptical, more generally, of philosophers who argue that “X widely-used-folk-theoretical-term – ‘value,’ ‘consciousness,’ ‘pain,’ ‘rationality,’ etc -- must be used to mean Y-very-specific-and-suspicious-metaphysical-thing, and thus, either some very-specific-and-suspicious metaphysics is true, or X does not exist!”). And even in the absence of substantive differences, I feel far more resonance with the aesthetic and psychological connotations of naturalism-but-things-matter than with the aesthetic and psychological connotations of nihilism – e.g., despair, apathy, indifference, and so on. I have defended nihilism’s right to have a say in your decision-making, but I have not defended those things – to the contrary, one of my main points is that nihilism, as a purely meta-ethical thesis, does not imply them.

Indeed, my own sense is that most familiar, gloomy connotations of nihilism often aren’t centrally about meta-ethics at all. Rather, they are associated more closely with a cluster of psychological and motivational issues related to depression, hopelessness, and loss of connection with a sense of care and purpose. Sometimes, these issues are bound up with someone’s views about the metaphysics of normative properties and the semantics of normative discourse (and sometimes, we grope for this sort of abstract language in order to frame some harder-to-articulate disorientation). But often, when such issues crop up, meta-ethics isn’t actually the core explanation. After all, the most meta-ethically inflationary realists, theists, and so on can see their worlds drain of color and their motivations go flat; and conversely, the most metaphysically reductionist subjectivists, anti-realists, nihilists and so on can fight just as hard as others to save their friends and families from a fire; to build flourishing lives and communities; to love their neighbors as themselves. Indeed, often (and even setting aside basic stuff about mental health, getting enough sleep/exercise, etc), stuff like despair, depression, and so on is prompted most directly by the world not being a way you want it to be -- e.g., not finding the sort of love, joy, status, accomplishment and so forth that you’re looking for; feeling stuck and bored by your life; feeling overwhelmed by the suffering in the world and/or unable to make a difference; etc -- rather than by actually not wanting anything, or by a concern that something you want deeply isn’t worth wanting at the end of the day. Meta-ethics can matter in all this, yes, but we should be careful not to mistake psychological issues for philosophical ones – even when the lines get blurry.[38]

That said, I acknowledge that there are some people for whom the idea that there might be no non-natural normative facts prompts the sort of despair, hopelessness, and indifference traditionally associated with nihilism (whether they are implied by it or no). And to those people, the realist’s wager can appear as a kind of lifeline – a way to stay in connection with some source of meaning and purpose, even if only via a slim thread of probability; a reason to keep getting up in the morning; a thin light amidst a wash of grey.[39] If such people have been left unconvinced by my arguments above (both for “you should have higher credence on naturalism-but-things-matter” and for “you can still care about things, and give weight to that care, even if nihilism is true”), then I grant that it’s better to accept the realist’s wager than to collapse into full-scale indifference and despair; better to at least demand a dollar, in some worlds, before acquiescing to being burned alive in others; better to fight for the realist worlds rather than for no worlds at all. But I want to urge the possibility of fighting for more worlds, too.

VIII. Do questions about the realist’s wager make a difference in practice?

There’s more to say about lots of issues here, and I don’t claim to have pinned them all down. The main thing I want to urge people to do is to think carefully about Martha-style cases before blithely accepting claims like “I should just basically condition on non-naturalist realism, because that’s where all the expected mattering is,” or “it’s fine to just ignore the nihilism worlds.” It can be easy to say such things when it’s mostly an excuse to stop thinking about some objection to non-naturalist realism, or some worry about nihilism, and to get back to whatever you were doing anyway. But it can be harder when lives are on the line.

Perhaps one wonders, though: are any lives on the line? Does any of this matter in the real world, where meta-ethical angels do not appear and ask us to make bets about the true theory of meta-ethics? I think it does matter, partly because of the (b) and (c) stuff above – that is, because of the possible correlations between meta-ethical, normative-ethical, and empirical views.[40] Thus, if you have different normative-ethical credences (for example, on totalism vs. something messier) conditional on non-naturalist realism vs. its falsehood, then “I should just condition on non-naturalist realism, because that’s where all the expected mattering is” will alter your first order normative credences as well. And similarly, if you make different empirical predictions conditional on non-naturalist realism vs. not (for example, about the degree of normative consensus to expect amongst aliens and AIs), then it’ll alter the empirical worlds you’re acting like you live in, too. I won’t, here, try to map out or argue for any particular correlations – but I think they can make a decision-relevant difference.[41]
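To make the point about correlations a bit more concrete, here is a minimal sketch using the law of total probability. All of the numbers are made up purely for illustration (they are not credences defended anywhere in this essay), and the function name `marginal_credence` is hypothetical.

```python
def marginal_credence(p_realism, p_view_given_realism, p_view_given_not_realism):
    """Overall credence in a first-order view, via the law of total probability."""
    return (p_realism * p_view_given_realism
            + (1 - p_realism) * p_view_given_not_realism)


# Hypothetical, illustrative numbers only.
p_realism = 0.1                      # credence in non-naturalist realism
p_totalism_given_realism = 0.8       # credence in totalism if realism is true
p_totalism_given_not_realism = 0.2   # credence in totalism if realism is false

# Credence in totalism if you weigh every meta-ethical world by its probability.
overall = marginal_credence(
    p_realism, p_totalism_given_realism, p_totalism_given_not_realism
)

# Credence in totalism you effectively act on if you condition on realism.
wagered = p_totalism_given_realism

print(f"overall credence in totalism: {overall:.2f}")
print(f"credence after conditioning on realism: {wagered:.2f}")
```

With these assumed inputs, conditioning on realism moves the working credence in totalism a long way from the unconditioned figure, which is the sense in which the wager can change first-order views and not just meta-ethical ones.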

That said, I don’t want to overplay that difference, either, or to encourage getting bogged down in meta-ethical debates when they aren’t necessary. Indeed, as I’ve discussed in the past, I think that high-level goals like “make it to a wise and empowered future, where we can figure out all this stuff much better” look reasonably robust across meta-ethical (and normative ethical) views, as do many more of the “ethical basics” (suffering = bad, flourishing = good, etc). And often, getting the basics right is what counts.

- ^

In particular, I think it leaves us without the right type of epistemic access to the normative facts it posits. More here.

- ^

This objection is closely related to the one I discuss in “The despair of normative realism bot” – but it focuses more directly on how someone with a “realism-or-bust” attitude reasons in expectation about meta-ethical bets.

- ^

One is tempted to say stronger things, which I won’t focus on here. For example (though this gets complicated): it’s wrong to take this deal. Also (though this is a separate point): it’s scary to take this deal. Most other people – for example, your mother, those children, etc – would still very much prefer to not be burned alive, even if the natural world is all there is, and your favorite meta-ethics is false (what’s meta-ethics again?). So realist wagering puts you and them at odds. (Perhaps you say: “that’s scary” isn’t a philosophical objection. And strictly: fair enough – but it might be a clue to one.)

- ^

Alternatively, if the realist wagerer is actually conditioning on normative realism, they won’t even say things like this. Rather, they’ll just predict that in fact, they’ll win the hundred dollars (even if e.g. they just saw their friend get burned alive taking a deal like this – Martha must’ve made a mistake!). But this is an especially silly way to wager – one that compromises not just your morals, but your epistemics, too. Here I’m reminded of a friend sympathetic to the realist’s wager, who wondered whether he should lower his credence on the theory of evolution, because normative realism struggles with the evolutionary origins of our moral beliefs. Bad move, I say. But also, not necessary: realist wagerers can stay clearer-eyed.

- ^

It can sometimes feel strange to assign credences to meta-ethical views – and in particular, views that you disagree with – because it can be hard to say exactly what the views are claiming, and one suspects that if they are false, they are also incoherent and/or deeply confused. I’m going to set this sort of hesitation aside, though.

- ^

See MacAskill, Bykvist, and Ord (2020) for more. I’ll mostly talk about expected “mattering” in what follows, rather than choiceworthiness, but the difference isn’t important.

- ^

See in particular Riedener (2021).

- ^

That said, I think that some of the discussion below – for example, the question of whether objective normative facts have more “oomph” than subjective ones – applies naturally to categories that cut across the distinction between naturalist and non-naturalist views (for example, because some naturalist views treat normative facts as objective). Most of the points I make, though, could be reformulated using a different and/or more fine-grained carving up of the options.

- ^

Nihilism is sometimes accompanied by some further claims about the semantics of normative discourse – i.e., the claim that normative claims are candidates for truth or falsity, as opposed to e.g. expressions of emotions, intentions, etc — but I’m going to focus on the simpler version.

- ^

Yes, 0% is the wrong credence to have here – more on that in a moment.

- ^

Traditional answer: no? Wait, what about the thing you updated on? Hmm…

- ^

I say this as someone with fairly strong views about it.

- ^

Let alone: is-ought gaps, moral twin earth, the semantics of normative discourse, the implicit commitments of deliberation, and so on…

- ^

“Merely joy” “just love” – say it with a bit of disgust.

- ^

See, famously, Parfit (2013): “Naturalists believe both that all facts are natural facts, and that normative claims are intended to state facts. We should expect that, on this view, we don’t need to make irreducibly normative claims. If Naturalism were true, there would be no facts that only such claims could state. If there were no such facts, and we didn’t need to make such claims, Sidgwick, Ross, I, and many others [i.e. normative theorists] would have wasted much of our lives. We have asked what matters, which acts are right or wrong, and what we have reasons to want, and to do. If Naturalism were true, there would be no point in trying to answer such questions” (vol 2, p. 367).

- ^

Remind me what it means for something to matter again?

- ^

Possible realist wagerer response: yes but when you’re deciding in the face of meta-ethical uncertainty, giving weight to your a-rational desire to not be burned alive, even in nihilist worlds, is wrong and bad and selfish. Or maybe: that’s because you secretly have high credence on normative realism after all, even when you condition on nihilism! More on this below.

- ^

Intuition pump: realism wants to make morality like math. But we expect the aliens, the paperclippers, etc to agree about math.

- ^

Though note that the realist wagerers, at least, never said realism was true. So careful about giving them Bayes points. I.e., if Bob says “99% that I’m about to die of poison, but if that’s true then all my actions are low-stakes, so I’m going to act like it’ll be fine,” and then it is fine, you might give Bob points for his expected-utility reasoning, but you shouldn’t treat him like someone who predicted that it will be fine.

- ^

Thanks to Will MacAskill for discussion of this sort of factor.

- ^

Are we going to get two envelope problems, here? Maybe — I’m hoping to double click on this issue more at some point. But for now I mostly want to gesture at some of the basic dynamics (and I think two envelope problems are going to bite hardest if we have uncertainty about what sort of normative oomph factor to use, and try to take the expectation – but my examples here won’t involve that).

- ^

Wagerer: “It’s altruism towards the people-that-matter, at least? Weighted by their mattering?”

Skeptic: “What about towards the people, period?”

Wagerer: “I don’t care about people, period. I only care about people-that-matter.”

Skeptic: “Sounds a bit scary. Would you burn me alive for a dollar-that-matters, if I didn’t matter?”

Wagerer: “Yes. That’s like asking: would you burn me alive, if it were the case that you should burn me alive?”

- ^

E.g., if you say that X – say, the difference between a world with one person at 10 welfare and a world with one person at 20 welfare – matters the same amount conditional on total vs. average utilitarianism, you won’t always be able to say the same thing about Y – e.g. the difference between a world with two people, both at 10, and that world, but with one of them at 20 instead. Comparison 1: <10> vs. <20>. Comparison 2: <10, 10> vs. <10, 20>. That is, in both cases you add ten welfare to the total, but in the first you double the average, whereas in the second you multiply it by 1.5. So what sort of change to the average is equivalent in mattering, on average-ism, to adding ten units of value, on totalism? Is it 1.5x, or 2x, or something else? The available answers seem worryingly arbitrary.
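The arithmetic in this footnote can be checked with a short sketch. The helper names (`total`, `average`, `compare`) are hypothetical and introduced only for illustration; the welfare numbers are the ones from the two comparisons.

```python
def total(world):
    """Total welfare in a world, given a list of individual welfare levels."""
    return sum(world)


def average(world):
    """Average welfare in a world."""
    return sum(world) / len(world)


def compare(before, after):
    """How the change looks to a totalist (gain) and to an averagist (ratio)."""
    return {
        "total_gain": total(after) - total(before),
        "average_ratio": average(after) / average(before),
    }


comparison_1 = compare([10], [20])           # one person: 10 -> 20
comparison_2 = compare([10, 10], [10, 20])   # two people: one goes 10 -> 20

print(comparison_1)
print(comparison_2)
```

Both comparisons add the same ten units to the total, but they multiply the average by different factors, so fixing the exchange rate between the two theories on one comparison does not settle it for the other.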

- ^

See MacAskill, Bykvist, and Ord (2020), Chapter 5, for discussion of and objections to structuralist views.

- ^

Or at least, they imply the same behavior if you’re certain about them. If you have different amounts of credence on them (and we have some way of separating your credences from your normative oomph factors), then this difference can matter in Martha-like cases – a difference that I think helps defuse the objection that the difference between small-deal and big-deal total utilitarianism is behaviorally meaningless.

- ^

This is also true of the standard way of understanding utility functions as unique up to positive affine transformation.

- ^

This is from Lewis (1947). The full passage is one of my favorites from Lewis: “Men are reluctant to pass over from the notion of an abstract and negative deity to the living God. I do not wonder. Here lies the deepest tap-root of Pantheism and of the objection to traditional imagery. It was hated not, at bottom, because it pictured Him as man but because it pictured Him as king, or even as warrior. The Pantheist’s God does nothing, demands nothing. He is there if you wish for Him, like a book on a shelf. He will not pursue you. There is no danger that at any time heaven and earth should flee away at His glance. If He were the truth, then we could really say that all the Christian images of kingship were a historical accident of which our religion ought to be cleansed. It is with a shock that we discover them to be indispensable. You have had a shock like that before, in connection with smaller matters—when the line pulls at your hand, when something breathes beside you in the darkness. So here; the shock comes at the precise moment when the thrill of life is communicated to us along the clue we have been following. It is always shocking to meet life where we thought we were alone. ‘Look out!’ we cry, ‘it’s alive.’ And therefore this is the very point at which so many draw back—I would have done so myself if I could—and proceed no further with Christianity. An ‘impersonal God’—well and good. A subjective God of beauty, truth and goodness, inside our own heads—better still. A formless life-force surging through us, a vast power which we can tap—best of all. But God Himself, alive, pulling at the other end of the cord, perhaps approaching at an infinite speed, the hunter, king, husband—that is quite another matter. There comes a moment when the children who have been playing at burglars hush suddenly: was that a real footstep in the hall? There comes a moment when people who have been dabbling in religion (‘Man’s search for God!’) suddenly draw back. 
Supposing we really found Him? We never meant it to come to that! Worse still, supposing He had found us?”

- ^

Yet another reason.

- ^

Of course, these aren’t new issues. We’ve just added another factor to our EV calcs – and thus, another place for the mugger to strike. And if we can block the mugger elsewhere, perhaps we can block it here as well. But realist wagerers also tend to be unusually open to being mugged.

- ^

That is, I do think that objective normative facts have a different intuitive character than subjective ones – one that makes intrinsic differences in oomphy-ness intuitive as well.

- ^

This is partly because I think that we just do have to incorporate those considerations into our decision-making – and I’m not sure that there are any distinctive issues for realist-wagerers in doing so. That said, I do think that (b) and (c) are crucial to why realist wagers matter in practice, even in the absence of Martha-like cases.

- ^

Reminder that I’m not, here, including any further claims about the semantics of normative discourse.

- ^

Ok, but what about the following version of the case?

Martha’s indifference-ism-focused deal: Martha the meta-ethical angel offers you the following deal. If indifference-ism is false, she’ll give you a hundred dollars. If indifference-ism is true, she’ll burn you, your family, and a hundred innocent children alive.

One issue here is that indifference-ism is very implausible – and if the probability that the burning happens is low enough, you should take the deal regardless of whether you care about what happens in that case. That said, there are some normative-ethical views that have implications in the broad vicinity of indifference-ism – i.e., versions of totalism that become indifferent to all finite actions in an infinite world, because they can’t affect the total (infinite) utility; or perhaps, views on which we are sufficiently “clueless” about the consequences of our actions that they all have the same EV. So you can’t just say that “this deal is OK, but indifference-ism is totally out of the question.”

And indeed, interestingly, I think there’s some hesitation about taking this deal, too – hesitation that seems harder to justify than hesitations about the nihilism-focused version (or the original), and which might therefore prompt suspicion of all the hesitations about Martha-like deals. In particular, there is, perhaps, some temptation to say “even if I should be indifferent to these people being burned alive, I’m not! Screw indifference-ism world! Sounds like a shitty objective normative order anyway – let’s rebel against it.” That is, it feels like indifference-ism worlds have told me what the normative facts are, but they haven’t told me about my loyalty to the normative facts, and the shittyness of these normative facts puts that loyalty even more in question.

And perhaps, as well, there’s some temptation to think that “Well, indifference-ism world is morally required to be indifferent to my overall decision-procedure as well – so I’ll use a decision-procedure that isn’t indifferent to what happens in indifference-ism world. Indifference-ism world isn't allowed to care!”

These responses might seem dicey, though. If they (or others) don't end up working, ultimately I think that biting the bullet and taking this sort of deal is in fact less bad than doing so in the nihilism-focused version or the original. So it’s an option if necessary – and one I’d substantially prefer to biting the bullet in all of them.
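The earlier remark in this footnote, that a low enough probability of the burning makes the deal acceptable whatever you think of that branch, is just an expected-value threshold. Here is a minimal sketch of that threshold. The payoff units are entirely hypothetical (the hundred dollars is set to 1 unit and the burning to a billion units), and the function names are introduced only for illustration.

```python
def deal_expected_value(p_bad, benefit, harm):
    """Expected value of a deal that pays `benefit` with probability 1 - p_bad
    and costs `harm` with probability p_bad."""
    return (1 - p_bad) * benefit - p_bad * harm


def break_even_probability(benefit, harm):
    """Probability of the bad branch at which the deal's expected value is zero."""
    return benefit / (benefit + harm)


# Hypothetical stakes, in arbitrary units.
benefit = 1.0   # the hundred dollars
harm = 1e9      # the burning

threshold = break_even_probability(benefit, harm)
print(f"take the deal only if P(bad branch) < {threshold:.2e}")
print(deal_expected_value(threshold / 2, benefit, harm) > 0)
print(deal_expected_value(threshold * 2, benefit, harm) > 0)
```

The sketch assumes you do care about the bad branch at full weight; how much to discount that branch, if at all, is exactly what the rest of the essay disputes.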

- ^

Perhaps, as a psychological matter, some genuinely would be indifferent to being burned alive, to the suffering of others, and so on, conditional on nihilism – or at least, substantially less invested in whether or not these things happen. I’ll discuss this relationship with nihilism, and meta-ethics more broadly, more below.

- ^

See Ross (2006) for more on this sort of reasoning; and MacAskill (2013) for some problems.

- ^

Some realists seem to think that making decisions like this requires some kind of self-deception about whether nihilism is true, but I’m skeptical.

- ^

This is necessary to capture the analogy with different possible worlds, where the representatives of each world are treated as knowing that their world is the one that exists.

- ^

Here I’m reminded of a diagnosis of solipsism/other-minds-skepticism that I think I once heard associated with Stanley Cavell, on which one mistakes a psychological difficulty relating to other people with an epistemic or metaphysical one about whether they or their minds exist at all. I’m not going to evaluate that diagnosis here, though.

- ^

Though here I think again of the analogy with theism. When I used to talk to a lot of Christian apologists, I would often hear the argument that without God, life and ethics are meaningless – an argument that, looking back, seems to me to function in a quasi-coercive way. That is, if you can convince someone that all meaning and purpose depends on your ideology being true, you can scare them into believing it – or at least, trying to believe it, and betting on it in the meantime, while assuming (I expect, wrongly) that they’ll fall into un-ending despair and hopelessness if they ever stop. And I worry that the non-naturalist realists are somehow pulling a similar move on themselves, and on their innocent undergrads (see “The despair of normative realism bot” for some more on this). But I wish I had asked the Christian apologists I spoke to: if life and ethics are meaningless without God, does that mean you’d take a deal like “I’ll give you a dollar if theism is true, but I’ll torture you and a hundred others if it’s false?” (Would even Jesus take that deal? What about Jesus on the cross? Eli, Eli…) And if not, what’s your N, such that you’re indifferent between saving one person conditional on theism, vs. N people conditional on non-theism? And I want to ask the same of the non-naturalist realists, now.

- ^

See Chappell here for more discussion of ways meta-ethics can matter.

- ^

See e.g. Eliezer Yudkowsky’s history with the realist’s wager, which initially led him to want to push for the singularity as fast as possible – a view he later came to see as extremely misguided.

Hi Joe,

I find this really interesting! I'm not sure I completely understand what your view is, though, so let me ask you about a different case, not involving nihilism.

Suppose you assign some credence to the view that all worlds are equally good ("indifference-ism"). And suppose the angel offers you a gamble that does nothing if that view is false, but will kill your family if that view is true. You use statewise indifference reasoning to conclude that accepting the gamble is just as good as rejecting it, so you accept it.

Here are some possible things to say about this:

Am I right that, from what you write here, you'd lean towards option 4? In that case, what would you say if you don't care about your family? Or what if you're not sure what you care about? (Replace "indifference-ism" with the view that you are in fact indifferent between all possible outcomes, for example.) And are you thinking more generally that what you should do depends on what your preferences are, rather than what's best? Sorry if I'm confused!

Hi Jake,

Thanks for this comment. I discuss this sort of case in footnote 33 here -- I think it's a good place to push back on the argument. Quoting what I say there:

That is, I'm interested in some combination of:

Adding a few more thoughts, I think part of what I'm interested in here is the question of what you would be "trying" to do (from some kind of "I endorse this" perspective, even if the endorsement doesn't have any external backing from the normative facts) conditional on a given world. If, in indifference-ism world, you wouldn't be trying, in this sense, to protect your family, such that your representative from indifference-ism world would indeed be like "yeah, go ahead, burn my family alive," then taking the deal looks more OK to me. But if, conditional on indifference-ism, you would be trying to protect your family anyway (maybe because: the normative facts are indifferent, so might as well), such that your representative from indifference-ism world would be like "I'm against this deal," then taking the deal looks worse to me. And the second thing seems more like where I'd expect to end up.

Hi Joe, thanks for sharing this. I enjoyed it - as I have enjoyed and learned from many of your philosophy posts recently!

A couple things:

1) I'm curious about your thoughts on the role of knowledge in epistemology and decision theory. You write, e.g., 'Consider the divine commands of the especially-big-deal-meta-ethics spaghetti monster...'. On pain of general skepticism, don't we get to know that a spaghetti monster is not 'the foundation of all being'? (I don't have a strong commitment here, but after talking with a colleague who works in epistemology + decision theory and studied under Williamson, I think this sort of k-first approach is at least worth a serious look.)

2) At risk of being the table-thumping realist, I wanted to press on the nihilist's response. You write that the nihilist has 'other deliberative currency available – “wants,” “cares,” “prefers,” “would want,” “would care,” “would prefer,” and so on.' We then get an example of this style of practical reasoning: '“If I untangle the deer from the barbed wire, then it can go free; I want this deer to be able to go free; OK, I will untangle the deer from the barbed wire”.'

The first two sentences don't in any way support the third (since 'supports' is a normative relation, and we're in nihilism world). The agent could just as well have thought to herself, 'If I untangle the deer from the barbed wire, then it can go free; I want this deer to be able to go free; OK, I will now read Hamlet.' There's nothing worse about this internal dialogue and sequence of action (assuming the agent does then read Hamlet) because, again, nothing is worse than anything else in nihilism world.

You ask, 'Who set up this court? We would presumably object if the court only accepted shoulds that were made out of e.g. divine commands, or non-natural frosting. So why not accept the currency of every representative?' I think the realist will want to say: 'the principled distinction is that in the other worlds there is some sort of normativity. Whereas in nihilism world there isn't. That's why nihilism doesn't get a seat at the table.'

As far as I can tell (not being a specialist in metaethics), the best the nihilist can hope for is the "Humean" solution, namely that our natural dispositions will (usually) suffice to get us back in the saddle and keep on with the project of living and pursuing things of "value." ("...fortunately it happens, that since reason is incapable of dispelling these clouds, nature herself suffices to that purpose, and cures me of this philosophical melancholy and delirium, either by relaxing this bent of mind, or by some avocation, and lively impression of my senses, which obliterate all these chimeras. I dine, I play a game of backgammon, I converse, and am merry with my friends; and when after three or four hours' amusement, I would return to these speculations, they appear so cold, and strained, and ridiculous, that I cannot find in my heart to enter into them any farther.

Here then I find myself absolutely and necessarily determined to live, and talk, and act like other people in the common affairs of life" (Treatise 1.4.7.8-10).) But this does nothing to address the question of whether we have reason to do any of those things. It's just a descriptive forecast about what we will in fact do.

Disclaimer: I didn't read the whole post

But taking just this wager:

You should accept this wager, since if moral realism is false then by definition it doesn't matter whether anyone is tortured or not.

I didn't follow the meaningfulness to the argument of the natural/non-natural distinction but from this quote it looks like your view doesn't depend on it:

The perception you notice is just an intuition against moral non-realism. If moral realism isn't true, it doesn't matter what you want.

I really liked this post! fwiw, I've a partial response in my latest post on 'Metaethics and Unconditional Mattering'.