(This report is viewable as a Google Doc here.)

This report was completed as part of the Cambridge Existential Risks Initiative Summer Research Fellowship and with continued grant support following the end of the program in September 2022.

Acknowledgments

A special thank-you to my CERI mentor Haydn Belfield for his non-stop support and to Uliana Certan for her impeccable editing talent. I'd also like to thank those who donated their time to chat about the research including Michael Aird, Matthew Gentzel, Kayla Matteucci, Darius Meissner, Abi Olvera, and Christian Ruhl. And of course, one final thank-you to the entire CERI team and the other research fellows for all their support and rigorous debate.

1. Summary:

1.1 The Problem

The increasing autonomy of nuclear command and control systems stemming from their integration with artificial intelligence (AI) stands to have a strategic level of impact that could either increase nuclear stability or escalate the risk of nuclear use. Inherent technical flaws within current and near-future machine learning (ML) systems, combined with an evolving human-machine psychological relationship, work to increase nuclear risk by enabling poor judgment and could result in the use of nuclear weapons inadvertently or erroneously. A key takeaway from this report is that this problem does not have a solution; rather, it represents a shift in the paradigm behind nuclear decision making and it demands a change in our reasoning, behavior, and systems to ensure that we can reap the benefits of automation and machine learning without advancing nuclear instability.

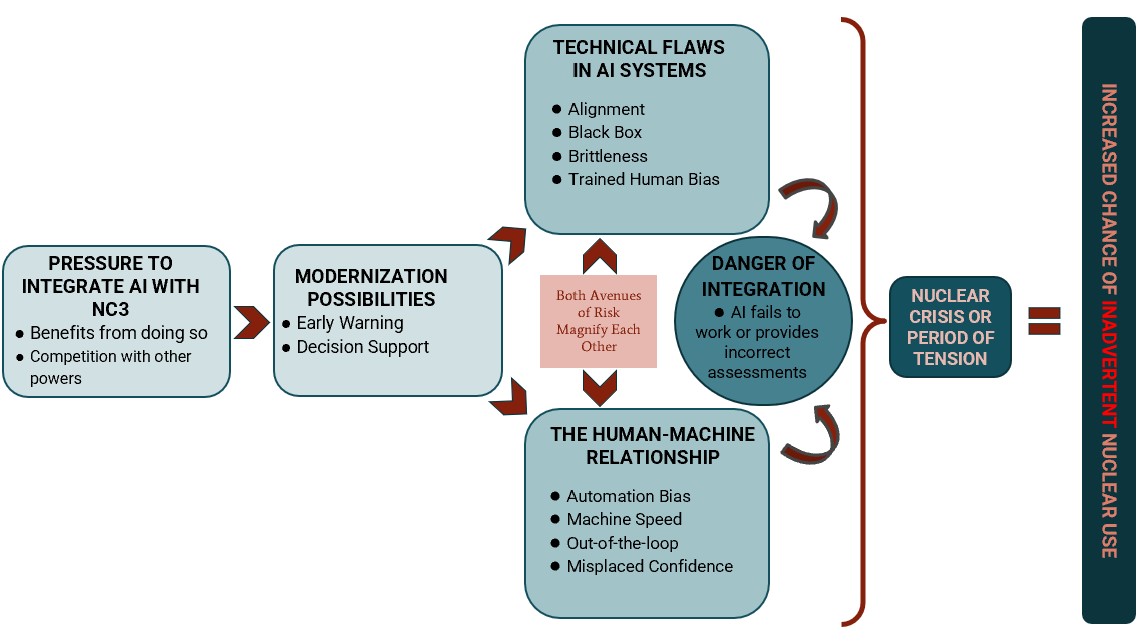

The figure below demonstrates the general path from AI/ML systems integration with nuclear command and control toward an increased risk of nuclear weapons use.

Navigating our relationship with machines, especially in a military context, is integral to safeguarding human lives. When considering this challenge at the already contentious nuclear level, our concern expands from preserving human lives to preserving human civilization. Undoubtedly, any development that alters decision making around nuclear weapons use demands our close attention. AI and ML stand to enable a significant increase in automation throughout Nuclear Command, Control, and Communications (NC3). Only time will reveal the exact way this will unfold, but early warning and decision support systems seem to be likely candidates for high levels of automation.

Integrating ML systems could increase the accuracy of these systems while also potentially removing human related errors. However, given their importance in detection, and their history with false positives, anything that impacts early warning and decision support systems requires the utmost scrutiny even if the change stands to potentially improve the safety of such systems.

This report is designed to lay out the problem, analyze possible solutions, and present funding opportunities designed to support impactful projects and developments. However, in a manner more akin to the academic paper, the through line in this report highlights that the automation ML fessably brings to NC3 could have substantial impacts on nuclear decision making. By increasing automation, we are effectively ‘pre-delegating’ authority to machine intelligences which are inevitably flawed systems despite the advantages they could bring. In one sense, their imperfection does not in-of-itself derail any argument in favor of ML integration. They would either work alongside, or replace, human operators who are also both flawed and limited in what they can do. However, the true danger of relying on flawed machines becomes apparent when we consider how automation will affect the human-machine relationship.

The very purpose of developing AI is for its theoretical ability to perform better than humans - to be faster, stronger, and continuously vigilant. However, overconfidence in their abilities could result in over deployment of the technology and premature ‘pre-delegation’ of responsibility. This not only sets the stage for technical flaws within the modern and nearterm ML systems to increase the risk of catastrophes, it also removes humanity from this process. While humans are far from perfect, we’ve managed to avoid inadvertent nuclear weapons use and nuclear war for just under a century. I argue that human weakness itself played a key role in preventing inadvertent use of nuclear weapons. Therefore, the increasing automation that accompanies ML integration with NC3 could represent a dramatic shift in nuclear weapons decision making processes and this exacerbates pre-existing risks around inadvertent use.

1.2 Possible interventions?

A philanthropist interested in reducing nuclear risk stemming from a poorly planned integration of ML with NC3 could support efforts to increase research and discussion on this topic, and/or efforts to provide stronger empirical support of these concepts and possibilities.

Possible interventions include funding new experimental wargaming efforts, funding research at key think tanks, and increasing international AI governance efforts. While far from an exhausted list, some of the following think tanks and research groups are likely impactful candidates:

- The United Nations Institute for Disarmament Research

- Stockholm International Peace Research Institute

- RAND Corporation

- The Centre for Strategic and International Studies

- The Nuclear Threat Initiative

I look at each intervention in more detail at the end of the document.

1.3 Target Audience

This report is designed to outline the effect that integrating AI/ML with NC3 could have for the risk of inadvertent use. It is also primarily designed for an audience already somewhat versed in nuclear deterrence literature with an understanding of what crisis stability/instability entails. Nonetheless, this report does delve into some of these topics insofar as they directly interact with the main argument. What this report is not is a deepdive into the computer science behind AI technology, nor is it a psychological piece of research designed to act as the definitive piece on the human-machine relationship.

This work aims to build off insights from both the fields of nuclear deterrence and AI safety. In synthesizing the literature of these two fields I hope to inform nuclear grantmakers of the risks involved in this integration and to provide direction for further research or other efforts to mitigate this problem.

1.4 Scope of Analysis

This work focuses on the U.S. and allied Western states: the U.K., France, and Israel. The reason for this is threefold:

- Systems between different powers differ and of the nuclear powers, I am best positioned to examine western nuclear command.

- The problems addressed are related to the technology itself: how it could potentially impact the human-machine relationship and affect nuclear decision-making. These issues are not state specific, so omitting other nuclear states from the scope of this report is not a significant shortcoming.

- Cooperative efforts to reduce nuclear risk are arguably preferable, but unilateral action matters and can make a positive impact on nuclear stability.

In this case, any effort to mitigate the negative side effects of AI integration with nuclear command will likely lead to a net-positive outcome in terms of safety for all actors. Therefore, one can primarily focus on the U.S. and still provide reasonable suggestions for reducing nuclear risk.

There is also the question of alarmism. Some thinkers have rightly pointed out that discussions on emerging technologies often amounts to a dangerous form of alarmism.[1] Historically, other technologies that were predicted to change the nature of warfare, such as chemical weapons, failed to live up to these expectations.[2] Other times, “even when technologies do have significant strategic consequences, they often take decades to emerge, as the invention of airplanes and tanks illustrates.”[3] The notable exception to this was the advent of nuclear weapons. The undeniability of their sheer destructive power has dominated international security and great power interactions since their conception. While the details of how AI will impact both the world at large, and the military context specifically, rests uncertain, there are reasons to be concerned that it will be more akin to nuclear weapons than other overhyped technologies. AI has been likened to electricity; “like electricity brings objects all around us to life with power, so too will AI bring them to life with intelligence.”[4] Others have stated that it will be “the biggest geopolitical revolution in human history.”[5] The potentially massive impact of strategic military AI, and the relatively small amount of philanthropic directed to this issue, highlight the need to outline potential dangers.

1.5 Outline of Report

This report starts with defining what is meant when discussing AI and NC3. Second, it then outlines the pressures to integrate AI with NC3, evidence to support this claim, and how this modernization could take place. Third, the report portrays the mechanisms that could lead to the risk of increased nuclear use risk: technical flaws within AI systems and problems surrounding the human-machine relationship. Forth, the report briefly analyzes a variety of solutions to these risks and outlines which seem most likely to have a positive impact on risk reduction. Fifth and finally, it provides a list of tractable funding opportunities.

2. Defining Key Aspects of the Discussion

2.1 Artificial Intelligence and Machine Learning

Automation, to varying degrees, has been a part of deterrence and command/control since the dawn of nuclear weapons.[6] “Modern AI” as it is publicly imagined is a relatively new addition. The Soviet Perimetr system, known as the ‘Dead Hand’ in the West, is an example of a more extreme form of automation with nuclear weapons. This was an automated NC3 system designed to react in a situation in which a potential nuclear detonation was detected and communication with national leadership was dead. The system could interpret this as an indication of a nuclear attack against Russia,[7] and give authority and ability to a human operator in a hardened bunker to launch nuclear missiles in response.[8] This operator would likely have very little information other than what the Perimetr system provided and would have to decide whether or not to trust its determination.

There is disagreement as to how developed this Perimetr system actually was.[9] Nonetheless, it acts as an example of the potential pitfalls associated with linking a ‘Doomsday Device’ to a machine intelligence. Fortunately, both the U.S. and U.K. have made formal declarations that humans will always retain political control and remain in the decision-making loop when nuclear weapons are concerned.[10]

The term “AI” invokes a myriad of different definitions, from killer robots to programs that classify images of dogs. What brings these computational processes together under the umbrella term of artificial intelligence is their ability to solve problems or perform functions that traditionally require human levels of cognition.[11]

Machine learning (ML) is an important subfield of this research as these systems learn “by finding statistical relationships in past data.”[12] To do so, they are trained using large data sets of real-world information. For example, image classifiers are shown millions of images of a specific type and form.[13] From there, they can look at new images and use their trained knowledge to determine what they are seeing. There are also a number of ways one can train an ML system. Training can be supervised, where data sets are pre-labeled by humans, or unsupervised, where the AI finds “hidden patterns or data groupings without the need for human intervention.”[14]

With this in mind, while ML is the overarching driver behind the renaissance of intelligent machines, its own subset of deep learning is arguably the main way in which AI will be used in nuclear command. Deep learning differs from machine learning through the number, or depth, of its neural network layers, which allows it to automate much of the training process and requires less human involvement in its training.[15] This automation allows the system to use unstructured or unlabeled data to train itself by finding patterns within larger datasets that are not curated by humans. This makes them extremely useful for recognizing patterns and managing and assessing data for “systems that the armed forces use for intelligence, strategic stability and nuclear risk.”[16]

However, because the ways in which AI could be integrated with NC3 could be incredibly varied and broad, I will generally use the ML terminology when discussing AI in this report. To put this in context, one study found that potentially 39% of the subsystems that make up NC3, could be integrated with ML.[17] While deep learning may be crucial to the success of AI within command systems, it is but one, very important, subset of ML, and therefore this report will focus on the idea of machine learning.

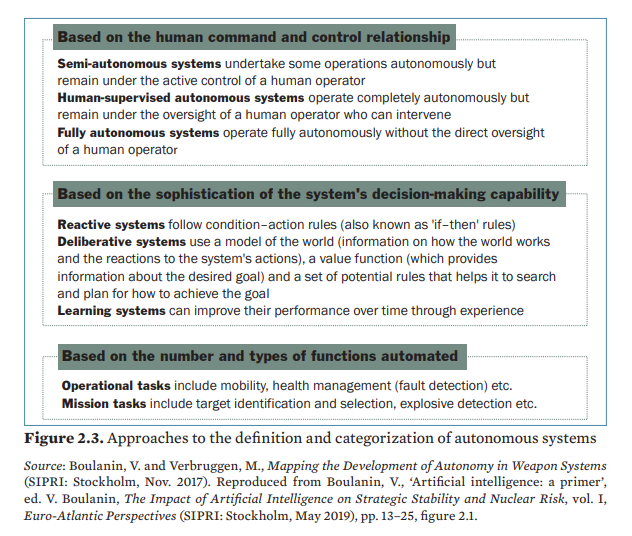

Machine learning is the key to the advent of intelligent machines within nuclear command, and the autonomy it enables is arguably the most important benefit. Autonomy, or ‘machine autonomy,’ “can be defined as the ability of a machine to execute a task or tasks without human input, using interactions of computer programming with the environment.”[18]

By removing human operators from the decision making loop in certain instances, one can better leverage both the operating speed, and the skill of ML systems to find hidden patterns in large complicated data sets. This presents a large strategic advantage within a military context where there is a premium on haste and reliable information. For the purpose of this report, AI integration will be generally discussed in terms of ML, automation, and its ability to assess data and provide analysis.

2.2 Nuclear Command, Control, and Communications (NC3)

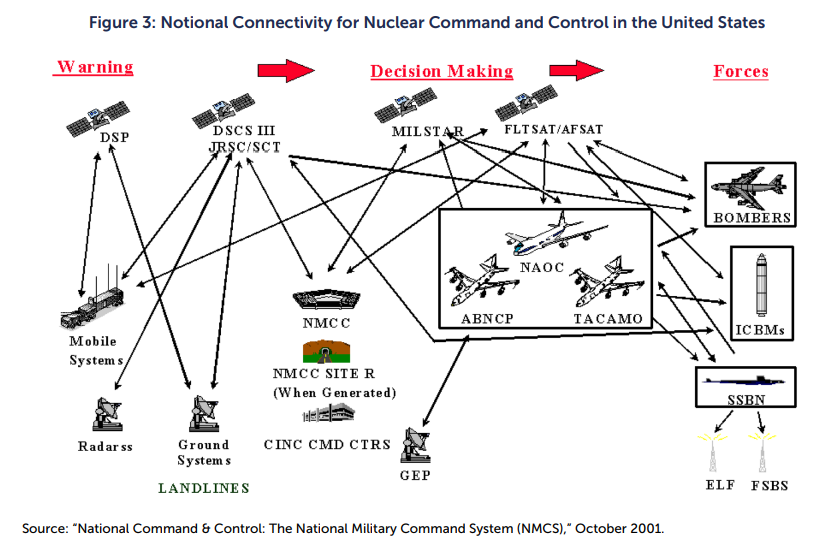

NC3 is “the combination of warning, communication, and weapon systems–as well as human analysts, decision-makers, and operators–involved in ordering and executing nuclear strikes, as well as preventing unauthorized use of nuclear weapons”.[19]

New and more powerful ML systems could be used to improve the speed and quality of assessment completed by NC3. ML systems’ ability to find correlations by continuously sorting through large amounts of data with an objective eye is particularly relevant to early-warning systems and pre-launch detection activities within the nuclear security field.

The other core reason for focusing on early warning and decision-support systems within NC3 is their susceptibility and influence on the possibility of inadvertent use. Since the inception of nuclear weapons, there has been a plethora of false alarms and false positives due to both technical and human error.[20] A now classic example of this occurred in the Soviet Union in September 1983 when Lieutenant Colonel Stanislav Yevgrafovich Petrov’s early warning system falsely detected five incoming U.S. Minuteman intercontinental ballistic missiles (ICBMs). The system confirmed the attack with the highest level of confidence with a probability factor of two.[21] Despite this, he had reservations about the system’s capability and accuracy and realized that the incoming attack did not fit Soviet strategic doctrine as it was far too small in scale.[22] Ultimately he dedicated to report it as a false alarm and his intuition was correct. The machine had made the wrong call and Petrov’s skepticism and critical thinking combined likely contributed to preventing Soviet retaliation.

On the other side of the Iron Curtain, NORAD was not free from human and technical error resulting in false alarm situations. In November 1979 an early warning system was accidentally fed test scenario data designed to simulate an incoming Soviet nuclear attack. Lucky radar was about to confirm that this was a mistake and in 1980 NORAD “changed its rules and standards regarding the evidence needed to support a launch on warning.”[23] However, this isn’t the only example as less than a year later a faulty computer chip caused the early warning system to detect what looked like an incoming Soviet attack.[24] At 02:26 on 3 June 1980, National Security Advisor Zbigniew Brzezinski received a telephone call informing him that 220 missiles had been fired at the U.S, which was then confirmed in another call with the number of missiles being raised to 2,200.[25] At the literal last minute before he was about to inform President Carter did Brzezinski receive the final call telling him it was a false alarm that had been caused by a faulty computer chip. In one sense these stories demonstrate that even in the face of complex and flawed systems, organizational safety measures can prevent inadvertent use. However, they also speak to the frightening ease with which we arrive at the potential brink of nuclear use when even a small mistake is made.

Integrating ML systems could increase the accuracy of these systems while also potentially removing the human source of these errors. However, given their importance in detection, and their history with false positives, anything that impacts early warning and decision support systems requires the utmost scrutiny even if the change stands to potentially improve the safety of such systems.

3. Pressures to Integrate AI with NC3 and the Shape It Could Take:

3.1 Pressures to integrate ML with nuclear systems

Despite the outlined dangers of AI integration, there are pressures and incentives to use ML systems within NC3. As previously stated, automation has always played a role in nuclear strategy and deterrence. ML could take the level of automation to new heights and potentially change nuclear weapons decision-making.

In one sense, states desire this integration for the strategic advantages that it promises.[26] ML systems can function without rest, look at enormous amounts of data, and, perhaps most importantly, find patterns and connections in a way that often outperforms human analysts by also being able to draw conclusions from seemingly unrelated data points.[27] Furthermore, current NC3 systems are aging and the last major update was during the 1980s.[28] The need to ensure the technical effectiveness of NC3 is clear.

Beyond working for deterrence as intended , there is also interest in ensuring that systems are progressively safer. The automation of ML stands to reduce the number of near-calls related to human error, cognitive bias, and fatigue.[29] Despite concerns over integrating AI, it’s also blatant that human operators are far from perfect and prone to allowing biases or making mistakes, especially when completing repetitive tasks or assessing adversarial moves.

Finally, states have an interest in keeping up with, if not surpassing, their adversaries’ military technology.[30] This is particularly concerning regarding the implementation of ML in nuclear command because in “an effort to gain a real or perceived nuclear strategic advantage against their adversaries, while engaging in an AI race, states may place less value on AI safety concerns and more on technological development.”[31] While the development of AI technologies by major states may not currently be best characterized as a ‘race’, the pressure to keep up is unmistakable: “AI has the potential to drastically change the face of war and the world at large… This in turn not only drives general integration, but raises the risk that both our advancements, and those of adversarial nations, increase the speed at which we field AI-enabled systems, even if testing safety measures are lacking.”[32] Not only will modernized NC3 incorporate ML but there is a real risk of rushed integration with higher risk tolerance than normally accepted.

3.2 Modernization

Given these pressures to integrate ML with nuclear command, what concrete evidence is there that integration is contemplated within the U.S. defense establishment? Additionally, how might this integration specifically take place within early warning decisions support systems?

Despite the inherently classified nature of these developments, public statements by US leadership indicate that NC3 modernization could include further automation and AI integration.[33] When asked about AI and NC3 modernization, former USSTRATCOM Commander General Hyten stated: “I think AI can play an important part.”[34] Former director of the USSTRATCOM NC3 Enterprise Center publication stated the desire and need for AI experts for NC3 modernization.[35] This follows statements by former U.S. Secretary of Defense James Mattis who also expressed an interest in the use of militarized AI and its ability to fundamentally change warfare.[36]

Building off this, “The U.S. budget for Fiscal Year 2020, for instance, singled out AI as a research and development priority and proposed $850 million of funding for the American AI Initiative.”[37] China has “declared its intentions to lead the world in AI by 2030, estimated to exceed tens of billions of dollars.”[38] Modernization and integration of AI with NC3 will likely take many different forms over the next decade with over $70 billion going towards command and control and early warning systems as part of the NC3 modernization.[39]

Given these statements and the potential benefits of AI, it is reasonable to assume that efforts to incorporate further AI and automation into the military include NC3. At the very least, it should be assumed that this prospect is being explored, and if integration doesn’t happen now, it could easily happen in future years.

Considering these varied indications that integrating AI into military systems, including NC3, is apparently beneficial and actively explored by the US military, it’s valuable to discuss the two important overarching ways in which integration could impact risk: firstly, through early warning systems, and secondly through a form of ‘predictive forecasting’.

3.3 Early Warning:

The early warning system is a core part of NC3 where integration of AI systems will be particularly incentivised and impactful.The US early warning system uses a combination of pace-based infrared (IR) sensors and ground-based radars to detect potential incoming ballistic missiles.[40] This then triggers an alert at the North American Aerospace Defense (NORAD) Command in Colorado where analysts work to confirm and authenticate the warning before quickly submitting the information to leadership if it is deemed reliable.[41]

This entire process takes place within a few minutes- an already short amount of time to analyze a high-stakes situation and avoid errors. And yet, detection and analysis of incoming attacks is getting increasingly complicated. Early warning systems must be capable of detecting multiple targets and also discriminating between: type of attack, launch and impact points, validity, and more.[42] AI could remove the worst aspects of humanity, while providing analysis for mass amounts of data, in a position that is traditionally both mentally taxing and boring for human analysts. This is arguably an important driver behind AI integration as both bias and exhaustion are human limitations that can easily impede good decision making.

ML systems could effectively replace at least some of the analytical work done by humans who assess early warning information to determine the credibility of a threat. “Recent publications have highlighted the potential for machine learning-based algorithms to provide better discrimination abilities in radar applications. If used in early-warning systems, this could in principle result in fewer false alarms.”[43] Early warning information is getting progressively complicated with the timeframe to determine the validity of the danger getting smaller. New weapons technologies, such as hypersonic delivery systems, can complicate traditional detection by appearing later or confounding detection systems.[44]

Additionally, the advent of technologies such as hypersonic weapons or even the automation of attacks means that combat could soon reach speeds much too fast for human cognition. AI-augmented systems would be essential for any offensive or defensive operations occurring at ‘machine-speeds’ including cyber warfare or automated weapon systems. [45]

Given the importance of early warning systems within NC3, the possibilities for their modernization, and the significant reasons to do so, it is feasible and even probable that ML will be integrated with early warning systems.

3.4 Predictive Forecasting of the Imminent Use of Nuclear Weapons

Once the realm of science fiction, there are now discussions around the use of machine learning in command and control to detect nuclear threats before they occur. This could involve ML systems analyzing relevant factors such as troop movements, supply lines, communication, and other intelligence to calculate where nuclear threats, not only could, but likely will, come from.

A prime, if somewhat rudimentary, example of this would be the Soviet Union’s response to the events leading up to Able Archer 83 (See footnote for more infoon event).[46] In response to fears that the U.S. would first-strike the Soviet Union, “some 300 operatives [were tasked] with examining 292 different indicators—everything from the location of nuclear warheads to efforts to move American ‘founding documents’ from display at the National Archives.”[47] This information “was then fed into a primitive computer system, which attempted to calculate whether the Soviets should go to war to pre-empt a Western first strike.”[48] An AI could theoretically be tasked with forecasting future attacks in a similar, though more advanced, manner.

A relatively modern example of this kind of technology is the Defense Advanced Research Projects Agency’s (DARPA) Real-time Adversarial Intelligence and Decision Making (RAID) machine learning algorithm “designed to predict the goals, movements, and even the possible emotions of an adversary’s forces five hours into the future.”[49] Acting as support tools for decision makers, “future iterations of these systems may be able to identify risks (including risks unforeseen by humans), predict when and where a conflict will break-out, and offer strategic solutions and alternatives, and, ultimately, map out an entire campaign.”[50]

This is not an attempt to see the future but rather a kind of ‘predictive analytics’ already used to combat crime in cities across the globe.[51] This kind of technology works to determine where crime will occur before it actually does. An AI system tasked with this responsibility conducts analysis, finds correlations, and then draws conclusions from the data and makes complex statistical predictions about future behavior, providing decision support and suggestions to the experts in the field.[52] In a basic sense, this isn’t too different from modern image classifiers or language models in which systems learn from patterns (guided by human-attributed labels and rewards). The predictive forecasting machine in NC3 would also seek patterns that indicate a potential incoming threat by continuously monitoring an immense amount of real world data and calculating their significance to nuclear threats..

The strategic value of an accurate predictive and preemptive method in nuclear security is invaluable. Rather than reacting and responding to a nuclear strike or a similarly threatening attack, a military power would anticipate their adversaries’ moves and either thoroughly prepare for them, hinder or inhibit them, or take the offensive. AI systems could theoretically offer the safest way to achieve preemption (an already contentious concept) if they can outperform their human counterparts, remain unbiased, and provide a calculated warning or suggestion. Additionally, AI can assess data and bring together different pieces of intelligence in a manner that a human potentially never would.[53]

Of course, even if such systems are deployed to assist with strategic decision-making, the question remains whether humans would act solely, or at least primarily, based on their recommendations. At a series of workshops on artificial intelligence and nuclear risk held by the Stockholm International Peace Research Institute (SIPRI) in 2020, “workshop participants found it hard to believe that a nuclear-armed state would find such a system reliable enough to initiate a pre-emptive nuclear attack based only on the information that its algorithms produce.”[54] Participants believed that states would likely wait for tangible evidence such as early warning system detections to confirm the AI conclusions .[55]

I personally question this confidence in the reliability of decision-makers and their ability to wait to confirm the results of this predictive forecasting. First, crisis situations are unpredictable, and emotions running high or the other systems failing could result in less patience than imagined. Second, the human-machine relationship could evolve to a point where trust in machine intelligence is far higher, almost implicit. Finally, waiting for systems such as those used in early warning to confirm the statistical prediction of AI would completely negate the reason for deploying it in the first place.

ML powered predictive analytics in nuclear command and control could potentially be, or already is being, pursued by militaries. Even if the SIPRI participants are correct that decision-makers will reluctantly follow the ML recommendations, there are nevertheless a myriad of risks that must still be addressed following this integration.

4. Inherent Dangers of Integration

This section outlines the risks of integrating ML with nuclear command. It offers an explanation of the contributing factors that result in these risks and also an explanation of the mechanisms - the how-and-what could go wrong and result in actual nuclear use or a significant increase in nuclear use risk. Each issue here presents a problem on its own, while also often working in conjunction with the other outlined problems to magnify the increased chance of inadvertent nuclear use.

In section 4.1 I outline the technical flaws within AI systems and how they interact with nuclear deterrence and decision making. In section 4.2 I explore how increasing automation could change the human-machine relationship, which stands to alter the decisions-making process of nuclear deterrence. As previously established, any potential changes to decision-making around nuclear weapons demand the utmost scrutiny.

4.1 Technical Flaws in AI Technology

The problems outlined here are technological hurdles that face current and near-term AI. Some of these seem solvable while others may simply be inherent and unsolvable. Regardless of these hurdles, the current and coming modernization means that integration may occur while these problems still persist. The simple adage that ‘complexity breeds accidents’ is relevant here. Even with rigorous testing, the coding error rate is often between 0.1 to 0.5 errors per 1000 lines of code.[56] Given that luxury automobiles have around 100 million lines of code,[57] one can imagine that the amount of code required in AI systems used for command and control would be immense. Errors would be inevitable.

The nuclear weapon context also has specific implications for looking at technical flaws in AI. It is the paramount example of a ‘safety critical’ environment. The consequences of failure are at their highest which demands the utmost scrutiny into how decisions are made and what tools are used to aid the process. Additionally, the flaws outlined below would likely become a serious issue in a time of crisis. Periods of inflamed tensions and dangerous rhetoric are when mistakes are most likely. Finally, in critical circumstances, humans would likely have a very limited amount of time, if any, to scrutinize the data or suggestions provided by an AI.

4.1.1 The Alignment Problem

AI systems alignment, or misalignment, is a key problem facing AI deployment. While typically considered in the context of artificial general intelligence (AGI) development, ML experts struggle to design even modern ML systems that act exactly as intended without behaving even slightly wrong in unexpected and surprising ways.

Recent work done by Anthropic built on this claim when they found that “large generative models have an unusual combination of high predictability - model capabilities scale in relation to resources expended on training - and high unpredictability — specific model capabilities, inputs, and outputs can’t be predicted ahead of time.”[58] It is important to recognize that generally speaking, the impact of technical flaws do not cause the machine to break or fail to work. Rather, the machine does exactly as instructed, but not what is wanted from its programmers. Brian Christian effectively illustrated the “alignment problem,” as by describing those who employ AI systems in “the position of the ‘sorcerer’s apprentice’: we conjure a force, autonomous but totally compliant, give it a set of instructions, then scramble like mad to stop it once we realize our instructions are imprecise or incomplete.”[59]

The following problems fall under the greater issue of misalignment or contribute to it. These specific technical flaws can result in the deployment of ultimately misaligned systems..

4.1.2 The ‘Black Box’ problem

This is perhaps the most central problem in the context of nuclear command integration as it creates its own issues while also magnifying the other technical problems. ‘Black Box’ refers to ML systems being “opaque in their functioning, which makes them potentially unpredictable and vulnerable.”[60] These systems are somewhat unknowable as “neural nets essentially program themselves… they often learn enigmatic rules that no human can fully understand.”[61] We can test their outputs, but we don’t really know why or how they reach their conclusions. For all we know, the system is misaligned and either using data incorrectly or measuring the wrong data altogether. Since we don’t understand the system’s inner workings, its behavior can seem odd and completely unexpected, almost alien. Reliability is key in terms of nuclear decision making.

One example of this problem’s consequences is discussed in a 2016 article from OpenAI describing their efforts to conduct new reinforcement learning (RL) experiments using the game CoastRunner. The team trained the AI to obtain the highest possible score in each level, assuming that this concrete goal would reflect their informal goal for the AI to finish the race. However, the RL agent determined that it could more effectively achieve a high score by simply going around in circles and continually knocking over the same three targets - as shown in the CoastRunners 7 video.[62] Despite catching on fire, crashing into a boat, and going the wrong direction, the AI scored 20 percent higher than human players. This perfectly illustrates the Black Box problem: we cannot fully predict its behavior, and we cannot foresee or control its interpretation of our goals. While this is harmless in a video game, this could be catastrophic in a nuclear context.

The ‘Black Box’ issue has been recognized by the U.S. Department of Defense (DoD) as the “dark secret heart of AI” which has acted as a significant hurdle to the military use of ML systems.[63] This innate opacity is particularly problematic in safety-critical circumstances with short timeframes such as a nuclear crisis. Crises by their very nature are unpredictable: “testing is vital to building confidence in how autonomous systems will behave in real world environments, but no amount of testing can entirely eliminate the potential for unanticipated behaviors.”[64] It is important to note that “there is active research, often called ‘explainable AI,’ or ‘interpretability’ to better understand the underlying logic of ML systems.”[65] However, at this time the ‘Black Box Problem’ remains and may be inherent to ML systems and thus not ‘solvable’.

Most importantly, the ‘black box’ makes it significantly more difficult to address the other technical flaws of AI systems. The need to determine and address AI system ‘flaws’ seems antithetical to the unknowable nature of these issues. Technical issues can very well remain dormant until they are triggered when the system is already in operation.

4.1.3 Brittleness

In the face of complex operating environments, machine learning systems often encounter their own brittleness – the tendency for powerfully intelligent programs to be brought low by slight tweaks or deviations in their data input that they have not been trained to understand.[66] On the technical side, this is known as a ‘distributional shift’ where ML systems can “make bad decisions – particularly silent and unpredictable bad decisions – when their inputs are very different from the inputs used during training.”[67]

In one case, despite a high success rate in the lab, graduate students in California found that the AI system they had trained to consistently beat Atari video games fell apart when they added just one or two random pixels to the screen.[68] In another case, trainers were able to throw off some of the best AI image classifiers by simply rotating the objects in an image.[69] This is an unacceptable issue when considering AI employment in an ever-changing real-world environment. Unlike human operators, autonomous ML systems “ lack the ability to step outside their instructions and employ ‘common sense’ to adapt to the situation at hand”.[70]

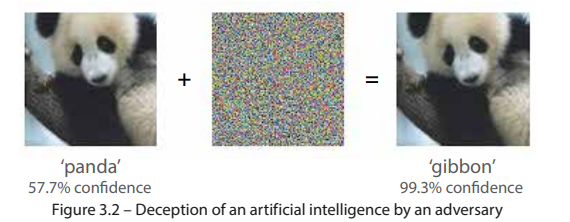

The figure below illustrates how easily an adversary could confuse an image classifier by simply adding pixels(invisible to humans) to a picture,drastically changing the AI’s interpretation while it signals evidentiary confidence.

Deception of an artificial intelligence by an adversary

Source: JCN 1/18, Human-Machine Teaming, Page 30 |

While this report does not focus on intentional attempts to deceive ML systems, the ease of this deception effectively demonstrates the concept and gravity of brittleness.

Indeed, ML systems struggling with brittleness are unprepared and unsuitable to function outside of the lab, let alone in a military setting. War is an atypical situation – while we can spend a prodigious amount of time training and preparing for it, the ‘fog of war’ will always lead to unexpected developments and surprises. An ML system would likely have a hard time adapting to new situations, and even if it was possible, taking the time to “adapt” could result in serious costs.[71] They would be held to extreme standards, as the need for accuracy and safety in the military context is unmatched. This is far more relevant in safety-critical nuclear security. We can imagine the impermissible consequences of a brittle AI system either failing to provide accurate information in a nuclear security context, or worse, providing inaccurate information with high confidence, leading to unwarranted decisions and actions by trusting human operators.

This vulnerability is compounded by inadequate data sets, resulting in even more unreliable systems.[72] This is especially true in the nuclear security sphere, where so much of the training data is simulated[73] Although there are extensive records associated with the launch of older ballistic missiles, newer, less tested models require the use of simulation.[74] The lack of data in terms of real-world offensive nuclear use is undoubtedly fortunate for the world, but it nevertheless means that much of the data involved in training machine learning programs for NC3 systems will be artificially simulated. Even real-world data is often insufficient to train ML systems for real-world environments, so training on simulated data leaves systems woefully unprepared for deployment in military operating environments. Despite the best efforts to ensure that simulated data is accurate and robust it will always be an imperfect, short-sighted imitation of world events.Therefore, when a ML system encounters even slightly unprecedented, real-world data, it it may be enough to throw it off track, with or without anyone noticing.

Watching AlphaGoZero play the game of Go has been described as ”watching an alien, a superior being, a creature from the future, or a god play.”[75] The program has the same goal as human players, but the way it achieves them - the actions it takes to get there - can be “almost impossible to comprehend.”[76] It may seem obvious that an AI system is not human, but its inherently inhuman nature matters more than it may first appear, and should never be forgotten, underestimated, or overlooked. This is linked to the ‘Black Box’ problem outlined earlier. We don’t truly know how or why an AI does what it does. We can see its output, but its reasons or justifications remain a mystery and should never be entirely trusted.

This issue of trusting the output of ML systems is further by the fact that these systems can be confident in their output even if they are wrong. Give a dog classifier a picture of a cat and it will tell you it's a Corgi with 99% confidence. Not only can systems be wrong but they can be wrong while telling you they are right with a high degree of confidence. This largely stems from the problem of brittleness as encountering unrecognized data often results in misinterpretation by the ML system as it attempts to categorize the data within the boundaries it does understand. Therefore, not only is it hard to identify when ML systems are not working as expected, but their degree of confidence may engender unwarranted trust in the machine.

AI systems in early warning or predictive forecasting would face immense, complex tasks. There is a very real chance that these machines would be brittle enough to fail at its desired performance outside the laboratory setting.

4.1.4 Human Bias in the Machine

One of the main arguments supporting the potential integration of ML systems with nuclear command and control states that decision-making processes stand to benefit from eliminating human error and bias. However, ironically, AI systems themselves are built and trained with human bias present, which clouds their own decision-making abilities.[77]

The code and algorithms that form the foundation of ‘objective’ and autonomous ML systems are written by humans - coders, developers, programmers, engineers - and it is also humans who train the program to run according to their interests and expectations. However well meaning, these humans unintentionally integrate human bias into their work; it is inevitable. Moreover, AI becomes further biased when it is trained using historical data, which is a product of its circumstances. For example: “machine learning algorithms designed to aid in criminal risk assessments… [are learning] racial bias from historical data, which reflects racial biases in the American criminal justice system.”[78] This conclusion was reinforced when a study “of a commonly used tool to identify criminal recidivism found that the algorithm was 45 percent more likely to give higher risk scores to black than to white defendants”.[79]

Another example of human bias occurred when Amazon used an AI system to filter résumés of potential job candidates. Evidently, the system was found to have a significant bias toward male applicants, presumably because it trained by observing desirable patterns in résumés of previously successful employees, most of which were male.[80] As a result, the AI determined that gender was a significant factor of candidate success and learned to place a higher value on male candidates. This not only reflected the male dominated nature of the industry at the time, but also perpetuated it.[81]

These examples illustrate that human bias can become integrated into AI systems by humans and/or training data, and then it is further ingrained and perpetuated by the AI system until it is detected. So, even in a best-case scenario where a ML system accurately interprets quality, relevant data and overcomes brittleness or other technical issues, it can still over- or under-evaluate the information it is assessing due to its trained human biases.

One can easily imagine similar problems occurring in AI systems trained to observe the actions of adversarial nations for the purpose of early warning or predictive forecasting. Human bias could sneak into the system, and the data relating to certain actors or variables could cease to accurately reflect what is occurring in the real world. In that case, extra weight could be given to standard activity, making a relatively normal action appear threatening. This could result in an AI warning of a potential incoming nuclear attack. A U.S. president and military commanders would be expecting a balanced, fair calculus when in truth the machine is perpetuating the kind of bias that could lead to catastrophic miscalculation and further the risk of responding to false positives.

These concerns become all the more pertinent during crisis situations such as the current ongoing conflict in Ukraine. A hypothetical ML integration system designed to assess the probability of Russian aggression against the West could act in unpredictable ways and give faulty information. The previously mentioned issues surrounding brittleness and the ‘Black Box’ could lead the system to misinterpret signating information such as troop movement, launch indicators, chatter, etc. A trained bias could then compound this misinterpretation, over-valuing the possibility of Russian aggression. For example, the system could perceive certain rhetoric or troop deployment as overly significant indicators of an imminent attack. While we hope that even in these cases military planners would review the information and hesitate to act on it, I don’t believe one should so easily discount the power of confirmation bias or the chaos and ‘fog of war’ in a crisis scenario. Seemingly accurate information provided during an emergency could easily be acted on.

4.2 Problems with Human-Machine Interaction

This section explores the impact more automation could have on the human element of the machine-human team. This involves both how we treat the AI and also what the use of such intelligent machines means for our own thought processes.

Understanding why something has not happened is an inherently arduous task. Determining why nuclear weapons have not been used, whether it is due to more normative factors like the nuclear taboo or factors linked to structural realism such as deterrence, is difficult. In all likelihood a complex combination of factors have contributed to the non-use of nuclear weapons since WW2. Nonetheless, I would argue a key factor has been human uncertainty and our lack of knowledge. How we make decisions matters.

Human limitations and emotions, despite all their dangers, seem to have played a key role in preventing nuclear weapon use. The consequences of potential mistakes are so great at the nuclear level that individuals facing decisions at key moments often chose to risk their own lives and their teams rather than deploy nuclear weapons because they were not convinced of what appeared to be an incoming attack.[82] This is not to downplay their courage or training, but when facing seemingly reliable information of a nuclear attack, these individuals decided not to act when others may have, and in some cases almost did. Whether it was intuition, reasonging, fear, guilt, panic, or a combination of these and other very human reactions, their decision to question the situation and hold back on reacting may have saved millions, maybe billions of lives.

Between 1945-2017 there have been “37 different known episodes [linked to close inadvertent use], including 25 alleged nuclear crises and twelve technical incidents.”[83] On the surface, it appears that the systems designed to prevent nuclear use worked. It has even been argued that “those in charge of nuclear weapons have been responsible, prudent, and careful… [and] ‘close calls’ have ranged in fact from ‘not-so-close’ to ‘very distant.’”[84] While I would be far more hesitant to put such strong faith in the systems designed to prevent inadvertent use, the fact remains that we haven’t used the weapons since the end of WW2. Luck may play a role here, but too many years with too many ‘close calls’ have occurred for us to completely discount the nuclear decision making systems. As the current decision-makers, we must be doing something right. One part of this success preventing inadvertent use is explicit mistrust of computer warning systems. In both the Petrov and NORAD cases, computers demonstrated high confidence of incoming attacks, and human operators didn’t trust these false alarms. Their doubt and skepticism was paramount. Regardless of one’s stance in this discussion, any evolving technology that could even potentially change how nuclear decisions are made demands thorough scrutiny as there are a number of unfortunate ways the situation could become more dangerous as a result.

While the previous section looked at technical flaws within ML systems and how they could impact the quality and reliability of their outputs, this section looks at how increased automation impacts the human element. This includes issues such as an over reliance on ML systems, the increasing speed of warfare and AI systems, and the growth of misplaced confidence in military commanders when backed by powerful machine intelligences. Each issue will be outlined and their possible impact on nuclear decision making explored. The eventual conclusion is that integrating ML with NC3 could result in a paradigm shift in nuclear decision-making.

4.2.1 Automation Bias

One key issue is the development of automation bias – the “phenomenon whereby humans over-rely on a system and assume that the information provided by the system is correct.”[85] Bias also exists in the other direction with anover-mistrust of machines known as the “trust gap”.[86]

Michael Horowitiz suggests there are three stages of trust towards artificial intelligence technologies:

- ‘Technology Hype’, or “inflated expectations of how a given technology will change the world”

- The ‘Trust Gap’, “or the inability to trust machines to do the work of people, in addition to the unwillingness to deploy or properly use these systems”[87]

- ‘Overconfidence’ or automation bias.

The claim that humans progressively move through these stages was supported when “psychologists… demonstrated that humans are slow to trust the information derived from algorithms (e.g., radar data and facial recognition software), but as the reliability of the information improves so the propensity to trust machines increases – even in cases where evidence emerges that suggests a machine’s judgment is incorrect.”[88]

Automation bias has “been recorded in a variety of areas, including medical decision-support systems, flight simulators, air traffic control, and even “making friendly-enemy engagement decisions” in shooting-related tasks.”[89] Given the multitude of technical flaws outlined earlier, one can imagine the problem with an overreliance on ML systems. And yet, the ‘trust gap’ is also problematic and should not be adopted as a desirable stance. Instead, there should be strong cognizance of the human tendency to trust the machine to the point of assuming it is always correct or more capable than its human counterpart.

In the context of inadvertent nuclear use, automation bias is a substantial problem when considering integrating ML with NC3 because it exacerbates both the time crunch of a crisis scenario and the ‘black box’ problem. Human operators would be unable to check the math behind the AI’s decision, nor would they have time to even try. Additionally, “operators might therefore be more likely to over-trust the system and not see a need to verify the information that it provides.”[90] Not only does this increase the likelihood that the aforementioned technical flaws of ML systems will go unnoticed, it also represents an effective pre-delegation of authority to these machine intelligences. Automation bias, and the “unwarranted confidence in and reliance on machines… in the pre-delegation of the use of force during a crisis or conflict, let alone during nuclear brinkmanship, might inadvertently compromise states’ ability to control escalation.”[91]

The pre-delegation of authority and automation bias are most evident in the Patriot fratricides (or friendly fire incidents) during the 2003 Iraq War. Three out of twelve successful engagements involved fratricides. This included “two incidents in which Patriots shot down friendly aircraft, killing the pilots, and a third incident in which an F-16 fired on a Patriot.”[92] In the cases where the Patriots fired, and caused the fratricides, the AI guided machines were wrong, and in trusting them their operators succumbed to automation bias. In both cases, the errors in the Patriot systems harken back to the brittleness technical issue. In the first, the system misidentified a friendly fighter as a missile[93], and the second instance involved the Patriot systems tracking an incoming “ghost” missile that wasn’t there. Unfortunately, a nearby friendly fighter was in the wrong place at the wrong time and the Patriot’s seeker locked on and killed the pilots.[94]

In the end, the AI driven systems were wrong and people were killed, illustrating that grave “problems can arise when human users don't anticipate these moments of brittleness”.[95] One aspect of this is ensuring that automation does not result in the undue pre-delegation of authority to a ML enhanced system. Ensuring that the faults and strengths of these machines are understood is critical for their safe use.

4.2.2 Machine Speed

As previously stated, a key potential benefit to increasing the automation of military systems is that they can perform their assigned functions at blinding speeds that drastically outpace human operators. The risk here is that this increased processing speed could push “the speed of war to ‘machine-speed’ because autonomous systems can process information and make decisions more quickly than humans.”[96] Aggressive reactions and decisions could take place in nano-seconds - dubbed ‘hyperwar’ in the West and ‘battlefield singularity’ in China.

Thomas Schelling’s concern that “the premium on haste” is “the greatest source of danger that peace will explode into all out war” is echoed here when discussing machine speed and nuclear decision making. There is a concern that, in an effort to maintain battlefield advantage, states will pursue machine speed in their military operations and risk losing control of their machines, effectively pre-delegating authority to their AI systems to act in their stead. As of now, these systems are brittle and flawed, lacking the ability to think critically or work outside of the box. They are “set up to rapidly act on advantages they see developing on the battlefield” and could easily “miss de-escalatory signals,”[97] echoing R.K. Bett’s conclusion that states often “stumble into [war] out of misperception, miscalculation and fear of losing if they fail to strike first.”[98]

Increasing automation and integrating ML systems with nuclear command would apply all of the issues surrounding machine speed to nuclear decision-making, impacting and changing one of our most consequential weapons systems. Intimidating as that is, the reality of modern warfare could mean that working towards this level of haste may be necessary in order to maintain a competitive deterrence system. Other modern developments - such as the advent of hypersonic weapons or the potential for an offshore attack - have already shaved down a nuclear weapons strike to mere minutes. While distance matters even in an automated world,[99] a lightning fast first strike can only be deterred when a response at the same speed is feasible. Nonetheless, even if the need for speed in nuclear deterrence is unavoidable in our current security climate, it is imperative that we understand these problems and devise solutions or mitigating processes to prevent inadvertent nuclear use.

4.2.3 Out-of-the-loop

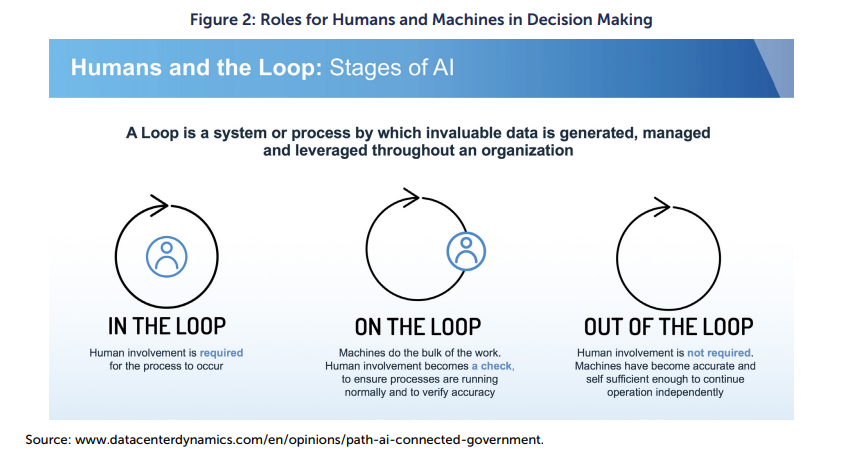

Machine Speed is a key aspect of automation that affects nuclear decision making, but it often does so by impacting the placement of the human supervision element in the decision making loop. As outlined in the below image, “there are three types of human supervision: a human can be in-the-loop, meaning a human will make final decisions; the human can be on-the-loop, supervising the system and data being generated; or the human can be out-of-the loop for full autonomy.”[100]

Roles for Humans and Machines in Decision Making |

The primary problem is that “AI systems operating at machine-speed could push the pace of combat to a point where the actions of machine [actors] surpass the (cognitive and physical) ability of human decision-makers to control (or even comprehend) events.”[101] One proposed method for addressing the flaws of ML systems is ensuring that a human operator remains within-the-loop and able to control or stop automated military systems from acting in a manner that is unaligned with their objectives. However, as Andreas Matthias explained, any impactful human control is likely “impossible when the machine has an informational advantage over the operator ... [or] when the machine cannot be controlled by a human in real-time due to its processing speed and the multitude of operational variables.”[102]

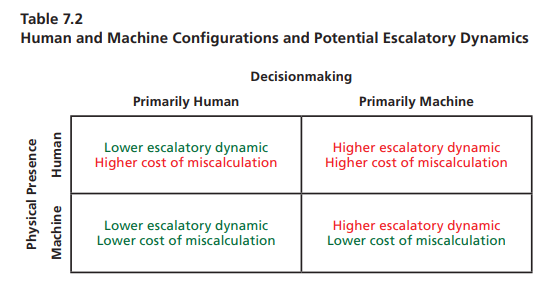

A 2020 RAND report explored the question: ‘How might deterrence be affected by the proliferation of AI and autonomous systems?’ by constructing and experimenting with a wargame to simulate how actors would make decisions in a conflict if automation became far more prevalent. A key insight from their experimentation was that “the differences in the ways two sides configure their human versus machine decisionmaking and their manned versus unmanned presence could affect escalatory dynamics during a crisis.”[103] The following figure demonstrates that escalation is affected by whether the machine or the human makes the decision. (The RAND report also considered the effects of humans remaining physically present at the outset of conflict scenarios, but that is outside the scope of this report). A key takeaway here was that escalation was harder to control or prevent when automated machines were the primary decision-makers.[104]

Note. Reprinted from “Deterrence in the Age of Thinking Machines,” by Wong et al, RAND Corporation, 27 January 2020, page 64.

Human-machine warfighting and ‘teaming’ has been progressively undertaken and its importance and impact will increase as it is attempted in more scenarios. The further humans are removed from the loop, the more reliant we become on possibly faulty technology that does not make decisions like humans do. This can become a strength but, as already discussed, it can easily become a danger instead. Nonetheless, many influential figures are still not opposed to pushing the human element further out. Gen. Terrence J. O’Shaughnessy, commander of NORAD stated that “What we have to get away from is … ‘human in-the-loop,’ or sometimes ‘the human is the loop.’”[105] By doing so we can attempt to leverage the speed and power of automated systems while theoretically still ensuring that human hands guide the ML power technology. Regardless of how we manage this change in the human-machine relationship, the further humans are taken from the loop, the more the risk increases that a technical flaw will impact nuclear decision making. The question remains; at what point will the inflexibility of these systems, and the resulting escalatory potential, outweigh the advantages in speed that they offer?

This possible gap in reliability is a clear problem when deploying ML systems in complex safety critical environments like nuclear command. However, this almost alien decision further complicates issues due to the general human desire to defer to such machines as though they are human. Work done in this area has demonstrated that “humans are predisposed to treat machines (i.e., automated decision support aids) that share task-orientated responsibilities as ‘team members,’ and in many cases exhibit similar in-group favoritism as humans do with one another.”[106] In fact, work by James Johnson showed that instead of constraining the brittleness or flaws of ML systems, keeping a human in the loop can “lead to similar psychological effects that occur when humans share responsibilities with other humans, whereby ‘[[social loafing]]’ arises – the tendency of humans to seek ways to reduce their own effort when working redundantly within a group than when they work individually on a task.”[107]

In the end, however powerful and useful, the decision-making process of ML enhanced systems can result in unexpected deviations or instances of misalignment. Despite their inherently inhuman nature, humans have the tendency to treat ML systems as colleagues rather than instruments, and expect human-like, aligned responses from them.

4.2.4 Misplaced Confidence

Similar to automation bias, or at least its outcome, misplaced confidence occurs when a system that seems superior and capable of providing a military advantage can create a sense of confidence in military leaders that encourages them to take aggressive, risky, or drastic action. This is also linked to the importance of uncertainty in nuclear decision making.[108]

An overzealous commander believing themselves to have perfect information along with the advantage of machine speed might act aggressively if they believed the need was great enough or the chance of reprisal was low enough, thinking that the strength of the AI system would guarantee a successful operation. This problem is twofold; not only could overconfidence risk increasing the likelihood of aggressive actions in general, but it would also make a commander more susceptible to automation bias. Both are inherently escalatory and raise the risk of inadvertent nuclear weapons use. This kind of “overconfidence, caused or exacerbated by automation bias in the ability of AI systems to predict escalation and gauge intentions – and deter and counter threats more broadly – could embolden a state (especially in asymmetric information situations) to contemplate belligerent or provocative behavior; it might otherwise have thought too risky”.[109]

5. Analysis of Potential Solutions

The following section covers solutions designed to address the problems outlined in this report. They are not necessarily unique to the issue of integration, nor are they being suggested for the first time in this report. Nonetheless, here they are evaluated through the combined lens of nuclear strategy and ML technology. In doing so I aim to continue this discussion by adding my thoughts on what could work best and how funders could use this assessment to aid them in nuclear or nearterm AI risk reduction efforts.

In general, I find the first two possibilities in 5.1 unsatisfactory as they fail to take into consideration the wide range of implications of the technical and psychological issues around AI integration on nuclear security. The three solutions in 5.2 are more likely to be successful as they take into consideration the fact that we may not fix the technical flaws within current AI technology. They either pursue stopgap measures to prevent inadvertent use or they attempt to address the psychological side of the problem. In doing so, they work with factors that we can control: policy and people. In 5.3 I present the complicated solution of keeping humans in the loop which, while impactful to pursue, cannot address the problems outlined here by itself.

5.1 Unsatisfactory Solutions

5.1.1 Do not integrate AI with Nuclear Command at all

While this solution would have a high degree of impact on preventing inadvertent use as a result of ML integration, it is not tractable due to the pressures to increase automation with NC3.

At this time, no state or actor is advocating for completely autonomous nuclear weapons systems where the human element is completely out of the loop. This is important, and more states should follow the UK and U.S’s examples of committing to this publicly in official strategy documents. Nevertheless the temptation may remain “for countries that feel relatively insecure about their nuclear arsenal, the potential benefits [of full automation] in terms of deterrence capability may outweigh the risks.”[110]

Nevertheless, not integrating AI into any stages of NC3 at all is not a realistic option. As argued in the modernization section, it is extremely likely that the U.S. government is already pursuing some degree of AI integration at some stages, rendering this suggestion irrelevant. Additionally, there are real benefits to integration at some stages and this should be at least explored. Improving one’s early warning system, if done correctly could help mitigate the dangers faced by nuclear weapons on the road to disarmament.

Beside providing better analysis, AI integration could help ensure robust communication in nuclear command that helps reduce uncertainty during a crisis.[111] Additionally, AI run cyber security could help secure key systems related to deterrence[112] and the safer and less vulnerable these weapons are, the less aggressive state may need to be. This is the same reason submarine based nuclear weapons are often touted as the current pinnacle of deterrence. They cannot be found and thus can reliably ensure a second-strike. Therefore, one can generally act with a greater degree of confidence and with less aggression because of their deterrent work. AI run cyber security could hopefully do the same, and in all honestly may simply be required in the face of AI run offensive cyber actions. Given the security climate, not pursuing AI integration could result in a lopsided or asymmetrical environment that itself encourages the kind of nuclear crisis or coercion that increases the risk of nuclear use.

There is still a lot of value in criticizing what appears to be an inevitable policy. Advocating for the elimination of nuclear silos and landlocked nuclear weapons may be difficult for a number of reasons but researchers and activists should not necessarily stop. The same can be said about advocating for zero ML integration to NC3. Nonetheless, I personally do not suggest this solution for funders and grantmakers attempting to maximize impact per dollar spent.

5.1.2 Improve AI to eliminate technical problems

Attempts to ‘just make it better’ may fix some of the technical problems eventually but the perfect system will never exist. While solving key technical issues would have a large degree of impact, and many AI researchers are exploring this route, it seems like an unlikely achievement. This in and of itself isn’t a failure. Humans are not perfect either, but problems like brittleness or trained AI bias are especially dangerous because they could rear their heads suddenly and without warning and because of the ‘black box’ issue we might not be able to routinely check for these problems nor will we always be able to test for them.

‘Normal Accident Theory’ “suggests that: as system complexity increases, the risk of accidents increases as well and that some level of accidents are inevitable in complex systems.”[113] Therefore,the risk of accidents in complex defence systems that incorporate autonomy may therefore be higher.”[114] The reality is that “even with simulations that test millions of scenarios, fully testing all the possible scenarios a complex autonomous system might encounter is effectively impossible. There are simply too many possible interactions between the system and its environment and even within the system itself.”[115]

Furthermore, even if we implement safety features designed to stop accidents from occurring, it is often these very features that result in deadly errors and accidents. Two recent examples of this include Lion Air Flight 610 on October 29, 2018, and Ethiopian Airlines Flight 302 on March 10, 2019. There was a safety feature that resulted in the planes crashing.[116] The point is that not only are accidents normal and difficult to prevent in complex systems, but that even attempts to combat them can result in accidents themselves.

Perhaps the math works out that humans fail catastrophically more often than AI would (even if the AI is brittle or misaligned). Nonetheless, when combined with the issues forming in the human-machine relationship, the dangers of AI integration could go undiagnosed due to the implicit trust that the AI system is objectively better than its human counterparts. If automation increases and more nuclear decision-making is pre-delegated to machine systems, these problems will grow and risk inadvertent use in this safety critical environment.

Additionally, the consequences of human failure can be mitigated by human uncertainty and the supervision and control of peers and superiors. Throughout our entire history, humans worked together. Switching from human to AI is a clear paradigm shift for both decision making and safety culture, drastically shifting our reactions and approaches to problems in crisis.

This is not an outright rejection of ML in NC3 but rather a claim that we cannot rely on technological improvements to remove the possibility of accidents.

5.2 Potentially Beneficial Solutions

5.2.1 Update Nuclear Posture to Reflect Changing Paradigm

One of the greatest hurdles to mitigating the challenge of ML in NC3 is the fact that the technical problems discussed above may simply be unsolvable in the near-term. Approaching this problem from a non-technical angle may allow us to bypass the inherent technical flaws within ML systems by mitigating the dangers without necessarily resolving these technical problems. Specifically, policy can be restructured to mitigate the potential problem of ML integration with NC3.

Although technical solutions may be developed to lessen the impact of these limitations, the sheer complexity of AI machines and the pressure to integrate them with military systems mean that the problems these technical issues pose must be addressed now. The heavy incentive for states to start incorporating the technology as soon as possible means they may have to accept and implement imperfect systems into critical roles in nuclear command. In the end, however, the real problem is not the AI per se, but a rushed integration of AI with nuclear systems that does not fully take into consideration the heightened risks posed by the technical limitations of current AI technology and the complexity of nuclear security.

One solution is adopting a nuclear policy that expands the decision-making time for launching a nuclear weapon, such as by moving away from launch-on-warning (LOW) strategies or by “de-alerting” silo- based intercontinental ballistic missiles. The LOW strategy keeps missiles alert and constantly ready to fire so they can be launched before the first impact of an incoming attack. By legally increasing the appropriate amount of time to ready nuclear weapons for use, these types of policies would allow leaders more opportunity to assess the nuclear security-related information provided by the AI. In a sense, this would forcibly elongate the ‘loop’ so that regardless of lightning fast AI assessments, a specific no-first-use policy and a restriction on a rapid launch could lead to a less dangerous AI system or even make it effectively impossible for inadvertent use to occur.

Creating shifts in nuclear posture that increase decisions making time or move away from LOW strategies will result in innate advantages for reducing nuclear risk before we even consider their influence on ML integration with nuclear command. The risks of LOW can be broken down into two categories: the intense pressure under which the decisions are made, and the often-questionable quality of the information used to make decisions. As the many close calls and cases of near nuclear use demonstrate, LOW is already a dangerous prospect whose instance of responding before impact allows for the possibility of inadvertent use and catastrophe. The idea of moving away from a LOW posture is not a new idea but it made all the more relevant when considering the potential dangers associated with ML integration with nuclear command systems.

Ultimately, integrating ML into nuclear command would act as a compounding factor or threat multiplier for inadvertent use if done improperly. On the one hand, a working ML system could provide better information faster than our current systems do. This would rescue the chance of inadvertent use and potentially increase the amount of time decision makers have to determine whether they will respond or whether it is a false alarm. This could help reduce the risks associated with LOW strategies. However, while possible, these benefits rely on a number of assumptions regarding the ability of incredibly complex systems to work under immense pressure.

The risks outlined in this report should give one pause when considering the helpfulness of ML systems for reducing nuclear risk. This is not to say they have no place in risk reduction — on the contrary, finding the proper balance for safe AI integration should be pursued in the quest for nuclear risk reduction. Nonetheless, increasing automation within the systems that are integral to managing a LOW nuclear doctrine means potentially falling prey to the various technical and human-machine relationship issues outlined in this document. While we may be able to address some of these problems through technical or training based means, changing policy to make it nearly impossible to launch nuclear weapons within a few minutes could effectively eliminate the greatest risks associated with ML integration. While the danger of inadvertent use would still exist, it would be drastically reduced by this kind of a shift in posture. Still, it should be noted that moving away from Launch-On-Warning is unlikely as there is heavy domestic political pressure to maintain ICBMs and other more static nuclear forces.

An alternative solution is the commitment to move away from LOW during periods of peace and stability. Originally proposed by Podvig, this idea encourages nuclear weapon states to “introduce a policy of keeping their forces off alert most of the time”.[117] This would help reduce the chance of peacetime miscalculations. While escalation in times of stability is not as likely as it is in crisis scenarios, Barrett, Baum, and Hostetler demonstrated that half of all false alarm cases occurred during periods of low tension.[118] Crises are still far more dangerous overall as half of all false alarms and inadvertent use scenarios occur during a comparably tiny amount of time when compared to periods of lower-tension. Nonetheless, this approach would effectively end peacetime inadvertent use risk and present a possible confidence-building-measure that could help increase crisis stability by enabling both informal and more structured talks. Additionally, it would signal the universal desire for safety and stability and a general lack of interest in conducting a first strike with these weapons.

And yet, changing nuclear doctrine is no easy feat even in the best of environments. Given the current war in Ukraine and revamped tensions over Taiwan, advocating for changes to doctrine at this time is perhaps more difficult than ever before. Successfully updating posture could effectively negate the risk of inadvertent use, but the tractability of this is incredibly low in the current international security climate.

5.2.2 Update and ensure adequate training

How we train the human element will be crucial to ensuring the safe integration of the human-machine team. This suggestion covers the training of all human components, whatever role they may play in the decisions making or support process.

Paradoxically, the more autonomous a machine, the more training is required for the humans involved with it. This training needs to reflect functionality as well as the machine-human relationship and the inherent flaws within the technology.[119] In order to maintain the benefits of ‘human uncertainty’ in nuclear decision making, training should embed a healthy degree of skepticism or doubt toward the militarized AI in its human operators. Properly done, this balanced training will avoid creating a ‘trust gap’ or an automation bias by highlighting both the potential benefits and risks of ML integration.

With the understanding that AI is flawed, perhaps the next crisis will reflect the one in the 1980s where Stanislav Petrov doubted an early warning system telling him, with the highest level of confidence, that there was an incoming U.S. nuclear attack.[120] Petrov’s uncertainty saved the day. Conversely, the operators of the 2003 Patriot systems that killed friendly aircraft were found to have a culture of “trusting the system without question.”[121] To properly manage the flaws of powerful ML systems, people need to be trained to understand “the boundaries of the system - what it can and cannot do. The user can either steer the system away from situations outside the bounds of its design or knowingly account for and accept the risks of failure.”[122] Both the Petrov case and the Patriot fratricides outline the importance of instilling the proper amount of confidence, and skepticism, when training operators of automated military systems.

A different but linked idea is creating organizational and bureaucratic solutions to address the technical problem of militarized AI. The SUBSAFE program is a “continuous process of quality assurance and quality control applied across the entire submarine’s life cycle.”[123] Between 1915 and 1963, the U.S. lost an average of one submarine every three years to non-combat losses; since the program was established in 1963 not a single SUBSAFE certified submarine has been lost.[124] This is even more impressive when considering both the increased complexity of modern submarines and their operating environments. This seemingly counteracts Normal Accident Theory.