When it comes to skilling up in AI Safety, there’s one resource that probably everyone will recommend to you: the AI Safety Fundamentals Course by Richard Ngo. For the few people who haven’t heard of it: it’s an 8-week program that some EA groups run as a fellowship, but many people go through it on their own or in a reading group.

I (Ninell) received funding to run such a reading group in Berkeley over the summer. One attendee, Karan, jumped in and helped with the organization as more and more people kept joining the group. Here is why and how we did it and what we’ve learned.

This post is especially helpful for people who are new to AI Safety and/or people who are planning on running any reading group. We’ll start with the learnings and elaborate on them in detail below.

7 Learnings

- Making less committed people very committed takes *a lot* of work. This is why fellowship organizers like Joshua Monrad recommend selecting participants very strictly. We did not do that because a) we weren’t running a fellowship, as we were also going through the course for the first time, and b) we ran it on a the-more-the-merrier basis (which is debatable). However, according to Joshua's post, and in line with our experience, making things official is the key to great attendance. The more casual everything is, the more people slack off. This starts with calling it a “fellowship” rather than a “reading group”, providing stuff, making people do homework, recording attendance, and making the rules clear from the beginning (see Joshua’s post for more).

- Provide official meeting spots and snacks. The value of making people feel comfortable and showing that you value their time (and their hunger) is surprisingly high. Good snacks, drinks, & food are crucial for keeping up the good work. If you want to put it into a framework, the three dimensions are social interest, food, and internal motivation. The more you have of one of them, the less you need of the others. However, our thesis is that if you’re missing one completely, the others can’t make up for it.

- Advertising via the Facebook group and word of mouth worked well in our particular case (in Berkeley, where many people had come to skill up). However, if you’re not in a hub, more work is needed here. Possible channels include Twitter (with a reasonably active EA community), Facebook groups, and Slack groups (feel free to ask me (Ninell) if you’re interested in doing so).

- There can’t be enough micromanagement & facilitation. From the exact words that we prepared for the kickoff meeting to the folder structure and useful links in the pinned posts of the Slack channel, putting in the time, careful thought, and effort pays off. First, people will ask you less often about where to find things, what to do if X, and where to write down Y. Secondly, it forces you to think everything through: does your concept make sense? Is the structure really intuitive? In terms of facilitation, we think that facilitators are crucial for the reading group. We were sometimes tired or not perfectly prepared, and it showed: as the anchor of the group, the facilitator is the one who keeps the vibe up.

- It’s good if facilitators are also interested in doing the reading & are highly engaged in the group. It’s better if they’ve already done the course and can actually help answer questions. However, this is no knockout criterion. If you cannot find facilitators for your group, the value of doing the readings + discussion is still high!

- Not talking about your background worked surprisingly well! People actually discussed and questioned a lot & seemed to defer less. They also needed to think about their own claims more.

- Tessa’s model of roles also worked well in terms of making people actually read the things & making them talk (the two crucial things). We assigned roles for the first reading (out of 4-5) per week, which was sufficient. We also changed roles after every week, which was good because some roles seemed to take up more time (summarizer, discussion generator). We found the role of the connector (connect the learnings to your personal life) not as useful: people would either do it automatically if the topic allowed it, or it’d be a very made-up connection that didn’t really provide value. Instead, more discussion generators would be great!

The What and Why

The AI Safety Fundamentals curriculum is for now the most condensed collection of posts and papers for getting a closer grasp of AI Safety. It covers an introduction to ML/DL, the general motivation behind alignment research, concrete examples of threats and problems, current alignment approaches, and frameworks for solutions. The last week encourages participants to do their own projects. The whole curriculum comes with exercises and discussion prompts, which is a further reason to do it in a group rather than alone. Lastly, there are also further readings for each week, which, in my opinion, makes it even more valuable as a resource. However, if you’re planning on doing an AI Safety group yourself, there is plenty more material: have a look here, here, here, here, and here. More recent papers can be found, for example, by following the top labs (@OpenAI, @DeepMind, @AnthropicAI, @CHAI_Berkeley) on Twitter and browsing their websites, subscribing to the Alignment Newsletter, and reading posts on LessWrong and the Alignment Forum.

Back to our group: During the summer of 2022, many people relatively new to AI Safety and EA in general, including the SERI MATS scholars & MLAB fellows, went to the EA hub Berkeley to skill up in AI Safety. I won’t go into detail about why this has a comparative advantage over doing it in a non-hub place, but Akash wrote a post about this. In general, our experience of getting to Berkeley, finding housing, getting to know people in the community, and skilling up through conversations was very positive and highly productive.

Advertisement, Sign-Up Form, Kickoff Meeting

The neat thing about living in a hub is that everyone is super connected. The neat thing about EA is that everyone is super helpful and interested. These two things put together meant that slightly more than 20 people showed interest after I (Ninell) posted in the Facebook group chat of ~100-150 people that gathered the Bay summer visitors. I roped Karan in, as 20 was clearly too many faces for one person and it was good to have a second opinion on things (even if it’s “only” a reading group, there are quite a few things to decide on). We set up a sign-up form where we asked for the following things:

- Admin stuff (name, email address, availability)

- Level of commitment (motivation: mixed groups make less committed people more committed)

- Motivation & availability for a retreat (motivation: increase the commitment & excitement for the reading group)

- Availability for the kickoff meeting (motivation: communicate time and place as soon as possible)

- Open answer question for “anything else that you want to share with us” (you never know what people’s questions are)

For the kickoff meeting itself, we had some healthy and unhealthy snacks to symbolize that “something official” is happening and that we value our participants. If you want to do it, don’t hesitate to apply for funding (the general rule of thumb should be: apply >8 weeks in advance; an application should take up no more than two hours usually).

The aim of the kickoff meeting was to divide people into smaller groups and go through the content of week 0. Furthermore, we wanted to use the chance for everybody to come together and get motivated by the other people. We did a quick introduction round (name and one hobby) but decided to not play excessive intro games as we were 20+ people and a stronger bond with everyone’s group members was the primary goal.

Size of Groups, Set-Up, Facilitators

The groups consisted of 4-6 people. We set up two frameworks that we encouraged the groups to apply: not mentioning backgrounds, and assigning roles in the discussions.

The background idea came from a conversation between Karan and Sam Brown (Oxford rationalism). It encourages people not to talk about their background. In doing so, we were aiming to avoid people deferring to more experienced participants and to get a more critical, vibrant discussion. That worked pretty well! We sometimes had some “math/science signaling”, as Karan calls it, due to familiarity with certain concepts/ideas. But it was way less than “I worked for org X and therefore know Y”. Secondly, it also forces people to explain knowledge/ideas themselves rather than justifying them by pointing to their experience.

Furthermore, we followed Tessa’s post on how to run an engaged and highly energetic reading group and chose a role-based reading group set-up. The roles (copied from Tessa’s post, see post for more detail) were:

- Discussion Generator: come up with 1 or 2 questions for the group to discuss

- Summarizer: prepare a 3 to 5-minute summary of the reading

- Highlighter: pick 1 or 2 passages that you think are great and merit further discussion

- Concept Enricher: pick 1 or 2 words or concepts you feel confused about and do a bit of research on them, reporting back on what you learn (e.g. “gain-of-function”, “TET Enzymes”)

- Connector: share 1 way you might apply ideas from the reading in your own life or work

If we had more than 5 people, we assigned the Discussion Generator role more than once, as we thought it might be the most useful role. However, having more people highlight important concepts might be an option as well. An alternative way to divide the roles comes from Colin Raffel and is tailored to CS topics. Note: both posts (Tessa’s and Colin’s) contain more suggestions about different formats of reading groups (like one-to-many, 2-people group, etc.) that are worth a read if you’re planning your group.
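For anyone who prefers to automate the bookkeeping, the role set-up described above (one role per person, roles changing every week, extra Discussion Generators in groups of more than five) can be sketched in a few lines of Python. This is only a rough sketch under stated assumptions: the function name `assign_roles`, the placeholder member names, and the simple shift-by-one rotation are made up for illustration and are not taken from Tessa’s post.

```python
# Roles as listed in Tessa's post.
ROLES = [
    "Discussion Generator",
    "Summarizer",
    "Highlighter",
    "Concept Enricher",
    "Connector",
]


def assign_roles(members, week):
    """Return a {member: role} mapping for a given week (0-based).

    Assumptions (illustrative only): roles rotate by one position per
    week, and every member beyond the fifth becomes an additional
    Discussion Generator.
    """
    n = len(members)
    # Use the first n roles, padded with extra Discussion Generators
    # when the group has more members than there are roles.
    roles = ROLES[:n] + ["Discussion Generator"] * max(0, n - len(ROLES))
    # Shift the assignment by one position each week so roles change.
    return {m: roles[(i + week) % n] for i, m in enumerate(members)}


if __name__ == "__main__":
    group = ["Ada", "Ben", "Cleo", "Dev", "Eli", "Fay"]  # hypothetical names
    for week in range(3):
        print(f"Week {week + 1}: {assign_roles(group, week)}")
```

A plain rotation like this spreads the more time-consuming roles (summarizer, discussion generator) across the group over the weeks. Swapping the Connector for another Discussion Generator would only require editing the `ROLES` list.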

Lastly, we assigned ourselves (Ninell & Karan) and one other experienced person as facilitators (one per group). Having untrained facilitators who were new to the course had the advantage that conversations were very free and not constrained to the topic (I only have anecdotal evidence for this, but trained facilitators would sometimes end a discussion if it deviated too far from the topic). The clear disadvantage, however, was the increased insecurity & the number of unanswered questions a group had.

Notes, Slack, & Micromanagement

Tessa’s and other posts about reading groups encourage people to take notes (or minutes, or meeting minutes, whatever you want to call them). We love notes (minutes)! Why? Several reasons: 1) They keep the discussion centered around *something* and trace deviating topics. (I know that I just said that drifting off topic is fine and fun, but you should be able to remember where you’re coming from and why you ended up discussing the differences between Huel and Soylent.) 2) More importantly, if there are any open/unanswered questions, people will be able to retrieve them at any point. E.g. if you happen to run into Paul Christiano in the streets of Berkeley, you could easily pull out your shared notes folder and ask what failure really looks like (we are not saying that you should actually do that).

For the infrastructure, we created a shared Google Drive with one subfolder per week. I felt weirdly micromanage-y when I put templates for notes with useful links and prompts into those folders, but it turned out to be quite helpful for most people. The templates made visible what people were expected to write down (e.g. who the notetaker was, the roles described above, a link to the role description, and a link to the curriculum). Each group was asked to copy that template and fill it with their weekly notes, but everyone had access to every note of every group. The aim of this was to let people see what other people's questions/concerns/thoughts were, but it turned out this didn’t really happen.

In terms of Slack, we found it useful to create *yet another* channel. Most EAs are already familiar with using it (many EA groups have their own) and we find that it is a clear, structured, and intuitive way of sending messages, replying in threads, and approaching people. Also, it keeps the vibe of “officialness” alive. However, we found that the engagement wasn’t as high as we had hoped (and are happy about any thoughts on how to increase this) and think that Slack was maybe a bit too much (a Facebook group would have done it, too). Maybe there was also a gap between how formal Slack seemed to be and how official the rest was. The goal of the engagement would be to enable discussions that go beyond the group's assigned discussion time. One possible explanation for the low engagement is that people did not feel comfortable posting to a large audience of mostly non-friends.

Attendance

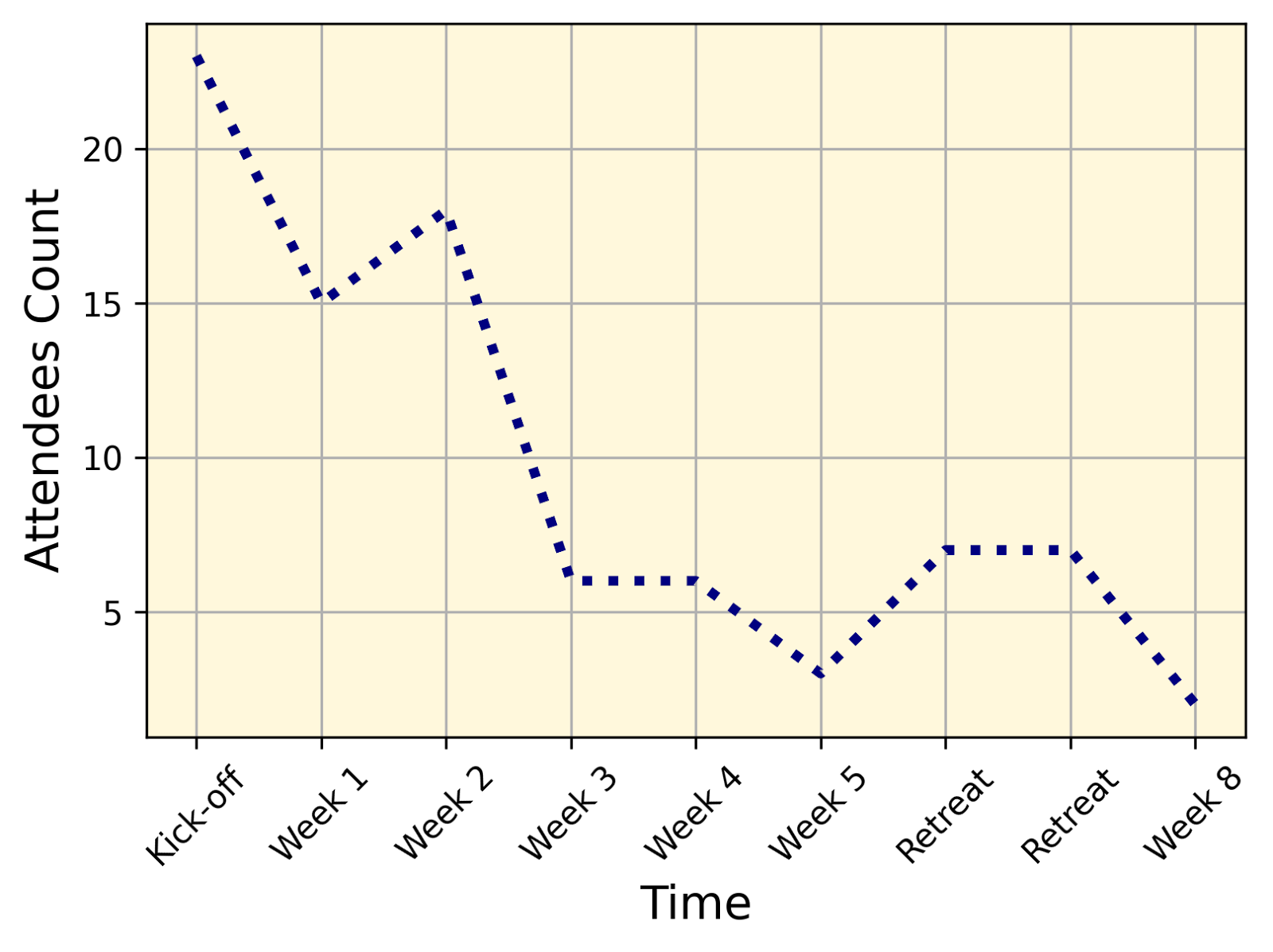

Last but not least, the big question is how people stood engaged and motivated. The attendance graph for us looked like the following. It’s important to note that we didn’t penalize not-attendance at all. We were more acting on the basis that every face is a win, no matter when.

Interesting to note here is that the attendance went up for the retreat. A special note to the retreat is that we also admitted people that didn’t complete any of the weeks before. The reason for this is that, again, we were hoping to “engage & reach as many people as possible and see who stays with AI Safety”. Did that work? Maybe! See learning number 1 for the reasons.

The retreat

We organized the retreat with a minimum level of coordination. There are some opportunities in Berkeley to go to a house close by. We went there for two days (although I personally was in quarantine and can therefore only speak from second-hand experience).

Drawing on the early learnings from the reading group, we micromanaged the food chores in particular. For big meals (lunch, dinner), three people were on a cooking shift; for breakfast and afternoon tea, only two. Clean-up for every shift was done by two people, too. This is a system I learned in my EA group house, and it has proven useful as it greatly simplifies social challenges such as fairness.

As we went only for one night, we did not include any introduction games for the first evening. Usually, people like to do introduction games like “hot seat” or throw some icebreaker questions into the room. However, there are already good (better) resources out there for how to run a retreat or a social event as well as several lists with icebreaker questions and questions that lead to impactful conversations if you’re interested in doing such a thing.

For the studying part of the whole experience, we (the attendees) read through one week on each of the days and discussed things in the afternoon.

How the group is doing now

After the retreat, the group pretty much died out. The curriculum suggests that participants do a project on their own in the four weeks after the course has finished. Two people are doing this right now (stay tuned!). Possible reasons for the low uptake could be that most participants are students and are back at university after the summer. Also, most people are not permanently based in Berkeley, which makes coordination harder: the current projects are operating with a 15-hour time difference.

People got different things out of the group, presumably based on their motivations. Some report having a better understanding of the risk, taking it more seriously, and appreciating “what a wickedly difficult topic this is” (one attendee). Others (maybe 2-3) report that the discussions shaped their future plans to some degree, whereas some others (5-6) feel like they’d like to learn more about the topic before shifting their career towards AIS. However, some attendees also just got better acquainted with other attendees on a non-AI Safety level.

Lastly, we want to thank Akash Wasil for bringing us to Berkeley and all participants of the group for attending and contributing! It was a lot of fun.

Thanks to Elliot Davies for their insightful feedback & comments. Special thanks to Karan Ruparell for feedback, comments, and the willingness to jump into this with me even though you never asked for it—great work, would always do it again!

Thanks for the write up Nell! You obviously put a lot of care into this, and I'm glad to hear it seemed to pay off :)

Thanks for sharing these learnings and for running this group Ninell! I would have been moderately less likely to start studying AIS on my own if it were not for this group, and I appreciate how thoughtful you were and how much work you put into this group and this post.

Thank you, Jordan! It was great having you there.

Thanks for this detailed write-up, Ninell. I'll be applying several of the principles for organisation and roles to a version of AGISF I'm facilitating in Australia in late 2022.

Thank you and all the best with the fellowship!