We’re really bad at guessing the future

I’m not very active in EA, so apologies that this will be missing a lot of the jargon that populates so many of the posts I’m reading through.

I think that people now matter more than people in the future.

Two main arguments:

- We are really bad at guessing how many people there will be in the future, so the uncertainty on how much to weight them makes long-future calculations useless.

- We are really bad at guessing what the second-order effect of interventions will be, so we can’t design to mitigate harmful effects in the future.

The framing of long-termism hedges this in the language of probability: “Future people count. There could be a lot of them. And we can make their lives better.”

To put it another way:

- I think the “could” in the second sentence has huge error bars around it. (There might or might not be a lot of people; we don’t really know.)

- I think the “can” in the third sentence is also very uncertain. (We might make things better, or in trying to make things better, we might make them a whole lot worse.)

Bad forecasting

I want to start with 4 case studies:

Nuclear Armageddon

Take one of the most highly studied extinction events in human history: nuclear armageddon. What is the probability that it’s going to happen? Spencer Graves of EffectiveDefense.org presented at the Joint Statistical Meetings (the largest professional conference of statisticians): “Previous estimates of the probability of a nuclear war in the next year range from 1 chance in a million to 7 percent… If that rate is assumed to have been constant over the 70 years since the first test of a nuclear weapon by the Soviet Union in 1949, these estimates of the probability of a nuclear war in 70 years range from 70 chances in a million to 99 percent.” (Transcript)
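The jump from a yearly estimate to a 70-year estimate in that quote can be checked with a few lines of arithmetic. This is only a sketch: it assumes, as the quote does, a constant and independent yearly probability, and the function name `cumulative_probability` is my own label, not something from the talk.

```python
def cumulative_probability(annual_p: float, years: int) -> float:
    """Chance of at least one occurrence over `years` years,
    assuming the same independent probability every year."""
    return 1.0 - (1.0 - annual_p) ** years


# The two ends of the quoted range of yearly estimates.
low = cumulative_probability(1e-6, 70)   # 1 chance in a million per year
high = cumulative_probability(0.07, 70)  # 7 percent per year

print(f"Low estimate over 70 years: about {low * 1e6:.0f} chances in a million")
print(f"High estimate over 70 years: about {high:.0%}")
```

The two yearly inputs differ by a factor of 70,000, and after 70 years the answers run from "almost certainly not" to "almost certainly yes". That spread is the point: the calculation is easy, and the input is what nobody knows.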

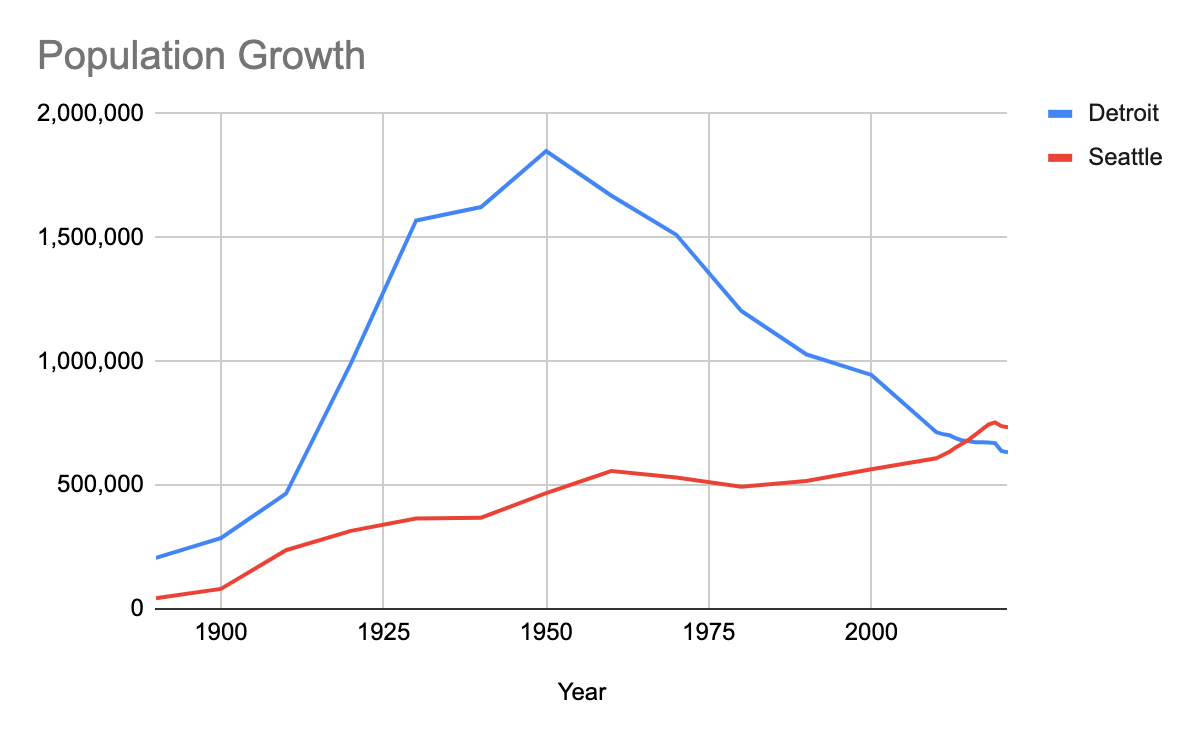

American City Population

Look at the population growth curves for these two American cities. Where would you like to buy a house?

You’re buying blue because it’s going up? You’re totally right. Growth takes off in the twenties as one of the largest industries in America moves in.

For about 50 years, you’re totally right.

Until the world changes on you, in the span of a human lifetime.

We can’t see around the corner in human population growth. Detroit had a meteoric rise and a meteoric fall. Seattle had slow growth. Both had key industries move in, and then move out.

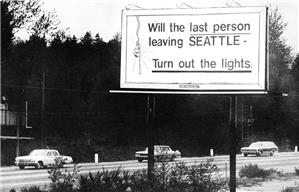

Seattle actually had an extra downturn as well: “The recession came as The Boeing Company, the region's largest employer, went from a peak of 100,800 employees in 1967 to a low of 38,690 in April 1971.” That prompted a real estate developer to put up a hilarious and cheeky sign.

In such a tightly bounded area, we can’t guess city growth within a generation. How can we reliably guess it for a bigger area over a bigger timeline?

Malthus & The Population Bomb

The most famous case of us being wrong about human population growth is Thomas Malthus. He took as a basic principle that human population growth would be geometric and that resource growth would be arithmetic.

Quoting his book: “No limits whatever are placed to the productions of the earth; they may increase for ever and be greater than any assignable quantity. Yet still the power of population being a power of a superior order, the increase of the human species can only be kept commensurate to the increase of the means of subsistence by the constant operation of the strong law of necessity acting as a check upon the greater power.”
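To see why that premise leads to such a grim conclusion, here is a small sketch of geometric versus arithmetic growth. The starting values, the doubling ratio, the step size, and the number of periods are all illustrative assumptions of mine, not figures taken from the passage quoted above.

```python
def geometric(start: float, ratio: float, periods: int) -> list[float]:
    """Values that are multiplied by `ratio` every period."""
    return [start * ratio ** t for t in range(periods + 1)]


def arithmetic(start: float, step: float, periods: int) -> list[float]:
    """Values that grow by a fixed `step` every period."""
    return [start + step * t for t in range(periods + 1)]


# Illustrative assumption: population doubles each period,
# while resources gain one unit each period.
population = geometric(1, 2, 8)
resources = arithmetic(1, 1, 8)

for t, (p, r) in enumerate(zip(population, resources)):
    print(f"period {t}: population {p:g}, resources {r:g}, people per unit {p / r:.1f}")
```

Under these assumptions the number of people per unit of resources keeps climbing, which is the squeeze Malthus expected. The model is internally consistent; it was the premise about resource growth that turned out to be wrong.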

Can populations grow unbounded? How many people will there be? Our best guess two hundred years ago was wrong.

After initially writing this post, I learned about Paul Ehrlich, a professor of Population Studies at Stanford. In 1968 he published a book called The Population Bomb, which said: "[i]n the 1970s hundreds of millions of people will starve to death in spite of any crash programs embarked upon now."

So I understated this argument: our best guess 50 years ago of how many people would be on Earth was dramatically wrong.

COVID Forecasts

Michael Osterholm, director of the Center for Infectious Disease Research and Policy at the University of Minnesota and a member of President Biden’s Transition COVID-19 Advisory Board, has been a prominent science communicator during the pandemic. He is also strongly against long-term forecasts: “[B]e careful of forecasting too far into the future, which can at times be based on ‘pixie dust.’” He doesn’t know what is coming next or where it’s coming from: “[T]here just hasn't been a predictability about why or where Covid will take root…If I could understand why surges occur or why they go away or why they don't happen, then I'd be in a better place to answer questions about where Covid is headed.”

Experts on COVID can’t make predictions about the way that a known disease will move. It’s similar to uncertainty about the path of hurricanes, where a reasonable estimation difference can mean hundreds or thousands of deaths.

Some Generally Wrong Future Guesses

You can fill (and many people have filled) an entire book with wrong guesses. For my favorite, take a look at the People’s Almanac #2, published in 1978, where they asked both psychics and scientists to make a guess about what the future held.

Some of them are vaguely right but with bad timelines: electric cars (popular by 1982), solar power (⅓ of homes will have it by 1989). Some are way off: a human landing on Mars will confirm that there used to be life there, “there will be greater fear of an Ice Age approaching” (possibly the biggest existential threat on Earth right now is the opposite of that happening).

The important part is: everyone talks about space and nuclear technology and nobody talks about the single most important invention of the next 40 years. I was only able to find two references to the future Internet: “television which will deliver news and library materials as we request them”; “Cable television will bring newspapers directly to homes by wire”. There’s one on cell phones: “Miniature television sets the size of cigarette holders will be used as every day video phones.”

You can argue with the quality of the forecasters they found, but my point is: we are SO BAD at guessing the future.

Can we make things better?

I would recommend “The Unanticipated Consequences of Purposive Social Action” by Robert Merton. He points to 5 ways in which people trying to do good things for the world end up doing bad ones:

1. Ignorance - sometimes you have to act, even though you don’t know what the long-term effects will be.

2. Error - you think you know what the downstream effects will be, but sometimes you are wrong. This includes the sub-type of error where what has worked in the past may or may not continue to work in the future.

3. The “imperious immediacy of interest” - you are so concerned with the immediate impact that you don’t care about what else happens, either as an unintended consequence or in the long term. This is willful ignorance, as opposed to the true ignorance in #1.

4. Basic values - very similar to #3, you are so concerned with the moral implications in the present that you don’t care about what happens in the long term.

5. Public predictions of social phenomena can change the course of those phenomena - Merton argues that Marx’s work getting into the public consciousness led to organized labor, which slowed some of the processes that Marx predicted.

Long-termism works very effectively against the third source of unintended consequences, by explicitly making the future a criterion in the decision making, but Merton argues that the biggest effects are from Ignorance and Error. People either don’t know what will happen or think they know but they actually don’t.

A couple more examples

A couple more brief examples of incredibly popular things that seemed great at the time, but had large unintended consequences:

- Leaded gasoline reduced engine knocks (invented 1921) and also human IQs (“Exposure to car exhaust from leaded gas during childhood took a collective 824 million IQ points away from more than 170 million U.S. adults alive today, a study has found.”)

- Chlorofluorocarbons (CFCs) were a non-toxic refrigerant (1920s) and destroyed the ozone layer (Montreal Protocol to ban them worldwide, 1987).

- Asbestos is an amazing flame retardant and a carcinogen (“Asbestos exposure is the No. 1 cause of work-related deaths in the world.”)

Am I saying everything is like that? Absolutely not. We’ve invented some great stuff. (See the yo-yo, bifocals and the wheel.)

What I’m saying is that uncertainty about the future cuts both ways, and I’m not sure that we can confidently say that we can make things better with high probability for people in the future. We might not know the effects, or we might think about them and guess wrong.

My Main Point

Circling back around, I think that we don’t know enough about what the future holds to weight future people similarly to present-day people. We don’t really know how many of them there will be, and we don’t really know whether the things we’re doing will help them. The bands of uncertainty get wider and wider as you push forward in time, so we should care the most about people now and less and less as time goes on.

Thanks for the post. Some quick comments!

This could be interpreted as a moral claim that, on an individual basis, a current person matters more than a counterfactual future person. Based on the rest of your post, I don't think you're claiming that at all. Instead you're making arguments about the uncertainty of the future.

I think a lot has been written about these claims around future uncertainty and those well-versed in longtermism have some compelling counterarguments. It would be nice to see a concise summary of the arguments for and against written in a way that's really accessible to an EA newcomer.

Interesting post, I think I agree, but even if we should lower our confidence about almost all future predictions there should be some things that are more likely to happen and some actions that are likely to improve the outcomes of those things.

I would be interested in some reflection on what those things might be. One possible example that comes to mind is climate change (which you mention), and another is economic growth.