I believe that grokking indirect realism about perception is key to understanding much of what people in the camp “qualia/consciousness is real and it’s a physical thing” have to say. It’s a simple but incredibly generative concept with very profound consequences (including for digital sentience).

This post is my attempt to make indirect realism as intuitive as possible using simple illustrations. I then explain how one can get to some form of panpsychism quite naturally, simply by taking seriously the idea that consciousness is real.

Introduction

In Why it’s so hard to talk about Consciousness, Rafael Harth suggests that people often talk past each other because core intuitions about consciousness tend to cluster into two camps with different underlying assumptions:

Camp #1 tends to think of consciousness as a non-special high-level phenomenon. Solving consciousness is then tantamount to solving the Meta-Problem of consciousness, which is to explain why we think/claim to have consciousness. In other words, once we've explained the full causal chain that ends with people uttering the sounds kon-shush-nuhs, we've explained all the hard observable facts, and the idea that there's anything else seems dangerously speculative/unscientific. No complicated metaphysics is required for this approach.[1]

Conversely, Camp #2 is convinced that there is an experience thing that exists in a fundamental way. There's no agreement on what this thing is – some postulate causally active non-material stuff, whereas others agree with Camp #1 that there's nothing operating outside the laws of physics – but they all agree that there is something that needs explaining. Therefore, even if consciousness is compatible with the laws of physics, it still poses a conceptual mystery relative to our current understanding. A complete solution (if it is even possible) may also have a nontrivial metaphysical component.

Over the years, I switched from Camp #1 to Camp #2, and there’s no looking back. Having observed many discussions about consciousness, I now suspect that indirect realism can act as a conceptual stepping stone for those in Camp #1 wishing to better appreciate Camp #2's perspective. I hope this short post contributes to that debate.

Indirect Realism

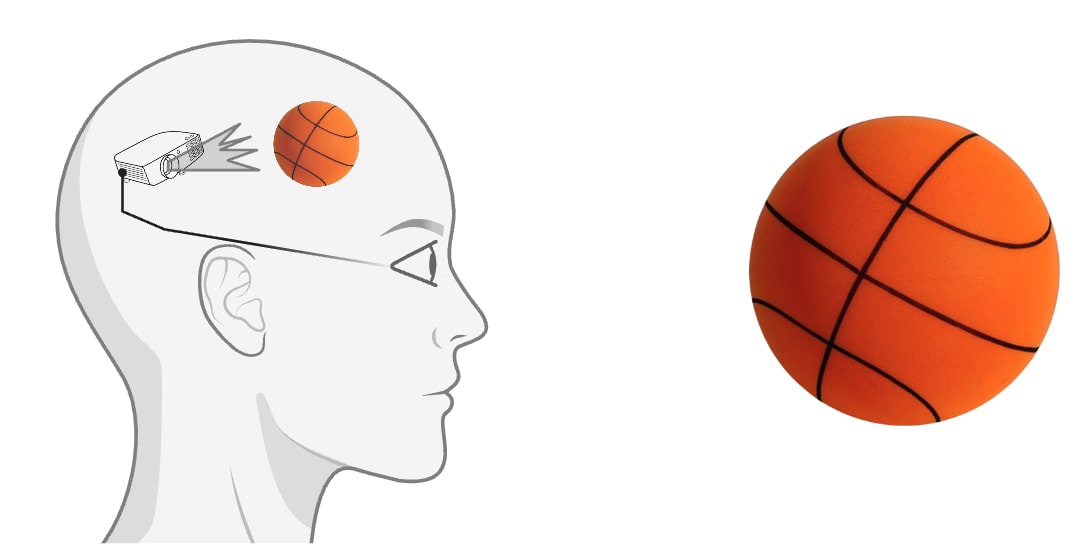

In one sentence, indirect realism about perception is the idea that everything we experience exists as an internal representation inside our brains [1]. One (extremely simplified) way I like to think about it is by imagining the brain as a little projector, taking in some signals from the eyes, ears, etc. and creating a replica of the outside world inside the head.

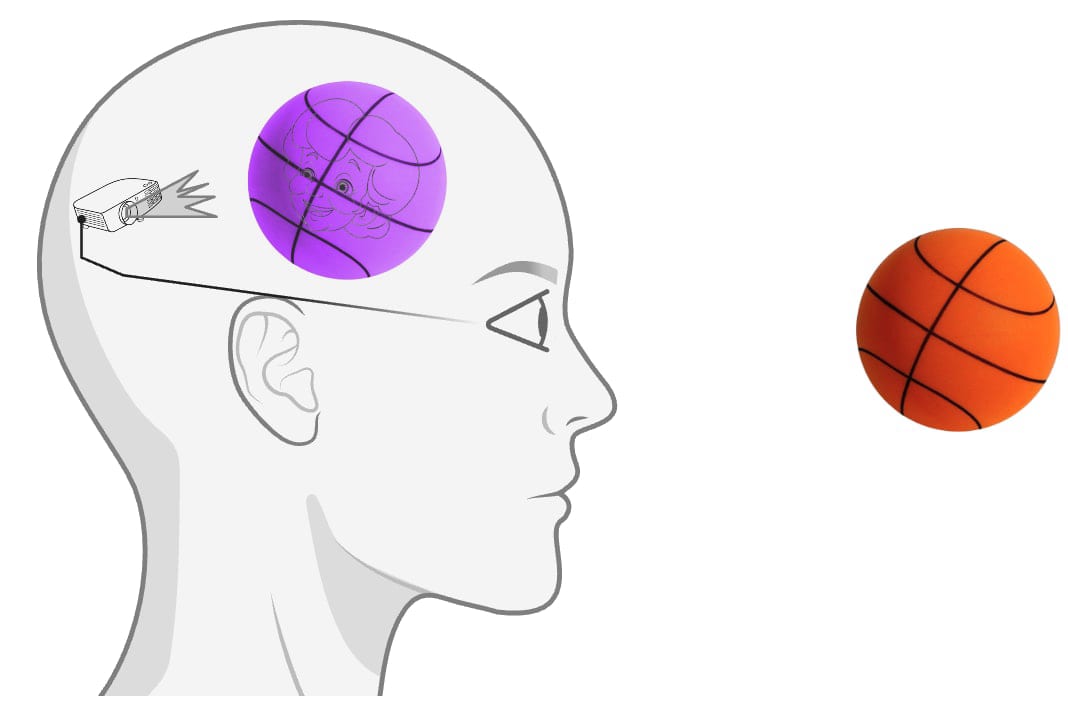

Figure 1 illustrates this idea: When you look at a basketball, light enters the eyes, the eyes transfer some signals to the brain, and the brain somehow manages to create a super detailed, rich, near-real-time replica/simulation of the basketball inside your head. Indirect realism simply says that, at all times, what you consciously see (experience) is the little basketball being “projected” by your brain inside your head, not the actual ball out there in the world.

This is a pretty counterintuitive idea for most people when they first encounter it. After all, it certainly feels like we’re actually seeing (hearing, smelling, etc.) things out there in the world directly. If you’re reading this, though, I suspect you’ve come across the idea of indirect realism and might even believe it to be true. However, I’m under the impression that even people who think a lot about consciousness haven’t fully grasped how profound the idea is.

But before talking about some implications, I’ll briefly try to make it patently obvious to you why indirect realism has to be true, first through a couple of empirical examples and then by outlining a simple logical argument for why the alternative view (direct realism) fails.

Empirical Evidence: A Few Simple Examples

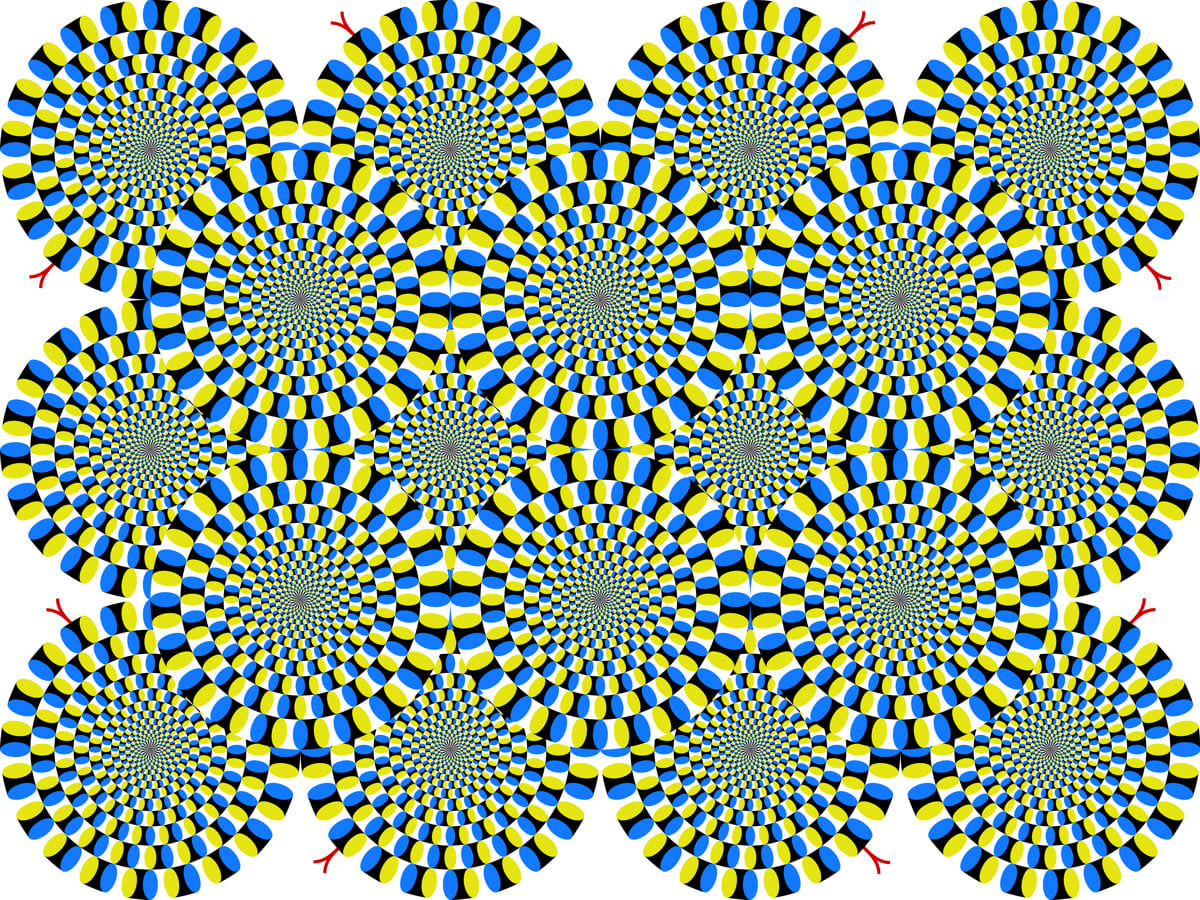

One of the nicest and easiest ways I have of proving to myself that indirect realism is true is through visual illusions. Here’s a famous one by Akiyoshi Kitaoka:

The image itself is not moving, but you probably experience it as moving (the “snakes” rotate). Can both be true? Indirect realism says yes: the image out in the world isn’t moving, but the little movie/simulation being generated by your brain actually is. There’s no paradox.

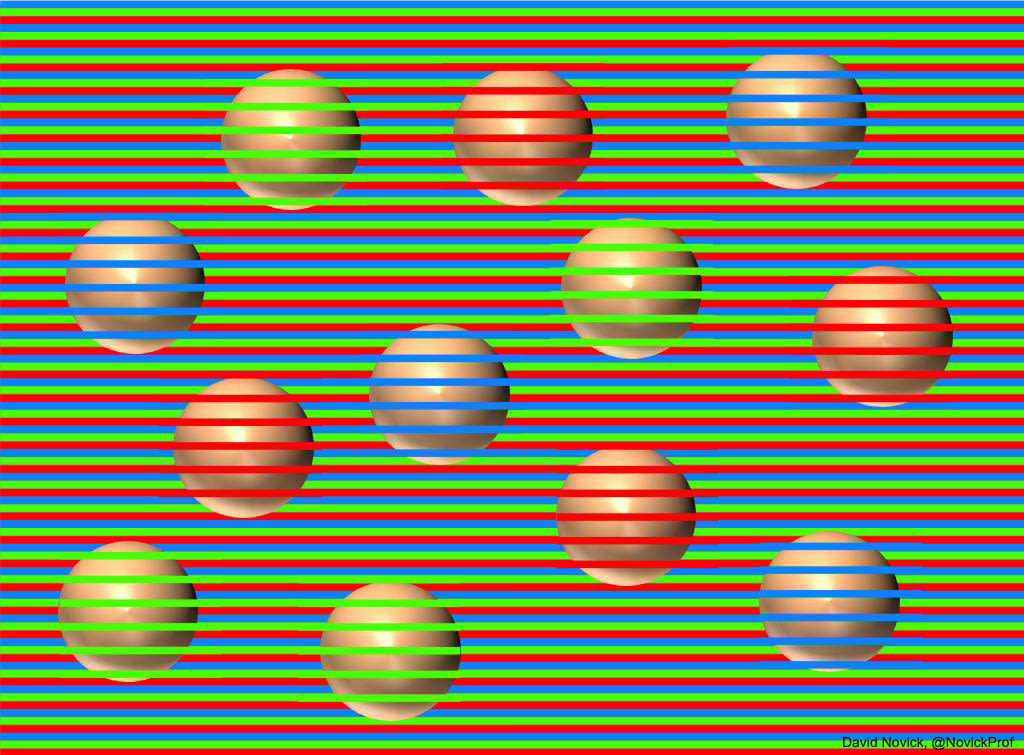

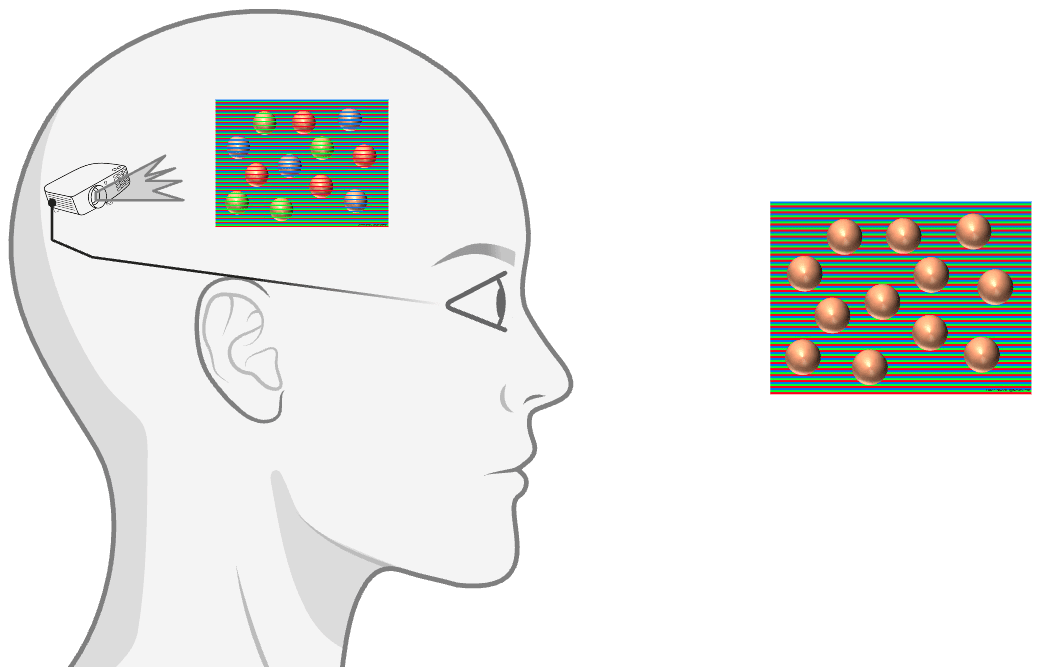

Here’s another one by David Novick:

Here again, it is both true that the spheres are green/red/purple (in the simulation generated by your brain) and all brown (if you sample the RGB values of the image on your screen).

Finally, imagine you take a psychedelic (say, B-BaL-DMT) which causes your basketball to appear purple instead of orange, and you also see your grandma’s face on its surface.[2]

In each of these examples,[3] you would be absolutely, 100% correct in saying that the snakes are rotating, that the spheres are green/red/purple, and that the basketball is purple and has your grandma’s face on it. Those are all actual properties of the simulation being generated by your brain, and the experience you’re having is as real as anything real can be.

Logical Evidence: A Simple Argument

If you’re more philosophically inclined, here’s a logical argument (which I learned from Steven Lehar) for why the alternative view, direct realism, fails. Direct realism says that we experience the world itself directly. However, this would mean something like:

- Signals from the outside world enter through our senses (eyes, nose, etc.), causing activity in the brain.

- This brain activity results in us having a certain experience, which direct realism claims corresponds to the outside world.

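However, notice that “the outside world” appears both at the beginning of the causal chain (it causes the electrical activity in the brain) and at the end (we see the outside world as a result of the brain’s activity). That is not possible: the experience that results from the brain’s activity cannot be the very thing that caused that activity in the first place.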

Indirect realism faces no such paradox: The outside world does cause activity in the brain, but the result is not that we perceive the outside world—we instead perceive a (high-definition, multimodal, near-real-time) replica of the outside world generated by our brain (also sometimes called “world simulation”).

Implications

People in Camp #1 may believe indirect realism to be true, so indirect realism might not be a crux per se. However, I think we can (and should) go one step further.

Hopefully you agree that anything we believe to be real ultimately manifests itself in our conscious experience. Even highly sophisticated experiments in particle physics eventually require a (purportedly) conscious scientist to look at data on a computer screen and interpret the results (“I see black and white stripes characteristic of an interference pattern!”). So when I say that the basketball is actually purple when I take that psychedelic, I mean that there actually is a real, physical image of a purple basketball being instantiated/living inside my brain. This is the core idea I hope to convey with this post.

But how is that possible? After all, if you were to open my skull and inspect my brain while I’m having that conscious experience (and uttering the sentence “I see a purple basketball!”), you wouldn’t see an actual purple ball floating somewhere in my brain, would you? Well, you wouldn’t be able to see it directly with your eyes because the purple basketball in my brain is not made of rubber, so obviously it doesn’t reflect light.

OK, so, if it’s not made of rubber, what is it made of? Well, non-materialist physicalists (like myself) claim that it has to be made of something that our best laws of physics already recognize as being real.[4] A top contender is the electromagnetic field,[5] in part because:

- At our meso level (i.e., neither at the micro level of atoms nor at the macro level of planets), most phenomena are electromagnetic, including those in the brain [2].

- Especially in altered states of consciousness (deep meditation, psychedelic states, etc.), field-like dynamics become apparent (e.g., liquid crystal dynamics).

So yes, I believe that you should, at least in principle, actually be able to find the purple basketball if you probed my brain with the right instruments.

And now the punchline: If the world simulation going on inside your brain (what you experience consciously) is real and made of, say, electromagnetic stuff, then this implies that the electromagnetic field itself is made of consciousness/qualia. This is what people (e.g., at QRI and adjacent circles) mean when they say that the fields of physics are fields of qualia, i.e., some form of panpsychism.

If this picture is (at least directionally) correct, then there is a clear research agenda to determine, among other things, whether a certain object is conscious. For example, we can ask what properties of the EM field are shared by all objects we suspect to be conscious (starting with humans), or whether digital computers instantiate configurations of the EM field with those relevant properties.[6]

Illusionists (typically in Camp #1) claim that the world simulation being generated by the brain isn’t really there. But I suspect they’re married to an ontology in which the brain can be abstracted away as a network of digital switches (neurons) that simply sum inputs and fire outputs. In my view, illusionists are giving up too early by not considering other physical ontologies and metaphysical assumptions.

Taking seriously the idea that our conscious experience is real in this way also allows us to make empirically testable predictions (see one example by Susan Pockett). And if we figured out how the brain generates world simulations[7] and what exactly the relevant properties of the underlying physical fields have to be, the doors would be open to developing new and potentially game-changing valence-enhancing technologies.

Acknowledgements

I’m mostly rehashing (and possibly oversimplifying) ideas by Steven Lehar (I particularly recommend his Cartoon Epistemology series and his talk Harmonic Gestalt), David Pearce, Andrés Gómez Emilsson, QualiaNerd, and Atai Barkai. I wrote this post in my personal capacity.

Footnotes

[1] As QualiaNerd rightly pointed out to me in a private conversation, “counterintuitively, this is actually true—not for the reasons Dennett might think—but rather because, in order to give a full explanation of what makes us utter phrases like ‘I am conscious’ or ‘I experience redness’, etc., we have to explain the consciousness/redness in the first place. In other words, once we have the full account of the qualia reports, it will necessarily include a full account of the qualia about which the reports are.”

[2] Psychedelic-induced visual hallucinations can (often) be easy to contextualize. Time distortions, on the other hand, can be quite jarring and destabilizing. However, once you really internalize indirect realism, you realize that only phenomenal time is being distorted, i.e., time as generated by your brain. Using the projector analogy, it’s as if the projector is being set to, say, slow motion, generating a slow-motion movie, even though the actual world simply continues its usual course. See also How to use DMT without going insane.

[3] Dreams are another obvious example.

[4] Real in the deepest sense, i.e., corresponding to whatever lies at the base layer of reality, since otherwise consciousness would be a strongly emergent phenomenon, a useful fiction, which we want to avoid, as QualiaNerd emphasizes.

[5] Likely in the form of nonlinear waves driven by neuronal activity, and possibly involving entanglement.

[6] Spoiler alert: Absolutely not.

[7] I bet the solution will look a lot like this.

I don't think I fully understand exactly what you are arguing for here, but would be interested in asking a few questions to help me understand it better, if you're happy to answer?

Not the OP, but a point I've made in past discussions when this argument comes up is that this would probably not be all that odd without additional assumptions.

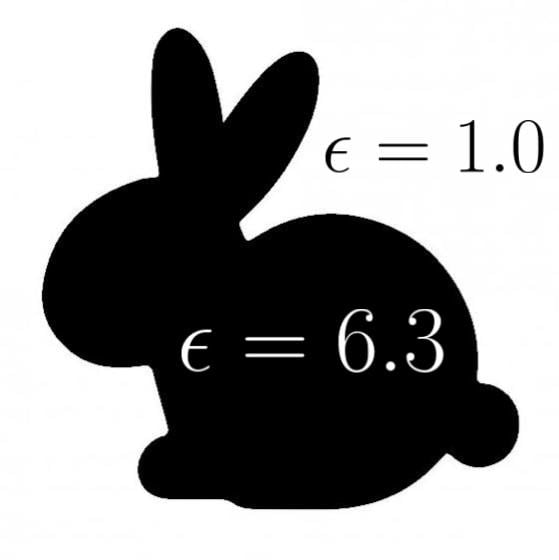

For any realist theory of consciousness, a question you could ask is: do there exist two systems that have the same external behavior, but where one system is much less conscious than the other? Writing B(S) for a system's external behavior and C(S) for its degree of consciousness: ∃ S₁, S₂ : B(S₁) = B(S₂) ∧ C(S₁) ≈ 0 ≠ C(S₂)?

Most theories answer "yes". Functionalists tend to answer "yes" because lookup tables can theoretically simulate programs. Integrated Information Theory explicitly answers yes (see Fig 8, p.37 in the IIT4.0 paper). Attention Schema Theory I'm not familiar with, but I assume it has to answer yes because you could build a functionally identical system without an attention mechanism. Essentially any theory that looks inside a system rather than at input/output level only -- any non-behaviorist theory -- has to answer yes.

Well, if the answer is yes, then the situation you describe has to be possible. You just take S1 and gradually rebuild it into S2 such that behavior is also preserved along the way.

So it seems to me that the fact that you can alter a system such that its consciousness fades but its behavior remains unchanged is not itself all that odd; it seems like something that probably has to be possible. Where it does get odd is if we also assume that S1 and S2 perform their computations in a similar fashion. One thing the examples I've listed all have in common is that this additional assumption is false; replacing, e.g., a human cognitive function with a lookup table would lead to dramatically different internal behavior.
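To make the lookup-table point concrete, here is a toy sketch in Python. It assumes a 5-bit majority vote as a hypothetical stand-in for a "cognitive function" (this example is not from the original discussion). The two functions give identical outputs on every possible input, yet one actually counts bits while the other only retrieves a precomputed answer. That is the sense in which external behavior can match while internal processing differs. The sketch says nothing about which system, if either, is conscious.

```python
from itertools import product

N_BITS = 5  # hypothetical input size, kept tiny so the table stays small


def majority_by_counting(bits):
    """System S1 (hypothetical): counts the 1s and compares to half the input length."""
    ones = 0
    for b in bits:
        ones += b
    return int(ones * 2 > len(bits))


# System S2 (hypothetical): every possible input is enumerated ahead of time and its
# answer stored, so answering a query is a single dictionary lookup with no counting.
LOOKUP_TABLE = {
    bits: majority_by_counting(bits) for bits in product((0, 1), repeat=N_BITS)
}


def majority_by_lookup(bits):
    return LOOKUP_TABLE[tuple(bits)]


def same_external_behavior(f, g, n):
    """True if f and g return identical outputs on every possible n-bit input."""
    return all(f(bits) == g(bits) for bits in product((0, 1), repeat=n))


print(same_external_behavior(majority_by_counting, majority_by_lookup, N_BITS))  # True
print(len(LOOKUP_TABLE))  # 32, i.e. 2**N_BITS; each extra input bit doubles the table
```

The table has 2^n entries for n input bits, which is why such replacements are only "theoretically" possible for anything large.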

Because of all this, I think the more damning question would not just be "can you replace the brain bit by bit and consciousness fades" but "can you replace the brain bit by bit such that the new components do similar things internally to the old components, and consciousness fades"?[1] If the answer to that question is yes, then a theory might have a serious problem.

Notably this is actually the thought experiment Eliezer proposed in the sequences (see start of the Socrates Dialogue).

Thank you so much for your questions! :) Some quick thoughts:

The brain operating according to the known laws of physics doesn't imply we can simulate it on a modern computer (assuming you mean a digital computer). A trivial example is certain quantum phenomena. Digital hardware doesn't cut it. And even if you do manage to simulate certain parts of the system, the only way to get it to behave identically to the real thing is to use the real thing as the substrate. For example, sure, you can crudely simulate the propagation of light on a digital computer, but in order for it to behave identically to the real thing, you'd have to ensure e.g. that all "observers" within your simulation measure its propagation speed to be c. I don't believe you can do that given the costs of embodiment of computers.

It would be trivial "qualia dust," like most electromagnetic phenomena (which are not globally bound). (I do think that the brain operates according to the laws of physics.)

I think "gradually replacing the biological brain with a simulated brain bit by bit" begs the question. For example, what would it mean to replace a laser beam "bit by bit"?

Just to be clear, I'm not claiming that the EM field has some additional strange property, but rather that the EM field as it is is conscious (cf. dual-aspect monism). Also consider: When you talk about "the simulation being run," where exactly is the simulation? In the chips? In sub-elements of the chips? On the computer screen? Simulations, algorithms, etc. don't have clearly-delineated boundaries, unlike our conscious experience. This is a problem.

I believe that to be the most parsimonious and consistent view, yes.

Thanks for the reply, this definitely helps!

Could you explain what you mean by this..? I wasn't aware that there were any quantum phenomena that could not be simulated on a digital computer? Where do the non-computable functions appear in quantum theory? (My background: I have a PhD in theoretical physics, which certainly doesn't make me an expert on this question, but I'd be very surprised if this was true and I'd never heard about it! And I'd be a bit embarrassed if it was a fact considered 'trivial' and I was unaware of it!)

There are quantum processes that can't be simulated efficiently on a digital computer, but that is a different question.
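A back-of-the-envelope sketch of that distinction, assuming naive dense state-vector simulation with one 16-byte (complex128) amplitude per basis state. Specialized methods such as tensor networks can do far better for some systems, and nothing here is specific to brains. The memory required doubles with every added qubit, so simulation remains possible in principle while quickly becoming impractical.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense state vector of n qubits.

    Assumes naive state-vector simulation: one complex amplitude per basis
    state (2**n_qubits of them), each stored as a complex128 (16 bytes).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(num_bytes: float) -> str:
    """Format a byte count using binary prefixes (KiB = 1024 bytes, etc.)."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"):
        if num_bytes < 1024:
            return f"{num_bytes:g} {unit}"
        num_bytes /= 1024
    return f"{num_bytes:g} ZiB"


for n in (10, 30, 50):
    print(f"{n} qubits: {human_readable(state_vector_bytes(n))}")
# 10 qubits: 16 KiB
# 30 qubits: 16 GiB
# 50 qubits: 16 PiB
```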

Thanks, and sorry, I could have been more precise there. I guess I was thinking of the fact that, for example, some quantum systems would take, I don't know, the age of the universe to compute on a digital computer. And as I hinted in my previous response, the runtime complexity matters. I illustrated this point in a previous post, using the example of an optical setup used to compute Fourier transforms at the speed of light, which you might find interesting. Curious if you have any thoughts!

Thanks for the link, I've just given your previous post a read. It is great! Extremely well written! Thanks for sharing!

I have a few thoughts on it I thought I'd just share. Would be interested to read a reply but don't worry if it would be too time consuming.

I like the 'replace one neuron at a time' thought-experiment, but accept it has flaws. For me, it's that we could in principle simulate a brain on a digital computer and have it behave identically, that convinces me of functionalism. I can't grok how some system could behave identically but its thoughts not 'exist'.

I really appreciate your feedback and your questions! 🙏

I'd love to reply in detail but it would take me a while. 😅 But maybe two quick points:

Executive summary: Indirect realism—the idea that perception is an internal brain-generated simulation rather than a direct experience of the external world—provides a crucial framework for understanding consciousness and supports a panpsychist perspective in which qualia are fundamental aspects of physical reality.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.