Over the last couple of months we have written a series of posts making the case for the Centre for Enabling EA Learning and Research (CEEALAR) and asking for funding: see here, here and here. We are very grateful to those who supported us during the fundraiser; however, we did not reach our target and still have a very short runway. Despite these difficulties, we want to take a moment in this post to outline a few of our achievements from 2023. We are proud of what we have achieved and are looking forward to working hard in 2024 to ensure an impactful future for CEEALAR.

Highlights of 2023

1. We hosted ALLFED’s team retreat, in which they gathered their full team to set out their theory of change and strategy.

2. We also hosted Orthogonal, who launched their organisation and research agenda while here.

3. We appointed two new trustees, Dušan D. Nešic and Kyle Smith, and said goodbye to outgoing trustees Florent Berthet and Sasha Cooper.

Thank you to Florent and Sasha who have both been supporting CEEALAR since it began. We look forward to working with Dušan and Kyle, and drawing on their expertise in talent management and fundraising.

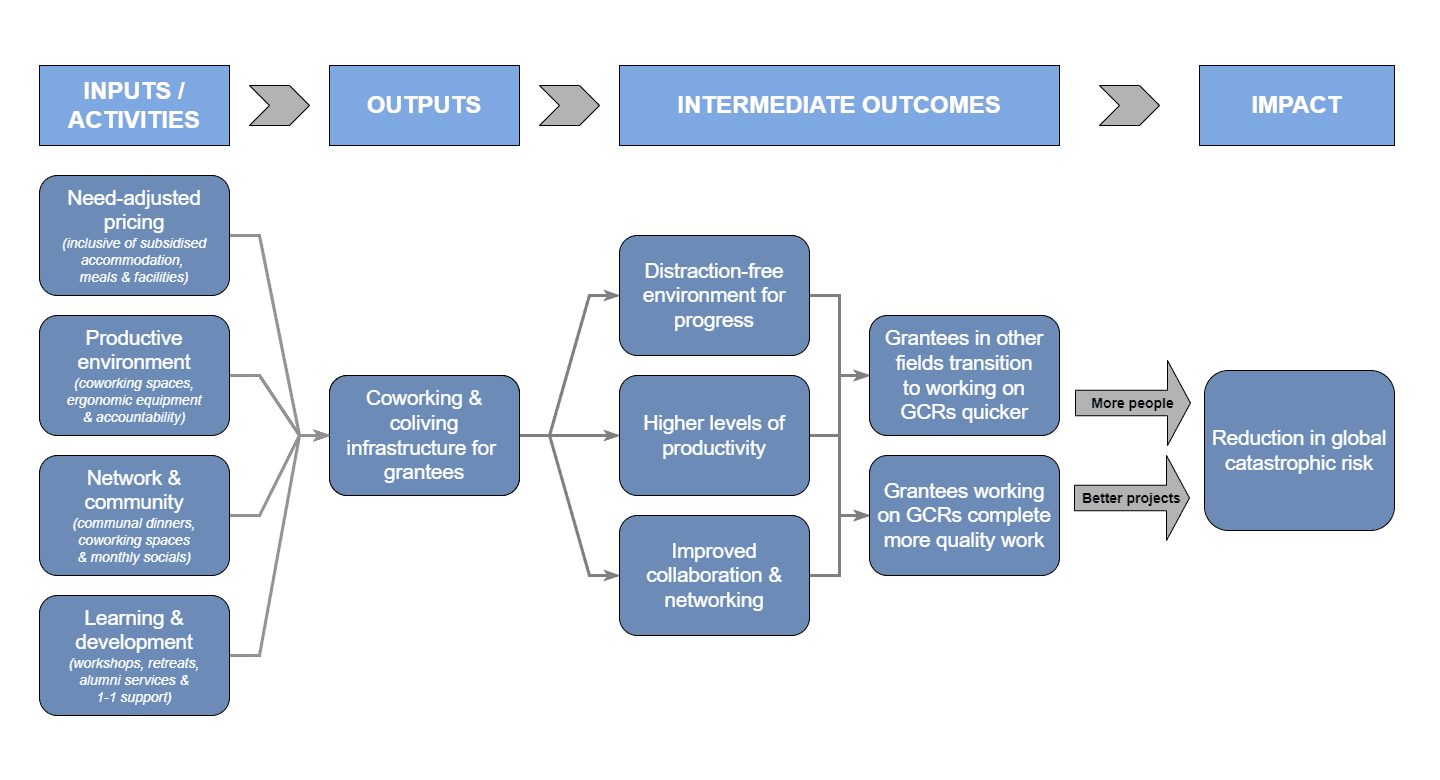

4. We updated our Theory of Change to focus explicitly on work addressing global catastrophic risks (GCRs).

We believe this reflects the needs of the world, and in recent months more than 95% of applicants have been working on GCRs.

5. We launched the CEEALAR Alumni Network (CAN) and reconnected with our alumni to begin understanding the impact CEEALAR had on their lives.

Of the alumni who responded, 80% were working in EA, most of them on AI safety.

6. We made substantial improvements to the building that helped boost grantee productivity.

These included converting the attics into private studies, purchasing standing desks, and creating a lounge area for relaxing.

7. We improved our application form to ensure we get the very best grantees, and hosted a total of 60 grantees, more than in any of the past three years.

8. Around 7.4% of our funding for 2023 came from guests and alumni, which we see as an endorsement: those closest to us believe we are an impactful option to donate to.

A huge thank you to all of our donors.

9. We launched a new website.

Check it out here: www.ceealar.org

Thank you to grantees Onicah and Bryce, pictured above, who helped us with the design and photos for the website.

10. As always, though, the achievements we most want to celebrate are those of our grantees.

To name a few from 2023…

- Bryce received funding to manage Alignment Ecosystem Development, successfully transitioning into AI safety from his previous career running a filmmaking business.

- Nia and George launched ML4Good UK. Alongside running two UK camps, they are building infrastructure so ML4Good can expand to additional countries.

- Michele published a forum post on Free Agents, the culmination of his research into creating an AI that independently learns human values.

- Seamus had a research paper accepted to the Socially Responsible Language Modelling Research (SoLaR) conference and is currently completing ARENA Virtual.

- Sam was selected for the AI Futures Fellowship Program. While at CEEALAR he participated in AI Safety Hub’s summer research program and co-authored a research paper accepted to a NeurIPS workshop.

- Eloise secured a place on AI Safety Camp, working alongside Nicky Pochinkov on the project “Modelling Trajectories of Language Models”.

In 2024 we are looking forward to running a targeted outreach campaign to reach high-quality grantees working on global catastrophic risks, hosting the first ML4Good UK bootcamp, and, of course, fundraising and working on CEEALAR’s financial sustainability.

Once again, a heartfelt thank you to everyone who has supported CEEALAR.

In my experience, there are lots of young people (many of whom I know personally) who want to help with AI alignment and are, in my opinion, capable of doing so; they just need to spend a year or two trying things and learning to gain the necessary skills.

These are usually people without a track record, so they cannot access the various EA-adjacent grants that would buy them the slack needed to put in the time and focus on a single goal. What they really need is not salary-sized grants but simply a guarantee of food, housing, and a supportive environment and community while they take a break from formal education or from the jobs that keep them afloat.

I personally know one such person whom CEEALAR helped out of prior dependence and who has started to become productive. My own (and Orthogonal's) stay at CEEALAR was strongly positive as well.

I've heard that grantmakers are often operationally constrained when it comes to giving out smaller, hits-based grants, for example to individuals. By giving to CEEALAR, grantmakers could outsource this operational cost and bootstrap people working on AI alignment in a hits-based manner, at low cost compared to alternatives such as individual grants. I think this is one of the most cost-effective ways to help with AI alignment. I, and many others I know doing good work in alignment, would not be in the field were it not for personal hits-based grants.

I'm very confused and sad that CEEALAR has not received more funding. Not only do I wish that CEEALAR could stay afloat and expand, but I also think we would STRONGLY benefit from similar institutions offering low-cost housing to motivated but potentially unproven individuals in other locations, say on the east and west coasts of the US, as well as somewhere in Europe. If CEEALAR were able to get funded consistently, that would give people the confidence to start similar organizations elsewhere.

Having been involved for several years, I can also say we were so funding-constrained that seeking funding to stay afloat took up a large proportion of staff and trustees' time and made planning for greater long-term efficiency very hard. All of these achievements came despite that constant friction, so I really hope the new team gets a chance to find out what a fully focused version of the project could do.

Executive summary: CEEALAR, a center supporting EA talent, highlights 10 successes in 2023, including hosting organizations' retreats, appointing new trustees, updating their theory of change, launching an alumni network, improving their physical space, transitioning talented individuals into AI safety careers, and supporting impactful projects. However, they missed their recent fundraising target and have limited runway.


This comment was auto-generated by the EA Forum Team.