One of the main obstacles to building safe and aligned AI is that we don't know how to store human values on a computer.

Why is that?

Human values are abstract feelings and intuitions that can be described with words.

For example:

- Freedom or Liberty

- Happiness or Welfare

- Justice

- Individual Sovereignty

- Truth

- Physical Health & Mental Health

- Prosperity & Wealth

We see that AI systems like GPT-3 or other NLP based systems use Word2Vec or other node networks / knowledge graphs to encode words and their meanings as complex relationships. This then allows software developers to parameterize language so that a computer can do Math with it, and transform complicated formulas into a human readable output. Here's a great video on how computers understand language using Word2Vec . Besides that, ConceptNet another great example of a linguistic knowledge graph.

So, what we have to do is find a knowledge graph that helps us encode human values, so that computers can better understand human values and learn to operate within those parameters, not outside of them.

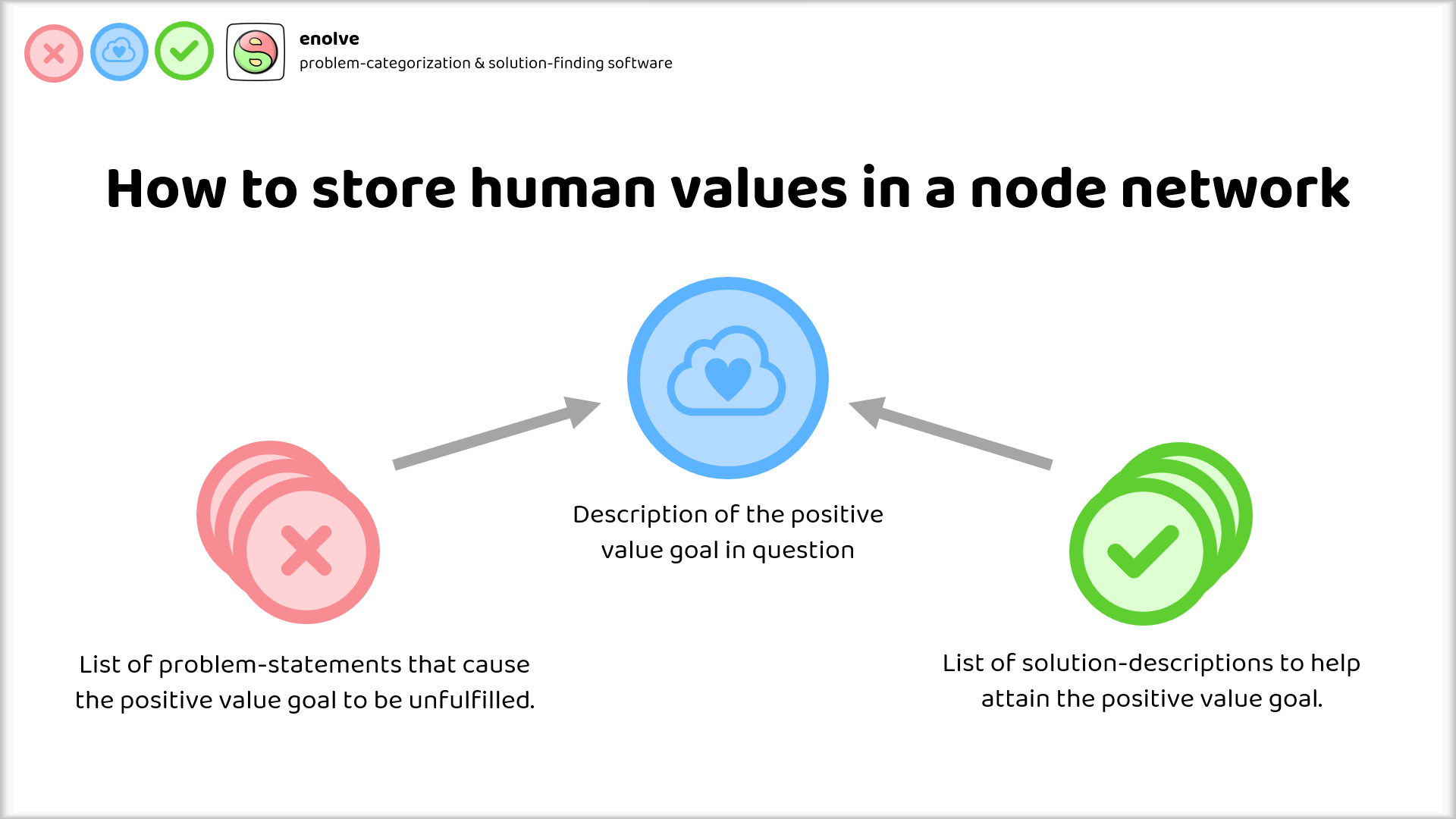

My suggestion for how to do this is quite simple: Categorize each "positive value goal" according to the actionable, tangible methods and systems that help fulfill this positive value goal, and then contrast that with all the negative problems that exist in the world with respect to that positive value goal.

Using the language of the instrumental convergence thesis:

Instrumental goals are the tangible, actionable methods and systems (also called solutions) that help to fulfill terminal goals.

Problems on the other hand are descriptions of how terminal goals are violated or unfulfilled.

There can be many solutions associated to each positive value goal, as well as multiple problems.

Such a system lays the foundation for a node network or knowledge graph of our human ideals and what we consider "good".

Would it be a problem if AI was instrumentally convergent on doing the most good and solving the most problems? That's something I've put up for discussion here and I'd be curious to hear your opinion in the comments!

Sounds like a good plan! I think there are other challenges with alignment that this wouldn’t solve (e.g. inner misalignment), but this would help. If you haven’t seen it, you might be interested in https://arxiv.org/abs/2008.02275

Thank you, this is super helpful! I appreciate it.

Yes, good point, if inner misalignment would emerge from an ML system, then any data source that was used for training would be ignored by the system anyways.

Depends on if you think alignment is a problem for the humanities or for engineering.