(epistemic status: Written in the spirit of "strong opinions, weakly held" and in a way that is accessible to people who are new to effective altruism. Also, I am pretty sure this idea isn't original, but I haven't seen it written up, so please point me to it if I missed something.)

Heavy tails explained

I think of recognizing heavy-tailed distributions in many places as one of the key insights of the effective altruism mindset.

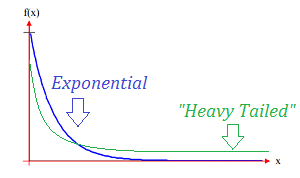

In a heavy-tailed distribution, a relatively large share of the probability mass lies in the “tail”: the tail goes to zero more slowly than that of an exponential distribution. This means extreme values are more likely and outliers occur more often.
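To make "goes to zero more slowly" concrete, here is a minimal Python sketch comparing the tail probability P(X > x) of an exponential distribution (rate 1) with that of a Pareto distribution. The Pareto is just one illustrative heavy-tailed distribution, and its parameters (alpha = 1.5, x_min = 1) and the helper names are assumptions chosen for the example.

```python
import math


def exponential_tail(x: float, rate: float = 1.0) -> float:
    """P(X > x) for an exponential distribution (light-tailed)."""
    return math.exp(-rate * x)


def pareto_tail(x: float, alpha: float = 1.5, x_min: float = 1.0) -> float:
    """P(X > x) for a Pareto distribution (heavy-tailed); equals 1 below x_min."""
    if x < x_min:
        return 1.0
    return (x_min / x) ** alpha


# Compare how quickly each tail shrinks as x grows (illustrative parameters only).
for x in [2, 10, 100]:
    exp_p = exponential_tail(x)
    par_p = pareto_tail(x)
    print(f"x={x:>4}: exponential {exp_p:.2e}, Pareto {par_p:.2e}, ratio {par_p / exp_p:.1e}")
```

As x grows, the ratio between the two tails grows without bound: the exponential tail shrinks by a constant factor for every unit step in x, while the Pareto tail shrinks only polynomially. This holds for any positive rate and alpha, not just the values assumed here.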

Examples

Here are a few real-life examples that suggest underlying heavy-tailed distributions, since they exhibit strong skew.

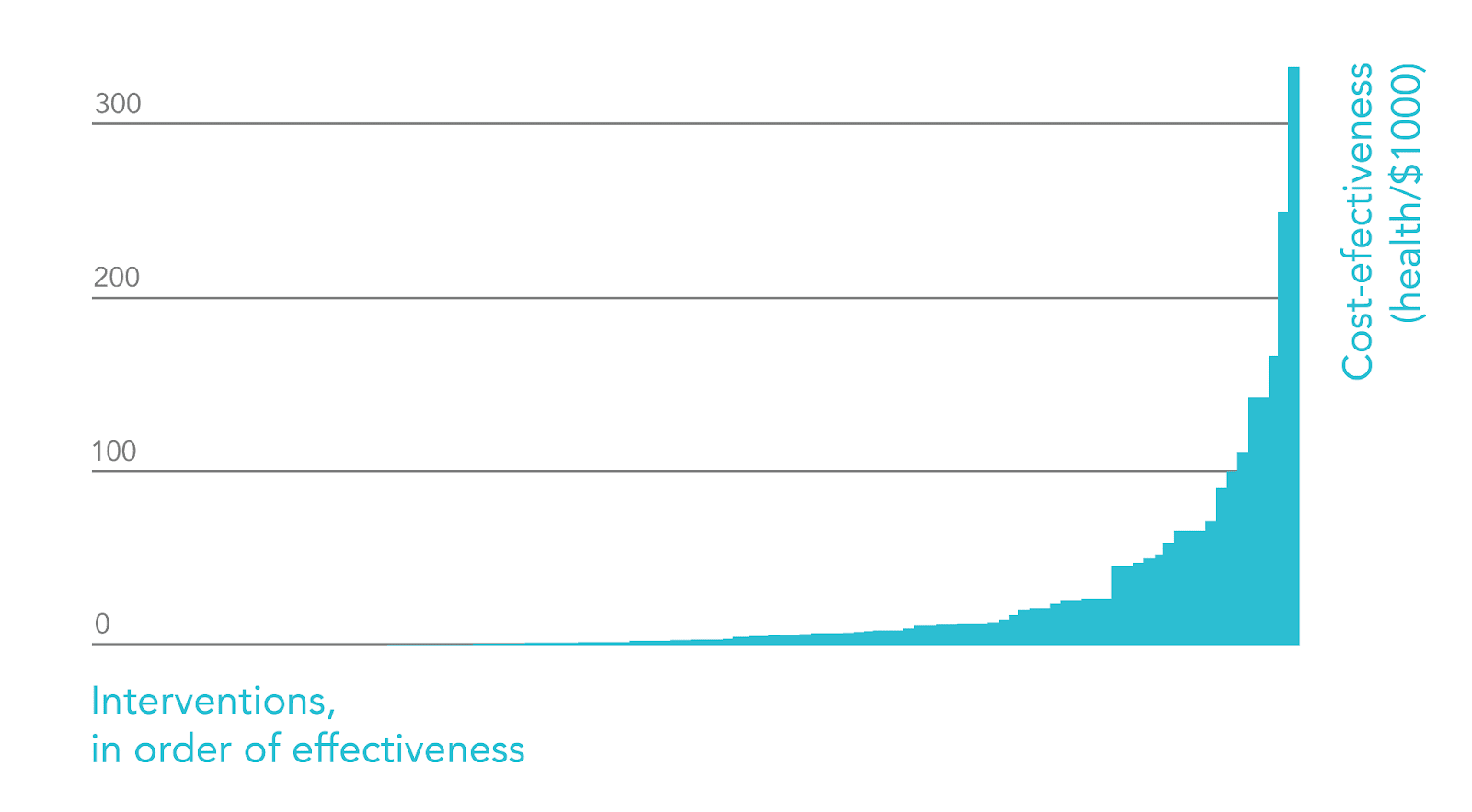

The most effective health interventions are multiple orders of magnitude more cost-effective than the typical intervention.

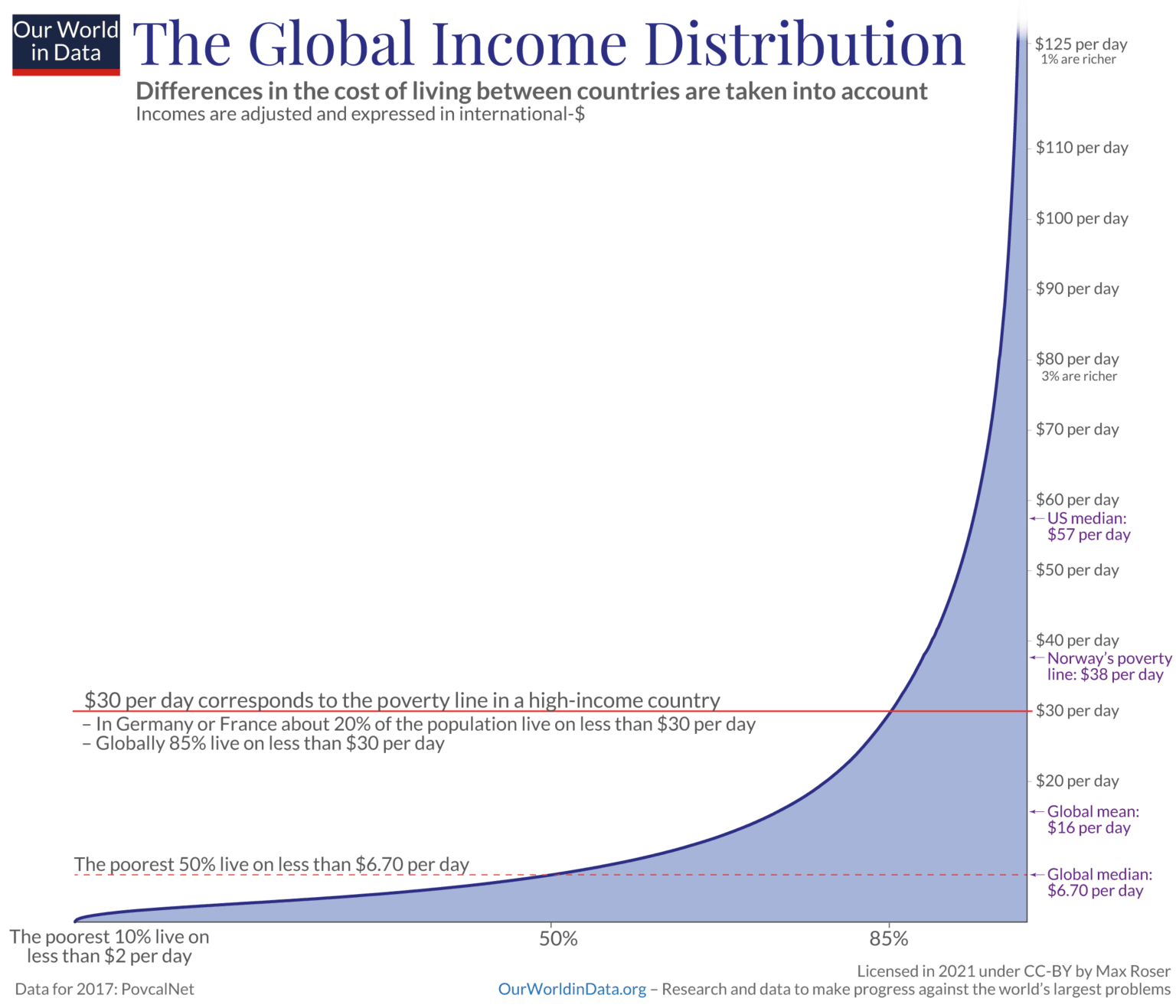

As most people know, global income is distributed highly unevenly.

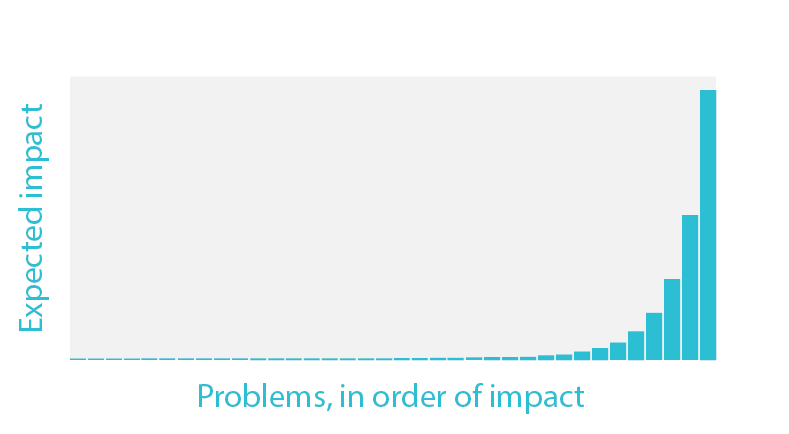

Some problems we can choose to work on are hundreds of times more important than others. For example, depending on your worldview (!), preventing pandemics might be 100x more important than global health, and mitigating risks from AI might again be 100x more important.

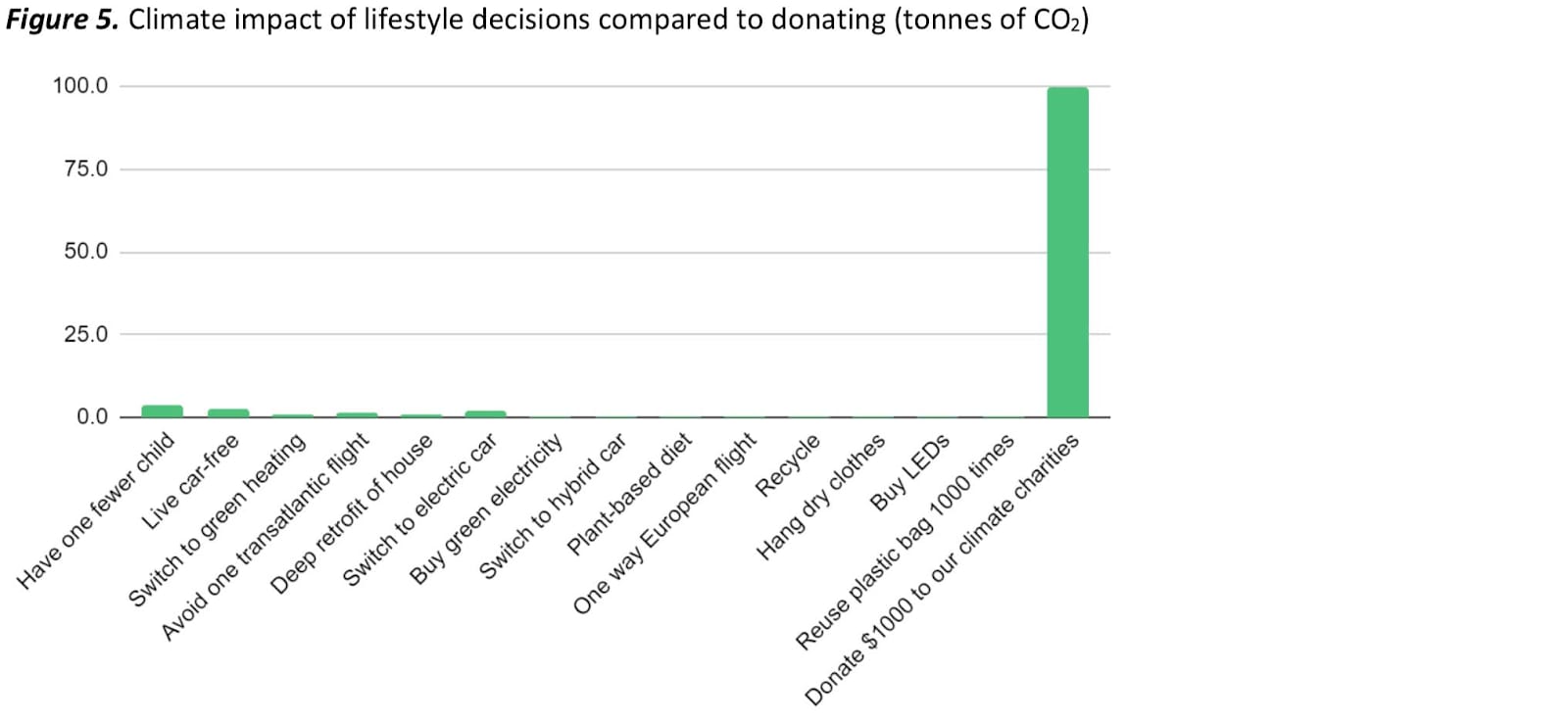

If you want to emit fewer tons of CO2, the lifestyle choice that makes by far the biggest difference is having one fewer child. But then, donating to certain climate charities is again many times more impactful than any lifestyle choice.

Conclusion

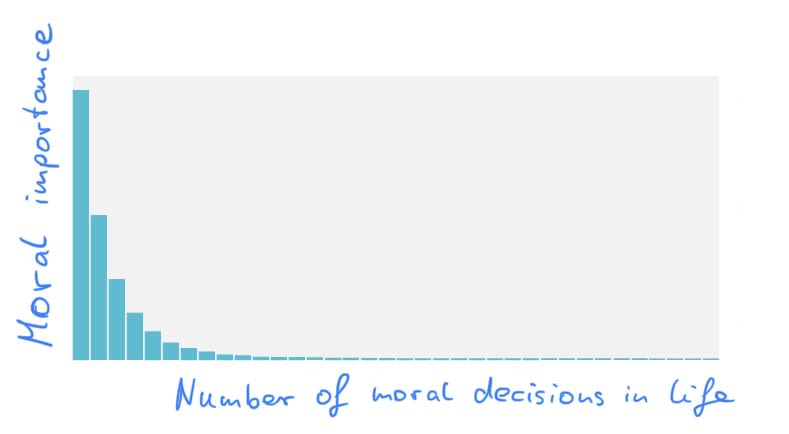

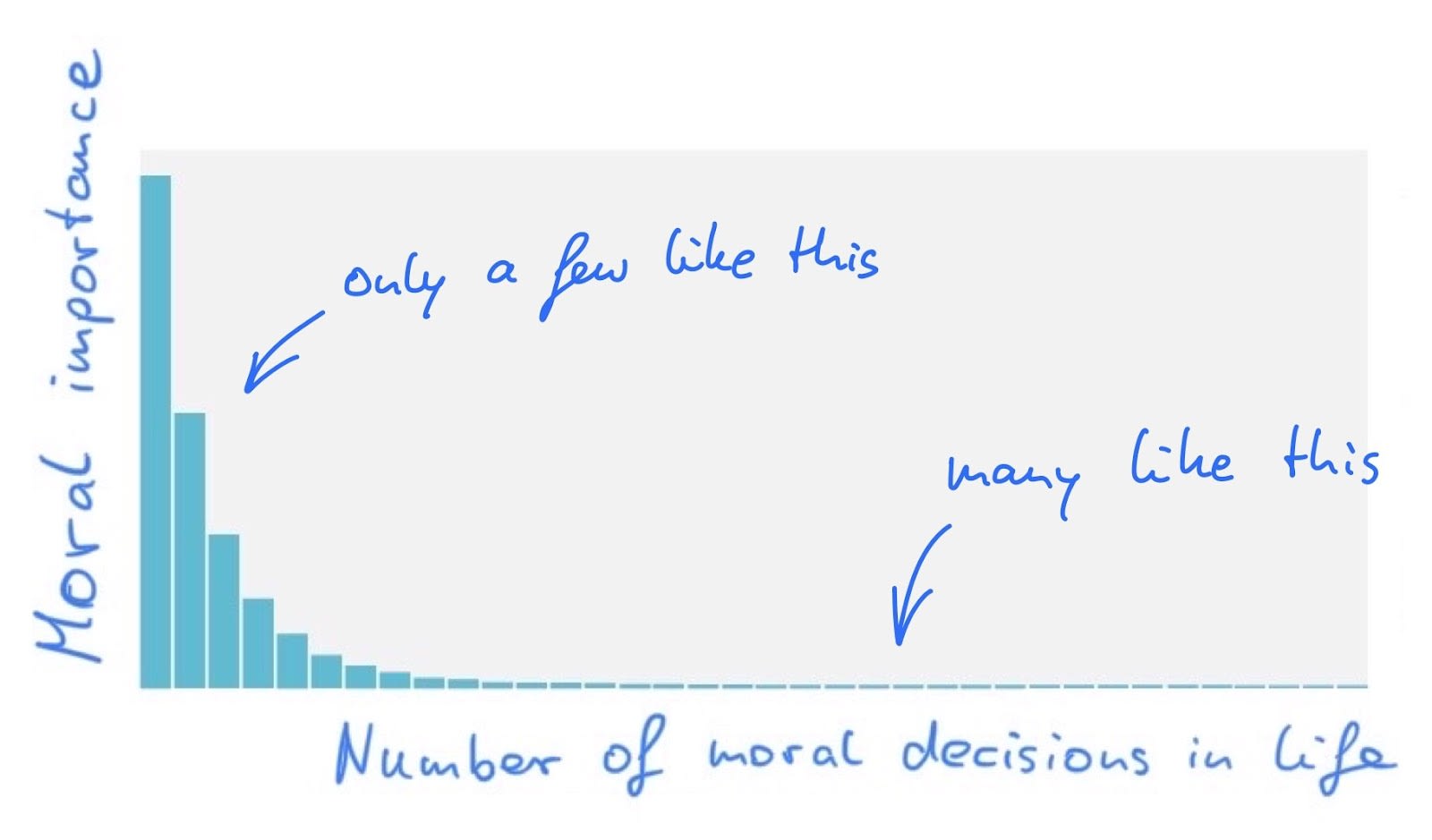

Now, here is what I mean by "all moral decisions in life are on a heavy-tailed distribution":

The intuition is that a few decisions in life carry vast moral importance, while most others matter far less.

Now, what could those crucial decisions be? 80,000 Hours argues that career choice is among them. Donating a significant amount of your lifetime earnings to effective charities could also be high up there.

I often find it helpful to think about this. If this hypothesis is true and I want to live a moral life, I need to get the big things right. This insight should lead me to put a lot of time into thinking about what the massively important decisions are and how to make better choices about them.

Most everyday decisions probably matter less, and I ought not to worry much about, e.g., whether to take the train or a plane on a specific trip. Of course, these more minor choices can add up over a lifetime, and making moral choices on them is better than doing nothing. Nonetheless, I think many people should think less about them and take seriously the possibility that a few of their judgment calls matter 1000x more than most others.
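To illustrate how a few decisions can dominate even when many small ones add up, here is a toy Python sketch. It assumes, purely for illustration, that the "moral importance" of 1,000 decisions follows a Pareto distribution with alpha ≈ 1.16 (the tail index that corresponds to an 80-20 split), and compares this with an exponential distribution. To keep the result deterministic, it uses evenly spaced quantiles instead of random draws. The numbers are not estimates of real decisions, and the function names are hypothetical.

```python
import math


def pareto_quantiles(n: int, alpha: float) -> list[float]:
    """n evenly spaced quantiles of a Pareto(x_min=1, alpha) distribution (no RNG)."""
    # Inverting the survival function u = x**(-alpha) gives x = u**(-1/alpha).
    return [((i + 0.5) / n) ** (-1.0 / alpha) for i in range(n)]


def exponential_quantiles(n: int) -> list[float]:
    """n evenly spaced quantiles of an Exponential(rate=1) distribution."""
    # Inverting the survival function u = exp(-x) gives x = -ln(u).
    return [-math.log((i + 0.5) / n) for i in range(n)]


def top_share(values: list[float], fraction: float) -> float:
    """Share of the total contributed by the largest `fraction` of the values."""
    k = max(1, int(len(values) * fraction))
    ranked = sorted(values, reverse=True)
    return sum(ranked[:k]) / sum(ranked)


decisions = 1000
alpha = 1.16  # hypothetical tail index; roughly what an "80-20" split implies
pareto_share = top_share(pareto_quantiles(decisions, alpha), 0.01)
exp_share = top_share(exponential_quantiles(decisions), 0.01)
print(f"Top 1% of decisions (Pareto): {pareto_share:.0%} of total importance")
print(f"Top 1% of decisions (exponential): {exp_share:.0%} of total importance")
```

Under these assumptions, the top 1% of decisions account for roughly a third of the total in the Pareto case but only about 6% in the exponential case, even though the remaining 99% still add up to something.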

Figure out what the big, morally important decisions are and try to get them right.

Thanks to Simon Grimm and Katja Michlbauer for their helpful comments on this post.

Appendix

- Anders Sandberg has a nice video explainer of heavy-tailed distributions.

- You may have heard of related concepts that capture a similar idea: the Pareto principle, the 80-20 rule, power laws, fat-tailed distributions, tail risk, and black swan events.

I agree that something like this is true and important.

Some related content (more here):

Thanks, I was only aware of two of these!

This is interesting, if a bit blithe about the concept of "moral importance". What does it mean? In particular, I think the potential upside and the potential downside are often asymmetric in a way that makes "importance" tricky. For example, most people's donations might have low impact either way, and donations can have a high upside but rarely a high downside. So would you define moral importance primarily by the potential upside? How does potential downside (e.g., from a career that increases AI risk by accelerating AI capabilities) factor in?

Note that this is my subjective perspective, not an objective view of morality.

Basically, if you're concerned about everyday moral decisions, you are almost certainly too worried and should stop worrying.

Focus on the big decisions for a moral life, like career choices.

You are ultimately defined morally by a few big actions, not everyday choices.

This means doing quite a bit of research to find opportunities and mostly disregarding your intuition.

On downside risk, a few interventions are almost certainly very bad, and cutting out the most toxic incentives in your life is enough. Don't fret about everyday evils.

Sorry, I don't understand the trickiness you mention in '[...] in a way that makes "importance" tricky'.

In my mind, the scale of importance is basically the expected improvement from thinking through a decision in more detail. Here, realizing that I could avoid potential harms by not accelerating AI capabilities could be a large win.

This seems to treat harms and benefits symmetrically. Where does the asymmetry enter? (Maybe I am thinking of a different importance scale?)

Thanks for posting this! I'll be sharing some of these graphs with people new to EA.