Advance note: This essay is much more personal and "content-light" than most things posted on this forum, but I was encouraged by a few people to post it anyway to communicate the human side of EA. (It’s written in the style of Nate Soares’s excellent blog.) Please be warned if you're primarily interested in the forum for intellectual content, which you can better find in my other recent post.

Brain emulations and wild animals. People in poverty and beings of the far future. What do these all have in common, and how could an effective altruist care about all of them? If you've ever answered such questions for someone new to the movement, you have endured a difficult task. (Although Michelle Hutchinson has taken on this task particularly well.) In this essay, I will make a long-winded attempt to introduce a new term, one that can answer the question of, "Who does effective altruism care about?"

---

I grew up an hour north of New York City in a town called Mahopac. On many weekends I would go camping with the Boy Scouts. I remember picking flora and smelling it as I hiked past, and staring at stars in the night sky as I lay under them. The woods were a second home.

They were also the first place I ever had the visceral experience of an expanding moral circle. On one camping trip, I came back to our site early from archery. One of the boys was there alone, roasting something over the fire on a stick. As I drew closer, I saw that the thing was moving. It was a frog. On this day it became clear to me that I cared about the welfare of non-human animals.

More recently, I walked through the redwoods with a friend, Lipa, a mathematician, philosopher of physics, and fellow lover of forests.

We descended through a narrow trail. Briefly, I peeked upward. We were surrounded by trees.

(Photo cred: Amy Willey Labenz)

I felt a tightness in my stomach. As a young Boy Scout, the woods would send me toward rapture. But today I am aware that amongst and along each of these trees, animals slaughter, starve, and suffer. Now, when I walk through woods, an odd feeling creeps in. It has three parts, one familiar, and two new: aesthetic immersion; immense sadness; and thick resolve.

Ahead, I noticed that Lipa placed her hand on each tree she passed. She touched them in the same way a matron might rest her hand on the heads of her orphans, checking in, soothing, affirming. I looked at one redwood nearby and placed my palm on the bark. It was softer than I'd imagined. For an anthropomorphic moment, there was a fleeting thought: One day, will the evidence ask me to care about the moral status of trees as well? And if that hypothetical day ever comes, will I be prepared to care?

There are estimated to be 3 trillion trees on earth. Run the thought experiment: If there were new evidence - strong enough to pass your Bayesian scrutiny - that each of these trees was sentient and subject to enormous suffering, would you be prepared to care? Even if convinced by the evidence, could you imagine yourself dropping your current career, changing your donation targets? Would you feel confident explaining to your friends and family that, "I've decided to save the trees. But not in the way you think"?

---

To quote a recent paper entitled "The Possibility of an Ongoing Moral Catastrophe":

...An inductive argument: most other societies, in history and in the world today, have been unknowingly guilty of serious wrongdoing, so ours probably is too.

For all of the intellectual and material effort effective altruism puts into expanding the moral circle, the lesson of this paper is that we should be cautious about any confidence that our circle is wide enough. And we ought not let this caution falter over time. There may exist universes of invisible harm, and beneficiaries we may remain unaware of for decades or centuries. This is why, as a community, we do the following:

...regard intellectual progress, of the sort that will allow us to find and correct our moral mistakes as soon as possible, as an urgent moral priority rather than as a mere luxury....

Indeed, this is one reason why I decided on EA movement building as the highest leverage use of my money and time. Have we chosen the best beneficiaries with existing causes? I don't know, but, provided its continued survival and high epistemic standards, I believe effective altruism's intellectual community is our best bet for finding out.

However, you can still imagine an event where arguments for a new beneficiary might fall flat. You can imagine labels like "weird" and "misguided" being attached to those who make these arguments inside the movement. And for understandable reason: the discovery of new beneficiary groups might drastically shift our focus away from current efforts, ones upon which epic altruistic careers are now being built.

As the movement evolves, how can we avoid ossification around a restricted set of beneficiaries? How can we remain cause-neutral enough to give weight to new beneficiaries as we discover them? Is effective altruism prepared for the shock of a greatly expanded moral circle?

---

At one point Lipa and I emerged into a clearing to find a massive Art Deco memorial hidden amongst the trees. It felt mythical somehow, as if it were a ruin within our time.

(Photo cred: Amy Willey Labenz)

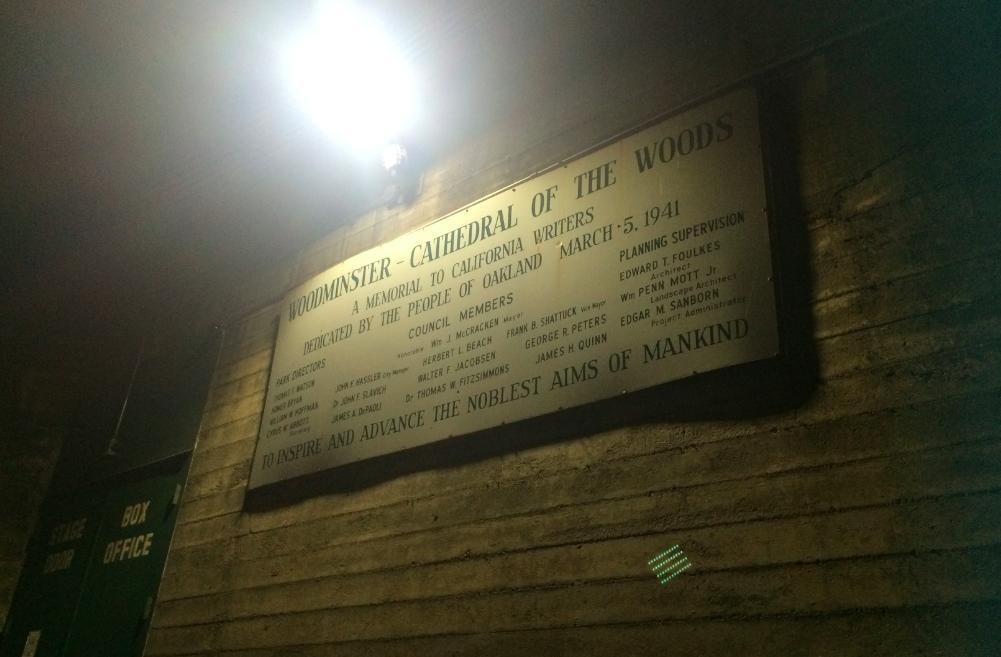

Behind it was the following sign:

We admired the bottom of the sign for some time. Then we descended the steps from the memorial. The steps trailed alongside elliptical ponds. As the sun began to set, the frogs in these ponds began to ribbit.

I asked Lipa, "Do you think they're ribbiting for the noblest aims of mankind?"

She laughed and replied, "I think they're ribbiting for the noblest aims of humankind."

"Indeed!" Then, nudging her with my elbow, "Human and animalkind."

"Hm, but what about digital minds?"

"Ah, and what if there are conscious processes in physics?"

---

If you know me well, you have already guessed how much I fell in love with the sign behind the Cathedral in the Woods. One does not often encounter such earnest idealism. I even took the CEA US team back to see it.

(Not pictured below are Julia Wise and Roxanne Heston, who are to the far, far east of the frame, in Boston and New Orleans, respectively. They were there in spirit.)

(Photo cred: Amy Willey Labenz. From the right: all-stars Amy, Kerry Vaughan, Oliver Habryka, Peter Buckley; me.)

My love of this sign notwithstanding, the scope of the word 'mankind' is narrow, as Lipa pointed out. It evokes eras when our expanding moral circle still fell short of beneficiaries like other species, and people far away from us in space or time - not to mention women. The moral progress we've made since - and that which we continue to make - is nothing short of remarkable. Yet, in all likelihood, it is short of ideal.

Linguistic categories seem to matter. In a former life, I worked in cognitive science labs and came to tentatively endorse the weak Sapir-Whorf hypothesis, the idea that "linguistic categories and usage [...] influence thought and certain kinds of non-linguistic behavior." (Wikipedia) For example:

- Russian contains two basic-level terms for blue (siniy for dark blue and goluboy for light blue), whereas English has only one. Paralleling this linguistic difference, native Russian speakers, under normal circumstances, show categorical perception effects in discriminating shades of blue while native English speakers do not. Russian speakers are more quickly able to discriminate a dark blue from a light blue.

- The Pirahã language is anumerical, having words which only map onto "few" or "many." When an experimenter asked Pirahã to put up as many fingers as pieces of fruit in front of them, their accuracy was poor after four pieces.

- Speakers of Kuuk Thaayorre use words for cardinal directions (north, south, east, and west) to describe the locations of objects in space, rather than relative spatial terms like left and right. For instance, they might ask you to pass the salt to the south-southeast of the table. As a consequence, they show profoundly good navigational abilities. (I've even read stories that a Thaayorre child can close her eyes, spin around, and correctly point toward any cardinal direction you ask, but I have been unable to verify these stories.)

There is now a thriving cognitive linguistics literature around the Sapir-Whorf hypothesis, some of which is covered by Lera Boroditsky in this Scientific American article (PDF) for those curious.

Each morning I jog with Emily Crotteau, with whom I worked on EA Global. During a recent run, Emily noted that we seem to lack words for an important class of things. That class of things is something like, "All possible entities we might lend moral significance." Or, similarly, "all the entities that the 'process' or 'algorithm' of effective altruism might eventually help." If it is the case that we are cognitively encumbered in areas we lack terms for, having an appropriate term for this class might have some moral importance. What word might fit?

---

Back between the trees, above the steps and ponds and frogs, the night set in.

"There's a word in Croatian, 'svemir,'" Lipa said. "It means 'universe,' but can be broken into the Slavic roots 'svet' - meaning 'world' - and 'mir' - meaning 'peace.' Maybe svemir could be a word to communicate 'all the beings in the universe that we wish peace unto'."

"That's beautiful," I replied. "But, 'humankind, animalkind, and svemir'? One of these things is not like the others."

We both paused and thought.

Above our heads, the stars were out. I looked up and thought about all the sentient beings that might exist, now or in times to come, near or far, far away.

"Ribbit," said the frogs of earth, demanding continued consideration.

One of us turned to the other.

"Allkind."

---

I think a word like allkind could play an important role. It would allow us to communicate that we care not about particular groups of beneficiaries, but all morally relevant groups, no matter whether they are black, white, male, female, human, nonhuman, present, future, carbon-based, or made of silicon. It may even prepare us to care about groups not described by these adjectives - ones which are today invisible to us, or too strange to accept.

But, of course, words are not enough. As these words are typed, they cannot help the billions of possible beings crying out in ways we cannot hear. It is the underlying concept of allkind which must continue to drive our action - term or none.

A salute to those who strive to inspire and advance the noblest aims of allkind.

Hi! While writing a post on LinkedIn, I naturally wanted to write "allkind" and I really thought that by now, with all of the modification in languages due to differing views on gender, ethnicity, etc., such a word would exist and be in the dictionary for the English language. And to my surprise, not. However, I found it here. I'm going to use it anyway. It's appropriate to convey the idea I want which is in the context of responsibility (in a number of contexts I mention in the post) for "allkind". Whether what I'm referring to in "allkind" is sentient or not isn't even an issue (as I read discussed in a comment made here 8 years ago). I'm not involved nor had much contact with the concept of effective altruism (I'll look it up) and I really don't care whether people are "taken aback" by the term "allkind". As you discuss I think we need to think this way and perhaps we'd have less of the problems we have in society. Anyway, thanks for indirectly helping me to decide this IS the correct term to use.

I appreciate the unusual nature of this piece, and am glad you posted it here, even though it's atypical. We as EA participants know the value of weirdness and don't automatically reject it, and I encourage others reading this piece to avoid the temptation to reject it because it's atypical.

Coming down from the meta-level to the object-level, I get what you're saying about the suffering of beings we haven't yet learned about. I think it's a quite important issue to think about. While I'm not so concerned about trees per se, I am concerned about the potential suffering of digital minds as we approach constructing an artificial intelligence, or the potential suffering of sentient aliens who we have not yet met.

As I am personally most engaged in the aspects of EA related to movement building through outreach to a broad audience, I do have some concerns about "allkind" from the perspective of its public impact. If I imagine anyone asking me "what do EAs care about" and I answer "improving the flourishing of allkind," this might put people off. I don't have any problems with the use of this word as internal EA jargon, just want to signal a potential problem with how it would look to outsiders.

Perhaps a more optimal term might be "sentience." If I imagine saying that EA members care about "improving the flourishing of sentience," it wouldn't really put people off, and it conveys the same idea as "allkind" - i.e., if we discover trees have sentience, then we would care about them. Sentience also applies to both animals and future beings, as well as digital minds and aliens. Sentience is also more measurable and quantifiable than "allkind" - i.e., some beings might have more or less sentience and experience more or less suffering. This would be relevant to prioritizing and quantifying various efforts aimed at reducing suffering.