Many people in the effective altruism (EA) community hope to make the world better by influencing events in the far future. But if the future can potentially contain an infinite number of lives, and infinite total moral value, we face major challenges in understanding the impact of our actions and justifying any particular strategy. In this academic session, Hayden Wilkinson discusses how we might be able to face these challenges — even if it means abandoning some of our moral principles in the process.

Below is a transcript of Hayden’s talk, which we have lightly edited for clarity. You can also watch it on YouTube or read it on effectivealtruism.org.

The Talk

To start, imagine that you can either save one person or five people who are tied to trolley tracks. You can stop only one trolley. This is analogous to either donating to one charity, which will save some people, or another charity, which will save many.

Most effective altruists would choose to save five people. Why? Many of us would say that saving five is a better outcome because it contains more moral value. And in this case, with no other considerations present, we ought to bring about the better outcome — or at least we'd prefer to.

But does that justification work? I'm going to argue that it might not when we live in a universe that's chaotic and infinite. You may have heard of the problems of cluelessness [our inability to predict the consequences of our actions] and infinite ethics [the idea that if the universe is infinite, there is an infinite amount of happiness and sadness that will remain unchanged by acts of altruism, making all such acts equally ineffective].

Each of these problems has some proposed solutions, but together they are much harder to solve.

First, our universe is chaotic. In many of its dynamic systems, small changes have large, unpredictable, lasting effects. In the case of saving one person or five people, our decision would have major effects on the future, such as identity effects.

Each person you save may go on to have many generations of descendants who wouldn’t exist otherwise. Those people will affect the world around them, making it better or worse. They might even completely divert world history. We can't predict this. It's way too chaotic.

Even if their lineage doesn't extend forever, they'll change which children other people have.

Delay a child's conception by a split second and a different sperm fertilizes the egg, with very different DNA. Hold someone up by even a second when they're on their way home to conceive a child, and you change the child's identity and that of all their descendants. Those people you save will do this countless times in their lives, as will their children, so there'll be even more unpredictable identity-altering events.

Another classic chaotic system is the Earth's atmosphere, which is affected by having an extra person walking about.

You may have heard of the butterfly effect, a term coined by Edward Lorenz, who claimed that the flap of a butterfly's wings in Brazil can set off a tornado in Texas. It's dubious whether a butterfly's wings could actually overcome the viscosity of air to have that effect, but the CO2 emissions from your daily commute certainly do. So does every human breath, which releases 140 times as much kinetic energy as the flap of a butterfly's wings.

If you save some people rather than others, they'll have a whole lifetime of breaths, CO2 emissions, and so on. This can change weather patterns for millennia, making future lives better or worse.

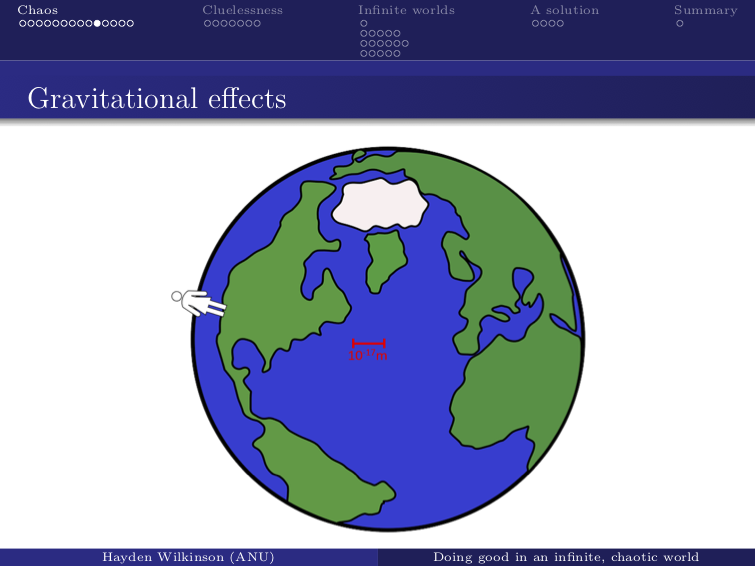

Also, human bodies produce a gravitational field.

When we move our bodies, that field changes. If I save someone's life, their body continues to move around — at most, from one side of the world to the other.

This changes the distribution of mass on Earth, and so changes the force exerted on Earth by other planets due to something called tidal forces. It's as though Earth changes mass by up to six milligrams.

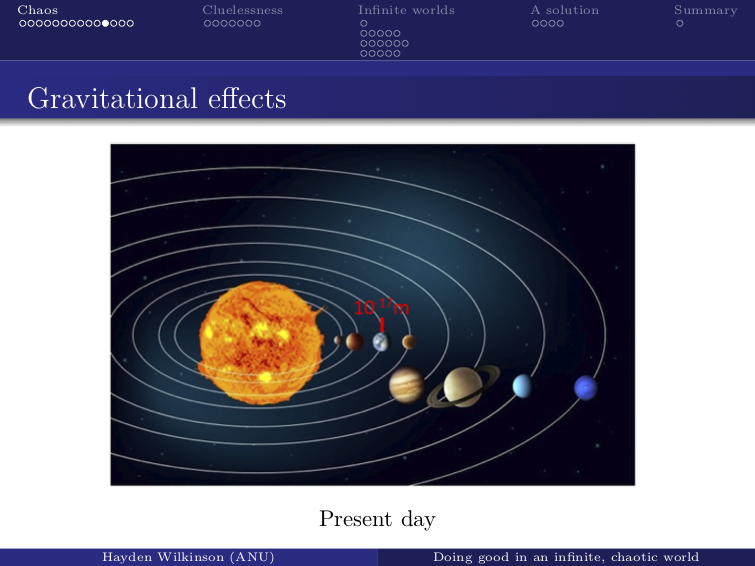

This changes our acceleration and, therefore, our position as well. Over one day, this typically moves us by a tiny amount. But Earth is part of a larger gravitational system.

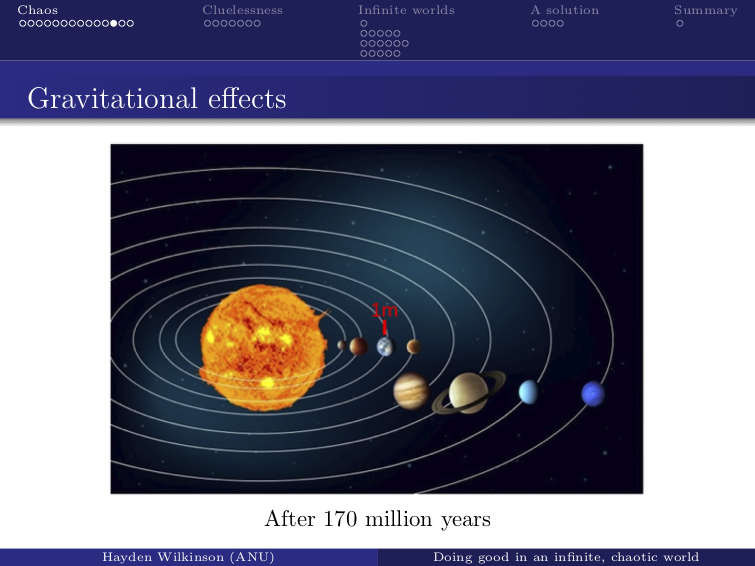

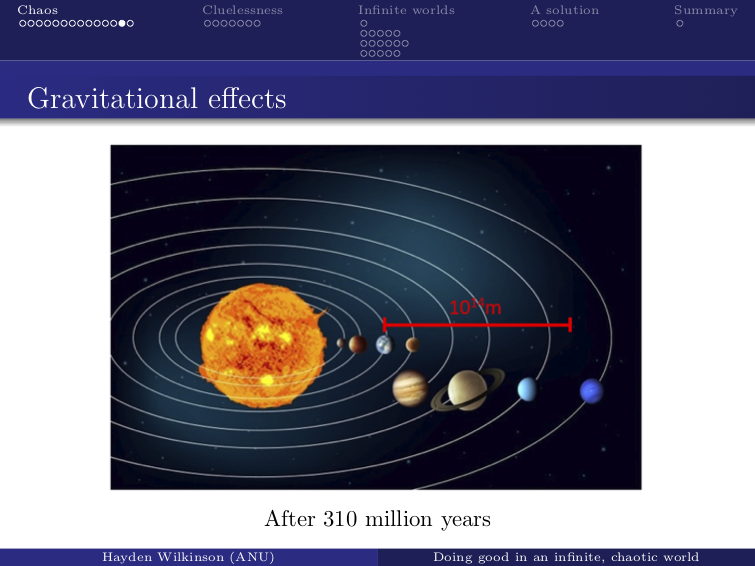

For three or more objects, this gets chaotic, creating what is known as the three-body problem. A change that's small now will typically lead to a change of about a meter after 170 million years.

That’s still not very far, but in 300 million years, as it keeps growing, it becomes a change of a billion kilometers — about the distance of Saturn from the sun.
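As a rough check on how fast such a perturbation grows, the two figures quoted here (about 1 meter after 170 million years, and about a billion kilometers after 300 million years) can be turned into an implied e-folding time and doubling time. This is a back-of-the-envelope sketch that assumes the growth between those two points is exponential; it is not a simulation of the solar system.

```python
import math

# Figures quoted in the talk; exponential growth between them is our assumption.
t1_myr, d1_m = 170.0, 1.0        # ~1 m after 170 million years
t2_myr, d2_m = 300.0, 1.0e12     # ~1 billion km (1e12 m) after 300 million years

growth_factor = d2_m / d1_m      # the perturbation grows by a factor of 1e12
elapsed_myr = t2_myr - t1_myr    # over 130 million years

e_folding_myr = elapsed_myr / math.log(growth_factor)   # time to grow by a factor of e
doubling_myr = elapsed_myr / math.log2(growth_factor)   # time to grow by a factor of 2

print(f"e-folding time ~ {e_folding_myr:.1f} Myr, doubling time ~ {doubling_myr:.1f} Myr")
```

The implied e-folding time is roughly 4.7 million years, in the same range as the few-million-year Lyapunov times commonly reported for the inner solar system.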

This will have somewhat of an effect on life on Earth, especially through the climate. Just 1,000 kilometers would affect the climate as much as all human-induced warming to date.

The solar system isn't the only n-body system we're in. If you change the positions of bodies here, then over a long enough time horizon you affect other star systems, too. So even if humanity ends or relocates far from here, our actions still affect future life.

There are plenty of other n-body systems. Note that almost all of these effects arise not just when we save lives, but in every decision we ever make, including whether or not to take a breath.

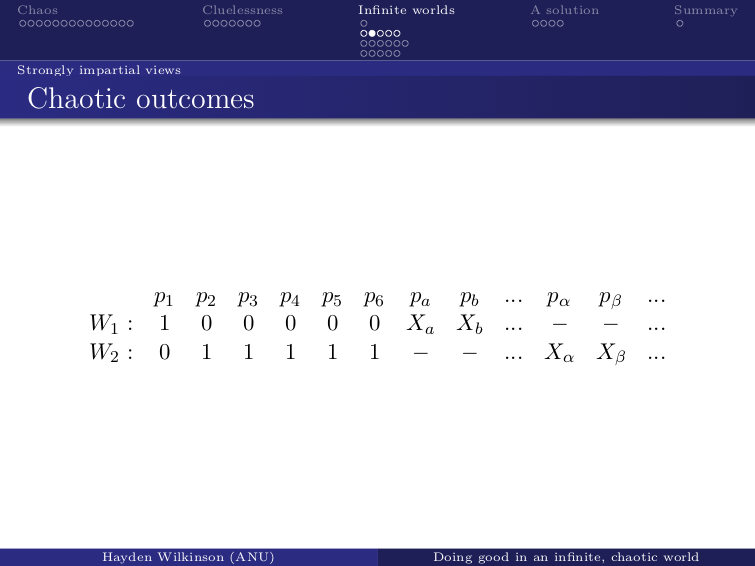

But why is this a problem? Take the case of saving one life versus five lives. We can represent it like this.

The outcomes we could produce are “World 1” and “World 2.” Each contains some value at “time one” (t1) proportional to one or five happy lives.

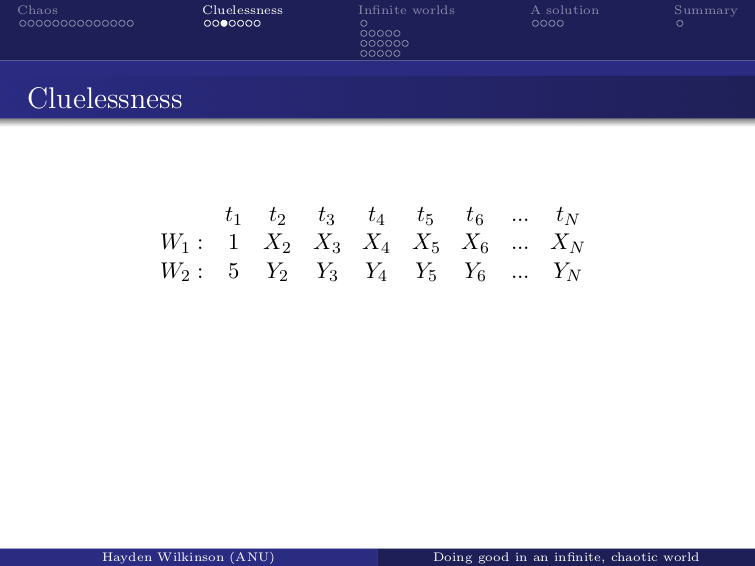

But think about the longer-term effects up to some final time, [designated as] tN. At later times, the value is unpredictable. From our perspective, it's random, so we represent it with independent random variables: X and Y.

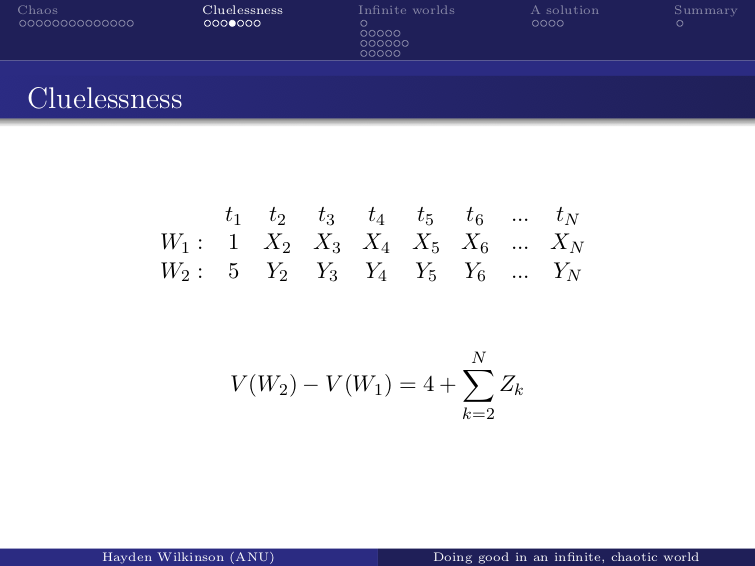

We care about which outcome has the greater total value over all times. We can check this by taking the difference in value between outcomes over all times and seeing if it's positive or not.

We can replace the difference between the outcomes at each time with a single variable. These variables will be independent and identically distributed with an expected value of zero, which means that, in this model, the running total of the differences forms what's called a symmetric one-dimensional random walk.

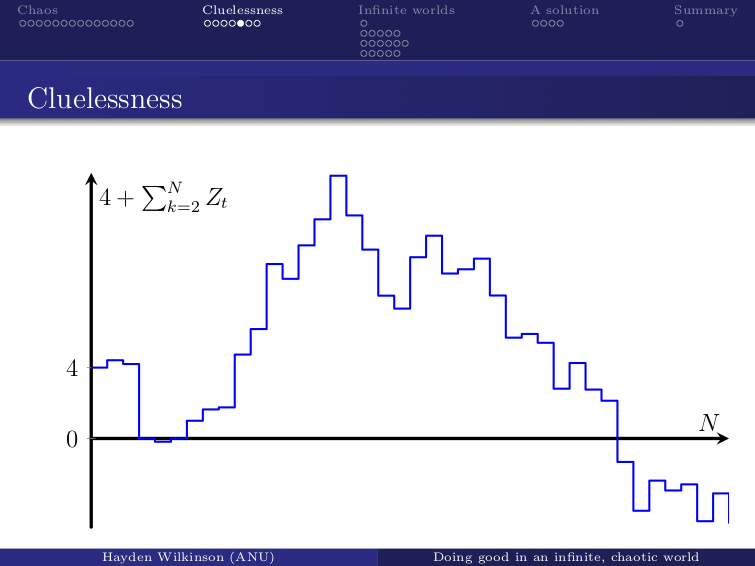

As we add up all the way to tN, the sum will always look something like this. You may see the problem now. A random walk like this is always liable to come back to zero again. For any large N (any long time horizon), the chance of it ending above zero rather than below is close to 50-50.

We could save one million lives, and still, an outcome will only be better if the line ends up above zero. Therefore, the odds of whether one action will turn out better than another, in every moral decision we ever make, are always 50-50. That’s the cluelessness problem. As [philosophy professor] Hilary Greaves puts it, "For any acts, we can't have the faintest idea which will have a better outcome."
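A small simulation can illustrate the 50-50 claim. It is a sketch under simplifying assumptions of our own: the known head start from saving five rather than one is a fixed initial lead of 4, and each later period adds an independent difference of +1 or -1 with equal probability, standing in for the unpredictable variables. The function name and parameters are hypothetical.

```python
import random

def prob_world_ahead(initial_lead: int, horizon: int, trials: int = 4000, seed: int = 0) -> float:
    """Estimate the probability that the running total of differences ends above zero.

    initial_lead: the known difference at t1 (e.g. 5 - 1 = 4 extra lives saved).
    horizon: number of later periods, each adding an independent +1 or -1 step.
    """
    rng = random.Random(seed)
    ahead = 0
    for _ in range(trials):
        # Count the +1 steps among `horizon` fair coin flips, drawn as random bits.
        ups = bin(rng.getrandbits(horizon)).count("1")
        total = initial_lead + ups - (horizon - ups)
        if total > 0:
            ahead += 1
    return ahead / trials

for n in (10, 1_000, 100_000):
    print(f"horizon {n:>7}: P(saving five ends ahead) ~ {prob_world_ahead(4, n):.3f}")
```

With a short horizon the head start dominates, but as the horizon grows the estimate drifts toward one half: the head start washes out.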

That seems bad, to put it mildly. We think we should save five people rather than one person, and we think we should contribute to effective causes because it does more good. But actually, we have no idea whether it does more good. It's just as likely to make things worse.

But don't get disheartened. This is a problem for “objective betterness.” As Greaves puts it, “The same worry doesn't arise for subjective betterness.” We can say that an action is subjectively better if, given our uncertainty and the probabilities of different outcomes, it has a higher expected value.

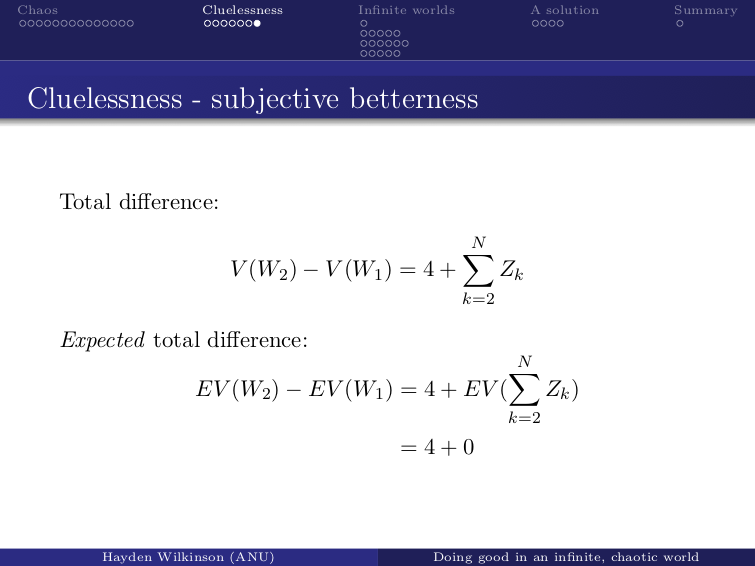

So the difference between Worlds 1 and 2 was this:

And the expected difference is this:

The random variables have an expected value of zero, and they disappear. So it's positive: saving five people is better. Hooray! We still have reason to save people. But we can only assign these expected values when all possible outcomes are comparable. We don't know which outcome will turn out better, but we know that one of them will, and we can average out how good they are.
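Written out, the difference and its expectation look like this. The notation is ours, not the talk's: v is the value of one happy life at t1, X_t and Y_t are the random values at a later time t in the one-life and five-life outcomes, and Z_t = Y_t - X_t, assumed (as above) to have expected value zero.

$$
\Delta = (5v - v) + \sum_{t=2}^{N} \left(Y_t - X_t\right) = 4v + \sum_{t=2}^{N} Z_t
$$

$$
\mathbb{E}[\Delta] = 4v + \sum_{t=2}^{N} \mathbb{E}[Z_t] = 4v > 0
$$

However large N is, every expected Z term is zero, so the expected difference stays at the known head start.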

Here's a second problem: Our universe could be infinite. Some leading theories of cosmology state that we face an infinite future containing infinite instances of every physical phenomenon, including those we care about, like happy human beings.

This makes it hard to compare outcomes. The total value in the world will be infinite or undefined no matter what we do; therefore, we can't say that any outcome is better than another.

Thankfully, a few methods have been proposed that uphold our finite judgments, even when the future is infinite. I'll categorize these as “strongly impartial views,” “weakly impartial views,” and “position-dependent views.” But all of them are problematic when the world's chaotic.

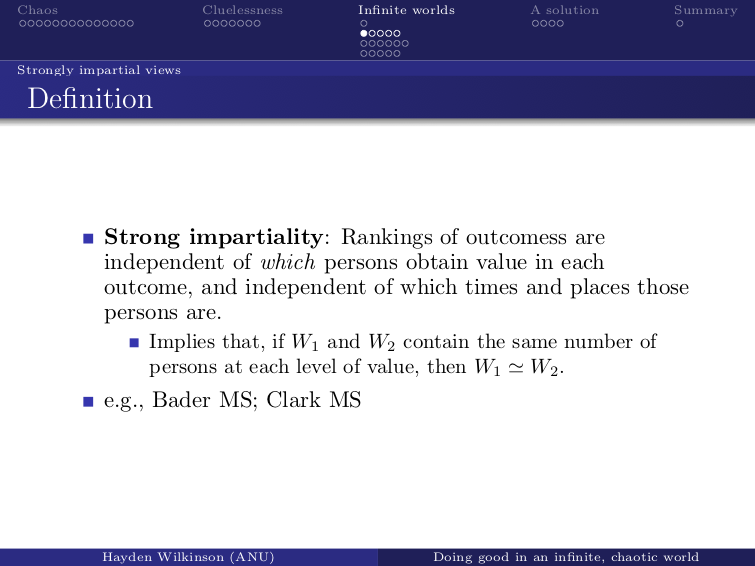

With strongly impartial views, the rankings of outcomes are independent of which persons obtain value in each outcome — and independent of their times and places.

(I'll also assume from here on that the comparisons are independent of other qualitative features like people's hair color, since those seem even less relevant than their position in time and space.)

This seems plausible. With finite populations, it doesn't matter who attains value or where those people are. All that matters is how many people there are at each level of value. So we'd certainly accept the implication that if two outcomes have the same number of persons at each level of value, then the outcomes are equally good. Views like this are held by the philosophers Ralph Bader and Matt Clark.

But strongly impartial views give strange verdicts in a chaotic universe. Here are the outcomes for saving one person versus five people, organized by [person, or p] instead of by the [times they live in].

In World 1, one person is saved at the start. In World 2, five [are saved], but the values for each person are random since we can't predict them. There are also different people existing in the future — for example, persons Xa, Xb, and so on — but there are an infinite number of them in each outcome.

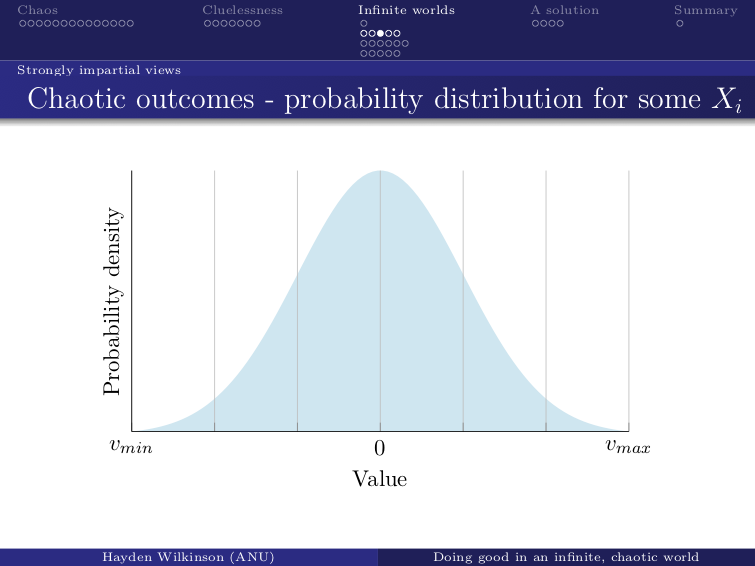

Let’s take a closer look at these Xs. Each Xi has a probability distribution like [the one below], although they need not be identical:

Since we're clueless about the future, each person who does exist has some non-zero probability of any level of value between some minimum and maximum — however much a human life can [accommodate]. But there are infinite persons with distributions like this, so this is what the outcome as a whole looks like:

There’s an infinite number of people at every level of value, with the same infinite cardinality at each level. Remember: strongly impartial views only [reflect] the number of people at each value level, and that is all the information shown here. Every outcome will look exactly the same, since they'll all have infinitely many X variables. They're identical in every way that matters. So on any strongly impartial view, all outcomes are equally good. No action we ever take will produce a better outcome than any other. This holds true for expected values too. We never have any reason to save any number of people.
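To make this concrete, here is a sketch of the only information a strongly impartial view consults: how many persons sit at each value level. The assumptions are ours: value comes in a few discrete levels, and infinitely many future persons each have some minimum chance of landing on every level, so every level ends up holding infinitely many persons, represented here by `math.inf`. `value_profile` and `LEVELS` are hypothetical names.

```python
import math
from collections import Counter

LEVELS = range(-3, 4)  # hypothetical discrete value levels, from the minimum (-3) to the maximum (3)

def value_profile(present_values, infinite_future=True):
    """Map each value level to the number of persons at that level.

    present_values: values of the persons whose fates we can predict (e.g. those saved now).
    infinite_future: if True, add infinitely many future persons at every level (assumption).
    """
    counts = Counter(present_values)
    extra = math.inf if infinite_future else 0
    # A finite count plus math.inf is math.inf, so the saved lives vanish from the profile.
    return {level: counts[level] + extra for level in LEVELS}

save_one = value_profile([2])        # one person saved, at value level 2
save_five = value_profile([2] * 5)   # five people saved, at value level 2
print(save_one == save_five)         # True: every level holds math.inf persons in both outcomes
```

Dropping the infinite future (`infinite_future=False`) makes the two profiles differ, which is why strong impartiality is harmless in the finite case.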

That seems implausible. I think we should reject strongly impartial views; we must, if we are to be effective altruists.

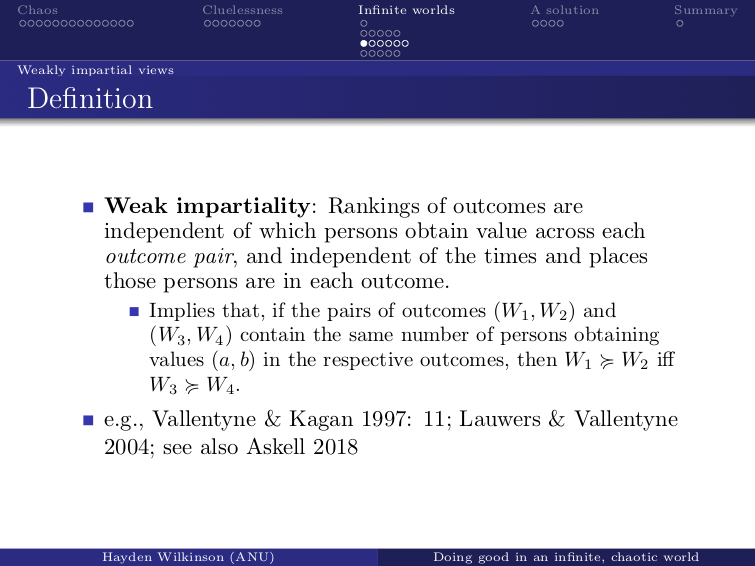

With weakly impartial views, rankings of outcomes are independent of which persons obtain value across each outcome pair, and, as with strongly impartial views, are independent of people’s times and places. This is subtly different.

If we have two pairs of outcomes (Worlds 1 and 2, and Worlds 3 and 4), and both pairs contain the same number of people obtaining values (a, b) in the respective outcomes, then we compare those pairs of outcomes the same way: World 1 is at least as good as World 2 exactly when World 3 is at least as good as World 4.

This is weaker than strong impartiality. Outcomes don't need to be equally good just because they have the same number at the same values. But two outcome pairs need to be compared the same way without regard for who is in the pair. This seems super plausible. To violate it, our method would have to make reference to specific people — for example, an outcome is better if it is better for Obama.

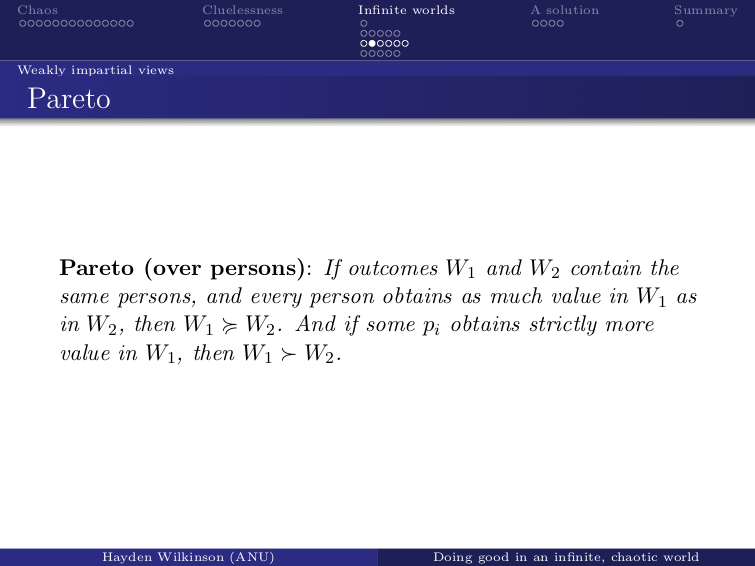

One basic starting principle, which is weakly but not strongly impartial, is Pareto.

According to Pareto, if Worlds 1 and 2 contain the same persons and everyone gets at least as much value in World 1 as in World 2, then World 1 is at least as good as World 2. And if, in addition, someone gets more value in World 1, then World 1 wins: it is strictly better.

So if we change nothing about the world except we make a few people better off — or many people better off — that's an improvement. That seems super plausible. If all I'm doing is helping someone, and there are no other effects, then it had better be an improvement. For brevity, I'm just going to assume that any plausible view that's weakly but not strongly impartial satisfies this. (In the literature, they all do.)
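A minimal sketch of the Pareto comparison, assuming each outcome is given as a mapping from person to value (the function names and example persons are ours):

```python
from typing import Mapping

def pareto_at_least_as_good(w1: Mapping[str, float], w2: Mapping[str, float]) -> bool:
    """True if both outcomes contain the same persons and nobody is worse off in w1."""
    if set(w1) != set(w2):
        raise ValueError("Pareto only compares outcomes containing the same persons")
    return all(w1[p] >= w2[p] for p in w1)

def pareto_better(w1: Mapping[str, float], w2: Mapping[str, float]) -> bool:
    """True if w1 is at least as good by Pareto and someone is strictly better off in w1."""
    return pareto_at_least_as_good(w1, w2) and any(w1[p] > w2[p] for p in w1)

before = {"alice": 1.0, "bob": 1.0}
after = {"alice": 1.0, "bob": 2.0}  # only bob is helped; nothing else changes
print(pareto_better(after, before), pareto_better(before, after))  # True False
```

The `ValueError` reflects that Pareto only ranks outcomes that contain the same persons.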

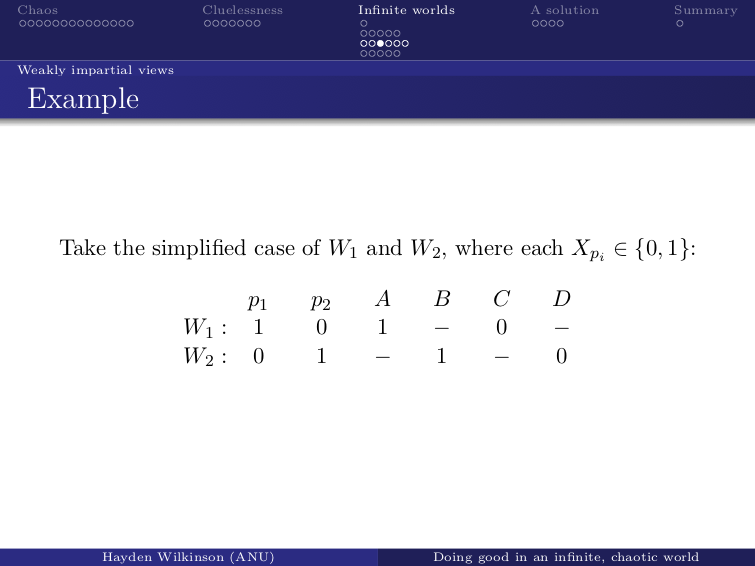

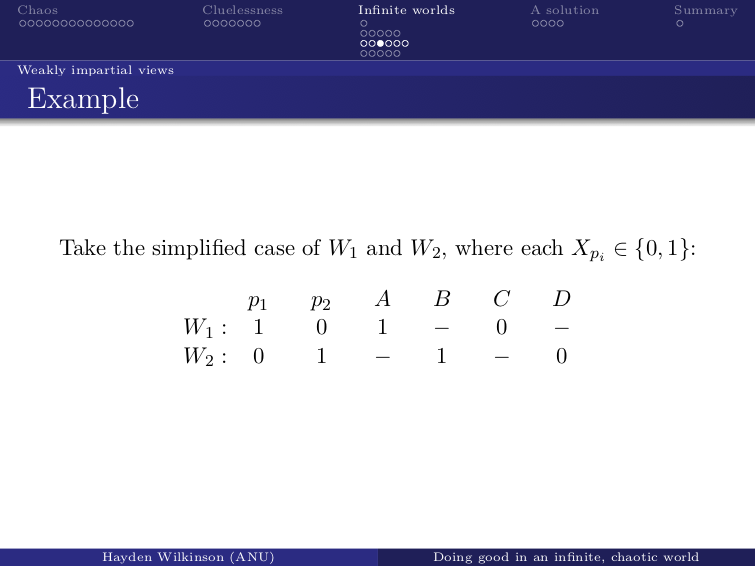

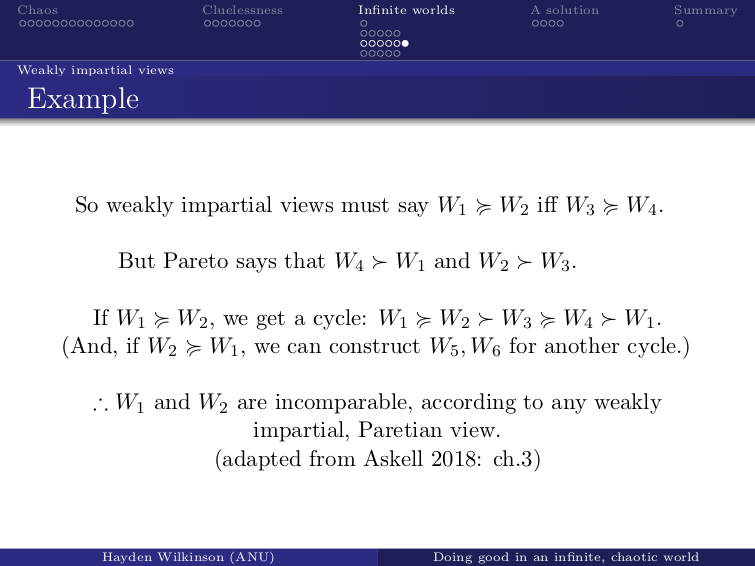

But there's a problem when we're in a chaotic universe. Take this very simple example:

We save one person, p1, or another. That changes which infinite set of people exists in the future (A and C, or B and D), and those people attain random values (for simplicity’s sake, let’s say one or zero). And we can construct another outcome pair. Start with World 4, which looks like this:

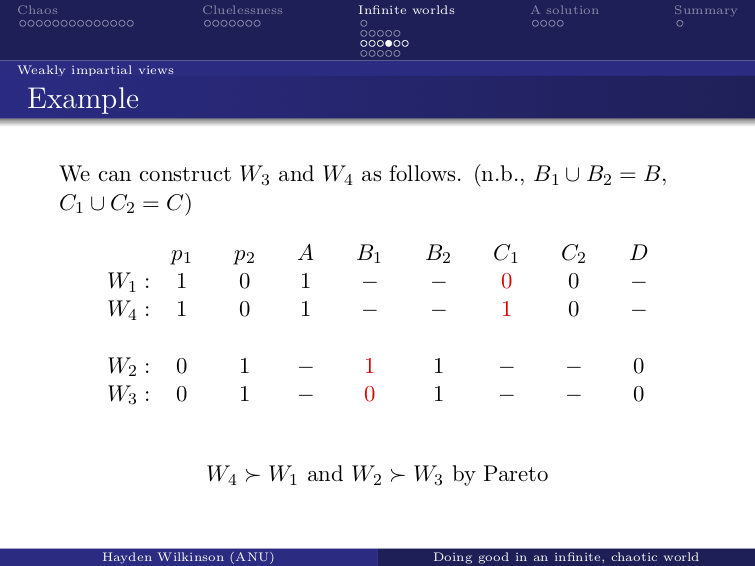

It's the same as World 1, but half of the C group, which I've split, are better off. World 4 is strictly better than World 1; it Pareto-dominates it. World 3 is the same as World 2, but some people in the B group are worse off. Again, Pareto says that World 2 is better.

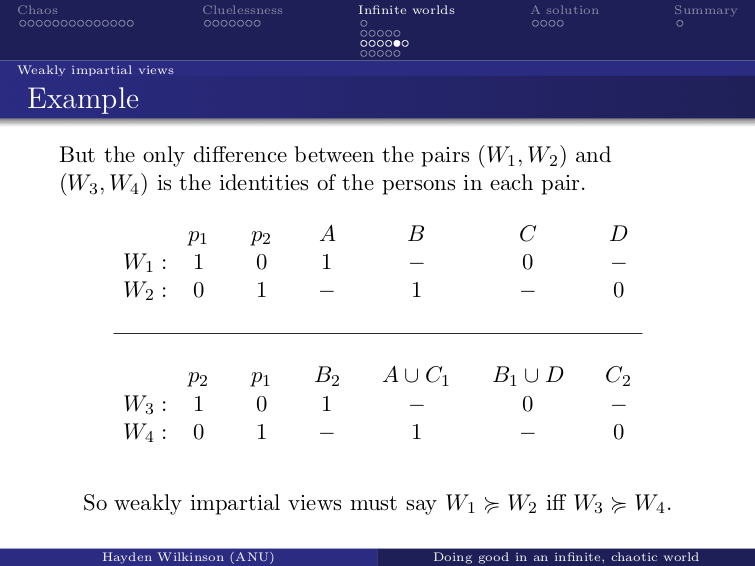

But take another look at these outcomes. We can rearrange them. These pairs, World 1 and 2, and World 3 and 4, have the same number of people in them.

If we're even weakly impartial, there's no relevant difference between the two pairs, so we must compare them the same way. Now, if we say World 1 is at least as good as World 2, we get a cycle. Assuming transitivity, that's a contradiction: World 1 is better than itself. That's nuts. Likewise, if we say World 2 is at least as good, then we can generate a [similar] cycle. So we can't say that World 1 is at least as good, nor that World 2 is.
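The case where World 1 is ranked at least as good as World 2 can be set out step by step. The notation is ours: $\succeq$ means “at least as good as” and $\succ$ means “better than.” Pareto supplies the two strict steps, weak impartiality links the two pairs, and transitivity closes the cycle.

$$
\begin{aligned}
&W_4 \succ W_1 \quad\text{and}\quad W_2 \succ W_3 && \text{(Pareto)}\\
&W_1 \succeq W_2 \iff W_3 \succeq W_4 && \text{(weak impartiality)}\\
&\text{Suppose } W_1 \succeq W_2.\ \text{Then } W_1 \succeq W_2 \succ W_3 \succeq W_4 \succ W_1, && \\
&\text{so } W_1 \succ W_1 && \text{(transitivity), a contradiction.}
\end{aligned}
$$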

They're incomparable on any weakly impartial view that satisfies Pareto. And we can run this four-world argument, which I've adapted from Amanda Askell (full credit goes to her), on any realistic pair of outcomes in which our actions have chaotic effects. It gets more complicated, but it still works.

This also holds if we ditch Pareto, but that takes a few extra empirical assumptions, which I won't address here. Where does that leave us?

Well, neither outcome is better than the other, so we have no reason to make either happen, just like before. This carries over to expected values too, which will be undefined. So we're left with no reasons to make the world better. But that seems wrong again. I think we should reject these views as well.

That leaves us with position-dependent views, which make comparisons that are (at least sometimes) dependent on where value is positioned in spacetime, even when the outcomes contain the same persons, who obtain the same values.

These views are pretty common, including among economists. One example is discounting the future at some constant rate, which can solve the problem, but that places a different weight on different generations, which is morally kind of awful. Sorry, economists!

Discounting isn't the only option. There are position-dependent views that give everyone equal weight. Why would we adopt these when it seems morally irrelevant where people are in spacetime? Well, look what happened when we didn't [adopt them]. Even weakly impartial views gave us incomparability everywhere. Weak impartiality is just the denial of dependence on position and on other qualitative properties that, like hair color, would seem even less relevant. (As a side note, using those other properties led us to say that all outcomes are equally good: another terrible option.)

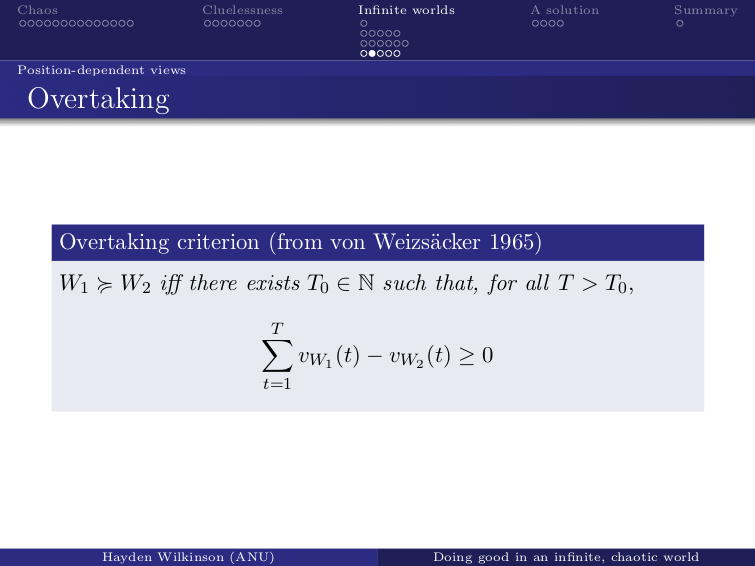

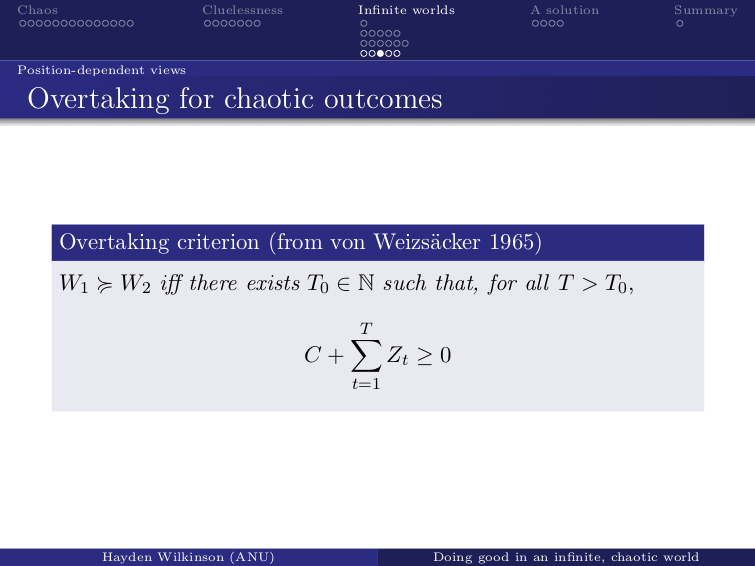

We need to deny weak impartiality, and the least bad way to do it is with position-dependence. But as it turns out, that's not enough. Here's one basic rule, called overtaking: World 1 is at least as good as World 2 if and only if there is some time after which the running sum of the differences in value between World 1 and World 2 is always positive or zero.

In effect, we're adding up all of the value, in order, based on time. We keep track of which outcome is in the lead [based on] whether the difference [between worlds] is positive or negative. If there's some time after which World 1 is always in the lead, then it's a better outcome.
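A sketch of the overtaking check on a finite stretch of time, assuming the per-period value differences (World 1 minus World 2) are given as a list. A finite prefix can only suggest, never establish, that the lead lasts forever: overtaking needs the returned period to exist and to stay fixed as the horizon is extended without limit. `lead_settles_at` is a hypothetical helper.

```python
from itertools import accumulate
from typing import Optional, Sequence

def lead_settles_at(diffs: Sequence[float]) -> Optional[int]:
    """Earliest period from which World 1's running total stays >= 0 through the end of `diffs`.

    diffs[t] is the value difference (World 1 minus World 2) in period t.
    Returns None if World 1 is behind at the final period (or there are no periods).
    """
    running = list(accumulate(diffs))
    if not running or running[-1] < 0:
        return None
    t = len(running) - 1
    # Walk backwards while the running total is still non-negative.
    while t > 0 and running[t - 1] >= 0:
        t -= 1
    return t

print(lead_settles_at([-1, -1, 3, 1, 1]))         # 2: World 1 takes the lead in period 2
print(lead_settles_at([4, -1, -1, -1, -1, -1]))   # None: World 1 is behind at the end
```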

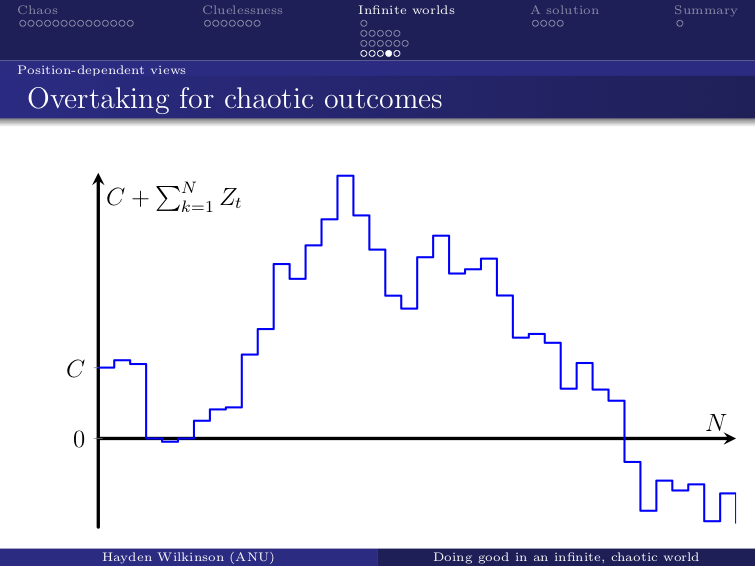

Remember: Due to the cluelessness problem, this difference will mostly be random. There will be some constant value at first, like how many extra lives we save, followed by a series of random variables (Z). Remember that this sum of differences formed a symmetric random walk. As before, this walk will always come back to zero, again and again, infinitely many times. It's what is called recurrent.
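Recurrence can be glimpsed in simulation: starting from a head start of 4, a fair +1/-1 walk keeps revisiting zero, and the number of visits keeps growing as the horizon lengthens. This illustrates the claim under the same simplified model; it is not a proof, and the helper name is hypothetical.

```python
import random

def visits_to_zero(initial_lead: int, horizon: int, seed: int = 1) -> int:
    """Count how often the running difference equals zero within `horizon` fair +1/-1 steps."""
    rng = random.Random(seed)
    total, visits = initial_lead, 0
    for _ in range(horizon):
        total += 1 if rng.random() < 0.5 else -1
        if total == 0:
            visits += 1
    return visits

# Same seed, so each longer run extends the shorter one and the counts can only grow.
for n in (1_000, 10_000, 200_000):
    print(f"{n:>7} steps: {visits_to_zero(4, n)} visits to zero")
```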

But this sum is the difference between outcomes, and if it always comes back to zero, neither outcome keeps the lead forever. The overtaking criterion is never satisfied; it leaves every pair of outcomes incomparable. Most of the other position-dependent views give the same result for very similar reasons. Most (but not all) of them just can't deal with a chaotic universe.

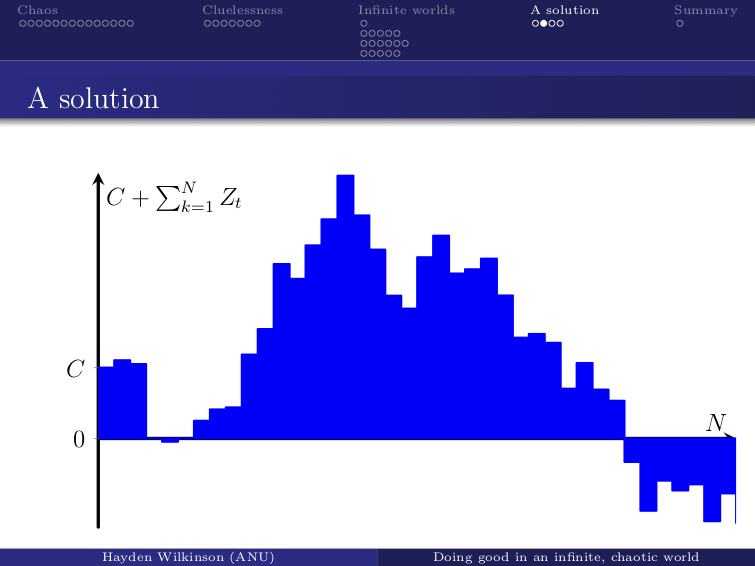

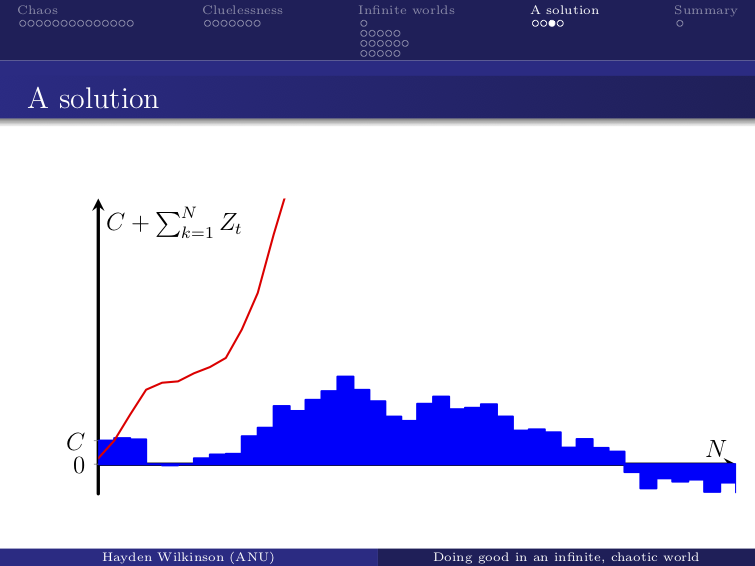

This graph shows how much better one outcome is than the other as we sum the value over time. It might keep going back to zero, but what if it spends most of its time above zero, as it does here?

If we randomly select a time to stop counting, the total at the cutoff point is more likely to be positive than negative. The expected total at a random cutoff is proportional to the signed area under the curve, with area above zero counting as positive and area below zero as negative. If the walk is positive, by a large amount, most of the time, then the area is going to be positive too.
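The link between a random cutoff and the area under the curve can be checked on a short, made-up sequence of differences: if the cutoff period is drawn uniformly from the N periods, the expected running total equals the sum of the running totals (the signed area) divided by N. The helper and the numbers are illustrative only.

```python
from itertools import accumulate

def expected_total_at_random_cutoff(diffs):
    """Expected running total when the cutoff period is uniform over len(diffs) periods."""
    running = list(accumulate(diffs))
    signed_area = sum(running)  # area under the running-total curve; dips below zero count as negative
    return signed_area / len(running)

diffs = [4, -1, -1, -1, -1, -1]  # running totals: 4, 3, 2, 1, 0, -1
print(expected_total_at_random_cutoff(diffs))  # 1.5
```

Here the walk ends below zero, yet it spends most of its time above zero, so the expected total at a random cutoff is positive.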

Random, chaotic walks like this have a nice property of definitely spending more time either above or below zero as time approaches infinity; the area is guaranteed to diverge to positive or negative infinity.
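The tendency of a symmetric walk to spend most of its time on one side is described by a standard result, the arcsine law: the fraction of time spent above zero is more likely to be near 0 or near 1 than near one half. For the limiting arcsine distribution, about 41% of walks spend less than 10% or more than 90% of their time ahead, while only about 6% spend between 45% and 55%. A quick simulation under the same fair +1/-1 model shows the pattern; the helper name, horizon, and sample size are arbitrary choices.

```python
import random

def fraction_of_time_ahead(horizon: int, rng: random.Random) -> float:
    """Fraction of steps at which a fair +1/-1 walk started at zero is strictly above zero."""
    total, ahead = 0, 0
    for _ in range(horizon):
        total += 1 if rng.random() < 0.5 else -1
        if total > 0:
            ahead += 1
    return ahead / horizon

rng = random.Random(42)
samples = [fraction_of_time_ahead(1_000, rng) for _ in range(1_000)]
extreme = sum(f < 0.1 or f > 0.9 for f in samples) / len(samples)
middle = sum(0.45 <= f <= 0.55 for f in samples) / len(samples)
print(f"walks ahead <10% or >90% of the time: {extreme:.2f}")
print(f"walks ahead 45-55% of the time:       {middle:.2f}")
```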

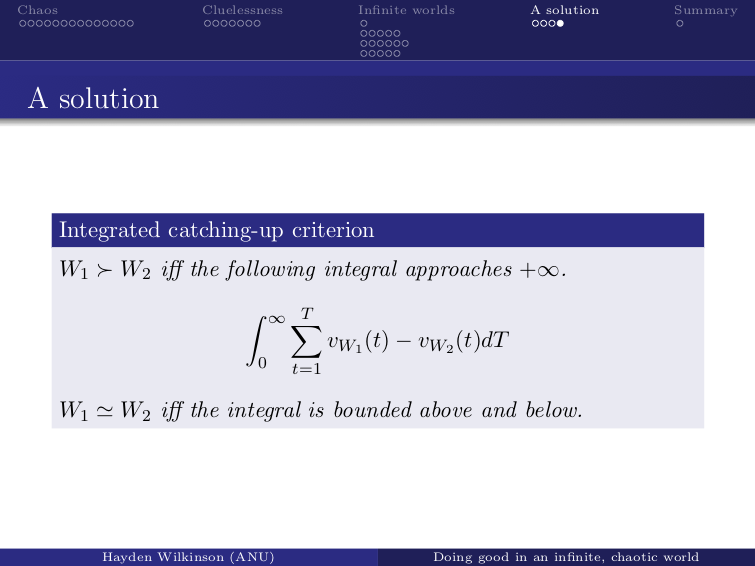

Therefore, we can use this area or expected total to say something like this, which is complicated, so I won't go into it too much:

If that integral diverges to positive infinity, World 1 is better. That's guaranteed to hold for chaotic outcomes like ours. We don't get incomparability anymore, so the problem is solved.
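In discrete time, and in notation of our own rather than the talk's, the criterion can be sketched like this: $d_t$ is the difference in value between World 1 and World 2 at time $t$, $S_n$ is the running total, and $A_N$ is the accumulated signed area up to time $N$.

$$
S_n = \sum_{t=1}^{n} d_t, \qquad
A_N = \sum_{n=1}^{N} S_n, \qquad
W_1 \succ W_2 \ \text{ if } \ \lim_{N \to \infty} A_N = +\infty .
$$

Dividing $A_N$ by $N$ gives the expected running total at a cutoff drawn uniformly from the first $N$ periods.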

Of course, we're still uncertain. In the long run, the walk could end up mostly positive or mostly negative, and the area could diverge either way. No matter how well it starts off, it's 50-50 which way it'll go, so we're still clueless about which action will turn out better. But at least the outcomes are comparable, which means we can compute expected values and say which actions are subjectively better, as in the finite case. We're back to where we started, which is better than where we were.

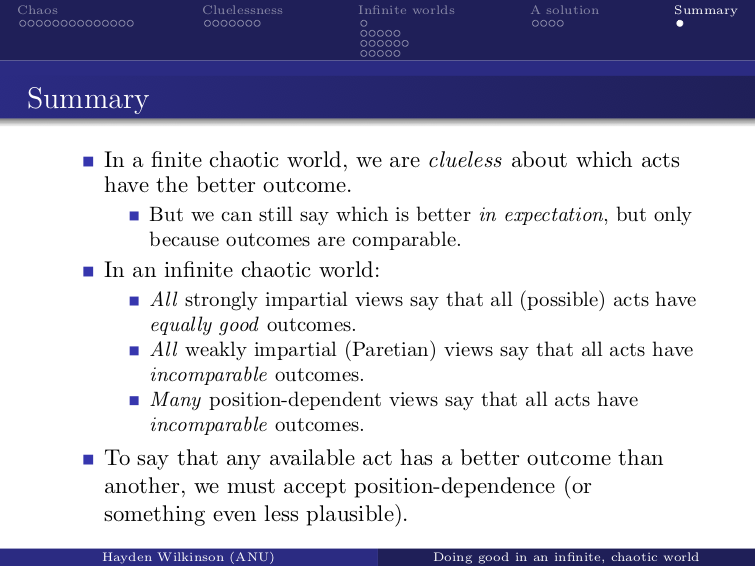

In summary, if you were to take an aggregative view of betterness in a finite world, you'd be clueless about which action will turn out best. But we can still say what's better in expectation, since outcomes are at least comparable.

But in infinite worlds, the problem comes back to bite us. Due to chaos, many views can't say that any outcome is better than another. Those views include all strongly impartial views, all weakly impartial views that respect Pareto, and many (but not all) position-dependent views.

We can still say that some outcomes are better and that we have corresponding reasons to act, but we have to hold a view that's dependent on the positions of value or something even less plausible.

That's a strange conclusion. Either we care a bit about position, which seems morally irrelevant, or we accept that we have no reason to make the world better.

Thank you.

Michelle Hutchinson (Discussant): Thanks so much, Hayden. It was a really interesting talk that clearly addressed some important questions.

It seems absolutely mind-boggling to me that, because we're in an infinite world, it could be the case that everything's incomparable, and that it somehow wouldn't be better if a lot of people didn't have intestinal worms. That does seem like a really strong reason to accept position-dependence, but then it seems very strange that it matters where in time value actually happens.

One thing I thought was interesting was that the talk just covered what would be the case if we were in an infinite world, rather than what we should believe given that we're uncertain of whether the world is finite or infinite. You might think that given our uncertainty, we should act as if we're in a finite world, because the idea that we're in an infinite world, where everything is incomparable, doesn't seem like it says that much about which actions we should take. On the other hand, the chance of us being in a finite world, with different worlds being comparable in the ways we should expect, seems like it does point to consequences for our actions. So maybe we should just go for that.

Another thing I was thinking about was the fact that as you presented it, position-dependence is inherently implausible. But it seems like there are some ethical theories in which the position of value matters. For example, you might think that egalitarians care about whether value is spread out or clumped. On the other hand, it seems kind of weird if the world being infinite is what makes us accept egalitarianism, if we didn't think that we should act on egalitarianism in a finite world.

So I was trying to think a bit more about why infinity might determine whether or not we accept position-dependence. It seems pretty impossible to think through an infinite world in which there are infinitely many copies of me doing different things. Some are doing the same things and some are doing different things. I guess that feels a bit more plausible — why, from the point of view of our galaxy, does it really matter whether there are fewer people suffering and whether I choose the outcome? From the point of view of all of these infinite copies, it stops mattering whether fewer people suffer.

I guess that pushes me toward position-dependence only mattering at a macro scale, rather than at a scale that we can actually see, in which we have some idea of why our intuitions are the way they are. They're entirely formed based on this galaxy, not on infinities. That seems to indicate that the kinds of position-dependent theories that you covered are the more plausible ones because they're working at the macro level rather than any level that we could notice.

Then, the position-dependent theory that you covered was based on timing rather than different spatial positions, which seemed more plausible when thinking about actions, because you take an action at a particular time. Therefore, you might think, at the time you’re taking the action, “I only care about the consequences in the future rather than the ones in the past.” So you actually do care where the value is positioned.

Overall, I guess I ended up feeling that I was pretty confused as to how implausible it was to accept a position-dependent view. But even if we did accept a position-dependent view, it would have similar kinds of outcomes for our actions as the more intuitively plausible forms of consequentialism. Also, given that we're not sure about whether we're in a finite or an infinite world, even if we aren't willing to bite the bullet of position-dependence, we're still going to have to act morally rather than just partying all the time.

Hayden: Maybe. Thank you so much. I’ll just follow up on a few particular bits [of your response].

As far as comparing worlds over time rather than over space (taking account of the particular time at which we take actions), I should probably note that I've presented a very simplified version of my view. [It didn’t include] the full four-dimensional [spacetime] that might be affected. We also want to account for possibly infinite value over space. According to several cosmological theories, that's [the reality] that we must be able to deal with.

There are all sorts of bells and whistles we can add on, which do seem independently plausible. One of them is that we can ignore the time and place at which the action is taken. So we supervaluate over all possible times [and places] at which this action might be taken, and only when every judgment generated from such a point agrees can we say that one outcome is better than another.

Michelle: I guess that does seem good for being able to compare the value in the world rather than the value in a world shifting all the time [depending on] what action you're looking at or where you are in time.

Hayden: Yeah. For instance, suppose two different agents are standing on different sides of the room, and they're both considering pairs of actions that would bring about exactly the same pairs of consequences in the future. We wouldn't want one agent to be told to do the opposite of what the other is told to do. That sort of agent-dependence would be slightly crazy, and this lets us avoid it. It slightly weakens the view, but not by a significant amount.

It gets more complicated; there are always issues with special relativity.

On another note, how plausible is position-dependence? You mentioned that it would give similar judgments to finite consequentialism. In fact, if the universe turns out to be finite, then it will give you exactly the same judgments. [Position-dependence is] consistent with finite standard maximizing consequentialism. It implies it in the finite context, but if it turns out we're in the infinite context, then it still implies things that we want. It keeps going, whereas standard consequentialism breaks down. So with all of the views I [presented], the [verdicts] are equivalent in the finite case. The only situation in which position-dependence (preferring some positions over others) would give surprising results is when we're dealing with infinite collections of positions.

For example, if we look at future years of the universe, we would care more about benefiting every second year than about benefiting every third year, because every third year is more spread out. Even if qualitatively identical people live in those years, it's still the case that there are more of them in every second year. But if I think about benefiting someone here, or someone in 10 years, the view says exactly the same thing as the finite consequentialist: they both count equally.
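The every-second-year versus every-third-year comparison can be made concrete by counting benefited years within a growing horizon. Both sets of years are infinite, with the same cardinality, but their densities differ, and the ratio of counts settles at 3/2. This is a sketch of one way a position-dependent view might register the difference, not the speaker's formal definition; `benefited_years` is a hypothetical helper.

```python
def benefited_years(step: int, horizon: int) -> int:
    """Number of years in 1..horizon that are benefited when every `step`-th year is."""
    return horizon // step

for horizon in (10, 1_000, 1_000_000):
    ratio = benefited_years(2, horizon) / benefited_years(3, horizon)
    print(f"first {horizon:>9} years: ratio {ratio:.5f}")
```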

Moderator: One audience member asks, “How does the model change if we assume that the walk isn't random and that, for example, good actions are more likely to have good consequences than bad ones? Is this a decent assumption?”

Hayden: It depends on the situation. In Hilary Greaves' paper, she talks about the basic cluelessness problem, in which both actions have outcomes that randomly vary by roughly the same pattern. We have no reason to think that one will have a better or worse outcome in 100 years than the other.

But other actions are systematic. There are a lot of conflicting reasons pushing us one way or the other. If we have an action that we think will consistently make the world better over time, then of course we'll be able to say that it’s better. In fact, we would probably avoid cluelessness if we have sufficient evidence that we'll continue to have a lasting good effect.
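To see why a systematic effect escapes the 50-50 problem, the probability of ending ahead can be computed exactly in a simple model (our assumption, not the talk's): each period adds a fixed drift plus a fair +1 or -1 step. With zero drift the probability stays close to one half; with a positive drift it climbs toward 1 as the horizon grows. `prob_ends_ahead` is a hypothetical name.

```python
def prob_ends_ahead(drift: float, horizon: int) -> float:
    """Exact P(running total > 0 after `horizon` periods).

    Each period adds `drift` plus a fair +1/-1 step; k counts the +1 steps,
    which is binomially distributed with parameters (horizon, 1/2).
    """
    favourable = 0
    coeff = 1  # binomial coefficient C(horizon, k), updated incrementally
    for k in range(horizon + 1):
        if drift * horizon + (2 * k - horizon) > 0:
            favourable += coeff
        coeff = coeff * (horizon - k) // (k + 1)  # C(n, k+1) = C(n, k) * (n - k) / (k + 1)
    return favourable / 2 ** horizon  # exact big-integer ratio, rounded once to a float

for drift in (0.0, 0.05):
    for n in (100, 1_000, 10_000):
        print(f"drift {drift}, horizon {n:>6}: {prob_ends_ahead(drift, n):.4f}")
```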

Also, if the random variables are not fully random — if they're not independent and identically distributed over all time — that's okay. For instance, the future could be better than the present. There could be an upward trend, but the variations could be from a very trivial act, like my breathing or not. The variations will be random and symmetrical because I've no reason to think my breathing right now is going to make life better in the future. Life in the future might be better, but the change incurred by me is entirely symmetrically random.
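The point about trends can be checked in a toy model (all assumptions ours): both outcomes share the same upward trend, and the trivial act only adds independent, symmetric +1/-1 ripples to each. The trend cancels in the difference, so the differences are identical whatever the trend, and they average out near zero. `differences` is a hypothetical helper.

```python
import random

def differences(trend: int, horizon: int, seed: int = 0) -> list[int]:
    """Per-period value difference between two outcomes that share a trend.

    The outcomes differ only by independent, symmetric +1/-1 ripples
    (a hypothetical stand-in for the effects of a trivial act such as one breath).
    """
    rng = random.Random(seed)
    diffs = []
    for t in range(horizon):
        shared = trend * t  # improvement that happens in both outcomes
        with_act = shared + rng.choice((-1, 1))
        without_act = shared + rng.choice((-1, 1))
        diffs.append(with_act - without_act)
    return diffs

flat, rising = differences(0, 100_000), differences(10, 100_000)
print(flat == rising)          # True: the trend drops out of every difference
print(sum(flat) / len(flat))   # the average difference, close to zero
```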
