| This is a Draft Amnesty Day draft. That means it’s not polished, it’s probably not up to my standards, the ideas are not perfect, and I haven’t checked everything. I was explicitly encouraged to post something imperfect! |

| Commenting and feedback guidelines. I'm slightly nervous about the amount of feedback I'll get on this, but am doing it because I sincerely do want constructive feedback. Please let me know if my assumptions are wrong, my plans misguided, my focus for the course poorly calibrated, or my examples un-compelling. Google Doc for commenting available upon request. |

A few months ago, I posted a diatribe on how to better use evidence to inform education and field building in effective altruism. This is a draft where I try to practice what I preach. John Walker and I have been exploring ideas for another EA Massive Open Online Course (MOOC). This is currently Plan A.

Big-picture intent

Why make a MOOC?

There are many great forms of outreach to build the community of people who care about effective altruism and existential risk. In contrast with most approaches, MOOCs provide:

- Authority, via their university affiliation

- Credentials for completion, with a university badge

- Minimal marginal cost per learner, by design

Designed well, they can provide high-quality, evidence-informed learning environments (e.g., with professional multimedia and interactive learning). These resources can feed into the other methods of outreach (e.g., fellowships).

Course pitch to learners

- Many people want to do good with their lives and careers.

- The problem is: many well-intentioned attempts to improve the world are ineffective. Some are even harmful.

- With the right skills, you can have a massive impact on the world.

- This MOOC provides many of those skills.

- Through this course, you’ll learn how to use your time and money to do as much good as possible, and gain a platform to keep learning about how to improve the world.

Underlying assumptions

To be transparent, most of these assumptions are not based on direct data from the community or general public. Where support is available, I’ve linked to the relevant section of resources. Where not, I’ve listed methods of testing those assumptions but am open to corrections or other methods.

- There are some skills and frameworks used in EA that help us answer the essential question: “how can I do the most good, with the resources available to me?” (see also, MacAskill)

- Examples of what we mean by ‘skills and frameworks’

- current CEA website: scope sensitivity, trade-offs, scout mindset, impartiality (less confidently: expected value, thinking on the margin, consequentialism, importance/value of unusual ideas, INT framework, crucial considerations, forecasting, and Fermi estimates)

- See old whatiseffectivealtruism.com page: maximisation, rationality, cosmopolitanism, cost-effectiveness, cause neutrality, counterfactual reasoning

- These skills and frameworks are less ‘double-edged’ than the moral philosophy

- The moral obligations in EA (e.g., Singer’s drowning child) are a source of motivation for many but also a source of burnout and distress (see also forum tags on Demandingness of morality and Excited vs. obligatory altruism)

- In contrast, the evidence that ‘improving competence and confidence leads to sustainable motivation’ is supported by dozens of meta-analyses across domains with no major downsides, to my knowledge

- These skills and frameworks are less controversial than the ‘answers’ (e.g., existential risk, farmed animal welfare)

- As put by CEA, “we are more confident in the core principles”

- Misconceptions about EA (e.g., per 80k: ‘EA is just about fighting poverty’; ‘EA ignores systemic change’) seem to stem from ‘answers’ rather than principles

- These skills and frameworks are more widely applicable and approachable than the ‘answers’

- My intuition says a medical student would benefit from learning many of the strategies in Table 1, but might find some of the ‘answers’ hard to stomach (e.g., medicine itself has modest direct impact). This does not mean avoiding something because it’s hard to stomach, but means it might be less confrontational to focus on the skills than the ‘solutions.’

- These skills and frameworks are necessary prerequisites to understand EA cause areas

- For example, possible cruxes of donating to GiveWell charities are that we can test how good an intervention is, measure its effects, and compare effects across interventions and outcomes

- Similarly, cruxes of existential risk and longtermism are that the future will be good, we can predict the future, and can do things to reliably influence the future

- These skills and frameworks are still novel to many people we’d like to engage with

- The curse of knowledge makes it hard to remember what it was like to not know something. There’s likely a curse of knowledge when EAs recommend ‘high EV options’, those supported by super-forecasters, theories of change, or systematic reviews. Without understanding the rationales for these tools, I think people can talk past each other, and new people can find many other EA ideas (e.g., x-risk) un-compelling.

- I think the skills are less prevalent than we assume (e.g., only 40% of UK MPs know the chance of flipping two heads on a fair coin). This course is not meant to be Probability 101, but it takes shallow dives into a range of these necessary skills.

- Stories frequently used in EA (e.g., GiveWell, or AI X-risk) could be used as compelling motivating examples of skills and frameworks (e.g., cost-effectiveness, forecasting)

- Stories in EA have simple problems with unexpected solutions (e.g., ‘Want to improve education? It’s really good to deworm kids in the developing world.’)

- There’s conventional marketing wisdom and systematic review evidence that concrete, ideally ‘emotive’ stories with unexpected solutions are useful hooks for engaging an audience and helping them remember lessons

- Many specific narratives in EA (e.g., worms) are important to cover, but more generally useful if they are framed as examples of important underlying principles (e.g., ‘there are likely huge differences in cost-effectiveness of interventions addressing similar problems’)

- There is no existing central resource of these skills and frameworks

- Some excellent programs seem to reflect our approach here (e.g., Leaf) but we have not found curated public repositories of training
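
For reference, the coin question mentioned in the assumptions above has a short answer. The snippet below is only an illustrative sketch (it is not planned course material): it enumerates the four equally likely outcomes of two fair flips and counts the one that is two heads.

```python
from fractions import Fraction
from itertools import product

# All equally likely outcomes of flipping a fair coin twice: HH, HT, TH, TT.
outcomes = list(product("HT", repeat=2))

# Keep only the outcome where both flips are heads.
two_heads = [o for o in outcomes if o == ("H", "H")]

# Probability = favourable outcomes / all outcomes.
p_two_heads = Fraction(len(two_heads), len(outcomes))
print(p_two_heads)  # 1/4
```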

Alternatives we're considering

We have not ruled these out but think they're less promising than Plan A.

Directly focus on existential risk (project plan available on request)

- Experts in this area have either been strapped for time or feel the area is not yet established enough to have a ‘core curriculum.’

Directly focus on AI safety as a key existential risk (project plan available on request)

- As per x-risk, some AI safety experts we consulted were strapped for time. Others felt the field was pre-paradigmatic and a MOOC would be more valuable once the field had more traction. In the meantime, virtual programs and fellowships can be more dynamic, changing as the field grows.

Focus on a combination of skills, blog posts and ‘moral insights’, like the EA Virtual Program

- At the moment, we judge that directly emulating the Virtual Program curriculum would be (1) less likely to be adopted by a university (e.g., the handbook contains many blog posts) and (2) less differentiated as a product from the Virtual Program

Learning objectives

Through this MOOC, students will learn to

- Implement strategies to address psychological biases that lead to ineffective prosocial interventions

- Identify robust evidence for comparing and quantifying the effectiveness of interventions

- Use effective and pragmatic tools for managing uncertainty

- Plan strategies for having a bigger impact in areas aligned with their values

By the end of this MOOC, we hope you’ll be able to critically evaluate methods of improving the world, and find the methods that best fit you.

Draft structure

| Story for hook in videos | Misconception | Key new idea or skill | Application of idea or skill |

| Why doing good is hard | |||

| Scared straight | Emotions/intuitions are a pretty good indicator of what's helping the world; good intentions are what matter | There's a difference between doing things that make us feel good and those that reduce suffering and death most effectively | Values reflection: feeling good or doing good. How much do you care about each? |

| Birds and nuclear war | Feelings are proportional to the size of problems | Our emotional judgements are insensitive to scope | Scope sensitivity and epidemiology 101: find the problems that kill the most people and notice how it tracks with your emotional response |

| Worms; or climate change FP report | Most ways of helping others are pretty similar (±2x) | Some ways of helping to reduce suffering are far more effective than others | Quiz using https://www.givingwhatwecan.org/charity-comparisons and https://80000hours.org/articles/can-you-guess/ |

| Thinking clearly | |||

| How motivated reasoning ruined the life of an innocent man: Dreyfus | Though I'm learning, I see the world clearly | We all dismiss arguments that don't fit our current beliefs, so use these tools... | Scout mindset tests (double-standard test, outsider, conformity, selective sceptic, status quo bias) |

| Vaccine scepticism from Think Again | When people disagree, I should try to more clearly explain my point of view | In a discussion my job is to explain their point of view, so I can properly understand the disagreement and get to the truth | Rapoport's Rules / Ideological Turing test ~= double cruxing: try to state someone else's perspective in a way they would agree with |

| Bay of Pigs vs. Cuban Missile Crisis | Plan for the best, be certain you'll succeed | Think about the worst; brainstorm ways you might be wrong | Red-team / devil's advocacy / pre- / post-mortem: brainstorm ways you're wrong |

| Doing good and doing it better | |||

| Studying medicine; maybe story of Emma Hurst, psychologist turned MP | My impact is determined by what I did | We need to add "...compared against what would have happened otherwise" | Counterfactual estimation of impact / thinking on the margin: try to figure out what would have happened otherwise |

| UBI in Canada vs Kenya | The biggest opportunities for me to have an impact are in my local community | Many people now have a much bigger opportunity to do good by doing good overseas | Impartiality in space: present best arguments for/against parochialism |

| Quantifying education vs cancer drugs | We can't measure what it means to do good | DALYs, QALYs, Wellbys, and Moral Weights all work relatively well for quantifying the impacts of many interventions | Quantifying wellbeing: choose a method of measuring what matters, acknowledging that these simplifications are limited, but incredibly useful as long as those limits are well understood. |

| Playpumps vs. chlorine | 'Which intervention looks to solve the problem best?' | 'Which intervention solves the problem best, per dollar?' | Cost-effectiveness evaluation: account for both the benefits and costs of an intervention, including uncertainty about outcomes |

| Everything you eat is causing and curing cancer | "New research shows..." is trustworthy; a single, good study (e.g., an RCT) is enough to make an informed decision. | Single studies are relatively weak evidence, and they conflict. Systematic reviews and meta-analyses are much stronger. Work down from meta-reviews, to meta-analyses, to RCTs, to the rest | Hierarchy of evidence: work down from the top rather than up from the bottom |

| Some approaches to climate change are not neglected, others are; some approaches to helping animals are not neglected, others are | I should focus on what's important then... | Weight importance, tractability (inc. your fit), and neglectedness to maximise impact 'on the margin' | Weighted factor models via INT: make decisions when multiple criteria count using heuristics. Mostly talk about prioritising interventions within a cause area, then have learners practise prioritising cause areas as an exercise |

| Managing an uncertain future | |||

| Gambling and lotteries; systemic change and lobbying to remove lead paint | Compare the costs with the possible reward | (Generally) do what has the highest expected value | Expected value calculations: account for the value of success and the probability of it happening |

| Iraq invasion | "I'm 50:50" or "You can't put a number on it" | Even rough probability estimates are almost always possible, and they are important for calculating expected value | Quantifying uncertainty: quantifying 'maybe' |

| Piano tuners; Fermi paradox | Some problems are impossible to quantify | Break hard problems into smaller ones; don't ignore the parts that are harder to quantify | Fermi estimation: break down an impossible question into smaller ones; Squiggle exercise? |

| Is this the most important century?; AI bio-anchors | The future is predictable, or it's totally unpredictable | We can learn to better predict the future with CHAMPS | Forecasting: make calibrated probability judgements about the future |

| Kahneman book development story | Start with my beliefs then update slowly in light of evidence | Find a robust 'outside view' and update using your information | Finding base-rates: start with an outside view then update using inside knowledge; perhaps use example of AI Impacts ML survey |

| BeerAdvocate?; war in Ukraine? Updates as AI Progresses? | p(disease|positive test) = 'accuracy' of test; p(d|+) = p(+|d) | p(d|+) = p(+|d) * p(d) / p(+), or the 'odds' version from 80k | Bayesian updating (in small increments): update your belief in light of new evidence |

| Cleopatra's extra dessert; Schulman's x-risk EV calculations | I should do good now | Things I do now could have far-reaching, long-run influences that matter | Case study in applying EV assuming impartiality in time: present best arguments for/against longtermism, x-risk reduction |

| Planning your path to impact | |||

| TOMS Shoes | Doing good things leads to good outcomes | Mapping how good happens identifies blind spots and reduces risk | Crucial considerations and theories of change: use cluster thinking and short, testable causal chains |

| GiveWell's transparency and external critiques | I should argue my case in a compelling way | I should make my reasoning transparent so I can become more accurate | Reasoning transparency: making it clear what you know and how you know it |

| Cassidy Nelson's pivot from medicine | I should do what I'm passionate about | Do what gives your life meaning and fits your skills | Write the retirement speech you'd like people to say about your career. Using that speech, identify one new goal you could set for yourself that would allow you to have a bigger impact. If you want ideas, have a look at these 80k resources. |

| Sam Harris's approach to donating | I should sweat the small stuff, penny pinch, feel guilty | Make advanced commitments and only review every year or so | Go to GWWC pledge or One for the world. Tell them we sent you 😘 |

| Case study of approaches to pandemics, and how GCBRs seem high EV, neglected, and tractable | Preventing covid-level pandemics is the focus | If we aim to prevent GCBRs we might reduce the risk of covid-level pandemics *and* prevent the worst possible outcomes, with a modest increase in cost | Final project: translate the GCBR worked example to nuclear risk, AI, farmed animal advocacy, or global health/wellbeing, writing up a case for an intervention to reduce the risk from one of those problems |
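
To make the Bayesian updating row in the table concrete, here is a minimal sketch of the disease-test example. The numbers (1% base rate, 90% sensitivity, 5% false-positive rate) are hypothetical and chosen only for illustration, and the function names are my own. The sketch computes p(d|+) with Bayes' rule and then checks it against the odds form (posterior odds = prior odds × likelihood ratio).

```python
def posterior_probability(prior: float, sensitivity: float,
                          false_positive_rate: float) -> float:
    """Bayes' rule: p(d|+) = p(+|d) * p(d) / p(+)."""
    # p(+) sums true positives and false positives.
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / p_positive


def posterior_odds(prior_odds: float, likelihood_ratio: float) -> float:
    """Odds form: posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio


# Hypothetical inputs, for illustration only.
prior = 0.01                # p(d): base rate of the disease
sensitivity = 0.90          # p(+|d)
false_positive_rate = 0.05  # p(+|not d)

p_d_given_pos = posterior_probability(prior, sensitivity, false_positive_rate)

# Same update via odds, converted back to a probability.
odds = posterior_odds(prior / (1 - prior), sensitivity / false_positive_rate)
p_from_odds = odds / (1 + odds)

print(f"p(d|+) = {p_d_given_pos:.3f}")  # p(d|+) = 0.154
```

With these made-up inputs, a positive result from a "90% accurate" test leaves the probability of disease at roughly 15%, which is the gap between p(+|d) and p(d|+) that the misconception column points at.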

Current plan

Completed

- Approach a long list of academics at global top 10 universities to see if any want to 'own' delivery of a course aligned with their priorities.

In progress

- Lock in approval for this program to be hosted on EdX via UQ

- Have enthusiastic support from some key decision-makers. I'm preparing a business case.

- Continue exploring partnerships with subject matter experts at global top 20 universities, especially as delivery partner on UQx course to establish working relationship and reduce risk/work at the partner’s end

- Approach aligned organisations where their whole expertise relates to a module (e.g., 80k for modules about career planning, GWWC for donations)

- Continue checking uncertainties

- Check back in with some advisors and post on the forum for criticism

- Empirically check a number of the assumptions via:

- Market testing via Adwords ($1000 worth of ads testing different course framing)

- Surveys of EA community (20–50 people)

- Survey of representative sample of population (500 people)

- 2 focus groups with target audiences

Alternative titles

- Increasing social impact

- Psychology of effective altruism

- Having an impactful career

Closest MOOCs

The Science of Everyday Thinking | edX (335,000 enrolments)

Effective Altruism | Coursera (50,000 enrolments)

Global Systemic Risk | Coursera (2,871 already enrolled)

What do modules look like?

What’s the rough format of each module?

- 5-10 minute video(s) with some interactivity overlaid via h5p

- 5-10 minute introductory learning activity

- 10-15 minute challenging learning activity

Example: Hierarchy of evidence (work down from the top of the evidence hierarchy rather than up from the bottom or starting in the middle)

Learning objective (outcome we hope learners achieve)

- Find and interpret a systematic review and meta-analysis for an intervention

Example introduction video series

Note: UQ expects at least 70-80% of MOOC videos to be bespoke for the MOOC (i.e., not recycled from YouTube). However, as a proof of concept, the following videos demonstrate rough examples of what a better produced, more concise video would say.

- Features and benefits of a systematic review

- What are systematic reviews?

- The Steps of a Systematic Review

I would probably frame the discussion around a hard question using an EA cause/intervention as an example. For example, you want to reduce poverty or farmed animal consumption, and you want to know if conditional cash transfers or leafleting works. Then present similar content to the videos above, but rather than “does garlic prevent colds” use a prosocial example.

Introductory learning activity

You mention GiveWell to your colleagues. GiveWell recommends using insecticide-treated bed nets as one of the most cost-effective global health interventions. You’re aware there’s a Cochrane systematic review on the use of these nets (summary below) that found they almost halve the risk of children being infected with malaria, and reduce mortality by almost 20%. But your colleague sent you a PBS news article saying the nets do not work as well as they used to because the mosquitoes are learning to get around them (summary below).

Answer the following questions based on this scenario.

What is behavioural resistance in relation to malaria control? [truncated for forum post]

Which of the following are true when comparing media articles like these against systematic reviews? [truncated]

The findings from the PBS article are based on an observational study of mosquitoes. The study showed mosquitoes started to bite during the day, rather than at night when bed nets were protective. In contrast, the Cochrane review covered 12 randomised trials and looked at health outcomes, rather than biting behaviour. Which of the following are true of this systematic review, compared with the observational study of mosquitoes? [truncated]

There are some good quality studies (e.g., double-blinded, randomised, prospectively registered) with large samples that find the nets do not reduce mortality (e.g., Habluetzel, 2002, which ticks all the Cochrane criteria for good evidence). What is the value of a systematic review of randomised trials over a single study, even if the single study is large and high quality? Systematic reviews are more reliable because they… [truncated]

Challenging learning activity

- Pretend you’re in charge of foreign aid for your government, and are told to use the aid to help the poor. If you don’t use the money, you’ll lose it. If you use the money on an intervention that doesn’t work, there’ll be a public backlash.

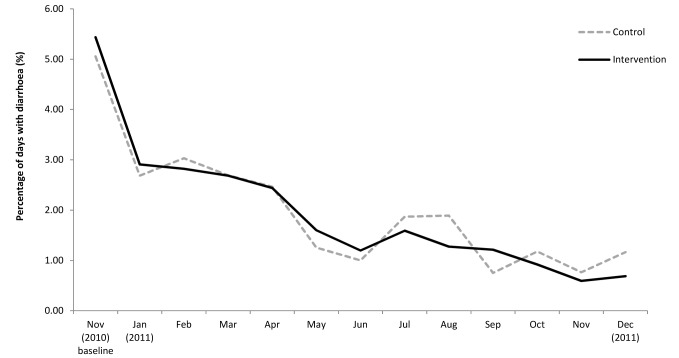

- You know diarrhoea kills 500,000 children under 5 every year. You hear you can reduce these deaths by funding chlorine to clean the water.

- Two academics come to you with randomised trials they’ve published in the last 10 years, both testing chlorine to clean drinking water.

- Boisson says: ‘We ran a double-blind randomised controlled trial, which is the gold standard for evaluating the effectiveness of interventions. Also, we used a large sample size, with 2,163 households and 2,986 children under five, which increases the statistical power of the study. We had a one-year follow-up period, which allowed for a more comprehensive assessment of the effect of the intervention on diarrhoea prevalence. We found no effect of chlorine on diarrhoea.’

- Haushofer disagrees. He says: “we also used a randomised trial, but of 144 villages and followed them for four years. We found providing free dilute chlorine in rural Kenya reduced all-cause under-5 mortality by 1.4 percentage points, a 63% reduction relative to the control group. We found it only cost $25 per disability-adjusted life year (DALY) averted, which is twenty times more cost-effective than the World Health Organization's "highly cost-effective" threshold.”

- What do you decide to do? Does this intervention work? Find a systematic review or meta-analysis that aggregated randomised trials and decide whether the intervention works.

Multiple choice questions

- Which of the following meta-analyses addresses the research question? [truncated]

- How does the cost per DALY averted from water treatment compare to the widely used threshold for "highly cost-effective" interventions?

- It is about the same

- It is about half as much

- It is about 45 times lower

- Correct answer: C. It is about 45 times lower

- Explanation: as described in the abstract “the cost per DALY averted from water treatment is approximately forty five times lower than the widely used threshold of 1x GDP per DALY averted for “highly cost-effective” interventions first recommended by the WHO’s Commission on Macroeconomics and Health.”

- How many randomised controlled trials were included in the meta-analysis?

- 15

- 29

- 52

- Correct answer: A. 15

- Explanation: The researchers identified 52 RCTs of water quality interventions and included 15 RCTs that had data on child mortality in the meta-analysis.

- What was the expected reduction in the odds of all-cause child mortality in a new implementation according to the study?

- 10%

- 20%

- 25%

- Correct answer: C. 25%

- Explanation: The expected reduction in the odds of all-cause child mortality in a new implementation was 25%, taking into account heterogeneity across studies.

- What is the estimated cost per DALY averted due to water treatment?

- About $10

- About $20

- About $40

- Correct answer: C. About $40

- Explanation: The researchers estimate a cost per DALY averted due to water treatment of around $40 for both chlorine dispensers and a free coupons program, including delivery costs.

- Based on the considerations above, what would you do?

- Ask for more research

- Fund the program

- Recommend against funding the program

- ‘Correct answer’: Fund the program

- Explanation: The program meets the threshold set out in the initial motivating question. As a result of this meta-analysis, chlorine for safe drinking water is now one of the recommended programs from charity evaluator GiveWell. Before this meta-analysis, the effects were controversial. This example shows the benefit of using this kind of evidence to make hard prosocial decisions.
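
The cost-effectiveness comparison in the questions above comes down to simple arithmetic. The sketch below uses only the two figures quoted in the explanations (about $40 per DALY averted, and "approximately forty five times lower" than the threshold). The dollar threshold it prints is back-calculated from those two numbers under the assumption that "45 times lower" means threshold divided by cost equals 45; it is not a figure taken from the paper, and the function name is hypothetical.

```python
# Figures quoted in the explanations above (from the meta-analysis abstract).
cost_per_daly_usd = 40      # approximate cost per DALY averted
times_below_threshold = 45  # "approximately forty five times lower"

# Assumption: "45 times lower" means threshold / cost = 45.
implied_threshold_usd = cost_per_daly_usd * times_below_threshold


def is_highly_cost_effective(cost_usd: float, threshold_usd: float) -> bool:
    """Return True when the cost per DALY averted is at or below the threshold."""
    return cost_usd <= threshold_usd


print(implied_threshold_usd)  # 1800
print(is_highly_cost_effective(cost_per_daly_usd, implied_threshold_usd))  # True
```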