Thanks to Jamie Bernardi, Caleb Parikh, Neel Nanda, Isaac Dunn, Michael Chen and JJ Balisanyuka-Smith for feedback on drafts of this document. All mistakes are my own.

How to read this document

- If you're short on time, I recommend reading the summary, background and impact assessment (in descending order of importance).

- The rest of the document is most useful for people who are interested in running similar programmes (whether for AI safety specifically, or for other cause areas / meta EA), or who are interested in participating in or facilitating the next round.

Summary

Overview

- Over the Summer of 2021, EA Cambridge, alongside many collaborators, ran a virtual seminar programme on AGI safety.

- It had 168 participants and 28 facilitators (from around the world - the majority were not based in the UK), and provided an overview of the AI safety (AIS) landscape and a space for participants to reflect upon the readings with others and experienced facilitators.

- It consisted of an introduction to machine learning (ML); 7 weeks of 1.5-hour cohort discussions in groups of ~5 with a facilitator, with ~2 hours of reading on AIS per week; and an optional 4-week self-directed capstone project, finishing with a capstone project discussion.

- This document provides some more background on the programme, feedback from participants and facilitators, mistakes we made, and some things we’ll be doing to improve the programme for the next iteration (taking place in early 2022).

- I believe the main source of value this programme provided was:

- Accountability and encouragement for AI safety-minded individuals to read through an excellently-curated curriculum on the topic.

- Relationships and networks created via the cohort discussions, socials and 1-1s.

- Accelerated people’s AIS careers by providing them with an outline of the space and where opportunities lie.

- Increased the number of people in the EA community who have a broad understanding of AI risks, potentially contributing to improving individuals’ and the community’s cause prioritisation.

- Information value on running large cause-area-specific seminar programmes.

Further engagement

- If you’d like to participate in the third round of the programme (Jan-March 2022), apply here.

- There are two tracks: a technical AI alignment track for people from a maths/compsci background who are considering technical AI safety research, and an AI governance track for individuals considering a career in policy/governance.

- If you already have a broad background in ML and AI technical safety or AI governance, please consider applying to facilitate here.

- This is compensated with an optional £800 stipend.

- Facilitators have the option to accept this stipend or donate it directly to EA Cam or EA Funds.

- We want to ensure all individuals with the relevant expertise and knowledge have the opportunity to contribute to this programme, regardless of their financial situation.

- We’re likely to be bottlenecked by facilitators with relevant expertise, so we would strongly appreciate your application!

- If you’d like to run your own version of this programme at your local group/region, please fill in this form and we’ll send you our resources, how-to guides, and offer you a 1-1 and other forms of support.

- Note that we will also be helping to organise local in-person cohorts via this global programme.

Why are we writing this post?

- To be transparent about our activities and how we evaluate them as an EA meta org

- To provide anyone who wants to learn more about this specific AI safety field-building effort an insight into how it came about, what took place, and how it went, as well as to gather feedback and suggestions from the community on this project and similar future projects.

- To encourage more people to apply to participate or facilitate in the upcoming round of the programme, taking place Jan-Mar 2022, and future iterations.

- To inspire more people to consider contributing to personnel strategy and field-building more broadly for AI safety.

Background

Reading groups - status quo

This programme came about due to a conversation with Richard Ngo back in November 2020. We were discussing the local AIS landscape in Cambridge, UK, with a focus on the local AIS discussion group - the only regular activity in the AIS community that existed at that time. Attendees would read a paper or blog post and come together to discuss it.

While discussion groups are great for some people, they are not well-optimised for encouraging more people to pursue careers in AIS. In our case, it was additionally unclear whether the reading group required technical ML knowledge, and it was the only landing spot in Cambridge for people interested in AIS.

As a result, regular participants had a wide range of prior understanding in ML and AIS, and had large (and random) gaps in their knowledge of AIS. Keen new members would bounce away due to a lack of any prior context or onboarding mechanism into the group, presumably feeling alienated or out of their depth. Simultaneously, the discussion did not serve to further the understanding or motivation of highly knowledgeable community members, whose experience would vary according to attendance.

Response to the status quo of reading groups

In response to this situation, Richard offered to curate a curriculum that introduced people in Cambridge and beyond to AIS in a structured way. Our first step was to reach out to local AI safety group leaders from around the world to test out the first curriculum and programme, and to train them up such that they could facilitate future rounds of the programme. 10 AIS group leaders signed up, and Evan Hubinger and Richard each facilitated a cohort of 5 for 7 weeks from Jan-Mar 2021.

We learnt a lot from the first round of the programme, and Richard made significant revisions to the curriculum, resulting in the second version of the curriculum. We opened applications for the second round of the programme in late May 2021, and received significantly more applications than expected (I personally expected ~50, we received 268). This may be partially explained by the fact that many people around the world were under lockdowns, and hence particularly interested in virtual programmes. There was also some demand for an AI governance track, so we put together this short curriculum to replace the final three weeks for the governance cohorts. More details on the Summer 2021 round of the programme can be found throughout the rest of this post.

How does this fit into the talent pipeline?

There are a myriad of ways people come across AI safety arguments and ideas: reading Superintelligence or other popular AIS books, learning about EA and being introduced to x-risks, LessWrong or other rationality content, hearing about it from peers and friends, Rob Miles’ YouTube videos, random blogs, etc. However, there hasn’t been a systematic and easy way to go from “public-ish introduction” to understanding the current frontiers. This is where we hope this programme can help.

A curated curriculum and facilitated discussions should help alleviate the issues with the previous status quo, in which interested individuals would spend months or years reading random blog posts by themselves, trying to figure out who is working on what, and why it is relevant to reducing existential risk from advanced AI. An analogy I like to use is that the status quo is something like a physicist going around telling people “quantum physics is so cool, you should learn about it and contribute to the field!”, but without any undergraduate or postgraduate courses existing to actually train people in what quantum physics is, or accountability mechanisms to help budding physicists engage with the content in a structured and chronological manner.

Cohort-based learning is also frequently regarded as superior to self-paced learning, due to higher levels of engagement, accountability to read through specific content and reflect upon it, having a facilitator with more context on the topic who can guide the discussions, and the creation of a community and friendships within each cohort. This is especially important for problems the EA community regularly focuses on where we’ve applied the “neglectedness” filter, given the lack of peers to socialise or work with on these problems in most regions of the world.

However, I believe there is still much work to be done with regards to AI safety field-building, including:

- Supporting the seeding and growth of excellent local AIS groups

- Training and upskilling AIS people in ML (and governance?), for example, MLAB

- Compiling more relevant resources (e.g. this programme’s curricula, and this further resources document)

- Creating a landing page where all “core” AIS resources are included (e.g. readings; funding opportunities; relevant orgs; job and study opportunities, etc.) and presented in a clear manner (see the Good Food Institute’s website for an amazing example of this)

- Figuring out whether and how to do AIS outreach to ML experts

- Supporting people in taking their knowledge to actually working on technical projects, for example, more things like Evan Hubinger / SERI’s Machine Learning for Alignment Theory Scholarship (MATS) programme

- Helping people test fit and find the best way for them to contribute to AI Safety (ML research, research engineering, theoretical research, software engineering, etc)

...and likely much more. Some of the above is already happening to various extents, but it seems very plausible to me that we could be doing a lot more of this type of field-building work, without draining a significant amount of researcher mentorship capacity.

What’s next for this programme?

We’re now working on the third round of this programme, which will be taking place from Jan-Mar 2022. We hope to provide infrastructure for local discussions where possible (as opposed to being entirely virtual), to continue to improve the standard curriculum, to finish the development of the new governance curriculum by working with AI governance researchers and SERI (shout out to Mauricio for working so hard on this), and to make other major improvements (detailed below).

I’d like to say a huge thank you to everyone who helped make the Summer 2021 programme happen, including all the facilitators, speakers and participants. I’d also like to say a special thanks to Richard Ngo, Thomas Woodside and Neel Nanda for all their additional work and support.

Impact assessment

Career

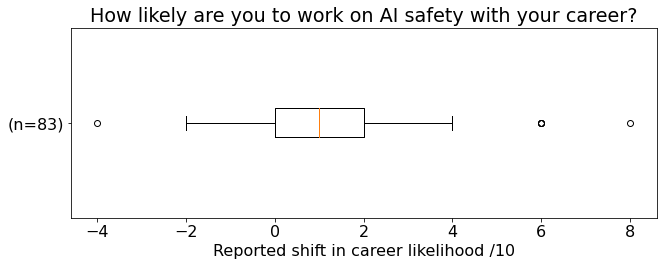

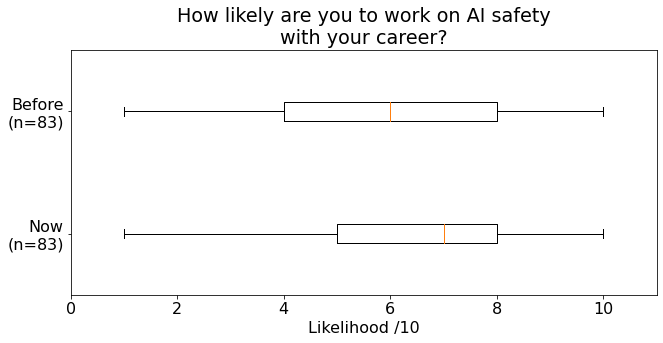

- Participants on average expressed a 10% increase in likelihood that they would pursue a career in AIS.

- This question was asked to participants in the post-programme feedback form, i.e. we asked both questions (current and previous career likelihood) in the same survey.

- I believe this is more likely to provide accurate information, as applicants may feel incentivised to express a higher likelihood of pursuing an AIS career in their initial application.

- We’ve had participants go on to work at ML labs, to receive scholarships from large grantmakers, and to contribute to AIS field-building in other ways

- Some anecdotes from participants can be found here.
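For readers who want to run a similar retrospective question in their own programme, here is a minimal sketch of how an average change in self-reported career likelihood could be computed when both answers (recalled "before" and "now") come from the same survey. The responses below are made up for illustration and are not the programme's data. The sketch also assumes the change is measured in percentage points; the figure quoted above does not specify whether it is a percentage-point or a relative change.

```python
from statistics import mean

# Made-up illustrative responses, NOT the programme's data.
# Each pair is (recalled likelihood before, likelihood now), in percent.
responses = [(20, 35), (50, 50), (10, 30), (60, 55)]

# Per-respondent change in percentage points (can be negative).
changes = [after - before for before, after in responses]

# Assumption: "average increase" means the mean percentage-point change.
mean_change = mean(changes)
print(f"Mean change: {mean_change:+.1f} percentage points")
```

Reporting the per-respondent changes alongside the mean makes it visible when a positive average hides some participants who became less likely to pursue the career.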

Value to participants

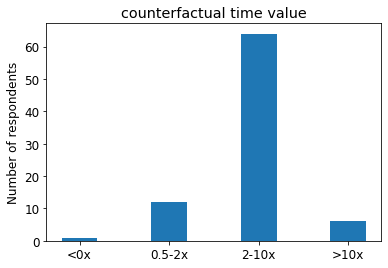

- Participants overwhelmingly expressed that participating in this programme was counterfactually a 2-10x more valuable usage of time than what they otherwise would have been doing.

- However, not all participants filled in the feedback form, so this may not be wholly representative of the entire cohort.

- Some anecdotes from participants on the counterfactual value of this programme can be found here.

General sense of impact

- This programme has helped to create a greater sense of both the demand and possibilities in AIS field-building

- Numerous participants and facilitators are now working on other scalable ways to inspire and support more people to pursue impactful careers that aim to mitigate existential risks from advanced ML systems.

- Anecdotally, I’ve had conversations with individuals who were incredibly surprised by the demand for this programme, and who said it spurred them into considering AIS field-building as a potential career.

- It’s also highlighted some of the deficiencies in the current pipeline, by creating a space where over a hundred people from many different backgrounds and levels of expertise reflect upon AIS and try to figure out how they can contribute.

- Seminar programme model

- The seminar programme model (inspired by the “EA Fellowship”) that this programme followed has also inspired us to develop similar programmes for alternative proteins, climate change, biosecurity and nuclear security (which are all in various stages of development).

- The demand for this programme, and the positive response from the first two rounds, highlights the value of experts curating the most relevant content on a topic into a structured curriculum, receiving an enormous amount of feedback from a myriad of sources and continually improving the curriculum, and participants from similar backgrounds (in terms of prior knowledge and expertise) working through the readings together in a structured manner.

- This is probably especially true for fast-changing and neglected topics, such as those frequently discussed within the EA community, where just figuring out what’s going on is far from a simple affair.

- Learning about and reflecting on these ideas with peers also provides participants with the feeling that these aren’t some crazy sci-fi ideas, but that they’re concerns that real people they’ve met and like are taking seriously and taking action upon.

- The friendships and networks developed during this programme are also valuable for sharing tacit knowledge, job and funding opportunities, and developing collaborations.

- Creation of scalable resources

- Once curated, the curricula are publicly available and highly scalable resources that can be utilised effectively by thousands of individuals (if they’re motivated enough to read through the resources without a peer group).

- We’ve also gained a sense of which introductory resources need to be developed to explain some key ideas (e.g. from the “what are you still confused about” section in the feedback forms), what forms of career support participants would appreciate, and which subsequent resources we could develop to provide that support.

- Advancing people’s AIS knowledge

- While AIS is often regarded as one of the top priorities within the EA / rationality communities, I would wager that surprisingly few people have a deep understanding of what the underlying problems are and the motivations behind this deep concern for the future of humanity.

- I hope that this programme has provided participants with sufficient context on this issue to start to develop their own beliefs and understanding on what AI safety is all about, and the arguments behind why the current development trajectory of this technology could be catastrophic for society.

- It’s unclear to me how much/whether having more knowledge on AIS improves one’s ability to compare between different career trajectories in terms of lifetime impact, though I would generally guess that knowing more is better than knowing less (at least for people who are intentional about prioritising between different options).

Programme overview and logistics

This section will briefly highlight the steps that were undertaken to make the programme happen this Summer, and what the programme looked like in more detail from a logistics perspective. Skip ahead if you don’t care to read about logistical details.

- Richard improved the curriculum based on the feedback from the first round

- An application form was created for the participants and facilitators

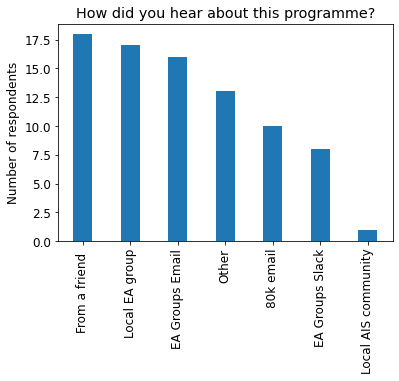

- The forms were promoted in a range of locations

- AIS mailing lists, EA groups mailing list and Slack workspace, and more

- We received funds to provide each applicant with a free AI safety-related book, sent via EA Books Direct (built by James Aung and Ed Fage).

- After the deadline, we evaluated the applications, and accepted the participants who fit the target audience and for whom we had sufficient facilitator capacity (168 participants, 28 facilitators).

- We don’t believe we had a perfect evaluation process, and likely rejected many good applicants. Please apply again if you think this applies to you!

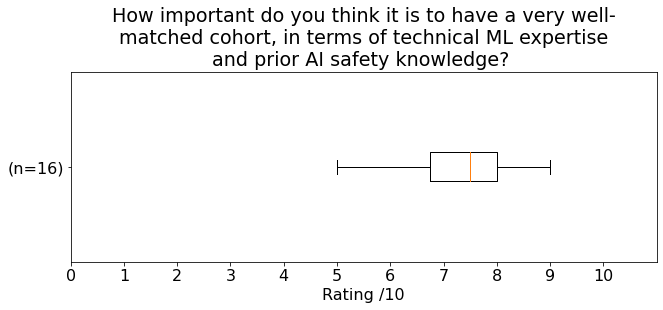

- Participants and facilitators were ranked according to (our estimate of) their prior AI safety knowledge, ML expertise and career level.

- These rankings were used to create cohorts where participants were well-matched in terms of technical expertise and would have the most shared context between them.

- Cohorts were created using LettuceMeet and Thomas Woodside’s scheduling algorithm.

- This was a palaver; details are in the appendix!

- Participants were added to their private cohort channel, and were invited to a recurring Google Calendar event with the curriculum and their cohort’s Zoom link attached.

- Facilitators were offered facilitator training.

- This consisted of a one-hour workshop, where the prospective facilitators learnt about and discussed some facilitating best practices, as well as role-playing being a facilitator in a challenging discussion situation.

- The programme kicked off in mid-July with a talk by Richard introducing ML to the participants who had less (or no) ML expertise / background.

- For 7 weeks throughout July and August, participants met up in their cohorts once per week to discuss the week’s readings.

- Each cohort was provided with a unique zoom link for their session.

- EA Cambridge has 4 paid Zoom accounts to ensure that we have enough Zoom accounts when sessions (in a variety of our programmes) clash.

- Facilitators had access to a facilitator’s guide, which provided many tips on good facilitating practice, as well as tips and discussion prompts for each week’s session.

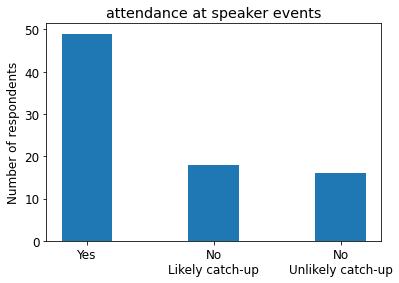

- During this period, we also had 5 speaker events and 2 socials.

- The speaker events and Q&As were hosted by AI safety researchers, and the questions were submitted and voted on in Sli.do.

- Participants had the option to join a #virtual-coffee channel that was connected to the Slack app Donut, which randomly paired them with another channel member for a 1-1 on a weekly basis.

- The bulk of the administrative effort during this period was in finding alternative cohorts for participants who missed their week’s session, responding to facilitators’ feedback and providing them with support, hosting and organising speaker events, and other random tasks to keep the programme running.

- After the 7 weeks of discussions, participants had the option to spend ~10 hours over 4 weeks doing a self-directed capstone project.

- Some of the projects that were submitted, and for which we gained consent to share publicly, can be found here.

- A further resources document was created to help guide participants in developing their ML skills and to support their further engagement with the AI safety community.
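The cohort-creation step described above (ranking participants, then grouping well-matched people who can meet at the same time) can be sketched roughly as follows. This is not Thomas Woodside’s scheduling algorithm, which is not described in this post. It is a simplified greedy illustration, and the `Participant` fields, the single combined `score`, and the `build_cohorts` function are all hypothetical names introduced for this example.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Participant:
    name: str
    score: float           # hypothetical combined estimate of AIS knowledge, ML expertise and career level
    slots: frozenset[str]  # time slots this person said they could attend


def build_cohorts(participants: list[Participant], size: int = 5) -> list[dict]:
    """Greedy sketch: visit people in score order and place each one in the
    first non-full cohort that still shares a time slot with them."""
    cohorts: list[dict] = []
    for p in sorted(participants, key=lambda x: x.score, reverse=True):
        for cohort in cohorts:
            common = cohort["slots"] & p.slots
            if len(cohort["members"]) < size and common:
                cohort["members"].append(p)
                cohort["slots"] = common  # keep only slots that work for everyone
                break
        else:
            # No existing cohort fits, so start a new one.
            cohorts.append({"members": [p], "slots": set(p.slots)})
    return cohorts


# Hypothetical example data, with cohorts of 2 to keep it short.
people = [
    Participant("A", 9, frozenset({"Mon", "Wed"})),
    Participant("B", 8, frozenset({"Mon"})),
    Participant("C", 7, frozenset({"Tue"})),
    Participant("D", 3, frozenset({"Mon", "Tue"})),
    Participant("E", 2, frozenset({"Tue"})),
]
cohorts = build_cohorts(people, size=2)
for cohort in cohorts:
    print([m.name for m in cohort["members"]], sorted(cohort["slots"]))
```

Because people are visited in score order, neighbours in the ranking tend to end up together, but a greedy pass like this can leave small leftover cohorts. A real scheduler would likely need a rebalancing step and a way to assign facilitators.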

Participant feedback

We had 268 applicants, of whom 168 were accepted onto the programme. Around 120 participants (71%) attended 75%+ of the discussions, and 83 participants filled in the post-programme feedback form (as of mid-November).
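As a quick check on the rates implied by these figures, the short sketch below recomputes the attendance rate and the feedback-form response rate, both relative to the number of accepted participants. The `share` helper is a hypothetical name, and "around 120" is treated as exactly 120 for the purposes of the calculation.

```python
# Figures taken from the paragraph above.
accepted = 168
regular_attendees = 120   # "around 120" attended 75%+ of the discussions
survey_respondents = 83   # feedback forms received as of mid-November


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


attendance_rate = share(regular_attendees, accepted)
response_rate = share(survey_respondents, accepted)
print(f"Attended 75%+ of discussions: {attendance_rate}%")
print(f"Filled in the feedback form: {response_rate}%")
```

Roughly half of the accepted participants filled in the form, which is worth keeping in mind when reading the percentages in the feedback sections.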

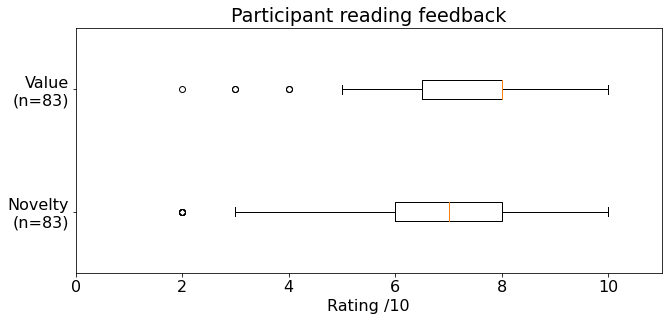

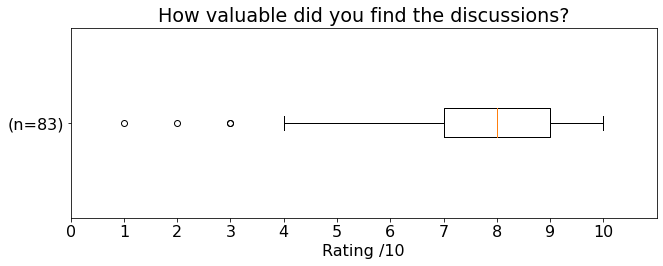

Below is a breakdown of the feedback we received from participants, with some accompanying graphs. Tables with the statistics from the feedback forms are provided in the appendix.

Feedback

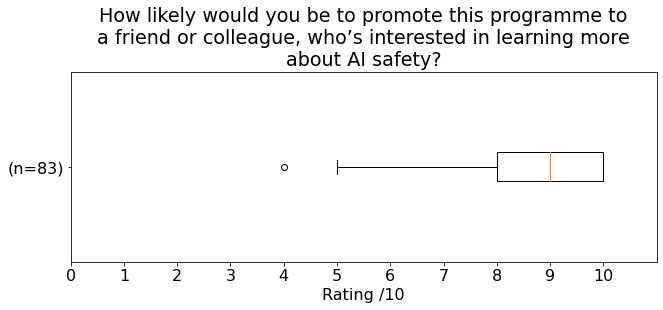

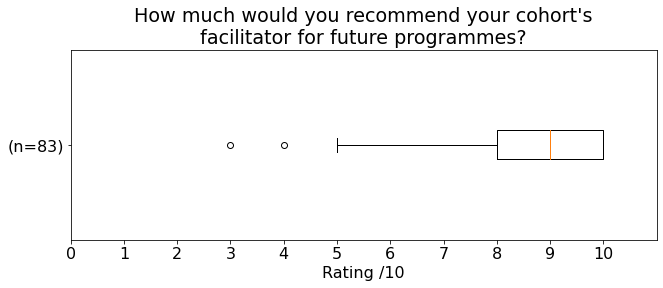

- Participant rating on the programme (e.g. their likelihood of promoting the programme to others) and their rating of their facilitator:

- Curriculum

- Participants regularly mentioned that having the curated readings provided a valuable high-level overview of and a comprehensive introduction to the AGI safety landscape, structured in a way that makes sense to go through and that builds upon prior knowledge.

- A few participants noted that the readings felt very “philosophical” or abstract, and that it could benefit from a sprinkling of more current ML issues, to better ground the discussions in today’s reality.

- Discussions

- Having an accountability mechanism via the weekly discussions to read through and reflect upon many AIS readings was a significant source of value for many participants.

- The sessions created a space where these issues were taken seriously and not treated as sci-fi. Some participants mentioned that this helped them feel like this was a real concern, and that it was valuable to develop relationships with others in the community for this reason.

- Facilitators were able to provide additional context on the readings and the AIS community, and the discussions also served to give participants an opportunity to begin to resolve confusions or uncertainties, or to dig into cruxes with other people who had a similar background understanding.

- One suggestion on how to improve the discussion was to intentionally try to get participants to form explicit takes on things, i.e. breaking down from first principles why or why not someone would have X opinion on Y, thereby attempting to separate knowledge from opinions, have better disagreements, and further improve their understanding.

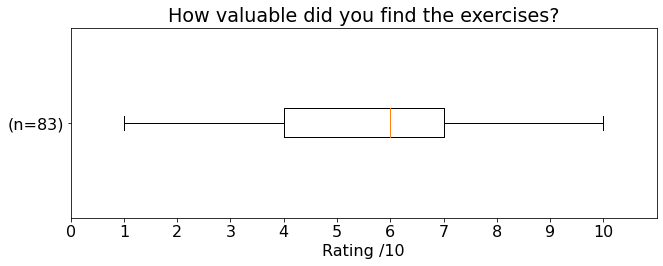

- Exercises

- These largely consisted of longer questions for participants to consider/reflect upon during or after they completed the readings.

- Generally, participants didn’t find the exercises particularly useful, or didn’t end up doing them as they weren’t made relevant for the discussions, i.e. facilitators didn’t explicitly ask about them, so participants didn’t feel compelled to do them.

- Some participants did note that they found the exercises very helpful, but these were a minority.

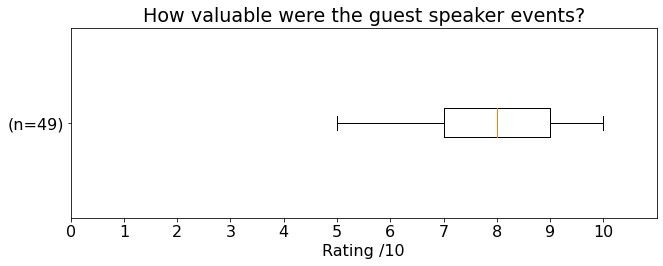

- Speaker events

- Participants in general found the speaker events valuable, though attendance wasn’t high (around 30 on average).

- They were recorded for participants who couldn’t attend.

- The main reasons noted for not attending were that the speaker event time was not suitable for their time zone, or that the event was not advertised far enough in advance.

- One participant noted that the speaker events made it clear how few women presently work in AI safety, and that we could improve upon this in the next round by including more female researchers as speakers.

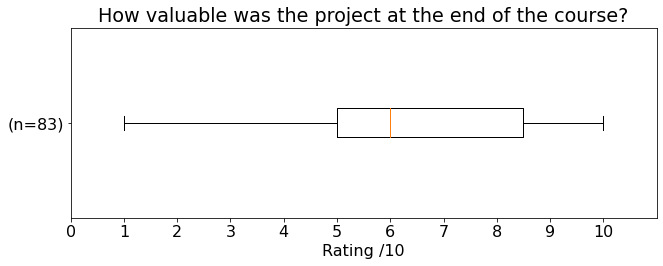

- Capstone projects

- Creating the environment and accountability for participants to do their own research or project for 4 weeks was frequently regarded as a very valuable aspect of this programme, and the most valuable aspect of the capstone projects portion of the programme.

- Some of the projects constituted work that was going to be completed regardless, though participants noted that the programme provided valuable accountability to complete the project and gather feedback, and that the project was improved by what they learnt throughout the programme.

- Many participants felt like they needed more time to do their project, or more support from their cohort/facilitator/experts.

- Many also wanted to work on a project with a collaborator.

- Some appreciated the chance to develop more ML skills, and felt proud of what they could achieve over a short period of time.

- Most of the negative ratings for this question in the feedback form were from participants who hadn’t actually completed a project for a variety of reasons.

- Remaining confusions

- Participants often mentioned they still felt confused about mesa optimisers, iterated amplification and debate, and inner and outer alignment.

- Desired career support, or suggestions on what resources to create

- Introductions to experts or mentors.

- Getting more profs to be interested in AI safety.

- Social encouragement and connections.

- Funding for safety research.

- Career strategy (e.g. PhD vs top ML lab).

- Keep track of open technical questions in AI safety.

- More concrete project suggestions, that participants from a range of backgrounds can take on.

- More work, content and opportunities on AI safety strategy, e.g. when will TAI come, who do we need to solve the problem, how do we get those people into optimal positions, optimal timings, etc.

- Content to send to experienced ML researchers on the risks from AI.

- Turn this programme into a degree course/module.

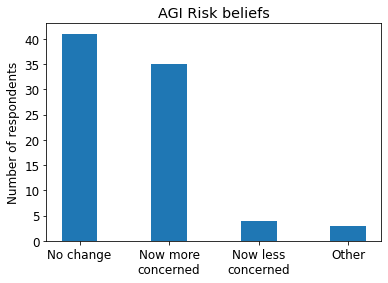

- How did people’s risk perception of future AI systems change during the programme?

- 5% were now less concerned about AI risks.

- They generally felt like they had a better understanding of what’s going on, but also now appreciated the amount of disagreement between researchers.

- 49% experienced no change in risk perception.

- 42% were now more concerned about AI risks.

- Some of the reasons expressed:

- Better understanding of the many different threat models.

- Few concrete paths to safety, current work seems intractable.

- Appreciating how few people are actually working on this.

- 4% were unsure.

- How comfortable were participants in contributing to the weekly discussions?

- ~83% of participants (who filled in the post-programme feedback form) felt comfortable contributing to the discussions.

- ~11% felt they could “kind of” contribute, and ~2% felt they couldn’t contribute.

- Some of the reasons expressed included not feeling as knowledgeable as their peers, not having strong opinions on the week’s topic, feeling more junior than the others in their cohort, and experiencing a language barrier.

- Some next steps from participants (we’ll be collecting this data more systematically in the future, now that we have a sense of the range of possible options).

- Think more about whether AIS is a good fit / the most important thing for them to do.

- Apply to PhD programmes, jobs or internships relevant to AIS or governance.

- Apply to grants to do independent AIS research.

- Continue to learn about and discuss AIS.

- Cheap tests in different facets of AIS work.

- Try to direct their current research in a more safety-oriented way.

- Work on improving their ML skills.

- Get more involved with the AIS community.

- Advice for future participants.

- Being confused is very normal!

- Ask as many questions as you can, both on the Slack workspace and in your weekly cohort discussions.

- Don’t rush the readings just before your session; read them a few days beforehand to have plenty of time to reflect on the ideas and ask questions prior to the session.

- Take notes while doing the readings, and refer back to them later to consolidate understanding.

- Start thinking about what you might do a project on early in the programme.

- Get as engaged as you can, including reading the further readings, attending the socials, and using and chatting on the Slack workspace.

Demographics / other

The proportions below are from the participant post-programme survey, and responses are not mandatory, so this is potentially not representative of all participants/applicants. We’ve added (optional) demographics questions to the application form for the next round, to better capture this data.

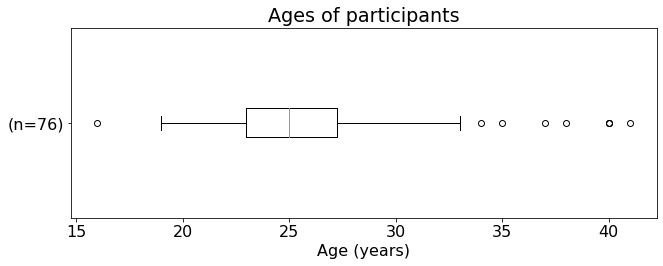

- Participant ages

- The median age of the participants was 24, though the youngest was 16 and the oldest was 41.

- The majority of participants were in their mid-20s.

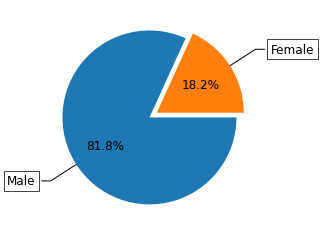

- Gender ratio

- 82% male:18% female:0% non-binary (82:18:0).

- This is roughly the same gender ratio as comp-sci graduates in the US (~82:18), more details here.

- However, this is worse than the gender diversity of Cambridge acceptances into the computer science course (page 12, 76:24), and worse than the EA Community (2019 EA Survey, 71:27:2)

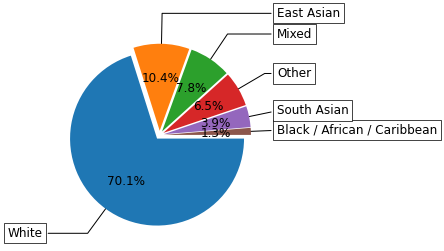

- Ethnicity

- 70% White; 11% East Asian; 8% Mixed Ethnicity; 4% South Asian; 1% Black - African/Caribbean/Other; 7% Other

- This is slightly more ethnically diverse than the wider EA community, again according to the 2019 EA Survey

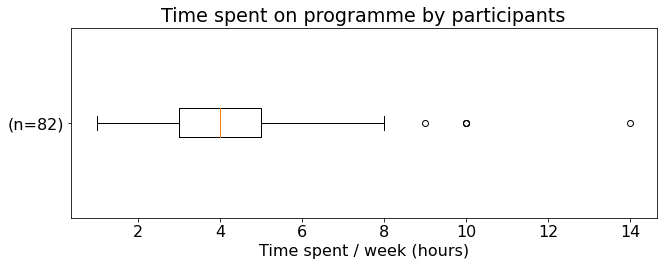

- Time commitment

- Participants put in on average 4.8 hours per week into the programme, including attending the discussions and completing the readings

Selected quotes from participants

Below is a list of hand-picked (anonymised) quotes from the participant feedback forms. They were selected to represent a range of views the participants expressed in the feedback forms, and to provide more qualitative information to readers on how the programme went from the participants’ perspective.

- Curriculum

- Having a concrete curriculum of readings was really helpful because it helped guide me. Normally I might have just ambled around the internet without ever reading anything of real substance, just superficial things.

- Feeling like I can better orient the technical AI literature now and having a good understanding of some core terms and techniques; counterfactually, I would likely have spent another year or two occasionally reading tech AI papers, while being less good at prioritizing what I should read and less good at absorbing what I am reading.

- This helped me build confidence to start participating/writing, whereas I'd otherwise be much more eager to follow *every* link to *every* paper/post I encountered for fear of missing some previously established argument against the thing I'm doing.

- The course provided a nice overview of the major ideas in AI Safety, but I feel like I still don't have enough knowledge to form intelligent takes on some things. Some of the content (i.e. Governance of AI agenda) didn't seem to be conveying actual knowledge but more so repeating intuitions/open questions?

- Discussions

- It showed me the value of reading and reflecting on AI safety every week, rather than it being something I occasionally had time for but never sustained. I'm trying to carve out 4-8 hours per week to read, reflect, write, and get feedback. If I'm successful in that, I expect to converge more quickly on an AI safety career with optimal fit for my skills.

- Projects

- Perhaps it would be useful to do the project in multiple stages. E.g. drafts due or/and group discussion of drafts after a few weeks, and then final projects due after a few more weeks with presentations of final projects.

- I think the biggest counterfactual will be having this final project paper about the ability to use AIs to help us with AI safety. It's work that I'm proud of and I hope to share with other researchers. Makes me far more likely to engage with safety researchers because I feel like I know something that few people know and may be useful to them in their future projects.

- It would have immensely helped me to be told “Think of a project and let's have a 15 min chat next week about what your idea is, and your coordinator tells you that it is nice and gives feedback on scope and possible problems / alternatives.”

- Community

- Though the curriculum was really good, probably the largest benefit was in being part of a community (on Slack, in the individual groups, and elsewhere). It's really valuable to be able to discuss topics, share opportunities and novel AI safety material

- Career

- I became more certain that strict AI safety is not the career path for me. At least not now? I've been playing with the idea of it more-or-less seriously for 5 years now and it doesn't seem like my interest areas align enough with strict AI work. I feel much more certain about that now. I'm still interested in general and getting to do the readings more for fun and to know how the field is developing sounds like a better path to take for me.

- I had intended to leave my job, to spend more time on AI Safety, sometime around January of 2022, but having that regularly scheduled time to think about how I want to engage with AI Safety made it much easier to get moving on planning the exit from my job.

- I updated towards it being less difficult to jump into than I originally thought.

- If I hadn't done this program I would probably continue to wonder whether I should do AI Safety work, and struggle to catch up with current discourse in Alignment. Now I feel caught up, and I have an idea for a plausible research project to pursue.

- Met a couple people who gave me career advice (through the Donut conversations and through my facilitator)

- I found a few points to dig deeper into AI safety and maybe integrate it in my ongoing Master's studies. Maybe I will even be able to find a supervisor for a Master's thesis in this field. I suppose that without participating in the programme I would have gone on the lookout for a topic for my Master's thesis without real insights into the field of AI safety and therefore probably wouldn't have been able to include this field in my studies.

- Uncertainties resolved

- Definitely impressed by how much AI Safety research and methods there are already, but on the other hand we still don't know much; before my state of mind was more like "we have no idea what we're doing.” Specifically with deceptive AI and mesa optimizers I previously thought "Oh, that's just all fancy words for distribution shift" while I think it has a point now. Still confused though

- I am less optimistic about the sufficiency of so-called single-single alignment than I was, and more concerned about society-scale and incentive-structure issues.

- I think the Paul Christiano talk made me realise that it's possible to decompose the AI safety problem into many different subproblems, and in a sense there is much more complexity than I expected!

- My general understanding of what AGI Safety could look like has improved to the point that I feel more comfortable talking with strangers about it and explaining some of the approaches we discussed in this program

- One point that stuck with me is the inherent trade-off between designing AIs whose behavior corresponds to our expectations and designing AIs that find novel and creative solutions to posed problems. Before, I either wasn't clearly aware of this tension or expected that both could be achieved in parallel. Now, I'm less convinced.

- I ended up really believing AI safety is not only an important, but also a very difficult problem

- Some new conclusions: (1) The AGI Safety community is too insular and needs more interdisciplinary dialogue to keep its conclusions grounded. (2) The AGI Safety community attracts highly left-brained people, with a few notable exceptions. This biases the topics considered most important. (3) AGI Safety is split between very long-term (abstract) and very short-term (implementation-specific e.g. NN interpretability) approaches, and is missing mid-term considerations about emerging AI technologies like analog light networks, Spiking neural networks, neuromorphic computing, etc.

- How hard it is to come up with working definitions of intelligence in an area (harder than expected). How little work has been done in certain AI gov and safety areas.

- Before I joined this course, I didn't know what we could do in practice to prevent the risks brought by AGI. Now I know much research we can do even if we are not able to construct AGI now.

- Counterfactual impact / random

- Moved my view of AI safety away from hard-to-take-seriously terminator scenarios to a real research program.

- I would not have read the texts in as much depth, and I would not have written the blog post. A lot of concepts snapped into place, not sure if that would have happened without the programme. Also, I would not have reflected on my career in the same way.

- Without having done this course, I don't think I would have known where to start with either a) Building a mental model of the field of AGI Safety, or b) Approaching material I don't understand in e.g. the Alignment Newsletter. I think that this course, having given me a surface-level understanding of most of the topics I encounter in the alignment forum and the alignment newsletter, has helped me no longer feel overawed.

- I would probably not have taken AI safety seriously at all & I wouldn't have updated on the importance of doing OpenAI-style safety research, as opposed to MIRI style.

- I think I would have tried doing things in the same general direction, but the course enabled me to meet others and get a lot of resources that have greatly multiplied my exposure and knowledge above the "go it alone" baseline.

- In the short term, I probably would've just had a bit of extra downtime over the past few months. In the longer term, the course got me to read things I'd been planning to read for several years, and helped me discover other readings I just missed. I probably would've read most of the materials eventually, but it front-loaded especially valuable readings by months or years.

- I spent 85 hours in total. I read 100% of core readings and 90% of optional readings. I made summaries of all the texts. I went to most guest talks and several socials/one-on-ones. I spent 8 hours on the project.

Facilitator feedback

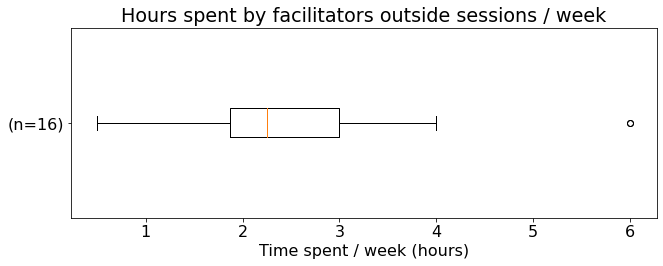

There were 28 facilitators, 5 of whom facilitated 2 cohorts. We had 3 policy/governance-focused facilitators, while the rest were focused on technical AI safety research. 15 facilitators filled in the post-programme feedback form.

- Facilitator programme rating

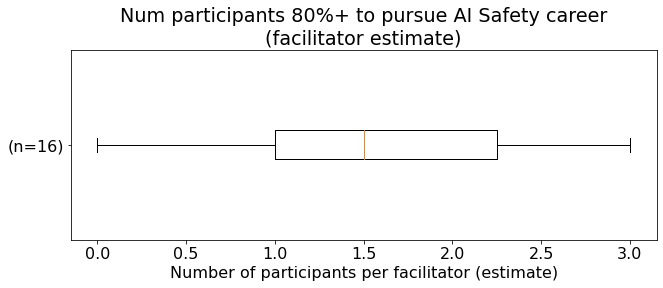

- How many participants from their cohort did each facilitator estimate were 80%+ likely to pursue an AIS career?

- What facilitators thought the main source of value was for participants

- Regular discussions to help clarify understanding

- Getting an overview of the field that is curated by experts

- Cohort creation

- Facilitators generally thought it was important to match participants’ expertise

- Where did you diverge from the guide, that seemed really fruitful? What’s your secret sauce?

- Asking participants to read the prompts and generate their own at the start for like 10 mins, and then upvote the ones they were excited about.

- Have a shared google doc to plan discussion, share questions and comments, etc.

- Ask participants to select a week and present their summary of the readings for that week, and then chair that discussion

- Advice to future facilitators

- Socialise at the start of sessions.

- Ask participants what they want to get out of the session.

- Become very familiar with the AI safety blog posts and articles.

- If the discussion moves onto a tangent, bring it back on track.

- See the main goal of facilitation as “participants are forming thoughts, and exploring ideas, and learning. My job is to guide that in a good direction, and help them form valuable + coherent thoughts.”

- Main source of personal gain

- Very enjoyable and rewarding experience (and relatively low time / week commitment).

- Learnt a lot of new things, deeper understanding of the AIS literature.

- Contributed to AIS field-building (75% of facilitators felt 1+ members of their cohort were likely to spend their career pursuing AI Safety).

- Prior expertise or experience

- Rough ML categories used for evaluation purposes (these were not robust categories; our new application form is much more explicit).

- 11 “compsci undergrad”

- 13 “ML skills no PhD”

- 3 “ML PhD”

- 1 “ML pro”

- Facilitators had a wide range of prior facilitating experience (from none to a lot).

- All facilitators had prior experience in learning and reading about AIS, though to varying degrees.

Mistakes we made

This is a long list of mistakes we (or specifically I, Dewi) made while organising this programme. I expect this to only be useful to individuals or organisations who are also considering running these types of programmes.

- Not having a proper team established to run this

- While I was able to delegate a significant number of tasks in running this programme, I believe many of the mistakes listed below would have been alleviated had I built a proper team (consisting either of EA Cambridge members, or programme facilitators or participants) that had concrete responsibilities, e.g. running socials, speaker events, managing the rescheduling system, etc.

- This will be fixed for the next round! And if you’d like to join said team, DM me on the EA Forum or via my email (dewi AT eacambridge DOT org).

- Application form not having drop down options for easier evaluation

- It had vague “open ended” questions, like “what would you like to learn about AI safety.”

- Some people provided loads of information, some people provided 1 sentence answers.

- This made understanding people’s backgrounds quite challenging and time consuming, and led to inconsistent admission decisions. This likely led to us rejecting some good candidates who were terse in their applications.

- We’ve now significantly improved the application forms, which should make candidate evaluation faster and more consistent.

- LettuceMeet disaster for people outside of BST time zone.

- Further details in the appendix.

- Not enough socials

- We only had 2 socials, around half-way through the programme. They weren’t particularly well-attended (~10 each), though those who did attend found them to be very valuable, and they weren’t hard to organise.

- We used Gatheround, and used the discussion prompts from the curriculum as well as standard icebreaker prompts.

- Meanwhile, many participants reflected that creating more connections was a significant source of value, suggesting that having more socials, and perhaps promoting them more and encouraging participants to attend, would have been beneficial.

- We could probably schedule in significantly more socials for next time, and potentially even delegate the organising and running of them (especially ones during Oceania times etc.) to particularly keen facilitators or participants.

- Capstone projects promotion

- We only publicised all the details on this aspect of the programme properly around week 6, as we were uncertain what it would look like or how to structure it.

- We now have much more information on the capstone project already, and will be able to provide this from the beginning of the programme.

- Speaker events

- Some speaker events weren’t advertised long enough in advance.

- We also didn’t use speaker view in Zoom initially (we were using “gallery view”)

- Using speaker view makes it much clearer who to focus on and gives the speaker “centre stage.”

- The “theory of change in research” talk took place after people had largely completed their 4-week capstone projects.

- Michael Aird hosted an excellent “theory of change in research” talk, though we ended up having this towards the end of the capstone project section of the programme, as opposed to before the participants started their projects, or just as they started.

- This was purely due to organising this event entering my ugh field.

- Learning about the concept of theory of change, and then immediately implementing it while completing a project, is much more likely to embed the theory of change process in people’s minds, and is likely to contribute to more impactful projects.

- A few cohorts seemed to whittle away to nothing, while others thrived.

- We want to further improve our facilitator vetting and/or facilitator training, and improve cohort matching.

- Our improved application forms will hopefully do a lot of this work, as the questions are far more explicit regarding prior experience.

- Having pre-designated “backup facilitators” could also be valuable for ensuring that a few people are already committed to stepping in if another facilitator’s availability reduces throughout the programme.

- Better facilitator training should increase the likelihood that cohorts have lively, productive and enjoyable discussions.

- Having to reschedule also caused significant friction for some cohorts, especially those where participants were from very different time zones.

- We are hoping to improve this by creating better and more robust cohort scheduling software within Airtable, and to make it easier for participants to switch cohort for a week if their availability randomly changes for that week (instead of participants just missing a week, or DM’ing me which is fairly high-friction, or the entire cohort having to try to reschedule for a week).

- While we had a drop-out form, and sent it in an email to participants after week 1, I think we could have taken more action on this, been more intentional when participants missed more than one session, and been more proactive in disbanding and recreating cohorts in line with extra information on the participants.

- For example, if a cohort turned out to be badly matched, we’ll now try to relocate participants into more appropriate cohorts, and if a particular cohort’s attendance becomes very low, then remaining participants will be more often given the option to relocate to another cohort, and we’ll work with the facilitator to evaluate what happened and see what would work best for them (i.e. whether they’d be keen to join an advanced cohort as a participant, or something else).

- We could also improve the onboarding into the programme.

- This could entail an opening event, where the programme is introduced, participants can ask questions or resolve any uncertainties they have, and they’re provided with an opportunity to socialise with participants from other cohorts.

- This might help create a stronger sense of belonging within the programme, or a feeling like “this is a real thing that other people who I’ve now actually met are also participating in, and it’s awesome!”

- Donut having severe limitations.

- We had ~200 people on the programme in total (including participants and facilitators), but on the free plan Donut only allows 24 people in the relevant Slack channel to be matched with another person for a 1-1.

- The pro plan is exorbitantly expensive ($479/month for 199 users).

- Perhaps we should have just been willing to spend this money, but it feels ridiculously expensive for what it is.

- I’m keen to explore whether using Meetsy or RandomCoffees would be a suitable and better alternative to Donut.

Other Improvements

Some of these are concrete ideas that we’ll be doing to improve the programme, while some are more vague ideas. As before, feedback on everything is very welcome.

- Discussion timings and location

- Make the discussion length more flexible (anywhere from 60 to 90 minutes), while keeping 90 minutes as the default duration.

- Some participants noted that they wanted to talk for many hours, while some noted they felt too tired after 1 hour or ran out of things to say.

- We’ll suggest to facilitators that they should aim to be flexible given the needs of their cohort.

- Emphasizing that cohorts should take a 5-10 minute break half-way through, especially if it’s a 1.5-hour discussion.

- 90 minutes of dense intellectual discussion can be tiring, and bathroom and water breaks are always helpful!

- Regional in-person meetups (when & where covid is not an important consideration).

- We ask about this in our current application forms, and hope to create local cohorts where possible.

- This will also help seed local AIS communities.

- Framing

- Make it clear that it’s unlikely that a career in technical AI safety research, or just in AI safety more broadly, is the best fit for all participants in the programme, especially perhaps at this moment in time given the limited job opportunities.

- This could be done by emphasizing that upskilling in ML research or engineering is a valuable early-career path if they can’t currently get an “AI safety job.”

- To encourage them to use this programme to inform their cause prioritisation and beliefs regarding how they could have the greatest marginal impact with their career.

- It’s plausible to me that many of the participants in this programme could have a greater impact working on other problem areas, especially those that seem more tractable (again, at least at the moment).

- I’m also very keen for more people to proactively think through their cause prioritisation and to develop their own rough models about different global problems, and not to defer to the “EA community consensus” whatever that is.

- Careers fair

- The programme felt like it kind of ebbed away, as opposed to having a definite ending point.

- To improve this, I’m hoping to organise a careers fair with AI safety orgs, career planning organisations, etc., where participants can hear short presentations or talks highlighting what these orgs are up to and what they’re currently looking for in terms of recruiting new people.

- Capstone projects

- Capstone project showcase

- Given the quality and number of participants who spent a significant amount of time on their capstone projects, it would be great to have an opportunity for them to showcase their work via a short presentation or equivalent to other participants, and to have a short Q&A.

- Exact details on what this might look like will depend upon how many participants in the next round are excited about this opportunity.

- Capstone project collaboration

- We may be collaborating with established AIS orgs to provide a small amount of mentorship to some of the capstone projects.

- Participants will also have the opportunity to work in teams on their capstone projects, instead of doing the projects individually.

- This pushes more in the direction of what AI Safety Camp does.

- While the last round included 4 weeks of capstone projects, the coming round will be more flexible if participants want to do longer-term research.

- If the projects seem promising, we may be able to secure funding to partially support participants to do this work, or we may just encourage them to apply for funding from the Long-Term Future Fund.

- More regular check-in meetings.

- Given that accountability is an important source of value for the capstone projects, participants will be provided with more opportunities to have check-ins with and receive feedback from other participants.

- Facilitators guide

- We’ll be encouraging facilitators to think really hard about who is in their cohort and what are their needs, interests and skills, and then use this information to guide how they facilitate discussions, when to encourage someone to contribute their expertise during the discussions, what opportunities to highlight to them, to introduce them to relevant people in the community, etc.

- Relatedly, suggest that facilitators have a 1-1 with each of their participants early on (e.g. before or during week 1).

- Have a shared document with the discussion prompts, where participants can add confusions, uncertainties, thoughts etc. beforehand.

- Potentially assign one person to summarise each of the week’s readings, via a short informal-ish presentation.

- Some kind of post-programme survey evaluating people’s understanding of different concepts.

- Airtable infrastructure

- Automated scheduling within Airtable, instead of using LettuceMeet or when2meet (this is still being developed).

- Automated emails when facilitators don’t fill in the post-session forms.

- Automated emails to participants when they miss a session with a link to cohorts later in the week that are a good match for them.

Uncertainties

Below is a non-exhaustive list of uncertainties we have regarding the future of this programme.

- Go big and get loads of people vs focus on what we evaluate to be the “most promising” applicants re their potential to contribute to future AI safety research

- Can we actually determine this accurately?

- Are there other benefits from significantly more people (potentially 1,000+ each year) having a deeper understanding of AI safety arguments?

- Or should there be a separate programme that more explicitly tries to weigh up the arguments for and against AI safety being important / relevant, as opposed to just introducing the broad arguments in favour?

- Local vs global

- Local discussions are probably better in terms of fidelity and establishing a local community.

- However, there won’t be a critical mass of interested applicants and talented facilitators in most of the world’s cities.

- Even if a cohort could be created in a specific region, the cohort will probably be less “well-matched” regarding prior AI safety understanding and ML expertise.

- Capstone project

- Should we create an expectation that everyone does this, given the accountability this provides people, or make it optional because it won’t be valuable to many participants?

- Is 4 weeks long enough? Should we make this more flexible, and offer payment to participants if they’re particularly ambitious about their projects?

- Connections and socials

- How do we create more connections between the participants?

- Should we swap people around into new cohorts half-way through? (I’m leaning quite heavily towards no, but am open to it).

- This would mean each cohort has to rebuild group rapport, and the scheduling could be a nightmare, but it would also plausibly double the number of connections made.

- Relatedly, does the “quality of connections made” experience diminishing returns with each session (implying that swapping might be beneficial in the “maximising valuable connections” metric), or is the quality of connections over time more linear, or even exponential (implying cohorts should stay together throughout the programme)?

Appendix

Participant feedback table

| Ratings from participants | Rating (out of 10) | Standard deviation |
| --- | --- | --- |
| Promoter Score* | 8.51 | 1.30 |
| Facilitator | 8.41 | 1.59 |
| Discussions | 7.80 | 2.06 |
| Readings | 7.48 | 1.71 |
| Exercises | 5.74 | 1.98 |
| Capstone project | 6.55 | 2.52 |
| Speaker events | 7.76 | 1.32 |

*Promoter score question: How likely would you be to promote this programme to a friend or colleague, who’s interested in learning more about AI safety? 1 = Not at all likely, 10 = Extremely likely.

| Other stats | Average | Standard deviation |
| --- | --- | --- |
| Reading novelty (/10) | 6.89 | 2.04 |
| Prior likelihood of pursuing an AIS career | 57.5% | 25.7% |
| Current likelihood of pursuing an AIS career | 68.0% | 22.3% |
| Hours spent per week total | 4.8 | 3.1 |

Facilitator feedback table

Mean | Standard deviation | |

Promoter score* | 8.6 | 1.02 |

Facilitator hours spent per week outside sessions | 2.6 | 1.7 |

Estimate of # of participants 80%+ likely to pursue an AIS career | 1.5 | 1.0 |

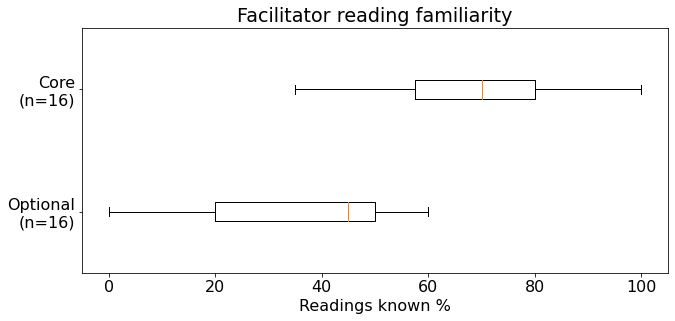

Percentage of core readings they’d done before the programme | 68% | 17% |

Percentage of further readings they’d done before the programme | 38% | 18% |

Cohort Scheduling

- To aid with the cohort creation process, participants were put into groupings of 15 according to their ranking.

- Each group of 15 was sent a unique LettuceMeet link, alongside 3 similarly-ranked facilitators, and Thomas Woodside created a scheduling algorithm that scraped the LettuceMeet data and found 3 times that worked for 3 unique sets of 5 participants + facilitator, thereby creating each cohort.

- Other options would have been to send out one LettuceMeet to everyone, but that would have been really messy, or to create each cohort beforehand and leave them to find their own time where they’re all free, though this likely wouldn’t have worked due to the many different time zones and availabilities.

- LettuceMeet turned out to be really annoying; I would generally urge against using it.

- LettuceMeet just didn’t work for anyone in India (UTC+5:30, i.e. 4.5 hours ahead of BST).

- Instead of showing 24 hours per day within which they’d note their availability, it only displayed 1 hour.

- When we discovered this, we resolved it by sending these participants a when2meet and then I manually added their availabilities into the appropriate LettuceMeet.

- The form had specific dates against each day of the week, which corresponded to the first week.

- Some people thought that the availability they were including in the LettuceMeet was their availability just for week 1, and not their recurring availability throughout the programme. I did try to communicate this, but was still misunderstood by many, which indicates a comms failure on my part.

- The way LettuceMeet presents the available times in time zones other than the one in which it was created (in this case BST) is a mess and caused significant confusion re which day participants were filling in their time for. It was also very confusing to explain this situation to participants, but basically:

- Each column has a header that corresponds to a certain date / day (e.g. Monday 29 Nov).

- Each row corresponds with an hour in the local time, but the first row is whatever local time corresponded with 12am BST.

- E.g. for people in California, the first row showed Sunday 4pm PT, corresponding to Monday 12am BST (the time I selected as the first timing in the LettuceMeet).

- When the times went from 11:59pm to 12:01am, it wasn’t clear whether it went to the next day (but under the same column as the previous day) or looped back on itself to earlier in the day.

- E.g. in California, going down the rows in the first column (labelled Sunday), it actually went from Sunday 4pm to 4pm Monday, but without making it clear that the times from 12am onwards were actually for Monday, not looping back to represent earlier times on Sunday.

- Next steps for cohort scheduling

- We’re working with Adam Krivka to create a new cohort scheduling system in Airtable, and EA Virtual Programs are working with OpenTent to build a solution in Salesforce.

- The main issues as I see them are:

- Having an intuitive and easy-to-use interface for participants to submit their weekly availability, that works across all timezones.

- If a third-party app is used (e.g. when2meet), how to scrape its data so that we can use it.

- Processing the relevant information (weekly availability, prior expertise, prior knowledge, career level, etc.) and turning this into 10s or 100s of well-matched cohorts with times that all participants can regularly make.

- Being flexible enough such that the system doesn’t fail when people’s availabilities inevitably change during the programme.
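To make the issues above a little more concrete, here is a minimal Python sketch of one way availability could be stored and cohorts formed. It is not the algorithm Thomas Woodside wrote, nor the Airtable or Salesforce systems being built: the function names (`to_utc_slot`, `form_cohorts`), the half-hour slot representation and the greedy matching rule are all assumptions made for illustration. Availability is converted to half-hour slots in UTC, so that offsets like India's UTC+5:30 are represented exactly and the day a slot belongs to is never ambiguous.

```python
MINUTES_PER_WEEK = 7 * 24 * 60


def to_utc_slot(day, hour, minute, utc_offset_minutes):
    """Map a recurring local time to a half-hour slot index in UTC.

    day: 0 = Monday ... 6 = Sunday (local day).
    utc_offset_minutes: local offset from UTC, e.g. 60 for BST, 330 for India.
    Returns an integer in range(336); slot 0 is Monday 00:00 UTC.
    """
    local_minutes = day * 24 * 60 + hour * 60 + minute
    # Subtract the offset and wrap around the week, so Monday 00:00 BST
    # becomes Sunday 23:00 UTC rather than a negative time.
    utc_minutes = (local_minutes - utc_offset_minutes) % MINUTES_PER_WEEK
    return utc_minutes // 30


def form_cohorts(participants, facilitators, size=5):
    """Greedy matching (an assumed heuristic, not the programme's method).

    participants, facilitators: dicts mapping a name to a set of UTC slots.
    For each facilitator, pick the slot at which the most unassigned
    participants are free, and take up to `size` of them.
    Returns (list of cohorts, sorted list of unmatched participant names).
    """
    unassigned = dict(participants)
    cohorts = []
    for facilitator, fac_slots in facilitators.items():
        best_slot, best_members = None, []
        for slot in sorted(fac_slots):
            free = sorted(n for n, s in unassigned.items() if slot in s)[:size]
            if len(free) > len(best_members):
                best_slot, best_members = slot, free
        if best_members:
            cohorts.append({
                "facilitator": facilitator,
                "utc_slot": best_slot,
                "members": best_members,
            })
            for name in best_members:
                del unassigned[name]
    return cohorts, sorted(unassigned)


if __name__ == "__main__":
    # Monday 12am BST, Sunday 4pm in California (PDT, UTC-7) and
    # Monday 4:30am in India (UTC+5:30) are the same moment in the week.
    print(to_utc_slot(0, 0, 0, 60))     # 334
    print(to_utc_slot(6, 16, 0, -420))  # 334
    print(to_utc_slot(0, 4, 30, 330))   # 334
```

A real system would also need to weigh prior expertise, prior knowledge and career level when grouping people, and to re-run the matching when availabilities change; the sketch only covers the shared-time constraint.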

One of the key benefits I see from this program is in establishing a baseline of knowledge for EAs who are interested in AI safety which other programs can build upon.

I found reading this incredibly helpful, thank you for writing it up!

Also, I just wanted to flag that given that some time has passed since the post's publication, I found two hyperlinks that no longer work:

"5 speaker events" and "4 weeks doing a self-directed capstone project"

Thank you again!

I'm very grateful you wrote up this detailed report, complete with various numbers and with lists of uncertainties.

As you know, I've been thinking through some partly overlapping uncertainties for some programmes I'm involved in, so feel free to reach out if you want to talk anything over.