Epistemic status: Based mainly on my own experience and a couple of conversations with others learning about AI safety. It’s very likely that I’m overlooking some existing resources that address my concerns - feel free to add them in the comments.

I had an ‘eugh’ response to doing work in the AI safety space[1]. I understood the importance. I believed in the urgency. I wished I wanted to work on it. But I didn’t.

An intro AI safety course helped me unpack my hesitation. This post outlines what I found: three barriers to delving into the world of AI safety, and what could help address them.

Why read this post?

- If you’re similarly conflicted, this post might be validating and evoke the comforting, "Oh it’s not just me" feeling. It might also help you discern where your hesitation is coming from. Once you understand that, you can try to address it. Maybe, ‘I wish I wanted to work on AI safety’ just becomes, ‘I want to work on AI safety’.

- If you want to build the AI safety community, it could be helpful to understand how a newcomer, like myself, interprets the field (and what makes me less likely to get involved).

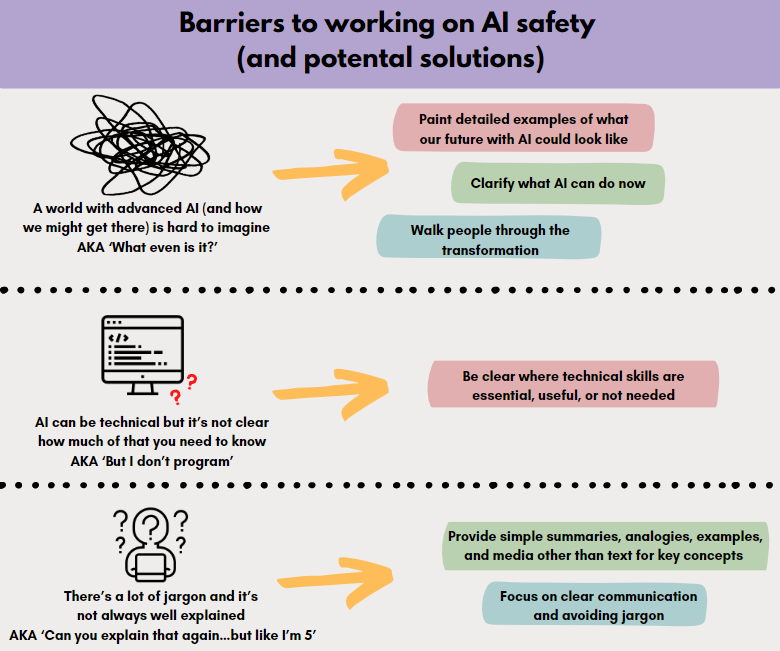

I’ll discuss three barriers (and their potential solutions):

- A world with advanced AI (and how we might get there) is hard to imagine: AKA "What even is it?"

- AI can be technical but it’s not clear how much of that you need to know: AKA "But I don’t program"

- There’s a lot of jargon and it’s not always well explained: AKA "Can you explain that again…but like I’m 5"

Jump to the end for a visual summary.

A world with advanced AI (and how we might get there) is hard to imagine: AKA "What even is it?"

A lot of intro AI explainers go like this:

- Here’s where we’re at with AI (cue chess, art, and ChatGPT examples)

- Here are a bunch of reasons why AI could (and probably will) become powerful

- I mean, really powerful

- And here’s how it could go wrong

What I don’t get from these explanations is an image of what it actually looks like to: 1) live in a world with advanced AI or 2) go from our current world to that one. Below I outline what I mean by those two points, why I think they’re important, and what could help.

What does it look like to live in a world with AI?

I can regurgitate examples of how advanced AI might be used in the future – maybe it’ll be our future CEOs, doctors, politicians, or artists. What I’m missing is the ability to imagine any of these things - to understand, concretely, how that might look. I can say things like, "AI might be the future policymakers", but have no idea how they would create or communicate policies, or how we would interact with them as policymakers.

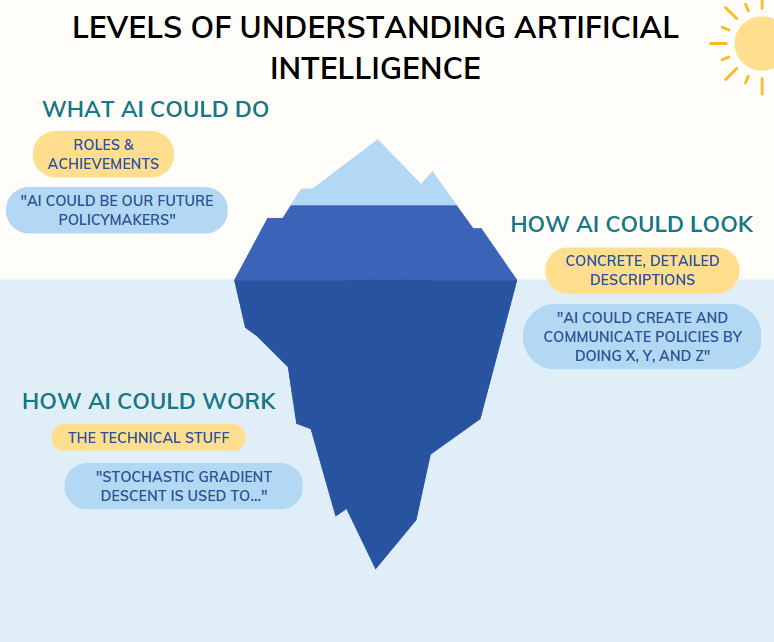

To flesh this out a bit, I imagine there are three levels of understanding here: 1) what AI could do (roles it might adopt, things it could achieve), 2) how that would actually look (concrete, detailed descriptions), and 3) how that would work (the technical stuff). A lot of content I’ve seen focuses on the first and third levels, but skips over the middle one. Here's a figure for the visually inclined:

Why is this important?

For me, having a very surface-level understanding of something stunts thought. Because I can’t imagine how it might look, I struggle to imagine other problems, possibilities, or solutions. Plus, big risks are already hard to imagine and hard to feel, which isn’t great for our judgement of those risks or our motivation to work on them.

What could help?

I imagine the go-to response to this is: "check out some fiction stories". I think that’s a great idea if your audience is willing to invest time in finding and reading them. But I think fleshed-out examples have a place beyond fiction.

If you’re introducing people to the idea of AI (e.g., you’re giving a presentation), spend an extra 30 seconds painting a picture. Don’t just say, “AI could be the future policymakers”. Tell them, “Imagine if you could enter some goal into a system like, ‘Get to net zero emissions by 2030’ and the system would spit back a step-by-step plan to get there”. Now people have a picture in their minds, rather than a statement[2].

It’s hard to paint a picture when no one knows what it will look like. But I tend to think that a guess (with the requisite caveats) is better than a throwaway line that no one really understands.

How do we go from our current world to that one?

AI feels very abstract. It feels even more abstract when you hear, “It will be unlike anything we have known”. One way to make it more concrete is by drawing links between the future and the present. Rather than just presenting the final outcome ("AI is going to transform society"), step people through how that transformation could come about.

Why is this important?

People learn by connecting new information to what they already know. We learn a second language via our first, gauge the significance of a natural disaster by comparing it to the last, and evaluate the performance of a company by comparing it to others in the same industry.

For me, connecting where we are now with AI (‘the known’) to where we could go (‘the new’) would a) make AI seem more real and b) deepen my understanding of what AI could be. As a result, I may be more motivated to work in this area, and feel more confident and capable when doing so.

What could help?

One key first step is clarifying what AI can already do. With the extensive publicity about various AI tools (e.g., ChatGPT, DALL·E), I think knowledge of AI capability is increasing. But I think it's still worthwhile to include examples of what AI can achieve now, or how it's being integrated into organisations[3]. Such examples can make the jump between where we are now and where we could go seem smaller, and can provide strong motivation to work on governance and policy now.

My second suggestion is to explicitly connect the present to the future. Compare these two examples:

Example 1:

In the future, your doctor could be an AI.

Example 2:

In the future, your doctor could be an AI. Here’s how it could happen:

- Your doctor’s clinic introduces a new computer program. The doctor enters patient symptoms into the program, and out spits a diagnosis. Think Google, but better.

- The program is continuously improving, so it becomes more accurate than the doctor.

- Mechanical devices (e.g., robotic arms) get better and cheaper. The program uses this technology to collect patient data.

- Through thousands of interactions, the program masters the ‘human’ art of connecting with patients.

If you just heard the first example you might think, ‘How could that ever happen?’. The second example walks you through the transformation. It makes it seem possible.

These are other example formats of what I mean:

- “AI currently does X, what if it could do X AND Y?”

- “We currently use humans to do X, what if Y meant that AI could do X?”

- “What if we could do X, and that led us to do Y, which then resulted in Z [AI-relevant outcome]?”

AI can be technical but it’s not clear how much of that you need to know: AKA "But I don’t program"

AI has strong associations with technical skills like coding. For someone who doesn’t have those skills, that can be a quick turn-off. Algorithms, machine learning, and coding can all seem like a black box if you don’t know what’s involved.

Why is this important?

Consciously or not, I imagine a lot of people use something like, "Do I have a computer science degree?" to filter their eligibility for AI safety work. If that’s filtering out a lot of potentially great candidates, that’s a problem.

What could help?

People encouraging others to focus on this area (e.g., those delivering introductory presentations / courses) should be clear about a) what technical skills are essential (or just useful) for which kinds of AI safety work and b) where a conceptual understanding can suffice (e.g., "I get what stochastic gradient descent is but couldn’t actually do it myself").

I imagine this varies wildly by what someone wants to do. For example, do you want to create regulations, develop technology, survey public attitudes, or build the community? What I would find helpful is a list of potential career pathways in the AI safety space, categorised by the level of technical skills you’ll need (or not) to pursue them.

There’s a lot of jargon and it’s not always well explained: AKA "Can you explain that again…but like I’m 5"

Because the field of AI is so complex, it’s crucial that explanations are as clear as possible.

When reading up on AI safety, I had many questions. Perhaps the most common was, unfortunately, "Am I just dumb, or is this poorly explained?". Some of the texts outlining key ideas are long and academic, the analogies that do exist are limited and repeated (cue, inner alignment is like evolution), and plain-language summaries are growing but still few.

Why is this important?

If the content isn’t accessible, people are either going to a) waste a bunch of time consuming the same content over and over or b) give up because it seems too hard. This seems especially important if you’re trying to attract new people to the field.

What could help?

I’d love to see simple explainers available for all key AI safety concepts and ideas. This could include plain-language summaries (e.g., "Explain like I’m 5" summaries), multiple analogies and examples for key concepts, and media other than text (e.g., videos, infographics).

Where summaries aren’t appropriate or are out of scope, it’s even more important to communicate clearly and avoid unnecessary jargon[4]. Which of these, for example, would you rather read (as someone new to the area):

- “One approach to AI alignment is through the implementation of an explicit value alignment mechanism, which incentivizes an AI system to behave in a manner consistent with human values. This can involve techniques such as value learning, in which an AI system is trained to infer human values from examples, or value specification, which involves explicitly defining the values an AI system should adhere to.”

- “We want AI to do what we intend it to do, so we need to be really clear about what we want. We can do this by providing clear instructions / rules about what is right and wrong, or providing many examples for AI to learn from.”

Simple summaries may sacrifice nuance but can provide the scaffolding people need to get started.

Conclusion and key recommendations

The role that AI could play in the world is already hard to grasp. Add in the complexity of what AI actually is (the technical stuff), sneak in some jargon, and you’ve got a party that few want to come to.

If someone were creating a presentation or course on AI safety just for me, this is what I’d (ideally) ask for:

- Give me some detailed examples of what it could look like to live in a world with advanced AI.

- Clarify what AI can do now, and step me through the transition to a world where AI is doing more extreme things.

- Detail where technical skills are essential, useful, or not needed. My ideal is to know if and where I can be useful with my current skill set (and where I need to upskill).

- Explain ideas as simply as you can in the first instance. Once I'm on board, we can dive into more detail.

- Integrate many analogies and examples throughout to convey key concepts.

And for making it this far (or skipping ahead), you’ve earned the nice visual summary:

Acknowledgements

Thank you to Peter Slattery, Michael Noetel, and Alexander Saeri for their helpful feedback.

[1] ‘Doing work in the AI safety space’ is intentionally vague. For me, this mainly means pursuing research projects related to AI safety. More broadly, it could mean spending any significant amount of time trying to learn about, or contribute to, the area.

[2] Obviously, there can be cons to creating more detailed examples (especially if they're taken too literally). I see their purpose as providing an entry point, not a prediction.

[3] Alexander Saeri’s video, ‘AI tools for social and behavioural science’, provides a great example of outlining what AI can do now.

[4] If you're looking for recommendations: I found the book, 'The Writer's Diet', especially useful for improving my writing.

For me, the main blockers were:

Thanks for sharing, Yonatan. It's always interesting to hear which barriers are the most salient for different people! I imagine those ones are pretty pervasive, especially with regard to AI safety (I can definitely empathise).

My approach to addressing "AI can be technical but it’s not clear how much of that you need to know: AKA ‘But I don’t program’" is running monthly Alignment Ecosystem Development Opportunity Calls, where I and others can quickly pitch lots of different technical and non-technical projects to improve the alignment ecosystem.

And for "There’s a lot of jargon and it’s not always well explained: AKA ‘Can you explain that again…but like I’m 5’", aisafety.info is trying to solve this, along with upping Rob Miles's output by bringing on board research volunteers.

I really relate to the 'what would it even look like?' thing.

I also think that coming up with examples and stories like this isn't just important for motivating people - it's also important for epistemics. If you stay too long at the abstract level, I think you can miss things - often flaws or confusions will arise when you try to actually give a very concrete story about what might or will happen.

Thanks for writing this! I had that "eugh" feeling up until not that long ago, and it's nice to know other people have felt the same way.

I'm particularly enthusiastic about more educational materials being created. The AGISF curriculum is good, and would have been very helpful to me if I'd encountered it at the right time. I'd be delighted to see more in that vein.

I agree, Jenny. I think educational materials, especially those that collate and then walk you through a range of concepts (like AGISF), are really useful.

I think the main issue with example 1 is that it lacks detail. I think a solution is to be as concrete and specific as possible when describing possible futures, and note when you're uncertain.

I'm not sure if this is currently possible to make, because there are very few established career paths in AI safety (e.g. "people have been doing jobs involving X for the past 10 years and here's the trajectory they usually follow"), especially outside of technical research and engineering careers. I did make a small list of roles at maisi.club/help; but again, it's hard to find clear examples of what these career paths actually look like.

Thanks for sharing, I definitely have the same feeling. Especially on the "but I can't code" bit. I'm gonna read through that Blue Dot course curriculum, it seems like a good step for people in this stage of thinking.

If you want to become a professional software developer (I'm not sure if that's what you're aiming at or not), I have thoughts on how to do it effectively, here.

Yeah, I recommend checking out that curriculum. I also found it really useful to discuss the content with others (which could be through signing up to the actual AGISF course or finding a reading buddy, etc.).