Note: I recently published this guide on OSF - check out that link for the PDF version.

About this guide

As a research consultant, I’ve led several prioritisation projects[1]. These projects typically involve helping organisations determine what they should focus on or recommend. They have varied in intensity and consequence (two collectively influenced approximately AUD $50 million in research funding).

This guide outlines the systematic process I follow to do this, integrating a recently published paper I led as a case study. The process I outline draws heavily from Multi-Criteria Decision Analysis, prioritisation literature (e.g., Rudan et al., 2008), and insights from experts in the field[2].

Who should read this guide?

People who want to make an optimal choice regarding a specific goal, based on a large set of options, with consideration of several criteria. The approach I outline may be particularly useful for those seeking to incorporate input from multiple people or groups.

Examples of those who may find it useful include:

- Organisations aiming to identify next year’s focus areas by evaluating options against criteria like importance, alignment with organisational mission, and potential for funding.

- Grant-makers with the goal of allocating funds effectively, assessing research proposals using criteria such as impact, feasibility, and alignment with funding objectives.

- University students seeking to determine how to spend summer break, choosing from a variety of activities while considering criteria like enjoyment, personal growth, and cost.

Summary of the process

Following a systematic approach to determine priorities can increase impact and transparency.

I consider there to be three main phases in prioritisation:

- Define your goals, criteria, and what you intend to prioritise: clarify the what, why, and how of this process.

- Build a long list of unique options[3]: find out what all the options are, before figuring out which are the best.

- Prioritise the long list of unique options using criteria: apply predefined criteria to the long list of options to see which perform best.

Why conduct a systematic prioritisation process?

There are two parts to this question: 1) why prioritise and 2) why use a systematic process to do it.

Why prioritise things? We have limited resources (e.g., time, energy, money), and there are many endeavours that we could spend these resources on. Some of these endeavours may be better than others (e.g., they might produce more impact or make us happier). To maximise use of our scarce resources, we need to choose and pursue what’s most important.

Why use a systematic process to prioritise things? There are many ways to determine which of several options is a priority. We could rely on chance and draw numbers out of a hat. We could rely on convenience and do what’s easiest. We could rely on defaults and pick what’s next on the to-do list. We could rely on our desires and pursue what’s most enjoyable. So why follow a systematic process?

- It can lead to a better outcome. When we’re trying to prioritise something, there are often many possible options, considerations, stakeholders, and information sources. If we don’t think these through, we’ll likely miss important information. Following a systematic process increases the likelihood that all relevant options and factors are considered, helping us make well-informed decisions.

- It can increase respect for, and commitment to, your selected option. Using a systematic process can make your decision-making steps clearer and more transparent to others. This can foster understanding of, respect for, and commitment to, the outcome you reach.

How to conduct a systematic prioritisation process

Below I outline the three main phases of a prioritisation process and the steps involved, integrating a case study to help demonstrate how this could look[4].

The case study: prioritising funding calls for cardiovascular disease and diabetes

Throughout this article, I will use a project I collaborated on as a case study to illustrate the systematic prioritisation process. I recently published a peer-reviewed article describing the process and results of that project. Examples from the case study will be presented in blue boxes throughout. I will also integrate other examples (either hypothetical or from other projects) in text.

| Case study context One-line summary: This project aimed to determine the highest priorities regarding cardiovascular disease and diabetes to guide the allocation of research funding. Detailed summary: In 2020, the Australian Government allocated AUD 47 million to fund cardiovascular disease and diabetes research through the Medical Research Future Fund (MRFF). MTPConnect was responsible for designing and delivering this major MRFF program of funding. To maximise the program impact, MTPConnect embedded a systematic prioritisation process. The case study in this guide was conducted to inform research funding rounds conducted by MTPConnect as part of its program delivery. The goal of this project was to determine the highest health and medical priorities regarding cardiovascular disease and diabetes. The options being considered were unmet needs* suggested by experts and community representatives. The project utilised six explicit criteria (e.g., clinical impact, quality of life) to assess and prioritise options. Results informed three research funding rounds. The project was led by BehaviourWorks Australia (where I worked) in collaboration with Australian National University, Research Australia, MTPConnect, and an independent expert advisory board for the specific MRFF program. *‘Unmet needs’ are medical or health-related issues not adequately addressed by current interventions. |

Phase 1: Define your goals, criteria, and what you intend to prioritise

As with any research project, you need to decide what you’re going to do before you go do it. By the end of this phase, you want to understand the:

- Goal of the project;

- Scope of the project;

- Unit of prioritisation (i.e., what you’re going to prioritise); and

- Prioritisation criteria

What is the goal of the project?

Any prioritisation process takes time, resources, and energy – establish why you’re doing this before you get started. Your goal shouldn’t just be, ‘to know what’s the highest priority’: make it more concrete.

Prompting questions to articulate the goal of your prioritisation process.

| Prompting question | Example |

|---|---|

| What problem or uncertainty could this process help you solve? | “We are trying to decide X, but are unsure about Y.” |

| What specific outcome do you want? | “We want to know what the top five funding calls we should put out to address X problem.” “We want to determine what five interventions we could test in the next stage.” |

| What will happen as a result of that outcome? | “The result will influence X.” “X people will use this outcome to make Y decision.” |

| Case study What problem or uncertainty did this process help solve? MTPConnect was distributing funding and wanted to understand what funding calls would be most valuable. What specific outcome did we want? The systematic prioritisation process aimed to identify the top three priorities for each of the three focus areas: (1) Complications associated with cardiovascular disease; (2) Complications associated with diabetes; and (3) Interactions in the pathogenesis of Type 1 diabetes, Type 2 diabetes, and cardiovascular disease. What happened as a result of that outcome? The top three priorities for each area informed the funding calls that MTPConnect put out. |

What is the scope of the project?

Determine the scope of the project before you start so you can allocate resources accordingly, involve the right people, and not get distracted by things that aren’t in focus.

Prompting questions to articulate the scope of your prioritisation process.

| Prompting question | Example |

|---|---|

| How much time do you have to complete the project? Do you need to make a decision, based on the outcome, by a certain date? If there’s no time pressure, it can still be useful to set an intended completion date. | “We need to know what our priority focus areas are by the end of the year.” “X person has two months to help us on this project, so it needs to be completed by then.” |

| What resourcing do you have to complete the project? This can determine the time frame of the project and level of rigour. | “I can spend three days on this.” “We can only allocate $X to this project, so it will need to be conducted in Y days.” |

| Who will be involved? Consider who is in the project team (e.g., those who set the parameters, organise the project, and do the analysis)[5], who is the user (e.g., the specific people or organisation that need the outcome), and who you want to consult (e.g., advisors or those who you want to help determine the priorities). | “Myself and two others are running this project. We will get advice from X people who have done something similar before. The main person using the outcome to make a decision is Y, so we need to involve them when determining the parameters. We want Z experts to inform the prioritisation process (e.g., assess options against criteria).” |

| What is out of scope? It can be helpful to articulate exactly what is not in scope. | “We are prioritising interventions we could test to address this specific problem, but we are not prioritising problems more broadly – that’s already set.” “We are not assessing which options are feasible to implement at this stage, only which are most impactful.” |

| Case study What were the time and resourcing constraints? The project was conducted over a six-month period and resourcing (e.g., number of days allocated to the project) was confirmed prior to starting. Who was involved? The research team comprised BehaviourWorks Australia, Australian National University, and Research Australia. The client was MTPConnect, who also provided project support. An independent expert advisory board for the MRFF program of funding helped set project parameters and signed off on all major decisions. The participants who contributed to building the long list (Phase 2) then prioritising options (Phase 3) included people with expertise in, or lived experience with, cardiovascular disease and/or diabetes (e.g., clinicians, researchers, people with the disease(s), carers). What was out of scope? This project was limited to Australia, and did not prioritise interventions or populations – only unmet needs. |

What is the unit of prioritisation?

You need to articulate exactly what you will prioritise. The goal is that all options you are prioritising have the same format and level of specificity. This helps ensure: a) you are collecting all the relevant information when building the long list, and b) options in the long list are comparable.

Prompting questions to articulate the unit of prioritisation.

| Prompting question | Example |

|---|---|

| What are you prioritising? Are you trying to prioritise behaviours, problems, interventions, populations, locations, projects, or something else? | “We’re prioritising health-related behaviours (e.g., exercise, dietary habits) that we want to target in a campaign.” “We’re prioritising interventions (e.g., educational programs, community outreach) to address X issue.” “We’re prioritising groups most in need of an X service.” |

| How many unique components are you prioritising? Do you just want to prioritise problems or the combination of problems and populations? Note that the more components you include, the more options you will have to prioritise[6]. | “Each option should specify both a problem and a population.” |

| What will these options look like in statement form? Considering the above, how will the options be formatted? This is particularly important when you’re trying to deduplicate the long list (i.e., make sure each option is unique), and present the options for people to assess. To determine this, list concrete examples of what the final outcome could look like. If you’re working with a client/team and they can’t think of examples, present some alternatives and get their reactions or endorsement. | “We want to know the top three research questions we should fund regarding X. What are examples of what one of those research questions could look like?” “What if these were the top priorities that we ended up with? Would we be satisfied with this format/result?” “We want options in the format of ‘[Problem] experienced by [population]’.” |

| Case study What were we prioritising? Unmet needs in (1) Cardiovascular disease; (2) Diabetes; and (3) Interactions in the pathogenesis of Type 1 diabetes, Type 2 diabetes, and cardiovascular disease. How many unique components were we prioritising? We were only prioritising one component – unmet needs. However, we collected information on other components (e.g., the impact of those unmet needs, potential interventions to address them) to provide additional context in reports / briefings. What did these options look like in statement form? Where possible, unmet needs in the finalised long list were presented at two levels: ‘Broader body part / system’, followed by ‘Specific issue / complication’. For example, ‘Cardiac, Cardiac hypertrophy’. |

What are the prioritisation criteria?

In this process, you use explicit, pre-defined criteria to prioritise the options. This ensures that a) the priorities are based on what is most important for the user/outcome, and b) every option is assessed against the same criteria. As the criteria determine the priorities, they’re important to get right.

What do good criteria look like?

They consider the unit of prioritisation. E.g., if you’re prioritising problems, you might want a criterion on the impact / size of that problem. If you’re prioritising potential interventions, you might want a criterion on how feasible it is to implement that intervention.

They have minimal overlap. If you have multiple criteria assessing similar concepts (e.g., Criterion 1 = ‘Impact’ and Criterion 2 = ‘Importance’), that concept will have a greater sway over the prioritisation results. It’s better to minimise the overlap, and instead use explicit weights if some criteria are more important (see ‘Weighting criteria’).

They reflect your values, and what’s most important for the outcome. E.g., if it’s important for you to identify top-priority research questions that are not being addressed by others, this suggests you should incorporate neglectedness as a criterion.

There are probably 2-5 criteria. The more criteria you have, the longer and harder the assessment aspect will be. E.g., participants will struggle if they have to assess each of 30 options against 10 criteria (300 separate ratings).

They are well defined. If people can interpret the criteria in different ways, you may not get consistent or useful ratings. E.g., Some people may interpret ‘Health impacts’ as referring to physical health, while others may think of mental health. Make sure you have a clear definition that reduces the interpretation required.

They can be assessed. It should be feasible to assess the options against the criteria. Something vague and hard to assess, like ‘Impact on global economy’, probably won’t work if you’re conducting this process in a short period of time. If participants are assessing the options against criteria, consider:

- Will they be able to understand these criteria?

- Do they have the experience or expertise needed to assess these options against criteria?

- What would I struggle with if I was trying to assess options against these criteria?

What process could you follow to determine the criteria?

Ideally, work closely with the project team, advisors, and users to determine the criteria. You could do a mini prioritisation process, where you generate a list of potential criteria, then work with the team to select the top ones.

Generating potential criteria

Prompting questions to generate potential criteria.

| Prompting question | Example |

|---|---|

| Are there any criteria you must include? | “The board requires us to include X and Y criteria.” |

| Do you have any initial ideas? | “We will use criteria to prioritise the options, do we have initial thoughts about what we could use?” “Something about feasibility is probably important.” |

| Imagine you’re at the end of the project – what do you want to be able to say/know about the final priorities? Work backwards from the outcome you want. | “We want to say that we know these are the most impactful and feasible problems to work on.” |

| What criteria have been used in previous projects? Try to find people or organisations that have gone through a similar process, and look at what they did for inspiration. | “X organisation was working on a similar project, and they used Y and Z criteria.” |

| What criteria do stakeholders think should be included? This is especially important if you want the result to be accepted/endorsed by particular people or organisations. | “X grantmakers really care about Y criteria, so we should consider including that.” |

Finalising criteria

After generating potential criteria, you need to decide which ones you’ll actually use. To do this, you could:

- If you’re working with a team or client, present the criteria you recommend (and why), and get their reactions.

- Flag criteria that you don’t think are feasible to assess (e.g., “This will be very hard for participants to assess in a survey”).

- Vote for the criteria. A couple of options include:

  - Typical voting: each person in the team could vote for five criteria, and the top ones could be used.

  - Quadratic voting: people vote on their preferred criteria, and can use up more of their votes to express stronger preferences (this method may take a little longer to implement/explain).

- Pilot the criteria with yourself, the team, colleagues, the client, or your target audience. Try assessing made-up options against those criteria.

- Remove criteria that are difficult to understand or assess (and can’t be improved).

- Assess whether some criteria could be used as a filter (e.g., to determine what options are included in the long list), rather than to assess options. You can think of these as exclusion/inclusion criteria, rather than prioritisation criteria.

  - E.g., ‘Relevance to behaviour change’ may be suggested as a criterion. If you only want to include options that are relevant to behaviour change in the long list, this could be a filter for what’s included.
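
For the quadratic voting option above, a rough sketch of how a tally could work is shown below. It assumes the usual quadratic voting rule (casting n votes for one criterion costs n² credits). The budget of 25 credits per person, the criterion names, and the ballots are all hypothetical.

```python
from collections import Counter

def tally_quadratic_votes(ballots, credits_per_voter=25):
    """Sum votes per criterion, rejecting any ballot whose quadratic cost exceeds the budget."""
    totals = Counter()
    for voter, votes in ballots.items():
        # Casting n votes for a single criterion costs n squared credits.
        cost = sum(n ** 2 for n in votes.values())
        if cost > credits_per_voter:
            raise ValueError(f"{voter} spent {cost} credits; budget is {credits_per_voter}")
        totals.update(votes)
    return totals

# Hypothetical ballots: criterion -> number of votes cast by that person.
ballots = {
    "voter_a": {"Impact": 4, "Feasibility": 3},                    # 16 + 9 = 25 credits
    "voter_b": {"Impact": 2, "Neglectedness": 4, "Cost": 2},       # 4 + 16 + 4 = 24 credits
    "voter_c": {"Feasibility": 3, "Neglectedness": 3, "Cost": 2},  # 9 + 9 + 4 = 22 credits
}

totals = tally_quadratic_votes(ballots)
for criterion, votes in totals.most_common():
    print(criterion, votes)
```

Because each extra vote for the same criterion costs more than the last, people can signal a strong preference but have to give up breadth to do so.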

| Case study What were the criteria? There were six criteria: (1) Clinical impact; (2) Quality of life; (3) Consumer expectations; (4) Diversity/regional, rural, remote impacts; (5) Commercial potential; and (6) Productivity outcomes. How were the criteria determined? The advisory board determined the criteria, with support from MTPConnect. Determining criteria was outside of BehaviourWorks’ role in the project. |

Weighting criteria

Once you’ve finalised the criteria, decide whether some are more important than others.

Pairwise comparison can help you determine what, if any, weights should be used. Compare two criteria at a time (either by yourself or with the team/client) and ask whether a) they are of equal importance b) one is slightly more important or c) one is much more important. You then use the responses to determine the weights.

- If you google, ‘Pairwise comparison to determine criteria weights’ you can find more comprehensive instructions (e.g., this site or this paper that uses the method).
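
As a minimal sketch of how pairwise responses could be turned into weights: the point scale below (1:1 for equal, 2:1 for slightly more important, 3:1 for much more important) is an illustrative assumption rather than a standard, and the criteria and judgements are hypothetical. More formal methods exist and may suit higher-stakes projects better.

```python
from itertools import combinations

# Points given to (more important, less important) criterion for each judgement.
# This scale is an illustrative assumption, not an established standard.
POINTS = {"equal": (1, 1), "slightly": (2, 1), "much": (3, 1)}

def weights_from_pairwise(criteria, judgements):
    """judgements maps (a, b) -> (strength, winner); winner is None when the pair is equal."""
    points = {c: 0 for c in criteria}
    for a, b in combinations(criteria, 2):
        strength, winner = judgements[(a, b)]
        high, low = POINTS[strength]
        if winner is None:
            points[a] += high
            points[b] += low
        else:
            loser = b if winner == a else a
            points[winner] += high
            points[loser] += low
    total = sum(points.values())
    # Normalise so the weights sum to 1.
    return {c: points[c] / total for c in criteria}

# Hypothetical criteria and judgements.
criteria = ["Impact", "Feasibility", "Neglectedness"]
judgements = {
    ("Impact", "Feasibility"): ("slightly", "Impact"),
    ("Impact", "Neglectedness"): ("much", "Impact"),
    ("Feasibility", "Neglectedness"): ("equal", None),
}

weights = weights_from_pairwise(criteria, judgements)
for name, weight in weights.items():
    print(f"{name}: {weight:.2f}")
```

In this made-up example, Impact collects 5 of the 9 points awarded, so it ends up with a weight of about 0.56, while the other two criteria get about 0.22 each.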

| Case study Were any criteria weighted differently? The advisory board decided that Clinical impact and Quality of life should be weighted at double the other criteria, given the program objectives. Determining weighting was outside of BehaviourWorks’ role in the project. |
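
To illustrate what ‘double weight’ could mean in practice, the sketch below normalises the case study’s weights and applies them as a weighted sum. Both the weighted-sum approach and the 1-5 ratings are assumptions made for illustration; they are not taken from the case study.

```python
# Raw weights from the case study: two criteria count double.
RAW_WEIGHTS = {
    "Clinical impact": 2,
    "Quality of life": 2,
    "Consumer expectations": 1,
    "Diversity/regional, rural, remote impacts": 1,
    "Commercial potential": 1,
    "Productivity outcomes": 1,
}

# Normalise so the weights sum to 1 (0.25 for the doubled criteria, 0.125 for the rest).
_total = sum(RAW_WEIGHTS.values())
WEIGHTS = {name: raw / _total for name, raw in RAW_WEIGHTS.items()}

def weighted_score(ratings):
    """Weighted sum of one option's ratings (an assumed aggregation method)."""
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)

# Hypothetical ratings for a single option on a 1-5 scale.
hypothetical_ratings = {
    "Clinical impact": 5,
    "Quality of life": 4,
    "Consumer expectations": 3,
    "Diversity/regional, rural, remote impacts": 3,
    "Commercial potential": 2,
    "Productivity outcomes": 3,
}

score = weighted_score(hypothetical_ratings)
print(round(score, 3))
```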

Phase 2: Build a long list of unique options

Create a thorough list of options to avoid overlooking any possibilities. This is the ‘long list’.

Building the long list of options

The two main methods I’ve used to build long lists are 1) getting people to contribute options (e.g., through a survey) and 2) extracting options from relevant documents or literature. Your approach might involve one or both of these methods.

Getting people to contribute options through a survey

There are many ways you could get people to contribute options, from casual brainstorming sessions to more formal surveys or seminars. Your approach depends on resourcing, the desired level of formality, and your target participants. You may only want people within the team/organisation to generate options, or a broad range of experts. I’ve typically used surveys to collect options from people, so I’ll elaborate on that method below.

A survey might be a good option if:

- You want to reach a large number of people, beyond what’s feasible through individual meetings;

- The topic hasn’t been covered much in relevant documents or literature;

- You want to reduce the work required to code options in the desired format later (this can be minimised by requesting responses in a specific format in the survey);

- Participants prefer to remain anonymous for any reason.

Developing the survey

The purpose of the survey is to get participants to contribute options to your long list. At a high level, you do this by including a survey item that elicits options in the desired format. These are some key considerations when developing the survey:

Determine who you want to complete the survey. The ideal is to include all relevant groups when building the long list. Consider how you’ll reach participants with different backgrounds (e.g., who work in different areas, are located in different countries, or have different life experiences).

Develop the main survey item based on the unit of prioritisation. If your unit of prioritisation – the type of thing that you’re prioritising – is problems, then the main survey item should be framed to gather problems. You also need to structure questions to elicit responses in the desired format. For example, if you desire outputs in the format, ‘[Population] experiences [problem]’, then instruct participants to provide responses like this (e.g., “Please suggest options in this format…Specify both X and Y”). Providing a specific format can help clarify what’s required from participants, and reduce later coding efforts.
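
If responses come back as free text, a simple check can flag which ones follow the requested format and which need manual coding. The sketch below assumes the ‘[Population] experiences [problem]’ template from the example above; the pattern, function name, and sample responses are hypothetical.

```python
import re

# Matches "<population> experience(s) <problem>", with an optional trailing full stop.
PATTERN = re.compile(
    r"^(?P<population>.+?)\s+experiences?\s+(?P<problem>.+?)\.?$",
    re.IGNORECASE,
)

def parse_response(text):
    """Return the population and problem if the response follows the template, else None."""
    match = PATTERN.match(text.strip())
    if match is None:
        return None
    return {"population": match["population"], "problem": match["problem"]}

# Hypothetical survey responses.
responses = [
    "Older adults in rural areas experience social isolation.",
    "More funding please",
]

for response in responses:
    parsed = parse_response(response)
    status = parsed if parsed is not None else "needs manual coding"
    print(f"{response!r} -> {status}")
```

A check like this only catches the surface format. Responses that match the template can still be out of scope or too vague, so they need to be read as well.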

Keep in mind that it’s easier to filter out information than gather more. If you’re uncertain if you want to prioritise multiple things (e.g., populations AND problems), consider asking for more detail, rather than less. You may end up deciding to only prioritise problems, but the extra detail can be included in future briefings or reports.

Consider what participants need to know to ensure their responses are in scope. If you’re only interested in interventions that can be completed in less than a year, or in specific countries, then provide participants with this context. E.g., “We are trying to prioritise interventions that can be actioned in a two-month period”. Note that participants may struggle to judge whether something meets your requirements (e.g., they might not know which interventions can be actioned in two months). Encourage them to provide their suggestions anyway, even if unsure about relevance.

Suggest a minimum number of options you want participants to contribute. This can encourage participants to contribute more options. Note that if you impose a maximum limit, this could make the long list less comprehensive[7].

Consider what information you need to know about participants, such as their work area, location, or professional experience. This can help gauge your sample’s representativeness. Avoid unnecessary questions that may lengthen the survey, feel intrusive, or compromise anonymity.

Build momentum with participants, if useful. Determine what connection you want to have with participants or how you want them to feel (e.g., excited about potential collaborations or being involved in the next stage of the process). Consider how you can build interest (e.g., provide detail in the survey about what’s next and how they can be involved).

Pilot the survey. Check the survey makes sense to people who aren’t in the project team, and that it seems likely you’ll get responses in the desired format.

| Case study How was the long list built? We conducted a national survey of experts and community representatives. We chose this method to maximise national reach and ensure that each respondent could respond anonymously. Who completed the survey? The target audience of the survey was (1) adults with expertise in cardiovascular disease and/or diabetes, including people who specialised in treatment, research, or management; and (2) adults who lived with, or cared for, people with cardiovascular disease and/or diabetes (referred to as community representatives). What was the main survey item? We used different wording for experts and for community representatives to maximise accessibility. We asked experts to provide unmet needs in the format of, “[Intervention] to address [Complication] resulting in [Benefit]”. We asked community representatives to provide unmet needs in the format, “As a result of diabetes, [I OR those I know with diabetes] have [Complication]. This results in [Impact]”. Participants were asked to provide between two and ten options. What participant information did we collect? We collected information on participants’ experience working with, having, or knowing someone with cardiovascular disease and/or diabetes. We also collected basic demographic information on age, gender (optional), and location. You can see the full survey here. |

Collecting responses

Once you’ve developed your survey, it’s time to collect responses. Here are a couple of options:

Email the survey link. This is low effort, though it can be difficult to get people to respond to surveys via emails – consider implementing the strategies below.

Strategies for boosting email survey response rates.

| Strategy | Explanation |

|---|---|

| Personalise the email: include why you’re emailing them in particular. | For example, reference the expertise that they have or the similar projects they have worked on. |

| Explain why this project is important. | Convey that their input is valuable by articulating the importance of the project. E.g., “This project will determine how we allocate $X million” or “This project will inform our focus for the next year”. |

| Make the ask very clear. | Be clear about what you want from them (e.g., “to complete a X-minute survey about Y”). Indicate how long the survey is. Put the ask in bold or a different font so they can immediately see what you want from them in the email. |

| Make the email short and succinct. | Make it clear what you’re doing, what you’re asking for, and why you’re asking them. If you want, you can link to another resource that has more detail (e.g., a plain-language summary of the project). |

| Offer to meet with them to answer any questions. | This shows investment in their involvement and indicates that they’re not just one among thousands. From my experience, relatively few people will ever actually ask to meet. |

| Let them know whether they can share the survey. | Leverage participant networks (e.g., colleagues) to expand the survey's reach and increase sample size. Note that this approach reduces control over the survey audience. If you only want particular people to contribute, this may not be the best approach. Alternatively, you could ask participants to suggest people that you could then send the survey to – after you assess their fit. |

| Provide a survey close date. | Even if you’re not sure when you’ll close the survey, providing a date can add a sense of urgency and motivate people to complete it. |

| Send reminders. | Send 1-2 reminders to complete the survey, perhaps a week after you send it out or just before it closes. |

| Link to an explainer video. | If you’re worried that there may be some confusion for participants (e.g., in what you’re doing or asking for), record a 60-second video explainer. Link to it in the email, emphasising that watching it is optional (if you think it is). |

Organise an event where people can complete the survey. Some participants may be more motivated to go to an event (e.g., a seminar or talk) than click on an email link. This is particularly the case if participants see a personal benefit to their attendance (e.g., they want to hear what you’re working on or connect with others). An event is also a good option if you want to build credibility, connections[8], or participant investment in the project (e.g., because you want them involved in future stages). Below are suggestions for what the event (which could be online) could cover.

Agenda items for a survey event

| Agenda item | Explanation |

|---|---|

| An overview of the project. | Explain what you are aiming to do, why, and how. Emphasise why you need the help of the participants. |

| An explanation of the survey. | The main thing to explain is the item(s) that ask participants to contribute options. Reiterate the format that you want responses in, and convey what is in or out of scope. |

| Question time. | Resolve uncertainty or confusion that participants have, either about the project or survey. |

| Time for participants to complete the survey. | Allocate a set amount of time for participants to complete the survey. Make it clear whether participants should leave once they submit the survey, or stick around if there’s important information to hear at the end (e.g., what’s next in the project). More questions may arise when participants start completing the survey. Consider how you want these to be handled (e.g., should people voice the questions or put them in a chat). |

| Outline what’s next for the project. | It’s good etiquette to let participants know what you’ll do next with their responses. This is also an opportunity to indicate whether you’d like their involvement at a later stage. Be clear about how that will happen (e.g., you’ll reach out to them again). |

Note that you could combine both of the above approaches: email the survey to people and also invite them to an (optional) seminar where they can hear more about the project, ask questions, and complete the survey.

Engage a third party to handle recruitment. Depending on resourcing and your target audience, it may be useful to have a third party handle recruitment. For example, there are companies that specialise in recruiting participants from the general population (e.g., Online Research Unit in Australia) or experts in particular domains (e.g., Research Australia).

| Case study How did we collect responses? MTPConnect and Research Australia distributed the survey to approximately 500 individuals and organisations. The distribution list included Australian health and medical researchers, clinicians, health professionals, community networks, policymakers in state and national health departments, and national and international private organisations with interests in cardiovascular disease and/or diabetes. The team sent a follow-up reminder a week after the initial survey invitation and held the survey open for two weeks. |

Extracting options from relevant documents or literature

Instead of, or in addition to, a survey, you might extract options from relevant documents or literature.

In a more formal, academic process, this could involve a systematic search strategy and a rapid review. You might screen articles and extract the relevant information from those included. For example, “X paper discusses how Y can be used to help this group experiencing Z, so let’s add that intervention to the long list”. I use a spreadsheet to extract relevant information such as author, article, prioritisation units, relevant text extract, and page number. A single article could contain multiple options, especially if it’s a review paper.
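
For those who prefer a script to a spreadsheet, the extraction fields listed above could be represented roughly as follows. The class name, field names, and example rows are placeholders I have made up for illustration, not real articles.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class ExtractedOption:
    """One option extracted from one document; a single article can yield several rows."""
    author: str
    article: str
    prioritisation_unit: str
    text_extract: str
    page_number: int

def to_csv(rows):
    """Serialise extracted options to CSV text so they can be opened in a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExtractedOption)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()

# Placeholder rows: two options taken from the same (made-up) review paper.
rows = [
    ExtractedOption("Author A", "Review paper X", "Intervention Y", "Y can help group Z", 4),
    ExtractedOption("Author A", "Review paper X", "Intervention W", "W was trialled with group Z", 7),
]

csv_text = to_csv(rows)
print(csv_text)
```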

In more informal settings, your search might be less systematic or focus on grey literature. For example, if you’re deciding what project to pursue next, you might extract ideas from several blog posts where individuals or organisations list projects they’d like to see happen.

The documents you find may also provide a framework you can use to start coding your options. For example, you might see that interventions in the literature are typically grouped under ten categories. You could start with those categories, then update the framework based on the other intervention options you gather.

Finalising the long list of options

Once you’ve created your long list of options, you need to process them to ensure they are consistent in format and are each a unique option (i.e., there are no duplicates). This involves:

- Coding them in the same format (e.g., so they contain similar information and are at the same level of specificity). If you don’t do this, you may be comparing things that aren’t comparable.

- Removing any duplicates. It’s likely there will be multiple contributions of the same options when building the long list. Duplicates can distort prioritisation outcomes by splitting votes. This can result in an option seeming like less of a priority than it actually is.

To demonstrate the importance of the above steps, consider these options that could have been generated in a survey:

- Interventions that target blood-borne diseases.

- Provide families with bed nets to prevent malaria in sub-Saharan Africa.

While addressing the same topic, these options are in very different formats. Option 1 is fairly vague and contains Option 2. Option 2 names a particular intervention, population, and location, while Option 1 does not. Including both of these options in the prioritisation phase may be confusing and result in unhelpful outcomes (e.g., Option 1 may be too general to be useful to you, or Option 2 may be too specific).

Coding the long list can be the most difficult part of the whole process. It’s also hard to provide specific detail on how to do this without knowing the context (e.g., what you’re prioritising, how formal the process is, or how many options your long list contains).

Considerations during coding

Determine the level of detail you want to capture. This is important for the outcome (e.g., what level of detail is too much or too little) and the process (e.g., how many options would be too many to prioritise). Ask yourself, would it be useful if I found out this option was the highest priority?

- Note that you could code options into multiple levels. For example, interventions may be coded into three levels ranging from broad (e.g., does it target the individual, environment, or systems?) to specific (e.g., what is the actual intervention?). This can speed up coding (e.g., you can focus on one category at a time), and may be useful if you’re not sure which level the prioritisation will occur at.

Consider whether the options are independent. Does it matter if some of your options contain other options (like the blood-borne diseases example above)?

Make the presentation format consistent. To make things simpler and clearer during the prioritising stage, place options in similar formats. E.g., ‘[Intervention] that addresses [population]’. Hopefully, if you’ve used something like a survey, responses should be vaguely in this format already.

The coding process

You may start off with an initial framework (e.g., one that has been used previously). This framework will develop alongside coding (e.g., there may be options that don’t fit into the framework, so you adjust it).

You may have two people coding the same statements for higher rigour, or one person doing a first pass with another person reviewing. If you’re working in a team, it can be useful to check in with others after you’ve coded an initial set, to confirm you’re on the same page.
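If two people code the same statements, one simple way to check you’re on the same page is to list where their codes differ. The sketch below assumes each coder’s work is stored as a mapping from a response ID to a code. The IDs and the ‘Kidney’ and ‘Vascular’ codes are made up for illustration, and raw percentage agreement is just one possible check, not a prescribed measure.

```python
# Codes assigned by each coder, keyed by a (hypothetical) response ID.
coder_a = {"R1": "Cardiac", "R2": "Eye", "R3": "Kidney", "R4": "Cardiac"}
coder_b = {"R1": "Cardiac", "R2": "Eye", "R3": "Vascular", "R4": "Cardiac"}


def compare_coding(a, b):
    """Return the share of matching codes and the responses that need discussion."""
    shared = sorted(a.keys() & b.keys())  # only compare responses both people coded
    disagreements = {rid: (a[rid], b[rid]) for rid in shared if a[rid] != b[rid]}
    agreement = 1 - len(disagreements) / len(shared) if shared else 0.0
    return agreement, disagreements


agreement, to_discuss = compare_coding(coder_a, coder_b)
print(f"Agreement: {agreement:.0%}")
print("Discuss:", to_discuss)
```

The list of disagreements is usually more useful than the percentage itself, because each one is a prompt to refine the framework.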

You might work in Miro or Google Sheets. In Google Sheets, you might have a column for the original responses, and a column for each prioritisation unit (e.g., one to code ‘population’ and one to code ‘intervention’). For each prioritisation unit, you may have multiple columns (e.g., ‘Broad intervention’, ‘Specific intervention’).

Note that some responses may be irrelevant or too vague to be coded. You could mark these as excluded.

Deduplicating the long list

Once you’ve coded all the responses/options, make a new list where you remove duplicates. If you’ve coded at multiple levels, you’ll have to decide which level to deduplicate at. For example, do you include every unique specific intervention, or only the unique broad-level interventions? Do you care about every unique combination of population AND intervention, or just the unique populations that are mentioned? Note that you should not literally remove duplicates from the original dataset. Avoid deleting any data, as it may be useful to come back to.
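To make this concrete, here is a minimal Python sketch of deduplicating at a chosen level while leaving the original dataset untouched. The field names (`level_1`, `level_2`, `excluded`) and the third response are assumptions for illustration. The other responses and codes are borrowed from the case study examples in this guide.

```python
from collections import defaultdict

# Coded responses: one row per original response, never deleted.
coded_responses = [
    {"id": 1, "original": "Islet transplantation to address diabetic retinopathy resulting in less vision loss",
     "level_1": "Eye", "level_2": "Retinopathy", "excluded": False},
    {"id": 2, "original": "Early treatments for diabetic retinopathy to prevent progression to vision loss",
     "level_1": "Eye", "level_2": "Retinopathy", "excluded": False},
    {"id": 3, "original": "Better therapies for heart failure",  # hypothetical response
     "level_1": "Cardiac", "level_2": "Cardiomyopathy or heart failure", "excluded": False},
    {"id": 4, "original": "use technology to improve diabetes management",
     "level_1": "", "level_2": "", "excluded": True},  # too generic to code
]


def deduplicate(rows, levels=("level_1", "level_2")):
    """Map each unique combination of codes to the IDs of the responses behind it."""
    unique = defaultdict(list)
    for row in rows:
        if row["excluded"]:
            continue
        # Normalise case and whitespace so 'Eye' and ' eye' count as the same code.
        key = tuple(row[level].strip().lower() for level in levels)
        unique[key].append(row["id"])
    return dict(unique)


long_list = deduplicate(coded_responses)                         # specific level
broad_only = deduplicate(coded_responses, levels=("level_1",))   # broad level only
print(long_list)
```

Because the result points back to response IDs instead of overwriting them, you can still see how many responses mapped to each option, or re-run the deduplication at a different level later.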

| Case study How did we code the long list? We conducted inductive thematic analysis to organise the unmet needs identified in the survey. The inductively derived coding framework had two levels: Level 1 codes for the body system (for example, Cardiac), and Level 2 codes for the specific body part or issue within that system (Cardiomyopathy or heart failure). Two researchers independently coded 100 unmet needs to develop an initial coding framework. The research team discussed discrepancies, then refined the coding framework. One researcher coded the remaining unmet needs for each focus area. The research team met regularly throughout the coding process to maximise consistency of the approach and to further refine the coding framework. How did we deduplicate the long list? Once all unmet needs had been coded, we cleaned the dataset and removed duplicates. We removed incomplete survey responses and responses that could not be interpreted as unmet needs (such as generic statements like ‘use technology to improve diabetes management’). Removal of duplicates involved combining unmet needs that were very similar, but which used different wording, into a single coded unmet need (for example, the responses ‘Islet transplantation to address diabetic retinopathy resulting in less vision loss’ and ‘Early treatments for diabetic retinopathy to prevent progression to vision loss’ were combined into the unmet need ‘Eye, Retinopathy’). This meant we gave equal weight to all unmet needs generated in subsequent prioritisation, because the final deduplicated ‘long list’ did not convey how many survey responses had mapped to each coded unmet need. |

Phase 3: Prioritise the long list of unique options using criteria

Now you have the long list of unique options, it’s time to use the criteria you developed earlier to prioritise that list. At a high level, you want to know how each option performs according to the criteria you care about. You then calculate how well each option does overall, which allows you to order the options and determine your top priorities. Below I outline some key considerations.
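As a rough sketch of the ‘calculate how well each option does overall’ step, the Python below computes a weighted average across criteria and orders the options. The options, ratings, and weights are invented, and the code assumes every criterion is rated on the same scale with higher meaning better. A weighted average is only one of several ways to combine criteria.

```python
# Hypothetical weights: importance counts double.
weights = {"importance": 2, "mission_alignment": 1, "funding_potential": 1}

# Hypothetical ratings on a 1-5 scale, where higher is better on every criterion.
ratings = {
    "Option A": {"importance": 4, "mission_alignment": 2, "funding_potential": 3},
    "Option B": {"importance": 3, "mission_alignment": 5, "funding_potential": 4},
    "Option C": {"importance": 5, "mission_alignment": 2, "funding_potential": 2},
}


def overall_score(option_ratings, weights):
    """Weighted average of an option's ratings across all criteria."""
    total_weight = sum(weights.values())
    return sum(weights[c] * option_ratings[c] for c in weights) / total_weight


scores = {name: overall_score(r, weights) for name, r in ratings.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for name in ranked:
    print(f"{name}: {scores[name]:.2f}")
```

Changing the weights and re-running is a quick way to see how sensitive the ordering is to how much you care about each criterion.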

Sources of data for assessing options

There are two main sources of data for assessing options against criteria: 1) objective data and 2) people. The choice depends on the context: whether data is available or appropriate, whether you can gather the right people, what resourcing you have etc. You could also use objective data (e.g., cost of a potential program, carbon emissions estimates for different environmental problems) to assess some criteria, and people to assess others.

If you’re using people to assess some or all criteria, consider who is best placed to do this. This could include team members, experts, people who will be impacted by the outcome (e.g., users of a product or beneficiaries of an intervention), or the general public.

Methods for assessing options

It sounds simple to say ‘get people to assess the options’, but there are many ways you could do this (again depending on resourcing, expertise, context etc.). Below are just a couple of options.

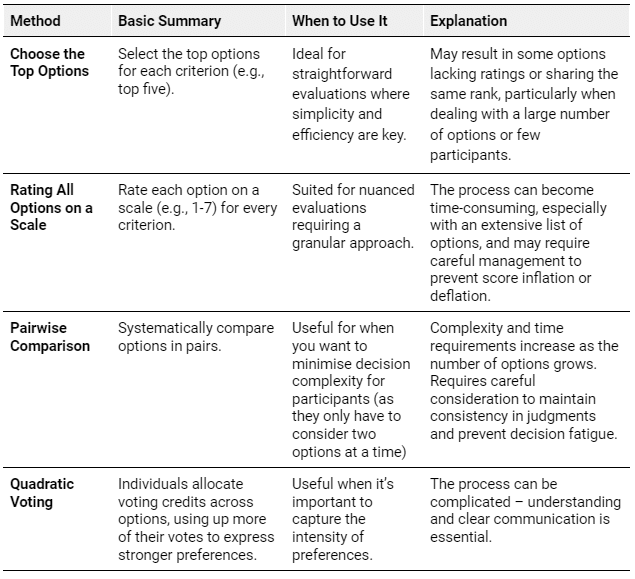

Methods to assess options against criteria.

Using people to assess options

If you’re using people to assess the options, consider how you’ll do this. In my experience, running a workshop can work well. Such an event could cover:

- Overview of project aims, process, and team;

- The purpose of the session, ground rules, and housekeeping;

- Brief participant introductions;

- Questions regarding process and aims of the session;

- Introduction to, and running of, prioritisation activity;

- Break while project team calculates results;

- Presenting top priorities against each of the criteria and overall;

- Breakout rooms to discuss results;

- Reporting back, next steps, and closing.

As with previous steps, consider whether you want participants to be involved in future stages or decisions, and communicate accordingly.

Interpreting the prioritisation results

One important element that’s listed above is discussing the prioritisation results. No matter how sophisticated your assessment process is, relying solely on the prioritised list will likely be an oversimplification. Participants can add nuance that you may want to consider when taking action. For example, they may point out that another project is already addressing the top priority, that there are issues with the coding process (e.g., “X and Y should be coded as the same thing”), or that particular voices were missing from the process.

Some questions to prompt discussion include:

- Were you surprised by the results? Why / why not?

- Are you aware of any initiatives, projects, or organisations currently addressing the priorities?

- How do you think we could make the most progress on these priorities?

- What potential risks or challenges do you foresee in addressing these priorities?

- Are there any reasons we should not pursue these priorities?

Key points from the discussion could be summarised and considered, along with the prioritisation results, when determining what action to take next.

| Case study What information did we use to assess options against criteria? We conducted three roundtables with experts / community representatives to apply the six pre-specified criteria to the deduplicated long lists. Each roundtable addressed one of the three focus areas. How did participants assess options against criteria? We provided participants with an overview of the project and time to read the long list of unmet needs. Participants then considered each prioritisation criterion in turn, and anonymously voted for their top three unmet needs according to that criterion, using an interactive polling platform. We calculated the votes for each unmet need across all criteria (with two criteria weighted at double). How did participants discuss the prioritisation results? Participants discussed the top five overall priorities in facilitated groups. Guiding questions encouraged participants to discuss their initial reactions to the results, their knowledge of the current state of the science, and how the impact of the program funding could be maximised. |
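For readers who want to reproduce this style of vote counting, here is a minimal Python sketch. It is not the code we used in the project: the criterion names, weights, and ballots are hypothetical, and it simply assumes each ballot lists one participant’s top three options for one criterion, with some criteria counting double.

```python
from collections import Counter

# Hypothetical criteria; two of them are weighted at double.
criterion_weights = {"impact": 2, "equity": 2, "feasibility": 1}

# Each ballot: (criterion, the participant's top three options for that criterion).
ballots = [
    ("impact", ["Need A", "Need B", "Need C"]),
    ("impact", ["Need A", "Need C", "Need D"]),
    ("equity", ["Need A", "Need B", "Need D"]),
    ("equity", ["Need A", "Need B", "Need C"]),
    ("feasibility", ["Need B", "Need C", "Need D"]),
    ("feasibility", ["Need A", "Need C", "Need D"]),
]


def tally(ballots, weights):
    """Sum votes per option across all criteria, applying each criterion's weight."""
    totals = Counter()
    for criterion, chosen in ballots:
        # dict.fromkeys drops accidental repeats within a ballot, keeping order.
        for need in dict.fromkeys(chosen):
            totals[need] += weights[criterion]
    return totals


totals = tally(ballots, criterion_weights)
top_three = totals.most_common(3)
print(top_three)
```

Keeping the per-criterion ballots, not just the totals, also lets you present the top priorities against each criterion separately.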

Take action

Once the top priorities are identified, take action aligned with your initial goals. Consider the immediate decisions that can be made and gather any necessary additional information. Communicate the results to relevant stakeholders, including the general public, colleagues, or board members. Follow up with individuals involved in the process to express gratitude, share results, and outline next steps. Finally, take a moment to reflect on the prioritisation process – evaluate its utility, consider lessons learned, and determine if you would apply a similar approach in future decision-making endeavours.

| Case study What action was taken based on the prioritisation results and roundtable discussions? The advisory board used the research report summarising the voting results and key discussion themes to finalise the top three priorities in each focus area. These formed the basis for research funding calls, which were then publicised. |

Acknowledgements

Thank you to Alexander Saeri for your extremely helpful feedback on this guide.

Funding disclosure

I did not receive funding to create this guide.

The case study discussed in this guide was funded by MTPConnect through its delivery of the Targeted Translation Research Accelerator program. The Targeted Translation Research Accelerator program is an initiative of the Australian Government’s Medical Research Future Fund.

[1] Most of these projects were conducted at BehaviourWorks Australia, a behaviour change research enterprise based at Monash University, Australia.

[2] Particular thanks to my colleague, Peter Bragge – a Professor of Evidence Based Policy and Practice at Monash Sustainable Development Institute, with extensive experience in prioritisation.

[3] Note about terminology: ‘Options’ refers to the things that you are prioritising. ‘Long list’ refers to the extensive (and ideally exhaustive) list of options that you gather and then prioritise.

[4] Artificial intelligence such as large language models (e.g., ChatGPT) could be integrated at various points throughout this process (e.g., to help generate or code options). Discussing this is out of scope for this guide.

[5] It’s also important to consider typical project management questions. When do you meet? Who is in charge of what? How do you make decisions? How do you communicate (e.g., Signal/Zoom)? How do you keep the timeline on track? Etc.

[6] To explain this further: if you’re prioritising based on both problems and populations, every unique combination of problem and population will be an option. You may end up with ten options for every one problem, because each of those specifies a different population. You may update your approach later on, depending on how big your long list is (e.g., if you have too many options in your long list, you may decide to just prioritise problems, or just prioritise populations – but not both).

[7] Even if you don’t impose a maximum limit, participants may essentially do this themselves. For example, it’s likely that they’ll just suggest the main things that come to mind, get bored, or be limited on time. This is a flaw of the process which should be acknowledged. In a way, there is an implicit prioritisation process already going on in the long-listing stage. If participants are only generating five options, it’s probably going to be the five most important in their mind.

[8] When you’re involving others in your prioritisation process, consider the benefits beyond the specific project. For example, it may be useful to update people on what your organisation is doing, build credibility, build your own connections, or foster a network by introducing various experts / like-minded people to each other.

The CE incubation programme application calls for the submission of a personal weighted factor model of possible career paths ahead of you. I think this report is perfect for that sort of decision making.

In terms of prioritisation for a single person or a student, I would really emphasise good criteria selection and weighting. The model itself can also be iterated on to build more informed versions.

Whilst I was constructing my own model it turned out that certain career paths had scores which were much more based on 'vibes' in their reasoning than on experience and knowledge. It directed me to equalise my understanding of different options.

It also helped remove an emotional overlay people usually fall into when making these decisions in their head, or even when writing down options or pros and cons. Something about putting a number on a weight makes you consider your reasoning much more closely. How much more do you care about pay vs location vs the amount you learn in the role or get from the experience?


Thanks for writing this up!

I love how this report addresses all of the uncertainties of the prioritisation process in a practical and granular manner!