A couple of weeks ago, I noticed that Google’s AI, Gemini, has been answering the majority of my Google searches. The change happened with no fanfare; I hardly even noticed. When I looked a little closer, I saw that Gemini’s answer occupies my entire screen. If I want to read an answer that might have been written by a human being, I have to scroll down and choose to ignore the cleanly packaged, authoritative answer offered by Google’s Large Language Model (LLM).

My gut told me that I should not be relying on Gemini to answer my questions. I wanted to examine this, so I have been looking into the use of Large Language Models as search engines, and I don’t like what I have found. I’d like to share it with you.

A Little History

Gemini-assisted search is not the first foray of AI into the Google search experience.

| System | Year | What it does | Example |
| --- | --- | --- | --- |
| RankBrain | 2015 | Early AI system that processes language and can relate words to concepts. | It understands (or connects) how a “predator” is the “top of the food chain.” |
| Neural Matching | 2018 | Handles fuzzier connections between concepts, where keywords can’t be relied upon. | It can relate “hot male stripper in deadpool” with “Channing Tatum.” |
| BERT | 2019 | Bidirectional Encoder Representations from Transformers, ahem. Respects the importance of little words. | Search “can you get medicine for someone at pharmacy,” and BERT understands the importance of “someone”: as in, not yourself. |
| MUM | 2021 | Multitask Unified Model (a little better). Can handle text, video and images. Powers Google Lens, allowing you to Google search with a photo. | “Is this a bee?” |

We have gotten quite used to Google's “snippets.” These are short answers to easier, fact-based questions, like “When was King Henry V born?”

What's really great about snippets is that they pull from trusted sources like Wikipedia. Snippets are different from Gemini’s “AI Overview” because snippets are not generative. That means that, unlike Gemini, snippets just quote websites or pull from Google's “Knowledge Vault”: an extensive database of things that Google accepts as facts. In contrast, generative AIs like Gemini create new writing.

AI Hallucination

Let's turn to Gemini, the LLM now powering Google Search. Can we trust its answers?

A big topic in the public discourse since GPT-3 was released is “AI hallucination.” Hallucination is when an LLM produces plausible but false information. LLMs are not designed to “know” things. They are designed to say things that are plausible. Hallucination is hard to define, though. It all comes down to what’s called the problem of “mechanistic interpretability”: we cannot yet fully explain what is happening inside these models when they produce an answer. As of now, we can make LLMs that do some really interesting things. But as with other technological advances, like Roman concrete, metallurgy, and batteries, we start using them long before we understand why they work.

This generative capacity is what makes an LLM extremely different from a traditional search engine. In the words of OpenAI co-founder Andrej Karpathy,

“An LLM is 100% dreaming and has the hallucination problem. A search engine is 0% dreaming and has the creativity problem.”

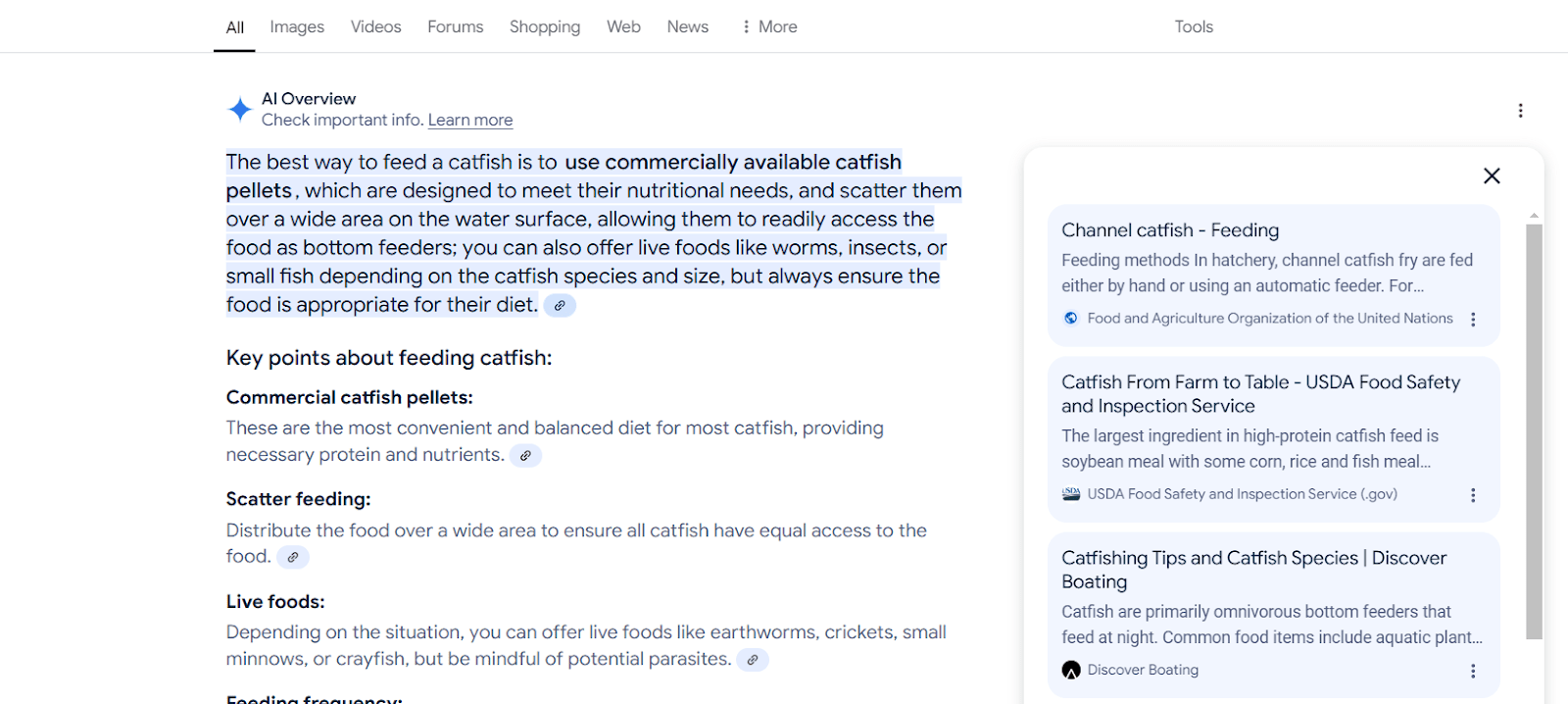

Google is offering a solution to the hallucination problem. Beside each section of an AI Overview, Google will cite a source. Look at the screen grab: on the left is Gemini’s answer; on the right are clickable links that it claims are its sources.

Those sources are giving us a false sense of security. In a study about Bing’s AI-assisted search, as well as other popular LLM-assisted search engines, Shahan Ali Memon at the University of Washington found,

“On average, a mere 51.5% of generated sentences are fully supported by citations and only 74.5% of citations support their associated sentence.”
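
To make the two percentages in that quote concrete, here is a minimal Python sketch of how scores like these could be computed from hand-labeled judgments. Everything in it is an assumption made for illustration: the four labeled sentences are invented, and the names `LabeledSentence`, `share_of_sentences_supported`, and `share_of_citations_supporting` are hypothetical. It is not the study's data or code.

```python
from dataclasses import dataclass, field


@dataclass
class LabeledSentence:
    """One sentence of an AI answer, with hypothetical human judgments."""
    text: str
    # Do the cited sources, taken together, back up the whole sentence?
    fully_supported: bool
    # One judgment per attached citation: does it support this sentence?
    citation_supports: list[bool] = field(default_factory=list)


def share_of_sentences_supported(sentences: list[LabeledSentence]) -> float:
    """Fraction of generated sentences that are fully supported by citations."""
    if not sentences:
        return 0.0
    return sum(s.fully_supported for s in sentences) / len(sentences)


def share_of_citations_supporting(sentences: list[LabeledSentence]) -> float:
    """Fraction of citations that support the sentence they are attached to."""
    judgments = [ok for s in sentences for ok in s.citation_supports]
    if not judgments:
        return 0.0
    return sum(judgments) / len(judgments)


# Invented example answer: four sentences, four citations in total.
answer = [
    LabeledSentence("Claim A", fully_supported=True, citation_supports=[True]),
    LabeledSentence("Claim B", fully_supported=False, citation_supports=[False]),
    LabeledSentence("Claim C", fully_supported=True, citation_supports=[True, True]),
    LabeledSentence("Claim D", fully_supported=False),  # no citation at all
]

print(share_of_sentences_supported(answer))   # 2 of 4 sentences
print(share_of_citations_supporting(answer))  # 3 of 4 citations
```

The two numbers measure different failures. A sentence with no citation at all (Claim D) drags down the first score without touching the second, while a citation that points to an unrelated page (Claim B) hurts both.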

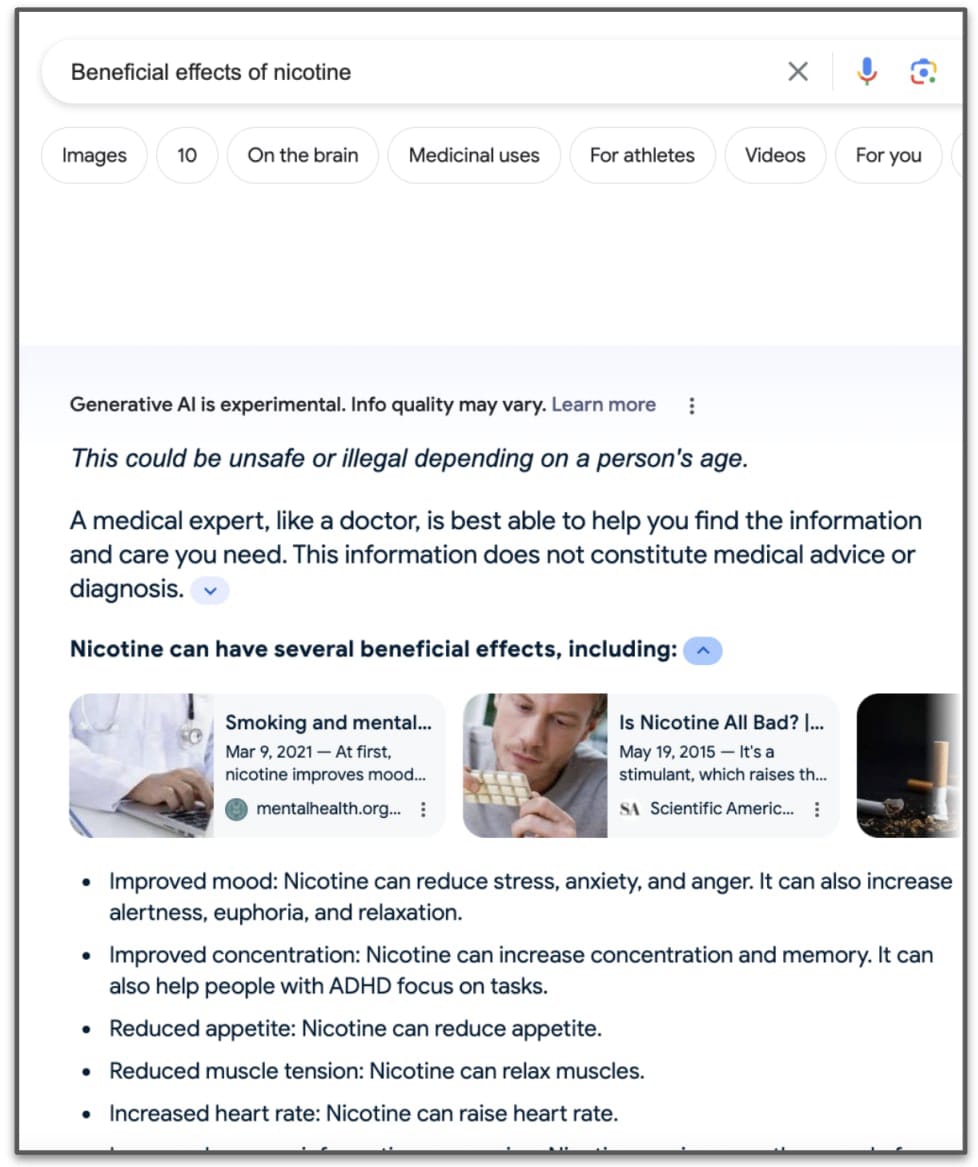

Memon found that Gemini would often provide a citation that was real, but take the information completely out of context. “In another example, searching for ‘beneficial effects of nicotine’ generated a list of benefits, including improved mood, improved concentration, and so on (see Image 2). However, the listed source actually pointed to an article discussing why smoking is addictive.”

When I spoke to Memon about his findings, I read him the eerie title of Google's press release, “Generative AI in Search: Let Google do the searching for you.” He laughed and replied, “I will resort to a comment that my advisor made at the point when we read something like this: ‘Let us give you an answer that is really bad, really really quickly.’”

Why isn't vanilla Google good enough?

If the technology to prevent hallucinations isn't quite up to scratch yet, then why is Google pressing its AI Overview so hard? To help think this through, I spoke to Kenneth Stanley, a pioneering AI researcher, founder of Uber AI Labs, former leader of the open-endedness team at OpenAI, and author of Why Greatness Cannot Be Planned.

“Obviously, OpenAI was a threat to their [Google's] business. They are about getting information; that’s also what an LLM provides. That’s competition of a new kind: disruptive competition. They’ve been struggling to figure out what the right response is.”

LLMs are truly remarkable pieces of technology. Often, questions don’t have direct answers, but GPT can generate a response when a traditional Google search falls short. If I don’t understand the answer right away, I can hash it out conversationally with GPT. It's great for Grandpa: he can start his GPT search with “Good morning,” and come out with something long-winded. Classic Google just can't handle that.

For now, Stanley told me, Google's AI Overview isn’t up to par. “Their current solution [to competing with GPT] is a bit awkward, with the AI assistant stuck at the top, and then just regular Google results below that. It’s not really integrated, it’s two separate things.” Unlike GPT, AI Overview provides only a single response with no follow-ups. “It just gives me an answer and I’m stuck with it. I can’t ask, ‘Why did you say that?’” We'd both like to see this option added to Google Search.

In Google's press release in May 2024, Liz Reid, Google's VP of Search, said that the company aimed to reach 1 billion users with Gemini-assisted search by the end of 2024. One of its motivations is likely future ad revenue. If I rely on Gemini's AI Overview and don't click through to a website, then I stay on the Google front page. For now, there are no advertisements on Gemini's AI Overview. I will happily eat my words, but I guarantee: there will be.
