I think there is an issue with the community-wide allocation of effort. A very large proportion of our effort goes into preparation work, setting the community up for future successes; and very little goes into external-focused actions which would be good even if the community disappeared. I'll talk about why I think this is a problem (even though I love preparation work), and what types of things I hope to see more of.

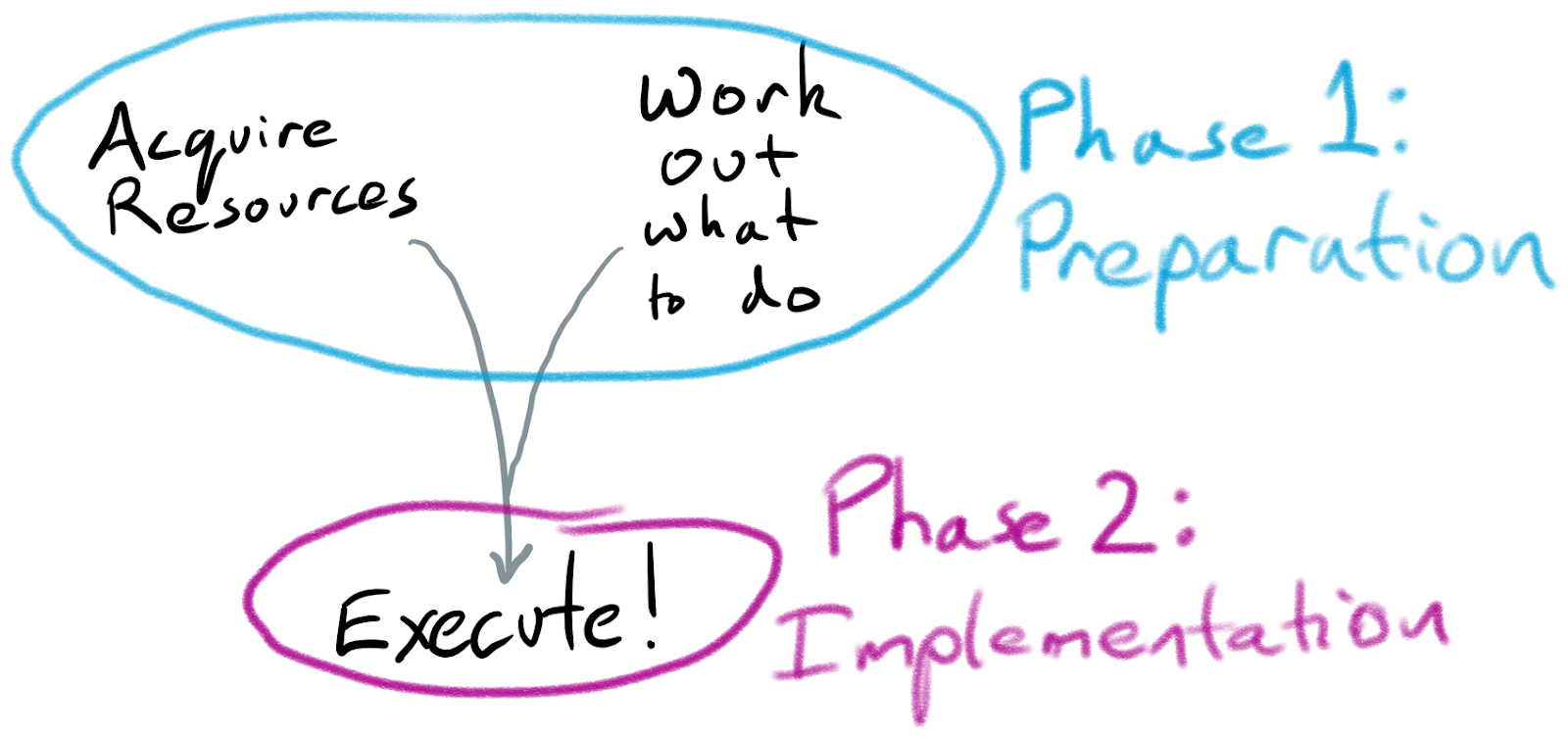

Phase 1 and Phase 2

A general strategy for doing good things:

- Phase 1: acquire resources and work out what to do

- Phase 2: spend down the resources to do things

Note that Phase 2 is the bit where things actually happen. Phase 1 is a necessary step, but on its own it has no impact: it is just preparation for Phase 2.

To understand if something is Phase 2 for the longtermist EA community, we could ask “if the entire community disappeared, would the effects still be good for the world?”. For things which are about acquiring resources (raising money, recruiting people, or gaining influence), the answer is no. For much of the research that the community does, the path to impact is either by using the research to gain more influence, or having the research inform future longtermist EA work, so the answer is again no. However, writing an AI alignment textbook would be useful to the world even absent our communities, so would be Phase 2. (Some activities live in a grey area: for example, increasing scope sensitivity or concern for existential risk across broad parts of society.)

It makes sense to frontload our Phase 1 activities, but we do want to also do Phase 2 in parallel for several reasons:

- Doing enough Phase 2 work helps to ground our Phase 1 work by ensuring that it’s targeted at making the Phase 2 stuff go well

- Moreover we can benefit from the better feedback loops Phase 2 usually has

- We can’t pivot (orgs, careers) instantly between different activities

- A certain amount of Phase 2 helps bring in people who are attracted by demonstrated wins

- We don’t know when the deadline for crucial work is (and some of the best opportunities may only be available early) so we want a portfolio across time

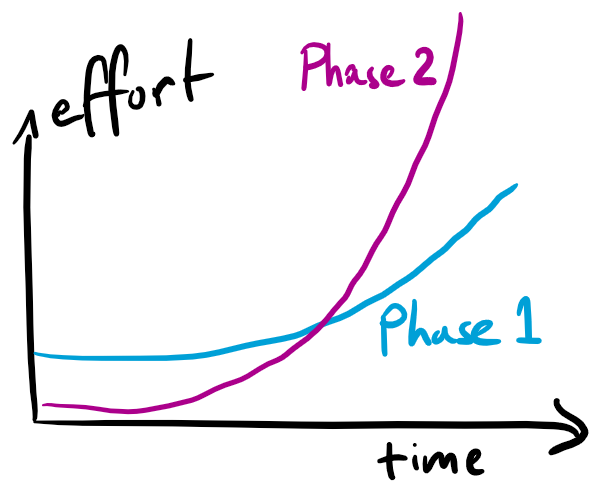

So the picture should look something like this:

I’m worried that it does not. We aren’t actually doing much Phase 2 work, and we also aren’t spending that much time thinking about what Phase 2 work to be doing. (Although I think we’re slowly improving on these dimensions.)

Problem: We are not doing Phase 2 Work

When we look at the current longtermist portfolio, there’s very little Phase 2 work[1]. A large majority of our effort is going into acquiring more resources (e.g. campus outreach, or writing books), or into working out what the-type-of-people-who-listen-to-us should do (e.g. global priorities research).

This is what we could call an inaction trap. As a community we’re preparing but not acting. (This is a relative of the meta-trap, but we have a whole bunch of object-level work e.g. on AI.)

How does AI alignment work fit in?

Almost all AI alignment research is Phase 1 — on reasonable timescales it’s aiming to produce insights about what future alignment researchers should investigate (or to gain influence for the researchers), rather than producing things that would leave the world in a better position even if the community walked away.

But if AI alignment is the crucial work we need to be doing, and it's almost all Phase 1, could this undermine the claim that we should increase our focus on Phase 2 work?

I think not, for two reasons:

- Lots of people are not well suited to AI alignment work, but there are lots of things that they could productively be working on, even if AI is the major determinant of the future (see below)

- Even within AI alignment, I think an increased focus on "how does this end up helping?" could make Phase 1 work more grounded and less likely to accidentally be useless

Problem: We don’t really know what Phase 2 work to do

This may be a surprising statement. Culturally, EA encourages a lot of attention on what actions are good to take, and individuals talk about this all the time. But I think a large majority of the discussion is about relatively marginal actions — what job should an individual take; what project should an org start. And these discussions often relate mostly to Phase 1 goals, e.g. How can we get more people involved? Which people? What questions do we need to understand better? Which path will be better for learning, or for career capital?

It’s still relatively rare to have discussions which directly assess different types of Phase 2 work that we could embark on (either today or later). And while there is a lot of research which has some bearing on assessing Phase 2 work, a large majority of that research is trying to be foundational, or to provide helpful background information.

(I do think this has improved somewhat in recent times. I especially liked this post on concrete biosecurity projects. And the Future Fund project list and project ideas competition contain a fair number of sketch ideas for Phase 2 work.)

Nonetheless, working out what to actually do is perhaps the central question of longtermism. I think what we could call Phase 1.5 work — developing concrete plans for Phase 2 work and debating their merits — deserves a good fraction of our top research talent. My sense is that we're still significantly undershooting on this.[2]

Engaging in this will be hard, and we’ll make lots of mistakes. I certainly see the appeal of keeping to the foundational work where you don’t need to stick your neck out: it seems more robust to gradually build the edifice of knowledge we can have high confidence in, or grow the community of people trying to answer these questions. But I think that we’ll get to better answers faster if we keep on making serious attempts to actually answer the question.

Towards virtuous cycles?

Since Phase 1.5 and Phase 2 work are complements, when we're underinvested in both the marginal analysis can suggest that neither is that worthwhile: why implement ideas that suck? Or why invest in coming up with better ideas if nobody will implement them? But as we get more analysis of what Phase 2 work is needed, it should be easier for people to actually try these ideas out. And as we get people diving into implementation, it should get more people thinking more carefully about what Phase 2 work is actually helpful.

Plus, hopefully the Phase 2 work will actually just make the world better, which is kind of the whole thing we’re trying to do. And better yet, the more we do it, the more we can honestly convey to people that this is the thing we care about and are actually doing.

To be clear, I still think that Phase 1 activity is great. I think that it’s correct that longtermist EA has made it a major focus for now — far more than it would attract in many domains. But I think we’re noticeably above the optimum at the moment.[3]

[1] When I first drafted this article 6 months ago I guessed <5%. I think it's increased since then; it might still be <5% but I'd feel safer saying <10%, which I think is still too low.

[2] This is a lot of the motivation for the exercise of asking "what do we want the world to look like in 10 years?"; especially if one excludes dimensions that relate to the future success of the EA movement, it's prompting for more Phase 1.5 thinking.

[3] I've written this in the first person, but as is often the case my views are informed significantly by conversations with others, and many people have directly or indirectly contributed to this. I want to especially thank Anna Salamon and Nick Beckstead for helpful discussions; and Raymond Douglas for help in editing.

Thanks for writing this. I had had similar thoughts. I have some scattered observations:

One worry I have is the possibility that the longtermist community (especially the funders) is actively repelling and pushing away the driver types – people who want to dive in and start doing (Phase 2 type) things.

This is my experience. I have been pushing forward Phase 2 type work (here) but have been told various things like: not to scale up, that phase 1.5 work is not helpful, that we need more research first to know what we are doing, and that any interaction with the real world is too risky. Such responses have helped push me away. And I know I am not the only one (e.g. the staff at the Longtermist Entrepreneurship Project seemed to worry about this feature of longtermist culture too).

Not quite sure how to fix this. Maybe FTX will help. Maybe we should tell entrepreneurial/policy folk not to apply to the LTFF or other Phase 2 sceptical funders. Maybe just more discussion of the topic.

PS. Owen, I am glad you are part of this community and thinking about these things. I thought this post was amazing. So thank you for it. And great reply John.

I agree with you, and with John and the OP. I have had exactly the same experience of the Longtermist community pushing away Phase 2 work as you have - particularly in AI Alignment. If it's not purely technical or theoretical lab work then the funding bodies have zero interest in funding it, and the community has barely any more interest than that in discussing it. This creates a feedback loop of focus.

For example, there is a potentially very high impact opportunity in the legal sector right now to make a positive impact in AI Alignment. There is currently a string of ongoing court cases over AI transparency in the UK, particularly relating to government use, which could either result in the law saying that AI must be transparent to academia and the public for audit (if the human rights side wins) OR the law saying that AI can be totally secret even when its use affects the public without them knowing (if the government wins). No prizes for guessing which side would better impact s-risk and AI Alignment research on misalignment as it evolves.

That's a big oversimplification obviously, boiled down for forum use, but every AI Alignment person I speak to is absolutely horrified at the idea of getting involved in actual, adversarial AI Policy work. Saying "Hey, maybe EA should fund some AI and Law experts to advise the transparency lobby/lawyers on these cases for free" or "maybe we should start informing the wider public about AI Alignment risks so we can get AI Alignment on political agendas" at an AI Alignment workshop gets a similar reaction to suggesting we all go skydiving without parachutes and see who reaches the ground first.

This lack of desire for Phase 2 work, or non-academic direct impact, harms us all in the long run. Most of the issues in AI alignment for example, or climate policy, or nuclear policy, require public and political will to become reality. By sticking to theoretical and Phase 1 work which is out of reach or out of interest to most of the public, we squander the opportunity to show our ideas to the public at large and generate support - support we need to make many positive changes a reality.

It's not that Phase 1 work isn't useful, it's critical, it's just that Phase 2 work is what makes Phase 1 work a reality instead of just a thought experiment. Just look at any AI Governance or AI Policy group right now. There are a few good ones but most AI Policy work is research papers or thought experiments because they judge their own impact by this metric. If you say "The research is great, but what have you actually changed?" a lot of them flounder. They all state they want to make changes in AI Policy, but simultaneously have no concrete plan to do it and refuse all help to try.

In Longtermism, unfortunately, the emphasis tends to be much more on theory than action, which makes sense. This is in some cases a very good thing because we don't want to rush in with rash actions and make things worse - but if we don't take any actions then what was the point of it all? All we did is sit around all day blowing other people's money.

Maybe the Phase 2 work won't work. Maybe that court case I mentioned will go wrong despite best efforts, or result in unintended consequences, or whatever. But the thing is without any Phase 2 work we won't know. The only way to make action effective is to try it and get better at it and learn from it.

Because guess what? Those people who want AI misalignment, who don't care about climate change, who profit from pandemics, or who want nuclear weapons - they've got zero hesitation about Phase 2 at all.

I agree with quite a bit of this. I particularly want to highlight the point about combo teams of drivers and analytical people — I think EA doesn't just want more executors, but more executor/analyst teams that work really well together. I think that because of the lack of feedback loops on whether work is really helpful for longterm outcomes we'll often really need excellent analysts embedded at the heart of execute-y teams. So this means that as well as cultivating executors we want to cultivate analyst types who can work well with executors.

Sorry for the long and disorganized comment.

I agree with your central claim that we need more implementation, but I either disagree or am confused by a number of other parts of this post. I think the heart of my confusion is that it focuses on only one piece of end to end impact stories: Is there a plausible story for how the proposed actions actually make the world better?

You frame this post as “A general strategy for doing good things”. This is not what I care about. I do not care about doing things, I care about things being done. This is semantic but it also matters? I do not care about implementation for its own sake, I care about impact. The model you use assumes preparation, implementation and the unspoken impact. If the action leading to the best impact is to wait, this is the action we should take, but it’s easy to overlook this if the focus is on implementation. So my Gripe #1 is that we care about impact, not implementation, and we should say this explicitly. We don’t want to fall into a logistic trap either [1].

The question you pose is confusing to me: “if the entire community disappeared, would the effects still be good for the world?”

I’m confused by the timeline of the answer to this question (the effects in this instant or in the future?). I’m also confused by what the community disappearing means – does this mean all the individual people in the community disappear? As an example, MLAB skills up participants in machine learning; it is unclear to me if this is Phase 1 or Phase 2 because I’m not sure the participants disappear; if they disappear then no value has been created, but if they don’t disappear (and we include future impact) they will probably go make the world better in the future.

If the EA community disappeared but I didn’t, I would still go work on alignment. It seems like this is the case for many EAs I know. Such a world is better than if the EA community never existed, and the future effects on the world would be positive by my lights, but no phase 2 activities happened up until that point. It seems like MLAB is probably Phase 1, as is university, as is the first half of many people’s careers where they are failing to have much impact and are skill/career capital building.

If you do mean disappearing all community members, is this defined by participation in the community or level of agreement with key ideas (or something else)? I would consider it a huge win if OpenAI’s voting board of directors were all members of the EA community, or if they had EA-aligned beliefs; this would actually make us less likely to die. Therefore, I think doing outreach to these folks, or more generally “educating people in key positions about the risks from advanced AI” is a pretty great activity to be doing – even though we don’t yet know most of the steps to AGI going well. It seems like this kind of outreach is considered Phase 1 in your view because it’s just building the potential influence of EA ideas.

So Gripe #2: The question is ambiguous so I can’t distinguish between Phase 1 and 2 activities on your criteria.

You give the example of writing an AI alignment textbook as Phase 2 work.

I disagree with this. I don’t think writing a textbook actually makes the world much better. (An AI alignment textbook exists) is not the thing I care about; (aligned AI making the future of humanity go well) is the thing I care about. There’s like 50 steps from the textbook existing to the world being saved, unless your textbook has a solution for alignment, and then it’s only like 10 steps[2]. But you still need somebody to go do those things.

In such a scenario, if we ask “if the entire community disappeared [including all its members], would the effects still be good for the world?”, then I would say that the textbook existing is counterfactually better than the textbook not existing, but not by much. I don’t think the requisite steps needed to prevent the world from ending would be taken. To me, assuming (the current AI alignment community all disappears) cuts our chances of survival in half, at least[3]. I think this framing is not the right one because it is unlikely that the EA or alignment communities will disappear, and I think the world is unfortunately dependent on whether or not these communities stick around. To this end, I think investing in the career and human capital of EA-aligned folks who want to work on alignment is a class of activities relatively likely to improve the future. Convincing top AI researchers and math people etc. is also likely high EV, but you’re saying it’s Phase 2. Again, I don’t care about implementation, I care about impact.

I would love to hear AI alignment specific Phase 2 activities that seem more promising than “building the resource bucket (# of people, quality of ideas, $ to a lesser extent, skills of people) of people dedicated to solving alignment”. By more promising I mean have a higher expected value or increase our chances of survival more. Writing a textbook doesn’t pass the test, I don’t think. There are some very intractable ideas I can think of, like the UN creating a compute monitoring division. Of the FTX Future Fund ideas, AI Alignment Prizes are maybe Phase 2 depending on the prize, but it depends on how we define the limit of the community; probably a lot of good work deserving of a prize would result in an Alignment Forum or LessWrong post without directly impacting people outside these communities much. Writing about AI Ethics suffers from the same problem as the alignment textbook because it just relies on other people (who probably won’t) taking it seriously.

Gripe #3: In terms of AI Alignment, the cause area I focus on most, we don’t seem to have promising Phase 2 ideas but some Phase 1 ideas seem robustly good.

I guess I think AI alignment is a problem where not many things actually help. Creating an aligned AGI helps (so research contributing to that goal has high EV, even if it’s Phase 1), but it’s only something we get one shot at. Getting good governance helps; much of the way to do this is Phase 1 of aligned people getting into positions of power; the other part is creating strategy and policy etc; CSET could create an awesome plan to govern AGI, but, assuming policy makers don’t read reports from disappeared people, this is Phase 1. Policy work is Phase 1 up until there is enough inertia for a policy to get implemented well without the EA community. We’re currently embarrassingly far from having robustly good policy ideas (with a couple exceptions). Gripe 3.5: There’s so much risk of accidental harm from acting soon, and we have no idea what we’re doing.

I agree that we need implementation, but not for its own sake. We need it because it leads to impact or because it’s instrumentally good for getting future impact (as you mention, better feedback, drawing in more people, time diversification based on uncertainty). The irony and cognitive dissonance of being a community dedicated to doing lots of good that then spends most of its time thinking does not elude me; as a group organizer at a liberal arts college I think about this quite a bit.

I think the current allocation between Phase 1 and Phase 2 could be incorrect, and you identify some decent reasons why it might be. What would change my mind is a specific plan where having more Phase 2 activities actually increases the EV of the future. In terms of AI Alignment, Phase 1 activities just seem better in almost all cases. I understand that this was a high-level post, so maybe I'm asking for too much.

[1] The concept of a logistics magnet is discussed in Chapter 11 of “Did That Just Happen?!: Beyond “Diversity”―Creating Sustainable and Inclusive Organizations” (Wadsworth, 2021). “This is when the group shifts its focus from the challenging and often distressing underlying issue to, you guessed it, logistics.” (p. 129)

[2] Paths to impact like this are very fuzzy. I’m providing some details purely to show there’s lots of steps and not because I think they’re very realistic. Some steps might be: a person reads the book, they work at an AI lab, they get promoted into a position of influence, they use insights from the book to make some model slightly more aligned and publish a paper about it; 30 other people do similar things in academia and industry, eventually these pieces start to come together and somebody reads all the other papers and creates an AGI that is aligned, this AGI takes a pivotal act to ensure others don’t develop misaligned AGI, we get extremely lucky and this AGI isn’t deceptive, we have a future!

[3] I think it sounds self-important to make a claim like this, so I’ll briefly defend it. Most of the world doesn’t recognize the importance or difficulty of the alignment problem. The people who do and are working on it make up the alignment community by my definition; probably a majority consider themselves longtermist or EAs, but I don’t know. If they disappeared, almost nobody would be working on this problem (from a direction that seems even slightly promising to me). There are no good analogies, but... If all the epidemiologists disappeared, our chances of handling the next pandemic well would plunge. This is a bad example partially because others would realize we have a problem and many people have a background close enough that they could fill in the gaps.

Re. Gripe #2: I appreciate I haven't done a perfect job of pinning down the concepts. Rather than try to patch them over now (I think I'll continue to have things that are in some ways flawed even if I add some patches), I'll talk a little more about the motivation for the concepts, in the hope that this can help you to triangulate what I intended:

Thanks for the clarification! I would point to this recent post on a similar topic to the last thing you said.

Re. Gripe #3 (/#3.5): I also think AI stuff is super important and that we're mostly not ready for Phase 2 stuff. But I'm also very worried that a lot of work people do on it is kind of missing the point of what ends up mattering ...

So I think that AI alignment etc. would be in a better place if we put more effort into Phase 1.5 stuff. I think that this is supported by having some EA attention on Phase 2 work for things which aren't directly about alignment, but affect the background situation of the world and so are relevant for how well AI goes. Having the concrete Phase 2 work there encourages serious Phase 1.5 work about such things — which probably helps to encourage serious Phase 1.5 work about other AI things (like how we should eventually handle deployment).

Thanks for writing this! One thing that might help would be more examples of Phase 2 work. For instance, I think that most of my work is Phase 2 by your definition (see here for a recent round-up). But I am not entirely sure, especially given the claim that very little Phase 2 work is happening. Other stuff in the "I think this counts but not sure" category would be work done by Redwood Research, Chris Olah at Anthropic, or Rohin Shah at DeepMind (apologies to any other people who I've unintentionally left out).

Another advantage of examples is it could help highlight what you want to see more of.

I hope it's not surprising, but I'd consider Manifold Markets to be Phase 2 work, too.

I have a related draft I've been meaning to post forever, "EA needs better software", with some other examples of future kinds of Phase 2 work. (Less focused on Longtermism though)

If anyone's specifically excited about doing Phase 2 work - reach out, we're hiring!

You should post that draft, I've been thinking the same stuff and would like to get the conversation started.

One set of examples is in this section of another post I just put up (linked from a footnote in this post), but that's pretty gesturing / not complete.

I think that for lots of this alignment work there's an ambiguity about how much to count the future alignment research community as part of "longtermist EA" which creates ambiguity about whether the research is itself Phase 2. I think that Redwood's work is Phase 1, but it's possible that they'll later produce research artefacts which are Phase 2. Chris Olah's old work on interpretability felt like Phase 2 to me; I haven't followed his recent work but if the insights are mostly being captured locally at Anthropic I guess it seems like Phase 1, and if they're being put into the world in a way that improves general understanding of the issues then it's more like Phase 2.

Your robustness and science of ML work does look like Phase 2 to me, though again I haven't looked too closely. I do wish that there was more public EA engagement with e.g. the question of "how good is science of ML work for safeguarding the future?" — this analysis feels like a form of Phase 1.5 work that's missing from the public record (although you may have been doing a bunch of this in deciding to work on that).

It's possible btw that I'm just empirically wrong about these percentages of effort! Particularly since there's so much ambiguity around some of the AI stuff. Also possible that things are happening fairly differently in different corners of the community and there are issues about lack of communication between them.

FWIW I think that compared to Chris Olah's old interpretability work, Redwood's adversarial training work feels more like phase 2 work, and our current interpretability work is similarly phase 2.

Thanks for this; it made me notice that I was analyzing Chris's work more in far mode and Redwood's more in near mode. Maybe you're right about these comparisons. I'd be interested to understand whether/how you think the adversarial training work could most plausibly be directly applied (or if you just mean "fewer intermediate steps till eventual application", or something else).

Who's the "we" in "we are not doing phase 2 work"?

You seem to have made estimates that "we" are not doing phase 2 work, but I don't understand where such estimates come from, and they aren't sourced.

If the point of your post is "more people should think about doing Phase 2 work" then I totally agree with the conclusion! But if your argument hinges on the fact that "we" aren't doing enough of it, I think that might be wrong because it is totally non-obvious to me how to measure how much is being done.

This seems like an easy pitfall, to squint at what's happening on the EA forum or within EA-labeled orgs and think "gee that's mostly Phase 1 work", without considering that you may have a biased sample of a fuzzily-defined thing. In this case it seems perfectly obvious that things explicitly labeled EA are going to lean Phase 1, but things influenced by EA are dramatically larger, mostly invisible, and obviously going to lean Phase 2.

(Context: I run a 2000+ person org, Wave, founded under EA principles but presumably not being counted in the "we" who are doing Phase 2 work because we're not posting on the Forum all the time, or something.)

I was thinking that Wave (and GiveWell donations, etc) weren't being counted because Owen is talking specifically about "longtermist EA"?

Yeah I did mean "longtermist EA", meaning "stuff that people arrived at thinking was especially high priority after taking a long hard look at how most of the expected impact of our actions is probably far into the future and how we need to wrestle with massive uncertainty about what's good to do as a result of that".

I was here imagining that the motivation for working on Wave wasn't that it seemed like a top Phase 2 priority from that perspective. If actually you start with that perspective and think that ~Wave is one of the best ways to address it, then I would want to count Wave as Phase 2 for longtermist EA (I'd also be super interested to get into more discussion about why you think that, because I'd have the impression that there was a gap in the public discourse).

Thanks. I definitely can't count Wave in that category because longtermism wasn't a thing on my radar when Wave was founded. Anyway, I missed that in your original post and I think it somewhat invalidates my point; but only somewhat.

I don't think predating longtermism rules out Wave. I would count Open Phil's grants to the Johns Hopkins Center for Health Security, which was established before EA (let alone longtermism), because Open Phil chose to donate to them for longtermist reasons. Similarly, if you wanted to argue that advancing Wave was one of our current best options for improving the long term future, that would be an argument for grouping Wave in with longtermist work.

(I'm really happy that you and Wave are doing what you're doing, but not because of direct impact on the long-term future.)

Jeff's comment (and my reply) covers ~50% of my response here, with the remaining ~50% splitting as ~20% "yeah you're right that I probably have a sampling bias" and ~30% "well we shouldn't be expecting all the Phase 2 stuff to be in this explicitly labelled core, but it's a problem that it's lacking the Phase 1.5 stuff that connects to the Phase 2 stuff happening elsewhere ... this is bad because it means meta work in the explicitly labelled parts is failing to deliver on its potential".

Quick meta note to say I really enjoyed the length of this post, exploring one idea in enough detail to spark thoughts but highly readable. Thank you!

I'd find it really useful to see a list of recent Open Phil grants, categorised by phase 2 vs. 1.

This would help me to better understand the distinction, and also make it more convincing that most effort is going into phase 1 rather than phase 2.

Here's a quick and dirty version of taking the OP grant database and then for ones in the last 9 months categorizing them first by whether they seemed to be motivated by longtermist considerations and for the ones I marked as yes by what phase they seemed to be.

Of 24 grants I thought were unambiguously longtermist, there was 1 I counted as unambiguously Phase 2. There were a couple more I thought might well be Phase 2, and a handful which might be Phase 2 (or have Phase 2 elements) but I was sort of sceptical, as well as three more which were unambiguously Phase 2 but I was uncertain whether they were made from longtermist motivations.

I may well have miscategorized some things!

Super helpful, thank you!

Just zooming in on the two biggest ones. One was CSET, which I think I understand why is Phase 1.

The other is this one: https://www.openphilanthropy.org/focus/global-catastrophic-risks/potential-risks-advanced-artificial-intelligence/massachusetts-institute-of-technology-ai-trends-and-impacts-research-2022

Is this Phase 1 because it's essentially an input to future AI strategy?

Yeah. I don't have more understanding of the specifics than are given on that grant page, and I don't know the theory of impact the grantmakers had in mind, but it looks to me like something that's useful because it feeds into future strategy by "our crowd", rather than because it will have outputs that put the world in a generically better position.

I have been ruminating about this issue within EA for ages, and recently got the opportunity to speak to people about it at EAG and was somewhat alarmed at hearing quite half-hearted responses to the question - So thank you for writing this up in an accessible way.

I think as you were suggesting in 'virtuous cycles', phase 2 work (or research) could come to significantly affect phase 1 work.

E.g. if phase 2 research or work on AI alignment came to find that influencing the other groups developing AI to implement the successful phase 1 work is not feasible (foreign governments for example), then it may trigger a reconfiguration of what phase 1 work needs to look like. I.e. is the intention of phase 1 to solve the alignment problem, or does it have to become to solve alignment and be the first to develop AGI? (Excuse my superficial knowledge of AI alignment; the example, not the details, is what's relevant!)

I've also heard from a phase 2 type AI group that they have struggled to get funding and that there are very few people working in this area, suggesting again that your point is very valid and worth attention.

You spent too much time in Phase 1 :P

+1, generally excited about recent EA momentum towards doing the thing.

Unsurprisingly, I see Elicit as Phase 2 work and am excited for more Phase 2 work.

I totally agree with you! In my conversations, there are even a lot of people wanting to work on longtermist issues (to do that Phase 2 work) and we seem to have ideas and funding - but it isn't translating to action and implementation. A challenging issue ...

Thanks for this! I'm curious to better understand what you mean by Phase 1.5 work--do you have in mind work that aims to identify intermediate goals for the community (A) at a somewhat high level (e.g., "increase high-skill immigration to the US") or (B) at a more tactical level (e.g., "lobby Senators to expand the optional practical training program")? I'm a bit confused, since:

(Another way my confusion could be resolved is if you were including AI strategy and AI governance under AI alignment. Although then, "Lots of people are not well suited to AI alignment work" seems less true.)

I meant to include both (A) and (B) -- I agree that (A) is a bottleneck right now, though I think doing this well might include some reasonable fraction of (B).

The best use of time and resources (in the Phase 2 sense) is probably to recruit AI capabilities researchers into AI safety. Uncertainty is not impossible to deal with, and is extremely likely to improve from experience.

That seems archetypically Phase 1 to me? (There's a slight complication about the thing being recruited to not quite being EA)

But I also think most people doing Phase 1 work should stay doing Phase 1 work! I'm making claims about the margin in the portfolio.

Thanks so much for your comment, Owen! I really appreciate it.

I was under the impression (perhaps incomplete!) that your definition of "phase 2" was "an action whose upside is in its impact," and "phase 1" was "an action whose upside is in reducing uncertainty about what is the highest impact option for future actions."

I was suggesting that I think we already know that recruiting people away from AI capabilities research (especially into AI safety) has a substantially high impact, and this impact per unit of time is likely to improve with experience. So pondering without experientially trying it is worse for optimizing its impact, for reducing uncertainty.