This is a shortened version of "We Probably Shouldn't Solve Consciousness". It aims to communicate the argument for Artificial Consciousness Safety (ACS) as a differential technology development strategy worth considering, at least in conversations about digital sentience.[1] This post focuses on the risks of brain emulation, uploading, and the scientific endeavour to understand how the brain contributes to consciousness.

Definitions:

Consciousness: Subjective experience.

Sentience: Subjective experience of higher-order feelings and sensations, such as pain and pleasure.[2]

Artificial Consciousness (AC): A digital mind; machine subjective experience. A simulation that has conscious awareness. It includes all AC phenomena, such as primordial and biosynthetic conscious states.[3]

Digital Sentience: An AC that can experience subjective states that have a positive or negative valence.

TLDR/Summary

The hard problems of consciousness, if solved, will explain with great precision how our minds work.

Solving the problems of consciousness may be necessary for whole brain emulation and digital sentience.[4] When we learn the exact mechanisms of consciousness, we may be able to develop "artificial" consciousness: artificial in the sense that it will be an artefact of technological innovation. The creation of artificial consciousness (not artificial intelligence) will be the point in history when neurophysical developers become Doctor Frankenstein.

Although digital sentience is broadly discussed, preventative approaches appear to be rare and neglected. AI welfare usually takes the spotlight instead of the precursor concern of AC creation. The problem is highly important because it could involve vast numbers of beings in the far future, creating a risk of astronomical suffering (s-risks). Solving consciousness to a sufficient degree could enable engineers to create artificial consciousness, and digital suffering, shortly afterwards.

Solving Consciousness

Solving consciousness to a significant degree leads to an increased likelihood of artificial consciousness.

Questions:

- What parts of the brain and body enable experience?

- If found, how could this be replicated digitally?

Auxiliary problems that contribute to understanding consciousness:

- What are the neuroscientific mechanisms of perception? How do sensorimotor cortical columns function? And so on.

- Hard questions regarding the above neuroscience: Do any of these mechanisms contribute to the phenomenon of conscious experience? If so, how?

Possible interpretations of a “significant degree”:

- A rigorous scientific explanation that has reached consensus across all related disciplines.

- Understanding just enough to be able to build a consciousness detection device.

- Understanding enough to create a simulated agent that demonstrates the most basic signs of consciousness, with a formalised and demonstrable proof.

- Understanding consciousness well enough for the formalisation to be consolidated into a blueprint of schematic modules, such as a GitHub repository with a guided, informative README explaining each component in enough detail for it to be reproduced.

The Likelihood of Artificial Consciousness

Question: If consciousness could be reduced to a blueprint schematic, how long will it be before it is used to engineer an AC product?

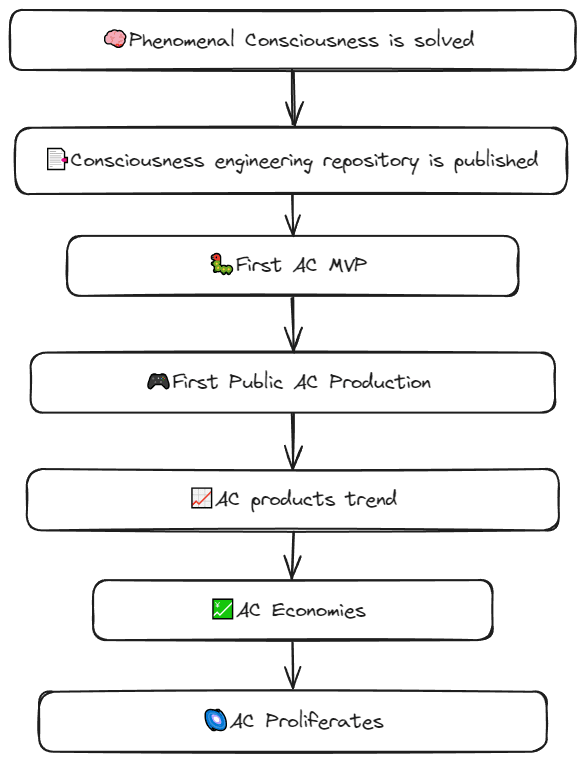

Series of Events

This is the most speculative part of the article and acts as a thought experiment rather than a forecast. The point is to keep in mind the key historical moment at which consciousness is solved. It could be 10, 100, or 1,000 years away, or much longer; no one knows. The focus is on how events may play out once the seemingly benign scientific quest to understand consciousness is complete.

There may be an inflection point, perhaps as little as two decades after consciousness is solved, at which AC products begin to trend. Will we also have solved suffering by this point? If not, this could be where negative outcomes begin to have a runaway effect.

Artificial Consciousness Safety

There are organisations dedicated to digital sentience (Sentience Institute) and to extreme suffering (Centre for Reducing Suffering), and even organisations trying to solve consciousness from a mechanistic and mathematical perspective (Qualia Research Institute). We ought to discuss the overlap between AI safety, AI welfare and AC welfare. These may seem like arbitrary distinctions, but I implore the reader to consider the differences between them. How helpful would it be to have a field such as ACS? I think it would tackle specific gaps that AI welfare does not precisely cover.

I'm not aware of any proposals supporting the regulation of neuroscience as dual-use research of concern (DURC) specifically because it could accelerate the creation of AC. This may be because no significant paper has yet been considered to contain an AC "infohazard". That does not mean we are not on the precipice of one appearing. If, on the small chance, a paper is published that hints at how to replicate a component of consciousness artificially, I will be increasingly concerned and will wish we had AC security alarms in place.

🚩AC DURC examples:

- Neurophysics publications which detail a successful methodology for reproducing a component of conscious experience.

- Computational Neuroscience publications which provide a repository and/or formula of a component of conscious experience for others to replicate.

- Current example (possibly): Brainoware. This form of computation is concerning because it may be on a trajectory that breaches the threshold of substrate complexity by combining the biological with the computational.[5]

These examples are flagged as concerning because they may be dangerous in isolation or, more likely, may be components of larger recipes for consciousness (similar to Sequences of Concern, or methodologies for engineering wildfire pandemics).[6] It may be helpful to develop a scale of risk for the kinds of research and publications that could contribute to AC to different degrees.

How worried should we be?

Now, what if we don't need to worry about preventing AC s-risks because it is not feasible to create AC? Failing to solve consciousness and/or to build AC may be a positive outcome, because creating AC, with its attendant s-risks, could be net-negative. The following are possible ways these failures could occur.

Failure Modes of Science:

- Failure to solve consciousness.

- Failure 1: Physicality. Consciousness may work in ways outside the known laws of physics, or may not be physically replicable with the simulation capacities of near-term technology. A similar possibility is that scientists conclude the physics of consciousness is too difficult to untangle without a super-duper-computer (this could still be solved with enough time, so it may be more of a near-term failure).

- Failure 2: Evolutionary/Binding. The phenomenal binding problem remains elusive, and no research has come close to demonstrating non-organic binding, or even the mechanisms of binding. Similarly, consciousness may require organic, generational development that cannot be replicated under laboratory conditions (non-organic, within a single lifetime). No current analysis of biology has revealed whether, or why, that is the case.

- Failure 3: Unknown unknowns.

- Failure to build consciousness artificially (even if consciousness is solved).

- Failure 4: Substrate Dependent. Solving consciousness proves that it cannot be built artificially.

- Failure 5: Complexity Dependent. Consciousness is substrate independent, but building the artificial body to house a simulated mind would require enormous resources, such as computing hardware, intelligence and engineering advances, power consumption, etc.

- Failure to solve either.

- Failure 6: Collapse. X-risks occur and we lose the information and resources to pursue the science of consciousness.

The real question we should be considering here is: if we do nothing at all about regulating AC-precursor research, how likely is it that we solve consciousness and, as a result, are forced to try to regulate consciousness technology? Or, at worst, are forced to respond to AC suffering after it has already occurred?

I would have loved to research more areas of ACS, but at some point it is necessary to get feedback and engage with public commentary. I'm sure there is plenty of other low-hanging fruit here, particularly around the parallels with AI safety.

Let me know whether we should be hoping for scientific failure modes 1 and 2. Indeed, some of the feedback received so far has been about how extremely unlikely it is that we will create AC. If that is true, does it mean we should stop funding research into brain emulation and cease discussing AI welfare? Either way, I think we ought to consider ACS interventions to prevent the s-risk nightmares that could emerge from creating AC minds.

- [1]

- [2] https://www.sciencedirect.com/topics/neuroscience/sentience

- [3] AC welfare is distinct from AI welfare because AC includes non-intelligent precursor states and strange artificial manifestations of sentience.

- [4] Aleksander, Igor (1995). "Artificial neuroconsciousness an update".

- [5] Dynamical complexity and causal density are early attempts to measure substrate complexity: https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1001052

- [6] Kevin Esvelt on bad actors and precise mitigation strategies.