Machine Learning has been developing extraordinarily fast, and as its impacts become increasingly visible, it becomes ever more important to develop a quantitative understanding of these changes. However, the relevant data has so far been scattered across multiple papers, has required expertise to gather accurately, or has otherwise been hard to obtain.

Given this, Epoch is thrilled to announce the launch of our new dashboard, which collects key numbers and figures from our research to help people understand the present and future of Machine Learning. It covers:

- Training compute requirements

- Model size, measured by the number of trainable parameters

- The availability and use of data for training

- Trends in hardware efficiency

- Algorithmic improvements for achieving better performance with fewer resources

- The growth of investment in training runs over time

Our dashboard gathers all of this information in a single, accessible place. The numbers and figures come with supporting information such as confidence intervals, labels indicating our degree of uncertainty in each result, and links to the relevant research papers. These details are especially useful for showing which areas may need further investigation and how much weight you should place on our findings.

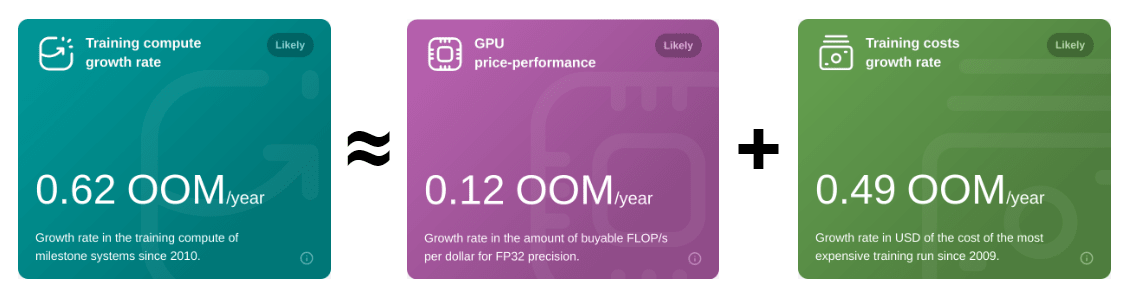

Beyond accessibility, bringing these figures together lets us compare and contrast trends and drivers of progress. For example, we can check that the growth in training compute is driven by improvements in hardware performance and by rising investment:

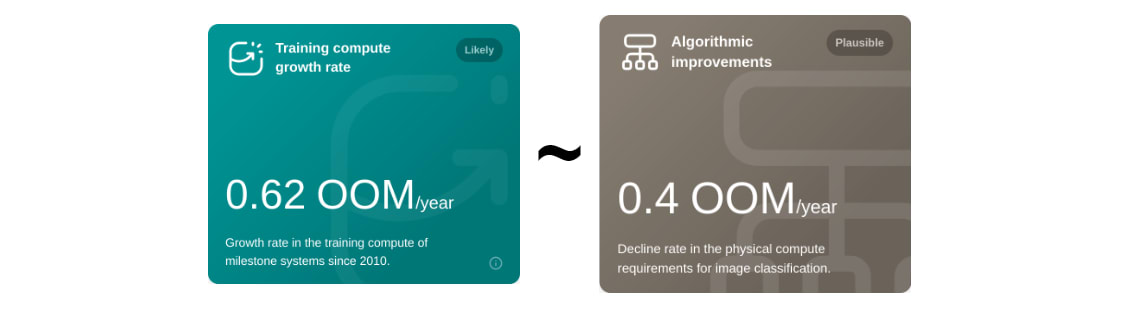

We can also see that performance improvements have historically been driven in comparable measure by algorithmic progress and by growth in training compute.

Overall, we hope that our dashboard will serve as a valuable resource for researchers, policymakers, and anyone interested in the future of Machine Learning.

We plan to keep our dashboard regularly updated, so stay tuned! If you spot an error or would like to give feedback, please reach out to us at info@epochai.org.

Great job! The design is impressively sleek. I wish I had had this dashboard a few months ago, when I was coming up with questions for an AI quiz. Congratulations.

This is great!

Is the prediction that we will run out of text by 2040 specific to human-generated text, or does it also account for generated text outputs (which, as I understand it, are also being used as training inputs)?

It is specific to the human-generated text.

The current soft consensus at Epoch is that data limitations will probably not be as big an obstacle to scaling as compute, because we expect generated outputs and innovations in data efficiency to make up the shortfall.

This is based more on intuition than on rigorous research, though.

Interesting, thanks for answering!