Introduction

It’s an oft-cited rule of thumb that people saving for their own future consumption hold 40% of their financial wealth in bonds, which offer low expected returns but at low risk, and 60% in stocks, which offer higher expected returns at higher risk. It is sometimes argued, especially in EA circles, that philanthropists should adopt much more aggressive portfolios: investing everything in stocks and similarly risky assets, and perhaps even going further, taking on leverage to achieve higher expected returns at higher risk. I have argued this in the past.[1]

Since the collapse of FTX, many have emphasized that there are limits to this position. Some, like Wiblin (2022), have reminded us that even spending toward large-scale philanthropic goals runs into diminishing returns at large enough scales. Other discussion (I think this is a representative sample) has argued that philanthropists should use utility functions that are roughly logarithmic instead of near-linear.[2] But both these strands of pushback against adopting extreme financial risk tolerance as a philanthropist still end with the conclusion that philanthropists should invest much more riskily than most other people.

This is not how philanthropic endowments are usually invested. On Campbell’s (2011) account, the Harvard endowment consisted of about 70% stocks and 30% bonds until the late ’90s, and the endowment managers then expanded into more exotic asset classes only once they concluded that they could do so without taking on more risk. The story with the Yale portfolio is similar.[3] Investment reports from large American foundations display some variety in investment strategies, but as far as I can tell, they skew only slightly more risk tolerant than university endowments, tending not to depart far from a portfolio of 70% (public and private) equity, 10% cash and bonds, and 20% alternative investments which usually behave roughly like a combination of the two.[4] If anyone reading this knows of (or produces) a more detailed analysis of the risk tolerance currently adopted by philanthropic investors, please share it! But in any event, my strong impression is that extreme financial risk tolerance is at odds with mainstream philanthropic practice.

This post is yet another EA-Forum-article attempt to summarize the considerations for and against adopting an unusual degree of financial risk tolerance in philanthropy. It doesn’t produce a quantitative recommendation, but it does conclude that there are issues with many of the arguments for unusual financial risk tolerance most often heard in the EA community, including those from Shulman (2012), Christiano (2013), Tomasik (2015), Irlam (2017, 2020), Dickens (2020), and an old Google doc I’m told summarizes Open Phil’s investment advice to Good Ventures.[5] This is not to say that there are no good reasons for some philanthropists to adopt unusually risky (or, for that matter, cautious) financial portfolios. I’ll flag what strike me as some important considerations along the way. An EA philanthropist’s objectives are indeed different in some relevant ways from those of many other endowment managers, and certainly from those of individuals saving for their own futures. But on the whole, the impression I’ve come away with is that the mainstream philanthropic community may be onto something. On balance, directionally, this post is what its title suggests: a case against much financial risk tolerance.

The arguments for financial risk tolerance explored here are organized into three sections.

- The first discusses arguments stemming directly from the intuition that the marginal utility to philanthropic spending doesn’t diminish very quickly. (I think the intuition has some merit, but is not as well founded as often supposed.)

- The second discusses the argument that, even if the marginal utility to philanthropic spending does diminish pretty quickly, a formal financial model tells us that philanthropists should still be more financially risk tolerant than most people are. (I think the model is usually misapplied here, and that if anything, a proper application of it suggests the opposite.)

- The third discusses arguments that philanthropists might consider equities—which happen to be risky—unusually valuable, even if the philanthropists aren’t particularly risk tolerant per se. (I think this is true but only to a small extent.)

Even if you disagree with the conclusion, I hope you find some of the post’s reexaminations of the classic arguments for financial risk tolerance helpful. I hope the post also sheds some light on where further research would be valuable.

Defining financial risk aversion

To be financially risk averse is to prefer a portfolio with much lower expected returns than you could have chosen, for the sake of significantly reducing the chance that you lose a lot of money. To be financially risk tolerant is to prefer a portfolio with higher expected returns despite a higher chance of losing a lot of money, or, in the extreme case of risk-neutrality, to always choose a portfolio that maximizes expected returns. A portfolio is risky if it has a high chance of losing a lot of its value. So I’ll be arguing that, when constructing their portfolios, philanthropists, including small ones, should typically be willing to sacrifice expected returns for lower riskiness to roughly the same extent as most other investors. I won’t get more formal here, since I think the first section should mostly be accessible without it, but the second section will introduce some formalism.

It may be worth distinguishing financial from moral risk aversion. Throughout the post, I’ll assume moral risk-neutrality: that a philanthropist should try to maximize the expected amount of good she does. So everything written here is compatible with the classical utilitarian position that one should take a gamble offering, say, a 51% chance of doubling the world and a 49% chance of destroying it. If you endorse some degree of moral risk aversion as well, you may think philanthropists should adopt even less risky financial portfolios than would be justified by the points made here.[6]

Arguments from a seeming lack of diminishing marginal utility

The basic argument

A common starting intuition is that philanthropists should be highly financially risk tolerant because the marginal value of spending on philanthropic projects generally seems not to diminish quickly. The idea is, most investors face steeply diminishing marginal utility in spending. Doubling your consumption only helps you a little bit, whereas losing it all really hurts. So it makes sense for them to invest cautiously. By contrast, philanthropists, if they just want to maximize the expected value of the good they accomplish, face very little diminishing marginal utility in spending. Twice as much money can buy twice as many bed nets, say, and save roughly twice as many lives. This might seem to mean that philanthropists should be highly risk tolerant when investing—indeed, almost risk-neutral. Let’s call this the “basic” argument. See Shulman (2012) for an early illustration, and the first half of Wiblin (2022) for a recent one.

But it’s not clear why having philanthropic objectives per se would result in a lack of steeply diminishing returns. The intuitive appeal of the basic argument relies mainly, I think, on the fact that an individual philanthropist often provides only a small fraction of the total funding destined for a given cause. She is often a “small fish in a big pond”. Consider by contrast a philanthropist only interested in a cause for which she provides about all the funding: the public park in her own hometown, say, or some very obscure corner of the EA landscape.[7] The philanthropist would typically not find that doubling her spending in the area would come close to doubling her impact. It would therefore not be right for her to invest almost risk-neutrally.

So this section has two further subsections. The first discusses whether being a small fish in a big pond really should motivate a philanthropist to adopt an unusually risky financial portfolio, and argues that it generally shouldn’t. The second discusses whether our philanthropic objectives per se should be expected to exhibit a lack of steeply diminishing returns, and again argues that they generally shouldn’t (though more tentatively).

The “small fish in a big pond” argument

Without idiosyncratic risk

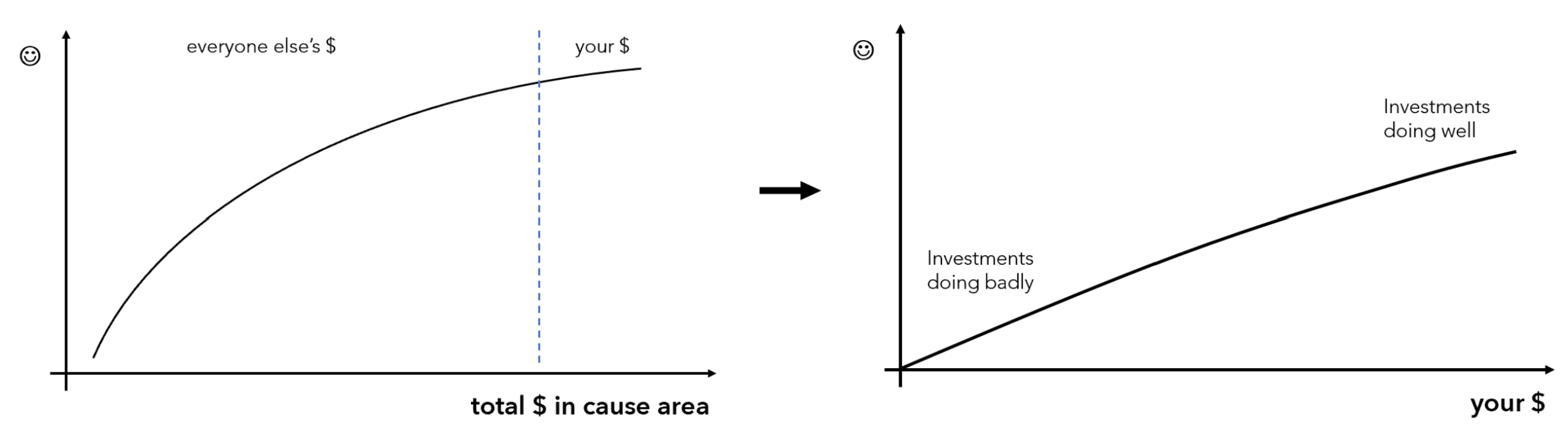

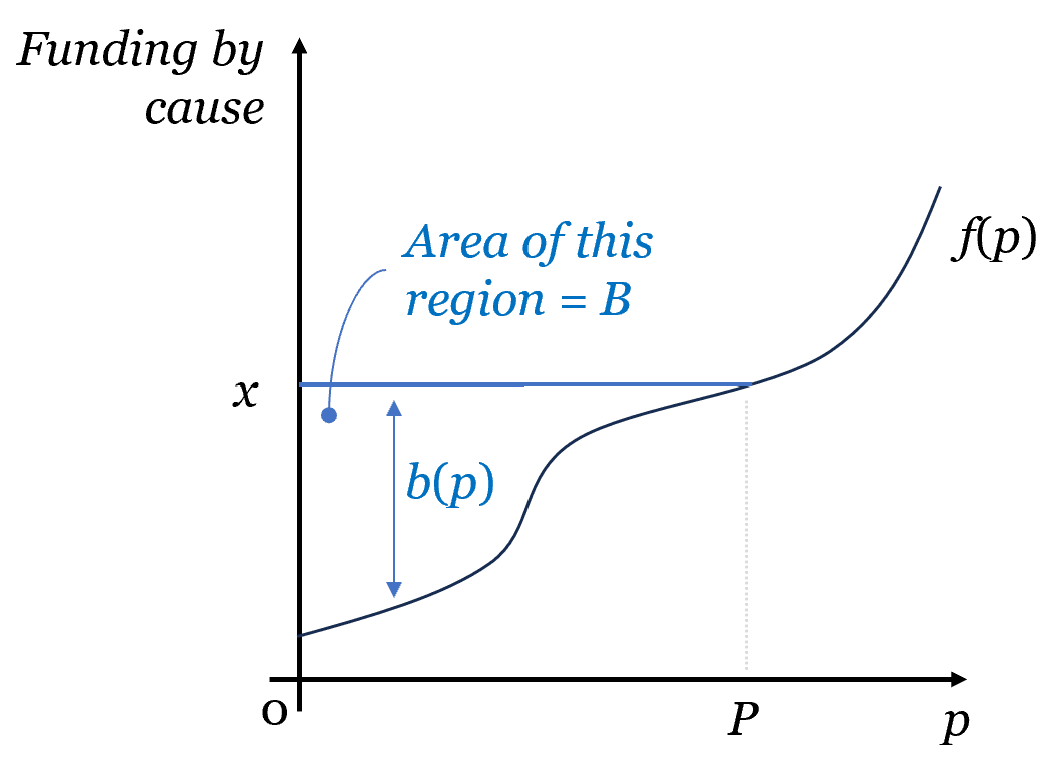

When an individual philanthropist provides only a small fraction of an area’s total funding, the marginal impact of her own spending does tend to be roughly constant. Let’s call such a philanthropist “proportionally small”. Intuitively, being proportionally small might seem to motivate financial risk tolerance. The intuition may be represented visually like this:

But there’s something fishy with this argument. Consider a billionaire, investing for his own future consumption, whose $1B portfolio would optimally contain 60% stocks and 40% bonds. And suppose that, instead of putting the $1B all in one investment account, he divides it among 1,000 managers each responsible for $1M of it. If 999 of the managers all put 60% of their $1M in stocks and 40% in bonds, then of course, manager #1,000 should do the same. She shouldn’t think that, as a small fish in a big pond, she should be risk tolerant and put it all in stocks.

What goes wrong with the argument sketched above is that it fails to account for the possibility that when one philanthropic investor’s portfolio performs well (/badly), the other funders of her favorite causes may also tend to perform well (/badly). This is what happens, for instance, if funders all face the same set of investment opportunities—if they all just have access to the same stock and bond markets, say. In this case, a funder’s distribution of utility gains and losses from a given high-risk, high-return investment actually looks like this:

We now see the wrinkle in a statement like “twice as much money can buy twice as many bed nets, and save roughly twice as many lives”. When markets are doing unusually well, other funders have unusually much to spend on services for the poor, and the poor probably have more to spend on themselves as well. So it probably gets more costly to increase their wellbeing.

Unfortunately, I’m not aware of a good estimate of the extent to which stock market performance is associated with the cost of lowering mortality risk in particular.[8] I grant that the association is probably weaker than with the cost of “buying wellbeing for the average investor” (weighted by wealth), since the world’s poorest probably get their income from sources less correlated than average with global economic performance, and that this might justify a riskier portfolio for an endowment intended for global poverty relief than the portfolios most individuals (weighted by wealth) adopt for themselves.[9] But note that, whatever association there may be, it can’t be straightforwardly estimated from GiveWell’s periodically updated estimates of the current “cost of saving a life”. Those estimates are based on studies of how well a given intervention has performed in recent years, not live data. They don’t (at least fully) shed light on the possibility that, say, when stock prices are up, then the global economy is doing well, and this means that

- other philanthropists and governments[10] have more to spend on malaria treatment and prevention;[11]

- wages in Nairobi are high, this raises the prices of agricultural products from rural Kenya, this in turn leaves rural Kenyans better nourished and less likely to die if they get malaria;

and so on, all of which lowers the marginal value of malaria spending by a bit. A relationship along these lines strikes me as highly plausible even in the short run (though see the caveats in footnote 9). And more importantly, over the longer run, it is certainly plausible that if the global economic growth rate is especially high (low), this will lead both to higher (lower) stock prices and to higher (lower) costs of most cheaply saving a life.[12] But even over several years, noise (both in the estimates of how most cheaply to save a life and in the form of random fluctuations in the actual cost of most cheaply saving a life) could mask the association between these trends, since the GiveWell estimates are not frequently updated.

In any event, the point is that it’s not being proportionally small per se that should motivate risk tolerance. The “small fish in a big pond” intuition relies on the assumptions that one is only providing a small fraction of the total funding destined for a given cause and that the other funders’ investment returns will be uncorrelated with one’s own. While the first assumption may often hold, the latter rarely does, at least not fully. There’s no general rule that small fishes in big ponds should typically be especially risk tolerant, since the school as a whole typically faces correlated risk.

Non-optimal risk tolerance by other funding sources

As we’ve seen, when other funding streams are correlated with her own, a proportionally small funder shouldn’t adopt an unusual investment strategy just because she’s small. If she trusts that the other funding sources—the other “999 managers”—aren’t investing too risk aversely or risk tolerantly, she should copy what they do.

If most of the funding in some domain comes from people investing too cautiously, then yes, a proportionally small funder should try to correct this by investing very riskily, as e.g. Christiano (2013) emphasizes. I don’t doubt that in some domains, it can be established that most of the funding does come from people investing too cautiously. But of course it could also go the other way. The next sub-subsection introduces a reason to believe that a lot of philanthropic funding should be expected to come from people investing especially riskily. More generally, if I’m right that the collective EA financial portfolio should be invested even a little bit less riskily than EA discourse to date would suggest is ideal, then a proportionally small reader of this post should invest as cautiously as possible.

Ultimately, a proportionally small philanthropist with the typical suite of investment opportunities just has to make up her own mind about what the collective portfolio toward her favored causes should look like, observe what it does look like, and invest so that the collective portfolio resembles her ideal as closely as possible.[13] We’ll think about how risky the collective portfolio should be in the next subsection.
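The "copy the pond, or offset it" logic can be sketched numerically. This is a minimal illustration under assumed numbers, not a calibrated model: a two-state market in which the risky asset gains 30% or loses 20% with equal probability, a safe asset returning 0%, a philanthropic utility function with constant curvature 2, and a funder who holds 0.1% of the cause's total funding. The function names and all parameter values are hypothetical.

```python
def optimal_collective_share(p_up, gain, loss, curvature):
    """Risky share that maximizes expected isoelastic utility of pooled wealth.

    Two-state market: the risky asset returns +gain with probability p_up
    and -loss otherwise; the safe asset returns 0. This solves the
    first-order condition
        p_up * gain * (1 + gain*s)**-c == (1 - p_up) * loss * (1 - loss*s)**-c
    for the share s, where c is the curvature.
    """
    k = (p_up * gain / ((1 - p_up) * loss)) ** (1 / curvature)
    return (k - 1) / (gain + k * loss)


def small_funder_share(target, others_share, others_wealth, own_wealth):
    """Own risky share that moves the pooled share as close to target as possible.

    Assumes every funder faces the same market, so only pooled exposure
    matters, and that the funder cannot short or borrow (share in [0, 1]).
    """
    total = others_wealth + own_wealth
    wanted = (target * total - others_share * others_wealth) / own_wealth
    return min(1.0, max(0.0, wanted))


target = optimal_collective_share(p_up=0.5, gain=0.30, loss=0.20, curvature=2.0)

# Others already hold the ideal portfolio: the small funder copies them.
copy = small_funder_share(target, others_share=target, others_wealth=999, own_wealth=1)

# Others are one percentage point too cautious or too risky:
# the small funder goes to a corner to offset them.
offset_up = small_funder_share(target, target - 0.01, 999, 1)
offset_down = small_funder_share(target, target + 0.01, 999, 1)

print(f"ideal pooled risky share:             {target:.3f}")
print(f"others at the ideal   -> own share    {copy:.3f}")
print(f"others too cautious   -> own share    {offset_up:.1f}")
print(f"others too risky      -> own share    {offset_down:.1f}")
```

Under these assumptions, being small does not change the ideal pooled portfolio. It only means that a small gap between the ideal and the actual pooled portfolio translates into an extreme position for the individual funder, in whichever direction the gap runs.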

With idiosyncratic risk

Before doing that, though, note that sometimes a proportionally small philanthropist does have a private investment opportunity whose performance is relatively uncorrelated—or even anticorrelated—with the wealth of her cause’s other funders. In these cases, at least in principle, we finally have a sound motivation for financial risk tolerance. Suppose you alone get the chance to bet on a coin flip, where you will pay $x if the coin lands on one side and win slightly more than $x if the coin lands on the other (up to some large x). You should typically choose an x that is a small fraction of your wealth if you’re an individual or a proportionally large philanthropist, but all your wealth if you’re a proportionally small philanthropist. The logic illustrated by Figure 1 goes through.
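The coin-flip case can be checked with a small grid search. The sketch below is illustrative only and rests on assumed values: a fair coin, a win of 1.05 times the stake, a utility function with constant curvature 2 over total funding, and other funders whose wealth does not depend on the flip. The function names are hypothetical.

```python
def expected_utility(bet, own_wealth, others_wealth, win_ratio=1.05, curvature=2.0):
    """Expected isoelastic utility of total funding after a fair coin-flip bet.

    The bettor loses `bet` on tails and wins `win_ratio * bet` on heads.
    Other funders' wealth is unaffected by the flip (purely idiosyncratic risk).
    Assumes curvature > 1, so utility is unboundedly bad at zero funding.
    """
    def utility(total):
        if total <= 0:
            return float("-inf")
        return total ** (1 - curvature) / (1 - curvature)

    base = own_wealth + others_wealth
    return 0.5 * utility(base + win_ratio * bet) + 0.5 * utility(base - bet)


def best_bet_fraction(own_wealth, others_wealth, steps=1000):
    """Grid-search the fraction of own wealth to stake (0 to 1, no borrowing)."""
    fractions = [i / steps for i in range(steps + 1)]
    return max(
        fractions,
        key=lambda f: expected_utility(f * own_wealth, own_wealth, others_wealth),
    )


# An individual (or a funder who is the whole pond) stakes a small fraction.
alone = best_bet_fraction(own_wealth=1.0, others_wealth=0.0)

# A funder providing 0.1% of a cause's funding stakes everything she has.
small_fish = best_bet_fraction(own_wealth=1.0, others_wealth=999.0)

print(f"stake when alone:        {alone:.3f} of own wealth")
print(f"stake as a small fish:   {small_fish:.3f} of own wealth")
```

The utility function is the same in both cases. What differs is how large the bet is relative to the total funding whose marginal value it affects.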

I believe that in practice, the most significant private investment opportunities available to proportionally small philanthropists are startups. A startup, like a coin flip, comes with some idiosyncratic risk. A proportionally small philanthropist should therefore sometimes be willing to take the opportunity to found or join a startup that most people wouldn’t. Indeed, this is the case to which Shulman (2012) applies what at least sounds, without this context, like the “basic argument”.

But startup returns are also largely correlated with the returns of publicly traded companies. Indeed, to my understanding, the startups—especially tech startups—fluctuate more. That is, when the stock market does a bit better than usual, the startups typically boom, and when the stock market does a bit worse than usual, the startups are typically the first to go bust.[14] Proportionally small philanthropists should not much mind the first kind of risk (“idiosyncratic”), but as noted previously, they should indeed mind the second (“systematic”). Let’s now consider how the two effects shake out.

Consider a startup founder who is not a proportionally small philanthropist—e.g. one who just wants to maximize the expected utility he gets from his own future consumption. His ideal financial portfolio presumably contains only a small investment in his own startup, so that he can diversify away the idiosyncratic risk that his startup carries. But a founder typically can’t sell off almost all of the startup, and thereby insure himself against its risks, due to adverse selection and moral hazard. That is, potential buyers naturally worry that a founder selling off most of his startup (a) knows it’s less valuable than it looks, and/or (b) will put less effort into the company when he has less skin in the game. This means that the marginal startup should be expected to offer its founder a premium high enough to compensate him for the fact that, to start it, he will have to fill his own portfolio with a lot of idiosyncratic risk.

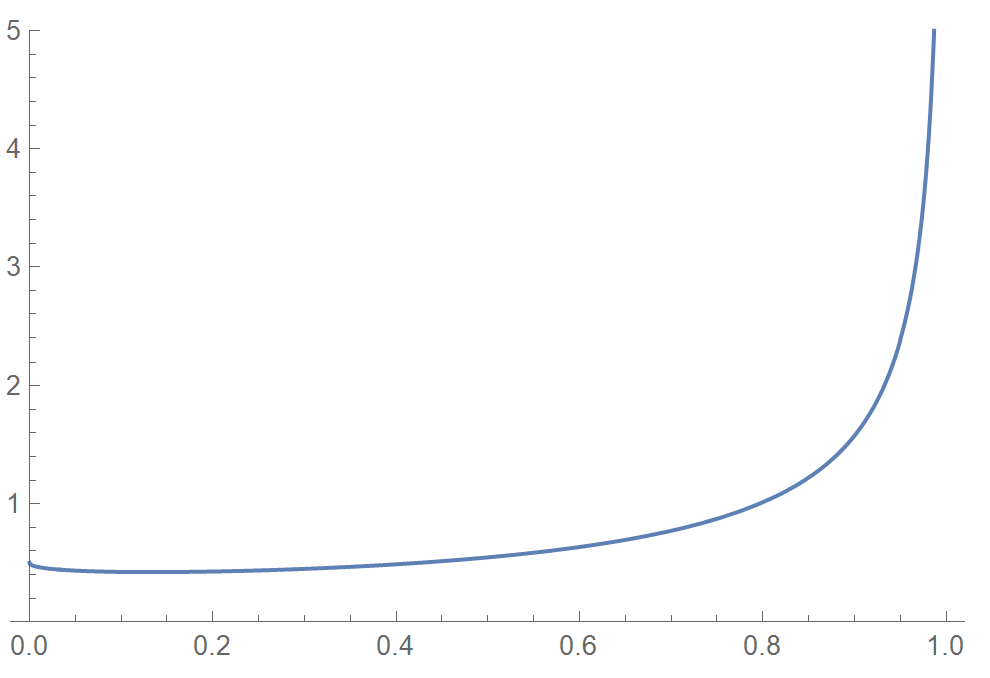

Since proportionally small philanthropists shouldn’t care much about idiosyncratic risk, they should be unusually willing to start startups, and willing to put unusually large shares of their wealth into whatever startups they have. But in doing so, they will load the collective portfolio with the concentrated dose of systematic risk that startups also typically carry. If this is more than the level of systematic risk that the collective philanthropic portfolio should exhibit, then to offset this, the value-aligned philanthropists without startups should invest especially cautiously. For illustration:

- Suppose the optimal collective financial portfolio across philanthropists in some cause area would have been 60% stocks, 40% bonds if stocks and bonds had been the only asset options.

- Now suppose some of the philanthropists have opportunities to invest in startups. Suppose that, using bonds as a baseline, startup systematic risk is twice as severe as the systematic risk from a standard index fund. What should the collective portfolio be?

- First, consider the unrealistic case in which—despite systematic risk fully twice as severe for startups as for publicly traded stocks—the expected returns are only twice as high. That is, suppose that whenever stock prices on average rise or fall by 1%, the value of a random portfolio of startups rises or falls by x% where x > 1. For illustration, say x = 2. Then a portfolio of 30% startups and 70% bonds would behave like a portfolio of 60% stocks and 40% bonds. Either of these portfolios, or any average of the two, would be optimal.

- But if startups are (say) twice as systematically risky as stocks (relative to bonds), expected returns (above bonds) should be expected to be more than twice as high. If the expected returns were only twice as high, startup founders would only be being compensated for the systematic risk; but as noted, most founders also need to be compensated for idiosyncratic risk.

- To the extent that startup returns are boosted by this compensation for idiosyncratic risk, the optimal collective financial portfolio across these philanthropists is then some deviation from 30% startups / 70% bonds in the direction of startups. Everyone without a startup should have 100% of their portfolio in bonds.
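The equivalence claimed in the illustration above is simple arithmetic on market sensitivities, and can be checked directly. In this sketch the sensitivities are the illustrative values from the example (stocks 1, startups 2, bonds 0 as the baseline); the dictionary and function names are hypothetical.

```python
# Percentage move of each asset per 1% move in the stock market
# (illustrative values from the example; bonds are the baseline).
MARKET_SENSITIVITY = {"bonds": 0.0, "stocks": 1.0, "startups": 2.0}


def systematic_exposure(weights):
    """Portfolio's percentage move per 1% stock-market move."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("portfolio weights must sum to 1")
    return sum(MARKET_SENSITIVITY[asset] * w for asset, w in weights.items())


stocks_and_bonds = {"stocks": 0.60, "bonds": 0.40}
startups_and_bonds = {"startups": 0.30, "bonds": 0.70}
# An equal-weighted average of the two portfolios above.
blend = {"stocks": 0.30, "startups": 0.15, "bonds": 0.55}

for name, portfolio in [
    ("60% stocks / 40% bonds", stocks_and_bonds),
    ("30% startups / 70% bonds", startups_and_bonds),
    ("average of the two", blend),
]:
    print(f"{name}: exposure {systematic_exposure(portfolio):.2f}")
```

All three portfolios carry the same systematic exposure. That is why, when startups earn no extra premium for idiosyncratic risk, any mix of the first two is as good as either one.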

In sum, 2022 was more than bad luck (even besides the fraud). It’s not entirely a coincidence that the year FTX imploded was also the year in which crypto as a whole crashed, Meta and Asana lost most of their value, and stock markets as a whole lost a lot of their value. An EA-aligned endowment held in safe bonds would not have lost value, and so would have done a lot more good now that the marginal utility to “EA spending” is (presumably permanently) higher than it otherwise would have been.

Finally, note that a given philanthropic community may wind up exposed not to “a portfolio of startups”, whose idiosyncratic risks wash out, but to a small number of startups whose idiosyncratic risks contribute significantly to the variance of the whole portfolio. That has certainly been the situation of the EA community in recent years. In these cases, as many have pointed out already, the proportionally small philanthropists without startups should invest not just to offset the aligned startups’ systematic risk but also to offset their idiosyncratic risks. That is, they should invest in firms and industries whose performance is anticorrelated with the industries of the aligned startups. But I’d like to keep this post focused mainly on philanthropic finance in the abstract, so I won’t think about how to apply this insight to the EA case in more detail.

Arguments about the utility function for “the pond as a whole”

So far, the discussion largely mirrors that of Tomasik (2015), Dickens (2020), and, more briefly, Christiano (2013). All these pieces acknowledge the intuitive appeal of what I’ve called the “small fish in a big pond” argument, explain that its logic only goes through when a proportionally small philanthropist faces an investment opportunity with idiosyncratic risk, and note that such opportunities are rare, with the possible exception of startups. They don’t make the point that, if the advice about investing more in startups is taken, then proportionally small philanthropists without startups should invest especially cautiously, to offset the risk others’ startups bring to the collective portfolio. But otherwise, we all basically agree that members of a philanthropic community should generally adopt portfolios about as risky as it would make sense to make the collective portfolio. This subsection considers arguments about how risky that is.

Naturally, it depends on the function from total spending in some domain of philanthropic concern to impact. Let’s call this the “philanthropic utility function”, but remember that the input to the function is total spending in the relevant domain (including spending by governments, and spending by the poor on themselves), not just spending by philanthropists. If the philanthropic utility function exhibits as much curvature as a typical individual’s utility function, then philanthropists should invest about as cautiously as typical individuals do (at least if individuals typically invest about as riskily as is best for them, an assumption I’ll sort of defend when discussing the equity premium puzzle argument). As summarized below, I disagree with most of the arguments I’m aware of for thinking that philanthropic utility functions exhibit unusually little curvature—though one does strike me as strong, and some raise considerations that seem important but directionally ambiguous.

When I refer to a utility function’s curvature, I’m referring to what’s known as its coefficient of relative risk aversion, or RRA. This is a number that equals 0 everywhere for linear utility functions, 1 everywhere for logarithmic utility functions, and a higher number everywhere for yet more steeply curved utility functions. A utility function doesn’t have to exhibit the same curvature everywhere, and estimates of the curvature of people’s utility functions in different contexts vary, but they’re typically found to be well above 1.[15] So this is what I’m defending above as a baseline for the philanthropic case.
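For reference, the standard textbook definition of the coefficient of relative risk aversion, and the family of utility functions for which it is constant, can be written as follows. The symbol η is notation chosen here for illustration and is not used elsewhere in this post.

```latex
\eta(x) \;=\; -\,\frac{x\,u''(x)}{u'(x)},
\qquad
u_\eta(x) \;=\;
\begin{cases}
\dfrac{x^{1-\eta}}{1-\eta}, & \eta \neq 1,\\[6pt]
\ln x, & \eta = 1.
\end{cases}
```

Setting η = 0 gives linear utility, η = 1 gives logarithmic utility, and larger values of η give more steeply curved functions.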

Typical risk aversion as a (weak) baseline

Suppose a philanthropist’s (or philanthropic community’s) goal is simply to provide for a destitute but otherwise typical household with nothing to spend on themselves. Presumably, the philanthropist should typically be as risk averse as a typical household.[16] Likewise, suppose the goal is to provide for many such households, all of which are identical. The philanthropist should then adopt a portfolio like the combination of the portfolios these households would hold themselves, which would, again, exhibit the typical level of riskiness. This thought suggests that the level of risk aversion we observe among households may be a reasonable baseline for the level of risk aversion that should guide the construction of a philanthropic financial portfolio.

Also, as noted briefly in the introduction, most philanthropists do appear to invest very roughly as risk aversely as most households.

These arguments are both very weak, but I think they offer a prior from which to update in light of the three arguments at the end of this subsection. I will indeed argue for updating from it, on the basis of, e.g., the facts that not all the households who might be beneficiaries of philanthropy are identical and that not all philanthropy is about increasing households’ consumption. But if we’re looking for a prior for philanthropic utility functions, I at least think typical individual utility functions offer a better prior than logarithmic utility functions, which is the only other proposal I’ve seen.

Against logarithmic utility as a baseline

Instead of taking the curvature of a typical individual utility function as a baseline, logarithmic utility—a curvature of 1—is sometimes proposed as a baseline. I’m aware of three primary arguments for doing this (beyond analytic convenience), but I don’t believe these are informative.

First: if you invest as if you have a logarithmic utility function, even if you really don’t, then under certain assumptions, your portfolio probably performs better in the long run than if you invest in any other way. This point was first made by Kelly (1956), so it might be called the “Kelly argument”. Despite endorsements by many blog posts in the EA sphere,[17] it’s simply a red herring. Under the assumptions in question, though you probably wind up with more money by acting as if you have logarithmic utility than by acting on the utility function you actually have, the rare scenarios in which you wind up with less money can leave you with much less utility. On balance, the move to pretending you have logarithmic utility lowers your expected utility, which is what matters. The economist Paul Samuelson got so tired of making this point that in 1979 he published a paper spelling it out entirely in monosyllabic words.[18]
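The gap between "probably ends up with more money" and "has higher expected utility" can be made concrete with a repeated even-money bet. The sketch below is illustrative only: it assumes a 60% win probability, an investor whose true utility function has constant curvature 2, and a fixed fraction of wealth staked each round. The names and parameters are hypothetical.

```python
import math

P_WIN = 0.6  # probability of winning each even-money bet (illustrative)


def log_growth_rate(fraction):
    """Expected log growth of wealth per round when staking `fraction` of it."""
    return P_WIN * math.log(1 + fraction) + (1 - P_WIN) * math.log(1 - fraction)


def expected_utility(fraction, rounds, curvature=2.0):
    """E[u(wealth)] after `rounds` independent bets, starting from wealth 1.

    Uses isoelastic utility with curvature != 1. Because the bets are
    independent, E[wealth**(1 - c)] is the per-round expectation raised
    to the power `rounds`.
    """
    per_round = (P_WIN * (1 + fraction) ** (1 - curvature)
                 + (1 - P_WIN) * (1 - fraction) ** (1 - curvature))
    return per_round ** rounds / (1 - curvature)


# Kelly stake for an even-money bet: act as if utility were logarithmic.
kelly = 2 * P_WIN - 1

# Stake that maximizes expected utility when curvature is exactly 2
# (closed form from the first-order condition for this specific case).
root_p, root_q = math.sqrt(P_WIN), math.sqrt(1 - P_WIN)
own_optimum = (root_p - root_q) / (root_p + root_q)

for name, stake in [("Kelly", kelly), ("own optimum", own_optimum)]:
    print(f"{name}: stake {stake:.3f}, "
          f"log growth {log_growth_rate(stake):.4f}, "
          f"expected utility after 100 rounds {expected_utility(stake, 100):.3f}")
```

Under these assumptions, the Kelly stake has the higher growth rate, so it probably leaves the investor richer in the long run, yet it delivers lower expected utility than the smaller stake chosen using the investor's actual utility function.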

Second: Cotton-Barratt (2014) wrote a series of articles arguing that, given a set of problems whose difficulties we don’t know, like open research questions, we should expect that the function from resources put into solving them to fraction of them that are solved will be logarithmic. As explained in the first article, this conclusion follows from an assumption that one’s uncertainty about how many resources it will take to solve a given problem follows a roughly uniform distribution over a logarithmic scale. This simply amounts to assuming the conclusion. One could just as well posit a roughly uniform distribution over a differently transformed scale, and then conclude that the function from inputs to outputs is not logarithmic but something else. The later articles develop the view and illustrate its implications, but don’t return to providing a more compelling theoretical basis for it.[19] Finally, even if there is a roughly logarithmic research production function, this does not justify a logarithmic utility function (as Dickens (2020) seems to imply) unless our utility is linear in what we are calling “research outputs”, which I see no particular reason to believe.[20]
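The sense in which the assumption delivers the conclusion can be seen in one line. The following is a paraphrase of the setup, not necessarily the form used in the original articles: let D be the resources needed to solve a problem, known to lie between a and b, let F(x) be the fraction of problems solved with resources x, and let g be any increasing function (a hypothetical placeholder).

```latex
\begin{aligned}
\ln D \sim \mathrm{Uniform}[\ln a, \ln b]
&\;\Longrightarrow\;
F(x) = \Pr(D \le x) = \frac{\ln x - \ln a}{\ln b - \ln a},
\qquad a \le x \le b,\\[6pt]
g(D) \sim \mathrm{Uniform}[g(a), g(b)]
&\;\Longrightarrow\;
F(x) = \frac{g(x) - g(a)}{g(b) - g(a)} .
\end{aligned}
```

The shape of F is whatever transformation the uniform prior was placed on. For instance, a uniform prior over the square root of D would give a square-root production function instead of a logarithmic one.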

Third: Christiano (2013) argues that in the long run, your “influence” (in some sense) will depend on what fraction of the world you control. He then infers that “an investor concerned with maximizing their influence ought to maximize the expected fraction of world wealth they control”, and he argues that this should motivate investing as if you have logarithmic utility. It is an interesting observation that if you just want to maximize the expected fraction of world wealth you control in the long run, then you should invest roughly as if you have logarithmic utility. But even if (a) maximizing influence is all that matters and (b) influence depends only on the fraction of world wealth you control, it doesn’t follow that influence is linear in the fraction of world wealth you control, which is what we need in order to conclude that the thing to maximize is expected fraction of world wealth. Indeed, this would seem to be an especially pessimistic vision, in which control of the future is a zero-sum contest, leaving no ways for people to trade some of their resources away so that others use their resources slightly differently. If they can, we should expect to see diminishing returns to proportional wealth: the most mutually beneficial “bargains over the future” will be made first, then the less appealing bargains, and so on. Double the proportional wealth can then come with arbitrarily less than double the utility. See Appendix A.

Arguments from the particulars of current top priorities

Instead of more reasoning in the abstract, it would be great to try to estimate the curvature of the philanthropic utility function with respect to top EA-specific domains in particular. Unfortunately, I’m not aware of any good estimates. What few estimates I am aware of, though, don’t give us strong reasons to posit very low curvatures.

First, I think the impression that the philanthropic utility function with respect to top EA-specific domains exhibits relatively little curvature can to some extent be traced back to posts in which this is simply claimed without argument. It’s stated offhand in Christiano (2013) and throughout Tomasik (2015), for instance, that a “reasonable” degree of curvature to assume in these domains is less than 1. For whatever it’s worth, I don’t share the intuition.[21]

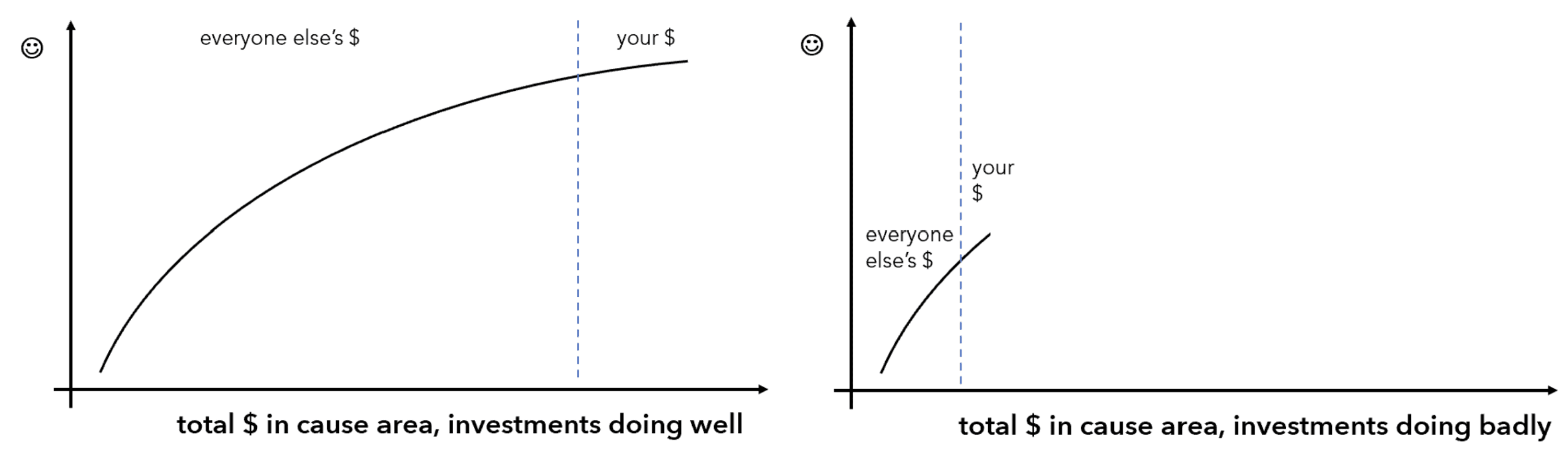

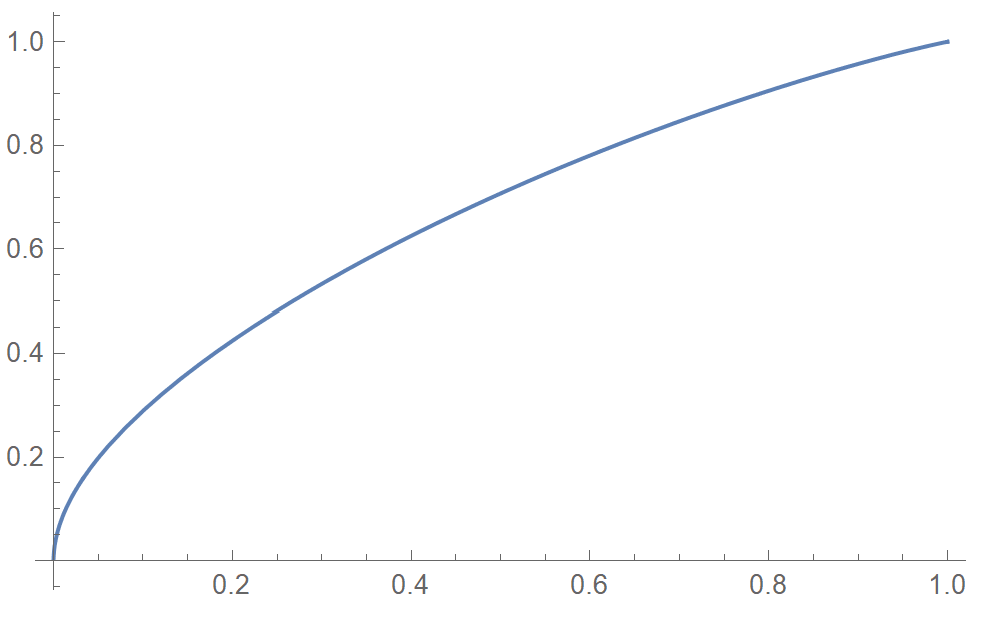

Berger and Favaloro (2021) offer what seems like an estimate of the curvature, in the domain of GiveWell-style global health funding. Their curvature estimate is 0.375.[22] As the authors explain, this estimate was reached “by fitting isoelastic curves to projections GiveWell has performed to model their opportunity set, though they are quite uncertain about those projections and wouldn’t want to independently defend them.” Even if we do trust these projections, though, what they appear to capture is how fast the value of further GiveWell-style spending would fall if such spending were ramped up, all else equal. That is, 0.375 appears to be an estimate of “curvature” in the sense of Figure 1. What should govern how riskily we’re willing to invest, however, is “curvature” in the sense of Figure 2: that is, again, curvature of the philanthropic utility function. This is the kind of curvature that captures how much higher the marginal value of spending is when markets are down, global economic performance is poor, and other relevant spending—including e.g. spending by the poor on their own nutrition—dries up. An accurate estimate of curvature in the first sense would probably be a considerable underestimate of curvature in the sense that matters.[23]

Dickens (2020) offers a very rough estimate of curvature in this second sense, as applied to GiveWell-style global health funding, albeit without GiveWell’s internal projections. He estimates that the curvature is at least 1 and perhaps even over 3.

Besides Berger and Favaloro’s work in the global health domain, others on the Open Phil team have recently been trying to estimate the curvature in other domains. I think it’s great that this work is being done, and I look forward to incorporating it at some point, but much of it is still quite preliminary, and its authors don’t want the conclusions made public. It’s possible, of course, that these analyses will end up largely confirming the old conclusion in favor of risk tolerance, even if the old reasoning for that conclusion was largely faulty. But I worry that, especially in domains where a given project’s path to impact is hard to measure, the analyses will themselves be influenced by intuitions which were developed by a priori arguments like those discussed throughout this post. In conversation, for example, one person contributing to an estimate of the curvature in a certain Open Phil funding domain noted that they had been giving some weight to one of the (I believe mistaken) arguments for using logarithmic utility as a baseline.

Finally, in that spirit, it may be worth briefly considering a simple model of existential risk reduction. Suppose that every unit increase in x-risk-focused spending buys the same proportional reduction in x-risk. This is what happens, for instance, if each unit increase in spending lets us build another safeguard of some kind, if a catastrophe only occurs if the safeguards all fail, and if the safeguards’ failure probabilities are equal and independent. What then is the curvature of the function from spending to impact, as measured by probability of survival? The unsurprising answer is that it could be arbitrarily high or low, depending on the parameters. See Appendix B.
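
A minimal sketch of this simple model may help make the point concrete. It rests on assumptions that are not taken from Appendix B: the baseline risk `r0` with no spending, the per-unit risk multiplier `p`, and the function names are all hypothetical. Survival probability is S(x) = 1 − r0·p^x, and the curvature −x·S''(x)/S'(x) then works out to x·ln(1/p). This is close to zero when spending is low or each safeguard is unreliable, and arbitrarily large otherwise.

```python
import math

def survival_probability(x, r0=0.2, p=0.5):
    """Probability of survival after x units of spending.

    Assumption: each unit of spending multiplies the remaining risk by p,
    starting from a baseline risk r0 (both values are illustrative).
    """
    return 1.0 - r0 * p ** x

def curvature(x, p=0.5):
    """Closed-form curvature -x * S''(x) / S'(x) = x * ln(1/p) for this model."""
    return x * math.log(1.0 / p)

def curvature_numeric(x, r0=0.2, p=0.5, h=1e-3):
    """Finite-difference check of the closed form above."""
    s = lambda z: survival_probability(z, r0, p)
    first = (s(x + h) - s(x - h)) / (2 * h)
    second = (s(x + h) - 2 * s(x) + s(x - h)) / h ** 2
    return -x * second / first

for x in (0.1, 1.0, 10.0):
    print(x, curvature(x), curvature_numeric(x))
```

Note that in this sketch the baseline risk drops out of the curvature entirely; only the amount already spent and the reliability of each safeguard matter.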

The truncated lower tail argument

You might think: “If the total funding for some philanthropic cause area goes to zero, the results (probably) won’t truly be catastrophic. The world will lack some helpful top-up that the funding in that area would have provided, but otherwise it will carry on fine. By contrast, if an individual loses all her resources, she starves and dies. So philanthropists should worry less about losing all their resources than individuals typically do.” I haven’t seen this argument made explicitly in writing, but I’ve heard it expressed, and I would guess that a thought along these lines underlies the Christiano and Tomasik intuitions that the curvature of the philanthropic utility functions they’re concerned with is less than 1. A function u(x) whose curvature is everywhere 1 or more falls arbitrarily low as x falls to 0; if its curvature is everywhere in the (0, 1) range, it looks like, say, the square root function, bottoming out at some baseline utility level (say, 0) when x = 0.

I don’t think this line of reasoning is right on either count.

First, though individuals suffer some disutility if they lose all their money, they don’t suffer infinite disutility any more than philanthropists do. Not even if they starve, presumably; but certainly not if their welfare just falls to the more realistic baseline, at least in the developed world, of a life on (however meager) social assistance. When we see people acting as if their utility functions have curvatures greater than 1, we usually just infer that their utility functions have curvatures greater than 1 above some low threshold. The fact that such a threshold exists for philanthropists too isn’t a reason to think that the curvature of a philanthropic utility function tends to be everywhere less than 1 either.

Second, to repeat a point from the “small fish in a big pond” subsection, the wholesale loss of a philanthropic portfolio doesn’t just “leave the world as it is today, but without some helpful top-up funding”. A scenario in which, say, the global stock market in 30 years is worthless is likely a scenario in which the world as a whole is worse off than it is today, funding for important projects from other sources has disappeared, and your resources are needed more. It’s a world in which more people are starving.

Ultimately, perhaps philanthropists should be less concerned than typical individuals with ensuring that they don’t lose everything in pessimistic economic scenarios, and perhaps they should be more concerned. It just depends on what the philanthropists are interested in funding.

The cause variety argument

I’ve argued so far that we don’t have much reason to expect that the philanthropic utility function for any given cause exhibits less curvature than a typical individual’s utility function. Even if this is true on some narrow definition of “cause”, though, a cause-neutral philanthropist will want to fund the most important area up to the point that the value of further funding there has fallen to the value of funding the next-best area, then switch to funding both, and so on. As a result, the value of further funding, for such a philanthropist, falls less quickly as funding scales up than the value of further funding for a philanthropist who restricts her attention to a single narrow area. That is, a cause-neutral philanthropist’s philanthropic utility function has less curvature. If we think that the Yale portfolio is ideal for a goal like supporting Yale, we should think that a riskier portfolio is ideal for a goal like doing the most good.

The magnitude of the impact of “cause variety” on the curvature of a philanthropic utility function may not be obvious. On the one hand, as noted in the sub-subsection on using typical risk aversion as a baseline, when a philanthropist is providing only for a range of identical, destitute households, the fact that there are many “causes” (i.e. households) has no impact at all. On the other hand, if the causes a philanthropist might be interested in funding are not structurally identical, the range of causes may not have to be very wide for cause variety to be an important effect. And even if a philanthropist is only interested in, say, relieving poverty in some small region with a bell-shaped income distribution, she effectively faces a continuum of causes: relieving the poverty of those at the very bottom of the distribution, relieving the poverty of those just above them, and so on. Under some assumptions I think are vaguely reasonable, cause variety of this sort can easily be enough to cut the curvature of the philanthropic utility function by more than half, all else equal. The implications of cause variety are explored more formally in Appendix C.

Arguments from uncertainty

So far, we’ve been reasoning as if the function from spending to impact takes a certain shape, and as if we just need to work out a central guess about what that shape is and act according to that guess. But even our best guess is bound to be bad. Philanthropists face a lot of uncertainty about how valuable funding opportunities are relative to each other: more uncertainty, arguably, than the uncertainty individuals face about how much less a second car (say) will contribute to their welfare than a first. I’m aware of two arguments that this extra uncertainty systematically justifies more financial risk tolerance. This is possible, but it seems to me that, in both cases, the impact of the extra uncertainty could go either way.

First, one might argue that financial risk tolerance follows from uncertainty about the curvature of the philanthropic utility function per se. The argument goes: “Assuming that we will be richer in the future than today, the possibility that our utility function exhibits a lot of curvature is a low-stakes scenario. In that case we might mess up a little bit by putting everything in stocks, but even if we’d invested optimally, there isn’t much good we could have done anyway. But if it turns out our utility function was close to linear and we messed up by holding too many bonds, we left a lot of expected value on the table.”

This is true, but it rests critically on the assumption that we will be “richer”, in that the most important domains for philanthropic funding will be better funded than they are today. If not, the high-curvature scenarios are the high-stakes ones. This isn’t to say it’s all a wash, just that the implications of “curvature uncertainty” for optimal financial behavior depend on the details of the form that the uncertainty takes, in ways that seem hard to determine a priori. A more formal exploration of the possible asymmetry at play here can be found in Appendix D: Uncertainty about 𝜂.
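
The asymmetry can be illustrated with a few lines of arithmetic. This is only a sketch under a strong assumption that is not from the post itself: utility is isoelastic and normalized so that the marginal value of a dollar at today's funding level equals 1 whatever 𝜂 turns out to be. The function name and the example values are hypothetical.

```python
def marginal_value(funding_ratio, eta):
    """Marginal value of a dollar when funding is funding_ratio times today's level.

    Assumption: isoelastic utility, normalized so the marginal value is 1
    at today's funding level (funding_ratio == 1) for every eta.
    """
    return funding_ratio ** (-eta)

for funding_ratio in (2.0, 0.5):      # "richer" world, then "poorer" world
    for eta in (0.5, 3.0):            # low-curvature and high-curvature scenarios
        print(funding_ratio, eta, marginal_value(funding_ratio, eta))
```

When funding doubles, the high-curvature scenario is the one where further dollars matter least; when funding halves, it is the one where they matter most. Which case dominates depends on the normalization and on the distribution of future funding, which is exactly what is hard to pin down a priori.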

Second, one might argue that philanthropists have a hard time distinguishing between the value of different projects, and that this makes the “ex ante philanthropic utility function”, the function from spending to expected impact, less curved than it would be under more complete information. But again, this all depends on the form that the uncertainty takes. If possible projects actually differ significantly in impact per dollar but under uncertainty they all offer the same, slightly positive expected value, then the utility function under full information can be arbitrarily curved but uncertainty will make the ex ante utility function linear. On the other hand, if possible projects actually all generate impacts of either +1 or –1 per dollar, but they vary a lot in terms of how easy it is to determine whether the sign of the value is positive or negative, then the utility function under full information is linear (just fund as many of the “+1”s as you can afford to) but the ex ante utility function can be arbitrarily curved.

Arguments from Merton’s model

Here’s a summary of the reasoning of the previous section.

- How riskily a philanthropist should invest depends centrally on the curvature of his “philanthropic utility function”. This is the function from total spending on the things he’s interested in funding to impact.

- There seem to be no good (public) empirical estimates of this curvature, at least in the EA case. And many proposed arguments for guessing a priori that philanthropic utility functions exhibit less curvature than individuals’ utility functions are actually either directionally ambiguous or simply vacuous.

- One argument for lower-than-average curvature seems solid: the “cause variety” argument. This suggests that cause-neutral philanthropists should collectively invest more riskily than most other people.

- On the other hand, small philanthropists should be expected to be unusually willing to start startups. This should make the other philanthropists in the same domain want to invest more cautiously than they would otherwise.

Which of these effects is larger is unclear, at least to my mind. It’s also not clear what to make of the directionally ambiguous arguments. So far, I don’t feel like I can rule out that philanthropists without startups who want to maximize their expected impact basically have no stronger reasons for risk tolerance than typical investors.

But even given a philanthropic utility function whose curvature is the same as that of a typical individual utility function, it doesn’t follow that the philanthropic investment portfolio should be the same as the portfolio that individuals actually choose. This equality could fail in two ways.

First, we might doubt that people invest appropriately given their own utility functions. One might agree that philanthropic utility functions probably look similar to (scaled up) typical individual utility functions, and still argue that philanthropists as a whole should invest unusually riskily, on the grounds that most individuals currently invest more cautiously than is best for them.

It’s often argued, on the basis of a formal portfolio construction model, that a conventional portfolio along the lines of 60% stocks and 40% bonds couldn’t possibly be optimal for most people. When we try to estimate the curvature of a typical individual utility function in practically any way (besides the giant data point of how riskily they’re willing to invest, of course), we find that the curvature may be above 1, but it’s not high enough to warrant such a cautious portfolio. Even if a community of philanthropists believes that their philanthropic utility function exhibits about as much curvature as a typical individual utility function, therefore, they shouldn’t simply adopt the portfolio that they see most people adopting for themselves. Instead, they should plug the curvature they think a typical individual utility function actually exhibits into the formal model, and then adopt the portfolio the model recommends.

The simple model typically used to reach this conclusion, and the one used most frequently by far in EA discussions about all this, is Merton’s (1969) model. This is the formula used to justify investing riskily in the quantitative discussions of philanthropic investment advice by Tomasik, Irlam, and Dickens.

But individuals have good reasons not to behave as Merton’s formulas recommend. That is, the model oversimplifies their portfolio construction problem in important ways. Economists have identified some of the missing complications over the past few decades, and developed more complex models which show how a conventional portfolio might be justified after all. I’ll explain this below. I’ll also argue that, in general, the complications which seem to justify individuals’ cautious investment behavior apply to philanthropists as well.

Second, we might think that, even if people do invest appropriately given their own utility functions, and even if philanthropic utility functions do look similar to (scaled up) individual utility functions, philanthropists should still invest differently, because the amount of wealth being spent pursuing one’s goals in the future depends not only on how well financial investments have performed but also on how much wealth has turned up from elsewhere to pursue those goals. This is true for both philanthropists and typical individuals. A philanthropist should account for the fact that new funders may arrive in the future to support the causes she cares about, for instance, and an individual should account for the fact that he will probably get most of his future wealth from wages, not from the returns on his financial investments. But if this distribution of possible amounts of relevant outside income is different for a philanthropist than for a typical individual, this might motivate the philanthropist to invest differently. In particular, Merton’s model is sometimes used to argue that the difference between these distributions should motivate philanthropists to invest unusually riskily.

I agree that there is a difference between these distributions, and that it might motivate a difference in investment behavior. But I’ll argue that, if anything, it should generally motivate philanthropists—especially in EA—to invest unusually cautiously.

Describing the formula

Suppose an investor wants to maximize the expectation of the integral, over time, of his discounted flow utility at each time, where flow utility at a time t is a CRRA function, u(·), of consumption at t, c_t. Formally, suppose he wants to maximize

$$E\left[\int_0^T e^{-\delta t}\, u(c_t)\, dt\right] \tag{1}$$

where

$$u(c) = \begin{cases} \dfrac{c^{1-\eta}}{1-\eta}, & \eta \neq 1, \\ \ln(c), & \eta = 1, \end{cases} \tag{2}$$

for some RRA 𝜂 and some discount rate 𝛿. T could be finite, perhaps representing the length of the investor’s life, or infinite, if the investor cares about his descendants or is a philanthropist who cares about distant future impacts. T won’t matter much, as we’ll see.

The investor begins at time 0 with wealth W0 > 0. There are two assets in which he can invest.

- The first grows more valuable over time at a constant rate r. I.e. if you buy $1 of it at time t, its price at a time s > t is $e^{r(s–t)}. It’s essentially a safe bond offering interest rate r.

- The second is risky. Its price jumps around continuously in such a way that, if you buy $1 of it at time t, the price at s > t is lognormally distributed, the associated normal distribution having mean 𝜇(s–t) for some 𝜇 > r and variance 𝜎²(s–t) for some 𝜎 > 0. The risky asset is like an index fund of stocks, offering higher expected returns than the bond but an ever-widening distribution of possible returns as we consider times s ever further in the future from the purchase date t. 𝜇–r is the equity premium.
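
The two assets can be sketched in a few lines. This follows the parameterization in the text literally (the log of the risky price is normal with mean 𝜇(s–t) and variance 𝜎²(s–t)). The function names are hypothetical, and the default parameter values are the ballpark calibrations used later in the post.

```python
import math
from statistics import NormalDist

def bond_price(horizon, r=0.01):
    """Price after `horizon` years of $1 invested in the safe asset."""
    return math.exp(r * horizon)

def risky_price_quantile(q, horizon, mu=0.06, sigma=0.14):
    """q-th quantile of the price after `horizon` years of $1 in the risky asset.

    Assumption (taken literally from the text): log price is normal with
    mean mu * horizon and variance sigma**2 * horizon.
    """
    log_price = NormalDist(mu * horizon, sigma * math.sqrt(horizon))
    return math.exp(log_price.inv_cdf(q))

for horizon in (1, 10, 30):
    low = risky_price_quantile(0.05, horizon)
    high = risky_price_quantile(0.95, horizon)
    print(horizon, round(bond_price(horizon), 2), round(low, 2), round(high, 2))
```

The gap between the 5th and 95th percentiles of the risky price grows with the horizon, which is the "ever-widening distribution" described above.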

The investor’s wealth at t is denoted Wt. Wt depends on four things: his initial wealth W0, how quickly he’s been consuming, how much of his savings have been invested in the risky asset, and how well the risky asset has performed.

At each time t, our investor has two questions to answer. First is how fast he should consume at t: i.e. what 𝜈t should be, where 𝜈t denotes his consumption rate at t as a proportion of Wt. Second is, of the wealth not yet consumed, what fraction 𝜋t he should hold in stocks.

As it turns out, under the assumptions above,[24] 𝜋 should be constant over time, equaling

$$\pi = \frac{\mu - r}{\eta \sigma^2}. \tag{3}$$

If T = ∞, then 𝜈 should also be constant over time, equaling

$$\nu = \frac{1}{\eta}\left[\delta + (\eta - 1)\left(r + \frac{(\mu - r)^2}{2 \eta \sigma^2}\right)\right]. \tag{4}$$

This is explained in Appendix D: Understanding the model. If T is finite, the formula for 𝜈 is more complicated, and 𝜈 should rise as t approaches T. But this post is about 𝜋, which in this model is independent of T, so for simplicity we’ll just work with the T = ∞ case.
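
For readers who want to experiment, here is a minimal sketch of the two formulas. It assumes that (3) and (4) are the standard closed-form solutions to Merton's infinite-horizon problem with CRRA utility; the function names are hypothetical.

```python
def merton_stock_share(mu, r, sigma, eta):
    """Constant fraction of wealth held in the risky asset (assumed form of (3))."""
    return (mu - r) / (eta * sigma ** 2)

def merton_consumption_rate(mu, r, sigma, eta, delta):
    """Constant consumption rate as a share of wealth when T is infinite
    (assumed form of (4))."""
    certainty_equivalent_return = r + (mu - r) ** 2 / (2 * eta * sigma ** 2)
    return (delta + (eta - 1) * certainty_equivalent_return) / eta

# Illustrative inputs only; eta = 2 is an arbitrary choice.
print(merton_stock_share(mu=0.06, r=0.01, sigma=0.14, eta=2.0))
print(merton_consumption_rate(mu=0.06, r=0.01, sigma=0.14, eta=2.0, delta=0.01))
```

Two sanity checks follow directly from the formulas: a higher 𝜂 always lowers the stock share, and with logarithmic utility (𝜂 = 1) the consumption rate is simply 𝛿.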

The equity premium puzzle argument

Of course, the assumptions of Merton’s model are not exactly accurate. There are different kinds of bonds, for instance, and none of them literally offer a risk-free interest rate. Still, though, the model is arguably accurate enough that we can try to “calibrate” its variables: i.e., find values of the variables that relatively closely match the preferences and circumstances that typical investors face. In that spirit, here are some ballpark calibrations of all the variables except 𝜂.

- 𝛿 = 0.01,

- r = 0.01,

- 𝜇 = 0.06,

- 𝜎 = 0.14.

Likewise, we can calibrate 𝜋 and 𝜈 to our observations of people’s investment portfolios and saving rates. A standard calibration of 𝜋 is 0.6, as noted in the introduction, and a standard calibration of 𝜈 is 0.016.[25]

This presents a puzzle. Rearranging (3), people seem to be holding bonds at a rate that only makes sense if the curvature of their utility functions equals

$$\eta = \frac{\mu - r}{\pi \sigma^2} = \frac{0.05}{0.6 \times 0.14^2} \approx 4.25.$$

But rearranging (4) (and using the quadratic formula), people seem to be saving at a rate that only makes sense if[26]

$$\eta \approx 1.12.$$

One way of putting this is that, given the only-moderately-above-1 𝜂 we estimate from people’s saving rates (and other estimates of how people trade off consumption between periods, which we won’t get into here), the equity premium 𝜇–r is too high to explain the low 𝜋 people choose.
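
The two implied values of 𝜂 can be recomputed from the calibrations above. This sketch assumes the standard closed-form Merton solutions for 𝜋 and 𝜈; the function names are hypothetical. Multiplying the 𝜈 formula through by 𝜂 gives a quadratic in 𝜂, which generally has two roots. The smaller is the "moderately above 1" value, and the sketch takes no view on how the larger one should be interpreted.

```python
import math

def consumption_rate(eta, mu, r, sigma, delta):
    """Assumed Merton consumption rate for an infinite horizon."""
    return (delta + (eta - 1) * (r + (mu - r) ** 2 / (2 * eta * sigma ** 2))) / eta

def eta_from_stock_share(pi, mu, r, sigma):
    """Invert pi = (mu - r) / (eta * sigma**2) for eta."""
    return (mu - r) / (pi * sigma ** 2)

def etas_from_consumption_rate(nu, mu, r, sigma, delta):
    """Solve (nu - r) * eta**2 - (delta - r + k) * eta + k = 0 for eta,
    where k = (mu - r)**2 / (2 * sigma**2). Assumes nu != r and real roots."""
    k = (mu - r) ** 2 / (2 * sigma ** 2)
    a, b, c = nu - r, -(delta - r + k), k
    root = math.sqrt(b * b - 4 * a * c)
    return sorted(((-b - root) / (2 * a), (-b + root) / (2 * a)))

calibration = dict(mu=0.06, r=0.01, sigma=0.14)
print(eta_from_stock_share(0.6, **calibration))
print(etas_from_consumption_rate(0.016, delta=0.01, **calibration))
```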

But this is only a puzzle if the assumptions of the Merton model are valid. If they’re not, then a low choice of 𝜋 and a high choice of 𝜈 might be compatible. Many tweaks to the model have been proposed which reconcile this pair of choices. Here are four, for illustration.

Tail risk

The Merton model assumes that stock prices follow a geometric random walk. But historically, they haven’t: sudden collapses in stock prices are much more common than sudden jumps of a similar proportional size. As many (e.g. Weitzman, 2006) have shown, this might make it rational for people to buy a lot of bonds, even if their 𝜂’s aren’t all that high and they still want to do a lot of saving.

Of course, this applies to philanthropists just as much as to anyone else.

Habit formation

The Merton model assumes that an individual’s utility at a given time t depends only on her own consumption at t. But people might value consumption more at times when they have recently been consuming more. As many (e.g. Abel (1990)) have shown, this too might make it rational for people to buy a lot of bonds. Even if a drop in consumption won’t be so bad once you’ve acclimated to it, the pain of moving to the lower level, and perhaps back up again, makes it valuable to hold some of one’s wealth in assets whose value is more reliable.

This effect is usually called “habit formation”, since the most obvious way it can arise is if people literally develop expensive habits. But it can also arise whenever there are concrete transition costs associated with moving between levels of consumption. Suppose you fall on hard times and have to move to a smaller apartment. The walls of your apartment don’t just slide in; you have to pack your boxes and rent a van.

Similar transition costs presumably apply in the nonprofit setting. An organization whose funding is cut has to pay the cost of moving to a smaller office, and some of its employees, instead of hopping immediately to some other valuable line of work, face the costs that come with changing jobs. A philanthropist presumably values the time and other resources of the nonprofits that receive her funding, and so prefers being able to pay out a steady funding stream, to avoid imposing such costs.

Keeping up with the Joneses

The Merton model assumes that an individual’s utility at a given time t depends only on her own consumption at t. But people might value consumption more at a time when others are also consuming more. That is, they might especially prefer to avoid scenarios in which they’re poor but others are rich. This effect is usually called “keeping up with the Joneses”, since one way it can arise is if people care intrinsically to some extent about their place in the income distribution. But this preference can also arise instrumentally. Some people might especially want to buy things in fixed supply, like beachfront property, which are less affordable the richer others are.

Again, as e.g. Galí (1993) shows, this can make it rational to invest less riskily, since risky investing leaves one poorer than one’s former peers if the risky investments do badly. Note that it also leaves one richer than one’s former peers if the risky investments do well, though, so for this effect to motivate more cautious investment on balance, one has to have diminishing marginal utility in one’s relative place. The boost that comes with moving from the bottom of the pack to the middle has to be greater than the boost that comes with moving from the middle to the top.

Philanthropists might care about their place in the distribution too—perhaps even more than most others. They might care somewhat about their “influence”, for example, in the way proposed by Christiano (2013) and discussed at the end of the “Against logarithmic utility…” sub-subsection. Recall, though, that on the model Christiano proposes, the philanthropist cares only about her relative place—more precisely, about the fraction of all wealth she owns—and her utility is linear in this fraction. As he notes, such a philanthropist should invest roughly as if she has logarithmic utility in her own wealth, which would mean investing more risk tolerantly than most people do. By contrast, in a standard “keeping up with the Joneses” model, being relatively richer comes with diminishing marginal utility, as noted in the previous paragraph, and this effect exists alongside the standard sort of diminishing marginal utility in one’s own resources.

New products

The Merton model assumes that an individual’s utility at a given time t always depends in the same way on her own consumption at t. But even if one’s utility level is, at any given time, only a function of one’s own consumption, the shape of the function can change over time for various reasons. One such reason that I’ve explored a bit (PDF, video) could be the introduction of new products. [Edit 4/4/24: Scanlon (2019) beat me to it!] Consider an early society in which the only consumption goods available are local agricultural products. In such a society, once you have acquired enough food, clothing, and shelter to meet your basic needs—a basket of goods that would cost very little at today’s prices—the marginal utility of further consumption might be low. Later in time, once more products have been developed, marginal utility in consumption at the same level of consumption might be higher.

Once again, this can make a low 𝜋* compatible with a high 𝜈*. 𝜂 may really be very high at any given time, motivating people to hold a lot of the wealth they invest for consumption at any given future time in bonds. But unlike in the Merton model, even if we posit such a high 𝜂, people might still be willing to save as much as they currently do. They will probably be richer in the future, and this significantly lowers their marginal utility in consumption relative to what it would have been if their consumption had stagnated at its current level, because 𝜂 is high. But their marginal utility in consumption may not fall much relative to what it is today, since new products will raise the marginal utility in consumption at any given level of consumption. On this account, young savers in, say, 1960 were saving in part to make sure they would be able to afford air conditioners, computers, and so on, once such products became available. But they saved largely in bonds, because they understood that what really matters is usually the ability to afford the new goods at least in small quantities, not the ability to afford many copies of them.

Once again, philanthropists might be in a similar situation. The philanthropic utility function at any given time might exhibit a high 𝜂. But a philanthropist might still find it worthwhile to save a lot for the future, even on the assumption that funding levels will be higher than they are today, because in the meantime, a wider range of funding opportunities will be available, as cause areas mature and new projects begin, all of which will benefit greatly from a little bit of funding.

I don’t think this “new products” (/projects) effect is the most important driver of the equity premium puzzle. But in Appendix D: Adding new products to the model, I use it to quantitatively illustrate how these effects can in principle resolve the puzzle, because it yields the simplest equations.

----------

For brevity, we’ve only covered four possible resolutions to the equity premium puzzle. Many more have been proposed. Of the four we’ve covered, only the first applies equally to philanthropists and to other investors. The other three apply similarly, I think, but could apply somewhat more or less strongly than average to philanthropists. More could be said on this, but on balance, my understanding is that the phenomenon of the equity premium puzzle doesn’t give us much reason to think that philanthropists should invest more (or less) riskily than average.

The lifecycle argument

As noted at the beginning of this section, there’s another way in which Merton’s model is sometimes used to argue that a philanthropist should invest more risk tolerantly than most people do invest, even if philanthropic and individual utility functions look the same. The reason—stated a bit more formally now that we’ve introduced the model—is that the model only tells you the fraction 𝜋 of your wealth to invest in stocks if all your wealth is already “in the bank”. If you have a source of outside income (such as a career), then this source is like another asset, offering its own distribution of possible future returns (such as wages). The portfolio you should adopt for your wealth “in the bank” should be such that, in conjunction with the “asset” generating the outside income, the combined portfolio exhibits the desired tradeoff between safety and expected returns.

Applied to individuals, the reasoning typically looks something like this. Wages don’t jump around wildly, like stock returns. They grow steadily and reliably over the course of a career, more like bond payments. A person early in his career usually doesn’t yet have much wealth in the bank, but does have a long career ahead of him, so his “wealth” in the broader sense consists mostly of the relatively low-risk asset that is the rest of his career. As his career progresses, this situation reverses. But equation (3) tells us that the fraction 𝜋 of his “wealth” in risky investments should stay constant. So, over the course of his life, he should shift from holding all his financial wealth in stocks—or even “more than all of it”, via leverage—to holding a mix of stocks and bonds. This is often called lifecycle investing, a term coined by Nalebuff and Ayres, who wrote a popular 2010 book promoting this way of thinking.
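
The arithmetic behind this shift is simple enough to sketch. The sketch makes a strong assumption: the present value of future wages is known and behaves exactly like a riskless bond. The function name and the dollar figures are hypothetical.

```python
def financial_stock_share(target_share, financial_wealth, future_wages_value):
    """Fraction of financial wealth to hold in stocks so that stocks make up
    `target_share` of total wealth (financial wealth plus bond-like wages)."""
    total_wealth = financial_wealth + future_wages_value
    return target_share * total_wealth / financial_wealth

# Early career: little in the bank, a long bond-like career ahead.
print(financial_stock_share(0.6, financial_wealth=50_000, future_wages_value=950_000))
# Late career: most wealth is already financial.
print(financial_stock_share(0.6, financial_wealth=900_000, future_wages_value=100_000))
```

A result above 1 means the target can only be reached with leverage; as financial wealth comes to dominate, the share falls back toward the target itself.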

The “lifecycle argument” for unusual financial risk tolerance by philanthropists then goes as follows. Most of the resources, in the broader sense, of a typical philanthropic community—most of those that will ever be put toward its philanthropic utility function—don’t consist of financial assets that the community already possesses. Rather, they consist of “outside income”: contributions that are yet to arrive, including many from people who are not yet members of the community. So a philanthropic community is usually like a person early in his career. The small fraction of its total wealth that its current members do control more tangibly, they should invest in stocks, perhaps with leverage. See, again, Dickens (2020) (The “Time diversification” section) for an example of this argument being made.

But this analogy relies on the assumption that the possibility of outside contributions is like a giant bond. In fact, if we’re going to model the possible future funding provided by the rest of the world as an asset, it’s a very risky one: philanthropic fads and hot policy agenda items come and go, leaving the distribution of future funding for a given cause often very uncertain. I.e. the “outside-income asset”, as we might call it, comes with a lot of idiosyncratic risk. And because it constitutes such a large fraction of the community’s total “wealth”, this is idiosyncratic risk of the sort that the community does care about, and should want to diffuse with bonds or anticorrelated investments—not the idiosyncratic risk that comes with an individual proportionally small philanthropist’s startup and is diluted by the uncorrelated bets of other members.

The outside-income asset also presumably tends to come with a fair bit of systematic risk. When the economy is doing poorly and stock prices are down, people have less to give to any given cause. It’s true that, since the world at large isn’t entirely invested in stocks, new contributions to a given cause probably don’t fluctuate as much as if the “asset” were literally stocks. But recall that an unusually large fraction of new EA funding comes from the founders of tech startups, which exhibit high “beta”, as noted in the sub-subsection on proportionally small philanthropists with idiosyncratic risk. So I would guess that the outside-income asset exhibits even more systematic risk for the EA community than for most philanthropic communities.

I don’t think there’s any need to think about this philanthropic investment question from scratch with a tweaked analogy to an individual and his future wages. The math of the “lifecycle”-style adjustment to Merton’s original model that a philanthropist should adopt, in light of the real but risky possibilities of future outside contributions, was worked out by Merton himself in 1993. The relevant upshot is this: suppose the outside-income asset behaves more like the “risky asset” than like the “safe asset”, in Merton’s model. Then for any given target “𝜋”, the more of your wealth is tied up in the outside-income asset, the more of the rest of your wealth you should hold in bonds.
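
A crude static illustration of this upshot follows. It is not Merton's (1993) model. It simply assumes that the outside-income asset can be treated as a fixed blend of the risky and safe assets, with a hypothetical parameter `outside_stock_like` giving the share that behaves like stocks. All names and numbers are illustrative.

```python
def stock_share_with_outside_income(target_share, financial_wealth,
                                    outside_wealth, outside_stock_like):
    """Fraction of financial wealth to hold in stocks so that stock-like
    exposure is `target_share` of total wealth.

    Assumption: a fraction `outside_stock_like` of the outside-income asset
    behaves like the risky asset, and the remainder like the safe asset.
    """
    total_wealth = financial_wealth + outside_wealth
    desired_risky = target_share * total_wealth
    already_risky = outside_stock_like * outside_wealth
    return (desired_risky - already_risky) / financial_wealth

for outside_wealth in (1.0, 9.0):
    for outside_stock_like in (0.0, 1.0):
        share = stock_share_with_outside_income(0.6, 1.0, outside_wealth,
                                                outside_stock_like)
        print(outside_wealth, outside_stock_like, round(share, 2))
```

When outside income is bond-like, a larger outside-income asset pushes the financial portfolio toward stocks, as in the lifecycle argument. When it is stock-like, the effect reverses, and a negative result means the target cannot be reached even by holding only bonds.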

In other words, what we need most isn’t more high-risk, high-return investments; we already have possible future contributions from the rest of the world for that. What we need is a guarantee that we’ll be able to cover the most important future philanthropic opportunities in case markets crash and the rest of the world doesn’t show up.

Arguments for equities

This section will briefly cover three arguments for why philanthropists might consider equities more (or less) valuable than other people, even if they’re not particularly risk tolerant (or averse) per se and none of the arguments above for behaving unusually risk tolerantly (/aversely) go through.

These won’t be arguments that philanthropists should mind financial risk more or less per se. At best they’ll be arguments that a philanthropist should choose a portfolio which has more or less than the usual share of stocks, and which therefore happens to be unusually safe or risky.

Mispricing

A philanthropist, like anyone else, may have reason to believe that financial markets are not very efficient, and that stocks as a whole, or certain stocks, are currently mispriced. If so, she should skew her portfolio toward or away from (perhaps particular) stocks, relative to whatever would have been optimal otherwise.

Such beliefs may follow from her philanthropy. For instance, maybe a search for ways to do the most good has motivated philanthropists in the EA community to reach an unusually deep understanding of the potential of AI, including an unusually accurate assessment of the chance that advances in AI will soon significantly increase the returns to capital.

For my part, I used to think it was almost always a mistake for someone to think they could beat the market like this (and I’m still a bit skeptical), but now I don’t think it’s so unreasonable to think we can, at least to a moderate extent. In any event, this seems like a question on which people basically just have to use their judgment.

Mission hedging

It’s typically assumed that an individual’s utility function doesn’t depend on how well the stock market (or any particular company) is doing, except via how it affects his own bank account.[27] But a philanthropic utility function might look different in different macroeconomic scenarios, for reasons that have nothing to do with the funding available for any given cause. That is, for philanthropists focusing on certain issues, resources might be more (or less) valuable when the market is booming, before accounting for the fact that there are then more (or fewer) resources available to spend.

For example, AI risk mitigation might be an issue toward which resources are more valuable in a boom. Maybe boom scenarios are disproportionately scenarios in which AI capabilities are advancing especially quickly, and maybe these in turn are scenarios in which resources directed toward AI risk mitigation are especially valuable.

It might also be an issue toward which resources are less valuable when the market is booming. Maybe scenarios in which AI capabilities are accelerating economic growth, instead of starting to cause mayhem, are scenarios in which AI is especially under control, and maybe these in turn are scenarios in which resources directed toward AI risk mitigation are less valuable.

Philanthropists focusing on issues of the first kind should be unusually willing to buy stocks, and so should probably have unusually risky portfolios. Philanthropists focusing on issues of the second kind should be unusually unwilling to buy stocks, and so should probably have unusually safe portfolios. This is discussed more formally by Roth Tran (2019), and some in the EA community have thought more about the implications of mission hedging in the EA case.[28] I haven’t thought about it much, and don’t personally have strong opinions about whether the most important-seeming corners of philanthropy on the whole are more like the first kind or the second kind.
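The direction of the mission hedging effect can be illustrated with a toy calculation. The sketch below is not Roth Tran’s (2019) model. It assumes two equally likely states (boom and bust), bonds that return zero, log utility in resources, and a state-dependent multiplier on how valuable that utility is. Every number and name in it is made up for illustration.

```python
import math


def optimal_stock_share(value_in_boom, value_in_bust, p_boom=0.5,
                        r_boom=0.3, r_bust=-0.2, grid_steps=200):
    """Grid-search the stock share maximizing state-weighted log utility.

    All numbers are made up for illustration. Bonds return 0; stocks
    return r_boom or r_bust. `value_in_boom` and `value_in_bust` scale how
    valuable philanthropic resources are in each state.
    """
    best_share, best_value = 0.0, -math.inf
    for step in range(grid_steps + 1):
        share = 2.0 * step / grid_steps  # shares from 0 to 2 (leverage allowed)
        wealth_boom = 1.0 + share * r_boom
        wealth_bust = 1.0 + share * r_bust
        expected = (p_boom * value_in_boom * math.log(wealth_boom)
                    + (1 - p_boom) * value_in_bust * math.log(wealth_bust))
        if expected > best_value:
            best_share, best_value = share, expected
    return best_share


if __name__ == "__main__":
    print("no state dependence:     ", optimal_stock_share(1.0, 1.0))
    print("more valuable in a boom: ", optimal_stock_share(1.5, 1.0))
    print("more valuable in a bust: ", optimal_stock_share(1.0, 1.25))
```

Under these assumptions the optimal stock share is roughly 0.83 with no state dependence, rises to roughly 1.67 when resources are 50% more valuable in a boom, and falls to roughly 0.37 when they are 25% more valuable in a bust. Only the ordering matters here; the magnitudes are artifacts of the made-up inputs.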

Activist investing

Throughout this document, we’ve been assuming that the only difference between stocks and bonds is that they offer different distributions of financial returns. But another small difference is that stocks but not bonds usually give their owners the right to vote on how a company is run. The philanthropic impact an investor can have by exercising this right was emphasized on this forum by Behmer (2022). If an investor values that right, he should want to hold more of his wealth in stocks.

By the same token, though, if he just wants to invest passively, like almost all investors, then he should expect that stock prices are slightly higher than they would otherwise be, having been bid up a bit by the few investors who do value their voting rights. I would have thought that this effect would be negligible, but see the first table here: for companies that issue both stock with voting rights and stock without, apparently the stock without voting rights is a few percent cheaper, which isn’t nothing. In any event, an investor not planning to make use of his voting rights should prefer the cheaper, non-voting stock when it’s available, and when it’s not, should want to hold slightly more of his wealth in other assets like bonds.

A philanthropist might know enough about the details of some firm or industry that they can make a difference by “activist investing”: say, by buying large stakes in particular firms and voting (or even organizing votes) against their most destructive practices. If a foundation or philanthropic community is large enough, it may be worth someone putting in the effort to research and advise on how to implement some investor activism. But my guess is that this will only ever be a minor consideration, at best marginally tweaking the optimal portfolio, and only very, very slightly making it riskier on the whole (instead of just shifting it toward some risky investments and away from others).

Conclusion

There are many conceivable reasons why philanthropists might want to invest unusually safely or riskily. Here is a summary of the reasons considered in this document, organized by the direction of the implications they have, in my view.

Justifying a riskier portfolio

- The “small fish in a big pond + idiosyncratic risk” argument, part 1:

Proportionally small philanthropists should be more inclined to take investment opportunities with a lot of idiosyncratic risk, like startups.

- The “cause variety” argument:

Cause-neutral philanthropists can expand or contract the range of cause areas they fund in light of how much money they have. This lets marginal utility in spending diminish less quickly as the money scales up.

- The “mispriced equities” argument:

Certain philanthropists might develop domain expertise in certain areas which informs them that certain assets are mispriced. [This could push in either direction in principle, but the motivating case to my mind is a belief that the EA community better appreciates the fact that a huge AI boom could be coming soon.]

- The “activist investing” argument, if it’s worth it:

Stock owners can vote on how a firm is run. Some philanthropists might know enough about the details of some firm or industry that it’s worthwhile for them to buy a lot of stock in that area and participate actively like this (voting against a firm’s bad practices, say), despite the fact that this will probably make their portfolios riskier.

Justifying a more cautious portfolio

- The “small fish in a big pond + idiosyncratic risk” argument, part 2:

Proportionally small philanthropists without startups should invest especially cautiously, to dilute the risk that others’ startups bring to the collective portfolio.

- The “lifecycle” argument:

The distribution of future funding levels for the causes supported by a given community tends to be high-variance even independently of the financial investments we make today; risky investments only exacerbate this. [I think this is especially true of the EA community.]

- The “activist investing” argument, if it’s not worth it:

Activist investing may be more trouble than it’s worth, and the fact that stocks come with voting rights slightly raises stock prices relative to bond prices.

Directionally ambiguous

- Arguments from the particulars of current top priorities:

The philanthropic utility function for any given “cause” could exhibit more or less curvature than a typical individual utility function.

- The “truncated lower tail” argument:

The philanthropic utility function’s “worst-case scenario” (the utility level reached if no resources end up put toward things considered valuable at all) might bottom out in a different place from a typical individual utility function’s worst-case scenario.

- Arguments from uncertainty:

Philanthropists may be more uncertain about the relative impacts of their grants than individuals are about how much they enjoy their purchases. This extra uncertainty could flatten out an “ex ante philanthropic utility function”, or curve it further.

- The “equity premium puzzle” argument:

The reasons not captured by the Merton model for why people might want to buy a lot of bonds despite a large equity premium could, on balance, apply to philanthropists more or less than they apply to most others.

- The “mission hedging” argument:

Scenarios in which risky investments are performing well could tend to be scenarios in which philanthropic resources in some domain are more or less valuable than usual (before accounting for the fact that how well risky investments are performing affects how plentiful philanthropic resources in the domain are).

No (or almost no) implications

- The “small fish in a big pond” argument without idiosyncratic risk:

Small philanthropists supporting a common cause, who have access to the same investment opportunities, should (collectively) invest as risk aversely as if they each were the cause’s only funders.

- The “logarithmic utility as a baseline” argument:

None of the a priori arguments I’m aware of for assuming a logarithmic philanthropic utility function are valid.

We’re left with the question of how all these arguments combine. I haven’t come close to trying to work this out formally, since so many of the relevant numbers would be made up. Ongoing Open Phil work may make more progress on this front. But for whatever it’s worth, my own central guess at the moment, with wide uncertainty, is that philanthropists—at least in the EA space, which I’ve thought most about—should invest about as risk tolerantly as other relatively cause-neutral philanthropists do on average (i.e. foundations rather than universities), which is only slightly more risk tolerantly than individuals invest for themselves.

There are other arguments one might consider, of course. I haven’t explored the implications of tax policy, for instance. But I’m not aware of other arguments that seem important enough to have much chance of overturning the broad conclusion.

To close, it may be worth noting a connection between this post and another I wrote recently. There, I reflected on the fact that a lot of my research, attempting to adhere to a sort of epistemic modesty, has followed this template: (a) assume that people are typically being more or less rational, in that they’re doing what best achieves their goals; and then (b) argue that the EA community should copy typical behavior, except for a short list of tweaks we can motivate by replacing typical goals with the goal of doing the most good. I then explained that I don’t think this template is as reasonable as I used to. I now think there are some questions without much direct connection to doing good on which “EA thought” is nonetheless surprisingly reliable, and that on these questions, it can be reasonable for EA community practice to depart from conventional wisdom.

I also noted that one such question, to my mind, is whether advances in AI will dramatically increase the economic growth rate. I think there’s a good chance they will: a belief that is widespread in the EA community but seems to be rare elsewhere, including in the academic economics community. And I think that, all else equal, this should motivate riskier investment than we observe most people adopting, as noted in the “mispricing” subsection.

But otherwise, here, I have followed the “template”. I’m taking typical observed levels of financial risk aversion as my default, instead of old intuitions from the EA sphere and dismissive claims that most people invest irrationally. This is because, at least from what I’ve read and heard, “EA thought” on philanthropy and financial risk seems surprisingly unreliable, and unreliable in surprisingly important ways. Indeed, as I won’t be the first to point out, it seems that confused thinking on philanthropy and financial risk partly explains Sam Bankman-Fried’s willingness to take such absurd gambles with FTX. In sum, I’m not saying we should never depart from conventional wisdom, in finance or anything else. I’m just saying we should be more careful.

Thanks to Michael Dickens, Peter Favaloro, John Firth, Luis Mota, Luca Righetti, Ben Todd, Matt Wage, and others for reviewing the post and giving many valuable comments on it. Thanks also to Ben Hilton, Ben Hoskin, Michelle Hutchinson, Alex Lawsen, members of the LSE economics and EA societies, and others for other valuable conversations about this topic that influenced how the post was written.

Appendix A: Decreasing returns to proportional wealth

Suppose that, at some future time t, the subsequent course of civilization will be determined by a grand bargain between two actors: the EA community (“EA”), which wants to fill the universe with “hedonium” (computer simulations of bliss), and an eccentric billionaire (“EB”), who wants to fill the universe with monuments to himself. Both utility functions are linear, and neither actor considers the other’s goal to be of any value.

Suppose that by default, if at t party i has fraction x_i of the world’s resources, they very tangibly have “fraction x_i of the influence”: they get to build fraction x_i of the self-replicating robot rockets, and ultimately fill fraction x_i of the universe with the thing they value. With no possibility of gains from trade (no room for compromise), party i’s utility, u_i, is linear in x_i.

But with room for gains from trade, the situation looks different. For simplicity, let’s normalize u_i to 0 at x_i = 0 and to 1 at x_i = 1. Also note that x_EB = 1 − x_EA, so without trade we have u_EA(x_EA) = x_EA and u_EB(x_EA) = 1 − x_EA. The situation then looks like this:

The dotted line represents the utility levels achieved by each actor without gains from trade, given some x_EA from 0 to 1. The “utility profile” (pair of utility levels) achieved without trade is called the “default point”. The light blue region represents the set of possible “Pareto improvements” (utility profiles that both parties would prefer to the default point). Instead of filling 80% of the universe with hedonium and 20% of it with monuments to EB, the parties could agree to, say, fill the whole universe with computer simulations in which EB is experiencing bliss: only slightly less efficient at producing bliss than simulations that don’t have to include the details of EB’s biography, and only slightly less efficient at leaving a grand legacy to EB than other kinds of monuments.

The general point I want to make is that gains from trade would tend to produce diminishing marginal utility to proportional wealth. This point doesn’t depend on the shape of the possibilities frontier or on the way in which the actors bargain over which Pareto improvement they settle on. When x_i = 1, party i does exactly what it wants, so u_i = x_i = 1, but when 0 < x_i < 1, u_i > x_i if there are any gains from trade. So “on average”, the utility gains from moving from some x_i < 1 toward x_i = 1 are sublinear in the proportional wealth gains.
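The general point can be checked numerically for one particular case. The sketch below picks one frontier and one bargaining rule purely as assumptions for illustration: a quarter-circle Pareto frontier, u_EA² + u_EB² = 1, and the Nash bargaining solution, which maximizes the product of the two parties’ gains over the default point. Neither choice comes from the argument above, which is meant to hold for other frontiers and bargaining rules too. The function name is hypothetical.

```python
import math


def bargained_utility(x, grid_steps=2000):
    """EA's utility after Nash bargaining, given resource share x in [0, 1].

    Assumptions for illustration only: the Pareto frontier is the quarter
    circle u_EA**2 + u_EB**2 = 1, and the parties pick the frontier point
    maximizing the Nash product of gains over the default point (x, 1 - x).
    """
    tol = 1e-12
    best_u, best_product = x, -math.inf
    for step in range(grid_steps + 1):
        theta = (math.pi / 2) * step / grid_steps
        u_ea, u_eb = math.cos(theta), math.sin(theta)
        gain_ea, gain_eb = u_ea - x, u_eb - (1 - x)
        if gain_ea < -tol or gain_eb < -tol:
            continue  # not a Pareto improvement on the default point
        product = gain_ea * gain_eb
        if product > best_product:
            best_u, best_product = u_ea, product
    return best_u


if __name__ == "__main__":
    for x in (0.1, 0.3, 0.5, 0.7, 0.9, 1.0):
        print(f"x_EA = {x:.1f}: u_EA = {bargained_utility(x):.3f}")
```

In this example u_EA exceeds x_EA at every interior share and equals 1 at x_EA = 1, so the utility gained by moving from any interior share to full control is smaller than the proportional wealth gained, as the argument predicts.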