Many thanks to Jonah Goldberg for conversations which helped me think through the arguments in this essay. Thanks also to Bruce Tsai, Miranda Zhang, David Manheim, and Joseph Lemien for their feedback on an earlier draft.

Summary

- An influx of interest in EA makes accessibility really important right now
  - Lots of new people have recently been introduced to EA / will be introduced to EA soon
  - These people differ systematically from current EAs and are more likely to be “casual EAs”
  - I think we should try to recruit these people
- There are two problems that make it hard to recruit casual EAs
  - Problem 1: EA is (practically) inaccessible, especially for casual EAs
    - Doing direct work is difficult and risky for most people
    - Earning to give, at least as it’s commonly understood, is also difficult and risky
  - Problem 2: EA is becoming (perceptually) inaccessible as a focus on longtermism takes over
    - Longtermism is becoming the face of EA
    - This is bad because longtermism is weird and confusing for non-EAs; neartermist causes are a much better “on-ramp” to EA
- To help solve both of these problems, we should help casual EAs increase their impact in a way that’s an “easier lift” than current EA consensus advice

A few notes

On language: In this post I will use longtermism and existential risk pretty much interchangeably. Logically, of course, they are distinct: longtermism is a philosophical position that leads many people to focus on the cause area(s) of existential risk. However, in practice most longtermists seem to be highly (often exclusively) focused on existential risks. As a result, I believe that for many people — especially people new to EA or not very involved in EA, which is the group I’m focusing on here — these terms are essentially viewed as synonymous.

I will also consider AI risk to be a subsection of existential risk. I believe this to be the majority view among EAs, though not everyone thinks it is correct.

On the structure of this post: The two problems I outline below are separate. You may think only one of them is a problem, or that one is much more of a problem than the other. I’m writing about them together because I think they’re related, and because I think there are solutions (outlined at the end of this post) that would help address both of them.

On other work: This post was influenced by many other EA thinkers, and I have tried to link to their work throughout. I should also note that Luke Freeman wrote a post earlier this year which covers similar ground to this post, though my idea for this post developed independently of his work.

An influx of interest in EA makes accessibility really important right now

Lots of people are getting introduced to EA who weren’t before, and more people are going to be introduced to EA soon

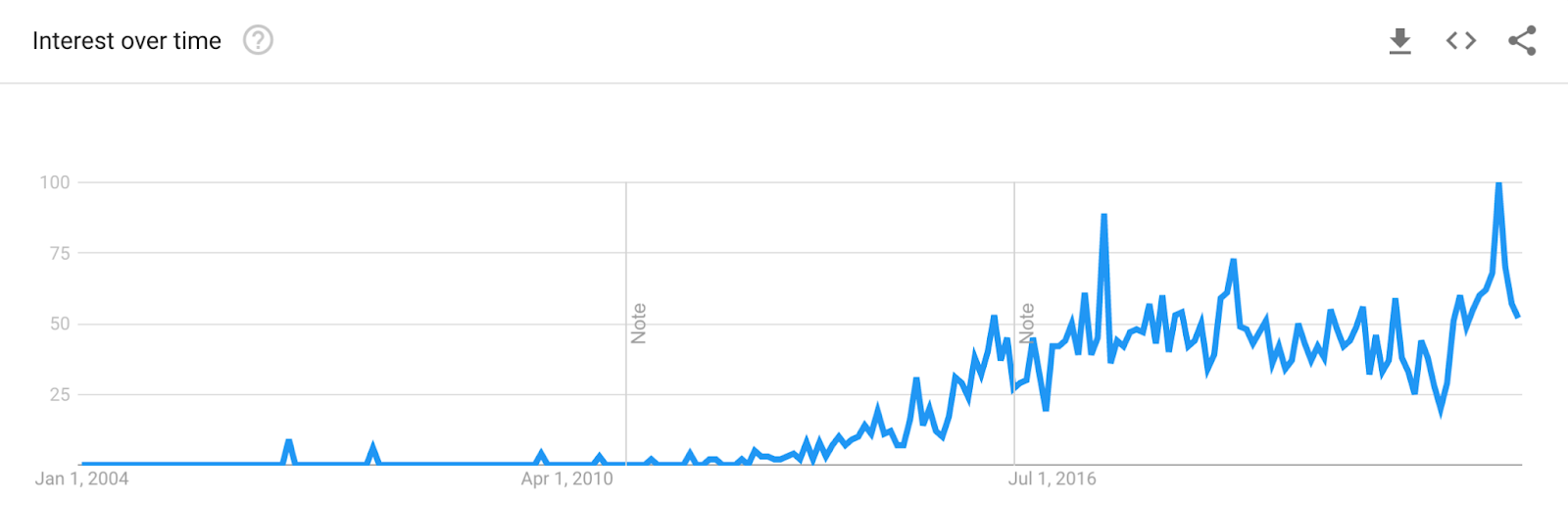

EA is becoming more prominent, as a Google Trends search for “effective altruism” shows pretty clearly.

EA is also making strides into the intellectual mainstream. The New York Times wrote about EA in a 2021 holiday giving guide. Vox’s Future Perfect (an EA-focused vertical in a major news outlet) started in 2018 and is bringing EA to the mainstream. Heck, even Andrew Yang is into EA!

I (and others) also think there will be a lot more people learning about EA soon, for numerous reasons.

- Sam Bankman-Fried and FTX have been all over the news recently, including in articles focused on EA.

- The number of EA local groups has grown hugely and continues to grow (from 2017 to 2019, there were 30 new EA groups founded per year).

- The huge influx of funding from FTX means that in the coming years more EA grants will be made, more EA orgs will come into existence, and presumably more people will thus learn about EA.

- What We Owe the Future is coming out soon, with an associated media push and (hopefully) lots of coverage of the book.

As a result of these factors, I expect that pretty soon EA will be well-known, at least within elite intellectual circles. This will create a step change in EA’s visibility, with an order of magnitude more people knowing about EA than did before. This increased visibility creates a large group of “prospective EAs” who might join our movement.

I believe that these prospective EAs’ first impressions of EA will have a big impact on whether or not they actually join/meaningfully engage with EA. This is because highly-educated people generally have a number of other interests and potential cause areas in which they could work, including many cause areas which are more in line with most people’s intuitions and the work of others in their social circles (e.g., local political advocacy). Without a strong pull toward EA, and with many pulls in other directions, one or two bad experiences with EA, or even hearing bad things about EA from others, might be enough to turn many of them off from engaging.

Today’s prospective EAs differ systematically from yesterday’s prospective EAs, and are more likely to be “casual EAs”

Back in the day, I think people learned about EA in two big ways: 1) they were highly EA-aligned, looking for people like them, and stumbled across EA, or 2) they were introduced to EA through social networks. Either way, these people were predisposed to EA alignment. After all, if you’re friends with a committed EA, you’re more likely than the average person to be weird/radical/open to new ideas/values-driven/morally expansive/willing to sacrifice a lot.

But now people are learning about EA from the New York Times, which (in my opinion) is a much poorer signal of EA alignment than being friends with an EA! Also, as more people are learning about EA, basic assumptions about population distributions would suggest that today’s paradigmatic prospective EA is closer to the mean on most traits than yesterday’s paradigmatic prospective EA.

To be clear, I think most people have some sense of moral commitment and want to do good in the world. But I also think that most people are less radical and less willing to sacrifice in service of their values than most EAs. I will refer to these people as “casual EAs”: people who are interested in EA and generally aligned with its values, but won’t follow those ideas as far as the median current EA does.

I also think that current and future prospective EAs are likely to be older than previous groups of prospective EAs. Right now, most EA outreach happens through college/university campus groups. As EA awareness-raising shifts towards major news outlets, a wider range of ages will be introduced to EA.

I think we should try to recruit casual EAs

There are reasons not to try to attract people who don’t seem values-aligned with EA, or who don't seem committed enough to their values to make the sacrifices that EA can often require.[1] But I also think there are a lot of reasons we should recruit these people.

- I suspect that the size of this population dwarfs the size of the current EA population. On the most basic level, just based on some normal assumptions about population distributions, any group of that size will have many people in it who are above average in intelligence, wisdom, creativity, communication skills, empathy, writing, math, organizational management, etc., and some people who are at the very tail end of the distributions. I want those people to bring their skills and talents to EA.

- Many of these people have skills and career capital that few current EAs do, expanding the types of impact that they — and thus the movement as a whole — can have.

- These people come from a huge range of fields and topic areas, many of which are very poorly represented in EA right now (e.g. sociology, law, consulting, art). This means they have skill sets, perspectives, and approaches that can inform and strengthen EA work. If epistemic humility matters to us, we should want to diversify our movement.

- Some casual EAs will eventually turn into die-hard EAs. A mid-career professional with two kids might feel like they can’t quit their stable job to become an AI alignment researcher or a wild animal welfare advocate. But eventually, that person may get over their fear and make the jump.

I think there are two problems that make EA increasingly inaccessible, especially for casual EAs. I will explore them in turn.

Problem 1: EA is (practically) inaccessible, especially for casual EAs

For many people, working on EA is either impossible or so difficult they won’t do it

There are a lot of reasons for this.

- It’s too hard to switch fields. Most people, especially those who are a bit older, have serious family and/or financial responsibilities (such as kids, a mortgage, aging parents, etc.). These commitments make it hard for them to follow the advice commonly given to students or very young professionals, such as to move across the country for a high-impact job, move into a higher-impact field even if it means starting at the entry level in your new field, accept pay cuts or serious financial uncertainty to move to a higher-impact job, work long hours to train for a new field or start a new project, and so on. Additionally, older professionals have likely built up career capital in their existing fields, which means their comparative advantage lies there and switching to another field is less attractive, even if the second field is higher-impact.

- People don’t have the needed skills. Some people don’t have the right skills to do direct work in the small number of highest-impact jobs and fields. These people may not be able to get the skills needed (e.g., if they don’t have some necessary aptitude), or the other life commitments described above might make it too difficult for them to get those skills.

- There aren’t enough jobs. As many people have noted before, getting a job in EA is hard. This is likely truer in some fields/cause areas than others, but seems to be widespread across at least most of EA.

- The current influx of funding to EA may change this somewhat, but I’m skeptical that it will immediately translate into full-time, stable jobs that casual EAs are willing to take. There just aren’t that many established EA organizations with the capacity to absorb huge numbers of new staff, and it takes time to start and grow such organizations. Right now most EA organizations are young, and joining a young organization feels risky — especially for someone coming from outside EA who’s used to working for long-established organizations. (And applying for EA funding in some sort of grant program is even riskier and further outside of what non-EAs are used to.)

All of these constraints also apply to earning to give, at least as it’s commonly understood.[2] 80,000 Hours lists “tech startup founder” and “quantitative trading” as the best earning-to-give paths, with “software engineering,” “startup early employee,” “data science,” and “management consulting” in its second tier. These career paths are all quite difficult! 80,000 Hours does list other careers which may be easier to enter, but the paradigmatic stereotype of an earning-to-give career is inaccessible to many people.

If direct work and earning to give don’t feel possible, but the community portrays those things as the only ways to make an impact/consider oneself a true EA, then a lot of smart, talented, values-aligned people will feel locked out of EA. Indeed, there’s evidence that this is already happening to some extent.

Today and tomorrow’s prospective EAs are especially likely to find EA inaccessible

Members of the upcoming cohort of prospective EAs, described above, are more likely to be older and thus further along in their careers. This means they'll have more preexisting family/financial commitments and more career capital in their existing fields, both of which make it costlier for them to transition to direct EA work or high-earning careers.

Additionally, casual EAs’ commitment to EA likely isn’t enough to outweigh the risk of upending their lives to follow an EA path, again reducing the likelihood that they transition to EA jobs.

Problem 2: EA is becoming (perceptually) inaccessible, and therefore less diverse, as a focus on longtermism and existential risk takes over

Right now, EA is (perceptually) trending very hard toward longtermism and existential risk

I think this is close to common knowledge, but in the interest of citing my sources, below is a list of some facts which, taken together, illustrate the increasing prominence of longtermism and x-risks within EA.

- A chronological list of some of the most popular/well-known EA books goes: Animal Liberation (1975), Global Catastrophic Risks (2008), The Life You Can Save (2009), Superintelligence (2014), Doing Good Better (2015), The Most Good You Can Do (2015), 80,000 Hours (2016), The Precipice (2020), Moral Uncertainty (2020; this book was geared toward a more philosophical audience, as evidenced by the fact that it doesn’t show up on most lists of EA books), and What We Owe the Future (forthcoming later this year). Most EA books prior to 2016 were neartermist (mostly focused on global poverty and animal welfare). Meanwhile, the two recent EA books directed toward laypeople are longtermist.

- Many of EA’s founders have shifted to embrace longtermism. Holden Karnofsky co-founded GiveWell (neartermist, focused on global health and development) and then moved to OpenPhil (focus includes longtermism). Toby Ord and Will MacAskill went from co-founding Giving What We Can (which, at the time, suggested that people donate to neartermist causes) to writing The Precipice and What We Owe the Future, respectively. These people’s views on longtermism vs. neartermism may be more complex than these facts suggest, but at a top-line level, their focus seems to have shifted.

- Some EA organizations and funders have also moved toward longtermism. Giving What We Can initially suggested donating to neartermist causes, primarily in global health and development. It has since expanded its cause areas, including an added focus on longtermism. And of course, a bunch of EA organizations focused on longtermism now exist, when they didn't before.

- 80,000 Hours, which is often pitched as a first stop for people looking to get into EA, focuses heavily on longtermism. On its list of pressing global issues, the highest-priority areas are AI, global catastrophic biological risks (GCBRs), global priorities research, and building EA. The second-highest priority areas are nuclear security, extreme risks of climate change, and improving institutional decision-making. Factory farming and global health are listed as “Other important global issues we’ve looked into” with the note that “we’d love to see more people working on these issues, but given our general worldview they seem less pressing than our priority problems.”

- There are more forum posts about existential risk than about neartermist causes. Specifically, there are 624 forum posts in the Existential risk tag, compared to 508 in the Global health and development and Animal welfare tags combined. (There are 351 in Longtermism, though there may be overlap between Longtermism and Existential risk.) Note that these are all-time totals, and don’t reflect changes over time.

- Looking at recent forum data makes the shift to longtermism clearer. In the past year,[3] there have been 307 posts in the Existential risk tag and 191 posts in the Global health and development tag. To put it another way: the 215th most recent post in the Global health and development tag was published in November 2020, while the 215th most recent post in the Existential risk tag was published in September 2021.[4]

- The EA Surveys from 2019 and 2020 found that while global poverty remains the most popular single cause area among respondents, the popularity of AI risk and x-risks has grown over time. The 2019 survey found that when asked to choose a top cause from a shortlist (Global Poverty, Long Term Future, Animal Welfare/Rights, Meta, Other), the most popular response was Long Term Future. The 2020 survey found that support for global poverty has declined over time.

- Anecdotally, it seems like people are noticing a general shift toward longtermism and x-risk, especially AI. In fact, the perception that EA is only focused on longtermism/x-risk is strong enough that people have written forum posts responding to it.

- From what I've heard (admittedly, a small sample size), it seems like x-risk and longtermism are an increasing focus of many EA groups, especially campus groups.

Actual EA funding is not primarily distributed toward longtermism. But someone new to EA or not deeply involved in EA won’t know that. For those people, a glance at the forum or recent EA books, or a vibe check of the momentum within EA, will give the strong signal that the movement is focused on existential risks and longtermism (especially AI).

Longtermism is a bad “on-ramp” to EA

I support longtermism, and I think it’s good that more people are paying attention to existential risks. But I think it’s bad for longtermism to become the (perceptually) dominant face of EA, especially for those who are new to the movement, because I think it’s poorly suited to bring people in. This is because – to put it bluntly – longtermism and x-risks and AI are weird!

- They’re much further afield from the average person’s understanding of charity, and challenge more assumptions. More formally, let’s say we're trying to convince someone to switch from a non-EA charitable giving strategy (which is likely to focus on local/regional/national charities) to EA giving. To get them to give to global health and development, we have to convince them to expand their geographic scope from local to global. To get them to give to longtermist causes, we have to convince them to expand their geographic scope from local to global and to expand their time scope from present to future.

- They’re more confusing and harder to understand than neartermist causes. AI seems like ridiculous science fiction to most people. (Though COVID-19 has made the risks of pandemics much clearer, so that’s an exception.)

- Others have pointed out some broader trends in EA culture which make the movement less accessible than we’d like, including an aversion to criticism/reliance on received wisdom and a sense of EA as a “cult.” I suspect (and one of these posts suggests) that the weirdness of x-risks compared to other cause areas makes these cultural trends more concerning. For example, AI safety is new and complicated, making it more likely that people either rely on received wisdom when first introduced to the topic (potentially leading to disillusionment later) or find the focus on AI risks to be cult-like (potentially causing them to never get involved with EA in the first place). Fixing those cultural issues may be necessary to make EA accessible, but since others have written about them much more thoughtfully than I could, I am focusing elsewhere in this post.

- They’re less popular — while there’s plenty of room to grow in neartermist giving, malaria bed nets are certainly more mainstream than AI alignment.

Of course, some EAs may wonder why this matters. If longtermism is right, it might be that we only want longtermists to join us. I think there are a few reasons why EA as a whole, including longtermists, should want our movement to be accessible.

First, not all EAs are longtermists. Neartermists obviously believe they are doing great work, and want people to continue to engage with their work rather than being scared off by a perception of EA as only longtermist.[5]

But I think that even longtermists should want EA to be accessible. My experience, and the experience of many others, is that we joined EA because it seemed like the best way to do good in the neartermist cause areas we were already interested in,[6] learned about longtermism through our involvement with EA, and eventually shifted our focus to include longtermism. Essentially, neartermist causes served as an on-ramp to EA (and to longtermism). Getting rid of that on-ramp seems like a bad idea. This seems especially bad right now, when we anticipate a large surge of prospective and casual EAs who we’d like to recruit.

Additionally, if the dominance of longtermism and existential risk continues, eventually those areas may totally take over EA — not just perceptually, but also in terms of work being done, ideas being generated, etc. – or the movement may formally or informally split into neartermists and longtermists. I think either of these possibilities would be very bad.

- One of my favorite things about EA is how this movement tries to stay open to new ideas and criticism. “Effective altruism is a question.” But listening to criticism and staying open to new ideas is only possible if there’s a pipeline of people to provide that criticism and those new ideas. If non-longtermists stop joining the movement, there won’t be (as much) criticism or new ideas around to listen to.

- Additionally, people who are focused on x-risks and people who are focused on neartermist, highly certain randomista interventions seem likely to have very different priors, approaches to risk, skill sets, connections, and so on. I think it’s good to have a diversity of opinions and approaches within EA, and that a lot of learning can happen when people engage with those who are very different from them. I don’t want EA to become an echo chamber.

To help solve both of these problems, EA should help casual EAs increase their impact in a way that’s an “easier lift” than current EA consensus advice

A note: I have much less confidence in my arguments in this section (especially “Don’t make people move into new fields”) than I do about my arguments in the earlier sections of this post.

Don’t make people learn new skills

One way to make EA more accessible is to emphasize the ways to do EA work (and even switch into EA fields) without having to learn new skills. EA organizations are growing and maturing, and as this happens, the need for people with all sorts of “traditional” skills (operations, IT, recruiting, UI/UX, legal, etc.) will only grow. EA can do a better job of showing mid-career professionals that it’s possible to contribute to EA using the skills they already have (though this can be tricky to communicate, as a recent post noted).

Don’t make people move into new fields

Obviously, we don’t want to communicate that someone can do EA work from any field – some fields are extremely low-impact and others are actively harmful. At the same time, there’s a spectrum of how broadly EA focuses, and I suggest we consider widening the aperture a bit by helping casual EAs increase their positive impact in the fields they’re already in (or in their spare time).

To help them do this, I’m imagining a set of workshops and/or guides on topics like the ITN framework, how to use that framework to estimate the impact of a job or organization, how to compare probabilities, modes of thought that are useful when looking for new opportunities to be high-impact, ideas on how to convince others in an organization to shift to a higher-impact project or approach, and examples or case studies of how this could look in different fields.
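As a rough illustration of what a workshop on the ITN framework might cover, here is a minimal Python sketch. It assumes the multiplicative formulation described by 80,000 Hours, in which marginal impact is the product of importance (good done per percentage of the problem solved), tractability (percentage of the problem solved per percentage increase in resources), and neglectedness (percentage increase in resources per extra unit of resources), so the intermediate units cancel. The `Option` class, the `rank` function, and all of the numbers are hypothetical, chosen only to show how such a comparison works; real estimates would be far more uncertain.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """A hypothetical job, project, or organization being compared."""
    name: str
    importance: float     # good done per 1% of the problem solved
    tractability: float   # % of the problem solved per 1% increase in resources
    neglectedness: float  # % increase in resources per extra unit of resources

    def marginal_impact(self) -> float:
        # The intermediate units cancel, leaving good done per extra unit of resources.
        return self.importance * self.tractability * self.neglectedness


def rank(options: list[Option]) -> list[Option]:
    """Sort options from highest to lowest estimated marginal impact."""
    return sorted(options, key=lambda o: o.marginal_impact(), reverse=True)


# Illustrative numbers only; these are not real estimates for any cause area.
a = Option("Project A", importance=100.0, tractability=0.5, neglectedness=2.0)
b = Option("Project B", importance=1000.0, tractability=0.25, neglectedness=0.25)

for option in rank([a, b]):
    print(f"{option.name}: {option.marginal_impact()}")
```

In this toy example, the larger-scale Project B ranks below Project A because it is less tractable and less neglected, which is the kind of trade-off such a workshop could walk through. Because the factors multiply, an error in any one of them propagates fully to the result, so in practice it may be wiser to work with rough ranges than with point estimates.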

I see a few concerns with this idea:

- Designing and running something like this might trade off with more important work.

- If this was put together quickly enough that it wouldn’t meaningfully trade off with more important work, it might not be good enough to have an impact (or might even be harmful).

- Determining how any given EA professional can make an impact is difficult. EA professionals in the private sector are in many different fields and at many different places in their careers. They have a wide range of skills, including highly-specialized skills, as well as domain-specific knowledge across many domains and niche subdomains. The companies they work at range greatly in size and type. Unlike for many public-sector employees, the paths to promotion and advancement in their fields are often unclear. These factors make it challenging to identify a few paths to impact which will work for most people.

- If we overemphasize that people can have an impact without switching fields completely, we may cannibalize those who would otherwise shift to higher-impact causes.

As a result, I’m not at all confident that this idea would be net-positive or worth the cost, and I’m suggesting it primarily to spark discussion. If I’m right that there’s a very large group of people who are interested in increasing their impact but unwilling to make huge lifestyle changes, helping them shift towards even-slightly-more impactful work would have a large overall impact, so I think this merits a conversation.

Emphasize donations more prominently

As I mentioned above, I think the popular conception of earning to give focuses on high-earning careers. To make EA more accessible, the movement can also be clearer that giving 10% (or more) of one’s income, regardless of what that income is, does “count” as a great contribution to EA and enough to make someone a member of the community.

Support EA organizations working in this area

Two new EA groups (High Impact Professionals and EA Pathfinder) are working to help mid-career professionals increase their impact, in the context of the accessibility barriers that I laid out in this post. Presumably their ideas are much better than mine! Therefore, instead of or in addition to taking up any of the solutions I suggest above, another possibility is to simply put your time, money, and/or effort into helping these groups.

[1] This post about the importance of signaling frugality in a time of immense resources is tangentially related and very thoughtful.

[2] Some people see earning to give as simply giving a large portion of what you earn, regardless of career path, and this is how it is technically defined by 80,000 Hours. However, my anecdata suggests that most people see earning to give as only applicable in high-income careers. The 80,000 Hours page about earning to give focuses almost entirely on high-earning careers in a way that reinforces this impression.

[3] Everything listed as “1y” or more recent on the forum as of July 15, 2022.

[4] I benchmarked to the 215th post in each tag because that’s what the forum gives you when you click “Load More” at the bottom of the list of posts.

[5] I recognize that this won’t convince an extremely dedicated longtermist, but convincing extremely dedicated longtermists to care about neartermism is outside the scope of this post (and not something I necessarily want to do).

[6] Primarily global health and development, though I think climate change could fall into this category if EA chose to approach it that way.

This is not directly responding to your central point about reducing accessibility, but one comment is that I think it could be unhelpful to set up the tension as longtermism vs. neartermism.

I think this is true of AI (though even it has become way more widely accepted among our target audience), but it's untrue of pandemic prevention, climate change, nuclear war, great power conflict and improving decision-making (all the other ones).

Climate change is the most popular cause among young people, so I'd say it's actually a more intuitive starting point than global health.

Likewise, some people find neartermist causes like factory farming (and especially wild animal suffering) very unintuitive. (And it's not obvious that neartermists shouldn't also work on AI safety.)

I think it would be clearer to talk about highlighting intuitive vs. unintuitive causes in intro materials rather than neartermism vs. longtermism.

I agree there's probably been a decline in accessibility due to a greater focus on AI (rather than longtermism itself, which could be presented in terms of intuitive causes).

A related issue is existential risk vs. longtermism. The idea that we want to prevent massive disasters is pretty intuitive to people, and The Precipice had a very positive reception in the press. By contrast, I agree that a more philosophical longtermist approach is more of a leap.

My second comment is that I'd be keen to see more grappling with some of the reasons in favour of highlighting weirder causes more and earlier.

For instance, I agree it's really important for EA to attract people who are very open minded and curious, to keep EA alive as a question. And one way to do that is to broadcast ideas that aren't widely accepted.

I also think it's really important for EA to be intellectually honest, and so if many (most?) of the leaders think AI alignment is the top issue, we should be upfront about that.

Similarly, if we think that some causes have ~100x the impact of others, there seem to be big costs to not making that very obvious (and instead focusing on how you can do more good within your existing cause).

I agree the 'slow onramp' strategy could easily turn out better, but it seems like there are strong arguments on both sides, and it would be useful to see more attempts to weigh them, ideally with some rough numbers.

To an outsider who might be suspicious that EA or EA-adjacent spaces seem cult-y, or to an insider who might think EAs are deferring too much, how would EA as a movement do the above and successfully navigate between:

1) an outcome where the goals of maintaining/improving epistemic quality for the EA movement, and keeping EA as a question are attained, and

2) an outcome where EA ends up self-selecting for those who are most likely to defer and embrace "ideas that aren't widely accepted", and doesn't achieve the above goal?

The assumption here is that being perceived as a cult or being a high-deferral community would be a bad outcome, though I guess not everyone would necessarily agree with this.

(Caveat: very recently went down the Leverage rabbit hole, so this is on the front of my mind and might be more sensitive to this than usual.)

Agreed, though RE: "AI alignment is the top issue" I think it's important to distinguish between whether they think:

Do you have a sense of where the consensus falls for those you consider EA leaders?

(Commenting in personal capacity etc)

This was such a great articulation of such a core tension to effective altruism community building.

A key part of this tension comes from the fact that most ideas, even good ideas, will sound like bad ideas the first time they are aired. Ideas from extremely intelligent people and ideas that have potential to be iterated into something much stronger do not come into existence fully-formed.

Leaving more room for curious and open-minded people to put forward their butterfly ideas without being shamed/made to feel unintelligent means having room for bad ideas with poor justification. Not leaving room for unintelligent-sounding ideas with poor justification selects for people who are most willing to defer. Having room to delve into tangents off the beaten track of what has already been fleshed out carries the risk that those tangents turn out to be dead ends (and most will be), and no one wants to stick their neck out to explore an idea that is almost certainly going nowhere (but should be explored anyway, just in case).

Leaving room for ideas that don't yet sound intelligent is hard to do while still keeping the conversation nuanced (but I think not doing it is even worse).

Also, I think conversations by the original authors of a lot of the more fleshed-out ideas are much more nuanced than the messages that get spread.

E.g. on 4: 80k has a long list of potential highest-priority cause areas that are worth exploring for longtermists, and Holden, in his 80k podcast episode and the forum post he wrote, says that most people probably shouldn't go directly into AI (and instead should build aptitudes).

Nuanced ideas are harder to spread but also people feeling like they don't have permission in community spaces (in local groups or on the forum) to say under-developed things means it is much less likely for the off-the-beaten-track stuff that has been mentioned but not fleshed out to come up in conversation (or to get developed further).

>If you donate 10% of your income to an EA organization, you are an EA. No matter how much you make. No exceptions.

This should be (and I think is?) our current message.

I always interpreted the 10% as a goal, not a requirement for EA. That's a pretty high portion for a lot of people. I worry that making that sound like a cutoff makes EA seem even more inaccessible.

The way I had interpreted the community message was more like "an EA is someone that thinks about where their giving would be most effective or spends time working on the world's most pressing problems."

I agree that it should be! I'm just not sure it is, at least not for everyone.

Yeah, I recently ran into the problem of longtermism and similarly weird beliefs in EA being bad on-ramps to EA. I moderate a Discord server where we just had some drama involving a heated debate between users sympathetic to EA and users critical of it. Someone pointed out to me that many users on the server have probably been turned off from EA as a whole by exposure to relatively weird beliefs within EA, like longtermism and wild animal welfare, both of which I'm sympathetic to and have expressed on the server. Although I want to be open with others about my beliefs, it seems to me like they've been "plunged in at the deep end," rather than being allowed to get their feet wet with the likes of GiveWell.

Also, when talking to coworkers about EA, I focus on the global health and wellbeing side because it's more data-driven and less weird than longtermism, and I try to refer to EA concepts like cost-effectiveness rather than EA itself.

Hi Ann,

Some quibbles with your book list. Animal Liberation came out in 1975, not 2001.

https://www.goodreads.com/book/show/29380.Animal_Liberation

You overlooked The Scout Mindset, which came out in 2021.

https://www.goodreads.com/book/show/42041926-the-scout-mindset

Also,

>Essentially, neartermist causes served as an on-ramp to EA (and to longtermism). Getting rid of that on-ramp seems like a bad idea.

Do you worry at all about a bait-and-switch experience that new people might have?

I think we could mitigate this by promoting global health & wellbeing and longtermism as equal pillars of EA, depending on the audience.

I would hope that people wouldn't feel this way. I think neartermism is a great on-ramp to EA, but I don't think it has to be an on-ramp to longtermism. That is, if someone joins EA out of an interest in neartermism, learns about longtermism but isn't persuaded, and continues to work on EA-aligned neartermist stuff, I think that would be a great outcome.

And thank you for the fact-checking on the books!

I think this is a great post.

One reason I think it would be cool to see EA become more politically active is that political organizing is a great example of a low-commitment way for lots of people to enact change together. It kind of feels ridiculous that if there is an unsolved problem with the world, the only way I can personally contribute is to completely change careers to work on solving it full time, while most people are still barely aware it exists.

I think the mechanism of "try to build broad consensus that a problem needs to get solved, then direct collective resources towards solving it" is underrated in EA at current margins. It probably wasn't underrated before EA had billionaire-level funding, but as EA comes to have about as much money as you can get from a small number of private actors, and as it starts to enter the mainstream, I think it's worth taking the prospect of mass mobilization more seriously.

This doesn't even necessarily have to look like getting a policy agenda enacted. I think of climate change as a problem that is being addressed by mass mobilization, but in the US, this mass mobilization has mostly not come in the form of government policy (at least not national policy). It has come from a widespread understanding that climate change is a problem that needs to get solved and is worth devoting resources to, which has led to lots of investment in green technology.

Possible thing to consider re: the public face of the movement: it seems like movements often benefit from having one "normal", respectable group and one "extreme" group that makes the normal group look more reasonable, cf. civil rights movements and veganism (PETA vs. inoffensive vegan celebrities).