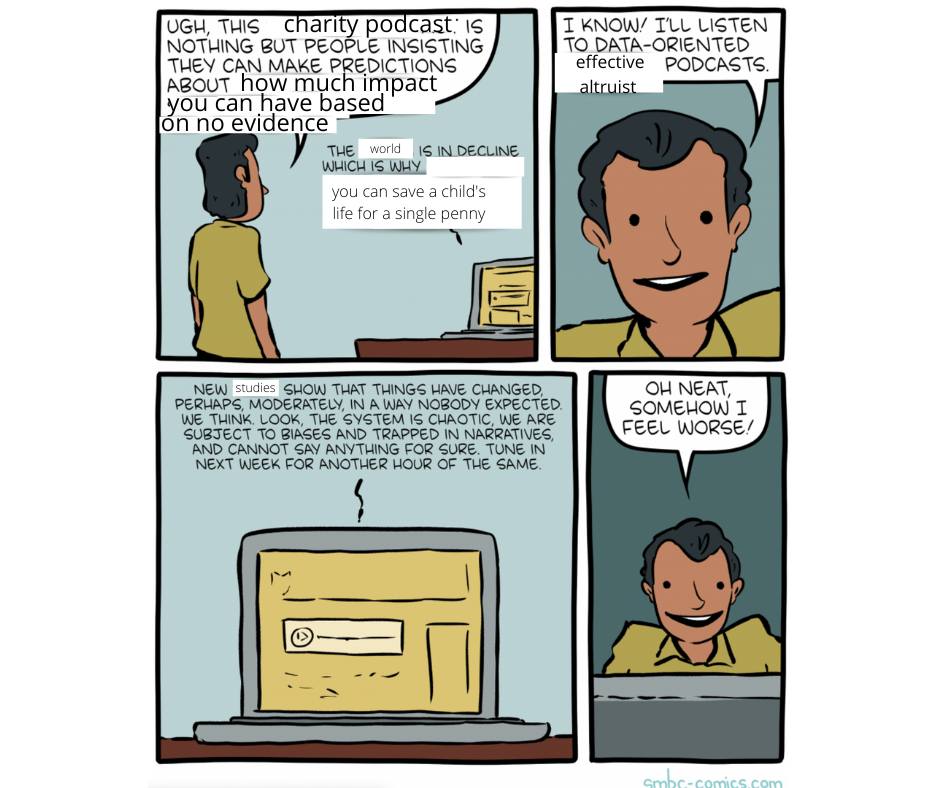

Epistemic status: a rambling, sometimes sentimental, sometimes ranting, sometimes informative, sometimes aesthetic autobiographical reflection on ten years trying to do the most good.

Reminder that you can listen to this post on your podcast player within 24 hours of it reaching 25 upvotes using the Nonlinear Library.

Ten years ago I first heard about effective altruism, and it was one of the best things that ever happened to me. I was immediately sold. In fact, at the time I had been trying to start a movement of “Scientific World-Changers”, so you can imagine my excitement when I discovered that there was already a movement and it had a much better acronym.

Since then it’s been a crazy decade. I’ve started four EA charities across all the major cause areas: global poverty, animal welfare, AI x-risk, and meta.

I’ve been so frugal that me and my partner only had one (always damp) towel between the two of us and thought that getting a bed frame for our mattress would cost too much dead baby currency. I’ve updated to being so time-frugal that I’m working on launching a fund to remove financial barriers to productivity for longtermists, such as providing therapy or dishwashers.

I’ve been on fire, working long hours trying to save the world. I’ve been burnt out, working long hours trying to set up systems so I don’t overdo it again.

And through it all, the EA movement, despite its many flaws, has been such a huge source of meaning, growth, and community in my life.

Which is why I wanted to do something on this special anniversary. I asked on Facebook and somebody suggested the most EA way to celebrate ever: writing a post on the EA Forum about my biggest lessons learned. Hence this post.

So, what have I learned over ten years of obsessively trying to answer, and act on, the question: how do I do the most good?

There are a lot of contenders. Here are a few that first came to mind:

- Fundraising is one of the highest leverage meta-skills you can develop. It makes it so that instead of trying to find a job that does the most good, you can just think of what does the most good, then fundraise for that particular thing.

- Hiring is perhaps the second highest leverage meta-skill. Imagine you could have any skill in the world. This is what hiring allows you to do. You don’t have to learn how to code or develop decades of experience in a particular political arena. You can just hire people who already have those skills or experiences, and in fact, are probably better than you’d ever have gotten.

- It’s OK to care about your happiness. And not just because it’ll help you have more impact (which it will). It’s OK to care because you care intrinsically about your happiness. H/t to Spencer’s valuism for convincing me of this in the end. (Great resources on mental health and EA here and here)

- The meta the bettah. While it’s important to avoid meta traps and not have insanely long theories of change, generally speaking, meta is where you can get the truly great hits. It’s where you can set up passive impact streams, apply small multipliers to large numbers, and all other sources of juicy utility. It also tends to leave you with skills that cross-apply to a wide range of cause areas, giving you sweet sweet option value.

Which brings me to the biggest lesson I’ve learned over all this time:

The biggest lesson I learned from my altruistic journey so far: widen your f***ing confidence intervals

You know nothing about doing good in the world.

Seriously.

To be an EA is to find out, again and again and again, that what you thought was the best thing to do was wrong. You think you know what’s highest impact and you’re almost certainly seriously mistaken.

Every single time I have been so damn certain that this was the time we’d finally found the thing that totally definitely helped in a large way.

Every single time I have gone on to be thwarted by:

- Crucial considerations

- Evidence

- Social influence and psychological barriers

Crucial considerations

God, I hate crucial considerations sometimes!

There’s nothing quite like discovering this argument that totally destroys the expected value of the last two years of work. It’s even worse when you find out that there’s a good chance you might have made things worse!

There’s flow-through effects, speciesism, population ethics, meta-ethics, and don’t get me started about counterfactuals.

Damn counterfactuals to all hell.

They complicate everything and yet you can’t ignore them!

In a way, this shouldn’t be surprising. This is probably one of the most complicated problems to dedicate your life to. At least with rocket science you find out if you’ve fired the rocket or not. With trying to live ethically, you never know for sure if you did it right. Even if you get all of the factual elements correct and execute flawlessly, you could still be pursuing the wrong goals since ethics is a deeply unsolved field.

Evidence

And yet, in spite of the fact that your endeavor is founded upon a fundamentally unsolved problem, there’s still plenty of scope for you to get the facts wrong. I spent eight of my ten EA years in neartermist causes where we have these pesky feedback loops that ruin everything all the time.

Great for learning!

Mostly learning that you know nothing, but still.

It was quite the experience. There’s nothing like immersing yourself in the scientific literature of a field to make you realize how little we understand about anything at all, and global health studies are no exception. I can’t remember the exact details, but I remember Eva Vivalt doing some amazing meta-science in the field to see how often studies replicated. The results were dismal. Even if you ran the exact same study in the same country but a year later, odds were high you’d get a different result. Read her paper or listen to her podcast episode if you want to experience some epistemic angst.

The scientific method shows us that even when we have all the best arguments we can make from our armchairs, when the rubber hits the road and we can test our hypotheses, we as humans are wrong most of the time. Even if we got all the crucial considerations correct and had our ethics in order, we’d probably find out that something hadn’t worked.

And we don’t even know why.

Science shows us that the world is complicated and we are just monkeys.

Monkeys in shoes. Monkeys who’ve gone to the moon. But monkeys nonetheless.

Social influence and psychological barriers

Another side effect of being a monkey is that other monkeys have an undue influence on us. It’s very easy to think that we’ve come to a conclusion because of rationally considering all the arguments.

And maybe you did indeed come to those conclusions via some very good reasoning.

But it could also be that you haven’t considered some other arguments because if you did then some of your monkey friends would give you funny looks. Or maybe you wouldn’t hang out with them much anymore, because if you really took that argument seriously, you wouldn’t have overlapping interests anymore.

The most common reason, though, that being a social monkey can lead to missing ideas is that one of the best ways to change your mind is to talk to a monkey who disagrees with you. If you read a book that challenges your beliefs, it’s easy to think “Ah, but what about Counterpoint B! This is why the whole idea is wrong”, then proceed to read something by a more reasonable author.

It could be, though, that they covered Counterpoint B in chapter twelve. Of course, it’s not really possible to know that without reading the whole thing, which is not a reasonable strategy. There are too many ideas in the world to give them all your full attention.

This is where talking to a monkey with differing viewpoints can help. If you’re discussing the idea with them and you say Counterpoint B, they can immediately let you know about Counter-counterpoint B. They can force you to look at it, even if your monkey-brain really really doesn’t want to look at it. Cause goddammit, you’ve changed your mind a million times already. Can’t you just be left in peace with your current comfortable views?

This is why so many people opt to only hang out with monkeys who they agree with, or to not “stir the pot” and bring up those pesky disagreements.

This is all well and good if you’re trying to lead a simple happy life. However, if you’re trying to lead a happy and impactful life, you’ll want to maintain friendships with people of diverse viewpoints. You’ll want to purposefully talk with people who disagree with you. Talk to EAs from different cause areas. Talk to people who aren’t EAs at all!

Do not create a sheltered group of friends who all agree. Instead, debate ideas endlessly. Become good at disagreeing, such that it doesn’t feel like a debate, but a joyous exploration of ideas amongst fellow idea-lovers.

Because, if there’s anything that I have most learned in all my years of EA, it’s that we’re almost certainly wrong about what is best. We’re wrong about what is good. We’re wrong about how to go about getting what is good.

And that’s the best part about EA. It’s not an ideology but a question. It’s not a sprint, and it’s not a marathon - it’s a maze. It’s a journey where I don’t quite know the destination, but I do know that it’s the most meaningful one I could ever pursue, and I’m excited to see where the next ten years take me.

I really enjoyed this. Deep truths that address some of my current overconfident tendencies and insecurities while being entertaining! Thank you. :) The post could've been even more awesome if you linked to the other insights (e.g., the post on hiring).

Thanks!

Good idea about linking to the hiring post. I wrote that after this one, but I've gone back and added it. Thanks for the suggestion!

Hi Kat, thanks so much for your post, that's a lot of food for thought! Do you have any examples that you're thinking of when writing about crucial considerations, evidence, social/psychological factors? I'd love to hear more about the specific cases where these were so important. :)

Hi Kat, Thank You!

The biggest lesson I learned from my altruistic journey so far: widen your f***ing confidence intervals

From my 20+ year career as an investor, it is my experience that your observation on confidence intervals is one of the most important lessons we can learn (and then try to implement, which is extremely hard for most people, but is beneficial to all who are able to train themselves to do it).

Hi Kat, I've read some of your stuff and I love your thoughts, your attitude, your vibe. I just wanted to respond to this lovely post...your very open and human willingness to constructively criticize or self-audit or whatever you want to call it, is amazing...I see in it a particular quality I'd like to comment on because besides yourself, I think it might be common to the kinds of people drawn to EA...as you mention you had wanted to start "Scientific World Changers" then discovered a different way of saying a similar thing "Effective Altruism"...so you like many in EA have a scientific bent. Which is wonderful of course. But I don't have that. I've spent my whole life in Art and Social Activism and Religion (now I'm not religious anymore but I did it as a profession and spent 30 years going at it hard)...anyways being a person of a very different "bent" than you and a lot of EA'ers I found your beautifully written words in this post very interesting in this way; You seem to have this science based expectation that things should go as scientific laws dictate...if you input correct stuff and the theory is correct then results should always come out the same every time - scientific method experienced! Wonderful...and then so insightfully you explain in your experience, and to great frustration this often doesn't work...you share the "epistemic angst" producing work of Eva Vivalt which coincides with your own hard earned experience...amazing life experience you've had these ten years, and I feel compassion reading this account of it...and so this expectation of some kind of scientifically consistent results contrasted with real world leaves you with this hard earned wisdom that "you know nothing of doing good in the world" - yet - just as in your example that we are monkeys and even though we are monkeys who've gone to the moon (ie. the science worked) we are still monkeys, Kat has learned she doesn't know anything about doing good, yet Kat has started a shitload of charities that are doing good (ie. the Kat good doing worked)...and so here's my thought, or perspective to offer you...nothing at all counter to your amazing post, but simply to say, there's a whole world of us humans who don't have "scientific expectations" as I imagine you and many EA'ers have...and as a result we kind of just do things and just kind of accept the results and don't worry too much because we just thought we'd do our best and didn't have particularly specific expectations of how it would work out. And some of us are like Kats who've started a shit ton of great stuff and it mostly worked out like your stuff. Now I know many would react that yeah that's why all the non-scientific people get such shit results, and we scientific world changers are trying to be more effective in getting our shit done! Yes I love you all and thank you all and if it weren't for you I wouldn't be typing on this computer because there's no f'ing way my types would have ever invented a computer. But we did invent meditation, and songs, and poetry and some other cool stuff...so just to say, there's another way to approach life which is not so scientific and it has some very good results...and that we both need each other, our two sides, and to find balance we could gain a lot from the other sides perspective. As an artist/spirituality/social thinker person I'm deeply enjoying wading in the EA scientific way of life. I'm learning so much every day. I do worry though that you guys are going to freak yourselves out on too much doom of unaligned AI causing us to ruin the millions of longterm future humans who will never get to live because we f'ed up AI alignment...it's like imagining our screw-up holocausted all future humans...that's a heavy load to carry...and thus my comment. Thanks.

Oh gods, please have at least 2 towels, ideally 2-3 towels a piece (1 body, 1 hands after washing post-toilet, 1 face). Damp towels are a breeding ground for pathogens.

This is the best thing I've read on this Forum!

Aww. Thanks for the kind words!