Part I. Superintelligence and Security: Contingencies in the Securitisation of AGI

- 1. Superintelligence may not be the defining feature of military capability…at least for a while

- 2. Superintelligence does not need to be a matter of national security in its entirety

Part II. Power and Polarity: The Limits of US Hegemony

- 3. US hegemony does not guarantee international security

- 4. Winning the arms race does not guarantee hegemony (and we may be screwed if it does)

- 5. A unipolar world order may be unstable in the age of AI

- 6. Hegemonic stability through AI domination fattens the heavy tail of catastrophic risks

Part III. Lessons and Learnings: Takeaways for the Governance of Emerging Technologies

- 7. Export controls are not mutually exclusive from engaging in collaborative governance

- 8. Norms can be powerful and we should shape them accordingly

- 9. We should be prioritising the mitigating of risks through scope-sensitive policies

- 10. We need alternatives to AGI realism

TL;DRs

1. Superintelligence may not be the defining feature of military capability…at least for a while

The assumption that superintelligent AI equals military dominance ignores the reality that most conflicts are limited wars where technological superiority isn't decisive. History shows us that victory often hinges more on political will and resource commitment than technological edge, and optimising AI development for hypothetical total war scenarios could be dangerous and counterproductive.

2. Superintelligence does not need to be a matter of national security in its entirety

The idea that the securitisation of superintelligence (treating it as a national security threat) is inevitable and fixed in nature ignores how security threats are socially constructed and downplays the opportunities we have to shape the securitisation of superintelligence towards safety measures. We need more nuanced frames around AI security, distinguishing between different types of capabilities and development paths, rather than treating all AI progress as an equal security threat. Such oversecuritisation risks becoming a self-fulfilling prophecy.

3. US hegemony does not guarantee international security

Striving for US-led unipolarity in AI may dangerously provoke counterbalancing dynamics, as history shows that hegemonic dominance often sows the seeds of conflict. Additionally, hegemony itself has significant limitations that may prevent the US from unilaterally shaping superintelligence. Relying on unilateral US control over superintelligence could exacerbate US–China tensions and escalate nuclear risks.

4. Winning the arms race does not guarantee hegemony (and we may be screwed if it does)

Winning the arms race doesn’t equate to securing US hegemony, as true global leadership requires economic clout, institutional legitimacy, and cultural soft power beyond mere military might. Relying solely on military dominance risks destabilising alliances and provoking rival alignments, ultimately escalating the threat of catastrophic conflict.

5. A unipolar world order may be unstable in the age of AI

A temporary US AI lead may not secure enduring global dominance if no clear disjunction exists between highly capable AI systems and superintelligence, leaving room for rival catch-up. This uncertainty could force the US to rely on military coercion for AI safety, exacerbating conflict risks in an inherently unstable unipolar order.

6. Hegemonic stability through AI domination fattens the heavy tail of catastrophic risks

AGI realism’s advocacy for US-led, nuclear-style dominance risks replicating Cold War near misses and existential threats, as relying on military superiority in AI could provoke dangerous escalation and proliferation of hazardous capabilities. Rather than mirroring historical deterrence—which was as much a matter of luck as strategy—we should prioritise robust non-proliferation measures and international safeguards over militarised arms races.

7. Export controls are not mutually exclusive from engaging in collaborative governance

Using export controls to constrain dangerous frontier AI capabilities is not mutually exclusive with leveraging economic interdependence and mutual interests to promote shared governance. However, heavy-handed AGI realist rhetoric risks exacerbating tensions and inadvertently driving China to accelerate independent, potentially unsafe, AI development.

8. Norms can be powerful and we should shape them accordingly

Robust norms underpin long-term stability and must be actively cultivated alongside pragmatism to secure safer AI futures. Over-reliance on military dominance risks sidelining crucial norm-forming policies such as international cooperation and proper securitisation, which history shows can reduce conflict.

9. We should be prioritising the mitigating of risks through scope-sensitive policies

Mitigating superintelligence risks demands a multifaceted approach—ranging from tighter controls in AI-bio and nuclear domains to hardware restrictions and international verification regimes—rather than a single silver bullet. While no individual policy is a cure-all, the cumulative impact of these targeted measures can significantly lower the likelihood of catastrophic outcomes without unduly stifling competitive progress.

10. We need alternatives to AGI realism

Relying solely on a US victory in the AI race as a silver bullet is perilous and risks accelerating arms race dynamics, isolating allies, and destabilising global governance. Instead, we must adopt a balanced approach that combines norm-forming and incremental safety measures with robust export controls and international cooperation to secure sustainable AI futures.

Introduction

In “Situational Awareness”, former OpenAI employee Leopold Aschenbrenner made waves by arguing that the potential of superintelligence to transform military capability makes it a matter of national security. In his parting thoughts, he concluded that “the core AGI infrastructure must be controlled by America, not some dictator in the Middle East”. In response to the recent DeepSeek-R1 developments, Anthropic’s CEO, Dario Amodei, echoed this sentiment, suggesting that export controls are imperative to lead us to a unipolar world in which the liberal United States, rather than authoritarian China, can set the agenda for AI safety in the long run. Amodei and Aschenbrenner share the tenets of “AGI realism”. Given the recent announcement of Project Stargate, President Trump’s revocation of the executive order on addressing AI risks, and many other responses to DeepSeek-R1, AGI realism increasingly appears to be the dominant paradigm.

It’s been a strange time to be a student of international relations (IR). It’s a discipline infamously laden with disagreements all the way down, dominated by theoretical frameworks with little real-world purchase, and obsessed with its own shortcomings. But it also clearly matters. Cooperation, or the absence thereof, is at the heart of resolving trans-border challenges from climate change to global health, and of preventing threats such as nuclear weapons and the misuse of emerging technologies. I’m glad that recent discourse is beginning to appreciate the importance of power structures, securitisation, and arms race dynamics in shaping how AI and other emerging technologies are governed globally. But I am struck by the apparent dominance of the narrative that the securitisation of artificial intelligence necessitates the US winning an arms race. Anyone who has studied international relations will be familiar with the many reasons for doubting this position.

I don’t think it’s clear that AGI realism is the right approach, and I think we may be unnecessarily accelerating arms race dynamics towards dangerous futures by adopting this paradigm uncritically. I think we may be systematically neglecting other opportunities for governance, particularly in shaping the nature of an arms race towards less risky futures, in favour of blindly seeking a competitive advantage against China in ways that may backfire catastrophically. I note that I’m not certain about what we should be doing instead. Given the motivations of the current administration, it may be intractable to move away from this paradigm. It may also be too late. However, I believe there is nowhere near enough public awareness of why we may be making a grave error in being AGI realists. I hope to make it clearer why.

Part I. Superintelligence and Security: Contingencies in the Securitisation of AGI

1. Superintelligence may not be the defining feature of military capability…at least for a while

TL;DR[1]: The assumption that superintelligent AI equals military dominance ignores the reality that most conflicts are limited wars where technological superiority isn't decisive. History shows us that victory often hinges more on political will and resource commitment than technological edge, and optimising AI development for hypothetical total war scenarios could be dangerous and counterproductive.

The first of the three precepts of AGI realism, according to Leopold Aschenbrenner, is that superintelligence is a matter of national security because it confers so much military advantage. However, an important nuance is missing. Technological advantage is important for military dominance, but it has rarely been either necessary or sufficient. I think many of the usual reasons why this is the case will indeed be upended by the development of superintelligence. Superintelligent systems may very well provide belligerents with better strategies, tactics, and even more appropriate training for specific geopolitical contexts. However, the primary reason that technological advantage doesn’t confer military dominance is that most wars are limited wars, in which only a fraction of resources is expended to attain specific political objectives rather than outright victory.

The United States did not succeed in Afghanistan despite its overwhelming technological superiority. As of 2 February 2024, the GDP of the United States is nearly 1200x larger than that of Afghanistan[2]; the US ranks first on the Global Firepower Index while Afghanistan ranks 118th, and the US possesses vastly superior military technology in every domain. Were the US to engage in total war against Afghanistan, dedicating all of its resources and accepting the full political and humanitarian costs of unrestricted warfare, it would likely achieve a decisive victory, one that may have cost less than the $2.3 trillion spent on the conflict[3]. But it did not. In 2021, the US withdrew from Afghanistan, and the Taliban returned to power. The reason, to a significant degree, is that a combination of political will, opportunity costs, and domestic incentives that were insensitive to outright victory motivated a limited, prolonged deployment with varying levels of engagement over time. Afghanistan is not an isolated instance. Total war is the exception, not the rule, seen only in instances like the Napoleonic Wars or the two World Wars. For the vast majority of other conflicts, technological advantage does not guarantee victory, given the broader factors that constrain resource commitments.

In other words, the importance of technological advantage depends on the perceived existential threat from losing a conflict, the constraints on winning it, and extraneous political factors that motivate limited deployment. In a total war, I do not doubt that technological advantage is critical. However, total war does not represent the vast majority of likely future conflicts the United States will face. Optimising for this type of situation is risky. It would necessarily signal offensive intentions that may become a self-fulfilling prophecy. Importantly, total war creates immense pressures for innovation, adaptation, and unorthodox methods of warfare that are inherently unpredictable, while developing truly comprehensive capabilities against these eventualities magnifies autonomous AGI risk. A scenario in which both sides possess great capabilities conferred by superintelligent AI, even if mismatched, would not only be highly unpredictable but potentially catastrophic in its costs.

Superintelligence may cripple nuclear deterrence. However, this may push us towards a modality in which the mass mobilisation of on-the-ground personnel becomes critical for maintaining an offensive advantage, with highly effective plans already developed by superintelligent systems such that countermeasures cannot mitigate much of the risk. But it also may not. For obvious reasons, I think it would be a very bad call to encourage the United States to ensure that artificial intelligence becomes really good at killing lots of people on the ground in the event we end up in this world. This outcome is unlikely and would be just one of many equilibria that a total war could collapse into. Given that it is unlikely, unpredictable, and costly, it does not seem sensible to base our entire national security strategy on it.

I note that the counterargument here is that the asymmetric possession of superintelligence would be so advantageous that it would confer these benefits even at much lower levels of resource commitment. Not only do I think a high degree of confidence in this view is unwarranted, but the United States realising significant relative economic benefits from AI already captures much of this effect by lowering the relative costs of engagement. We saw the same dynamics play out in the heated bipolar competition of the Cold War, where neither side achieved such an overwhelming advantage as to fundamentally alter the strategic balance: both powers still had to contend with resource constraints and domestic political considerations. However, a widening economic gap ultimately underpinned eventual US success, as the US was increasingly able to stomach the costs of supporting a wide web of allies, sustaining its own arms build-up, and remaining involved in proxy conflicts without serious domestic problems, in a way the Soviet Union could not. The near-term future of US-China competition isn’t one in which most of the probability mass lies in total war scenarios where the possession of superintelligence is the definitive basis for success.

I don’t think the United States should actively avoid developing superintelligence to maintain a military advantage. Not only is this infeasible, but I think maintaining economic competitiveness could be important. However, we should certainly not be seeking to maintain as much technological advantage as possible, nor pushing the narrative that AI will be the defining feature of military capability in the near future. In particular, it is important that we focus on the safe proliferation of capabilities and accept some trade-offs against maximising the rate of progress towards superintelligence in exchange for safety benefits. This looks like (i) not developing capabilities that are unlikely to be necessary for most conflicts but that greatly increase autonomous AGI risk; (ii) developing defence-forward capabilities, particularly those that signal defensive intentions; and (iii) optimising the level of relative military capability around real-world US geopolitical interests rather than hypothetical extremes. There may also be something to be said for prioritising flexibility: it seems prudent to maintain a wide manufacturing base so that the United States can adapt to any level of conflict.

None of this, however, necessarily requires the maximal rate of AI progress. Within the next few decades, broader geopolitical constraints will mean that an AI advantage may not be sufficient for victory in all limited wars. The scenario in which an all-out technological advantage would be necessary is precisely the kind of total war between the US and China that we should seek to avoid at all costs.

2. Superintelligence does not need to be a matter of national security in its entirety

TL;DR: The idea that the securitisation of superintelligence (treating it as a national security threat) is inevitable and fixed in nature ignores how security threats are socially constructed and downplays the opportunities we have to shape the securitisation of superintelligence towards safety measures. We need more nuanced frames around AI security, distinguishing between different types of capabilities and development paths, rather than treating all AI progress as an equal security threat. Such oversecuritisation risks becoming a self-fulfilling prophecy.

AGI realism emphasises that the transformativeness of superintelligence for military capability means its securitisation (the process by which security threats are socially constructed through actors transforming political problems into security threats[4]) is inevitable and fixed in nature. However, the core insight from securitisation theory is precisely that there is no intrinsic rational or functional logic for what becomes a national security consideration. To the extent that the scope and nature of securitisation are contingent, and because securitisation itself shapes arms race dynamics, I am concerned that the ‘over-securitisation’ of superintelligence is becoming a self-fulfilling prophecy. We should be pushing against brute national security narratives and seeking more refined discursive frames.

In the debate about nuclear security, Nina Tannenwald famously argues that the non-use of nuclear weapons can be traced in significant part to a “nuclear taboo” that gradually developed after the atomic bombings of Hiroshima and Nagasaki[5]. She contrasts the genuine consideration given to deploying nuclear weapons during the Korean War in 1953, even when it seemed unnecessary, with the absence of such consideration during the 1991 Gulf War, when the limited use of small, tactical nuclear weapons might otherwise have been justified.

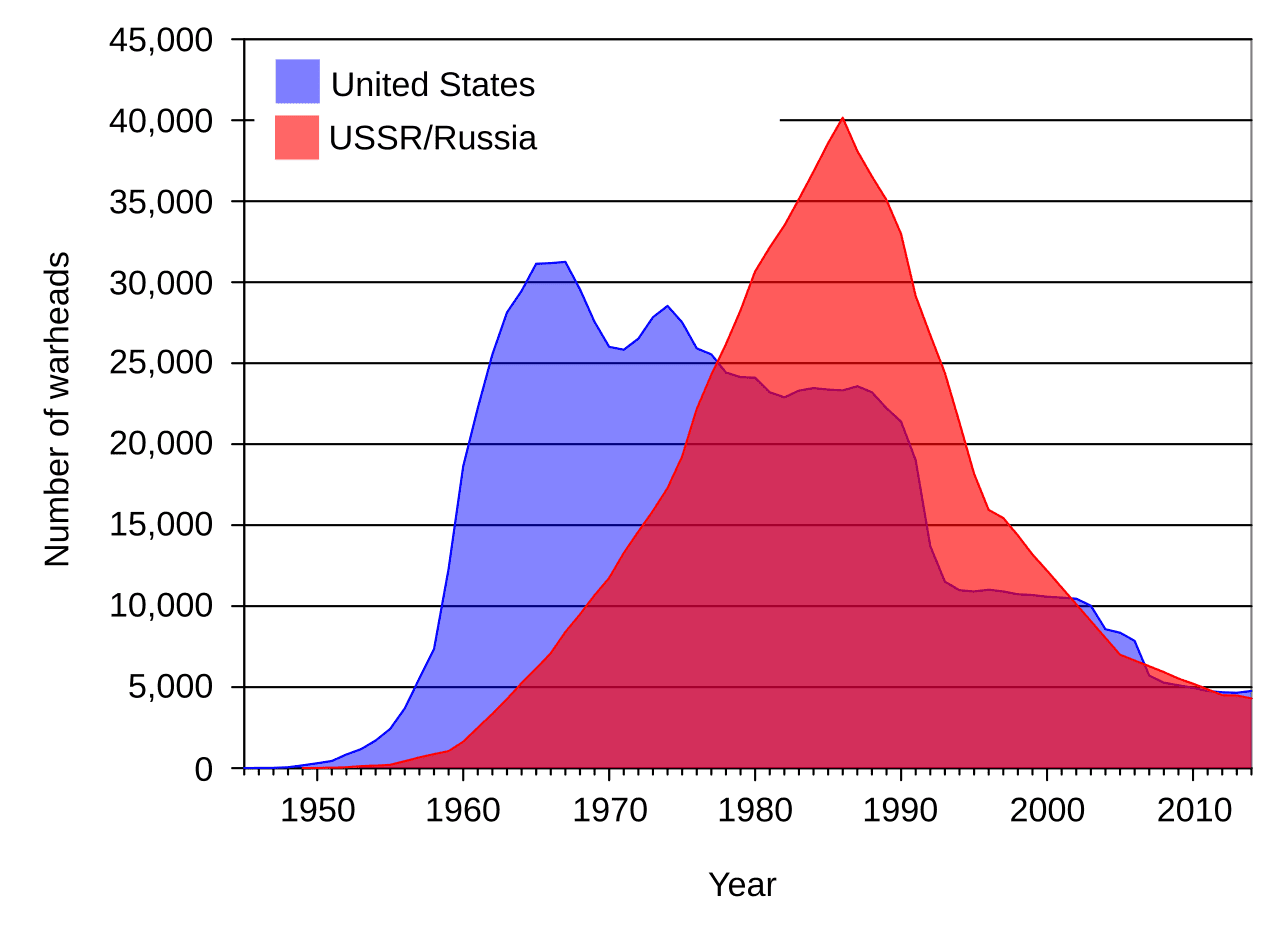

Tannenwald’s nuclear taboo hypothesis remains hotly debated. However, what is clear is that the offensive dominance conferred by nuclear weapons did not translate into a clear national security consensus on the benefits of nuclear proliferation, precisely because of several uncertainties, including the potential for catastrophic consequences, the incentives nuclear proliferation creates for unstable and secretive bureaucracies, and the risk of nuclear accidents[6]. The upshot is that there is international nuclear governance, flawed as it is. Both the US and China are parties to the Non-Proliferation Treaty and signatories of the Comprehensive Test Ban Treaty. For all the perceptions of Chinese counterhegemonic practices against the US, China still possesses roughly 500 warheads, around a tenth as many as the United States.

Securitisation theory tells us that there is no intrinsic rational or functional logic for what becomes a national security consideration because security is an “essentially contested concept”[7] that reflects the incentives, interests, and frames of actors rather than any universal logic. The advent of human security in the 1994 UNDP Human Development Report was not due to substantive shifts in the importance of food insecurity, global health, or climate change, but reflected the post-Cold War world order, the growing importance of transnational advocacy groups, and the spread of humanitarianism, among other factors[8]. On the other hand, conflict involving Russia over the last 15 years has shown us that energy independence is a much more pressing security issue than it was traditionally considered to be[9].

I think we are likely at a point of no return in the securitisation of AI capabilities. However, I want to draw attention to this as the product of a discursive and ongoing process with built-in contingencies. I think there is scope to shape conversations around exactly what is securitised, to what extent, and in what ways. For example, the distinction between ‘strategic’ nuclear weapons (larger weapons designed to cripple the enemy’s ability to engage in conflict) and ‘tactical’ nuclear weapons (typically smaller weapons designed to be used for particular military objectives on a battlefield)[10] has arguably facilitated the governance of nuclear weapons by allowing strict regulation of the latter to avoid nuclear escalation while allowing nuclear states to maintain strategic stockpiles for deterrence.

I think the opportunity for similar taxonomic innovation exists in the AI governance world, but AGI realism is pushing towards an intense securitisation of AI capabilities across the board, along with all of their inputs. I am particularly concerned about decentralised capabilities, the development of frontier capabilities, and the integration of capabilities into weaponisable infrastructure. Concretely, I think counteracting these threats looks like securitising open-sourcing, rapid acceleration of capabilities, and developments in the military-industrial sphere, as opposed to mere progress towards superintelligence. These factors are not homogeneous across all AI development, and more careful narratives could prevent arms race acceleration around every single advance in capabilities. Here, I think the reaction to DeepSeek-R1 has been a failure in framing. I worry we are over-indexing on the fact that DeepSeek is a Chinese company and under-indexing on the dangers of unrestricted open-sourcing. The upshot has been to push China towards algorithmic efficiency and towards forming its own distinct circles of dependency, while failing to establish a norm against the unrestricted open-sourcing of models above a certain capability level.

I am unsure whether the ship has entirely sailed. However, it certainly would’ve been prudent to push against the idea that superintelligence, simpliciter, is a national security threat and that any input towards it is a national security concern, without more precise specification of the conditions under which this is the case. I suspect there is a counterfactual in which industrial policy was framed around the economic value of superintelligence without this degree of securitisation, and that this is better for the world precisely because at least some of this value is not zero-sum. I am ultimately concerned that we are in the middle of the ‘oversecuritisation’ of superintelligence, insensitive to the heterogeneities in how the development of superintelligence may affect the likelihood and nature of great power conflict. We should actively challenge the notion that all development equally threatens US national security, and recognise that this narrative itself helps shape the threat. I am keen for taxonomic innovation and more grounded frames to take hold.

Part II. Power and Polarity: The Limits of US Hegemony

3. US hegemony does not guarantee international security

TL;DR: Striving for US-led unipolarity in AI may dangerously provoke counterbalancing dynamics, as history shows that hegemonic dominance often sows the seeds of conflict. Additionally, hegemony itself has significant limitations that may prevent the US from unilaterally shaping superintelligence. Relying on unilateral US control over superintelligence could exacerbate US–China tensions and escalate nuclear risks.

The second of the three precepts of AGI realism is that “America must lead”. Additionally, I felt that the single most striking passage in Dario Amodei’s recent blog post was the following:

“If China can't get millions of chips, we'll (at least temporarily) live in a unipolar world, where only the US and its allies have these models. It's unclear whether the unipolar world will last, but there's at least the possibility that, because AI systems can eventually help make even smarter AI systems, a temporary lead could be parlayed into a durable advantage.”

In international relations, ‘polarity’ refers to the relative distribution of power among great powers. A unipolar arrangement has a single dominant hegemon, a bipolar arrangement has two dominant powers, and a multipolar arrangement has several. What I find so striking about this passage is how casually this statement is made when the effect of polarity on international stability is arguably one of the most infamously contested debates in the entire discipline.

Implicit in this passage is the recognition that unipolarity may be short-lived. However, I think this is a gross understatement of why this push for unipolarity may be dangerous. Particularly relevant here is power transition theory: the idea that hegemony itself creates the conditions for counterbalancing, and that conflict arises with the advent of rising powers. While many in IR go back and forth on whether unipolarity, bipolarity, or multipolarity is more stable, power transition theorists suggest that the periods of greatest instability are those in which a rising power’s growth in capabilities challenges status quo powers. For power transition theorists[11], the Alliance System on its own did not cause World War I; rather, it was the rise of the German Empire challenging the status quo powers of Britain and France. Similar arguments have been made about the rise of Nazi Germany leading up to World War II and the rise of the USSR resulting in the Cold War.

I am not about to resolve the debate on whether power transition theory holds. However, there are two important implications we must keep in mind if the stated goal of AGI realism is US hegemony. Firstly, the magnitude of hegemonic advantage may very well shape the magnitude of counterbalancing, actively increasing the risk of conflict. Proponents of bipolarity, including the father of neorealist thought, Kenneth Waltz[12], favour it because it enables great powers to monitor and respond to each other's actions without resulting in unstable power transitions. Even if we buy the importance of hegemonic stability, we should expect nonlinearities between the level of hegemonic advantage and international stability.

Secondly, an important motivating factor for power transition theory is the fundamental limitations of hegemony itself. The US is undoubtedly the largest economy and the most powerful military power right now. For many thinkers, the US is still considered the global hegemon[13]. Yet the US cannot enforce its own competitive advantage in artificial intelligence, let alone control the norms around AI safety, right now. Of course, this could be because it’s not quite enough of a hegemon. But it’s also because hegemony is really, really hard to maintain and fundamentally limited in practice.

Hegemonic states incur enormous financial, political, and military expenses to project power and manage global order. This looks like sustaining extensive military deployments, establishing many economic support networks, maintaining a large diplomatic apparatus, giving expansive foreign aid, and contributing towards hundreds of international institutions and agreements. They must do this while contending with the inherent dynamism of the international system, where rising states and shifting alliances inevitably challenge their supremacy. There is only so much a hegemon can do, and only so long hegemons can last.

In other words, it’s highly unclear what the United States would be doing in most hegemonic outcomes. The immediate period after the collapse of the USSR was arguably when the US was at its most powerful relative to other states, and we did not see anything close to a unilateral imposition of American foreign policy interests. While the US successfully expelled Iraq from Kuwait in the 1991 Gulf War, it was forced to withdraw from Somalia; it did little in response to Russian aggression in its former sphere of influence (such as the Russian invasion of Chechnya); it was unable to compel India and Pakistan to join the Nuclear Non-Proliferation Treaty or prevent their 1998 nuclear tests[14]; and the 1997 Kyoto Protocol on climate change proceeded even after the US refused to ratify it.

I’m concerned not just that the hope that a hegemonic United States will singularly determine the rest of the world order is unfounded, but that these frictions will push the US towards seeking ever higher levels of hegemonic dominance over China. If the bar is a level of hegemonic dominance sufficient to determine globally the course of the most important technological development in history, then rational calculus may favour extreme action. Precedent does not favour peace here: at least since 1688, we have not seen the emergence of a global hegemon without conflict[15]. Ultimately, AGI realism certainly accelerates nuclear risks in exchange for an uncertain chance of mitigating risks from superintelligence.

I’m sympathetic to the need to maintain US international influence and in favour of the notion that liberal democracies need to be in the driving seat for shaping AI outcomes globally. However, I do not think this looks like securing US hegemony at some critical juncture in order to shape outcomes forever. The United States should be leveraging allies, economic interdependencies, diplomatic relations, and military power to coax us towards favourable outcomes. But the only thing guaranteed by relying on US hegemony at the advent of superintelligence to let the US unilaterally save the world is that we accelerate arms race dynamics even further and push the United States and China closer to war.

4. Winning the arms race does not guarantee hegemony (and we may be screwed if it does)

TL;DR: Winning the arms race doesn’t equate to securing US hegemony, as true global leadership requires economic clout, institutional legitimacy, and cultural soft power beyond mere military might. Relying solely on military dominance risks destabilising alliances and provoking rival alignments, ultimately escalating the threat of catastrophic conflict.

The defining aspect of a global hegemon is not merely that they are the defining military power. By the logic of transitivity, there is always a dominant military power. However, they are not always the global hegemon. The 20th century in particular saw no hegemon emerge until the United States took this position after the collapse of the Soviet Union. There are a number of reasons why.

Firstly, true hegemonic dominance is not just a function of military power, but global economic influence. US hegemony has been entrenched through foreign policy such as facilitating the postwar recovery of European states through the Marshall Plan and issuing Structural Adjustment Programs (SAPs) through the IMF and World Bank, cementing US influence in the Global South by tying financial aid to market liberalisation and fiscal austerity. Secondly, hegemony demands the capacity to establish and maintain international institutions that other states view as legitimate, as the US did with the Bretton Woods system, the UN, and NATO.

Thirdly, a hegemon must possess sufficient soft power to prevent its cultural and ideological leadership from appearing coercive, which the United States had succeeded in doing ever since fanning the flames of national self-determination in the ‘Wilsonian Moment’ of 1919[16]. The Soviet Union, on the other hand, was less successful. Fourthly, hegemony requires the ability to create and sustain alliances that other powers view as mutually beneficial rather than merely subordinating. Finally, a true hegemon must be able to provide certain global public goods (like securing maritime trade routes or maintaining monetary stability) that other states come to rely upon, something no power fully achieved in the 20th century until the US in the post-Cold War era.

The hope may be that an advantage in superintelligence will equip the United States with such sheer economic prowess and cultural significance that these relations of dependence, mutual benefit, and institutional reliance on the US practically establish themselves. However, this is far from a given. Autarkic economic policies and an isolationist stance naturally preclude the formation of these ties, and a United States perceived as a threat to international security may encourage states to bandwagon with other powers, such as China. I cannot overstate that the assumption that states abide by a rational actor logic, in which they simply seek to cooperate and comply with the most economically powerful state, is not universally true. History largely cuts against this account. Whether through the Non-Aligned Movement during the Cold War, historical grudges against the United States, or simply the whims of authoritarian leaders, states often prioritise autonomy and independence over economic advantage, particularly when they perceive a threat to their sovereignty or cultural identity.

I also think emphasising victory for the US “and its allies” is far too insensitive to the realities and uncertainties of US allyship here. Alliances are fickle and dynamic at the best of times. Current alliances exist within a specific geopolitical context and are maintained through complex webs of mutual interests, shared threats, and institutional arrangements. An overwhelming US advantage in artificial intelligence could upset these relations in contingent ways. Dramatic power asymmetries, even between friendly nations, can lead to realignment rather than reinforcement of existing relationships, as evidenced by the rapid deterioration of Sino-Soviet relations in the 1960s despite their earlier alliance. The UK’s withdrawal from the European Union is a useful demonstration of the limits of shared liberal values in maintaining close relationships.

My concern, then, is not merely that an advantage in superintelligence does not translate into the ability of the US to actually determine global AI policy. It is also that such an advantage may upset any semblance of the status quo while the US loses many of its other levers through nationalist and isolationist industrial policies aimed at guaranteeing its competitive advantage. Military coercion may be the only resource the US has to convert its advantage in superintelligence towards shaping the future of AI globally. I am concerned that AGI realist rhetoric is barrelling us towards a future where the threat of war forms the basis of global AI safety, and one in which the resulting inflamed tensions also increase the risk of catastrophic conflict.

5. A unipolar world order may be unstable in the age of AI

TL;DR: A temporary US AI lead may not secure enduring global dominance if no clear disjunction exists between highly capable AI systems and superintelligence, leaving room for rival catch-up. This uncertainty could force the US to rely on military coercion for AI safety, exacerbating conflict risks in an inherently unstable unipolar order.

The notion that America must win rests on the idea that hegemony can be converted into long-term stability. Dario Amodei writes:

“It's unclear whether the unipolar world will last, but there's at least the possibility that, because AI systems can eventually help make even smarter AI systems, a temporary lead could be parlayed into a durable advantage”.

Unfortunately, I don’t think we can be so confident that this is the case. I think this claim only works if we assume there will be a clear disjunction between highly capable AI systems and superintelligence, such that a US advantage could quell the proliferation of superintelligence to the rest of the world altogether. However, it’s not clear to me that such a disjunction even exists at the technical level, let alone at the capability level. This remains a large uncertainty of mine, and I would welcome correction from those who have thought about this from a technical perspective. Regardless, whether we are concerned about value lock-in or the deployment of misaligned AI, it seems that possessing near-superintelligent systems within an authoritarian state apparatus is sufficient to cause many of these harms and to put a state pretty close to deploying commensurate superintelligent systems.

If we do assume such a disjunction doesn’t exist, then it is difficult to see how a temporary lead necessarily becomes a durable advantage. I do not think it is a given that superintelligent AI systems on the US side competing with proto-superintelligent systems on the Chinese side will confer a straightforward advantage, particularly in a world where these are represented by discrete supply chains, spheres of influence, and mutually exclusive infrastructure. It depends on several unknowns, including: the nature of agent-agent interactions when one agent is more sophisticated; the returns to scale on superintelligence for preventing the development of other superintelligent systems; the cost curve for creating future superintelligent systems; whether suitable, well-hidden facilities exist that could later replicate advancements; the future distribution of compute; and broader interdependencies between the economies of the US and China.

In other words, any advantage may be much less durable than we think. While it seems very likely that there is a considerable first-mover advantage, it is not clear that such an advantage will prevent eventual agent-agent competition, stop states like China from closing the gap in the long run, or be large enough for the US to unilaterally prevent the development of superintelligent systems in all the authoritarian ways we should rightfully be concerned about. Because I believe much of this risk is sensitive both to the nature of securitisation and to tensions inflamed by aggressive arms race dynamics, we should consider the possibility that seeking such a temporary lead only worsens the risk of eventual post-superintelligence conflict.

It’s much harder to take lessons from history here because superintelligence may truly be disanalogous. However, almost all of the factors we might hope will sustain a durable long-term advantage have been highly sensitive to exogenous shocks and extraneous considerations in the past. Institutions, alliances, economic interdependencies, and even normative sentiments wax and wane in the long run. An asymmetric advantage in superintelligence will certainly create pressures towards stability. But, as noted above, it may not matter that non-cooperation with a US-led, superintelligence-charged world order poses an existential threat to rival states whose incentives are coloured by historical grudges against the United States. History tells us that authoritarian regimes in particular are more than willing to bear such costs.

I emphasise that almost all factors are sensitive to exogenous shocks and extraneous considerations because I think there is a real possibility that sheer military coercion may end up being the only reliable resource the US has to convert its advantage in superintelligence towards shaping the future of AI globally. Given the many inherent pressures towards dynamism even in a post-superintelligence order, military coercion may be the most viable resource not just for attaining US hegemony, but also for sustaining any regime of AI safety. Once again, I think AGI realism could result in a calculus whereby threatening destructive conflict with states across the globe, and actively inflaming tensions towards this outcome, becomes a necessity.

I do note that if such a disjunction does exist between highly capable AI systems and superintelligence, then we may indeed be heading towards an immensely important critical juncture where it is clearly better for the US to win than China. However, the tractability of capitalising on such a critical juncture is a deeply important open question. I think any signs of such a disjunction would push us towards a no-holds-barred arms race in which the window for the United States to act is considerably short, and there would simply not be the degree of hegemonic dominance needed for the United States to truly shape the world order towards safer AI futures through mechanisms like institutions, leveraging allies, or fostering relations of economic interdependence, for reasons I argued in the previous two sections.

The central tenet of realism in international relations theory is that while power politics produces differing degrees of transient security, the absence of authority in the international system (anarchy) guarantees inevitable conflict between states in the long run. I am concerned that AGI realism is ultimately built on the same set of assumptions and that the theory of change converges towards eventually having to seek military dominance over China—and potentially over much of the rest of the world—in order to guarantee the ability to shape futures in the long run. I believe such an outcome could be catastrophic, counterproductive, and unnecessary. In much the same way that countless schools of thought challenge realist assumptions and seek avenues for long-term stability through economic interdependence, norms of cooperation, and robust institutionalism, we should be increasing focus on these avenues for governance.

6. Hegemonic stability through AI domination fattens the heavy tail of catastrophic risks

TL;DR: AGI realism’s advocacy for US-led, nuclear-style dominance risks replicating Cold War near misses and existential threats, as relying on military superiority in AI could provoke dangerous escalation and proliferation of hazardous capabilities. Rather than mirroring historical deterrence—which was as much a matter of luck as strategy—we should prioritise robust non-proliferation measures and international safeguards over militarised arms races.

If the passage that struck me most in Dario Amodei’s write-up was his take on unipolarity, then the passage that struck me most in Leopold Aschenbrenner’s piece was his analogy to the Cold War reliance on nuclear dominance:

“The main—perhaps the only—hope we have is that an alliance of democracies has a healthy lead over adversarial powers. The United States must lead, and use that lead to enforce safety norms on the rest of the world. That’s the path we took with nukes, offering assistance on the peaceful uses of nuclear technology in exchange for an international nonproliferation regime (ultimately underwritten by American military power)—and it’s the only path that’s been shown to work.”

I share the view of several academics in the discipline that the absence of nuclear catastrophe during the Cold War was, in part, due to sheer luck and not merely the success of deterrence theory[17]. A critical reason is that the proliferation of nuclear weapons not only failed to deter conflict (the Cold War was, in fact, pretty hot), but also ushered in a period marred by elevated existential risk. The Future of Life Institute identifies at least 22 near misses, including the notable cases of Vasily Arkhipov in 1962 and Stanislav Petrov in 1983, both of whom disobeyed orders amid false alarm incidents rather than launch what they believed was a nuclear counteroffensive. Nor were near misses wholly exogenous: this risk is built into the nuclear enterprise for at least two reasons.

Firstly, Scott Sagan’s application of organisational theory to state decision-making over nuclear weapons provides useful theoretical substantiation for why such near misses are to be expected: organisations operate under severely ‘bounded’ rationality and face inherent limits on coordination that they must handle through simplifying mechanisms[18]. The nature of nuclear bureaucracies magnified this risk for a number of reasons, namely the absence of organisational resources among emergent nuclear powers, the unique opacity of nuclear proliferation for security reasons, and selection pressures towards nuclear states having volatile civil-military relations, such as Pakistan. Nor are accidents the only manifestation of this overall phenomenon: rogue states pursuing nuclear weapons such as North Korea, nuclear states being overtaken by rogue governments (such as Pakistan)[19], and even nuclear terrorism[20] would all contribute towards existential risk.

Secondly, deterrence logics are in practice at least somewhat contingent, for they make no necessary predictions about the various sensitivities and information asymmetries involved. These include the risk aversion of decision-makers, what type of escalation would lead to a pre-emptive strike, what threshold of attack is required for leaders to follow through on a counteroffensive, the actual level of proliferation in response to a perceived threat, and so on. In game-theoretic terms, deterrence logics not only fail to capture when individuals are irrational, but also fail to capture when the payoffs rationally motivate war and how often payoffs tip into this equilibrium, even if that may be infrequent. The most important empirical evidence for this is the effect of individual leadership on peace. This is widely discussed in relation to John F. Kennedy, who pursued a series of measures designed to relax US-Cuba tensions, most notably in not pursuing nuclear war during the Cuban Missile Crisis[21]. Conversely, Khrushchev was known to have a “mercurial temperament”[22] that, in part, drove the risky decision to supply Cuba with nuclear weapons in the first place.

The upshot is that near misses and elevated existential risk were systemic features of the Cold War period. Note that if an event has some probability $p$ of occurring in a given year, and the event’s occurrence is independent of other years, then over $n$ years the probability of the event occurring at least once is $1 - (1 - p)^n$. If we assume only a 1% chance of an existential catastrophe per year, then over 100 years this still works out to a ~63% chance of the event occurring at least once.
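As a quick check on the arithmetic in the previous paragraph, here is a minimal Python sketch of the same complement-rule formula. It assumes, as the text does, a constant annual probability that is independent from year to year; the function name `prob_at_least_once` is a hypothetical label chosen for illustration, and the annual probabilities in the loop are arbitrary example values, not estimates.

```python
def prob_at_least_once(p: float, n: int) -> float:
    """Return the probability that an event occurs at least once in n years.

    Assumes (as a simplification) a constant annual probability p that is
    independent across years.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be a non-negative number of years")
    # Complement rule: 1 minus the probability the event never occurs in n years.
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # Hypothetical annual risk levels, compared over a 100-year horizon.
    for annual_p in (0.001, 0.01, 0.05):
        print(f"p = {annual_p}: {prob_at_least_once(annual_p, 100):.1%} over 100 years")
```

For $p = 0.01$ and $n = 100$ this returns roughly 0.634, matching the ~63% figure above; the point is how quickly a small per-year risk compounds over a long horizon.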

Forgive the exposition about nuclear risk, but I believe AGI realism is sleepwalking us into a commensurate reality of near misses and elevated existential risk. Moreover, on top of concerns about state deployments that lead to catastrophic consequences, important sources of heavy-tailed risk are precisely autonomous AGI risks and the proliferation of capabilities that could aid terrorist organisations and lone wolves. For the reasons given above, I believe that AGI realism encourages the militarised integration of superintelligence to prepare us for outcomes that need not be likely, in a way that guarantees rogue AI systems the ability to overpower humanity.

OpenAI has not been explicit about the precise nature of its involvement with the US Government on nuclear weapons security. But I note that in a total war scenario there is every incentive for autonomous planning and deployment of nuclear weapons, mainly because of concerns about severed communications, in much the same way that the Soviet Union's Dead Hand system was designed to automatically launch a retaliatory nuclear strike if it detected signs of a nuclear attack and couldn't contact Soviet leadership. I do not think the likelihood of needing this capability outweighs the risks of autonomous artificial intelligence possessing it, but the drive for maximal hegemonic dominance and absolute second-strike capability in all contexts pushes us precisely towards this kind of risk.

Similarly, an accelerated AI race to reach a critical juncture of superintelligence without appropriate safety measures in place may lead to the proliferation of dangerous capabilities among terrorist organisations and rogue individuals. This point, in particular, has been stressed widely (but probably not widely enough). However, what does not seem salient is that we are ultimately forced to decide how much short-term risk we should stomach to ‘win’ superintelligence at a future critical juncture. For all the reasons I have pointed out, I am not sure AGI realism gets this calculus correct.

The Cold War is not the environment we want to mirror. Still, there are certainly lessons from the Cold War about where our governance priorities should be. We should be pushing for a taboo on the proliferation of dangerous capabilities, a taboo on particular forms of AI militarisation, and taboos on the most risk-generating aspects of an arms race, such as unrestricted open-sourcing of risky frontier capabilities. We should be adapting the verification measures of the International Atomic Energy Agency into similar verification measures for on-chip governance and AI training runs. Crisis communications, defence-forward technological developments like nuclear hardening, and enabling the signalling of defensive intentions (e.g. through the strategic-tactical distinction or developing defence-forward capabilities) are among the Cold War inputs that nudged us towards peace and that we should be learning from.

If success looks like mirroring the Cold War, then we are simply screwed. For much of the Cold War, the United States’ attempt to maintain dominance in nuclear stockpiles did not work. The peak nuclear stockpile held by any state was the Soviet Union's, which had managed to catch up. Yet, even while the United States maintained an offensive advantage in the earlier periods, we still saw tensions inflamed during the Cuban Missile Crisis and a number of near misses. If there was a robust norm that played a key role in preventing war, it was one born of the recent horrors of World War II[23]. However, given we are now talking about securing a decisive offensive military advantage against a counter-hegemon, I suspect that the salience of the horrors of World War II has waned considerably. I think the upshot of AGI realism may be an increased chance of autonomous AGI risk, great power conflict, and the realisation of all sorts of heavy-tailed threats (like AI-enabled bioterrorism) for not much payoff.

Part III. Lessons and Learnings: Takeaways for the Governance of Emerging Technologies

7. Export controls are not mutually exclusive from engaging in collaborative governance

TL;DR: Using export controls to constrain dangerous frontier AI capabilities is not mutually exclusive with leveraging economic interdependence and mutual interests to promote shared governance. However, heavy-handed AGI realist rhetoric risks exacerbating tensions and inadvertently driving China to accelerate independent, potentially unsafe, AI development.

Before jumping into some higher-level takeaways, it’s worth embedding many of the previous points in the context of what we do about export controls. A starting point is conceiving of a complete counterfactual: governing through interdependence. The idea is that, rather than seeking to establish complete technological dominance for the US and its allies over rival states such as China, we lean into interdependence with China to reach collective decisions about constraining capabilities. A clear example of this is the history of space policy, which has seen joint US-Soviet missions since Apollo–Soyuz in 1975, a high level of compliance with international agreements such as the Outer Space Treaty and the Rescue Agreement, and norms against developing orbital weapons.

The ship has undoubtedly sailed on this type of arrangement for the governance of semiconductors, possibly rightly so. However, the degree to which we can utilise shared infrastructure to reach collective agreements is not only continuous but also full of non-linearities. A safe approach to export controls is one in which they are used to constrain capabilities while broader economic interdependence is leveraged to encourage the safer development of artificial intelligence. I suspect we may be close to missing a critical juncture for using on-chip governance mechanisms to gain greater visibility into Chinese AI development and to focus export controls on constraining the development of dangerous frontier capabilities, instead of trying (unsuccessfully) to cripple the entirety of Chinese technological progress. But if we have not yet missed it, this is one set of policies I think is worth pursuing.

However, I think it may not be too late to explore other forms of governance through interdependence. Shared cloud infrastructure and APIs could be used to implement monitoring and safety standards; joint research initiatives on AI safety are not mutually exclusive with export controls; and joint regulatory frameworks may still be worth pursuing. Whether through blockchain-backed tracking systems or building safety norms through private sector consortia, it seems prudent to exploit non-linearities in opportunities for cooperation alongside an export control regime.

You might wonder why such arrangements would persist alongside an export control regime. However, history tells us this is the norm. Concerns about China threatening US hegemony are decades old[24]. Yet the US remains China’s largest export market, and China one of the US’s largest sources of imports. During the Cold War, trade between the United States and the Soviet Union averaged about 1% of total trade for both countries through the 1970s and 1980s, constrained by the obvious dilemma between economic dependence and positive-sum gains. The US and European nations continued scientific collaboration with the Soviet Union on space exploration and nuclear physics even while maintaining strict controls on military technology (as with Apollo–Soyuz). More recently, despite tensions over cyber espionage, the US and China have cooperated on climate change initiatives and pandemic response[25].

‘Trade’ is not a homogeneous reflection of geopolitical positioning; it is composed of discrete networks of actors, interests, and incentives that cut across one another. Even authoritarian regimes must wrestle with the reality of private sector demand for their products, often resulting in a great degree of variance in the liberalisation of sectors. I’ve previously written on the Basel Accords, which are an apt example: while maintaining strict capital controls in many areas, China has adopted these international banking standards to enable its financial institutions to operate globally.

The key worry I have about the future of the export control regime is not just that it may lead to unintended consequences. Here, I do think there is a real risk that tightening export controls further encourages China to home in on algorithmic efficiency, develop independent supply chains removed from US visibility, and heighten tensions in ways that worsen race dynamics and magnify risks. I think it’s very possible that, ex post, the current export control regime accelerated the development of DeepSeek-R1 in a way that has been net negative for AI safety, even if export controls seemed sensible ex ante. However, I do think the foreign policy of Donald Trump means there may be neither a clear possibility of reversal nor a clear counterfactual on the need for further export controls. Rather, I am concerned that the heavy-handed rhetoric underpinning AGI realism unnecessarily pushes us towards total decoupling from China, the severing of all diplomatic relations, and the complete inability to utilise any lever (including those that can be used alongside export controls) to guide us towards safer AI futures.

8. Norms can be powerful and we should shape them accordingly

TL;DR: Robust norms underpin long-term stability and must be actively cultivated alongside pragmatism to secure safer AI futures. Over-reliance on military dominance risks sidelining crucial norm-forming policies such as international cooperation and proper securitisation, which history shows can reduce conflict.

Norms can be fickle, imprecise, and difficult to control. Yet, they are fundamental to every period of long-term stability we have seen throughout history. Whether it was the Peace of Westphalia in 1648 introducing notions of sovereignty between European states, the diplomatic norms that underpinned the Concert of Europe in 1815, or the nuclear taboo itself, peace throughout history has always been underpinned by mutual recognition and shared norms. I do not think we get to stable AI futures without working towards robust, safety-oriented norms in the same way. I do not think winning the AI arms race will matter if we end up in a world of limited hegemony, isolationism, and furious technological development in which war is the only recourse for shaping what may be an uncertain future.

Just as realism systematically understates the importance of building robust norms, and the fact that this work is compatible with protecting one’s strategic interests, I am concerned that AGI realist discourse is crowding out norm-forming policies that may be necessary for stable futures. I simply do not think an export control regime precludes maintaining diplomatic relationships with China and improving transparency where it matters.

I have already discussed what I think are two particularly important levers for norm-forming: international institutions and controlling the securitisation narratives. Many people, myself included, are sceptical about the ability of international institutions to effect useful policy in the short run. However, I think most people considerably understate the knowledge-forming and norm-shaping role of institutions. The scientific consensus on climate change that emerged through organisations like the Intergovernmental Panel on Climate Change (IPCC), and the evolution of global health cooperation through bodies like the World Health Organization (WHO), are examples of how institutions help establish technical standards, frame key challenges, and gradually shape state behaviour.

I think an endemic problem in the discourse around international institutions is the lack of a prior theory of what the necessary constraints are and, in turn, what this means for how much can actually be attained. Realists in international relations are notoriously guilty of asserting that the anarchic structure of the international system precludes peace, yet also lambasting organisations such as the United Nations for allowing conflict. If one is indeed a realist, the gains lie in probabilistic effects that cash out in reduced conflict over time, rather than in a perpetual peace one considers structurally impossible. UN peacekeeping is the appropriate example here: its overall track record is one of reduced violence (see Hultman et al., 2014[26]; Hegre et al., 2019[27]; and Walter et al., 2020[28], for example), even if it does not always end wars, yet notable failures in peacekeeping have led to numerous calls to abandon peacekeeping operations altogether. I think AGI realists are making the same analytical error in downplaying the role of international cooperation in contributing towards safe AI futures.

The political scientist Yan Xuetong famously characterised the US-China relationship as a “superficial friendship” in which opacity about the degree of unfavourable interests leads to drastic fluctuations in relations over issues ranging from interference over subsea cables to conflicting interests in the South China Sea[29]. He suggests that “China and the United States should consider developing preventative cooperation over mutually unfavourable interests and lowering mutual expectations of support”[30], and I am inclined to agree. I think this looks like pushing towards international institutions for mediation, maintaining broader economic interdependence where it does not pose a unique vulnerability, and securing explicit commitments to avoid particular types of escalation. We would likely not get clear feedback loops right away, but long-term norm formation increases the expected cost of acting destructively in the future. It is unclear whether the United States would ever have used nuclear weapons during the Gulf War in 1991, but we at least know that, owing to the evolving norm, the possibility was not seriously considered, in contrast to the genuine consideration it received during the Korean War in 1953.

On securitisation, I have already discussed the kinds of discursive shifts I think we need to see. Not all aspects of the AI arms race are created equal, and I think there should be a more concerted effort to securitise the aspects that specifically increase the risk of conflict and/or autonomous AGI risk, rather than framing discourse entirely around the need to win on superintelligence. This looks like securitising unrestricted open-sourcing, the rapid acceleration of capabilities, and developments in the military-industrial sphere. The actual inputs here are precisely contributions to policy debates: press releases, blog posts, news media, and engagements with policymakers. DeepSeek-R1 was certainly a key moment in the development of AGI. However, much less widely reported is that OpenAI o3-mini is the first model to reach the “medium risk” designation on OpenAI’s “model autonomy” metric, a measure of self-exfiltration, self-improvement, and resource acquisition capabilities. The absence of flags about this development, compared to worries about DeepSeek, is precisely what I think flawed securitisation looks like. My call to action is to take the importance of norm-forming and discursive frames much more seriously and to drive us towards more nuanced, safety-oriented frames.

9. We should be prioritising the mitigating of risks through scope-sensitive policies

TL;DR: Mitigating superintelligence risks demands a multifaceted approach—ranging from tighter controls in AI-bio and nuclear domains to hardware restrictions and international verification regimes—rather than a single silver bullet. While no individual policy is a cure-all, the cumulative impact of these targeted measures can significantly lower the likelihood of catastrophic outcomes without unduly stifling competitive progress.

One throughline of my critique of AGI realism is that I don’t think there are any silver bullets for ensuring safe AI futures. Winning the AI race is far from a guarantee, and I think the safety of humanity will turn not only on long-term normative change but also on the aggregation of measures that each incrementally nudge us towards safer outcomes. We are severely understating the importance of these kinds of marginal improvements.

I think this looks like mitigating particular catastrophic risks amplified by the development of superintelligence. In the AI-bio nexus, for example, this looks like working towards mandatory DNA synthesis screening; proliferating indoor air quality countermeasures such as far-UVC; exploring promising new technologies such as Blueprint Biosecurity’s investigations into glycol vapours; favouring defence-forward biotechnologies through differential technological development; ensuring appropriate stockpiles of personal protective equipment; better biocontainment of BSL-3 and BSL-4 labs, particularly in developing countries; improving model evaluations to ensure decision-makers are sufficiently conscious of the threat landscape; and pushing for safe academic norms on uniquely dangerous experiments, such as the development of mirror life. I’m also excited about the regulation of automated biolabs; the development of international biosecurity standards; and anticipatory governance of biotechnologies such as benchtop synthesis that involves ‘governability-by-design’, such as appropriate centralisation of manufacturing supply chains, controlling the expertise barrier, and incentivising market dynamics that favour safe approaches to regulation.

In the nuclear domain, this may look like ensuring the costs of transferring enrichment technology (especially centrifuge expertise) remain high; constraining the development of highly accurate missile delivery systems that could undermine deterrence; and the consideration of whether specific types of nuclear missiles (e.g. long-range vs short-range) can signal defensive intentions. For AI governance, I have already mentioned on-chip governance. Additional measures may include hardware-level restrictions on compute capacity; international frameworks for monitoring and verifying AI system capabilities; standardised safety testing protocols before deployment of advanced systems; mandatory transparency requirements for training runs above certain thresholds; and moving towards the creation of international bodies with real enforcement power over AI development and deployment.

None of these policies will, on its own, move the needle much on risks from superintelligence. However, a preponderance of measures that make it challenging to misuse superintelligent systems for harm will decrease the risk of catastrophic outcomes in the long run. This, alongside robust norms and institutions, offers the best chance at long-term stability. Many of these policies involve trade-offs with accelerating AI capabilities, and this is precisely the nuance I think is missing in AGI realism. There is a careful calculus to be made about how far we pursue a competitive advantage in AI capabilities while remaining sensitive to safety considerations. AGI realism, however, posits that winning the AGI race is the only way to guarantee international security in the long run. Not only do I think this is untrue, but I think the level of hegemonic dominance required to achieve these effects would put us on a path towards catastrophic conflict.

10. We need alternatives to AGI realism

TL;DR: Relying solely on a US victory in the AI race as a silver bullet is perilous, and risks accelerating arms race dynamics, isolating allies, and destabilising global governance. Instead, we must adopt a balanced approach that combines norm formation and incremental safety measures with robust export controls and international cooperation to build sustainable AI futures.

I want to conclude with three points. Firstly, I want to be transparent that my suggestion that we reorient towards norm formation, incremental improvements, and international institutions may not be all that exciting. We may not have enough time to develop robust norms, and incremental improvements may not prove effective in time. I have tried not to make this write-up wholly critical, and to suggest what I think are better alternatives. However, I do not think that uncritically and singularly prioritising a US victory over China in the attainment of superintelligence offers us a better approach at all. I suspect we may be accelerating arms race dynamics, counterproductively worsening international tensions, and establishing relations between states that increase both autonomous AGI risks and the risk of great power conflict, all to attain ends that are uncertain and unstable. If no other approach seems promising, then we should hope for a continuous (even if quick) takeoff that gives us the time and signals to mobilise appropriately for AI safety. I’m concerned that part of the appeal of AGI realism is that it appears to offer us a silver bullet. However, I think it may be global security that ends up on the receiving end of that gun.

Secondly, I note my uncertainties about a lot of what I’ve written. The purpose of this piece was primarily to demonstrate the non-triviality of AGI realism, rather than to suggest it is flawed in its entirety. At the very least, however, there are many more opportunities to hedge, and many policies that are not mutually exclusive which we should consider. Maintaining an export control policy, in particular, is not mutually exclusive with ongoing trade and cooperation. Since 1985, the Australia Group, a multilateral export control regime aimed at preventing the spread of chemical and biological weapons, has maintained controls on sensitive chemical and biological materials and equipment (including novel technologies such as nucleic acid assemblers), while China continues to be an important player in the global bioeconomy. Similarly, I am excited about Europe emerging as a new focal point for global AI safety governance, to ensure that the US has strong liberal allies with which to shape AI governance towards better futures in the event that it somehow manages to leverage an asymmetric advantage in superintelligence unilaterally.

Finally, where I agree with Dario Amodei is that China is an “authoritarian government that has committed human rights violations, has behaved aggressively on the world stage, and will be far more unfettered in these actions if they're able to match the US in AI”. I do not want this piece to be interpreted as apologism for the Chinese government. I believe that the proliferation of liberal democratic values could be critical for the long-term stability of mankind. However, I warn against naivety. Just after the end of the Cold War, Francis Fukuyama famously proclaimed the “end of history”, suggesting that social and technological development would drive societies toward liberal democracy and produce a permanent, stable world order. However, reality has shown us how easily democracies can backslide, how dictatorships can thrive in a liberalised global market economy, and how democracies can themselves engage in horrific human rights abuses and conflict. Liberal interventionism ultimately backfired and led to devastating consequences in regions like Iraq and Afghanistan, undermining both global stability and faith in democratic institutions.

Similarly, I think AGI realists are preaching the triumph of liberal democracy over authoritarian rule, as long as we nail this one critical juncture at which superintelligence is developed. However, we should be concerned that this drives us towards the need for military coercion, isolates the US from its allies, forecloses opportunities for productive governance, and inflames tensions in a manner that increases the risk of conflict and of catastrophic outcomes from autonomous AGI, all for outcomes that may be uncertain and unstable. Liberal domination may backfire and lead to devastating consequences for the world as a whole. We must challenge the uncritical attachment to AGI realism. There is a balance to be struck, and it begins with a holistic and grounded understanding of how we attain stable AI futures.

If you’ve made it this far, thank you! I put together this draft very quickly and published it before receiving any feedback, though my thoughts have been gathering for many months now. These takes are still evolving, so I’ll try to respond to comments and update my views accordingly where possible. For discussing this piece further with me, though, I’ll be most receptive at conrad.kunadu@politics.ox.ac.uk.

- ^

All TL;DRs are largely LLM-generated.

- ^

See World Bank data. As of 2 February 2025, US GDP is $27,720,709.00M compared to Afghanistan’s $23,547.18M.

- ^

Adjusted for inflation, this is just over half the cost of World War Two and more expensive than the likely cost of sustained ballistic missile defence in an all-out nuclear war. It seems entirely plausible that this price tag would have been higher than that of limited war, which is important because it flags that the decision to engage in limited war is not solely a matter of perceived cost-effectiveness.

- ^

Buzan, B., Wæver, O., & de Wilde, J. (1998). Security: A New Framework for Analysis. United Kingdom: Lynne Rienner Publishers.

- ^

Tannenwald, N. (1999). The Nuclear Taboo: The United States and the Normative Basis of Nuclear Non-Use. International Organization, 53(3), 433–468.

- ^

See the Waltz-Sagan debates for an explication of these points. A good source here is Sagan, S. D. (2013). The Spread of Nuclear Weapons: An Enduring Debate. New York: W. W. Norton & Co.

- ^

Baldwin, D.A. (1997). The concept of security. Review of International Studies, 23(1), 5–26. doi:10.1017/S0260210597000053

- ^

An article on this I particularly like is Tarrow, S. (2001). Transnational Politics: Contention and Institutions in International Politics. Annual Review of Political Science, 4, 1–20. https://doi.org/10.1146/annurev.polisci.4.1.1

- ^

A good source here is Wilson, J. D. (2019). A securitisation approach to international energy politics. Energy Research & Social Science, 49, 114–125. https://doi.org/10.1016/j.erss.2018.10.024.

- ^

- ^

Notable here is the originator of this theory, A. F. K. Organski. See Organski, A. F. K. (1958). World Politics. New York: Alfred A. Knopf; reviewed in American Political Science Review, 53(2), 587 (1959). doi:10.1017/S000305540023325X.

- ^

Waltz, K. N. (1964). “The stability of a bipolar world,” Daedalus, pp. 881-909.

- ^

Michael Beckley is the leading advocate here, but others include John Mearsheimer, Robert Kagan, Barry Posen, and Joseph Nye, who point to factors such as US military prowess and economic superiority, Chinese ownership of US debt, Chinese demographic problems, US superiority in R&D spending, and the absence of entrenched Chinese regional hegemony.

- ^

Mahmood, T. (1995). Nuclear Non-Proliferation Treaty (NPT): Pakistan and India. Pakistan Horizon, 48(3), 81–100. http://www.jstor.org/stable/41393530

- ^

There is some debate here, but this Wikipedia page is broadly reflective of the consensus. The Glorious Revolution in 1688 led to a period of British hegemony without much bloodshed in England (although with a great deal of bloodshed in Ireland and Scotland). Successive hegemons would include France after the French Revolution, Britain again after its defeat of France, and then the US, emerging as hegemon after the Cold War (which was, in fact, pretty hot).

- ^

Manela, E. (2007). The Wilsonian Moment: Self-Determination and the International Origins of Anticolonial Nationalism. Oxford; New York: Oxford University Press.

- ^

Namely Scott Sagan, Nina Tannenwald, Richard Lebow, Janice Stein, and Daniel Ellsberg among others.

- ^

Sagan, S. D. (1994). “The perils of proliferation: Organization theory, deterrence theory, and the spread of nuclear weapons,” International Security, 18(4), 66–107.

- ^

Ibid.

- ^

See Bunn, M., & Wier, A. (2006). Terrorist Nuclear Weapon Construction: How Difficult? The Annals of the American Academy of Political and Social Science, 607, 133–149. http://www.jstor.org/stable/25097844. They suggest that nuclear terrorism was unlikely but possible.

- ^

A good source here is Getchell, M. (2018). The Cuban Missile Crisis and the Cold War: A Short History with Documents (Indianapolis: Hackett); Chapter 9: “Evaluating the Leadership on All Sides of the Crisis”; Conclusion: “Lessons of the Cuban Missile Crisis”.

- ^

Ibid.

- ^

Mueller, J. (1988). “The essential irrelevance of nuclear weapons: Stability in the postwar world,” International Security, 13(2), 55–79.

- ^

For example, see Gries, P. H. (2005). China Eyes the Hegemon. Orbis, 49(3), 401–412. https://doi.org/10.1016/j.orbis.2005.04.013.

- ^

Li, L., Wang, K., Chen, Z., & Koplan, J. P. (2021). US–China health exchange and collaboration following COVID-19. The Lancet, 397(10291), 2304–2308. https://doi.org/10.1016/S0140-6736(21)00734-0

- ^

Hultman, L., Kathman, J., & Shannon, M. (2014). Beyond Keeping Peace: United Nations Effectiveness in the Midst of Fighting. The American Political Science Review, 108(4), 737–753. http://www.jstor.org/stable/44154190

- ^

Hegre, H., Hultman, L., & Nygård, H. M. (2019). Evaluating the Conflict-Reducing Effect of UN Peacekeeping Operations. The Journal of Politics. https://doi.org/10.1086/700203

- ^

Walter, B. F., Howard, L. M., & Fortna, V. P. (2021). The Extraordinary Relationship between Peacekeeping and Peace. British Journal of Political Science, 51(4), 1705–1722. doi:10.1017/S000712342000023X

- ^

Yan, X. (2010). The Instability of China–US Relations. The Chinese Journal of International Politics, 3(3), 263–292. https://doi.org/10.1093/cjip/poq009

- ^

Ibid.

I'm surprised nobody has commented yet, and want to say that I really enjoyed and largely agree with this piece. The logic of needing to accelerate an AGI arms race to stay ahead of China is deeply flawed in ways that mirror unfortunate pathologies in the US foreign policy community, and IMO worsens US national security, for many of the reasons you mention.

Two questions for you:

How politically feasible is it to advance messaging along these lines, given the incoming administration's tech optimism and zero-sum foreign policy mindset (and, indeed, the rare bipartisan consensus on hawkishness towards China)? I could see a lot of folks in the EA community saying "You're right, of course, but the train has left the station. We as a community lack the power to redirect policymakers' incentives and perceived interests on this issue any time soon, and the timelines are getting shorter, so we don't have time to try. Instead of marginalizing ourselves by trying to prevent an arms race that is by now inevitable and well underway, or pushing for collaborative international frameworks that MAGA has no interest in, it'd be more impactful to work within the existing incentives to slow down China and lobby for whatever marginal safety improvements we can."

Why did you label the views we disagree with "AGI realism"? Is that the preferred title of their advocates, or did you pick the word realism? I ask because I think much of the argument dramatizing the stakes of China getting this before us is linked with liberal internationalist mindsets that see the 21st century as a civilizational struggle between democracy and autocracy, and see AI as just one complicating wrinkle in that big-picture fight. Conversely, many of the voices calling for more restraint in US foreign policy (ex: abandoning hegemony and embracing multipolarity) call themselves realists, and see the path to peace as ensuring a stable and durable balance of power. So I think of it more as a debate between AI hawks and AI doves/restrainers, both of which could be either realists or something else.

Thank you!