Nick Bostrom is the founder of the Future of Humanity Institute at the University of Oxford.

He is an influential thinker on technology, ethics and the future. He is known for his work on existential risk, artificial intelligence and the ethics of human enhancement, among other areas.

This post is for people who have spent less than a day reading his work. It presents some of his key ideas and links to good places to start going deeper.

If some of these seem interesting, go read the papers. If many are interesting, I suggest you spend a weekend on his website.

This is an early draft. If readers like you suggest I should, then I may do more work on this in the autumn. Please share your thoughts and suggestions on the EA Forum thread or the Google doc. You can also tweet or email me.

Want a PDF, eBook or audio edition of this post and the linked papers? Register your interest here. If a bunch of people request this, I will try to make it happen.

Version: 0.1.0

Last updated: 2021-07-31

0. Summary

I've selected seven papers and two talks. Below is a list, plus a tweet-length takeaway for each:

1. The Future of Humanity

The next 10-10,000 years are somewhat predictable, but few people are seriously trying to understand what's on the cards, and what we might do about it. It is clear that technology will continue playing an important role.

2. The Fable of the Dragon-Tyrant

Scope insensitivity and status quo bias are two common sources of error. Sometimes, explicit reasoning and back-of-the-envelope estimates can help.

3. Humanity’s Biggest Problems Aren't What You Think They Are

Death is a tragedy that we struggle to fully see, because we have learnt to accept it as a fact of life. The risk of human extinction this century is large and underappreciated. Life could be so much better.

4. The Vulnerable World Hypothesis

As technology becomes more powerful, the process of innovation—largely driven by trial and error—generates increasingly catastrophic risks. We now have the ability to destroy civilisation, without the means to reliably avoid doing so.

5. Existential Risk Prevention as Global Priority

Common sense says: "First, don't die". Consequentialist philosophy says: "The future could be huge, so it matters a lot". Very few people are working on existential risk; one strand of the effective altruism movement is trying to fix that.

6. Crucial Considerations

Predicting the future is not impossible, but it is hard. The nascent field of global priorities research aims to identify more "crucial considerations" for people hoping to positively influence the long-term future.

7. The Ethics of Artificial Intelligence

AI is going to be a big deal. We are pretty uncertain about the conditions for consciousness, but we should probably assume they can arise on digital hardware, as well as biological. What happens if (or when) our AIs become capable of pleasure and suffering?

8. The Wisdom of Nature

Every step in an evolutionary process has to be adaptive, so there are places where an intelligent designer—namely us—can probably do better than natural selection. We should be more excited about transhumanism than we are.

9. Letter from Utopia

The future could be very big and very wonderful.

1. The Future of Humanity

As a topic of serious study, the long-term future of humanity is oddly neglected.

Long-term predictions are sometimes thought to be impossible. But, writes Bostrom:

The future of humanity need not be a topic on which all assumptions are entirely arbitrary and anything goes. There is a vast gulf between knowing exactly what will happen and having absolutely no clue about what will happen.

For example, we can be confident that technology will remain a central part of the human story:

Most differences between our lives and the lives of our hunter-gatherer forebears are ultimately tied to technology, especially if we understand “technology” in its broadest sense, to include not only gadgets and machines but also techniques, processes, and institutions. In this wide sense we could say that technology is the sum total of instrumentally useful culturally-transmissible information. Language is a technology in this sense, along with tractors, machine guns, sorting algorithms, double-entry bookkeeping, and Robert’s Rules of Order.

[...]

Technological change is in large part responsible for many of the secular trends in such basic parameters of the human condition as the size of the world population, life expectancy, education levels, material standards of living, and the nature of work, communication, health care, war, and the effects of human activities on the natural environment. Other aspects of society and our individual lives are also influenced by technology in many direct and indirect ways, including governance, entertainment, human relationships, and our views on morality, mind, matter, and our own human nature. One does not have to embrace any strong form of technological determinism to recognize that technological capability – through its complex interactions with individuals, institutions, cultures, and environment – is a key determinant of the ground rules within which the games of human civilization get played out.

Zooming out on human history, we see that the pace of technological development recently began increasing rapidly:

If we compress the time scale such that the Earth formed one year ago, then Homo sapiens evolved less than 12 minutes ago, agriculture began a little over one minute ago, the Industrial Revolution took place less than 2 seconds ago, the electronic computer was invented 0.4 seconds ago, and the Internet less than 0.1 seconds ago – in the blink of an eye.
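The compression in this quote is simple proportional scaling, and it can be checked in a few lines. The sketch below assumes an Earth age of about 4.54 billion years. The event ages are rough round numbers chosen for illustration (they are not taken from Bostrom's paper), so treat the outputs as approximate.

```python
# Map "years ago" onto a timeline in which Earth's whole history lasts one year.
EARTH_AGE_YEARS = 4.54e9          # assumed age of the Earth
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def compressed_seconds(years_ago: float) -> float:
    """Seconds before "now" on the compressed one-year timeline."""
    return years_ago / EARTH_AGE_YEARS * SECONDS_PER_YEAR


# Rough, illustrative ages in years (assumptions, not figures from the paper).
events = {
    "agriculture": 10_000,
    "Industrial Revolution": 250,
}

for name, years_ago in events.items():
    print(f"{name}: {compressed_seconds(years_ago):.1f} compressed seconds ago")
```

With these inputs, agriculture lands a little over a minute ago and the Industrial Revolution under two seconds ago, in line with the quote.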

Less than 80 years ago, nuclear weapons gave a few world leaders the power to more or less destroy civilisation. In the next 80 years, biotechnology, nanotechnology and artificial intelligence seem poised to grant us still greater capabilities, and to reshape the human condition yet more profoundly. For this reason, it seems plausible that events in the 21st century could have an unusually large influence on the long-term future of our civilisation.

The paper considers four scenarios for humanity’s long-term future: extinction, recurrent collapse, plateau, and posthumanity.

2. The Fable of the Dragon-Tyrant

When faced with a terrible situation that we cannot change, one response is to cultivate serenity and acceptance. In so doing, we stop seeing the situation as terrible, and start to tell stories about why it is normal, natural, perhaps even good.

Such stories are consoling. But they involve blindness and forgetting. If conditions change, and we get a new chance to remedy the terrible situation, our stories may become a grave hindrance: we will have to re-learn to see the situation as terrible. In the meantime, we may actively defend a terrible status quo as if it were good.

Technological developments give us surprising new abilities to improve the human condition. We should cautiously but urgently explore them, and be wary of consoling stories that blind us to normalised-but-terrible features of the status quo.

See also:

- The Reversal Test: Eliminating Status Quo Bias in Applied Ethics Imagine we found out that adding chlorine to the water supply has the unexpected side-effect of raising average human intelligence by 20%. Would we want to replace the chemical with one that did not have this effect?

- Wikipedia: Scope neglect Our emotional range is quite limited. The death of 100,000 strangers does not feel 100x worse than the death of 100 strangers, even though it is. For reasons like this, we must be careful about relying on intuitive judgements to tell us what matters in the modern world. Intuitions are always crucial at some level, but sometimes, the back of an envelope is an important tool. Sometimes, we need to shut up and multiply.

- Julia Galef: The Scout Mindset

3. Humanity's Biggest Problems Aren't What You Think They Are

Feel like a video? Try this vintage Bostrom TED talk.

The three problems Bostrom highlights are...

- Humans die

- Humanity might go extinct soon, or otherwise fall far short of our potential

- In general, life is much less good than it could be

Bostrom did standup comedy in his youth, and I think that comes through in the talk. For more biography, see his profiles in The New Yorker or Aeon. For interviews, try Chris Anderson or Lex Fridman.

Warning #1: In this video, and in many of his papers, Bostrom does back-of-the-envelope calculations that might seem dubious (e.g. he uses one book to represent the amount of knowledge lost when a person dies). He's doing Fermi estimates—a method that, employed carefully, can be surprisingly good at getting answers that are "roughly right" (to a few orders of magnitude) from very limited information. For a primer on Fermi estimation, see here and here.

Warning #2: Like many academics, Nick Bostrom strikes some people as a bit of a strange guy. One common misconception is that he is a hardcore utilitarian. He is actually more of a pluralist, who takes moral uncertainty seriously, and sees utilitarianism as one among many useful frameworks for thinking about the future.

4. The Vulnerable World Hypothesis

Warning #3: This is where things start getting scary. Generally it is wise to be suspicious of people who present ideas that are big, new and scary, and to take your time to evaluate them. If these ideas are new to you, I encourage you to sit with them for some weeks or months before you consider acting on them. It is often difficult to develop a good relationship with big ideas. Take your time, go easy on yourself, and if you're feeling bad, see the self-care tips at the bottom of this post.

One way of looking at human creativity is as a process of pulling balls out of a giant urn. The balls represent possible ideas, discoveries, technological inventions. Over the course of history, we have extracted a great many balls – mostly white (beneficial) but also various shades of gray (moderately harmful ones and mixed blessings). The cumulative effect on the human condition has so far been overwhelmingly positive, and may be much better still in the future (Bostrom, 2008). The global population has grown about three orders of magnitude over the last ten thousand years, and in the last two centuries per capita income, standards of living, and life expectancy have also risen.

What we haven’t extracted, so far, is a black ball: a technology that invariably or by default destroys the civilization that invents it. The reason is not that we have been particularly careful or wise in our technology policy. We have just been lucky.
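To see why luck alone is a fragile safeguard, here is a toy model of the urn. It assumes every draw independently has the same small chance of being a black ball. The probability `p_black` and the numbers of draws are hypothetical values chosen for illustration, not estimates from the paper.

```python
def survival_probability(p_black: float, draws: int) -> float:
    """Chance of drawing no black ball in `draws` independent draws."""
    return (1.0 - p_black) ** draws


# Hypothetical numbers: a 1% chance of a black ball on each draw.
for draws in (10, 100, 500):
    print(draws, round(survival_probability(0.01, draws), 3))
```

Even a small per-draw risk compounds: under these assumptions, the chance of staying lucky falls steadily as more balls are drawn.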

The paper describes four ways that a civilisation like ours could be vulnerable to new technological capacities:

1. Easy nukes—If destructive capabilities become cheap and widely accessible, how long would we last? Nuclear weapons have remained expensive, and their raw ingredients hard to source. When these weapons were first designed, it was not obvious that this would be the case.

2. Safe first strike—New weapons may seem to grant a dominant advantage to the first attacker, and therefore give governments an overwhelming incentive to attack. This could lead to stable totalitarianism, or to all-out nuclear war.

3. Worse global warming—New capabilities may cause a collective action problem that we cannot solve fast enough. It might have been the case that the earth's climate was several times more sensitive to CO2 concentration than it actually is, such that a severe runaway process was already locked in before we could coordinate to avoid it.

4. Surprising strangelets—Science experiments might go wrong. Before the first nuclear weapon test, Edward Teller raised credible concerns that the detonation might ignite the atmosphere. Calculations suggested this would not happen, but the possibility of model error was real. A few years later, a significant model error meant that the first thermonuclear detonation created a much bigger explosion than expected, destroying test equipment and poisoning civilians downwind.

The paper does not put explicit probabilities on scenarios such as these coming to pass—it just suggests that they seem like realistic possibilities, and therefore worthy of attention. When people do try to put numbers on it, they rarely put total risk this century at less than 10%. These "order of magnitude" estimates should be taken with a very large pinch of salt (actually maybe a shovel's worth). The important point is that it seems hard to argue that the probability is small enough to be reasonably ignored [1].

Bostrom asks us to consider measures that could reduce the odds of drawing a black ball. As a broad strategy, he suggests:

Principle of Differential Technological Development: Retard the development of dangerous and harmful technologies, especially ones that raise the level of existential risk; and accelerate the development of beneficial technologies, especially those that reduce the existential risks posed by nature or by other technologies.

He also suggests that we may, ultimately, need to significantly change the world order. He highlights three features of our current "semi-anarchic default condition":

Limited capacity for preventive policing. States do not have sufficiently reliable means of real-time surveillance and interception to make it virtually impossible for any individual or small group within their territory to carry out illegal actions – particularly actions that are very strongly disfavored by > 99 per cent of the population.

Limited capacity for global governance. There is no reliable mechanism for solving global coordination problems and protecting global commons – particularly in high-stakes situations where vital national security interests are involved.

Diverse motivations. There is a wide and recognizably human distribution of motives represented by a large population of actors (at both the individual and state level) – in particular, there are many actors motivated, to a substantial degree, by perceived self-interest (e.g. money, power, status, comfort and convenience) and there are some actors (‘the apocalyptic residual’) who would act in ways that destroy civilization even at high cost to themselves.

If the Vulnerable World Hypothesis is true, then so long as these conditions persist—and we have ultra-powerful technology—it seems humanity will face significant risk of ruin.

With the development of nuclear weapons, it seems like we have entered a "time of perils", where we have the ability to cause global catastrophe, but not the ability to reliably avoid doing so.

5. Existential Risk Prevention as Global Priority

Warning #4: The Universe is really big.

Warning #5: The first part of this section assumes that we or our descendants could engage in significant space settlement. If you have doubts about that, just keep going for now. We'll come back down to Earth in a moment.

In Astronomical Waste (2003), Bostrom notes that, on the face of it, the long-term aim of a standard consequentialist should be to accelerate technological development as quickly as possible, to the point where we can build flourishing civilisations throughout the galaxy, and beyond.

Given the size of the Universe, the opportunity cost of delaying space settlement is—even with the most conservative assumptions—astronomical. If we consider just the matter in the Virgo Supercluster, then for every second we delay, the potential for one hundred trillion (biological) human beings is lost.

...

...

...

...

...

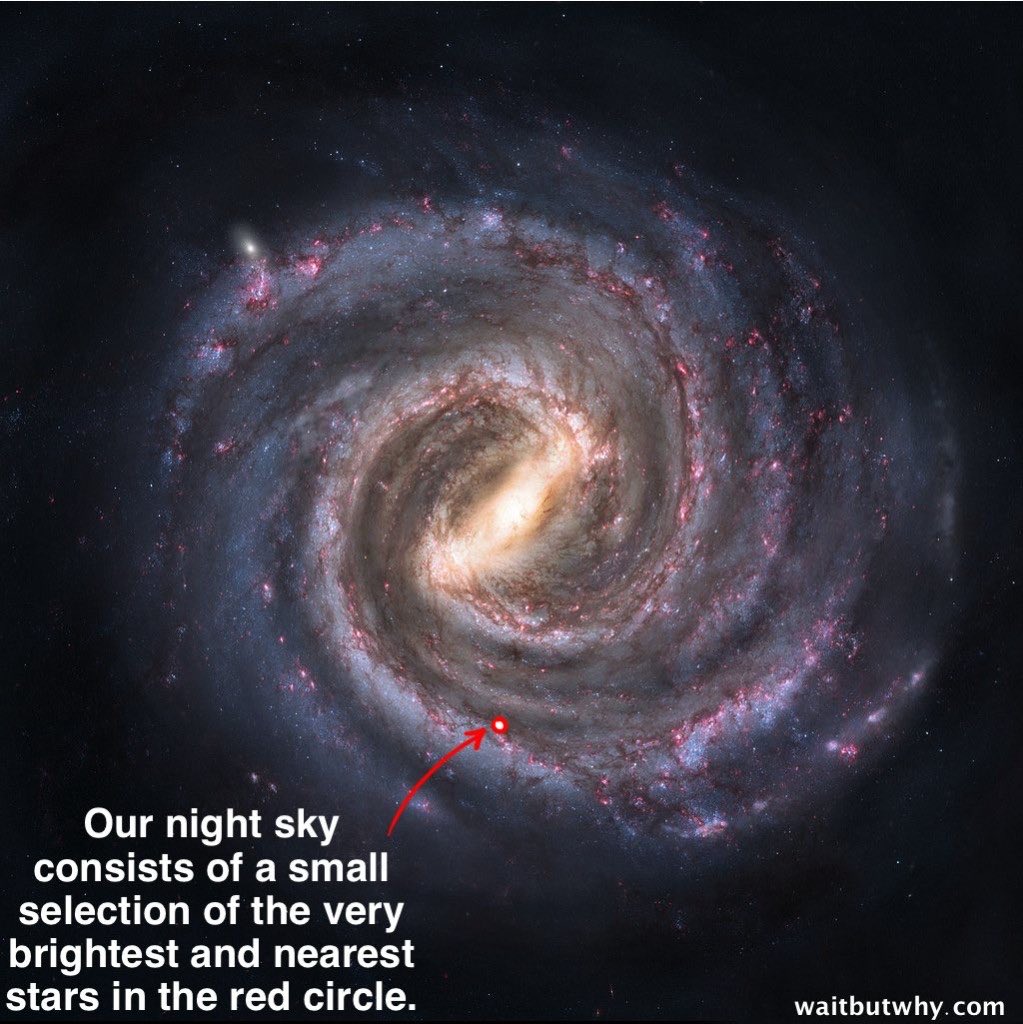

Remember: The Universe is really big. When we gaze at the night sky, the stars we see are just a tiny fraction of those in the Milky Way.

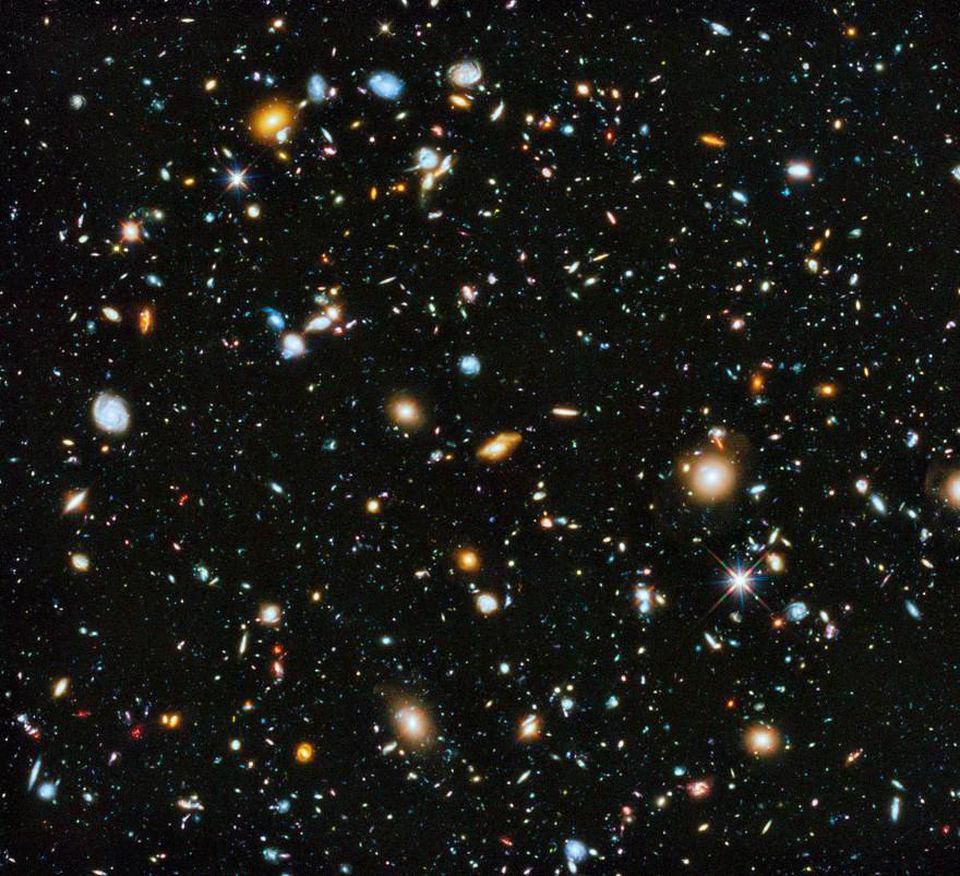

And the Milky Way, well, it's just one among several hundred billion galaxies in the observable universe:

...

...

...

...

...

Here are some natural responses to this line of thinking:

1. Is it technologically possible to spread to the stars, let alone between galaxies?

2. What about that vulnerable world thing? Won't we wipe ourselves out before we get anywhere close to this?

3. I'm just trying to live a nice life and not hurt anyone and make some people smile along the way. Why should I care about any of this? This isn't... a human-sized thing. I just care about my children, and maybe their children too on a good day. If I really took this on board... I don't even know. Someone else should think about this. Someone else is thinking about this, right? Or wait... maybe no one should think about this, maybe we should just let the future take care of itself...

4. This kind of thinking seems dangerous. What horrors could powerful humans rationalise in the name of vast, long-term stakes such as these?

On (1), let's keep assuming space settlement is possible, for now. I'll come back to this.

On (2): Bostrom is with you. Indeed, he thinks that a standard consequentialist should prioritise safety over speed:

Because the lifespan of galaxies is measured in billions of years, whereas the time-scale of any delays that we could realistically affect would rather be measured in years or decades, the consideration of risk trumps the consideration of opportunity cost. For example, a single percentage point of reduction of existential risks would be worth (from a utilitarian expected utility point-of-view) a delay of over 10 million years. Therefore, if our actions have even the slightest effect on the probability of eventual colonization, this will outweigh their effect on when colonization takes place.
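One simplified way to reconstruct the arithmetic in this passage: treat the value of the future as proportional to the probability of getting there multiplied by how long it lasts. A delay then costs a fraction of the total equal to the delay divided by the duration, while a one-percentage-point cut in risk gains about 1% of the total. The function name and the one-billion-year horizon below are assumptions made for illustration (the low end of "billions of years"), not figures taken from the paper.

```python
def break_even_delay_years(risk_reduction_points: float, horizon_years: float) -> float:
    """Delay whose cost equals the gain from the given risk reduction.

    Simplified model: a delay of d years forfeits d / horizon of the total
    value, and each percentage point of risk reduction adds 1% of it.
    """
    return horizon_years * risk_reduction_points / 100


# Assumed horizon: one billion years.
print(break_even_delay_years(1, 1e9))
```

With a one-billion-year horizon the break-even delay is ten million years. Longer horizons push it higher, which is consistent with the "over 10 million years" in the quote.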

In short: for a standard consequentialist, "minimise existential risk" seems like a good starting maxim.

In fact, an argument like this goes through even if you're more into deontology or virtue ethics than consequentialism: all plausible value systems care about what happens to some degree. Insofar as you care about what happens, you're probably going to end up with "reduce existential risk" near the top of your priority list. It just may not seem like quite such an overwhelming priority to you as it does for the consequentialist.

This assumes you care at least a bit about the welfare of future generations. A surprisingly common response is to say "I don't care". The reason is probably that our moral intuitions did not evolve to guide us on "large-scale" questions like this. After a bit of reflection, most people come to agree that total indifference to future generations is not a plausible or attractive view [2].

Crikey.

Yes.

Let's forget about the moral philosophy and the astronomical stakes stuff for a bit. Common sense tells us that whatever we're up to, the first order of business is: "Don't die!" We can think of the Vulnerable World Hypothesis as saying: "Eek, our innovations might kill us". So we can put these thoughts together and say: "OK, let's see if we can reduce the chance of our innovations killing us".

Abstract philosophical arguments may help us improve our judgements about absolute importance and in turn help us make better tradeoffs. But at current margins, we probably don't need a lot more philosophy to know what direction we should tack. If we assume that catastrophic and existential risks are high, and that there may be realistic ways to reduce them, then clearly humanity should take a look at that, at least to the tune of (say) millions or billions of dollars of investment per year, globally.

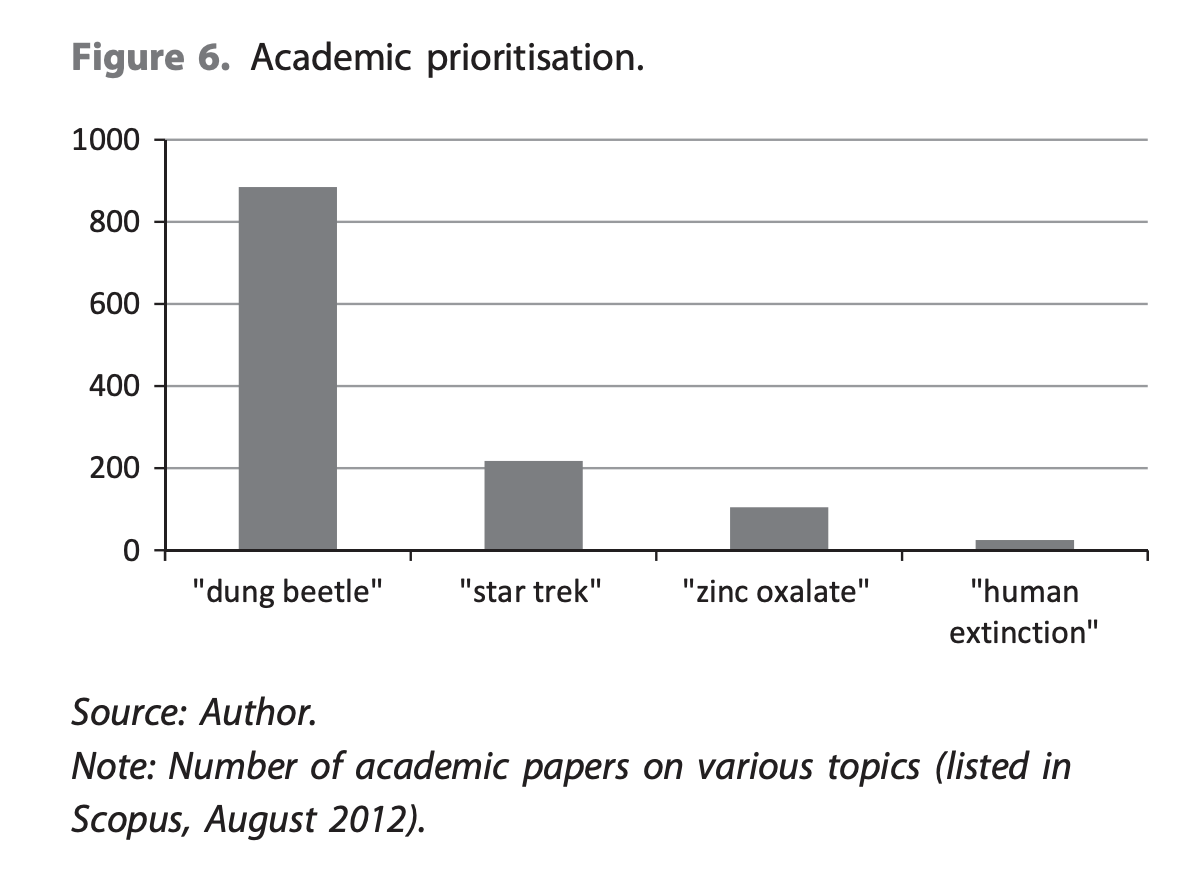

So we have lots of our best and brightest working on this, right? Well, when Bostrom wrote the paper, there were about 10 times more academic papers on the topic of Star Trek than on human extinction. And our global spend on these issues—pretty much however you define it—was far, far lower than the amount we spend on ice cream [3].
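For a sense of scale, the two estimates quoted in footnote 3 can be compared directly. The sketch below uses only those two figures. The variable names are made up for illustration, and the spending estimate is an order-of-magnitude guess, so the ratio is rough.

```python
# Estimates quoted in footnote 3 (US dollars per year).
ICE_CREAM_MARKET = 60e9            # global ice cream market, 2018
EXISTENTIAL_RISK_SPENDING = 100e6  # order-of-magnitude estimate

ratio = ICE_CREAM_MARKET / EXISTENTIAL_RISK_SPENDING
print(f"Ice cream spending is roughly {ratio:.0f}x existential risk spending")
```

On these figures, the world spends several hundred times more on ice cream than on reducing existential risk.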

So... it seems like there may be some low-hanging fruit here. One major focus of the effective altruism movement has been to persuade more people to seek out and work on such opportunities.

At a high level, much of this is kinda obvious: Let's try to avoid a great power war, dial up our pandemic preparedness, improve our cybersecurity—and so on. But the world is complicated, so actually promoting these ends is hard. Still worse: some sources of risk may currently have the status of "unknown unknowns".

Why don't we just try to slow down—or even stop or reverse—technological progress? In short: this option seems unacceptably costly—for example, it would presumably mean asking much of the world to remain susceptible to famine, drought, and easily preventable diseases. It also seems politically impossible—competitive nation states face strong incentives to gain technological advantage.

Remember, as a big-picture strategy, Bostrom suggested:

Principle of Differential Technological Development: Retard the development of dangerous and harmful technologies, especially ones that raise the level of existential risk; and accelerate the development of beneficial technologies, especially those that reduce the existential risks posed by nature or by other technologies.

This seems kinda promising. But the devil will be in the details:

- It is hard to tell which technologies will be especially safety promoting.

- It is unclear what effect general economic growth has on total risk. Some arguments suggest that greater wealth means greater investment in safety, so a shorter time of perils, while others dispute that.

- Some people think that risk is concentrated in critical transition moments, and that it'd be good to have more time to prepare for these. (See discussion here.)

- Some people (e.g. David Deutsch) think that pessimism about technology could itself pose a grave risk to the future of civilisation. This suggests that people who promote a concern for extreme risks should also take care to avoid reinforcing unhelpful attitudes of general pessimism, suspicion or hostility towards innovation.

- Circling back to (4), above: High stakes and poor feedback loops mean we should be on guard against rationalisation and fanaticism. Passionate but misguided attempts to avoid catastrophes could conceivably be as catastrophic as the misguided attempts to create utopias in the 20th century. Error-correcting institutions and proper handling of moral uncertainty may help us avoid own-goals.

So yes... more research is needed!

Chris Brass, an infamous naive utilitarian, showing that good intentions are not enough.

See also:

- Pascal's Mugging

- Astronomical Waste: The Opportunity Cost of Delayed Technological Development

- What are the key steps the UK should take to maximise its resilience to natural hazards and malicious threats?

6. Crucial Considerations

Imagine you are navigating a forest with a compass. After a while, you realise your compass is broken, such that the needle is pointing in random directions rather than magnetic North.

With this discovery, you now completely lose confidence in all the earlier reasoning that was based on trying to get the more accurate reading of where the needle was pointing. [...] The idea is that there could be similar types of consideration in more important contexts [than orienteering], that throw us off completely. So a crucial consideration is a consideration such that if it were taken into account, it would overturn the conclusions we would otherwise reach about how we should direct our efforts, or an idea or argument that might possibly reveal the need not just for some minor course adjustment in our practical endeavors, but a major change of direction or priority.

Within a utilitarian context, one can perhaps try to explicate it as follows: a crucial consideration is a consideration that radically changes the expected value of pursuing some high-level subgoal. The idea here is that you have some evaluation standard that is fixed, and you form some overall plan to achieve some high-level subgoal. This is your idea of how to maximize this evaluation standard. A crucial consideration, then, would be a consideration that radically changes the expected value of achieving this subgoal.

We can imagine a researcher doing gain-of-function research to help us prepare for future pandemics. After learning more about the history of research laboratory leaks, they might come to believe that their research actually increases the risk of a severe pandemic. If that's correct, they may conclude that their locally excellent work is actually harmful in expectation.
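The gain-of-function example can be sketched as an expected-value calculation in which one new consideration flips the sign of the result. Every number here is hypothetical and in arbitrary units. The point is only the structure of the reasoning, not any real estimate of the risks or benefits of such research.

```python
def expected_value(benefit: float, p_leak: float, leak_harm: float) -> float:
    """Expected value of a project: its benefit minus the expected harm of a leak."""
    return benefit - p_leak * leak_harm


BENEFIT = 10.0        # hypothetical value of better pandemic preparedness
LEAK_HARM = 10_000.0  # hypothetical harm if a lab leak causes a pandemic

# Before the crucial consideration: leak risk is ignored.
before = expected_value(BENEFIT, p_leak=0.0, leak_harm=LEAK_HARM)
# After: a small (hypothetical) leak probability is taken into account.
after = expected_value(BENEFIT, p_leak=0.01, leak_harm=LEAK_HARM)

print(before, after)
```

Under these made-up numbers the work looks positive until the leak risk is included, at which point it becomes negative in expectation. That sign flip is what makes a consideration "crucial" rather than a minor adjustment.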

Bostrom also introduces the concept of a "deliberation ladder", a disorientating process of deliberation in which crucial considerations that point in different directions are discovered in sequence. At some point, our enquiry must stop, but it is often unclear when stopping is warranted. In practice, we usually just stop when we run out of steam.

What's the upshot here? Some think that, fundamentally, there's not much we can do—we fairly quickly hit a point where we just have to cross our fingers and hope for the best. Others are more optimistic about the returns to research and strategic thinking. On the latter view, we should make the study of the future a high-status and well-resourced field that attracts many of the best minds of each generation. Bostrom is more on the latter side of this debate, and he has made big progress on actually making this happen—most notably by founding the Future of Humanity Institute at Oxford. This was soon followed by the Cambridge Centre for the Study of Existential Risk, the Future of Life Institute and the Global Priorities Institute.

See also:

- Hilary Greaves on cluelessness.

- Will MacAskill on moral uncertainty, utilitarianism & how to avoid being a moral monster

- The Unilateralist's Curse: The Case for a Principle of Conformity

7. The Ethics of Artificial Intelligence

Sadly I'm out of time to narrate this one. In short: AI is likely to be a very big deal, and a source of many hard-to-see crucial considerations. It also seems to present a significant existential risk.

Bostrom's summary:

Overview of ethical issues raised by the possibility of creating intelligent machines. Questions relate both to ensuring such machines do not harm humans and to the moral status of the machines themselves.

See also:

- Sharing the World with Digital Minds

- How Hard is Artificial Intelligence? Evolutionary Arguments and Selection Effects

- Superintelligence: Paths, Dangers, Strategies

8. The Wisdom of Nature: An Evolutionary Heuristic for Human Enhancement

Bostrom's summary:

Human beings are a marvel of evolved complexity. Such systems can be difficult to enhance. Here we describe a heuristic for identifying and evaluating the practicality, safety and efficacy of potential human enhancements, based on evolutionary considerations.

See also:

- Smart Policy: Cognitive Enhancement and the Public Interest

- Human Enhancement Ethics: The State of the Debate

- Embryo Selection for Cognitive Enhancement: Curiosity or Game-changer?

- The Transhumanist FAQ

- Human Genetic Enhancements: A Transhumanist Perspective

- Cognitive Enhancement: Methods, Ethics, Regulatory Challenges

- Dignity and Enhancement

- The Future of Human Evolution

9. Letter from Utopia

Bostrom's question:

The good life: just how good could it be?

Bostrom's answer:

Have you ever experienced a moment of bliss? On the rapids of inspiration maybe, your mind tracing the shapes of truth and beauty? Or in the pulsing ecstasy of love? Or in a glorious triumph achieved with true friends? Or in a conversation on a vine-overhung terrace one star-appointed night? Or perhaps a melody smuggled itself into your heart, charming it and setting it alight with kaleidoscopic emotions? Or when you prayed, and felt heard?

If you have experienced such a moment – experienced the best type of such a moment – then you may have discovered inside it a certain idle but sincere thought: “Heaven, yes! I didn’t realize it could be like this. This is so right, on whole different level of right; so real, on a whole different level of real. Why can’t it be like this always? Before I was sleeping; now I am awake.”

Yet a little later, scarcely an hour gone by, and the ever-falling soot of ordinary life is already covering the whole thing. The silver and gold of exuberance lose their shine, and the marble becomes dirty.

Always and always: soot, casting its pall over glamours and revelries, despoiling your epiphany, sodding up your finest collar. And once again that familiar numbing beat of routine rolling along its familiar tracks. Commuter trains loading and unloading passengers… sleepwalkers, shoppers, solicitors, the ambitious and the hopeless, the contented and the wretched… human electrons shuffling through the circuitry of civilization, enacting corporate spreadsheets and other such things.

We forget how good life can be at its best, and how bad at its worst. The most outstanding occasion: it is barely there before the cleaners move in to sweep up the rice and confetti. “Life must go on.” And to be honest, after our puddles have been stirred up and splashed about for a bit, it is a relief when normalcy returns. Because we are not built for lasting bliss.

And so, the door that was ajar begins to close, and so the sliver of hope wanes, until nothing remains but a closed possibility. And then, not even a possibility. Not even a conceivability.

Quick, stop that door from closing! Shove your foot in so it does not slam shut.

And let the faint draught of the beyond continue to whisper of a higher state. Feel it on your face, the tender words of what could be!

Joseph Carlsmith also writes about futures we could wholeheartedly wish for:

- On future people, looking back at 21st century longtermism

- Actually possible: thoughts on Utopia

- Alienation and meta-ethics (or: is it possible you should maximize helium?)

- Creating Utopia (interview with Gus Docker)

10. Next steps

If some of these seem interesting, go read the papers. If many are interesting, I suggest you spend a weekend on Bostrom's website.

If you want to develop or try to act on these ideas, check out the effective altruism community, the progress studies community, and the websites 80,000 Hours and LessWrong.

Did you feel bad after reading this piece?

If you take these ideas seriously, some aspects of Bostrom's "high stakes worldview" can be hard to handle. His papers should probably carry a label: "may trigger anxiety, a sense of powerlessness, or other difficult emotions".

If you find yourself feeling bad while reflecting on these ideas, the first thing I'd say is: on some level, that's a valid response. Some of these ideas are big, vertiginous, and kind of scary. Ultimately though, the question to focus on is: are your emotions helping, or hindering, an appropriate response?

It often takes people years of practice to find a way to relate to ideas like these that preserves a sense of agency, optimism, freedom and gratitude, while also keeping a sense that there is urgent and important work to be done, and that one should consider making a serious effort to pitch in. This is a tricky balance to strike. If you didn't already, start by giving serious attention to the art of self-care—especially sleep, exercise and social connections.

Would you recommend this post to a friend? How could I improve it?

This is an early draft. Please share thoughts and suggestions on the EA Forum thread or the Google doc. You can also tweet or email me.

If you want to do me a 10-second favour, please just tell me: would you recommend this post to a friend?

In a future version, I hope to add:

- Notable responses, criticisms, and alternative takes

- Frequently Asked Questions. (If you have a question you'd like me to include, please get in touch!)

- Better advice on self-care / how to integrate these ideas

- More jokes

[1] It might be the case that existential risk is high, but there's very little we can do about it. If that were true, then we should presumably focus on other things.

[2] In fact, many philosophers who consider the question end up saying that, once we discount for risk, welfare today is just as good as welfare tomorrow.

[3] Borrowing a footnote from Toby Ord: "The global ice cream market was estimated at $60 billion in 2018 (IMARC Group, 2019), or ~0.07% of gross world product (World Bank, 2019a). Precisely determining how much we spend on safeguarding our future is not straightforward. I am interested in the simplest understanding of this: spending that is aimed at reducing existential risk. With that understanding, I estimate that global spending is on the order of $100 million."