This is a Draft Amnesty Week draft. It may not be polished, up to my usual standards, fully thought through, or fully fact-checked.

Commenting and feedback guidelines: This is a Forum post that I wouldn't have posted without the nudge of Draft Amnesty Week. It's my first Forum post after years of lurking, and rough enough that only in the waning hours of Draft Amnesty Week am I sliding it in. Fire away! (But be nice, as usual.)

Are the best looking interventions from the noisiest classes, and can we trust our estimates of effectiveness in such cases?

Background and my question

Over the last decade, the EA community seems to have become increasingly excited about causes and charities with highly uncertain impact: think existential risk reduction, animal welfare for wild and/or small and/or future animals, super long term longtermism, etc.

While I'm sympathetic to high uncertainty causes - I work at an EA-aligned charity (opinions and errors my own) trying to mitigate biosecurity tail risks - I'm also more and more worried that in high uncertainty fields, it's really hard to tell "is good" from "looks good." Slightly more specifically, an observer's estimate of a charity's "goodness" (cost effectiveness, impact, expected value, substitute whatever word makes sense in your moral framework) is a combination of

- the actual goodness of that charity

- how "lucky" the charity gets in aligning with the observer's biases and blind spots

  - Biases and blind spots aren't necessarily blameworthy! We might have a bias for not wanting conscious beings to suffer and be blind to totally unpredictable things that will happen tomorrow.

As an observer, you don't directly observe goodness, but rather this complicated and unknown interaction. In simple cases involving low uncertainty fields, this might not be such a problem; for example, with some work and research I could probably get a decent sense of the goodness of my local food bank, or at least reasonable bounds thereof.

But in high uncertainty fields, it's much harder. Charities with the highest plausible impact almost inevitably must be in fields subject to uncertainty or disagreement. Big expected impact requires at least one of:

- integrating effects into the far future

- affecting a huge number of individuals (e.g. insects) of uncertain moral standing

- non-obvious moral theories

- multiplying very large numbers by very small numbers.

It's hard to know things about the future, or non-human minds, or to estimate small probabilities and large quantities well. In these cases, we inevitably use heuristics. So suppose you're an observer evaluating some charity, you do your best evaluation, and find that the charity has a 0.01% chance of saving 1e13 human lives [or equivalent, again substitute your preferred moral framework] for an expected value of 1e9 human lives. Incredible! But upon reflection, you consider that this expected impact relies on 1e13 human lives existing in the moderate term future (which you're uncertain about), and 0.01% is worryingly close to zero (maybe your assumptions are wrong?). Maybe the charity isn't actually so incredible after all, and it just got lucky in aligning with how you estimate value?[1]
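To make the arithmetic above concrete, here is a minimal Python sketch. The baseline inputs (a 0.01% chance, 1e13 lives) are the ones from the example; the alternative scenarios are hypothetical, chosen only to show how quickly the headline number moves when either input is off.

```python
def expected_lives(p_success: float, lives_if_success: float) -> float:
    """Expected value = probability of success x lives saved on success."""
    return p_success * lives_if_success


# Baseline from the example: 0.01% chance of saving 1e13 lives.
baseline = expected_lives(1e-4, 1e13)

# HYPOTHETICAL alternative inputs, for illustration only.
scenarios = {
    "baseline": (1e-4, 1e13),
    "probability 100x too optimistic": (1e-6, 1e13),
    "future population 1000x smaller": (1e-4, 1e10),
    "both at once": (1e-6, 1e10),
}

for label, (p, n) in scenarios.items():
    print(f"{label}: {expected_lives(p, n):.1e} expected lives")
```

The estimate is a product, so errors in the two inputs multiply: being wrong on both takes the result down by five orders of magnitude, not two or three.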

If the charities and interventions with the highest goodness are found in domains where huge uncertainty is inevitable, how can we trust our estimate that a particular charity is really good? Hence, a question: how do you recommend thinking about effectiveness in highly uncertain areas like existential risk reduction, longtermism, non-human minds, etc.?

Toy simulation

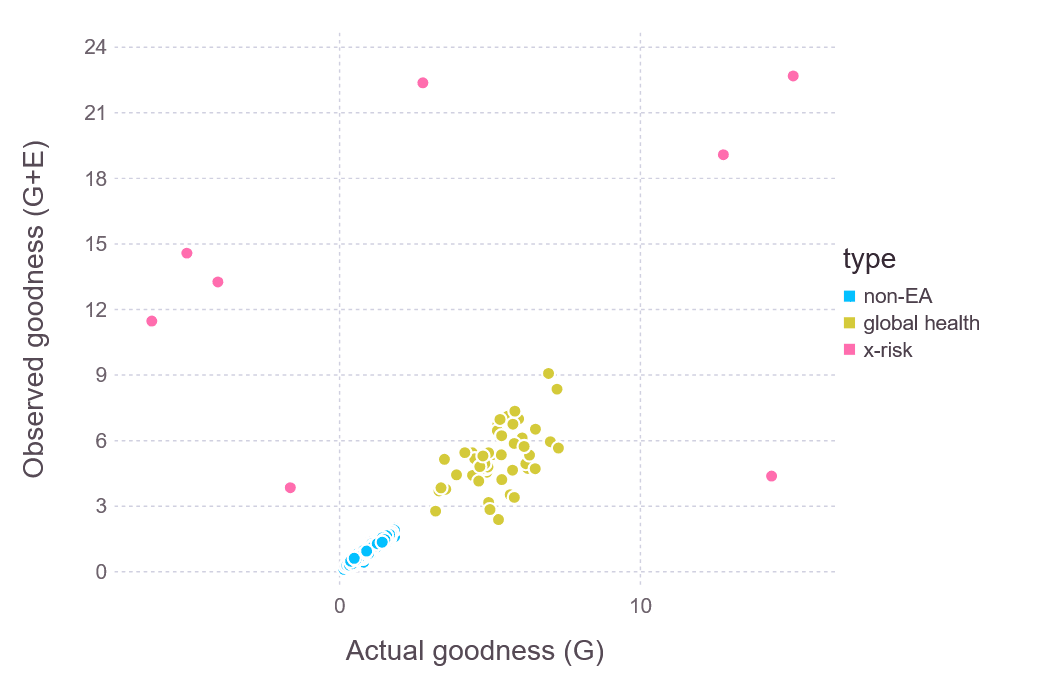

To build some intuition I ran a tiny super-toy simulation where each charity has an actual goodness and a measurement error, which add up to its observed goodness. Since this is just an intuition pump, I made all random variables normal.[2] Consider three types of charities:

- 1000 "non-EA" charities with and

  - I'm being terribly unjust by using "non-EA" as the shorthand label here; plenty of non-EA charities are doing great work, and surely some EA charities are not.

- 50 "Global health" charities with and

- 20 "x-risk" charities with and , i.e. super high variance in both actual quality and measurement error
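A simulation of this kind can be sketched in a few lines of Python. This is not the code behind the figure: the group sizes (1000, 50, 20) are from the list above, but every mean and standard deviation below is a hypothetical placeholder, and the names `GROUPS` and `simulate` are made up for illustration.

```python
import random

# (group label, number of charities, mean actual goodness,
#  sd of actual goodness, sd of measurement error)
# The counts come from the post. Every mean and standard deviation is a
# HYPOTHETICAL placeholder, not the post's actual parameters.
GROUPS = [
    ("non-EA", 1000, 1.0, 0.5, 0.1),
    ("global health", 50, 5.0, 2.0, 1.0),
    ("x-risk", 20, 0.0, 10.0, 30.0),
]


def simulate(groups, seed=0):
    """Draw actual goodness and measurement error; observed = actual + error."""
    rng = random.Random(seed)  # local RNG so the sketch is reproducible
    charities = []
    for label, n, mean_g, sd_g, sd_err in groups:
        for _ in range(n):
            actual = rng.gauss(mean_g, sd_g)
            error = rng.gauss(0.0, sd_err)
            charities.append(
                {"group": label, "actual": actual, "error": error,
                 "observed": actual + error}
            )
    return charities


charities = simulate(GROUPS)

# Rank by what an evaluator sees (observed), then look at what is underneath.
by_observed = sorted(charities, key=lambda c: c["observed"], reverse=True)
for rank, c in enumerate(by_observed[:6], start=1):
    print(f"#{rank} {c['group']:>13}  observed={c['observed']:7.2f}  "
          f"actual={c['actual']:7.2f}")
```

Changing `seed` or the placeholder standard deviations shows how often the top of the observed ranking is dominated by the noisiest group.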

Here's a visual summary of results:

The best looking charity (top right) is in fact the actual best. But the second best looking charity (top middle) isn't particularly cost effective, and the 4th through 6th best looking are actively super terrible. In general, seeing that an x-risk point has a high Y-axis value (observed goodness) doesn't tell you much about its X-axis value (actual goodness). (This toy model is simple enough that we can compute the posterior distribution of actual goodness given observed goodness in closed form, but I don't think it really helps with the very high level intuition.)
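For readers who do want the closed form, here is a minimal sketch of the standard normal-normal update, assuming a normal prior on actual goodness within a class and independent, zero-mean normal measurement error. The function name `posterior` and the example numbers are hypothetical.

```python
def posterior(prior_mean, prior_sd, error_sd, observed):
    """Normal-normal update for actual goodness given one noisy observation.

    Assumes actual ~ Normal(prior_mean, prior_sd^2) and
    observed = actual + error, with error ~ Normal(0, error_sd^2).
    Returns (posterior mean, posterior sd).
    """
    prior_var = prior_sd ** 2
    error_var = error_sd ** 2
    # Share of the observation we believe: signal variance / total variance.
    weight = prior_var / (prior_var + error_var)
    mean = prior_mean + weight * (observed - prior_mean)
    var = prior_var * error_var / (prior_var + error_var)
    return mean, var ** 0.5


# HYPOTHETICAL classes: one very noisy, one measured fairly precisely.
noisy_mean, noisy_sd = posterior(prior_mean=0.0, prior_sd=10.0,
                                 error_sd=30.0, observed=60.0)
precise_mean, precise_sd = posterior(prior_mean=0.0, prior_sd=2.0,
                                     error_sd=1.0, observed=6.0)
print(f"noisy class:   mean={noisy_mean:.2f}, sd={noisy_sd:.2f}")
print(f"precise class: mean={precise_mean:.2f}, sd={precise_sd:.2f}")
```

With these made-up numbers, the noisy class keeps only a tenth of its observed value: an observation of 60 shrinks to a posterior mean of about 6. The precisely measured class keeps most of what was observed.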

It's also interesting to compare the yellow global health cluster to the blue non-EA line. Even though the global health charities have much higher measurement error than the non-EA charities, they still have decent signal to noise ratio, and if you see e.g. a yellow charity with an observed goodness of 6, you can be pretty sure it's not actually less good than a typical standard charity. Of course this is by construction; the whole point of this experiment was to strengthen my own visual intuition :)

So...yeah. In real life, do some classes of charities have much worse signal to noise ratios than others? And if so, how should we form beliefs about effectiveness, especially knowing that we're biased evaluators who have selected for exactly the highest apparent effectiveness...which also means selecting for the things we've most overvalued?

PS. Other formulations of this question

- I'm pretty sure I've read on this forum an excellent post about a related topic using the toy (but much less toy than here) example of drug discovery and describing how to do the proper Bayesian updating for this case. With a draft amnesty week level of effort, I'm unable to find that post. One takeaway is that in a Bayesian world we should regularize our belief about an intervention's goodness towards our prior; the amount of regularization depends on the ratio of measurement uncertainty to underlying variance in intervention goodness.

- My question in this post isn't so much how the math works though; I'm more interested in what this means when trying to be an effective altruist (lowercase) out in the world. If something crazy seems really good, what should you do?

- There's a lurking Berkson's paradox framing out there. We filter charities by high observed goodness, but conditional on that filter, actual goodness and measurement error are negatively correlated. Yet we don't observe actual goodness or measurement error directly, so it's hard to know what to do about this.

- https://xkcd.com/882/
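The Berkson's paradox framing above can be checked numerically. This is a minimal sketch under assumed settings: actual goodness and measurement error are independent standard normals, and "filtering" means keeping the top 5% by their sum. The sample size, the cutoff and the `pearson` helper are all illustrative choices.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(0)  # fixed seed for reproducibility
N = 20000               # ASSUMED sample size, for illustration only

# Independent draws: (actual goodness, measurement error).
pairs = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(N)]

# Filter on what we can see: keep the top 5% by observed = actual + error.
ranked = sorted(pairs, key=lambda p: p[0] + p[1], reverse=True)
selected = ranked[: N // 20]

corr_all = pearson([a for a, _ in pairs], [e for _, e in pairs])
corr_selected = pearson([a for a, _ in selected], [e for _, e in selected])

print(f"correlation, all charities:      {corr_all:+.3f}")
print(f"correlation, top 5% by observed: {corr_selected:+.3f}")
```

Before filtering, the two quantities are uncorrelated by construction. Among the selected charities the correlation turns clearly negative, because a charity that clears the bar despite modest actual goodness must have had a large positive error.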

[1] "Be Bayesian" can push this problem a level down, but not eliminate it. Maybe (at least in mathematical asymptopia) I should be a good Bayesian with priors on both my own epistemic/moral uncertainty and the future states of the world, do perfect updating in the face of new information, and this probably makes me somewhat more robust to getting over-excited by charities which conform to specific biases. But a level down, a cause could still appeal to me because it aligns with my literal priors even if it's not particularly good.

[2] I suspect that a more realistic model would have multiplicative uncertainty as well as additive uncertainty, which probably makes the general problem of signal to noise ratio worse, especially when filtering for whichever interventions look best.
