This is an old article reposted from my blog that I think might be useful here. Some topics discussed are: methods for reflective equilibrium (which is the process of deciding which intuitions to keep and get rid of in ethics), problems with those methods, arguments against using normative principles in the first place, moral uncertainty, arguments for simple theories, and some counterarguments to those arguments. Let me know what you think (critiques, comments, and other insights are welcome)!

There are generally three levels at which one can discuss ethics: 1) applied ethics, which asks what we should do in a particular situation (for example, at what point a fetus acquires moral status); 2) normative ethics, which seeks the principled theories we should apply to individual cases (for example, deontology); and 3) metaethics, which asks what ethical statements actually represent (for example, emotions). This list often struck me as incomplete: where does one discuss the methodology of normative ethics?

This topic seems underrated, so this article focuses on issues related to the methodology of normative ethics (which, oddly, is still usually classified as normative ethics even though there is a separate subfield called metaethics).

Reflective Equilibrium:

The process by which one determines whether a normative ethical theory is correct is typically reflective equilibrium. The approach has a few components, including removing inconsistencies, developing units of value for making trade-offs between potentially conflicting values, and usually finding a rule that fits a fairly simple curve (e.g., a rule like utilitarianism) to the moral data, so that it agrees with our intuitions across many cases.

What Kinds of Intuitions?

As any behavioral scientist will tell you, intuitions are prone to major cognitive biases. Given this, what should we do about ethics, which must rely on intuitions? There are a few approaches:

Scale-Up Theories of Well-being:

Some take the view that we learn what is good for a society (and thereby learn the correct normative ethical theory) by scaling up what is good for an individual. This requires a theory of well-being; here are the most popular:

Hedonism: Positive hedonic states (feeling good) and negative hedonic states (feeling bad) are the only things that matter for an individual's well-being.

Objective List: There is a list of values that are objectively good for every individual's well-being (common examples are virtue, family, friendship, and rights).

Preference/Desire Satisfaction: Each person has their own set of desires (their favorite ice cream flavor, certain hedonic states like euphoria, etc.), and the way to maximize their well-being is to satisfy as many of these desires as possible.

While there are various problems with aggregating well-being across people, the general idea of scaling up seems plausible. For example, combining hedonism with certain forms of aggregation (linearity, equal consideration of everyone's well-being, and aggregationism) yields utilitarianism.
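Here is a minimal sketch of that scaling-up step, assuming hedonism (each person's well-being is a single net hedonic score) and linear, equally weighted aggregation. The outcomes, names, and numbers are hypothetical. Under these assumptions, ranking outcomes by the sum of individual well-being is the utilitarian ranking:

```python
from typing import Dict

# Hypothetical outcome: maps each person to their net hedonic score
# (positive = feeling good, negative = feeling bad), assuming hedonism.
Outcome = Dict[str, float]

def individual_wellbeing(hedonic_score: float) -> float:
    """Under hedonism, an individual's well-being just is their net hedonic state."""
    return hedonic_score

def societal_value(outcome: Outcome) -> float:
    """Scale up by linear, equally weighted summation (the utilitarian total)."""
    return sum(individual_wellbeing(score) for score in outcome.values())

def best_outcome(outcomes: Dict[str, Outcome]) -> str:
    """Pick the outcome with the highest aggregate well-being."""
    return max(outcomes, key=lambda name: societal_value(outcomes[name]))

outcomes = {
    "policy_a": {"alice": 5.0, "bob": -1.0, "carol": 2.0},
    "policy_b": {"alice": 1.0, "bob": 3.0, "carol": 1.0},
}
print(best_outcome(outcomes))
```

Swapping a different theory of well-being into `individual_wellbeing`, or a different aggregation rule into `societal_value`, would generally produce a different societal theory.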

Evolutionary Debunking:

Given evolutionary debunking arguments, one may be inclined to use only intuitions from cases that are less subject to evolutionary bias. This might exclude intuitions such as that we have greater moral obligations to those who have helped us (reciprocity), that non-reproductive sexual relationships are morally bad, or that we should care more about our own kin.

To see where we can still trust our intuitions, we can look for cases where we trust some faculty despite the potential for evolutionary debunking. One example is our grasp of abstract and generalizable mathematical facts, which we take seriously despite debunking arguments. By analogy, abstract moral principles (such as "we shouldn't unnecessarily harm people") seem less subject to bias, whether evolutionary or otherwise, so one might reason that we should take those intuitions more seriously.

Embracing “Biases”:

If one believes that values cannot be critiqued (as Hume put it, reason is the slave of the passions), one may think that values cannot be subject to biases, so we must embrace even things that look like mere biases. On this view, even intuitions that seem to be products of evolution (and therefore, on the debunking view, morally irrelevant) should be taken seriously.

One may also argue that bias assumes knowledge of some ground truth, which we don’t have in the case of normative ethics. Stated differently, Reflective Equilibrium is a process in which one builds a map without a territory, and biases assume the existence of some territory.

Alternatively, one can adopt a "more sophisticated" version that discards intuitions that very obviously developed for non-moral reasons (say, about incest) while keeping those whose non-moral origins are less obvious (say, about lying). This version can also revise trade-offs and resolve logical inconsistencies.

Whichever of these views one picks, both imply that we should assign different moral weights to intuitions based on their relative importance under reflection. Building the best normative ethical theory, then, would be a matter of curve fitting.
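As a rough sketch of that weighted fitting, assume each intuition is a verdict about a case with a weight reflecting how well it survives reflection, and each candidate theory issues its own verdicts. One simple (hypothetical) scoring rule is the weighted share of intuitions a theory agrees with. The cases, weights, and toy "theories" below are made up purely for illustration:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Intuition:
    case: str       # short description of the case
    verdict: bool   # True = "permissible", False = "impermissible"
    weight: float   # how strongly the intuition survives reflection

Theory = Callable[[str], bool]  # maps a case to the theory's verdict

def fit_score(theory: Theory, intuitions: List[Intuition]) -> float:
    """Weighted fraction (0 to 1) of intuitions the theory agrees with."""
    total = sum(i.weight for i in intuitions)
    agreed = sum(i.weight for i in intuitions if theory(i.case) == i.verdict)
    return agreed / total

# Hypothetical intuitions; a debunked intuition would simply get a low weight.
intuitions = [
    Intuition("lie to save a life", True, 0.9),
    Intuition("push one to save five", False, 0.6),
    Intuition("divert trolley to save five", True, 0.7),
]

# Toy verdict functions standing in for full theories (purely illustrative).
theories: Dict[str, Theory] = {
    "toy_consequentialism": lambda case: True,  # permits every listed act
    "toy_rule_theory": lambda case: case == "divert trolley to save five",
}

for name, theory in theories.items():
    print(name, round(fit_score(theory, intuitions), 3))
```

On this sketch, debunking an intuition is modeled as lowering its weight rather than deleting it outright.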

Problems with Methods:

General Problem:

Some make the following argument:

When we try to achieve some end, we often use heuristics that serve as proxies for that end. However, after chasing a proxy for long enough, we tend to treat it as an end in itself. For example, to have a happy life one often needs money. After enough time chasing money, some people come to see it as an end in itself and try to maximize it even when it is no longer a good proxy for happiness (either because of diminishing returns or because they sacrifice too many other valuable things for it).

Ethics faces the same problem. Many ethical intuitions (about democracy, truth-telling, rights, etc.) might have begun as useful heuristics that societies developed to achieve better consequences, and later came to be valued as ends in themselves. If so, it's not obvious what to do: which ends were the original final ends, does that matter, and should we care about the proxies now that they are part of our stock of intuitions?

Scaling-Up Method:

As we scale up to the societal level, various intuitions emerge that don't appear in theories of individual well-being. Justice is one example: while there seems to be nothing important about justice at the individual level, it becomes important at the societal level. If one takes these emergent intuitions seriously (which it seems we should), we cannot rely solely on the scale-up approach, since it would miss them.

Evolutionary Debunking Method:

Two critiques can be made of the evolutionary debunking approach:

- One is that all values are just "biases" in that they are based on emotion. This doesn't mean we should get rid of them, but rather that we should take them all seriously (with different weights depending on their strength).

- Another is that the term "bias" assumes some ground truth (for example, confirmation bias is called a bias because we assume one should not give evidence greater weight merely because it confirms what one already believes). There is no such ground truth to work from in ethics, and this applies equally to more abstract moral intuitions.

Embrace Them:

In certain scenarios, embracing every intuition would lead to absurd conclusions. You could have logical contradictions that leave you unable to act. You could have intransitive moral intuitions that are Dutch-bookable, which could lead you to lose all of your "ethical utility." You will likely have clear scope neglect with no method of resolving it (for example, preferring to give $10 to a charity that helps 5 people ten times in a row rather than spending $90 to help 50 people, even though the ten donations cost $100 in total to help the same 50 people). It can also leave you with cases of clear regret under more idealized conditions (for example, you will likely display short-term bias in ethics).
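To see why intransitive intuitions are exploitable, here is a minimal money-pump sketch. It assumes an agent whose preferences cycle (A over B, B over C, C over A) and who will pay a small, hypothetical fee to trade up to whatever it prefers. After each full cycle it holds the same option it started with, but with less "ethical utility" left:

```python
from typing import Tuple

# Cyclic (intransitive) preferences: (x, y) means the agent prefers x over y.
PREFERS_OVER = {("A", "B"), ("B", "C"), ("C", "A")}

def prefers(x: str, y: str) -> bool:
    return (x, y) in PREFERS_OVER

def money_pump(start: str, budget: float, fee: float, rounds: int) -> Tuple[str, float]:
    """Repeatedly offer the option the agent prefers to its current holding, for a fee."""
    holding = start
    # For each holding, the option the agent prefers to it.
    upgrade = {"B": "A", "C": "B", "A": "C"}
    for _ in range(rounds):
        offer = upgrade[holding]
        if prefers(offer, holding) and budget >= fee:
            holding = offer   # the agent accepts every "improvement"...
            budget -= fee     # ...and pays for it each time
    return holding, budget

# Three trades bring the agent back to A, three fees poorer.
print(money_pump("A", 10.0, 1.0, 3))
```

With enough rounds, the agent keeps trading until the whole budget is gone.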


Rejecting Moral Principles:

There seems to be a hidden proposition/method in normative ethics broadly: namely, it is often taken for granted that we should aim for a simple moral theory. But why can’t we reject the concept of moral principles? Why do we need a simple theory? Here, I will discuss some reasons why I think simple theories are overrated, and why we should use a form of intuitionistic moral particularism (in which we use our intuitions to judge individual cases).

Simplicity Isn’t a Moral Virtue:

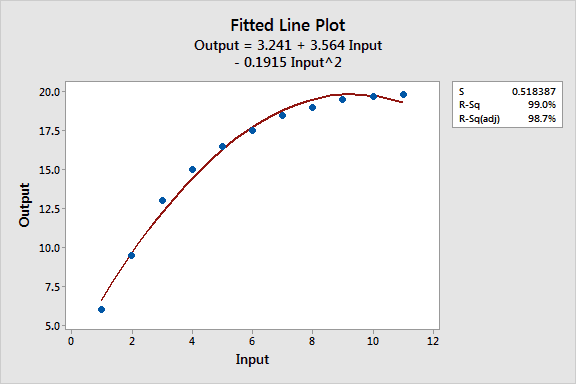

When one fits a curve to some data points, one usually tries to avoid overfitting, because an overfit curve will not generalize well to new cases.

However, in the moral case, we are not trying to make predictions about the world; we're merely trying to explain the moral data (our verdicts in particular cases). Because of this, I don't see a problem with overfitting the data, which here means using our intuitions on a case-by-case basis instead of making a rule.
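A toy illustration of the contrast, assuming moral "data" is just a set of case verdicts. The "particularist" model memorizes every judged case (maximal overfitting, zero error on the data), while a one-line rule is simpler but misfits some cases. The cases, the net-lives numbers, and the rule are all hypothetical:

```python
from typing import Dict, Optional, Tuple

# Hypothetical case data: case -> (net lives saved, intuitive verdict)
cases: Dict[str, Tuple[int, bool]] = {
    "divert trolley": (4, True),
    "push man off bridge": (4, False),
    "rescue drowning child": (1, True),
    "harvest organs": (4, False),
}

def simple_rule(net_lives: int) -> bool:
    """A one-line 'theory': act whenever it saves more lives than it costs."""
    return net_lives > 0

class CaseMemorizer:
    """An 'overfit' model: stores each judged case verbatim."""
    def __init__(self, data: Dict[str, Tuple[int, bool]]):
        self.verdicts = {case: verdict for case, (_, verdict) in data.items()}

    def judge(self, case: str) -> Optional[bool]:
        return self.verdicts.get(case)  # None for cases it has never seen

def rule_errors(data: Dict[str, Tuple[int, bool]]) -> int:
    """Count cases where the simple rule disagrees with the intuitive verdict."""
    return sum(simple_rule(net) != verdict for net, verdict in data.values())

memorizer = CaseMemorizer(cases)
memorizer_errors = sum(memorizer.judge(c) != v for c, (_, v) in cases.items())
print("rule errors:", rule_errors(cases))
print("memorizer errors:", memorizer_errors)
print("memorizer on new case:", memorizer.judge("lie to a murderer"))
```

The memorizer fits all existing data but is silent on unseen cases; on the argument above, that cost matters less if the aim is only to explain the existing moral data.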

We Shouldn’t Expect Simplicity:

Simple moral theories are not what we should expect, considering that most philosophers are objective list theorists about well-being, a view which holds that there are multiple valuable things. It would be odd to suggest that some of these values disappear as we move from the individual to the societal level. Under an objective list view, why would we expect a simple theory with one value? Morality seems too complex to be reduced to one or a few principles.

Countercases To Every Theory:

Every moral theory developed so far has many counterintuitive cases, in which our intuitions diverge from the theory's verdicts. This seems unlikely to change anytime soon. More complex theories often fit more cases (threshold deontology as opposed to absolutist deontology, for instance).

Vastly Different Theories:

If there were a correct normative theory, we would expect moral philosophers to largely converge on certain moral rules and guidelines, perhaps just tweaking specific details.

However, this does not seem to be the case at all. Deontology and Contractualism, for example, think about where morality comes from in entirely different ways and have very different normative guidelines. This should give one reason to think that the project of finding some simple moral rules is simply (hehe) misguided.

No Moral Actors:

There is some empirical evidence suggesting that people who consider themselves consequentialists versus those who consider themselves deontologists don’t act so differently in real moral decision making scenarios. If normative ethical principles are making no difference with respect to real moral actors, it seems like they are less useful than we might have thought.

Genealogy of Ethical Principles:

Many of our intuitions around wanting simple moral theories seem largely influenced by religion and a need for rigorously defined governmental laws. From a more theoretical perspective, however, these seem morally irrelevant (at least for non-religious people) and should therefore be taken less seriously.

Furthermore, one can claim that moral principles have been created as a proxy for achieving some end (not having to reason through individual moral cases). If this is true and those proxies don’t actually reflect the end itself, this is a reason not to care so much about the principles themselves.

Lastly, humans generally have a bad tendency to turn complex things into simple things. This may be how rules (the simple theories) were developed from individual cases (which are complex). If this is the case for morals, under some theories, we should attempt to rid ourselves of this bias and just use our intuitions (or at least try to) in every case.

The Solution: Moral Uncertainty and Particularism:

In epistemology, assigning credences to theories based on the evidence, and betting on them accordingly, maximizes your expected epistemic utility. Because knowing which moral theory is correct is an epistemological problem, some suggest that we should apply the same approach to normative ethical theories. This is usually called moral uncertainty.

There are a few different ways to deal with moral uncertainty. (If you know of reasonable ones that don't collapse into complexity or, in practice, into a form of particularism, let me know!) Here are two:

- We can assign credences to moral theories, have each theory assign values to our options, and maximize expected moral utility.

  - However, it seems like deontological rules should carry infinite value, and then any nonzero credence in deontology would swamp every other theory, making us plain deontologists. There are more complex ways to do the math under moral uncertainty that avoid this, but they are complicated, and if we're allowed to build a complicated rule to get the intuitive results we want, we might as well just overfit the moral data.

- We can weight moral theories case by case, depending on which theory best fits our intuitions in that case: in each case, we give the greatest weight to whichever rule yields the most intuitively plausible answer, and act accordingly. For example, when lying would produce only a modest net benefit, perhaps we act as if deontology were correct, because this aligns with more of our intuitions; but when following the rule would do far more harm than good, we move to a more utilitarian framework.

In practice, the second option (which I find much more plausible) seems to do the same thing as overfitting the moral data (finally, we have some theoretical normative ethical convergence). While forms of moral particularism may struggle in morally ambiguous cases, we can and should weigh the different considerations that seem to carry moral weight, as we would under moral uncertainty, instead of appealing to a particular, more abstract normative principle.
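A minimal sketch of the first option (maximizing expected moral value across theories), with hypothetical credences and scores. It also illustrates the objection to that option: if deontology assigns infinite disvalue to breaking a rule, even a tiny credence in it dominates the expected value. Representing that with floating-point infinity is a modeling assumption, as is treating the scores as comparable across theories (itself a contested assumption):

```python
import math
from typing import Dict

# Hypothetical credences in each theory (they sum to 1).
CREDENCES = {"utilitarianism": 0.8, "deontology": 0.2}

def expected_moral_value(scores: Dict[str, float], credences: Dict[str, float]) -> float:
    """Credence-weighted sum of each theory's score for a single action."""
    return sum(credences[theory] * scores[theory] for theory in credences)

def best_action(actions: Dict[str, Dict[str, float]], credences: Dict[str, float]) -> str:
    """Choose the action with the highest expected moral value."""
    return max(actions, key=lambda a: expected_moral_value(actions[a], credences))

# Finite scores: a lie that produces a large benefit.
finite = {
    "lie": {"utilitarianism": 10.0, "deontology": -5.0},
    "truth": {"utilitarianism": 0.0, "deontology": 5.0},
}
# Same case, but deontology treats lying as infinitely bad.
infinite = {
    "lie": {"utilitarianism": 10.0, "deontology": -math.inf},
    "truth": {"utilitarianism": 0.0, "deontology": 5.0},
}

print(best_action(finite, CREDENCES))    # finite stakes: the benefit of lying wins
print(best_action(infinite, CREDENCES))  # infinite stakes: deontology always wins
```

Lowering the credence in deontology to 0.001 does not change the second result, which is the "plain deontologist" worry.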

The Case For Simple Theories:

Numbers are the names of the cases, letters are the explanations, and roman numerals (i, ii, iii, ...) are potential refutations.

1. The Epistemological Case:

   a. If we think moral realism is true, we'd expect the best theories of morality to be simple, since simplicity is an epistemic virtue: simpler explanations usually involve fewer assumptions.

      i. I don't see why moral theories need to be simple. We haven't established simplicity as an epistemic virtue for moral theories (especially considering that moral facts, if they exist, are quite unlike any other ontological entity). Simplicity is an epistemic virtue because positing the existence of more facts requires more assumptions, but it's not obvious that this also applies to moral ontology (especially under naturalistic forms of moral realism).

      ii. If one sees each moral case as a phenomenon to be explained, then, as with scientific theories, a theory would be too simple if it were unable to explain all the phenomena (that is, if it failed to be intuitive in every case, as moral rules do). A theory would be too complex if it explained all the cases and then added unnecessary premises. Instead of viewing moral data as data points to be fit to some curve, we should view them as phenomena that our theory must explain.

      iii. I have no idea how to approach the trade-off between the epistemic virtue of ethical simplicity and being correct about cases. Even if one does take simplicity seriously, how should it weigh against fitting our moral intuitions in particular cases?

      iv. From an antirealist perspective, it seems like one shouldn't care about simplicity: there are no moral facts, so we aren't positing the extra existence of anything.

          Joe Carlsmith has a great article (here's the audio version) against this point that I find somewhat compelling and that personally motivates me.

2. The Practical Case:

   a. Moral principles are merely practical guides to behavior: if utilitarianism is right, for example, it would be really hard to know what is moral in each case, so we need good heuristics. One can also claim that principles are useful for politics, since governments need to create rules rather than relying on case-by-case intuitions; this might be especially compelling for those who think normative ethics is largely for law, economics, and policy.

      i. This is, however, not what moral philosophers seem to be doing when they talk about normative ethics. Deontologists, for example, usually think that there really are rules that all rational agents should follow, not just that rules are good heuristics for some other end.

      ii. Practical for what? What's the ground truth we are trying to achieve here? Just intuitions? Whose intuitions do we trust?

      iii. We reach very different conclusions when we use moral principles than when we use intuition in every case. On this basis, using moral principles doesn't seem to be a good practical proxy for becoming more ethical; it seems like we're coming up with entirely new conclusions.

      iv. As stated before, there is some empirical research showing that those who accept some moral theories over others don't actually act any differently. If this is true, we should be skeptical that moral principles help us be more ethical in practice.

   b. There are diminishing returns, in terms of additional cases gotten intuitively right, to adding complexity to your moral theory. For example, the first bit of complexity (the initial rule) may capture 70% of cases, the second bit (perhaps a qualifier like multi-level) may add 10%, another principle might add another 1%, and so on.

      i. If simplicity is not a virtue at all, then the diminishing returns don't matter.

      ii. At what point do we say that some theory is too complex?

3. Higher-Order Preferences:

   a. We should use moral principles to satisfy the meta-preference for having higher-order principles (I personally have the meta-intuition that morality should follow a set of rules). Similarly, aside from the meta-preference, we should give weight to the abstract moral principles themselves.

      i. Practical constraint: how seriously do we take the moral principles relative to the individual moral cases? Maybe they should receive more weight (just as some intuitions seem stronger on reflection), but it seems like they shouldn't account for the vast majority of moral weight.

      ii. Giving more weight to abstract moral principles may be double counting. As stated, abstract moral principles may have come (and probably did, at least some of the time) from individual cases, and, to use the earlier terminology, we would then be optimizing for the proxy rather than the end. If one optimizes both for the proxy associated with some end and for the end itself, one is double counting.

4. Moral Ambiguity:

   a. There are cases where our intuitions don't tell us exactly what the moral thing to do is; in other words, there is moral ambiguity. If some rule (or set of rules) works in a lot of intuitive cases, that may be reason to think we're on the track of the actual mechanism behind the moral good. This can help us in cases that are beyond our intuitive reach.

      i. We have similar ambiguity problems about which moral principle to follow in less intuitive cases. These ambiguous cases are also usually the ones where moral theories diverge (the trolley problem is a classic example), so following a particular moral principle seems not much better than object-level moral reasoning and intuition.
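Returning to the diminishing-returns argument under the Practical Case: a toy calculation using the illustrative coverage figures given there (70%, then +10%, then +1%) shows that which theory "wins" depends entirely on how much fit one is willing to trade for each added principle. The per-principle penalty `lam` is a hypothetical parameter; nothing in the argument fixes its value, which is exactly the question "At what point do we say that some theory is too complex?":

```python
from typing import List

# Cumulative share of cases a theory gets intuitively right as principles are added,
# using the illustrative figures from the text: 70%, +10%, +1%.
COVERAGE = [0.70, 0.80, 0.81]

def marginal_gains(coverage: List[float]) -> List[float]:
    """Extra coverage contributed by each added principle."""
    previous = [0.0] + coverage[:-1]
    return [round(c - p, 2) for c, p in zip(coverage, previous)]

def best_complexity(coverage: List[float], lam: float) -> int:
    """Number of principles maximizing coverage minus lam * (number of principles)."""
    scores = [c - lam * (k + 1) for k, c in enumerate(coverage)]
    return scores.index(max(scores)) + 1

print(marginal_gains(COVERAGE))
for lam in (0.0, 0.005, 0.05, 0.2):
    print(lam, best_complexity(COVERAGE, lam))
```

With `lam = 0` (simplicity is no virtue at all) the most complex theory always wins, matching the first refutation; any positive penalty simply relocates the disagreement into the choice of `lam`.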

As always, tell me why I’m wrong!

Cool post!

From the structure of your writing (mostly the high number of subtitles), I often wasn't sure where you're endorsing a specific approach versus just laying out what the options are and what people could do. (That's probably fine because I anyway see the point of good philosophy as "clearly laying out the option space.")

In any case, I think you hit on the things I also find relevant. E.g., even as a self-identifying moral anti-realist, I place a great deal of importance on "aim for simplicity (if possible/sensible)" in practice.

Some thoughts where I either disagree or have something important to add:

(1) It’s under-defined how many new people with interests/goals there will be.

(2) It’s under-defined which interests/goals a new person will have.

(See here for an exploration of what this could imply.)

Morality is inherently underdefined (see, e.g., my previous bullet point), so we are faced with the option to either embrace that underdefinedness or go with a particular axiology not because it's objectively justified, but because we happen to care deeply about it. Instead of "embracing 'biases,'" I'd call the latter "filling out moral underdefinedness by embracing strongly held intuitions." (See also the subsection Anticipating objections (dialogue) in my post on moral uncertainty from an anti-realist perspective.)

Imagine you have a "moral parliament" in your head filled with advocates for moral views and intuitions that you find overall very appealing and didn't want to distill down any further. (Those advocates might be represented at different weights.) Whenever a tough decision comes up, you mentally simulate bargaining among those advocates, where the ones who have a strong opinion on the matter in question will speak up the loudest and throw in a higher portion of their total bargaining allowance.

This approach will tend to give you the same answer as the particularist one in practice(?), but it seems maybe a bit more principled in the way it's described?

Also, I want to flag that this isn't just an approach to moral uncertainty -- you can also view it as a full-blown normative theory in the light of undecidedness between theories.
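The parliament procedure in this comment can be made concrete with a toy model. This is a minimal sketch under simplifying assumptions that are not part of the comment and not a standard formalization: each advocate starts with a bargaining budget proportional to its seats, spends a larger share of its remaining budget on issues it cares strongly about, and the option with the most total spending wins. The advocates, budgets, and stakes are hypothetical:

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Advocate:
    name: str
    budget: float             # remaining bargaining allowance (starts proportional to seats)
    stakes: Dict[str, float]  # issue -> fraction of remaining budget spent on it (0 to 1)
    favorite: Dict[str, str]  # issue -> option this advocate argues for

def decide(issue: str, options: List[str], parliament: List[Advocate]) -> str:
    """Each advocate spends part of its remaining budget on its favored option; most support wins."""
    support = {option: 0.0 for option in options}
    for advocate in parliament:
        choice = advocate.favorite.get(issue)
        if choice not in support:
            continue  # this advocate has no view on the issue
        spend = advocate.budget * advocate.stakes.get(issue, 0.0)
        advocate.budget -= spend  # allowance spent here is unavailable for later decisions
        support[choice] += spend
    return max(options, key=lambda option: support[option])

# Hypothetical parliament: the utilitarian holds more seats (a bigger starting budget).
parliament = [
    Advocate("utilitarian", 60.0, {"white lie": 0.1, "charity": 0.9},
             {"white lie": "lie", "charity": "most effective"}),
    Advocate("deontologist", 40.0, {"white lie": 0.9, "charity": 0.5},
             {"white lie": "tell the truth", "charity": "local"}),
]

first = decide("white lie", ["lie", "tell the truth"], parliament)
second = decide("charity", ["most effective", "local"], parliament)
print(first, "|", second)
```

Even though the utilitarian advocate holds more seats, the deontologist wins the white-lie vote because it cares much more about it, and then has little allowance left for the charity decision.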

Thanks for the nice comment. Yea, I think this was more of "laying out the option space."

All very interesting points!

The Carlsmith article you linked -- post 1 of his two-post series -- seems to mostly argue against the standard arguments people might have for ethical anti-realists reasoning about ethics (i.e., he argues that neither a brute preference for consistency nor money-pumping arguments seem like the whole picture). You might be talking about the second piece in the two-post series instead?

Good point. Will change this when it’s not midnight. Thanks!

Executive summary: The post critically examines the methodology of normative ethics, highlighting the challenges of reflective equilibrium, the limitations of simple moral theories, and advocating for moral uncertainty and particularism as more effective approaches.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.