I recently spent some time trying to work out some kind of personal view on AI timelines. I definitely don’t have any particular insight here; I just thought it was a useful exercise for me to go through for various reasons. I’m sharing this in case other non-experts like me find it useful to see how I went about this, and also for its exploration value. (Note that this post is almost identical to the Google doc I shared in a shortform post last week.)

Key points

- I spent ~40 hours thinking about my AI timelines.

- This post includes the timelines I came up with, an explanation of how I generated them, and my reflections on the process and its results.

- To be clear, I am not claiming to have any special expertise or insight; the timelines illustrated here are very much not robust, etc.

- I “estimate” a ~5% chance of TAI by 2030 and a ~20% chance of TAI by 2050 (the probabilities for AGI are slightly higher).

- I generated these numbers by forming an inside view and an outside view, and then making some heuristic adjustments.

- My timelines are especially sensitive to how I chose and weighted forecasts for my outside view.

- Interesting reflections include:

- it’s still not clear to me how much I “really believe” these forecasts;

- I was surprised by the relatively small amount of high quality public discussion on AI timelines;

- my P(TAI|AGI) seems to be a lot lower than other people’s (Edit: NB this is more of an inside view and definitely not an overall/"all-things-considered" view, unlike the timelines themselves which are supposed to represent my overall view).

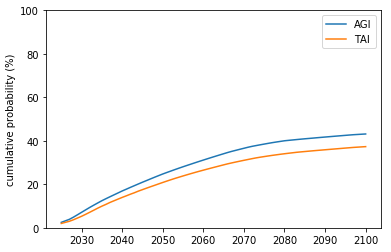

My timelines

Here is a plot showing my timelines for Artificial General Intelligence (AGI) and Transformative AI (TAI):

When I showed people plots like the one above, a couple of them commented that they were surprised that my AGI probabilities are higher than my TAI ones, and I now think I didn’t think enough about non-AGI routes to TAI when I did this. I’d now probably increase the TAI probabilities a bit and lower the AGI ones a bit compared to what I’m showing here (by “a bit” I mean maybe a few percentage points).

How to interpret these numbers

The rough definitions I’m using here are:

- TAI: AI that has at least as large an effect on the world’s trajectory as the Industrial Revolution.

- AGI: AI that can perform a significant fraction of cognitive tasks as well as any human and for no more money than it would cost for a human to do it.

The timelines are also conditioned on “relative normalness” by which I mean no global catastrophe, we’re not in a simulation, etc. The only “weird” stuff that’s allowed to happen is stuff to do with AI.

Alternative presentations

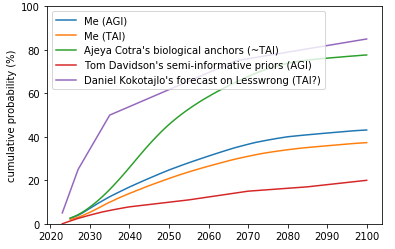

Here are my timelines alongside timelines from other notable sources:

Here are my timelines in number form:

| Year | % chance of AGI by year | % chance of TAI by year |
|------|-------------------------|-------------------------|
| 2025 | 3 | 2 |
| 2030 | 7 | 5 |
| 2040 | 17 | 14 |
| 2050 | 25 | 21 |
| 2060 | 31 | 27 |
| 2080 | 40 | 34 |
| 2100 | 43 | 37 |

How I generated these numbers

At a high level, the process I used was:

- Create an inside view forecast

- Create an outside view forecast

- Apply adjustments according to certain heuristics with the aim of correcting for bias

- Combine it all together as a weighted average

For TAI

For the inside view forecast I did the following:

- Gave a 57% probability that AGI (or similar) would not imply TAI, i.e. would not imply an effect on the world’s trajectory at least as large as the Industrial Revolution.

- This was based on: a 90% chance of no global catastrophe, a 70% chance of no extraordinary increase in growth rates (conditional on no global catastrophe), and a 90% chance of not causing some other unforeseen trajectory change (conditional on no global catastrophe and no extraordinary growth), giving 0.9 × 0.7 × 0.9 ≈ 0.57.

- (note that, effectively, I equate “AGI (or similar) would not imply TAI” with “TAI impossible”).

- The other 43% probability mass went to the world where AGI (or similar) would imply TAI. Within this world, I gave:

- 30% probability to “neural networks will basically work”, and represented this scenario with my version of Ajeya Cotra’s framework (which is less aggressive than e.g. Ajeya’s numbers).

- 70% probability to “it’s not the case that neural networks will basically work”, and gave the following (gut feeling) probabilities for this scenario: 0.3%/1.3%/15%/30%/40%/50%/80% for years 2025/2030/2050/2080/2125/2200/2300 respectively.

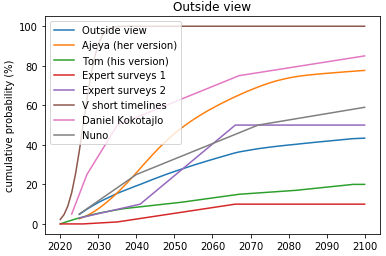
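The inside-view steps above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure I actually ran: it assumes linear interpolation between the gut-feeling years (the post doesn't say how intermediate years were filled in), and it takes the curve for the "neural networks will basically work" branch as an input, because the numbers from my version of Ajeya Cotra's framework aren't listed here. Names such as `inside_view_tai` and `GUT_CDF` are hypothetical.

```python
# Minimal sketch of the TAI inside-view mixture described above.
# Assumptions (not from the post): linear interpolation between the stated
# gut-feeling years, and a caller-supplied CDF for the
# "neural networks will basically work" branch.

P_NO_CATASTROPHE = 0.9
P_NO_EXTREME_GROWTH = 0.7  # conditional on no global catastrophe
P_NO_OTHER_CHANGE = 0.9    # conditional on both of the above

# P(AGI does not imply TAI) = 0.9 * 0.7 * 0.9 = 0.567, i.e. ~57%
P_NO_TAI_GIVEN_AGI = P_NO_CATASTROPHE * P_NO_EXTREME_GROWTH * P_NO_OTHER_CHANGE
P_TAI_POSSIBLE = 1 - P_NO_TAI_GIVEN_AGI  # the remaining ~43%

W_NN_WORKS = 0.3  # "neural networks will basically work"
W_NN_FAILS = 0.7  # "it's not the case that neural networks will basically work"

# Gut-feeling CDF for the second branch, as {year: P(TAI by year)}.
GUT_CDF = {2025: 0.003, 2030: 0.013, 2050: 0.15, 2080: 0.30,
           2125: 0.40, 2200: 0.50, 2300: 0.80}


def interpolate(cdf_points, year):
    """Linearly interpolate a CDF given as {year: prob}; clamp outside the given years."""
    years = sorted(cdf_points)
    if year <= years[0]:
        return cdf_points[years[0]]
    if year >= years[-1]:
        return cdf_points[years[-1]]
    for lo, hi in zip(years, years[1:]):
        if lo <= year <= hi:
            frac = (year - lo) / (hi - lo)
            return cdf_points[lo] + frac * (cdf_points[hi] - cdf_points[lo])


def inside_view_tai(year, nn_works_cdf):
    """P(TAI by year) = P(TAI possible) * weighted mix of the two branch CDFs."""
    mix = (W_NN_WORKS * nn_works_cdf(year)
           + W_NN_FAILS * interpolate(GUT_CDF, year))
    return P_TAI_POSSIBLE * mix
```

For example, `inside_view_tai(2050, lambda year: 0.0)` isolates the second branch's contribution at 2050: 0.433 × 0.7 × 0.15 ≈ 0.045.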

For the outside view forecast I used the following weighted average:

- 20% on Ajeya Cotra’s framework with her own numbers.

- 20% on Tom Davidson’s semi-informative priors framework with his numbers.

- 30% on my TAI-only version of Tom Davidson’s semi-informative priors framework (I used the most TAI-like reference class of the 4 reference classes he described).

- 10% on the 2016 AI Impacts expert surveys (there are many forecasts you could take away from these; I used the AI researcher curve and equally weighted the two framings (“by what year probability X” and “probability at year Y”)).

- 10% on short timelines people: 3% on (my impression of) the forecast of <someone with very short timelines>, and 7% on Daniel Kokotajlo’s forecast from this Lesswrong thread.

- 10% on long timelines people: I just used Nuño Sempere’s forecast from the same Lesswrong thread, with the probabilities reduced by 50% which corresponds to my guess for what Nuño thinks P(TAI|AGI) is (I’m guessing this represents a relatively long timeline within the community of people thinking about this, although I didn’t really check; and obviously using my guess about Nuño’s P(TAI|AGI) is not ideal).

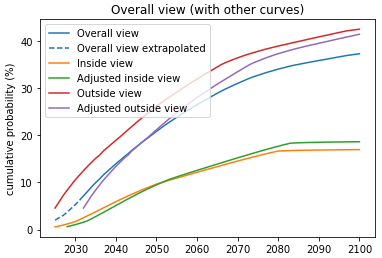
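The outside-view weighted average can be sketched the same way. This is a minimal illustration, assuming each source is available as a function from year to cumulative probability; the source keys are hypothetical labels and the curves themselves aren't reproduced here. The Sempere entry is meant to be the original curve scaled by 0.5, matching my guess at Nuño's P(TAI|AGI).

```python
from typing import Callable, Dict

CDF = Callable[[float], float]  # year -> P(TAI by year)

# Weights from the list above (the keys are hypothetical labels).
OUTSIDE_VIEW_WEIGHTS = {
    "cotra_own_numbers": 0.20,
    "davidson_sip_own_numbers": 0.20,
    "davidson_sip_tai_only": 0.30,
    "ai_impacts_2016_survey": 0.10,
    "very_short_timelines_person": 0.03,
    "kokotajlo": 0.07,
    "sempere_scaled": 0.10,
}


def scale(cdf: CDF, factor: float) -> CDF:
    """Multiply every probability of a CDF by a constant factor."""
    return lambda year: factor * cdf(year)


def outside_view(year: float, curves: Dict[str, CDF]) -> float:
    """Weighted average of the source CDFs at a given year."""
    assert abs(sum(OUTSIDE_VIEW_WEIGHTS.values()) - 1.0) < 1e-9
    return sum(w * curves[name](year) for name, w in OUTSIDE_VIEW_WEIGHTS.items())
```

In use, the Sempere entry would be `scale(sempere_cdf, 0.5)`, where `sempere_cdf` stands for whatever representation of that forecast you have.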

For the adjustments based on heuristics I did the following:

- I wrote down a load of biases I thought might be relevant, and categorised them by the adjustment they suggested: longer vs shorter timelines, and narrower vs broader timelines distributions. I also noted which ones especially resonated with me.

- I did a kind of informal netting of some biases against others.

- I came up with adjustments to make to the inside view and outside view forecasts based on the post-netting lists of biases, using my gut feeling. They were:

- Inside view: push out by 3 years and multiply probabilities by 110%

- Outside view: push out by 7 years
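One way to read these adjustments is as a transform on a cumulative curve: shift it later by some number of years and optionally scale it. A minimal sketch, assuming probabilities are capped at 1 (the post doesn't say how scaled values above 1 would be handled); `adjust` is a hypothetical helper. It also shows why shifting leaves a gap at the start of the curve: the adjusted value at year Y needs the original value at Y minus the shift.

```python
from typing import Callable

CDF = Callable[[float], float]  # year -> P(event by year)


def adjust(cdf: CDF, shift_years: float, multiplier: float = 1.0) -> CDF:
    """Push a CDF later by shift_years and scale it, capping probabilities at 1."""
    return lambda year: min(1.0, multiplier * cdf(year - shift_years))


# Hypothetical usage mirroring the two adjustments above:
# adjusted_inside = adjust(inside_cdf, shift_years=3, multiplier=1.10)
# adjusted_outside = adjust(outside_cdf, shift_years=7)
```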

Finally, to combine everything together for my overall (“all-things-considered”) view I did the following:

- I used the following weighted average:

- 20% adjusted inside view

- 40% adjusted outside view

- 40% outside view (unadjusted)

Where “adjusted” refers to the adjustments based on heuristics described above.

- Note that I decided to only have 50% of my outside view forecast be adjusted, because the above adjustments felt quite “inside view-y”, and I wanted to have at least some weight on a more “pure” outside view forecast.

- I also ended up extrapolating my final curve backwards to get probabilities for ~2025-2030, using my best judgement (this was necessary because of the heuristic adjustments; probably if I’d done the calculation more carefully I could have avoided needing to extrapolate / reduced the amount of extrapolation needed).
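The final combination is a fixed-weight average of three curves. A minimal sketch, assuming the three curves are already available as functions of the year (the function and argument names are hypothetical):

```python
from typing import Callable

CDF = Callable[[float], float]  # year -> P(TAI by year)

W_INSIDE_ADJUSTED = 0.2
W_OUTSIDE_ADJUSTED = 0.4
W_OUTSIDE_RAW = 0.4  # half of the outside-view weight stays unadjusted


def overall_view(year: float, inside_adj: CDF, outside_adj: CDF, outside_raw: CDF) -> float:
    """All-things-considered P(TAI by year) as a 20/40/40 weighted average."""
    return (W_INSIDE_ADJUSTED * inside_adj(year)
            + W_OUTSIDE_ADJUSTED * outside_adj(year)
            + W_OUTSIDE_RAW * outside_raw(year))
```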

For AGI

I did the process for TAI first and then decided I’d like an AGI curve too. The process for the AGI curve was more or less the same. The main differences were:

- My inside view probabilities for AGI are ~twice as large as those for TAI because my P(TAI|AGI) is ~50%.

- I didn’t include my TAI-only version of Tom Davidson’s semi-informative priors framework in the weighted average for my outside view; instead, I gave the corresponding probability mass to the semi-informative priors framework with Tom Davidson’s own numbers.

- I made some minor adjustments for my guess about the time it would take to go from AGI to TAI.
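The first two differences can be sketched as small transformations of the TAI inputs. This is a minimal sketch: the inside-view scaling simply follows the rough "~twice as large" description above (dividing by a P(TAI|AGI) of ~0.5, capped at 1), the weight keys are hypothetical labels, and the AGI-to-TAI lag adjustment isn't modelled because no numbers are given for it.

```python
from typing import Callable, Dict

CDF = Callable[[float], float]  # year -> cumulative probability

P_TAI_GIVEN_AGI = 0.5  # the rough figure used above


def agi_inside_view(tai_inside: CDF) -> CDF:
    """AGI inside view as roughly the TAI inside view divided by P(TAI|AGI), capped at 1."""
    return lambda year: min(1.0, tai_inside(year) / P_TAI_GIVEN_AGI)


def agi_outside_weights(tai_weights: Dict[str, float]) -> Dict[str, float]:
    """Move the TAI-only semi-informative-priors weight onto Davidson's own numbers."""
    weights = dict(tai_weights)
    weights["davidson_sip_own_numbers"] += weights.pop("davidson_sip_tai_only")
    return weights


TAI_WEIGHTS = {  # hypothetical labels for the TAI outside-view sources
    "cotra_own_numbers": 0.20, "davidson_sip_own_numbers": 0.20,
    "davidson_sip_tai_only": 0.30, "ai_impacts_2016_survey": 0.10,
    "very_short_timelines_person": 0.03, "kokotajlo": 0.07,
    "sempere": 0.10,
}
```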

Caveats and what the results are sensitive to

Some caveats:

- Presumably it goes without saying, but overall these numbers are extremely non-robust. They are the byproduct of me spending around 40 hours thinking about AI timelines. I used lots of “judgement” in generating them, on a topic that’s very hard to reason well about and that I don’t know much about.

- Generally, both my inside and outside views are heavily influenced by the community I’m in. It seems unlikely that my views would deviate much from those of the community (and that applies to how I construct the outside view too).

- It’s very likely I made one or more arithmetical / coding / basic reasoning mistakes somewhere along the line. I imagine these wouldn’t affect the results too much but I’m not certain about this.

I could easily have done the procedure differently and got different results. Some things the result seems especially sensitive to:

- The outside view forecasts I chose: I included almost exclusively “community” forecasts in my outside view.

- (for the TAI curve) The relative weighting of outside and inside views: My outside view and inside view are quite different, and the relative weighting is pretty arbitrary.

- (for the TAI curve, to some extent) my subjective P(TAI|AGI): This is currently relatively low and pushes down my inside view cdf for TAI a lot.

My thoughts on this / interesting things

- The concreteness of having actual slightly-thought-through AI timelines feels like a bit of a "moment" psychologically.

- Still, it’s not clear to me how much I “really believe” these forecasts. (This is despite the fact that e.g. for AGI my “inside view” is more or less the same as my “overall view”, according to the above procedure).

- On an intellectual level, I find it hard to really buy the idea that there’s a significant probability (e.g. 5%) that (say) there’ll be 20% annual growth from 2030 onwards.

- On a gut level, I certainly don’t feel like I’ve internalised that the future might look crazy in the way implied by TAI (or indeed AGI).

- Public discussion on AI timelines is surprisingly thin, especially high quality discussion.

- This partly comes from me having very high expectations here, I think.

- Probably for many people ">1% chance within the next 20 years" is as clear as it needs to be for decision making, so maybe it doesn’t make sense for people to focus more effort on this.

- My probability that AGI leads to TAI seems to be significantly lower than other people’s (for me it’s ~50% whereas for other people I think it’s more like 90%). That seems to come from me thinking it’s relatively unlikely (~30% probability) that AGI would cause >20% per year growth rates (for say a couple of decades).

- I don’t have especially strong reasons for saying there’s a ~30% chance that AGI would cause >20% per year growth rates. But here are a few things that feed into it:

- I think it’s hard to automate things (although I don’t know, maybe if AGI means “human but better” this turns out not to be a relevant consideration).

- My impression from talking to Phil Trammell at various times is that it’s just really hard to get such high growth rates from a new technology (and I think he thinks the chance that AGI leads to >20% per year growth rates is lower than I do).

- I sort of feel like other people don’t really realise / believe the above so I feel comfortable deviating from them.


- My inside view vs my overall view:

- For TAI, my overall view and inside view are quite different:

- In contrast, for AGI my overall view and inside view are very similar:

- The main reason the TAI views are so different is my P(TAI|AGI) not being close to 1. It’s pretty interesting that the inside view, outside view, and overall view are so similar for AGI; the forecasts that go into the outside view are very different to each other, and e.g. different weightings could have made the outside view very different to the inside view. So you can see what I mean, the forecasts that go into the outside view for AGI are shown below:

- Generally I think this has been surprisingly useful for thinking about other potentially transformative technologies.

- Seeing how other people think about a future transformative technology is instructive in many ways (what’s possible, how you could approach it, examples of specific frameworks, …).

- Having a bit more context on ~how AI safety people think feels pretty useful for communicating with those people.

- Although I spent some time reading / thinking about 'influentialness', I didn't include any update on my probabilities based on this – probably a mistake!

- Although reading about it probably did affect my thinking and so my overall view timelines.

- How my views changed after doing this:

- When I got started on this, my inside view “50% probability of TAI” year was ~2100, and my overall view “50% probability of TAI” year was ~2070 (all based on gut feeling + guesses).

- Now my inside view on TAI is ~20% by 2100, and my overall view is ~40% by 2100.

- I think the main differences are: my gut feeling inside view became a bit more pessimistic; outside views are less aggressive than I thought; and I think P(TAI|AGI) is not that high, while people are often forecasting AGI.

Thanks to Max Daniel for encouraging me to make this a full post.

My impression (I could be wrong) is that this claim is interestingly contrarian among EA-minded AI researchers. I see a potential tension between how much weight you give this claim within your framework, versus how much you defer to outside views (and potentially even modest epistemology – gasp!) in the overall forecast.

I find that 57% very difficult to believe. 10% would be a stretch.

Having intelligent labor that can be quickly produced in factories (by companies that have been able to increase output by millions of times over decades), and do tasks including improving the efficiency of robots (already cheap relative to humans where we have the AI to direct them, and that before reaping economies of scale by producing billions) and solar panels (which already have energy payback times on the order of 1 year in sunny areas), along with still abundant untapped energy resources orders of magnitude greater than our current civilization taps on Earth (and a billionfold for the Solar System) makes it very difficult to make the AGI but no TAI world coherent.

Cyanobacteria can double in 6-12 hours under good conditions, and mice can grow their population more than 10,000x in a year. So machinery can be made to replicate quickly, and trillions of von Neumann-equivalent researcher-years (but with AI advantages) can move us further towards that from existing technology.
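The replication figures in this comment translate into doubling times and doublings per year via standard exponential-growth arithmetic (ignoring resource limits). A small sketch using only the numbers quoted in the comment; the function names are illustrative:

```python
import math


def doublings_per_year(doubling_time_hours: float) -> float:
    """Number of doublings in a 365-day year for a given doubling time."""
    return 365 * 24 / doubling_time_hours


def doubling_time_days(annual_growth_factor: float) -> float:
    """Doubling time implied by growing by `annual_growth_factor` over one year."""
    return 365 / math.log2(annual_growth_factor)


# Cyanobacteria doubling every 6-12 hours: 730 to 1,460 doublings per year.
print(doublings_per_year(12), doublings_per_year(6))
# Mice growing >10,000x in a year: a doubling time of under ~28 days.
print(round(doubling_time_days(10_000), 1))
```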

I predict that cashing out the given reasons into detailed descriptions will result in inconsistencies or very implausible requirements.

Thanks for these comments and for the chat earlier!

*I probably missed some nuance here, please feel free to clarify if so.

On the object level (I made the other comment before reading on), you write:

Maybe this is talking about definitions, but I'd say that "like the Industrial Revolution or bigger" doesn't have to mean literally >20% growth / year. Things could be transformative in other ways, and eventually, at least, I feel like things would almost certainly accelerate in a future controlled with or by AGI.

Edit: And I see now that you're addressing why you feel comfortable disagreeing:

I'm not sure about that. :)

I think I might have got the >20% number from Ajeya's biological anchors report. Of course, I agree that, say, 18% growth for 20 years might also be at least as big a deal as the Industrial Revolution. It's just a bit easier (for me anyway) to think about a particular growth level. Based on this, maybe I should give some more probability to "high enough growth for long enough to be at least as big a deal as the Industrial Revolution" than I did when I was thinking just about the 20% number. (Edit: just to be clear, I did also give some (though not much) probability to non-extreme-economic-growth versions of transformative AI.)

I guess this wouldn't be a big change though so it's probably(?) not where the disagreement comes from. E.g. if people are counting 10% growth for 10 years as at least as big a deal as the Industrial Revolution I might start thinking that the disagreement mostly comes from definitions.
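To make the comparison between these growth scenarios concrete, here is a small sketch of the cumulative increase implied by a constant annual growth rate sustained for a number of years. It is simple compounding and makes no claim about which multiplier counts as "Industrial Revolution-sized".

```python
def cumulative_multiplier(annual_rate: float, years: int) -> float:
    """Factor by which output grows at a constant annual rate compounded over `years`."""
    return (1 + annual_rate) ** years


for rate, years in [(0.20, 20), (0.18, 20), (0.10, 10)]:
    print(f"{rate:.0%} for {years} years -> x{cumulative_multiplier(rate, years):.1f}")
```

That works out to roughly 38x, 27x and 2.6x respectively, so 18% for 20 years is in the same ballpark as 20% for 20 years, while 10% for 10 years compounds to far less.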

I phrased my point poorly. I didn't mean to put the emphasis on the 20% figure, but more on the notion that things will be transformative in a way that fits neatly in the economic growth framework. My concern is that any operationalization of TAI as "x% growth per year(s)" is quite narrow and doesn't allow for scenarios where AI systems are deployed to secure influence and control over the future first. Maybe there'll be a war and the "TAI" systems secure influence over the future by wiping out most of the economy except for a few heavily protected compute clusters and resource/production centers. Maybe AI systems are deployed as governance advisors primarily and stay out of the rest of the economy to help with beneficial regulation. And so on.

I think things will almost certainly be transformative one way or another, but if you therefore expect to always see stock market increases of >20%, or increases in other economic growth metrics, then maybe that's thinking too narrowly. The stock market (like other standard indicators of economic growth) is not what ultimately matters. Power-seeking AI systems would prioritize "influence over the long-term future" over "short-term indicators of growth". Therefore, I'm not sure we'll see economic growth right when "TAI" arrives. The way I conceptualize "TAI" (and maybe this is different from other operationalizations, though, going by memory, I think it's compatible with the way Ajeya framed it in her report, since she framed it as "capable of executing a 'transformative task'") is that "TAI" is certainly capable of bringing about a radical change in growth mode eventually, but it may not necessarily be deployed to do that. I think "where's the point of no return?" is a more important question than "Will AGI systems already transform the economy 1, 2, or 4 years after their invention?"

That said, I don't think the above differences in how I'd operationalize "TAI" are cruxes between us. From what you say in the writeup, it sounds like you'd be skeptical both that AGI systems could transform the economy (/world) directly and that they could transform it eventually via influence-securing detours.

Thanks, this was interesting. Reading this, I think maybe I have a somewhat higher bar than you for what counts as transformative (i.e. at least as big a deal as the Industrial Revolution). And again, just to say, I did give some probability to transformative AI that didn't act through economic growth. But the main thing that stands out to me is that I haven't really thought all that much about the different ways powerful AI might be transformative (as is also the case for almost everything else here!).

I don't know, for what it's worth I feel like it's pretty okay to have an inside view that's in conflict with most other people's and to still give a pretty big weight (i.e. 80%) to the outside view. (maybe this isn't what you're saying)

Not sure I understood this, but the related statement "epistemic modesty implies Ben should give more than 80% weight to the outside view" seems reasonable. Actually maybe you're saying "your inside view is so contrarian that it is very inside view-y, which suggests you should put more weight on the outside view than would otherwise be the case", maybe I can sort of see that.

My understanding is that Lukas's observation is more like:

These suggest you're using a different balance of sticking with your inside view vs. updating toward others for different questions/parameters. This does not need to be a problem, but it at least raises the question of why.

Yes, that's what I meant. And FWIW, I wasn't sure whether Ben was using modest epistemology (in my terminology, outside-view reasoning isn't necessarily modest epistemology), but there were some passages in the original post that suggest low discrimination on how to construct the reference class. E.g., "10% on short timelines people" and "10% on long timelines people" suggests that one is simply including the sorts of timeline credences that happen to be around, without trying to evaluate people's reasoning competence. For contrast, imagine wording things like this:

"10% credence each to persons A and B, who both appear to be well-informed on this topic and whose interestingly different reasoning styles both seem defensible to me, in the sense that I can't confidently point out why one of them is better than the other."

Thanks, this was helpful as an example of one way I might improve this process.

Ah right, I get the point now, thanks. I suppose my P(TAI|AGI) is supposed to be my inside view as opposed to my all-things-considered view, because I'm using it only for the inside view part of the process. The only things that are supposed to be all-things-considered views are things that come out of this long procedure I describe (i.e. the TAI and AGI timelines). But probably this wasn't very clear.

Thanks for sharing the full process and your personal takeaways!

Very small note: I'd recommend explaining your abbreviations at least once in the post (i.e., do the typical "full form (abbrev)"). I was already familiar with AGI, but it took me a few minutes of searches to figure out that TAI referred to transformative AI (no thanks to Tay, the bot).

Thanks for this, I've made a slight edit that hopefully makes these clearer.

Can you elaborate on why you think this?

I really don't have strong arguments here. I guess partly from experience working on an automated trading system (i.e. actually trying to automate something), partly from seeing Robin Hanson arguing that automation has just been continuing at a steady pace for a long time (or something like that; it's possible I'm completely misremembering this), and partly from guessing that other people can be a bit naive here.

This very long lesswrong comment thread has some relevant discussion. Maybe I'm saying I kind of lean towards more of the side that user 'julianjm' is arguing for.

Robin Hanson argues in Age of Em that annualized growth rates will reach over 400,000% as a result of automation of human labor with full substitutes (e.g. through brain emulations)! He's a weird citation for thinking the same technology can't manage 20% growth.

"I really don't have strong arguments here. I guess partly from experience working on an automated trading system (i.e. actually trying to automate something)"

This and the usual economist arguments against fast AGI growth seem to be more about denying the premise of ever succeeding at AGI/automating human substitute minds (by extrapolation from a world where we have not yet built human substitutes to conclude they won't be produced in the future), rather than addressing the growth that can then be enabled by the resulting AI.

This was a fascinating read.

Why did you choose to almost exclusively refer to EAs for your "outside view"? Is that a typical use of the term outside view?

I didn't consciously choose to mostly(?) focus on EAs for my outside view, but I suppose ultimately it's because these are the sources I know about. I wasn't exactly trying to do a thorough survey of relevant literature / thinking here (as I hope was clear!).

I guess ~how much of a biased view that gives depends on how good the possible "non-EA" sources are. I guess I'd be kind of surprised if there were really good "non-EA" sources that I missed. I'd be very interested to hear about examples.

As for the term "outside view", I feel pretty confused about the inside vs outside view distinction, and doing this exercise didn't really help with my confusion :).