This blog would not have been possible without tremendous feedback from Scott Emmons, Alejandro Ortega, James Blackburn-Lynch, Bill Bravman, and Wendy Lynch.

Summary

- The EA community needs to pay attention to long-term effects of traditionally neartermist interventions (what I call TNIs), because they are clearly quite significant. The Charlemagne Effect, whereby present people will reproduce and create huge numbers of future people, is at least one highly significant long-term effect of TNIs. For this reason, I make the longtermist case for neartermism.

- Ignoring the counterfactual of humanity continuing long-term independent of traditionally longtermist interventions (what I call TLIs) has created unfair comparisons. TNIs and TLIs have clear tradeoffs in expected value. In the simplest analysis, TLIs gain value by changing the likelihood of long-term survival, while TNIs gain value by increasing the projected number of future beings.

- With the Charlemagne Effect, estimates of future beings do not affect the neartermist vs. longtermist debate when assuming there is no carrying capacity. In these cases, both benefit equally from large numbers of future people, and the potential astronomical scale of future sentience has no bearing on the discussion.

- Just because an intervention is a TLI does not mean it obviously must trump the impact of a TNI. In fact, based on estimates from Greaves and MacAskill in “The Case for Strong Longtermism,” via the Charlemagne Effect malaria bednets may have greater longtermist expected value than risk reduction from meteors, pandemics, and AI.

- Based on this analysis, we can no longer say that many of the most well-known TLIs (i.e. reduction of asteroid, pandemic, or AI existential risk) are strictly and obviously more cost-effective than the best TNIs from a longtermist perspective.

- If this analysis is correct, we should ensure EA supports a healthy balance of TNIs and TLIs, with more rigorous discourse about the long-term impact of TNIs vs. TLIs informed by both qualitative and quantitative analysis.

Coming to Terms with Longtermism

I came to this community in a way similar to many others — being inspired by the tremendous opportunity to improve lives through effective charities in the developing world (shoutout to Doing Good Better!). However, after reading more about longtermism over the past few months, I better understand the arguments that future people matter, that there will be a lot of them in the future, and that we can and should try to reduce existential risk.[1]

That being said, I believe that to shift my time and money to focus primarily on longtermist cause areas is an incredibly important decision. Reallocating money from the GiveWell Maximum Impact Fund — which mostly funds highly cost-effective global health interventions — to existential risk reduction initiatives to try to save future people means that, in all probability, many present people will die. Extrapolating from my own actions to those of others in EA, the reality is that what the EA community chooses to spend its resources on has enormous life-or-death consequences for millions of people who are suffering today. The stakes could not be much higher.

This may be an uncomfortable fact, and for this reason it is unsurprising that there has been pushback on the longtermist framework. There have been several critiques of longtermism, and at least one attempt to demonstrate convergence between neartermism and longtermism.[2] However, for the purposes of this post I will assume a longtermist framework, and instead ask, “Given longtermism, what should EAs spend their time and money on?”[3]

The Charlemagne Effect

To address this question, it is important to point out that I am not aware of any GiveWell equivalent in longtermism.[4] This makes some sense given how speculative forecasting in longtermism can be (e.g. calculating existential risk probabilities, how much investment affects existential risks, etc.). I found it more surprising, however, that I haven’t been able to find a direct comparison of highly effective traditionally neartermist interventions (TNIs for short, e.g. Against Malaria Foundation (AMF), Evidence Action, etc.) with traditionally longtermist interventions (TLIs for short, e.g. investment in reducing existential risk through AI alignment research, pandemic prevention, etc.).

So far, I have just found qualitative arguments asserting that TLIs are many orders of magnitude more effective than TNIs. The reasoning is that any intervention that reduces the chance of human extinction, and therefore supports the potential existence of a mind-bogglingly enormous number of future beings, will virtually always be more impactful than a TNI, which is usually assumed to have significant impacts only in the short term. For example, in “The Case For Strong Longtermism,” Greaves and MacAskill write that “we emphasize that we are not considering the long-run knock-on effects of bednet distribution. It is possible, for all we say in this paper, that bednet distribution is the best way of making the far future go well, though we think this unlikely.”[5]

But when you start to think more deeply about this, the long-run knock-on effects are probably very, very important. The easiest way to illustrate this is thinking about reproduction. If I give $4,000 to AMF to distribute malaria-preventing bednets, which on average saves the life of one person, this person will have an expected number of children based on the fertility rate in their region.[6] Those children will go on to have children, and those children will have children, and so on, following an exponential curve. The more time goes by, the more the number of descendants will accelerate until there are millions, billions, or even more future people.

Amazingly, none of these people would ever have existed if I didn’t donate that $4,000. I call this the Charlemagne Effect, after the oft-quoted fun fact that, by this same principle, Charlemagne is a common ancestor of most people of European descent.[7] Within the longtermist framework, the biggest relative benefit of my donation is not the lives I saved in the present, but the huge number of lives I helped bring into existence in the future.

Calculating Longtermist Value

This principle of exponential returns is the crux of the argument of Greaves and MacAskill when arguing for investing in existential risk reduction. Thus, one might respond to the above analysis by saying that by distributing bednets I don’t do anything to increase the probability of long-term human survival, and thus these benefits are overwhelmingly outweighed by effective investments in existential risk reduction. This might seem intuitive, but when applying an expected value calculation, we can quickly see why this is not necessarily the case.

The reason is that there are two key parts of a longtermist value calculation — the expected value of increasing the chance of long term human survival, and the expected value of there being more people to experience such survival. Reducing existential risk has a significant value for the first part of the equation, but minuscule/negligible value for the second, and vice versa for distributing bednets. Most discussions of the value of longtermism over neartermism seem to overlook the second part of the equation.

In its most basic forms, the “Longtermist Value Calculation” can be written as two equations, one for TNIs and one for TLIs. In these equations, for simplicity we assume that TNIs don’t reduce existential risk, and that TLIs don’t save the lives of present people, though a more rigorous analysis could include these factors.

The TNI Longtermist Value Calculation is f(x) = a * b(x) where x = resources (money, time, etc.) put into TNIs, a = Likelihood of Long Term Human Survival, and b = Total Number of Future People Existing Because of the People The TNI Helped in the Present.

The TLI Longtermist Value Calculation is f(y) = c * d(y), where y = resources (money, time, etc.) put into TLIs, c = Total Future People If Long Term Human Survival, and d = Change in Long Term Human Survival Probability.[8]

Let’s look at Greaves and MacAskill’s example of reducing existential risk from asteroids. In their paper, they estimate that NASA’s “Spaceguard Survey, on average, reduced extinction risk by at least 5 * 10⁻¹⁶ per $100 spent.”[9] Let’s also use their “main estimate” of the expected number of future beings, 10²⁴ (they believe that this is a reasonable, conservative estimate and that the true number is likely higher, but they also acknowledge that the true number could in theory end up being significantly lower).[10] From these two numbers, we can predict the expected number of saved future beings to be 500,000,000 per $100 spent, or 5,000,000 per dollar spent.
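The asteroid arithmetic can be checked in a few lines. This is a minimal sketch that uses only the two figures quoted above; the variable names are illustrative and do not come from the paper.

```python
# Figures quoted from Greaves and MacAskill in the text above.
RISK_REDUCTION_PER_100_USD = 5e-16  # extinction-risk reduction per $100 (Spaceguard)
EXPECTED_FUTURE_BEINGS = 1e24       # their "main estimate" of future beings

# Expected future beings saved = total future beings * change in survival probability.
saved_per_100_usd = EXPECTED_FUTURE_BEINGS * RISK_REDUCTION_PER_100_USD
saved_per_usd = saved_per_100_usd / 100

print(f"Saved per $100: {saved_per_100_usd:,.0f}")
print(f"Saved per $1:   {saved_per_usd:,.0f}")
```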

Compare this to bednets. With $100, we will save 0.025 present lives on average. Let’s assume that any saved people reproduce at the average rate for all present people on Earth.[11] We will then estimate how many of the total future people will be descendants of the 0.025 present people saved, assuming that humanity achieves long-term survival. A simple way to do this is to find the proportion of saved present people to the total present population of Earth and multiply that by the total number of future people assuming long-term human survival. Using 50% as the probability of long-term human survival as a somewhat arbitrary base rate,[12] we predict the expected number of saved future beings to be an astounding 1.57 trillion per $100 spent, or 15.7 billion per dollar spent.[13]
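The bednet estimate can be reproduced with a short sketch. The world population figure of 7.95 billion is an assumption chosen here because it is close to the 2022 population and yields the 1.57 trillion figure; the function and constant names are illustrative.

```python
COST_PER_LIFE_USD = 4_000       # rough AMF cost to save one life, from the text
WORLD_POPULATION = 7.95e9       # ASSUMPTION: approximate present world population
EXPECTED_FUTURE_BEINGS = 1e24   # Greaves and MacAskill's "main estimate"
SURVIVAL_PROBABILITY = 0.5      # somewhat arbitrary base rate used in the text


def bednet_future_beings(dollars, survival_probability=SURVIVAL_PROBABILITY):
    """Expected future beings descended from people saved by `dollars` of bednets."""
    lives_saved = dollars / COST_PER_LIFE_USD
    share_of_population = lives_saved / WORLD_POPULATION
    return survival_probability * share_of_population * EXPECTED_FUTURE_BEINGS


print(f"Per $100: {bednet_future_beings(100):.3e}")
print(f"Per $1:   {bednet_future_beings(1):.3e}")
```

Because the survival probability is a parameter, other base rates can be tried without changing the rest of the calculation.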

As one can see, accounting for long-term effects in TNIs can have surprising results — in this simple analysis, bednet distribution is estimated to be approximately 3,000 times more effective than NASA’s Spaceguard Survey.

But wait! Isn’t the bednet example assuming that we avoid human extinction? And if we’re investing in bednets instead of preventing human extinction, there might not be any long-term future people who will benefit from this intervention, right?

There must be a non-zero chance of long-term human survival even without further TLIs since no one can be certain of our extinction, so no matter how pessimistic you are about humanity’s long-term future, there must be at least some long-term expected value from TNIs. However, the base rate for long term human survival will linearly affect the value calculation. If instead of giving ourselves a 50% chance of long term survival we gave ourselves a 1% chance, the expected number of saved future beings would be 50 times less — 31.4 billion, or 314 million per dollar spent. That being said, forecasting 1% for long term human survival seems outside the norm for what I have been able to glean from research and conversations with longtermists, so I will continue using 50% as a reasonable guess for my calculations.
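The linear dependence on the base rate is easy to see numerically. The sketch below is illustrative only: it assumes a present world population of 7.95 billion (not stated in the text) and reuses the $4,000-per-life and 10²⁴ figures from above.

```python
WORLD_POPULATION = 7.95e9      # ASSUMPTION: approximate present world population
EXPECTED_FUTURE_BEINGS = 1e24  # "main estimate" of future beings
LIVES_SAVED_PER_100_USD = 100 / 4_000


def bednet_value_per_100_usd(survival_probability):
    """Expected saved future beings per $100 for a given survival base rate."""
    share = LIVES_SAVED_PER_100_USD / WORLD_POPULATION
    return survival_probability * share * EXPECTED_FUTURE_BEINGS


# The estimate scales in direct proportion to the chosen base rate.
for probability in (0.5, 0.1, 0.01):
    value = bednet_value_per_100_usd(probability)
    print(f"P(survival) = {probability:>4}: {value:.3e} per $100")
```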

But what about the two problems estimated to have the biggest risk for human extinction, pandemics and AI safety? How do they stack up to bednets?

Using numbers estimating risk reduction from pandemics and Artificial Superintelligence (ASI), Greaves and MacAskill project that “$250 billion of spending on strengthening healthcare systems would reduce the chance of such extinction-level pandemics this coming century by at least a proportional 1%,” and estimate a 1/22,000 risk of extinction from a pandemic in the coming century.[14] For ASI, they estimate that “$1 billion of spending would provide at least a 0.001% absolute reduction in existential risk.”[15] With these estimates, spending $250 billion on pandemics would give an expected number of saved future beings of 4.54 * 10¹⁷, or 1,820,000 per dollar spent. For ASI, $1 billion would give an expected number of saved future beings of 10¹⁹, or 10 billion per dollar spent. Running the numbers shows that bednets still come out on top in the expected value calculation, even against AI safety.[16]
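The pandemic and ASI figures follow from the same multiplication. This is a minimal sketch using only the numbers quoted above; the variable names are illustrative.

```python
EXPECTED_FUTURE_BEINGS = 1e24  # "main estimate" of future beings

# Pandemics: $250 billion buys a 1% proportional cut of a 1/22,000 risk.
pandemic_spend_usd = 250e9
pandemic_risk_reduction = 0.01 * (1 / 22_000)
pandemic_saved = EXPECTED_FUTURE_BEINGS * pandemic_risk_reduction
pandemic_saved_per_usd = pandemic_saved / pandemic_spend_usd

# ASI: $1 billion buys a 0.001% absolute reduction in existential risk.
asi_spend_usd = 1e9
asi_risk_reduction = 0.001 / 100
asi_saved = EXPECTED_FUTURE_BEINGS * asi_risk_reduction
asi_saved_per_usd = asi_saved / asi_spend_usd

print(f"Pandemics: {pandemic_saved:.3e} total, {pandemic_saved_per_usd:.3e} per $1")
print(f"ASI:       {asi_saved:.3e} total, {asi_saved_per_usd:.3e} per $1")
```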

Given the immense amount of forecasting guesswork, it should be abundantly clear that the difference in estimated effectiveness between bednets and AI safety is well within any reasonable margin of error. And even though asteroids and pandemics lag behind by several orders of magnitude in this analysis, the estimates are so sketchy that I would argue that working to prevent asteroids and pandemics should not be discounted as ineffective longtermist interventions without further analysis. Further, because Greaves and MacAskill intentionally use very conservative estimates of existential risk reduction in these two cases, I don’t find this simple analysis to land a clear-cut verdict on whether TNIs or TLIs are better longtermist investments (see discussion below).

However, in this simple calculation, it is clear that TNIs can compete with, or even beat, TLIs. In a simple continuous growth model, TNIs will be estimated to be more effective than TLIs if the proportion of people saved to total current world population is greater than a TLI’s reduction of the probability of human extinction.[17] In a more conceptual sense, this equation says that if there is to be long-term human survival, the more people around to enjoy it, the better!
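The comparison rule can be sketched as a single inequality, because the total number of future beings multiplies both sides and cancels. The sketch assumes that both kinds of intervention scale linearly per dollar and that the present world population is 7.95 billion; neither assumption comes from the text. It also keeps the baseline survival probability as a factor on the TNI side, as in the bednet calculation above.

```python
def tni_beats_tli(lives_saved_per_usd, world_population,
                  survival_probability, risk_reduction_per_usd):
    """True if, per dollar, the TNI's share of future people exceeds the TLI's gain."""
    tni_side = survival_probability * lives_saved_per_usd / world_population
    return tni_side > risk_reduction_per_usd


BEDNET_LIVES_PER_USD = 1 / 4_000  # one life per $4,000, from the text
WORLD_POPULATION = 7.95e9         # ASSUMPTION: approximate present world population
SURVIVAL_PROBABILITY = 0.5        # base rate used in the text

# Per-dollar risk reductions derived from the Greaves and MacAskill figures.
tli_risk_reduction_per_usd = {
    "Spaceguard": 5e-16 / 100,
    "pandemics": (0.01 / 22_000) / 250e9,
    "ASI": (0.001 / 100) / 1e9,
}

results = {
    name: tni_beats_tli(BEDNET_LIVES_PER_USD, WORLD_POPULATION,
                        SURVIVAL_PROBABILITY, reduction)
    for name, reduction in tli_risk_reduction_per_usd.items()
}
for name, bednets_win in results.items():
    print(f"Bednets beat {name}: {bednets_win}")
```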

But intuitively, how could TNIs beat out TLIs if the impact of reducing existential risk could save so many future beings? The answer is that on a cosmic scale, currently there are relatively few people. New people will be the ancestors of a significant fraction of future people if the population reaches the dramatic scale that is predicted in the coming millions of years.

Another important corollary here is that the total projected number of future people benefits TNIs as much as TLIs, since for both types of interventions the expected value scales up proportionally, assuming there is no carrying capacity for future beings (for more on carrying capacity, see below). This means that arguments conveying the extreme vastness of sentience in the long-term future, often present in the writings of Toby Ord, Will MacAskill, and other leading longtermists, are relevant to choosing between TNIs and TLIs only in special circumstances, though they would still strengthen the case for supporting longtermism in general.

Counterarguments

There are several important counterarguments within the longtermist framework.

Counterargument: Digital Beings

First, this analysis assumes that most beings in the future will be descendants of current people. If there are digital beings that are worthy of moral consideration in the future, and the number of them dwarfs physical people, then it’s possible that the Charlemagne Effect won’t be nearly as strong compared to the expected value of TLIs, even if existential risk is reduced by a minuscule amount. This returns us to the original argument that Greaves and MacAskill make.

But the future existence of digital sentience is still quite speculative, and by no means a certainty or even necessarily a likelihood.[18] And even if it was, it is possible that digital sentience might not take the form of a huge number of independent beings. It could be one or a few digital beings that have many manifestations but share a single unified consciousness, similar to how cells in my body wouldn’t be considered separate consciousnesses from me.[19] In this case, it is possible that the total number of digital beings could be many magnitudes smaller than the total number of physical people.

All of this analysis is still highly uncertain. More research could help to better understand the likelihood that there will be digital beings worthy of moral consideration, and if so, how many of these beings we would estimate existing. At the same time, this sort of forecasting will likely remain highly uncertain in the near term.

Counterargument: Conservative Estimates

Second, Greaves and MacAskill intentionally used very conservative estimates of risk reduction for pandemics and ASI in order to make a “steel man” argument for their case.[20] If the ex ante rate of existential risk reduction per dollar spent is much higher than their conservative estimates, then these TLIs could end up being much more effective than any TNI.

But these numbers inherently have much uncertainty because of the nature of the forecast (an unprecedented event in human history that could take place at any time in the future). Reasonable people could adjust the rate of existential risk reduction by several orders of magnitude in either direction. More research and credible forecasting is needed to reduce the high degree of uncertainty in these estimates.

Just like with predicting the existence of sentient digital beings, we must come to terms with the fact that this sort of long-term forecasting is inherently uncertain, and we may have to concede that, to some degree, there will be large, reasonable disagreement on these numbers.

Counterargument: Carrying Capacities

Third, the importance of the Charlemagne Effect appears to assume continuous growth for the rest of human history. One might believe that the Charlemagne Effect would be dampened if humanity hit a carrying capacity. For example, if by Year 2100 we hit a carrying capacity of, say, 10 billion people, and then between Year 2100 and the end of the stelliferous era[21] we stay at constant population, then the Charlemagne Effect may have helped us reach 10 billion people in Year 2100, but it can’t take credit for the huge numbers of people who live from Year 2100 onwards.[22] This is because presumably we would’ve reached 10 billion people eventually and then stayed constant at that number for a long time even without a TNI, so the counterfactual of not employing the TNI shows the TNI may not have mattered that much for the far-off future at the end of the day. In this case, reducing existential risk takes on much greater strength.

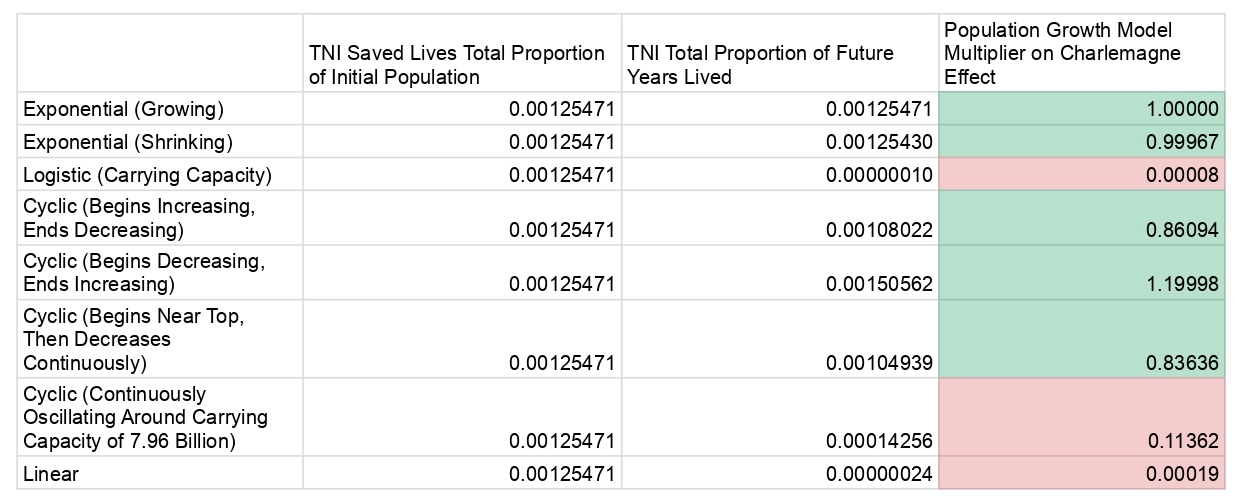

Given this critique, I created a few projections using Wolfram Alpha to show how the Charlemagne Effect responds to different models for population growth — exponential (set rate of growth or decline each year, j-curve), logistic (i.e. hitting a carrying capacity and then staying at that number, s-curve), cyclical (rises and falls), and linear (a constant amount of population growth each year). Under my projections, the Charlemagne Effect seems to generally apply under exponential and cyclical growth models, but not logistic or linear, where it is generally much weaker.[23] In other words, under exponential and cyclical growth models, the proportional long-term value of TNIs stays in line (within one magnitude) with the proportion of the lives saved by the TNI to the initial world population, while it is magnitudes less for logistic or linear growth models.[24]
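The contrast between the exponential and logistic cases can be explored with a small numerical sketch. It is not a reproduction of the Wolfram Alpha projections: the growth rates, carrying capacity, starting population, time horizon, and number of added people are all illustrative assumptions. The function reports the proportional gain in total person-years divided by the proportional gain in initial population, so a value near 1 means the long-term effect stays in line with the share of lives saved.

```python
import math


def exponential(p0, t, rate=1e-7):
    """Population after t years of constant proportional growth."""
    return p0 * math.exp(rate * t)


def logistic(p0, t, rate=0.01, capacity=10e9):
    """Population after t years of growth toward a fixed carrying capacity."""
    return capacity / (1.0 + (capacity - p0) / p0 * math.exp(-rate * t))


def relative_effect(model, p0=8e9, delta=1e6, horizon=100_000, step=10):
    """(extra person-years / baseline person-years) divided by (delta / p0)."""
    steps = horizon // step
    base_area = 0.0
    extra_area = 0.0
    for i in range(steps + 1):
        t = i * step
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoid rule end points
        base = model(p0, t)
        base_area += weight * base * step
        # Integrate the difference directly to limit floating-point cancellation.
        extra_area += weight * (model(p0 + delta, t) - base) * step
    return (extra_area / base_area) / (delta / p0)


print(f"Exponential: {relative_effect(exponential):.6f}")
print(f"Logistic:    {relative_effect(logistic):.6f}")
```

Under these assumed parameters the exponential ratio stays at about 1, while the logistic ratio falls far below 1 and shrinks further as the time horizon lengthens.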

Isn’t it damning enough that the Charlemagne Effect fails under logistic growth, given at some point it is realistic that we will hit some sort of cosmic carrying capacity, either because we fail to colonize space, or even if we do?

For those skeptical about a universe without a human carrying capacity, it is important to note that many of the estimates regarding humongous numbers of future people are predicated on large-scale expansion to new planets across the galaxy. As Greaves and MacAskill argue, it is feasible that future beings could colonize the estimated over 250 million habitable planets in the Milky Way, or even the billions of other galaxies accessible to us.[25] If this is the case, there doesn’t seem to be an obvious limit to human expansion until an unavoidable cosmic extinction event.

Further, even if we cannot be certain that indefinite space expansion will occur, if we assign a nonzero probability to it then the expected value of future people will still grow beyond any carrying capacity, supporting the Charlemagne Effect’s relevance.

However, even within the mathematical analysis the Charlemagne Effect appears to be surprisingly robust in many realistic circumstances that could constrain growth. For example, the exponential growth model only assumed a growth rate of 0.00001%/year (much lower than the current growth rate of 1%/year),[26] reaching a very reasonable 8.8 billion people 1,000,000 years from now.
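The 8.8 billion figure can be sanity-checked with compound growth. The starting population of 7.95 billion is an assumption, since the text does not state the exact value used in the model.

```python
START_POPULATION = 7.95e9             # ASSUMPTION: approximate present world population
ANNUAL_GROWTH_RATE = 0.00001 / 100    # 0.00001% per year, expressed as a fraction
YEARS = 1_000_000

# Compound the tiny annual growth rate over one million years.
final_population = START_POPULATION * (1 + ANNUAL_GROWTH_RATE) ** YEARS

print(f"Population after {YEARS:,} years: {final_population / 1e9:.1f} billion")
```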

Similarly, the Charlemagne Effect holds in situations where the population oscillates in “boom-and-bust” fashion from periods of expansion and contraction without a specific carrying capacity (e.g. cycles between prosperity/thriving and human-caused catastrophe, where an added person will increase by one the population ceiling), or quickly reaches a peak and then declines for the rest of human history.[27] It even holds up in circumstances where the population is exponentially declining, as is true in some European countries and Japan (I modeled a 1% rate of population decline; for comparison, Japan currently has a 0.3% rate of decline).[28] Thus, even in future scenarios where human population grows at a snail’s pace, or in countries where the population is actively declining, the Charlemagne Effect still holds.

What if the population reaches a carrying capacity, but instead of staying right at that number, it oscillates between being slightly above and slightly below that number as nature tries to correct for either over or underpopulation? Assuming that the TNI does not affect the overall carrying capacity and only temporarily affects the maximum or minimum population for one cycle before the boom-and-bust pattern returns to normal, then this version of cyclic growth is very similar to logistic growth in that the Charlemagne Effect will have little effect.[29]

However, note that even in the models where the Charlemagne Effect weakens, it is not necessarily completely irrelevant. In the logistic model I created, the Charlemagne Effect is about 10,000 times less strong — but on the humongous scales of future people, this wouldn’t necessarily disqualify TNIs from competition with TLIs. Under future population scenarios with a carrying capacity, the Charlemagne Effect will be disproportionately weaker the sooner we either reach or start oscillating around a carrying capacity.

If that happens in the very early days of humanity’s future (e.g. 10% or less of the way through), then the Charlemagne Effect will be much less important. But if it happens later, then the Charlemagne Effect will have mattered for a large chunk of our future and thus be important for our future as a whole.

But wait — how could the Charlemagne Effect stand up to this analysis? If the human population rises and falls, or constantly declines, how can the Charlemagne Effect work? A mathematical explanation is that for exponential and cyclic growth, the Charlemagne Effect creates a higher population value across the entire x-axis in proportion to the initial population size, thus creating a large effect when taking the area under the curve.[30] A conceptual explanation is that with exponential and cyclic growth, each person truly matters for the population size at any given time — depending on the model, one more person could mean exponentially more people (the traditional idea of the Charlemagne Effect), significantly slower population declines (as in the case of the Japan example), and more people during boom and bust periods (cyclic growth).

Counterargument: Cluelessness of TNI Long-Term Effects

Fourth, Greaves (2016 and 2020) and Mogensen (2019) have both pointed out the significant uncertainty of the long-term effects of TNIs, specifically looking at the example of bednets.[31] They point to studies suggesting that in certain regions decreasing child mortality might decrease population growth, or that an increasing population in certain regions could decrease average quality of life through overpopulation.[32] On the other hand, Mogensen also points to conflicting studies that indicate that the long-term effects of TNIs could increase population or improve quality of life, and suggests that TNIs could even reduce existential risk.[33]

From this, along with the simple unknowability of far-future effects, Mogensen and Greaves make the case that, because it is effectively impossible to know what the long-term effects of TNIs are (both their magnitude and whether they are positive or negative), the case for TNIs is weakened compared to TLIs, which both argue do not suffer to the same extent from cluelessness.[34]

It is true that the academic literature is conflicted about how reductions in child mortality affect fertility rates, but there are clearly some cases where declines in child mortality have not been followed by significant decreases in fertility rates. For example, in Nigeria and Niger infant mortality has dropped dramatically in the past decades while total fertility has stayed steady, leading to projections of rapid population growth for both countries in the coming years.[35]

The academic studies cited by Mogensen explain that there are many confounding variables as well as questions regarding correlation vs. causation that indicate that there is not a 1:1 causal relationship between child mortality and fertility rates.[36] Further, even if a decrease in child mortality leads to fewer total people, then that does not mean the Charlemagne Effect is irrelevant for all TNIs — for example, children under the age of 5 are about 7 times more likely to die from malaria than the average person, but this still means that many people who could be saved by bednets are older than 5. Saving the lives of these people is much less likely to affect whether their parents have more children, so the Charlemagne Effect would still hold in these cases, even if dampened by about 7x.

To the question of whether population growth will lead to overcrowding that will worsen lives: this point is also highly uncertain. One could believe that, as Steven Pinker demonstrates in Enlightenment Now, life is generally getting better for most people, so the more people to experience that the better, including in developing countries. Or one could argue that more people will increase economic growth and promote technological innovation that will offset the strains of a growing population. Others might point to increasingly scarce resources and the effects of climate change as reasons that greater population will decrease the average quality of life. Again, the relationship between population growth and average quality of life is very complicated and is highly region-dependent.

However, current charitable interventions that EA considers (such as AMF) are at a small enough scale that the effect on overcrowding could be negligible anyway. For example, according to its Form 990 in 2021, AMF, one of the biggest GiveWell-recommended charities, spent about $93m.[37] If we use the rough estimate that AMF saves one life for every $4,000 spent, then it saves about 23,250 lives per year. A remarkable and inspiring number, but not likely to contribute substantially to overpopulation, especially when considering that these benefits are spread across dozens of regions in dozens of countries.[38]

That being said, at the end of the day it is true that it is impossible to fully predict the long-term impact of TNIs, but this is true with trying to predict the far future impact of anything.[39] Thus, TLIs should not be excluded from this critique. Greaves argues that her intuition is that efforts to reduce existential risk are more likely to help the long-term future than TNIs, and that “if we want to know what kinds of interventions might have that property, we just need to look at what people, in fact, do fund in the effective altruist community when they're convinced that longtermism is the best cause area.”[40]

But just because the EA community is already supporting TLIs is not proof that these are better for the long-term future. In fact, there are many reasonable unanticipated long-term effects of TLIs that could arise. To name a few examples, funding TLIs could lead to information hazards that help bad actors (or a sentient AGI) destroy humanity,[41] create a political backlash that sets back existential risk reduction prioritization amongst the public or government leadership, or even prevent a “warning shot” that would have led to greater understanding of key existential risk reduction strategies, and without which a future event causes extinction.

Contrary to Mogensen and Greaves, I believe that the “cluelessness” of the long-term impacts of TLIs is at least as significant as that with TNIs. Further, I think it is likely that because of the inherent impossibility of studying historical data or conducting empirical studies, cluelessness would affect TLIs even more than TNIs.

Note that for all of the Charlemagne Effect’s counterarguments within longtermism (e.g. future existence of lots of digital beings, high effects of TLIs on probability of existential risk, presence of soon-to-be-reached carrying capacity, or unanticipated negative long-term effects of TNIs outpacing the unanticipated negative long-term effects of TLIs), it is quite possible that each of them could have merit to weaken the long-term effects of TNIs. But by the same token one could make counterarguments that weaken the long-term effects of TLIs (e.g. no future digital beings, very low effects of TLIs on probability of existential risk, continuously growing human population, or unanticipated negative long-term effects of TLIs outpacing the unanticipated negative long-term effects of TNIs).

With so many unknowable assumptions within this forecasting, the point is not to show that TNIs are clearly more impactful in the long term than TLIs, but rather that there is no definitive reason that they would be strictly worse.

Counterarguments Outside Longtermism

Last, one could critique the Charlemagne Effect on the same grounds on which one could critique other longtermist arguments: fanaticism,[42] negative utilitarianism,[43] person-affecting views,[44] potentially anti-social implications,[45] etc. Many others have discussed these concerns already, so I won’t get into them here.[46] The purpose of this blog is an internal critique of longtermist cause areas, not a critique of longtermism as a whole.

Analysis of Longtermist Value Calculations

After walking through the counterarguments, we can no longer say that many of the most well-known TLIs (i.e. reduction of asteroid, pandemic, or ASI existential risk) are strictly and obviously more cost-effective than the best TNIs from a longtermist perspective. In fact, TLIs are subject to the same standard analysis of importance, tractability, neglectedness, and numbers-backed impact estimates as other EA cause areas.

It is thus important to consider the marginal value of investments. One could argue that a marginal investment in AI safety right now might have the highest expected value, but this might not always be the case. With diminishing marginal returns, this could switch at some point if the field is saturated with top-notch researchers and strong AI governance, or if many of the biggest threats of the technology are successfully mitigated. Of course, diminishing marginal returns also apply to other types of interventions, such as TNIs. It will be vital to continuously monitor the tractability and neglectedness of both TLIs and TNIs alike.

When evaluating TLIs, the expected value will hinge heavily on the estimation of how much the intervention will improve the long-term human survival rate, and at what cost. And importantly, there may be many interventions, previously thought of as neartermist, that could look as good as or better than TLIs once the Charlemagne Effect is incorporated. In reality, the distinction between TNIs and TLIs is a superficial one. All interventions will have some impact on the long-term future, though some will clearly be better than others.

Considering Potential Implications

Someone could try to take the Charlemagne Effect in directions that go beyond the typical understanding of “altruism” (i.e. directly helping people). For example, assuming these interventions wouldn’t cause unintended suffering, someone could argue that the best TNIs are those that directly impact the people most likely to reproduce in the future, such as children, people in developing countries with higher reproduction rates, or Catholics, who tend to have more children than average. This could include anything from advocating against China’s two-child policy to implementing child care tax credits.

In addition, the Charlemagne Effect’s logic on its surface could be wielded to deprioritize TNIs that don’t directly increase reproduction, or that improve animal welfare.

First, the pro-reproduction argument seems to mark a shift away from charitable work (preventing suffering) toward social engineering (actively increasing the number of people for its own sake), which I would strongly argue is not the original intent or purpose of the effective altruism community.[47][48] Further, getting involved in these sorts of interventions would almost certainly polarize the reputation and thus viability of the broader EA movement.

Second, the pro-reproduction argument and the argument to deprioritize TNIs that don’t encourage reproduction ignore other effects on reproductive fitness, such as having healthy and supportive family, friends, and neighbors as described in the “grandmother hypothesis.”[49]

Third, the Charlemagne Effect is likely only one of many significant long-term effects of TNIs. There may be other significant drivers of TNI longtermist impact, such as future-oriented impacts on the effectiveness of government institutions, the likelihood that animal welfare interventions could lay a foundation for much better animal welfare in the future, or even the popularity of EA on a meta level. Many of these may be harder to quantify and may require more qualitative analysis to define, but this doesn’t mean they are unlikely to be very important.

More research is needed on other longtermist effects of TNIs. It’s possible that some TNIs are relatively much more effective or ineffective than previously thought when viewed through this longtermist lens. It’s also possible that issues that were never seen as EA cause areas before could now be much more competitively impactful.

Conclusion

After laying out my argument, I hope it is clear that I am not critiquing the fundamental philosophy of longtermism. In fact, this post is an internal critique of longtermism — I am hoping to start a conversation about how we as EAs should invest our resources if we take seriously the longtermist effects of TNIs.

It is clear that, even after factoring in the Charlemagne Effect, AI safety and pandemic prevention are likely high-impact cause areas for the EA community. But it is also clear that some TNIs like distributing bednets are likely in the same ballpark of impact or higher, even from a long-term perspective.

With all of this, it is important to emphasize deep epistemic humility in my analysis. I believe reasonable people could weigh the impact of TLIs very differently from me. Some might do their own analysis and determine that resources invested in AI safety, pandemic prevention, and meteor safety are far more impactful than investing in TNIs. Conversely, I think others might find no TLI to be as cost-effective as many top TNIs. If each of these sides is valid and reasonable, then it would be wise to ensure the EA community maintains a healthy balance of resources going to both TLIs and TNIs.

Further, it could be reasonable to think that, with so many uncertain, unknowable factors that could be forecast to show either TLIs or TNIs to be significantly more impactful than the other, quantitative analysis may be less valuable than qualitative analysis in longtermist decision-making, or perhaps quantitative analysis could be just one reference point among many in this context.[50]

However, for those who read this post and still believe in the significance of conducting value calculations to inform longtermist decision-making, this analysis points to the extreme importance of accurately forecasting the probability that a given intervention would reduce existential risk, and of calculating a base rate for long-term human survival. Further, the EA community would strengthen this quantitative analysis if it developed more sophisticated longtermist value calculators that take into account quality-of-life impacts and other long-term impacts of TNIs, which might take more research and thought to define. I would encourage anyone who is thinking about these issues to develop their own longtermist value calculators and plug in their own numbers to make an independent judgment of what they believe is most important and how to use their time and money.
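As a starting point for such a calculator, here is a minimal sketch in Python. It assumes only the simplest framing from the summary: a TLI gains value by changing the probability of long-term survival, and a TNI gains value by proportionally increasing the number of future beings (which presumes no carrying capacity). The function names and every number below are hypothetical placeholders, not estimates from this post or from Greaves and MacAskill.

```python
import math

def tli_value(delta_survival_prob: float, future_beings: float) -> float:
    """Expected future beings gained by raising the chance of long-term survival."""
    return delta_survival_prob * future_beings

def tni_value(baseline_survival_prob: float, added_fraction: float,
              future_beings: float) -> float:
    """Expected future beings gained by enlarging the future population.

    added_fraction is the proportional increase in future population credited
    to the TNI (an assumption that only makes sense without a carrying capacity).
    """
    return baseline_survival_prob * added_fraction * future_beings

# Hypothetical placeholder inputs: replace with your own forecasts.
BASELINE_SURVIVAL = 0.5   # chance humanity survives long-term anyway
DELTA_SURVIVAL = 1e-10    # survival probability gained per unit of TLI spending
ADDED_FRACTION = 1e-9     # proportional population gain per unit of TNI spending

def tni_to_tli_ratio(future_beings: float) -> float:
    """How many times more valuable the TNI is than the TLI in this toy model."""
    return (tni_value(BASELINE_SURVIVAL, ADDED_FRACTION, future_beings)
            / tli_value(DELTA_SURVIVAL, future_beings))

# The ratio is the same whether the future holds 10^10 or 10^24 beings.
for n in (1e10, 1e24):
    print(n, tni_to_tli_ratio(n))
```

In this toy model the number of future beings cancels out of the comparison, so the result depends entirely on the baseline survival rate and the two per-dollar effect sizes. Those are the inputs worth forecasting carefully.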

Finally, I think it would be very important for the EA community to foster healthy debate comparing the effectiveness of various TNIs and TLIs from both a qualitative and quantitative lens. The EA community could work to ensure that folks more inclined to support TNIs or TLIs have strong mutual respect for one another, recognize that different people might be better suited to spend their resources on one or the other, and appreciate the fact that there must be some division of labor within any successful team or movement.

Final Reflection

Writing this post, I realized that I could dramatically help people in the future by helping people in the present, at scales I had never comprehended before. This was incredibly empowering. Beyond knowing that my past donations to the GiveWell Maximum Impact Fund will have a tremendous amount of future impact (as well as present impact), this was also a beautiful reminder of the importance of investing in the relationships in my life.

Consoling a friend during a difficult breakup or mentoring my younger brother will not only help my loved ones (though personally this alone is plenty enough reason to support them). It is quite possible that these interactions will positively influence my loved ones’ future well-being, or how they treat others, even if by a tiny amount. Applying the same principle as the Charlemagne Effect, this positivity could spread virally to everyone who encounters them, and it could also be passed on to their descendants.

Treating others well could have far-reaching ripple effects, spreading happiness to the coming generations across time and space. Thus, breaking down the artificial distinction between neartermism and longtermism could serve not only as a way to help EAs determine how to do the most good with their income and careers, but also as another profound reason to love and care for those around us.

- ^

This piece by Holden Karnofsky effectively articulates many of the best arguments for longtermism, and responses to common counterarguments.

- ^

Critiques: “The Hinge of History,” Peter Singer; “Against Strong Longtermism,” Ben Chugg. Convergence argument: “Convergence thesis between longtermism and neartermism.”

- ^

For what I mean by longtermism, see this post.

- ^

See a discussion about potential GiveWell alternatives in longtermism here.

- ^

“The Case For Strong Longtermism,” Hilary Greaves and William MacAskill, page 5.

- ^

$4,000 is the estimate used in “The Case For Strong Longtermism” for the cost of saving one life on average through the distribution of insecticide-treated bednets. This is in the same ballpark as GiveWell’s most recent estimates.

- ^

“So you’re related to Charlemagne? You and every other living European…,” Adam Rutherford. This of course doesn’t mean that most Europeans are directly descended from Charlemagne, but rather at some point most European pedigrees reproduced with a descendant of Charlemagne. Also note that Charlemagne had at least 18 children, so his case is a bit unusual but the principle still applies. For a more academic treatment of this idea, see “Most Europeans share recent ancestors,” Ewen Callaway.

- ^

Note that these functions are not necessarily linear, and that different amounts of resources could create non-linear outputs (diminishing returns, economies of scale, etc.). Also note that near-term value is ignored in these equations for simplicity, since with most longtermist analyses this value will be very small compared to long-term effects.

- ^

“The Case For Strong Longtermism,” Hilary Greaves and William MacAskill, page 11.

- ^

“The Case For Strong Longtermism,” Hilary Greaves and William MacAskill, page 9.

- ^

It’s quite possible that the real rate would be higher as fertility rates tend to be higher in the countries where such bednets are being deployed.

- ^

I couldn’t find good research on estimating this number, so I used Toby Ord’s ⅙ estimate for existential risk in the next century, and multiplied that by a factor of ~3 to assume that in following centuries there will still be existential risk but it will diminish over time as we master new technologies. I have very little epistemic confidence in this estimate, but an accurate forecast here isn’t essential for my big-picture analysis and conclusions.

- ^

Note that this calculation is simplified to exclude the increased quality of life for those who did not contract malaria because of the bednets, but would also not have died if they did contract malaria. There is medical evidence indicating that those who contract malaria but don’t die from it have long-term health repercussions which could affect reproduction rates. Also, the improved quality of life of not contracting malaria could reasonably be expected to improve the quality of life of the children of bednet recipients by at least some small amount, and then their children’s children, ad infinitum. This same argument could apply to other types of initiatives that improve lives but don’t save them too.

- ^

“The Case For Strong Longtermism,” Hilary Greaves and William MacAskill, page 12.

- ^

“The Case For Strong Longtermism,” Hilary Greaves and William MacAskill, page 15.

- ^

You can view the calculations here.

- ^

Again, note that other effects, such as changes in quality of life for future beings, could be added to this equation to make it more sophisticated. I would guess that adding a quality-of-life factor is likely to favor TNIs.

- ^

- ^

For example, neuroscientist Christof Koch believes that, according to his Integrated Information Theory, the Internet is one united consciousness.

- ^

- ^

- ^

I chose 10^10 as the number for this example because 10 billion seems to be a pretty common estimate for humanity’s carrying capacity on Earth.

- ^

You can view detailed calculations here. To briefly summarize my methodology, I researched population growth equations for exponential, logistic, cyclic, and linear growth, then plugged in numbers that I thought would be most illuminating into Wolfram Alpha. I then recorded the output of the total people that would be alive because of a TNI after 1 million years, and how much long-term value that TNI would’ve provided after 1 million years by comparing it to a control model with no TNI. Note that using different numbers in the formulas would affect the values, but based on my understanding it is unlikely that this would change the big-picture takeaways.

To estimate long-term value, I took the integral of the function with the TNI value (with x between 0 and 1,000,000). This integral should provide a value in a unit I call “Total Lived Years.” The motivation for this unit is that by taking the area under the curve of this population function, you will get the total number of years that were actually lived by people. If you don’t believe me, try sketching out a simple example with pen and paper. For example, if in Year 0, 1, and 2 there are 0 people but in Year 3 and 4 there are 5 people, then there will be 5 lived years (the area under the curve is 1 * 5). If in Year 0 there are 0 people but in each successive year 1 person is added until Year 4, then there will be 8 lived years (4 people on average live for half of the 4 years = 8, or the area under the curve is 4 * 4 * ½ = 8). It would be interesting to explore the robustness and applicability of the “Total Lived Years” unit to future analyses of longtermism.

All calculations and charts can be reviewed at the link in this footnote. I have also described all equations and assumptions in the document. Note that while my models use somewhat arbitrary numbers for the modeling (such as that humanity will only continue for an additional 1 million years), the trends and implications discussed in this analysis should be robust, though it will be important to test this understanding with further research.
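The two pen-and-paper “Total Lived Years” examples above can also be checked numerically. The sketch below is not the Wolfram Alpha workflow used for the actual models. It is a simple midpoint-rule integration in Python, and it assumes the first example is a step function (population jumps to 5 at Year 3) and the second is a straight ramp (population equals the year). All function names are illustrative.

```python
def total_lived_years(population, start, end, steps=4000):
    """Approximate the area under population(t) from start to end (midpoint rule)."""
    width = (end - start) / steps
    return sum(population(start + (i + 0.5) * width) for i in range(steps)) * width

def step_population(t):
    """0 people until Year 3, then 5 people."""
    return 5.0 if t >= 3 else 0.0

def ramp_population(t):
    """Starts at 0 people and gains 1 person per year."""
    return float(t)

# Both integrals run over Years 0 through 4.
print(total_lived_years(step_population, 0, 4))  # approximately 5 lived years
print(total_lived_years(ramp_population, 0, 4))  # approximately 8 lived years
```

The same function can be pointed at any population curve, with and without a TNI, and the difference between the two areas gives the long-term value in Total Lived Years.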

- ^

Note that I don’t discuss linear growth in this post because the idea that population would grow by a set amount each year and be independent of current population size is a highly unrealistic model for large-scale population growth.

- ^

“The Case For Strong Longtermism,” Hilary Greaves and William MacAskill, page 7.

- ^

- ^

In cyclic models, the Charlemagne Effect is either slightly weakened or strengthened depending on the situation, but not by more than 25% in any of my models. The variation appears to depend on what radian (i.e. what part of the wave) you determine to be time t = 0. Further investigation of this is beyond the scope of this post, but it would be interesting to explore further. Note that for these boom-and-bust models we are assuming no carrying capacity. If there were a carrying capacity, the Charlemagne Effect would behave much as it does in the logistic growth model.

- ^

Population growth (annual %) - Japan. Note that in a universe where all of humanity has an exponentially declining population, this might suggest that the quality of life is low enough that bringing more people into the world could be a bad idea!

- ^

What about in circumstances where a TNI is deployed in a country with a growing population but the growth rate slows to zero after a certain number of generations? This is a possible future for many developing countries where such TNIs are used. Just as with an analysis of the total human population, if the growth can be modeled as logistic with a carrying capacity, then the Charlemagne Effect would be weakened. However, if there is not a true carrying capacity and the population continues to grow slowly, cycle up and down, or even decline, the Charlemagne Effect would still hold. And last, of course in the single-country example a fraction of people would migrate to other places that might not have carrying capacities, allowing the Charlemagne Effect to continue to apply at some level.

- ^

This doesn’t apply to logistic growth as the Charlemagne Effect stops creating a higher population value once the carrying capacity is reached. This doesn’t apply to linear growth because the Charlemagne Effect creates a higher value for the initial population, but stops affecting population size after this.
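To illustrate the contrast between growth models, here is a minimal Python sketch that tracks how many extra people exist at time t because one additional person was alive at t = 0. It uses textbook closed forms for exponential, logistic, and linear growth. The starting population, growth rate, and carrying capacity are hypothetical placeholders, not the values used in my models.

```python
import math

def exponential(p0, rate, t):
    """Population after t years of constant proportional growth."""
    return p0 * math.exp(rate * t)

def logistic(p0, rate, t, capacity):
    """Population after t years of logistic growth toward a carrying capacity."""
    return capacity / (1.0 + (capacity - p0) / p0 * math.exp(-rate * t))

def linear(p0, rate, t):
    """Population after t years when a fixed number of people is added per year."""
    return p0 + rate * t

def extra_people(model, p0, saved, *args):
    """Difference in population at time t caused by `saved` extra people at t = 0."""
    return model(p0 + saved, *args) - model(p0, *args)

P0 = 1e9         # hypothetical starting population
CAPACITY = 1e10  # hypothetical carrying capacity

print(extra_people(exponential, P0, 1.0, 0.01, 1000))         # gap keeps growing
print(extra_people(logistic, P0, 1.0, 0.01, 5000, CAPACITY))  # gap vanishes near capacity
print(extra_people(linear, P0, 1.0, 1e4, 1000))               # gap stays at one person
```

Only in the exponential case does the gap compound over time. In the logistic case it shrinks toward zero as the population approaches the carrying capacity, and in the linear case it never grows beyond the initial person.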

- ^

“Cluelessness,” Hilary Greaves; “Evidence, Cluelessness, and the Long Term,” Hilary Greaves; “Maximal Cluelessness,” Andreas Mogensen.

- ^

“Maximal Cluelessness,” Andreas Mogensen, pages 13-16; “Cluelessness,” Hilary Greaves, page 10.

- ^

“Maximal Cluelessness,” Andreas Mogensen, pages 13-16.

- ^

“Maximal Cluelessness,” Andreas Mogensen, pages 15-16; “Evidence, Cluelessness, and the Long Term,” Hilary Greaves, Response five: "Go longtermist."

- ^

- ^

“Maximal Cluelessness,” Andreas Mogensen, pages 13-16.

- ^

As of the publication of this post, the only two GiveWell recommended charities by expenses are GiveDirectly at $229m and Sight Savers International at $194m based on most recently available tax forms. GiveDirectly 990; Sight Savers International, Inc. 990.

- ^

- ^

This point is made, amongst other places, in Superforecasting: The Art and Science of Prediction, Philip Tetlock.

- ^

“Evidence, Cluelessness, and the Long Term,” Hilary Greaves, Response five: "Go longtermist."

- ^

“Roko’s basilisk” might be the most famous example of a potential AI information hazard.

- ^

“Fanaticism.”

- ^

“Three Types of Negative Utilitarianism,” Brian Tomasik.

- ^

“The Nonidentity Problem,” Stanford Encyclopedia of Philosophy.

- ^

“The Hinge of History,” Peter Singer.

- ^

“The Case for Strong Longtermism,” Hilary Greaves and William MacAskill; “Possible misconceptions about (strong) longtermism,” Jack Malde.

- ^

Holden Karnofsky makes a similar point, writing in a Socratic dialogue with himself the following regarding longtermism:

Non-Utilitarian Holden: “I mean, that’s super weird, right? Like is it ethically obligatory to have as many children as you can?”

Utilitarian Holden: “It’s not, for a bunch of reasons. The biggest one for now is that we’re focused on thin utilitarianism - how to make choices about actions like donating and career choice, not how to make choices about everything. For questions like how many children to have, I think there’s much more scope for a multidimensional morality that isn’t all about respecting the interests of others.” [Lightly edited for clarity.]

- ^

Note that there are other prominent examples of taking utilitarian logic to extremes unconstrained by other moral considerations, some of which many in the EA community would likely object to. For example, Michael Sandel poses the question, “In ancient Rome, they threw Christians to the lions in the Coliseum for the amusement of the crowd. Imagine how the utilitarian calculus would go: Yes, the Christian suffers excruciating pain as the lion mauls and devours him. But think of the collective ecstasy of the cheering spectators packing the Coliseum. If enough Romans derive enough pleasure from the violent spectacle, are there any grounds on which a utilitarian can condemn it?”

- ^

“Grandmother Hypothesis, Grandmother Effect, and Residence Patterns,” Mwenza Blell.

- ^

Many disciplines, such as international relations, political campaigning, or business management generally use qualitative analysis to make decisions, even while often being informed by quantitative analysis.

Thanks for the post.

As you note, whether you use exponential or logistic assumptions is essentially decisive for the long-run importance of increments in population growth. Yet we can rule out exponential assumptions which this proposed 'Charlemagne effect' relies upon.

In principle, boundless forward compounding is physically impossible, as there are upper bounds on growth rate from (e.g.) the speed of light, and limitations on density from the amount of available matter in a given volume. This is why logistic functions, not exponential ones, are used for modelling populations in (e.g.) ecology.

Concrete counter-examples to the exponential modelling are thus easy to generate. To give a couple:

A 1% constant annual growth rate assumption would imply that saving one extra survivor 4000 years ago would have resulted in a current population of ~2 × 10^17: 200 quadrillion people.

A 'conservative' 0.00001% annual growth rate still results in populations growing one order of magnitude every ~25 million years. At this rate, you end up with a greater population than atoms in the observable universe within 2 billion years. If you run until the end of the stelliferous era (100 trillion years) at the same rate, you end up with populations on the order of 10^millions, with a population density basically 10^thousands every cubic millimetre.
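These orders of magnitude can be sanity-checked with a few lines of Python. This is only a rough sketch. It works in log10 space because the raw numbers overflow ordinary floats, and the function name is illustrative.

```python
import math

def orders_of_magnitude(annual_rate, years):
    """log10 of the growth factor (1 + annual_rate) ** years."""
    return years * math.log1p(annual_rate) / math.log(10)

# 1% annual growth for 4000 years: about 17.3 orders of magnitude (~2 * 10^17).
print(orders_of_magnitude(0.01, 4000))

slow_rate = 1e-7  # 0.00001% per year, written as a fraction

# Years needed for one order of magnitude at the slow rate (roughly 23 million).
print(1 / orders_of_magnitude(slow_rate, 1))

# Growth over 2 billion years: about 87 orders of magnitude.
print(orders_of_magnitude(slow_rate, 2e9))

# Growth over 100 trillion years: about 4.3 million orders of magnitude.
print(orders_of_magnitude(slow_rate, 1e14))
```

For comparison, the number of atoms in the observable universe is commonly estimated at around 10^80.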

I think it’s worth mentioning that what you’ve said is not in conflict with a much-reduced-but-still-astronomically-large Charlemagne Effect: you’ve set an upper bound for the longterm effects of nearterm lives saved at <<<2 billion years, but that still leaves a lot of room for nearterm interventions to have very large long term effects by increasing future population size.

That argument refers to the exponential version of the Charlemagne Effect; but the logistic one survives the physical bounds argument. OP writes that they don’t consider the logistic calculation of their Charlemagne Effect totally damning, particularly if it takes a long time for population to stabilise:

Hi Greg,

Thanks for reading the post, and your feedback! I think David Mears did a good job responding in a way aligned with my thinking. I will add a few additional points:

Just came across this post. I can’t overstate how great I think this is. I would love to see someone respond to this post with another post including some hardcore QALY and DALY calculations, and/or further considering population ethics and the trade-offs between infant mortality and fertility rates.

This sentiment has already been expressed by others across the forum lately, but in recent times (maybe the past two to three years) it seems that EA has been largely co-opted by longtermism and rationalism, which I think is a big turn-off for both new and old EAs that are more aligned with TNIs. I think EA needs to reinstate a healthy balance and carefully reconsider how it is currently going about community-building.

[I read this quickly, so sorry if I missed something]

First, on the scope of the argument. When you talk about "Traditional neartermist interventions", am I right in thinking you *only* have life-saving interventions in mind? Because there are "traditional neartermist interventions", such as alleviating poverty, that not only do not save lives, but also do not appear to have large effects on future population size.

If your claim applies only to the traditionally near-term interventions which increase the population size (in the near term), then you should make that clear. (Aside, I found the "TLIs" and "TNIs" thing really confusing because the two are so similar and this would be a further reason to change them).

Second, on your argument itself. It seems to rest on this speculative claim.

But, as an empirical matter, this is highly unlikely. If you look at the UN projections for world population, it looks like this will peak around 2100. As countries get richer, fertility - the number of children each woman has - goes down. Fertility rates are below 2 in nearly every rich country (e.g. see this), which is below the 'Replacement level fertility' of 2.1, the average number of children per woman you need to keep the population constant over time. As poorer countries get richer, we can expect fertility to come down there too. So, your claim might be true if the Earth's population was set to keep growing in perpetuity, but it's not. On current projections, saving a life in a high-fertility country might lead to one to two generations of above replacement level fertility. (You mention interstellar colonisation, but it's pretty unclear what relationship that would have with Earth's population size).

All this is really before you get into debates about optimum population, a topic there is huge uncertainty about.

We don’t need to be certain the UN projections are wrong to have some credence that world population will continue to grow. If that credence is big enough, the Charlemagne Effect can still win.

Hi Michael, thanks for the feedback and the interest in this post! I'll try to respond to both of your points below:

The hope of this post is not to argue that TNIs are more impactful than TLIs, but rather to make the case that people could reasonably disagree about which are more impactful based on any number of assumptions and forecasts. And therefore, that even within a longtermist utilitarian analysis, it is not obviously better to invest only in TLIs.