Written by Brian Tomasik. Published by the EA Forum team as a classic repost.

Summary

I think some in the effective-altruism movement overestimate the extent to which charities differ in their expected marginal cost-effectiveness. This piece suggests a few reasons why we shouldn't expect most charities to differ by more than hundreds of times. In fact, I suspect many charities differ by at most ~10 to ~100 times, and within a given field, the multipliers are probably less than a factor of ~5. These multipliers may be positive or negative, i.e., some charities have negative expected net impact. I'm not claiming that charities don't differ significantly, nor that we shouldn't spend substantial resources finding good charities; indeed, I think both of those statements are true. However, I do hope to challenge black/white distinctions about effective/ineffective charities, so that effective altruists will see greater value in what many other people are doing in numerous places throughout society.

Introduction

"It is very easy to overestimate the importance of our own achievements in comparison with what we owe others."

— attributed to Dietrich Bonhoeffer

Sometimes the effective-altruist movement claims that "charities differ in cost-effectiveness by thousands of times." While this may partly be a marketing pitch, it also seems to reflect some underlying genuine sentiments. Occasionally there are even claims to the effect that "shaping the far future is 10^30 times more important than working on present-day issues," based on a naive comparison of the number of lives that exist now to the number that might exist in the future.

I think charities do differ a lot in expected effectiveness. Some might be 5, 10, maybe even 100 times more valuable than others. Some are negative in value by similar amounts. But when we start getting into claimed differences of thousands of times, especially within a given charitable cause area, I become more skeptical. And differences of 10^30 are almost impossible, because everything we do now may affect the whole far future and therefore has nontrivial expected impact on vast numbers of lives.

It would require razor-thin exactness to keep the expected impact on the future of one set of actions 10^30 times lower than the expected impact of some other set of actions.

I'll elaborate a few weak arguments why our expectations for charity cost-effectiveness should not diverge by many orders of magnitude. I don't claim any one of these is a fully general argument. But I do see a macro-level trend that, regardless of which particular case you look at, you can come up with reasons why a given charity isn't more than, say, 10 or 100 times better than many other charities. Among charities in a similar field, I would expect the differences to be even lower—generally not more than a factor of 10.

Note that these are arguments about ex ante expected value, not necessarily actual impact. For example, if charities bought lottery tickets, there would indeed be a million-fold difference in effectiveness between the winners and losers, but this is not something we can figure out ahead of time. Similarly, there may be butterfly-effect situations where just a small difference in inputs produces an enormous difference in outputs, such as in the proverb "For Want of a Nail". But such situations are extremely rare and are often impossible to predict ex ante.

By "expected value" I mean "the expected value estimate that someone would arrive at after many years of studying the charity and its impacts" rather than any old expected value that a novice might have. While it's sometimes sensible to estimate expected values of 0 for something that you know little about on grounds of a principle of indifference, it's exceedingly unlikely that an expected value will remain exactly 0 after studying the issue for a long time, because there will always be lots of considerations that break symmetry. For example, if you imagine building a spreadsheet to estimate the expected value of a charity as a sum of a bunch of considerations, then any single consideration whose impact is not completely symmetric around 0 will give rise to a nonzero total expected value, except in the extremely unlikely event that two or more different considerations exactly cancel.

I'm also focusing on the marginal impact of your donating additional dollars, relative to the counterfactual in which you personally don't donate those dollars, which is what's relevant for your decisions. There are of course some crucially important undertakings (say, keeping the lights on in the White House) that might indeed be thousands of times more valuable per dollar than most giving opportunities we actually face on the margin, but these are already being paid for or would otherwise be funded by others without our intervention.

Of course, there are some extreme exceptions. A charity that secretly burns your money is not competitive in effectiveness with almost anything else. Actually, if news of this charity got out, it might cause harm to philanthropy at large and therefore would be vastly negative in value. Donating to painting galleries or crowd-funding altruistically neutral films might be comparable in impact to not giving at all. And even money spent on different luxuries for yourself may have appreciably different effects that are within a few orders of magnitude of the effect of a highly effective charity donation.

What I'm talking about in this piece are charities that most people reasonably consider to be potentially important for the world—say, the best 50% of all registered 501(c)(3) organizations. Of course, there are also many uses of money that do substantial good that do not involve donating to nonprofits.

Finally, note that this discussion assumes valuation within a particular moral framework, presumably a not-too-weird total consequentialism of some sort. Comparing across moral frameworks requires an exchange rate or other harmonization procedure, which is not my concern here.

With these qualifications out of the way, I'll dive into a few specific arguments.

Arguments for the claim

Argument 1: Many types of flow-through effects

By "flow-through effects" I mean indirect effects of a charity's work. These could include the spill-over implications on the far future of the project in which the charity engages, as well as other side effects of the charity's operations that weren't being directly optimized for.

Charities have multiple flow-through effects. For example, with an international-health organization, some relevant flow-through impacts include

- lives saved

- contribution to health technology

- contribution to transparency, measurement, and research in the field

- persuading developed-world donors of the importance of global health, thereby increasing donations and compassion in the long run

- writing newsletters, a website, and editorials in various magazines

- providing training for staff of the organization so that they can be empowered to do other things in the future[1]

- etc.

For papers written about dung beetles, effects include

- more knowledge of dung beetles

- better understanding of ecology and evolution in general, with some possible spillover applications to game theory, economics, etc.

- better understanding of behavior, neuroscience, and nature's algorithms

- development of scientific methodology (statistics, data sampling, software, communication tools, etc.) with applications to other fields

- training for young scientists

- etc.

Even if a charity is, say, 10 times better along one of these dimensions, it's unlikely to be 10 times better along all of them at once. As a result, a charity that seems 10 times as cost-effective on its main metric will generally be less than 10 times as effective all things considered.

Of course, probably some charities are better along all dimensions at once, just as some people may be smarter across all major domains of intelligence than others, but it's unlikely that the degree of outstandingness is similarly high across all dimensions.

To illustrate, suppose we're comparing charity A and charity B. In terms of the most salient impact of their work, charity A has a cost-effectiveness per dollar of 1000, while charity B's cost-effectiveness per dollar is only 1. So it looks like charity A is 1000 times more cost-effective than charity B. However, along another dimension of altruistic impact, suppose that charity A is 10, while charity B is 0.5. On a third dimension, charity A is 40, and charity B is 6.5. On a fourth dimension, charity A is -20, and charity B is -1. All told, the ratio of charity A to charity B is (1000 + 10 + 40 - 20) / (1 + 0.5 + 6.5 - 1) = 147.
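The arithmetic in this illustration can be checked with a short sketch. The per-dimension scores are the hypothetical numbers from the paragraph above; the variable names are my own and carry no special meaning.

```python
# Hypothetical per-dollar scores from the illustration above, one entry per
# dimension of impact: the most salient effect first, then three others.
charity_a = [1000, 10, 40, -20]
charity_b = [1, 0.5, 6.5, -1]

# Ratio on the most salient dimension alone.
headline_ratio = charity_a[0] / charity_b[0]

# Ratio once every dimension is summed into a single total.
overall_ratio = sum(charity_a) / sum(charity_b)

print(f"headline ratio: {headline_ratio:.0f}")
print(f"all-dimension ratio: {overall_ratio:.0f}")
```

Summing the dimensions treats them as measured in a common unit of value, which is itself an assumption of the illustration.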

This point would not be relevant if one or maybe two of the flow-through effects completely dominated all the others. That seems unlikely to me, though it's more plausible for lives saved than for dung-beetle papers, since for dung-beetle papers, most of the flow-through effects come from the indirect contributions toward scientific training, methodology, and transferable insights. (Of course, these effects are not necessarily positive. Faster science might pose more of a risk than a benefit to the future.)

In any event, my claim is only that charities are unlikely to differ by many orders of magnitude; they might still differ by one or two, and of course, they may also have net negative impacts. Even for health charities, I think it's understood that they don't differ by more than a few orders of magnitude, and indeed, if you pick a random developing-world health charity, it's unlikely to be even one or two orders of magnitude worse than a GiveWell-recommended health charity, even just on the one dimension that you're focusing on.

Health charities in the developed world and in the developing world may differ by more than 100 times in direct impact as measured by lives saved or DALYs averted, but the ultimate impacts will differ less than this, because (a) developed-world people contribute more to world GDP on average and (b) the memes of developed-world society have more impact than those in the developing world, inasmuch as the developed world exports a lot of culture, and artificial general intelligence will probably be shaped mostly by developed-world individuals. Note that I'm personally uncertain of the sign of the effect of GDP growth on the far future, and the sign of the effect of saving developed-world lives on society's culture isn't completely obvious either. But whether positive or negative, these impacts are larger in magnitude per person in the developed world. That said, I still think it's better to support developing-world charities, in part because this helps expand people's moral circle of compassion. I'm just pointing out that the gap is not as stark as it may seem when examining only direct effects on individual lives in the short run.

Argument 2: Replaceability of donations

There's another reason we should expect the cost-effectiveness of charities to even itself out over time. If a charity is really impressive, then unless it's underfunded due to having weird values or otherwise failing to properly make itself known, it should either already have funding from others or should be in a position to get funding from others without your help. Just as the question in career choice is not "Where can I make the biggest impact?" but "Where can I make the biggest impact that wouldn't have happened without me?" so also in philanthropy, it's relevant whether other people would have donated to the funding gap that you're intending to fill. The more effective the charity is, the more likely your donation would have been eventually replaced.

I think people's effectiveness can vary quite significantly. Some academics are hundreds of times more important than others in their impact on a field. Some startup founders appear to have vastly greater skill than average. But what I'm discussing in this piece is the value of marginal dollars. A brilliant startup founder should have no trouble attracting VC money, nor should an outstanding academic have trouble securing grants. Differences in outstandingness tend to be accompanied by differences in financial support. The value of additional money to a brilliant person is probably much lower, because otherwise someone would have given it already.

Engineering vs. market efficiency

Naively one might think of cost-effective charity like a problem of engineering efficiency: Charity A is ten times more effective than charity B, so I should fund charity A and get a 10X multiplier on my impact. This may sometimes be true, especially when choosing a cause to focus on. However, to some extent, the process of charity selection more resembles how banks and hedge funds search for a company that's temporarily undervalued so as to make some profits before its price returns to equilibrium. If a highly successful charity is underfunded, we shouldn't necessarily expect it to stay that way forever, even without our help. Of course, charity markets aren't nearly as efficient as financial markets, so the proper characterization of searching for cost-effective charities is some mix between the permanent engineering free lunch and the competitive search for a vanishing moment of under-valuation.

The engineering free lunch is more likely to exist in cases of divergent values. For instance, some people may have an emotional connection to an art gallery or religious cathedral and insist on donating to that rather than, say, research on world peace. Unlike in the case of financial markets, efficiency will not necessarily close this gap, because there's not a common unit of "good" shared by all donors in the way that there is a common unit of value in financial markets. That said, if there are some cross-cause donors who pick charities based on room for more funding, then donation levels may become more insensitive to cause-specific donors, as Carl Shulman explains in "It's harder to favor a specific cause in more efficient charitable markets."

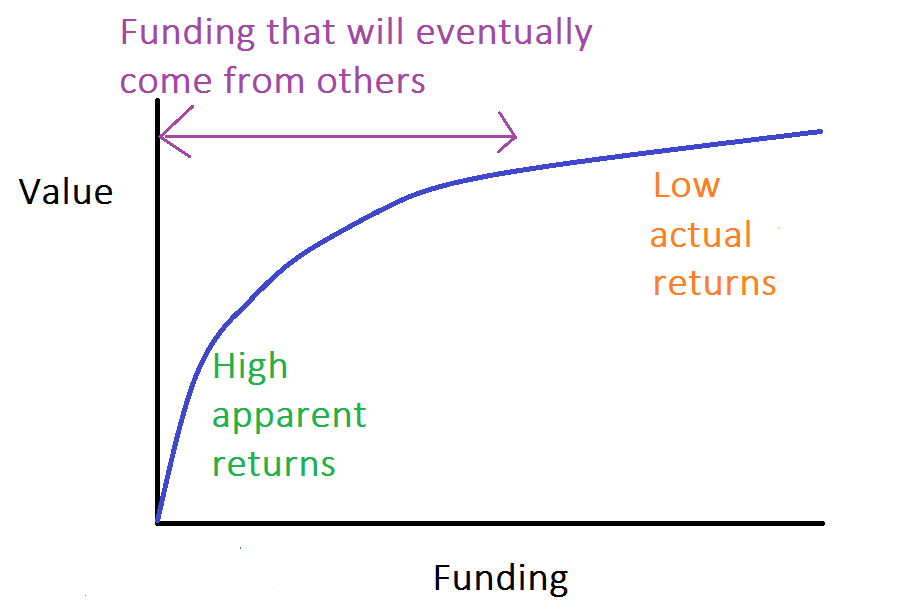

Returns look high before big players enter

Consider the cause of figuring out technical details for how to control advanced artificial general intelligence (AGI). In 2014, I'd guess this work has maybe ~$1 million in funding per year, give or take. Because very few people are exploring the question, the leverage of such research appears extremely high. So it's tempting to say that the value of supporting this research is immense. But consider that if the problem is real, it seems likely that the idea will accumulate momentum and would have done so to some degree even in the absence of the current efforts. Already in 2014 there seems to be an increasing trend of mainstream academics and public figures taking AGI control more seriously. In the long run, it's plausible that AGI safety will become a recognized subfield of computer science and public discourse. If that happens, there will be much more investigation into these topics, which means that work done now will comprise a tiny portion of the total. (I originally wrote this paragraph in mid-2014. As of late 2017, this prediction seems to have largely come true!)

Consider the following graph for the returns from investment in the cause of AGI safety. Right now, because so little is known, the returns appear huge: This is the early, steep part of the curve. But if significant funding and expertise converge on the issue later, then the marginal value of the work now is much smaller, since it only augments what would have happened anyway.
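A minimal numerical sketch of this point follows. The logarithmic returns curve and the funding amounts are assumptions chosen only for illustration, not estimates of the actual field; the sketch also ignores timing and any effect of early work on later funding.

```python
import math

def value(funding_musd):
    """Assumed concave returns curve: value grows with the log of funding."""
    return math.log1p(funding_musd)

early = 1.0    # $ millions donated now (illustrative)
later = 100.0  # $ millions assumed to arrive later regardless (illustrative)

# Gain if we evaluate the early money on the steep part of the curve.
naive_gain = value(early) - value(0.0)

# Gain if the early money only augments funding that would come anyway.
counterfactual_gain = value(early + later) - value(later)

print(f"naive gain: {naive_gain:.4f}")
print(f"counterfactual gain: {counterfactual_gain:.4f}")
```

Under these assumptions the counterfactual gain is a small fraction of the naive one; a different curve or a different guess about later funding would change the size of the gap but not its direction, as long as returns are diminishing.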

Paul Christiano makes a similar point in the language of rates of return:

"A mistake I sometimes see people make is using the initial rates of return on an investment to judge its urgency. But those returns last for a brief period before spreading out into the broader world, so you should really think of the investment as giving you a fixed multiplier on your dollar before spreading out and having a long-term returns that go like growth rates."

See also my "The Haste Consideration, Revisited". Because multiplication is commutative, in oversimplified models, it shouldn't matter when the periods of high growth rates come. For instance, suppose the typical growth rate is 3%, but when the movement hits a special period, growth is 30%. 1.3 * 1.3 * 1.03 * 1.03 = 1.03 * 1.03 * 1.3 * 1.3. Of course, in practice, the set of growth rates will vary based on the ordering of events.
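The commutativity claim can be verified with a small sketch that tries every ordering of the four growth periods from the example. The rounding step is only there to absorb floating-point noise.

```python
import math
from itertools import permutations

# Two special periods at 30% growth and two typical periods at 3%.
rates = [0.30, 0.30, 0.03, 0.03]

def total_growth(order):
    """Cumulative growth factor when the periods occur in the given order."""
    return math.prod(1 + r for r in order)

# Collect the distinct totals across all orderings (rounded to absorb
# floating-point noise from multiplying in different orders).
totals = {round(total_growth(p), 12) for p in permutations(rates)}

print(totals)
```

This holds only in the oversimplified model where each period's rate is fixed in advance and independent of what came before.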

The picture I painted here assumed the topic would go mainstream at some point. But arguably the most important reason to work on AGI safety now is to set the stage so that the topic can go mainstream later. Indeed, a lot of the attention the topic has received of late has been due to efforts of the "early adopters" of the cause. Making sure there will be big funding later on seems arguably the most important consequence of early work. This consideration suggests that early work is indeed highly valuable, even though the value is mainly indirect.

Argument 3: Cross-fertilization

Thought communities are connected. Ideas like effective altruism that persuade people on one dimension—say, the urgency of global poverty—also tend to persuade people on other dimensions—such as the importance of shaping the far future to avoid allowing vast amounts of suffering to occur. Slippery slopes are not really logical fallacies but are often genuine and important. Encouraging people to take the first step toward caring about animals by not eating cows and chickens might later lead them to see the awfulness of predation in the wild, and they might eventually also begin to take the possibility of insect suffering seriously. Just as no man is an island, no idea or movement is either.

Suggesting that one charity is astronomically more important than another assumes a model in which cross-pollination effects are negligible. But as quantum computer scientists know, it's extremely hard to keep a state from entangling with its environment. Likewise with social networks: If you bring people in to cause W, some fraction will trickle from there to causes X, Y, and Z that are connected to W. Even if you think W is useless by itself, if 5% of the people you bring to cause W move to cause X, then adding new people to cause W should be about 5% as good as adding them to cause X. Of course, people may flow the other way, from X back to W, and maybe promoting cause W too successfully would drag people away from X. Regardless, it's unlikely all these effects will exactly cancel, and in the end, working on cause W should be at least an appreciable fraction of 5% as important (in either a positive or negative way) as X itself, even ignoring any direct effects of W. (See also "Appendix: Concerns about distracting people.")

As an example, consider a high-school club devoted to international peace. You might say those students won't accomplish very much directly, and that's true. They won't produce research papers or host a peace-building conference. But they will inspire their members and possibly their fellow students to become more interested in the cause, and perhaps some of them will go on to pursue careers in this field, drawing some inspiration from that formative experience. Of course, some high-school clubs will have negative impacts in the same way, by drawing people's attention from more important to less important or actively harmful pursuits. But regardless of the sign, the magnitude of impact per dollar should not differ by hundreds of times from that of, e.g., a professional institute for peace studies.

Example: Veg outreach vs. welfare reforms

Cross-fertilization also matters for the projects worked on as well as the people working on them. Suppose you believe that the dominating impact of animal charity is encouraging people to care about animal suffering in general, with implications for the trajectory of the future. Naively you might then think that veg outreach is vastly more important than, say, animal-welfare reforms, because veg outreach is spreading concern, while welfare reforms are just helping present-day animals out of the sight of most people.

But this isn't true. For one thing, legislative reforms—especially ballot initiatives like Proposition 2—involve widespread outreach, and many people learn about animal cruelty via such petitions and letters to Congress. Moreover, laws are a useful memetic tool, because activists can say things like, "If dog owners treated their dogs the way hog farmers treat their pigs, the dog owners would be guilty of violating animal-cruelty laws," and so on. Given that beliefs often follow actions rather than preceding them, having a society where animals are implicitly valued in legislation or industry welfare standards can make a subtle difference to vast numbers of people. Now, this isn't to claim that laws are necessarily on the same order of magnitude for antispeciesism advocacy as direct veg outreach, but they might be—indeed, it's possible they're better. Of course, veg outreach can also inspire people to work on laws, showing that the cross-pollination is bidirectional. The broader point is, until much more analysis is done, I think we should regard the two as being roughly in the same ballpark of effectiveness, especially when we consider model uncertainty in our assessments.

And as mentioned earlier, there's crossover of people too. By doing welfare advocacy, you might bring in new activists who then move to veg outreach. Of course, the reverse might happen as well: Activists start out in veg outreach and move to welfare. (I personally started out with a focus on veg outreach but may incline more toward welfare because I think animal welfarism encourages much more rational thinking about animals and is potentially more likely to recognize the importance of averting wild-animal suffering. In contrast, many veg*ans ideologically support wilderness.)

Fungibility of good

Holden Karnofsky coined the term "fungibility of good" to describe the situation in which solving some problems opens up resources to focus on other problems. For instance, maybe by addressing the worst abuses of lab animals, more people are then able to focus on the welfare of (far more numerous) farm animals. So even if you think helping lab animals is negligibly valuable compared with helping farm animals in terms of direct impact, helping lab animals may still conduce to other people focusing on farm animals.

That said, the reverse dynamic is also possible: The more people focus on lab animals, the more attention that cause gets, and the more additional funding it can attract, drawing funding away from farm-animal work. Therefore, this point is only tentative.

Argument 4: Your idea is probably easy to invent or misguided

Suppose you find a charity that you think has an astoundingly important idea. If this charity isn't funded, it may dwindle and die, and the idea may not gain the audience that it deserves. Isn't funding this charity then overwhelmingly cost-effective?

The charity does sound promising, yes, but here are two possibilities that rein in expectations:

**Epistemic modesty.** If the idea hasn't been proposed before, maybe there's a good reason for that? Maybe it's a reason you haven't thought of yet? If something seems too good to be true, perhaps your analysis is flawed: if not completely, then at least enough to downgrade the seeming astronomical importance. When we conclude that one cause is astronomically more important than another, we typically do so within the framework of a model or perhaps a few models. But we should also account for model uncertainty.

**Rare breakthroughs are rare.** Some new ideas are relatively easy to invent, and others are hard. If you invent what you think is a new idea, chances are it was an easy idea, because it's much more rare for people to invent hard ideas. The fact that you thought of it makes it more likely that someone else would also have thought of it. Thus, the value of your particular discovery is probably lower than it might seem. Likewise with an organization to promote the idea: Even if your organization fails, perhaps someone else will re-invent the idea later and spread it then. When an idea or cause will be invented multiple times, our impact comes from just inventing it sooner or at more critical periods in humanity's development. It's less probable we'd be the only ones to ever invent it.

These mediocritarian considerations are weakened if you have a track record of genius. Albert Einstein or Srinivasa Ramanujan—after seeing that they had made several groundbreaking discoveries—should rightly have assessed themselves as worth hundreds of ordinary scientists. Minor geniuses can also rightly assess themselves as having more altruistic potential than an average person.

In general, though, people in other communities than our own are not dumb. Often traditional norms and ways of doing business have wisdom, perhaps implicit or only tribally known, that's hard to see from the outside. In most cases where someone thinks he can do vastly better than others by applying some new technique, that person is mistaken. Of course, we can try, just like some startup founders can shoot to become the next Facebook. But that doesn't mean we're likely to succeed, and our estimates should reflect that fact.

Argument 5: Logical action correlations

Typically claims for the astronomical dominance of some charities over others rely on a vision of the universe in which the colonization of our supercluster depends upon the trajectory of Earth-originating intelligence. It would seem that the only astronomical impacts of our actions would come through the "funnel" of influencing the nature of Earth-based superintelligence and space colonization, and actions not affecting this funnel don't matter in relative terms.

As I've pointed out already, any action we take does affect this funnel in some way or other via flow-through effects, cross-fertilization, etc. But another factor deserves consideration as well: Given that some algorithms and choices are shared between our brains and those of other people, including large post-human populations, our choices have logical implications for the choices of other people, including at least a subset of post-humans to a greater or lesser degree.

The following is an unrealistic example, given only for purposes of illustration. Suppose you're deciding between two actions:

- work to shape the funnel of the takeoff of Earth-originating intelligence in order to reduce expected future suffering by 10^-20 percent

- help prevent suffering in the short term, which itself has negligible impact on total suffering, but which logically implies a tiny difference in the decision algorithms of all ~10^40 future people, implying a total decrease in suffering of 10^-19 percent.

Here, the short-term action that appeared astronomically less valuable when only looking at short-term impact (ignoring flow-through effects and such) ended up being more valuable because of its logical implications about decision algorithms in general.

As I said, this example was just to clarify the idea. It's not obvious that reducing short-term suffering actually does imply better logical consequences for the actions of others; maybe it implies worse consequences. In general, the project of computing the logical implications of various choices seems to be horrendously complicated and not very well studied. But by adding yet more variance to estimation of the cost-effectiveness of different choices, this consideration reduces further the plausibility that a random pair of charities differs astronomically.

Fundraising and meta-charities

In addition to donating to a charity directly, another option is to support fundraisers or meta-charities that aim to funnel more donations toward the best regular charities.

Fundraising can sometimes be quite effective. For instance, every ~$0.20 spent on it might yield $1 of donations. However, even taken at face value, this is only a 5X multiplier on the value of dollars spent, and the figure shouldn't be taken at face value. Fundraising only works if the rest of the organization is doing enough good work to motivate people to donate. The publicity that a charity generates by its campaigns is an important component of attracting funds and maintaining existing donors, and that publicity requires direct, in-the-trenches work on the charity's programs. So it's not as though you can just continue adding to the charity's fundraising budget while keeping everything else constant and maintain the 5X return on investment. Marginal fundraising dollars will be less and less effective, and at some point, fundraising might cause net harm when it starts to spam donors too much.
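The headline multiplier and the effect of diminishing marginal returns can be sketched as follows. The 5X figure comes from the numbers above; the geometric decay rate is purely an assumption for illustration, not an empirical estimate, and the sketch does not model the net-harm case where fundraising starts to annoy donors.

```python
# Headline figure from the text: about $1 raised per ~$0.20 spent.
headline_multiplier = 1.00 / 0.20

def marginal_multiplier(extra_budget_units, decay=0.8):
    """Assumed: each extra unit of budget raises 80% as much as the last."""
    return headline_multiplier * decay ** extra_budget_units

# Print how the return on the next fundraising dollar shrinks as the
# fundraising budget is expanded while everything else is held constant.
for n in range(0, 12, 2):
    print(n, round(marginal_multiplier(n), 2))
```

With this assumed decay, the marginal multiplier eventually falls below 1, at which point an extra fundraising dollar brings in less than a dollar of donations.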

Organizations like Giving What We Can (GWWC) share similarities with fundraising but also differ from it. GWWC is independent of the charities to which it encourages donations, which may reduce donor backlash against excessive fundraising. It also encourages more total giving, which may create donations that wouldn't have happened otherwise. In contrast, fundraising for charity X might largely steal donations from charities Y and Z (though how much this is true would require research). GWWC estimates that based on pledged future donations and consideration of counterfactuals, it will generate ~60 times as many dollars to top charities as it spends on its own operations. I tinkered with GWWC's calculation to reduce the optimism in some of the estimates, and I got an ending figure of ~20 times rather than ~60. This is still reasonably impressive. However:

- The calculation uses sequence thinking and should be reined in based on priors against such high multipliers.

- My impression is that GWWC has been one of the more successful charities, so this figure has a bit of selection bias; other efforts that might have looked similar to GWWC's ex ante probably didn't take off as well. There are plenty of examples of business startups that surpass most people's expectations for growth by hundreds of times, but these are the exception rather than the rule. The ex ante expected value of a given startup is high but not astronomically high.

- Because these figures are pretty impressive, and because GWWC has so many supporters, I expect GWWC will be able to convince a reasonable number of donors to keep funding it, which means your particular donations to GWWC might not make a big difference in a counterfactual sense as to whether GWWC's work continues.

Beyond the specific arguments that I put forward, part of my skepticism just comes from claims of astronomical cost-effectiveness "not passing the sniff test" given how competent lots of non-GWWC charity workers are and how easy it is to come up with a charity like GWWC. (Tithing and giving pledges are nothing new.) If GWWC really has stumbled on an amazingly effective new approach, eventually that approach should be replicated until marginal returns diminish, unless one thinks that GWWC's discovery will languish because outsiders irrationally won't see how amazing it is.

Charities that claim extraordinary cost-effectiveness based on unusual axiological assumptions, such as consideration of astronomical outcomes in the far future or the neglected importance of insect suffering, seem slightly more plausible, since many fewer people are devoting their lives to such issues. In contrast, probably thousands of smart people outside the effective-altruism community are already promoting and raising money for effective developing-world charities.

In general, I think it's plausible that meta-charities with sound business models will tend to have more impact—maybe several times more impact—than the regular charities they aim to support, but I remain skeptical that a good meta-charity for a cause is more than ~5 times more cost-effective, in terms of marginal ex ante expectation relative to the counterfactual where you personally don't donate, than a good regular charity for that cause once all factors are considered.

The distribution of effectiveness

When talking about the impact of a charity or other use of money, we need a reference point: impact relative to what? Let's say the reference point is that you burn the money that you would have donated (in a hypothetical world where doing so was not illegal). This of course has some effect in its own right, because it causes deflation and makes everyone else's money worth slightly more. However, we have to set a zero point somewhere.

All spending has impact

Now, relative to this reference point, almost everything you spend money on will have a positive or negative effect. It's hard to avoid having an impact on other things even if you try. Spending money is like pushing on how society apportions its resources. The world has labor and capital that can be applied to one task or another (charity work, building mansions, playing video games, etc.), and any way you spend money amounts to pushing more labor/capital toward certain uses and away from others. Money doesn't create productive capacity; it just moves it. And when we move resources in one region, "we find it hitched to everything else" in the economy. I picture spending money like pulling up a piece of dough in one spot: It increases that spot and also tugs (either positively or negatively) on other dough, most strongly the other dough in its vicinity.

Even different non-charitable uses of money may have appreciably different impacts. Buying an expensive watch leads people to spend more time on watch design, construction, and marketing. Buying a novel leads to slightly more fiction writing. Buying a cup of coffee leads to more coffee cultivation and more Starbucks workers. The recipients of your money may go on to further spend it on different kinds of things, and those effects may be relevant as well.

Buying a self-published philosophy book for your own personal enjoyment isn't typically seen as charity, but paying someone to write about philosophy is presumably more helpful to society than paying someone to spend 400 hours carefully crafting the $150,000 Parmigiani Kalpa XL Tourbillon watch, only to serve the purpose of conspicuous consumption in a status arms race.

In general, there's no hard distinction between charity vs. other types of consumption and investment. There's just spending money and encouraging some activities more than others, with society classifying some of those activities as "charity." The ethical-consumerism movement recognizes the impact of dollars outside of charitable spending. For instance, vegetarianism is premised on the idea that buying meat leads to more animal farming. (There's an ethical-investment movement too, though it's less clear how much impact this can have in an efficient capital market.)

One way to state the argument in this piece is as follows. Suppose you spend $5 million on a seemingly worthless charity. That's $5 million of spending that occurs within the economy, and such spending must have side effects (both good and bad) on other things that happen in the economy. It would seem to be a miracle if a whole $5 million of economic activity had a net impact on the altruistic things you care about smaller than, e.g., donating just $1 to the most cost-effective charity you can think of.

Case study: Homeopaths Without Borders

What about Homeopaths Without Borders (HWB)? Aren't they a textbook case of a charity with literally zero impact? Well, the medicine they provide does have no mechanistic effect on patient health, but that's not the end of the story. Consider some additional impacts of HWB:

- Providing homeopathic treatment itself. [May have either negative or positive consequences. For instance, if it leads people not to seek other treatment, it's harmful. If it has a helpful placebo effect and makes people feel cared for, it's potentially positive. Maybe HWB also brings additional non-medical services?]

- Allowing developed-world people to experience developing-world conditions, potentially leading to actually useful altruism of other sorts later. [Somewhat positive.]

- Setting up offices and homeopathic schools in poor communities. [Sign isn't clear.]

- Promoting the idea of homeopathy, both in the countries where HWB works and in countries where they attract donors. [Moderately bad.]

- Drawing away well-meaning volunteers who could be doing actually helpful work for poor people if they hadn't been caught by HWB's marketing materials. [Very bad.]

- Running donation drives that encourage well-meaning people to donate to HWB instead of legitimate international-health charities. [Very bad.]

Consider just the issue of drawing away donors from legitimate groups. Suppose that 5% of people from whom HWB raises money would have given to a legitimate international-health charity like Oxfam or UNICEF if they hadn't given to HWB. Also assume that HWB spends marginal donations on fundraising at the same rate as it spends its whole budget on fundraising. Then donating D dollars to HWB is, on this dimension alone, 5% as bad as donating D dollars to Oxfam or UNICEF is good because HWB steals 5% of D dollars from those kinds of charities.[2] If marginal HWB donations go relatively less to fundraising, or if marginal fundraising is less effective per dollar, then the harm drops to less than 5%, but probably not that much less. And when you combine in all the other impacts that HWB has, the negative consequences could be greater.
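The arithmetic behind the 5% figure can be sketched in a few lines of Python. This is only an illustration of the constant-returns model spelled out in footnote 2; the function name `displaced_dollars` and the sample inputs are hypothetical, and the 5% diverted share is the assumption stated above, not an empirical estimate.

```python
def displaced_dollars(donation, fundraising_fraction, diverted_share=0.05):
    """Dollars pulled away from other charities by one donation.

    Assumes (as in the text) that a fraction f of every marginal dollar is
    spent on fundraising and that each fundraising dollar raises 1/f dollars.
    """
    fundraising_spend = fundraising_fraction * donation
    dollars_raised_per_fundraising_dollar = 1.0 / fundraising_fraction
    raised = fundraising_spend * dollars_raised_per_fundraising_dollar
    # A fixed share of what is raised would otherwise have gone elsewhere.
    return diverted_share * raised


# The fundraising fraction f cancels out, so the result does not depend on it.
for f in (0.1, 0.25, 0.5):
    print(f"f={f:.2f}  displaced=${displaced_dollars(1000.0, f):.2f}")
```

Because f cancels, the displaced amount is always 5% of the donation under these assumptions; the result only changes if marginal fundraising returns or the marginal fundraising fraction differ from the average, as noted above.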

That said, it's possible the cross-fertilization effect goes the other way. Maybe HWB attracts primarily homeopathy disciples and encourages them to take interest in international health. Then, if 5% of them eventually learn that homeopathy is medically useless, they might remain in the field of international health and do more productive work. In this model, HWB would have a positive effect of channeling counterfactual homeopathy people and dollars toward programs that have spillover effects of creating a few serious future international-health professionals.

So theoretically the effect could go either way, and we'd need more empirical research to determine that. However, I find it extremely unlikely that these effects are all going to magically cancel out. There are just too many factors at play. And while opposing considerations may cancel out to some extent, more considerations still means higher variance. For example, suppose HWB's total expected effect is a sum of N independent components C1 to CN. Based on past experience, but before doing any research on HWB itself, we approximate these components as coming from a distribution with mean zero and standard deviation σ. Then a priori, Σi Ci has mean zero and standard deviation σ * sqrt(N). In other words, the cumulative effect tends to be farther from zero than any individual effect, although not N times farther.
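The σ * sqrt(N) scaling can be checked with a quick simulation. The sketch below assumes, purely for illustration, that each component is normally distributed (the argument only needs independence, zero mean, and a common standard deviation); the function name and the trial count are hypothetical choices.

```python
import math
import random
import statistics


def simulate_total_effect_sd(n_components, sigma, n_trials=20000, seed=0):
    """Empirical standard deviation of a sum of independent N(0, sigma) effects."""
    rng = random.Random(seed)
    totals = [
        sum(rng.gauss(0.0, sigma) for _ in range(n_components))
        for _ in range(n_trials)
    ]
    return statistics.pstdev(totals)


sigma = 1.0
for n in (1, 4, 16):
    empirical = simulate_total_effect_sd(n, sigma)
    predicted = sigma * math.sqrt(n)
    print(f"N={n:2d}  empirical sd={empirical:.2f}  sigma*sqrt(N)={predicted:.2f}")
```

Going from 1 to 16 components should roughly quadruple the spread of the total, not multiply it by 16, which is the sense in which the cumulative effect is farther from zero than any single effect but not N times farther.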

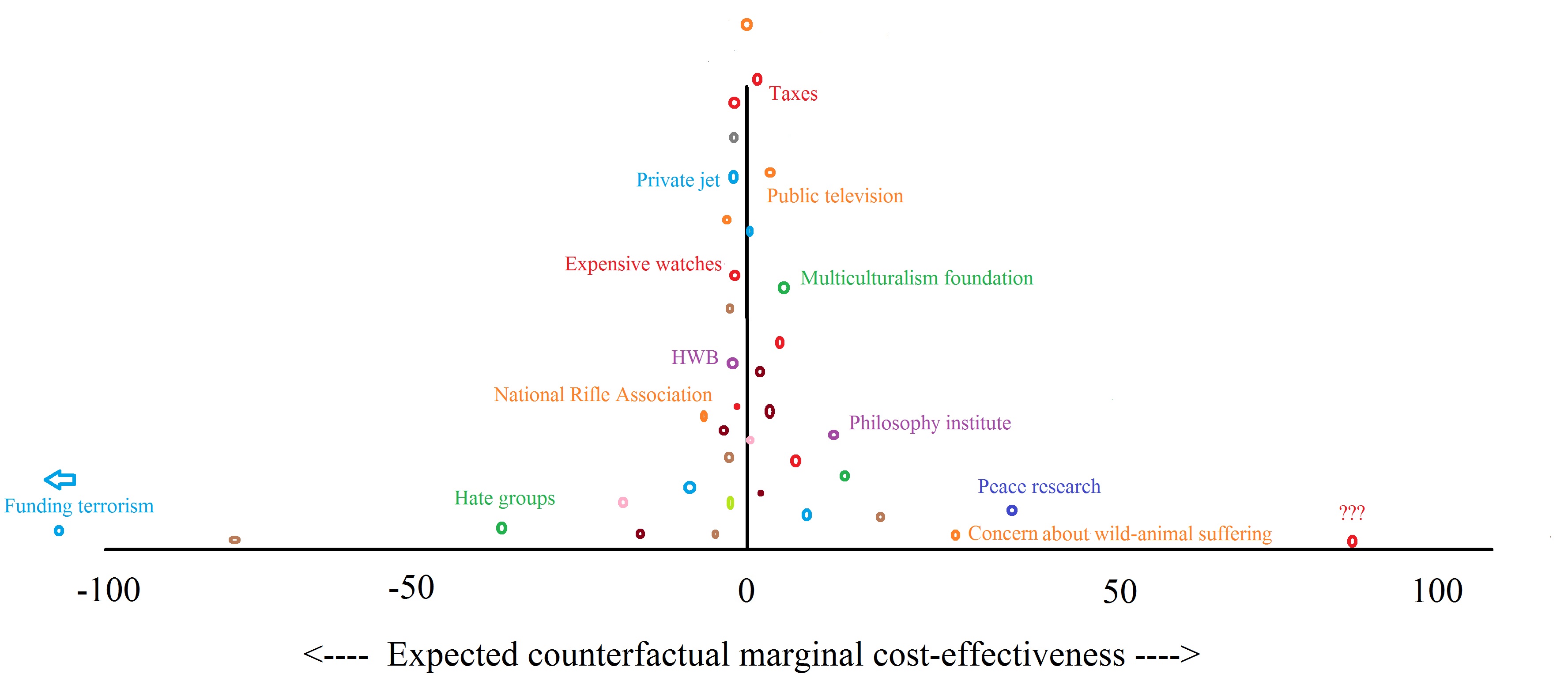

A sample cost-effectiveness distribution

We might think of the distribution of impact of various uses of money as shown in the accompanying figure, where each colored dot represents a way to spend money, and collectively the dots illustrate the shape of the frequency distribution for the uses of money that we actually observe. The vertical location of a dot doesn't mean anything; the dots just vary on the vertical dimension to give them space so that they won't be crowded and so that they collectively trace out a bell-shaped curve.

I don't claim to know exactly the shape or spread of the curve, nor would I necessarily stand by my example charity assessments if I thought about the issues more. This is just an off-the-cuff illustration of what I have in mind.

Burning money should be the weighted center of the distribution of presently undertaken monetary expenditures, because deflation helps all other uses of money in proportion to their volume. Also note that it's not always true that charities are within a few hundred times the expected effectiveness of one another. A rare few lie in the tails, at least for a brief period, just like a few rare investments really can dramatically outperform the market temporarily. Or, if we compare some typical charity X against some charity Y that happens to fall exceedingly close to the zero point, the ratio of cost-effectiveness for X to Y will indeed be large. But this is an exception and it's not what I mean when I say that most charities don't differ by more than tens to hundreds of times.

Effectiveness entropy

Some of the above arguments boil down to pointing out that the variance of our cost-effectiveness estimates tends to increase as we consider more factors and possibilities. For instance, consider the argument about flow-through effects. Prior to incorporating flow-through effects, we might compute

effectiveness of charity = years of direct animal suffering prevented per dollar,

or, to abbreviate:

charity = direct_suff_prev.

This estimate has some variance:

Var(charity) = Var(direct_suff_prev).

Now suppose we consider N flow-through effects of the charity (FTE1, ..., FTEN), all of which happen to be probabilistically independent of each other. Then

charity = direct_suff_prev + FTE1 + ... + FTEN.

Since the effects are independent, the variance of the sum is the sum of the variances:

Var(charity) = Var(direct_suff_prev) + Var(FTE1) + ... + Var(FTEN).

In other words, the variance in our effectiveness estimate increases dramatically as more considerations are incorporated. Other arguments discussed above, like cross-fertilization and consideration of action correlations, also add complexity and hence variance to our estimates.

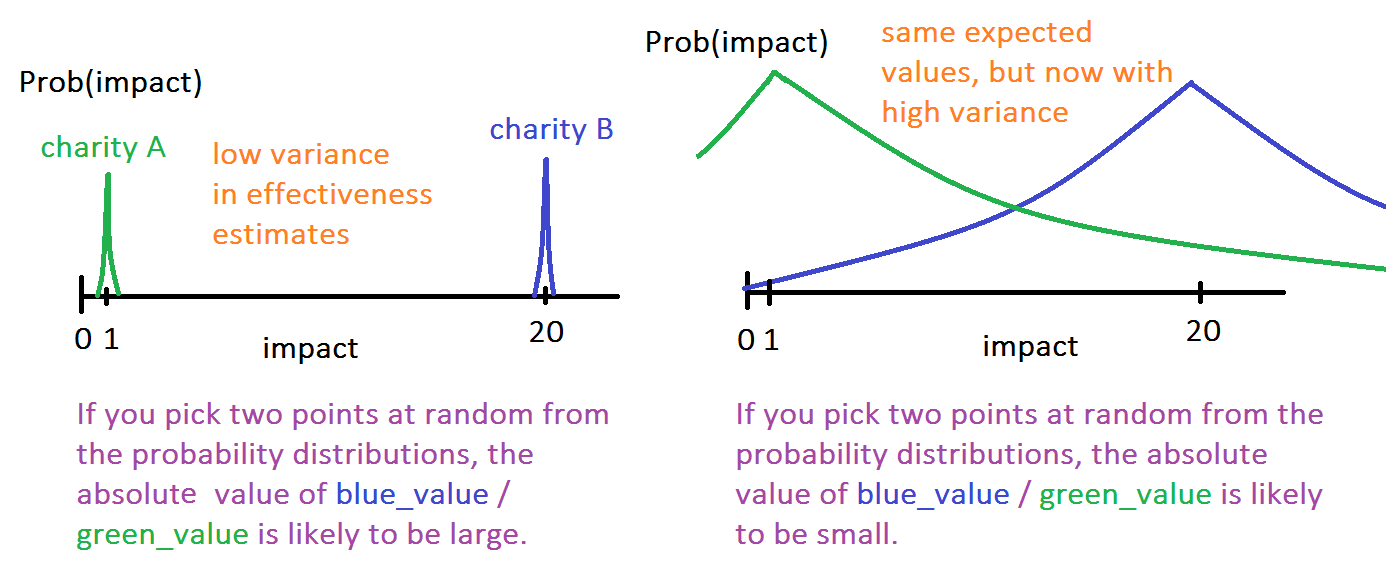

This is relevant because as the variance of our subjective probability distribution for the net impact of each charity grows, the (absolute value of the) ratio of the actual cost-effectiveness values of the two charities will probably shrink.

(Why am I talking about actual cost-effectiveness values if, as the title of this piece says, my argument refers to expected cost-effectiveness? See the next subsection.)
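One way to get a feel for this claim is a small Monte Carlo experiment. The sketch below is an illustration under stated assumptions, not a definitive model: two hypothetical charities have expected values of 1 and 1000, their actual values are drawn independently from normal distributions with a shared standard deviation, and the median of the absolute ratio is reported (the mean of a ratio of normals is not well behaved, so the median is used instead).

```python
import random
import statistics


def median_abs_ratio(mean_a, mean_b, sd, n_trials=20000, seed=0):
    """Median of |actual_b / actual_a| when both values are drawn from
    independent normal distributions with the given means and a common sd."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(n_trials):
        actual_a = rng.gauss(mean_a, sd)
        actual_b = rng.gauss(mean_b, sd)
        ratios.append(abs(actual_b / actual_a))
    return statistics.median(ratios)


# Expected values stay fixed at 1 and 1000; only the uncertainty grows.
for sd in (0.1, 10.0, 1000.0):
    ratio = median_abs_ratio(1.0, 1000.0, sd)
    print(f"sd={sd:>7}  median |B/A| = {ratio:.1f}")
```

With almost no uncertainty the typical ratio stays near the ratio of expected values, and it falls steadily as the shared standard deviation grows relative to the means. The exact numbers depend on the assumed distributions, so only the qualitative trend should be read from this.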

This phenomenon is similar to the way in which those who think a policy question is obvious and purely one-sided tend to know least about the topic. The more you learn, the more you can see subtle arguments on different sides that you hadn't acknowledged before.

We can think of this as "entropy" in cost-effectiveness. In a similar way as it's rare in a chemical solution to have ions of type A cluster together in a clump separated from ions of type B, it's rare to see charities whose efforts have so little uncertainty that we can be confident that, upon further analysis, the effectiveness estimates will remain very far apart. Rather, the more uncertainty we have, the more the charities tend to blur together, like different types of ions in a solution. (The analogy is not perfect but only illustrative. Diffusion should almost perfectly mix ions in a solution, while real-world charities do retain differences in expected cost-effectiveness.)

Objection: Expected value is all that matters

In my diagram above, I kept the expected values of charities A and B the same, only increasing the variance of our subjective probability distributions for their effectiveness. But if we're forced to make a choice now, we should maximize expected value, regardless of variance. So don't the expected values still differ astronomically?

Yes, but variance is important. For one thing, the absolute ratio of actual effectiveness is probably lower than the ratio of expected values. Of course, you can never observe the actual effectiveness, since this requires omniscience. But you can reduce the variance in your estimates by studying the charities further and refining your analyses of them. Studying further leads to wiggling the expected-value estimates around a bit and reducing the variances of the charities' probability distributions. This is like a less pronounced version of drawing a single point from the distributions. In particular, charities that started out at almost zero in expected value will tend to move left or right somewhat, which significantly reduces the absolute value of effectiveness ratios.

If you're forced to make an irrevocable tradeoff between charities now, and if you've done your Bayesian calculations perfectly, then yes: trade off the charities based on expected values without concern for variance. But if you or others will have time to study the issues further and refine the effectiveness estimates, you can predict that the absolute ratio of expected values will probably go down rather than up on further investigation.

At this point, you might be wondering: If we expect the absolute effectiveness ratio to decrease with further investigation, why not get ahead of the curve and reduce our tradeoff ratio between the charities ahead of time? The following example illustrates the answer:

Example: Suppose charity X has expected value of literally 0. Charity Y has expected value of 1000. If you had to donate money right now, you'd favor Y over X to an infinite degree. If instead you waited and learned more, X would almost certainly move away from zero in expected value, but if its effectiveness probability distribution is symmetric, it's just as likely to turn out to be negative as to be positive, so donating to X is just as likely to cause harm as benefit. This is why you shouldn't jump the gun and be more willing to donate to X ahead of time.
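A quick simulation can illustrate why X's zero expected value doesn't justify favoring it in advance. The sketch below assumes, purely for illustration, that X's refined value is drawn from a normal distribution centered on zero; the spread of 500 and the function name are hypothetical.

```python
import random
import statistics


def summarize_symmetric_charity(sd, n_trials=20000, seed=0):
    """Summary statistics for a charity whose value is symmetric around zero."""
    rng = random.Random(seed)
    draws = [rng.gauss(0.0, sd) for _ in range(n_trials)]
    return {
        "mean": statistics.fmean(draws),                        # stays near 0
        "mean_abs": statistics.fmean([abs(x) for x in draws]),  # moves away from 0
        "share_negative": sum(x < 0 for x in draws) / n_trials,
    }


summary = summarize_symmetric_charity(sd=500.0)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

The typical magnitude of X's value is far from zero, but about half the draws are negative, so the average stays near zero. Learning more changes how far X sits from zero, not which direction you should bet on beforehand.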

If charities could only have positive effects, then an increase in variance would automatically imply an increase in expected value. But in that case, if both charities had large variances, the expected values themselves wouldn't have differed astronomically to begin with.

As a footnote, I should add that there are some cases where increased variance probably increases the absolute ratio of effectiveness. For instance, if two charities start out with the same expected values (or if one charity has the negative of another's expected value), then further investigation has to accentuate the multiplicative ratio between the charities (since the absolute value of the ratio of the charity with the bigger-magnitude expected value to the charity with the smaller-magnitude expected value can't be less than 1).

Why does this question matter?

Maybe it's true that charities don't necessarily differ drastically in expected cost-effectiveness. Why is this relevant? We should still pick the best charities when donating, regardless of how much worse the other options are, right?

Yes, that's right, but here are a few of many examples showing why this discussion is important.

- Not appearing naive. If we talk about differences of thousands of times among charities, sophisticated observers may consider us young and foolish at best, or arrogant and deceptive at worst.

- Competing for donors. Suppose that for the same amount of effort, you can either (A) raise $500 from totally new donors who wouldn't have otherwise donated or (B) raise $600 from existing donors to so-called less effective charities. If you really think your charity is vastly better, you'll choose option (B). If you recognize that the charities are closer in effectiveness than they seem, option (A) appears more prudent. Of course, one could also make game-theoretic or rule-utilitarian arguments not to steal other people's donors, although some level of competition can be healthy to keep charities honest.

- Movement building vs. direct value creation. A generalization of the point about competing for donors is that when we recognize the value of charity work outside our sphere of involvement, the value of spreading effective altruism is slightly downshifted compared with the value of doing useful altruism work directly, because spreading effective altruism sometimes takes away resources from existing so-called "non-effective" altruist projects. The downshift in emphasis on movement building may be small. For one thing, many new effective altruists may not have done any altruism before and thus don't have as much opportunity cost. In addition, even if differences in effectiveness are only, say, 3 times, between what the activists are now doing versus what they had been doing, that's still 2/3 as good per activist as if they had previously been doing nothing.

- Hesitant donors. A mainstream donor approaches you asking for a suggestion on where to give. Because she comes from a relatively conventional background, she'd be weirded out by the charity that you personally think is most effective. If you believe your favorite charity really is vastly better than alternatives, you might take a gamble and suggest it anyway, knowing she'll probably reject it. If you think other, more normal-seeming alternatives are not dramatically worse, you might recommend them instead, with a higher chance they'll be chosen.

- Your relative strengths. Suppose you're passionate about field A and have unusual talents in that domain. However, a naive cost-effectiveness estimate says that field B is vastly more important than field A. You resign yourself to grudgingly working in field B instead. But if your estimate of the relative difference is more refined, you'll see that A and B are closer than they seem, and combined with your productivity multiplier for working in field A, it may end up being better for you to pursue field A after all. Holden Karnofsky made a similar point at the end of an interview with 80,000 Hours. (Of course, it's also important not to assume prematurely that you know what field you'd enjoy most.)

- General insight. Having a more accurate picture of how various activities produce value is broadly important. One could similarly ask, Why should I read a proof of this theorem if I already know the theorem is true? It's because reading the proof enhances your understanding of the material as a whole and makes you better able to draw connections and produce further contributions.
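The donor-competition and movement-building bullets above rest on the same piece of arithmetic, which can be sketched as follows. This is a simplified illustration: it assumes both charities have positive impact, that money from totally new donors would otherwise have had zero altruistic value, and that value is measured in units of your own charity's per-dollar effectiveness. The function name is hypothetical.

```python
def net_value_of_diverted_dollars(dollars, effectiveness_ratio):
    """Net gain from moving dollars to your charity from one that is
    1/effectiveness_ratio as effective, in units of your charity's dollars."""
    return dollars * (1.0 - 1.0 / effectiveness_ratio)


value_of_new_money = 500.0  # option (A): $500 from donors who would not have given
for ratio in (3, 6, 1000):
    value_b = net_value_of_diverted_dollars(600.0, ratio)  # option (B)
    better = "B" if value_b > value_of_new_money else "A (or tie)"
    print(f"ratio={ratio:>4}  value of B={value_b:.1f}  better option: {better}")
```

Under these assumptions option (B) only wins when your charity is more than 6 times as effective as the one losing the donors. At a ratio of 3, each diverted dollar is worth 2/3 of a new dollar, which is the same 2/3 figure that appears in the movement-building bullet.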

Acknowledgments

This piece was inspired by a blog post from Jess Riedel. I clarified the discussion further in response to feedback from Ben Kuhn and Ben West, and a later round from Darren McKee, Ben Kuhn, and several others.

See also

An argument for apportioning resources somewhat evenly among causes.

Appendix: Concerns about distracting people

Suppose you think cause X is vastly more intrinsically important than cause W. Some fraction of people working on X might stray and work on W if you talk too much about cause W. This might lead you to be nervous about distracting X people with W. On the other hand, by being involved with W, you can bring W people toward cause X. Thus, the flow can go both ways, and being involved with W isn't obviously a bad idea on balance.

One way to attempt to deal with this is to socialize more with the W supporters, talking openly about X with them, while not mentioning W when socializing with the X supporters. However, sometimes the bidirectionality is harder to evade. One example is this website: Because I discuss many issues at once, some supporters of the more important topics will get distracted by less important ones, while some supporters of the less important topics will through this site find the more important ones. For web traffic, this may be basically unavoidable. For directed traffic, I suppose I could aim to promote my site more among supporters of the less important causes.

Ideally one would hope that the more important cause would be "stickier" in terms of better retaining the people who had found it, while members of the less important cause would be more likely to gravitate to the more important cause if they knew about it. In practice I'm not sure this is the case. I think a lot of how people choose causes is based on what other people are doing rather than a pure calculation. To a large extent this makes sense, because what others believe is important evidence for what we should believe, but this can also lead to information cascades.

There are also more complicated possibilities. For example, maybe there are supporters of cause V whom your website convinces to support cause W, which tends to be a stepping stone on the way to cause X. Then if you get V readers who move to W, and not a lot of W readers who move back to V, this could be a good tradeoff. And so on.

[1] Of course, other jobs where people would work would also provide training, so training by any particular charity may not be a counterfactual benefit. Or it might be if the counterfactual jobs would be in, say, shoe shining. In general, a flow-through analysis should consider replaceability questions. If people weren't working for the health charity or writing beetle papers, maybe they would be doing something with even higher impact, so funding health charities or beetle papers pulls them away from better things. Of course, it might also pull them away from worse things. Either way, the effect on labor pools is an important consideration. If you find a charity with really high marginal impact because its employees are so effectiveness-minded, then chances are they would also be doing something really effective if they weren't working for this organization, so the impact of your dollars may be less than it seems.

[2] In case this isn't clear, here's the reasoning in more detail. Say HWB's annual budget is B dollars. Some fraction f of it is spent on fundraising, and suppose that any additional donation is also devoted to fundraising in the proportion f. Each year, f * B fundraising dollars yield another budget of size B, so the multiplier of "dollars raised" to "dollars spent on fundraising" is 1/f, assuming unrealistically that each fundraising dollar contributes the same increase in donations. Suppose you donate D dollars to HWB. f * D dollars go to fundraising, and this yields f * D * (1/f) = D dollars to HWB from its future fundraising efforts. Because 5% of these D dollars raised would have gone to charities like Oxfam, your D-dollar donation is taking away 0.05 * D dollars from charities like Oxfam. In practice, the amount taken from Oxfam-like charities may be lower if marginal fundraising dollars don't have constant fundraising returns or if marginal donated dollars go to fundraising with a proportion less than f. Also keep in mind that the division between "fundraising dollars" and "program dollars" is somewhat artificial. In practice, all of a charity's activities contribute toward fundraising because the more it does, the more impressive it looks, the more media mentions and website references it gets, and so forth. This suggests that even if HWB explicitly spent no marginal dollars on fundraising-categorized expenses, it would still expand its donations just by being able to have more bodies doing more work.

Eight years later, I still think this post is basically correct. My argument is more plausible the more one expects a lot of parts of society to play a role in shaping how the future unfolds. If one believes that a small group of people (who can be identified in advance and who aren't already extremely well known) will have dramatically more influence over the future than most other parts of the world, then we might expect somewhat larger differences in cost-effectiveness.

One thing people sometimes forget about my point is that I'm not making any claims about the sign of impacts. I expect that in many cases, random charities have net negative impacts due to various side effects of their activities. The argument doesn't say that random charities are at least 1/100 as good as the best charities but rather that all charities have a lot of messy side effects on the world, and when those side effects are counted, it's unlikely that the total impact that one charity has on the world (for good or ill) will be many orders of magnitude higher than the total impact that another charity has on the world (for good or ill).

I think maybe the main value of this post is to help people keep in mind how complex the effects of our actions are and that many different people are doing work that's somewhat relevant to what we care about (for good or ill). I think it's a common trend for young people to feel like they're doing something special, and that they have new, exciting answers that the older generations didn't. Then as we mature and learn about more different parts of the world, we tend to become less convinced that we're correct or that the work we're doing is unique. On the other hand, it can still be the case that our work may be a lot more important (relative to our values) than most other things people are doing.

This was useful pushback on the details of a claim that is technically true, and was frequently cited at one point, but that isn't as representative of reality as it sounds.

"This is relevant because as the variance of our subjective probability distribution for the net impact of each charity grows, the (absolute value of the) ratio of the actual cost-effectiveness values of the two charities will probably shrink"

I have written this simple program to confirm that statement.

Thanks for posting this.

Just a quick note that it confused me a little to see the statement "And differences of 1030 are almost impossible" until I realised it is meant to be 10^30. It might be worth editing the post to make that clear.

Edited to fix this, thanks!

Yeah, I was about to say the same thing. Looks like some exponents got lost in the crossposting.