Habryka [Deactivated]

Bio

Head of Lightcone Infrastructure. Wrote the forum software that the EA Forum is based on. I've historically been one of the most active and highest-karma commenters on the forum. I no longer post or comment here, and recommend the same to others.

My best guess is that EA at large is causing large harm to the world, and there is no leadership or accountability in place to fix it. Many of the principles are important, but I don't think this specific community embodies them very much, and it often actively sabotages them.

Posts 39

Comments 1442

Topic contributions 1

(I think this level of brazenness is an exception, but I think the broader thing has occurred many dozens of times. My best guess, though I know of no specific example, is that as a result of the FTX stuff, many EA organizations changed their websites and requested that references be deleted from archives, in order to lower their association with FTX.)

> Risk 1: Charities could alter, conceal, fabricate and/or destroy evidence to cover their tracks.

> I do not recall this having happened with organisations aligned with effective altruism.

(FWIW, it happened with Leverage Research at multiple points in time, with active effort to remove various pieces of evidence from all available web archives. My best guess is it also happened with early CEA while I worked there, because many Leverage members worked at CEA at the time and they considered this relatively common practice. My best guess is you can find many other instances.)

> Now, consider this in the context of AI. Would the extinction of humanity by AIs be much worse than the natural generational cycle of human replacement?

I think the answer to this is "yes", because your shared genetics and culture create much more robust pointers to your values than we are likely to get with AI.

Additionally, even if that weren't true, humans alive at present have obligations inherited from the past and, relatedly, obligations to the future. We have contracts and inheritance principles and various other things that extend our moral circle of concern beyond just the current generation. It is not sufficient to coordinate with just the present humans; we are engaging in at least some moral trade with future generations, and trading away their influence to AI systems is also not something we have the right to do.

(Importantly, I think we have many fewer such obligations to very distant generations, since I don't think we are generally borrowing or coordinating with humans living in the far future very much).

> From a more impartial standpoint, the mere fact that AI might not care about the exact same things humans do doesn’t necessarily entail a decrease in total impartial moral value, unless we’ve already decided in advance that human values are inherently more important.

Look, this sentence just really doesn't make any sense to me. From the perspective of humanity, which is composed of many humans, of course the fact that AI does not care about the same things as humans creates a strong presumption that a world optimized for those values will be worse than a world optimized for human values. Yes, current humans are also limited in the degree to which we can successfully delegate the fulfillment of our values to future generations, but we also share, on average, a huge fraction of our values with future generations. That is a struggle every generation faces, and you are just advocating for... total defeat being fine for some reason? Yes, it would be terrible if the next generation of humans suddenly did not care about almost anything I cared about, but that is very unlikely to happen, whereas it is quite likely to happen with AI systems.

Yeah, this.

From my perspective, "caring about anything but human values" doesn't make any sense. Of course, even more specifically, "caring about anything but my own values" also doesn't make sense, but inasmuch as you are talking to humans and making arguments about what other humans should do, you have to ground that in their values, and so it makes sense to talk about "human values".

The AIs will not share the pointer to these values in the way every individual shares the pointer to their own values, and so we should assume a priori that the AI will do worse things after we transfer all the power from the humans to the AIs.

> In the absence of meaningful evidence about the nature of AI civilization, what justification is there for assuming that it will have less moral value than human civilization, other than a speciesist bias?

You know these arguments! You have heard them hundreds of times. Humans care about many things. Sometimes we collapse that into caring about experience for simplicity.

AIs will probably not care about the same things; as such, the universe will be worse by our lights if controlled by AI civilizations. We don't know exactly what those things are, but the only pointer to our values that we have is ourselves, and AIs will not share those pointers.

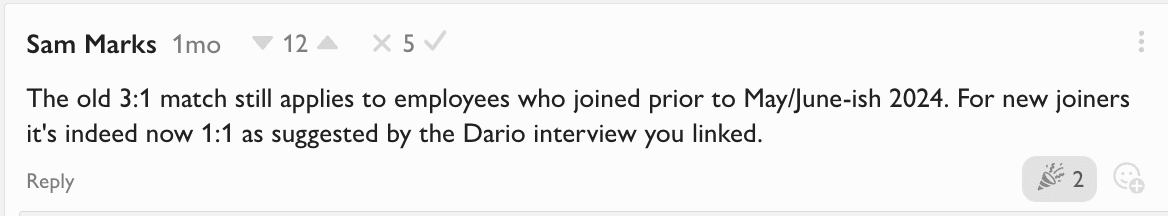

It's been confirmed that the donation matching still applies to early employees: https://www.lesswrong.com/posts/HE3Styo9vpk7m8zi4/evhub-s-shortform?commentId=oeXHdxZixbc7wwqna

So long and thanks for all the fish.

I am deactivating my account.[1] My unfortunate best guess is that at this point there is little point in, and at least a bit of harm caused by, my commenting more on the EA Forum. I am sad to leave behind so much that I have helped build and create, and even sadder to see my own actions indirectly contribute to much harm.

I think many people on the forum are great, and at many points in time this forum was one of the best places for thinking and talking and learning about many of the world's most important topics. Particular shoutouts to @Jason, @Linch, @Larks, @Neel Nanda and @Lizka for overall being great commenters. It is rare that I had conversations with any of you that I did not substantially benefit from.

Also, great thanks to @JP Addison🔸 for being the steward of the forum through many difficult years. It's been good working with you. I hope @Sarah Cheng can turn the ship around as she takes over responsibilities. I still encourage you to spin out of CEA. I think you could fundraise. Of course, by I think most people's lights, the forum is responsible for more than 3% of CEA's impact, and all you need is 3% of CEA's budget to make a great team.

I have many reasons for leaving, as I have been trying to put more distance between myself and the EA community. I won't go into all of them, but I do encourage people to read my comments from the last 2 years to get a sense of them; I think there is some good writing in there.

The reason I think it would be most remiss of me not to mention here is the increasing sense of disconnect I have been feeling between what once was a thriving and independent intellectual community, open to ideas and leadership from any internet weirdo who wants to do as much good as they can, and the present EA community, whose identity, branding and structure are largely determined by a closed-off set of leaders with little history of intellectual contributions and little connection to what attracted me to this philosophy and community in the first place. The community feels very leaderless and headless these days, and in the future I only see candidates for leadership that are worse than none. Almost everyone who has historically held a leadership position has stepped back and abdicated that role.

I no longer really see a way for arguments, data, or perspectives explained on this forum to effect change in what actually happens with the extended EA community, especially in domains like AI Safety Research, AGI Policy, internal community governance, or more broadly steering humanity's development of technology in positive directions. I think that while shallow criticism often gets valorized, the actual life of someone who tries to make things better by rewarding and funding good work and holding people accountable is one of misery and adversarial relationships, accompanied by censure, gaslighting and, overall, a deep sense of loneliness.

To be clear, there has always been an undercurrent of this in the community. When I was at CEA back in 2015, we frequently and routinely deployed highly adversarial strategies to ensure we maintained more control over what people understood EA to mean and who would get to shape it, and the internet weirdos were often a central target of our efforts to make others less influential. But it is more true now. The EA Forum was not run by CEA at the time, and maybe that was good; funding was not so extremely centralized in a single large foundation, and that foundation still had a lot more freedom and integrity back then.

It's been a good run. Thanks to many of you, and ill wishes to many others. When the future is safe, and my time is less scarce, I hope we can take the time to figure out who was right about things. I certainly don't speak with confidence on many of the things I have disagreed with others about, only with the conviction to try to do good even in a world as confusing and uncertain as this, and to not let the uncertainty prevent me from saying what I believe. It sure seems like we all made a difference; it's just unclear with what sign.

[1] I won't use the "deactivate account" feature, which would delete my profile. I am just changing my username and bio to indicate I am no longer active.