This post is a summary of “Tiny probabilities and the value of the far future,” a Global Priorities Institute Working Paper by Petra Kosonen. It is part of my sequence of GPI Working Paper summaries.

If you’d like a brief summary, skip to “Conclusion/Brief Summary.”

Introduction

Many people reasonably doubt that our actions today have much chance of improving the distant future, which leads them to reject longtermism. After all, something feels wrong with a theory that lets tiny probabilities of enormous value dictate our actions. For instance, it leaves us vulnerable to Pascal’s mugging.

However, Kosonen argues that even if we discount small probabilities—which protects us from cases like Pascal’s mugging—longtermism is not undermined. She refutes three arguments:

- The Low Risks Argument: The probabilities of existential risks are so low that we should ignore them.

- The Small Future Argument: After we ignore unlikely outcomes (e.g., space settlement or digital minds), the number of individuals in the future is too low for longtermism to be correct.

- The No Difference Argument: The probability we make a difference in whether extinction occurs is so low we should ignore it.

The Low Risks Argument

Naive Discounting

Before discussing arguments against longtermism, Kosonen introduces one way to discount probabilities, which she calls Naive Discounting.

- Naive Discounting: Ignore probabilities below some threshold[1].

The Low Risks Argument

- The Low Risks Argument: The probabilities of existential risks are so low that we should ignore them.

In response, Kosonen argues it’s unclear what Naive Discounting says about The Low Risks Argument:

- Probabilities of existential risks from various sources in the next century seem to fall above any reasonable discounting threshold.[2]

- For any risk, we can make it specific enough to make its probability fall below the threshold (e.g., extinction from an asteroid on Jan. 4, 2055), or we can make it broad enough to fall above the threshold (e.g., extinction from a pandemic this century).

- We don’t have guidelines for choosing how specific to make these risks when assessing their probabilities, so whether they fall above or below the threshold is arbitrary.

- Hence, it’s unclear what Naive Discounting says, and it doesn’t impair longtermism based on the Low Risks Argument.
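To make the specificity problem concrete, here is a minimal sketch. The risk level and the even spread across days are made-up assumptions for illustration; only the 1 in 10,000 threshold (Buffon's, from the footnotes) comes from the post.

```python
# Hypothetical numbers, chosen only for illustration (not from the paper).
THRESHOLD = 1 / 10_000      # Buffon's proposed threshold
BROAD_RISK = 1 / 1_000      # assumed chance of some catastrophe this century
DAYS_IN_CENTURY = 36_525


def is_ignored(probability: float, threshold: float = THRESHOLD) -> bool:
    """Naive Discounting: ignore any probability below the threshold."""
    return probability < threshold


# Describe the same risk one day at a time, assuming it is spread evenly.
per_day_risk = BROAD_RISK / DAYS_IN_CENTURY

print(is_ignored(BROAD_RISK))    # the broad description clears the threshold
print(is_ignored(per_day_risk))  # the date-specific description does not
```

The underlying risk is identical in both calls; only the level of description changes, which is why the verdict looks arbitrary.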

Tail Discounting

A second, more plausible way to discount probabilities, called Tail Discounting, doesn’t undermine longtermism either.

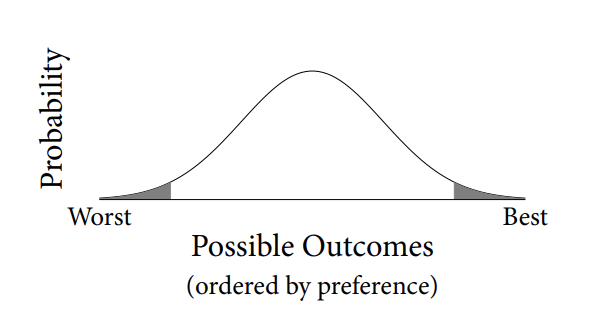

- Tail Discounting: Ignore the very best and very worst outcomes (tails). That is, ignore the grey areas under this curve:

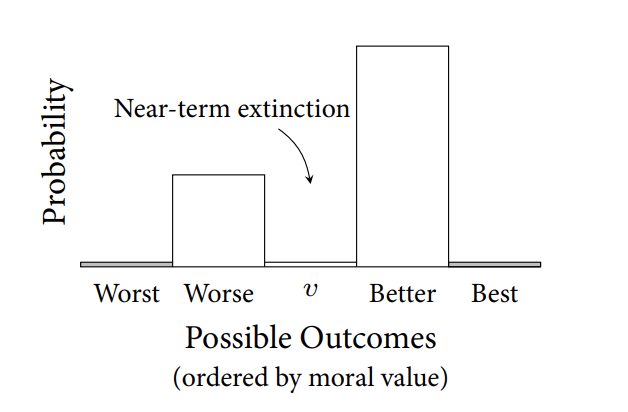

However, Kosonen argues Tail Discounting doesn’t ignore the possibility of extinction because extinction plausibly doesn’t sit in the tails—a world worse than extinction[3] is likely enough, and a world better than extinction is likely enough (see Figure 2).
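Here is a rough sketch of one simple way to read Tail Discounting. It is not Kosonen's formal definition: the rule below (drop an outcome if the chance of doing that badly or worse, or that well or better, is at or below a threshold) and all of the values and probabilities are assumptions made for illustration.

```python
def tail_discount(outcomes, threshold):
    """Return the outcome values kept after dropping both tails.

    outcomes: list of (value, probability) pairs whose probabilities sum to 1.
    An outcome is dropped if the probability of ending up at least that bad,
    or at least that good, is at or below the threshold.
    """
    kept = []
    for value, _ in outcomes:
        p_at_most = sum(p for v, p in outcomes if v <= value)
        p_at_least = sum(p for v, p in outcomes if v >= value)
        if p_at_most > threshold and p_at_least > threshold:
            kept.append(value)
    return kept


# Hypothetical distribution; extinction is normalized to a value of 0.
outcomes = [
    (-1000, 0.00001),  # astronomically bad future (lower tail)
    (-10, 0.05),       # net-negative future, worse than extinction
    (0, 0.10),         # extinction
    (10, 0.84998),     # good future
    (1000, 0.00001),   # astronomically good future (upper tail)
]

kept = tail_discount(outcomes, threshold=1e-4)
print(kept)
```

With these made-up numbers the two extreme outcomes are dropped, while extinction is kept because outcomes both worse and better than it have meaningful probability.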

Hence, Kosonen argues the Low Risks Argument—that existential risks are so unlikely we should ignore them—doesn’t erode longtermism because:

- Naive Discounting struggles with how to specify risks, so it’s unclear what it says.

- Tail Discounting doesn’t ignore extinction risks as long as there are meaningful probabilities of outcomes better and worse than extinction.

The Small Future Argument

Kosonen addresses another argument against longtermism.

- The Small Future Argument: After we ignore unlikely outcomes (e.g., space settlement or digital minds), the number of individuals in the future is too low for longtermism to be correct.

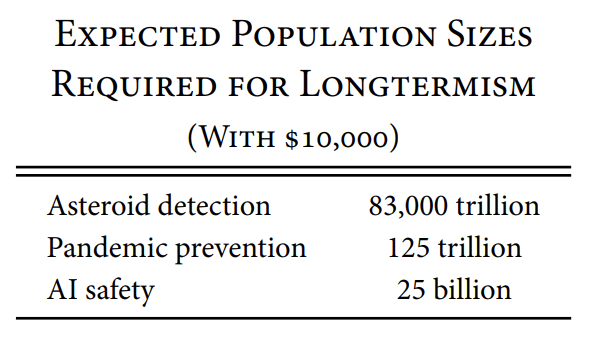

She compares the cost-effectiveness of giving $10,000 to the Against Malaria Foundation (AMF) with giving it to existential risk reduction. She calculates[4] the following effects:

- AMF: Save 2.5 lives

- Asteroid detection: Reduce the chance of extinction from an asteroid this century by 1 in 33,000 trillion[5]

- Pandemic prevention: Reduce the chance of extinction from a pandemic this century by 1 in 50 trillion[5]

- AI safety: Reduce the chance of extinction from AI this century by 1 in 10 billion[5]

Using these, she calculates the size of the future population required for existential risk reduction to beat the AMF by saving more than 2.5 lives in expectation (see Figure 3).

Assuming the population reaches and stays at 11 billion and people live for 80 years on average, she calculates the future population if humanity continues for…

- 87,000 years (based on extinction risks from natural causes[6])

  - There will be 12 trillion future humans, meaning AI safety beats the AMF

- 1 million years (the average lifespan of hominins)

  - The future holds 140 trillion people, meaning AI safety and pandemic prevention beat the AMF

- 1 billion years (until Earth becomes uninhabitable)

  - The future population is 140,000 trillion, meaning AI safety, pandemic prevention, and asteroid detection beat the AMF

In fact, with these assumptions, humanity must continue for only 265 years for AI safety to beat the AMF. Humanity is expected to continue this long even with a 1/6 probability of extinction in the next 100 years (Toby Ord’s estimate).
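The population figures and comparisons above can be roughly reproduced with a few lines of arithmetic. This is a simplified sketch under my own assumptions: it counts every future life as saved when extinction is averted and holds the population constant, so it may differ in detail from the paper's calculation. It does not attempt to reproduce the 265-year figure.

```python
POPULATION = 11e9       # steady-state population, per the summary
LIFESPAN_YEARS = 80     # average lifespan, per the summary
AMF_LIVES_SAVED = 2.5   # lives saved by giving $10,000 to the AMF

# Absolute reduction in extinction probability per $10,000 (figures listed above).
RISK_REDUCTION = {
    "AI safety": 1 / 10e9,
    "pandemic prevention": 1 / 50e12,
    "asteroid detection": 1 / 33_000e12,
}

HORIZONS_YEARS = {
    "87,000 years": 87_000,
    "1 million years": 1e6,
    "1 billion years": 1e9,
}


def future_population(years):
    """Number of lives lived over `years` at a constant population."""
    return POPULATION * years / LIFESPAN_YEARS


def winners(years):
    """Interventions whose expected lives saved exceed the AMF benchmark."""
    lives = future_population(years)
    return [name for name, r in RISK_REDUCTION.items() if r * lives > AMF_LIVES_SAVED]


for label, years in HORIZONS_YEARS.items():
    print(f"{label}: {future_population(years):.3g} future lives; beats AMF: {winners(years)}")
```

Each intervention beats the AMF once the future population exceeds 2.5 divided by its risk reduction, which is why the three interventions cross the line at such different time horizons.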

Kosonen also argues that assigning a negligible probability to space settlement or digital minds is overconfident, and if we don’t ignore these possibilities, the expected number of future lives becomes far greater.

Overall, Kosonen thinks the future seems large enough for longtermism to ring true, even if we ignore unlikely possibilities.

The No Difference Argument

- The No Difference Argument: The probability we make a difference in whether extinction occurs is so low we should ignore it.

On its face, the No Difference Argument seems to impair longtermism. However, Kosonen argues it faces collective action problems. For instance, imagine an asteroid is about to hit Earth. We have multiple defenses, but each one has a very low probability of working and incurs a small cost.

Since each defense probably won’t make a difference, the No Difference Argument says we shouldn’t launch them, even if we almost certainly would stop the asteroid if we launch many of them.
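A quick sketch shows how individually negligible chances can add up. The per-defense probability, the number of defenses, and the assumption that defenses succeed independently are all hypothetical choices for illustration, not figures from the paper.

```python
def collective_success(p_each: float, n_defenses: int) -> float:
    """Chance that at least one of n independent defenses works."""
    return 1 - (1 - p_each) ** n_defenses


P_EACH = 1e-5  # hypothetical chance that any single defense stops the asteroid

print(collective_success(P_EACH, 1))          # a single defense: negligible
print(collective_success(P_EACH, 1_000_000))  # a million defenses: close to 1
```

Judged one at a time, every launch falls below a threshold like 1 in 10,000, yet launching all of them would almost certainly stop the asteroid.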

Kosonen argues our decisions about whether we work on existential risk reduction are similar to the asteroid defenses; we plausibly ought to take others’ decisions into account and consider whether the collective has a non-negligible probability of making a difference. She discusses the justifications for this view as well as the problems with it on pages 27 to 33.

Moreover, she thinks the collective has a non-negligible probability of preventing extinction. For instance, spending $1 billion on AI safety would plausibly provide an absolute reduction in extinction risk of at least 1 in 100,000—though this estimate is conservative.[7]
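The 1 in 100,000 figure follows from the two assumptions given in the footnote: a 0.1% baseline risk and a 1% relative reduction. Here is a small sketch of that arithmetic. The second calculation, which swaps in the 5% median expert estimate, is my own illustration of why the first is conservative and is not a number from the paper.

```python
from fractions import Fraction

baseline_ai_risk = Fraction(1, 1000)    # 0.1% risk of extinction from AI this century
relative_reduction = Fraction(1, 100)   # $1 billion cuts that risk by 1%

absolute_reduction = baseline_ai_risk * relative_reduction
print(absolute_reduction)  # 1/100000

# Illustration only: the same relative reduction applied to the 5% median expert estimate.
with_expert_estimate = Fraction(5, 100) * relative_reduction
print(with_expert_estimate)  # 1/2000
```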

Conclusion/Brief Summary

To recap, Kosonen argues that even if we discount small probabilities, longtermism holds true. She refutes three arguments.

- The Low Risks Argument: The probabilities of existential risks are so low that we should ignore them. She finds this isn’t true because…

  - If you ignore probabilities below some threshold (Naive Discounting), it’s unclear what you should do.

  - If you ignore the best and worst extremes (Tail Discounting), you won’t ignore extinction risk, as there are (plausibly) meaningful probabilities of outcomes better and worse than extinction.

- The Small Future Argument: After we ignore unlikely outcomes (e.g., space settlement or digital minds), the number of individuals in the future is too low for longtermism to be correct.

  - She finds this isn’t true because likely scenarios feature humanity persisting for long enough that longtermism is true.

- The No Difference Argument: The probability we make a difference in whether extinction occurs is so low we should ignore it.

  - She offers reasons you should consider our collective probability of making a difference instead of your individual probability.

  - She argues our collective probability of preventing extinction is non-negligible.

[1] Kosonen finds it implausible that there is a specific required threshold. For instance, Monton, who defends probability discounting, considers this threshold "subjective within reason." Others have proposed thresholds, such as 1 in 10,000 (Buffon) and 1 in 144,768 (Condorcet). Buffon's threshold is the probability of a 56-year-old dying on a given day, a chance most people ignore. Condorcet had a similar justification.

[2] See pages 6 and 7 for the estimates she consults.

[3] She thinks there's a non-negligible probability that human and non-human suffering make the world's value net negative (an outcome worse than extinction).

[4] See pages 11 to 14 for her calculations.

[5] These are absolute (not relative) reductions in probabilities.

[6] Snyder-Beattie et al. (2019).

[7] This calculation assumes extinction from AI has a 0.1% risk in the next 100 years (while the median expert estimate is 5%), and it assumes $1 billion will only decrease the chance of extinction by AI by 1%.

I suppose my main objection to the collective decision-making response to the no difference argument is that there doesn't seem to be any sufficiently well-motivated and theoretically satisfying way of taking into account the collective probability of making a difference, especially as something like a welfarist consequentialist, e.g. a utilitarian. (It could still be the case that probability difference discounting is wrong, but it wouldn't be because we should take collective probabilities into account.)

Why should I care about this collective probability, rather than the probability differences between outcomes of the choices actually available to me? As a welfarist consequentialist, I would compare probability distributions over population welfare values[1] for each of my options, and use rules that rank them pairwise, or within subsets of options, or select maximal options from subsets of options. Collective probabilities don't seem to be intrinsically important here. They wouldn't be part of the descriptions of the probability distributions of population welfare values for the options actually available to you, nor calculable from them, if and because you don't have enough actual influence over what others will do. You'd need to consider the probability distributions of unavailable options to calculate them. Taking those distributions into account in a way that would properly address collective action problems seems to require non-welfarist reasons. This seems incompatible with act utilitarianism.

The differences in probabilities between options, on the other hand, do seem potentially important, if the probabilities matter at all. They're calculable from the probability measures over welfare for the options available to you.

Two possibilities Kosonen discusses that seem compatible with utilitarianism are rule utilitarianism and evidential decision theory. Here are my takes on them:

Or aggregate welfare, or pairwise differences in individual welfare between outcomes.