Aaron Bergman

Bio

Participation 4

I graduated from Georgetown University in December 2021 with degrees in economics and mathematics and a minor in philosophy. There, I founded and helped to lead Georgetown Effective Altruism. Over the last few years, I've interned at the Department of the Interior, the Federal Deposit Insurance Corporation, and Nonlinear.

Blog: aaronbergman.net

How others can help me

- Give me honest, constructive feedback on any of my work

- Introduce me to someone I might like to know :)

- Offer me a job if you think I'd be a good fit

- Send me recommended books, podcasts, or blog posts that there's like a >25% chance a pretty-online-and-into-EA-since-2017 person like me hasn't consumed

- Rule of thumb standard maybe like "at least as good/interesting/useful as a random 80k podcast episode"

How I can help others

- Open to research/writing collaboration :)

- Would be excited to work on impactful data science/analysis/visualization projects

- Can help with writing and/or editing

- Discuss topics I might have some knowledge of

- like: math, economics, philosophy (esp. philosophy of mind and ethics), psychopharmacology (hobby interest), helping to run a university EA group, data science, interning at government agencies

Posts 19

Comments 173

Topic contributions 1

Is there a good list of the highest leverage things a random US citizen (probably in a blue state) can do to cause Trump to either be removed from office or seriously constrained in some way? Anyone care to brainstorm?

Like the safe state/swing state vote swapping thing during the election was brilliant - what analogues are there for the current moment, if any?

~30 second ask: Please help @80000_Hours figure out who to partner with by sharing your list of YouTube subscriptions via this survey

Unfortunately this only works well on desktop, so if you're on a phone, consider sending this to yourself for later. Thanks!

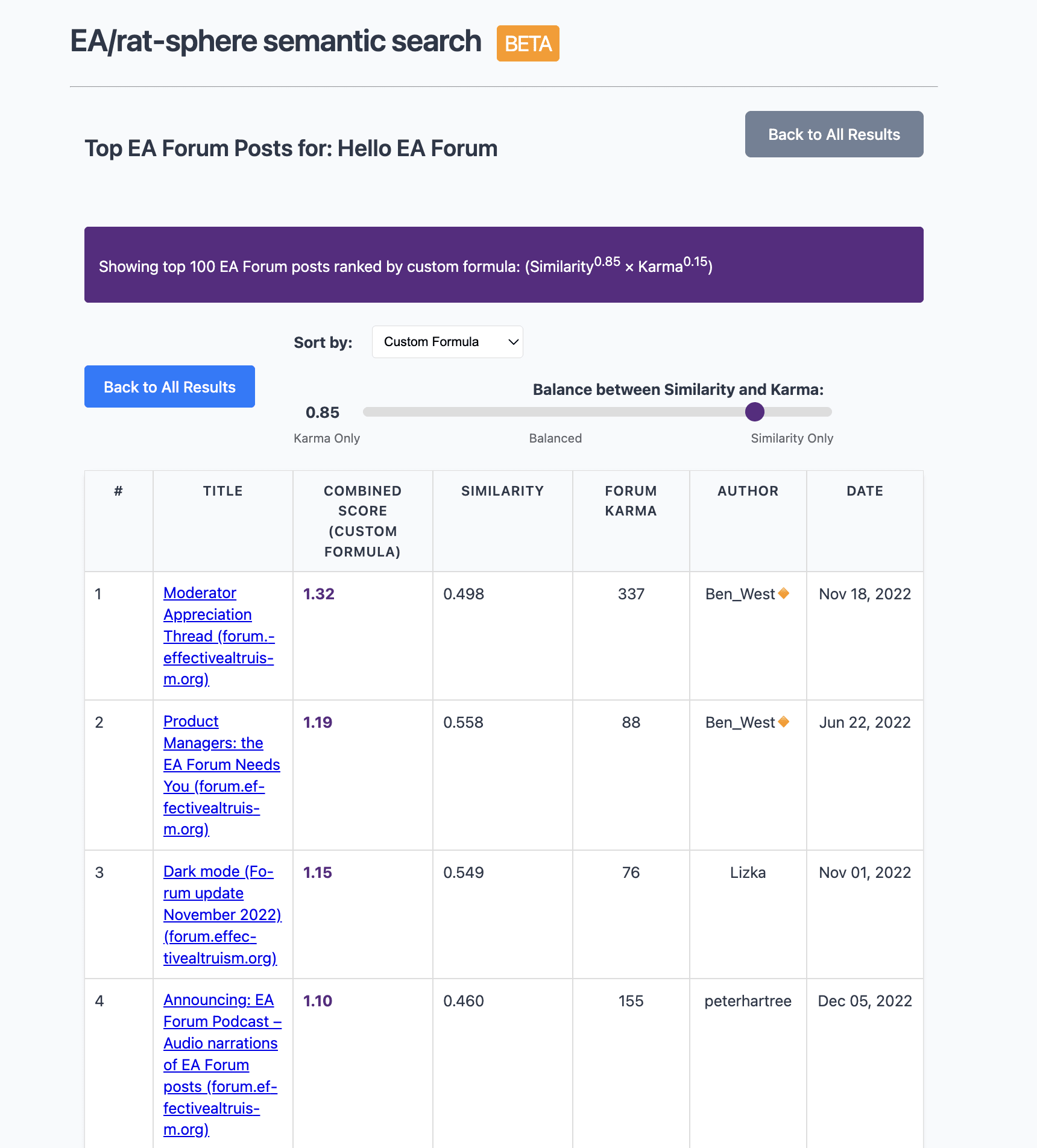

Sharing https://earec.net, semantic search for the EA + rationality ecosystem. Not fully up to date, sadly (doesn't have the last month or so of content). The current version is basically a minimum viable product!

On the results page there is also an option to see EA Forum-only results, which you can sort by a weighted combination of karma and semantic similarity, thanks to the API!
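For anyone curious what "a weighted combination of karma and semantic similarity" could look like, here is a minimal sketch. It is not necessarily how earec.net actually computes it: the log scaling of karma, the `max_karma` cap, the default `weight`, and the field names `similarity` and `karma` are all assumptions made up for illustration.

```python
import math


def combined_score(similarity, karma, weight=0.7, max_karma=500):
    """Blend semantic similarity (assumed to be in [0, 1]) with karma.

    Assumption: karma is log-scaled and capped at max_karma so that a few
    very high-karma posts do not swamp the similarity signal.
    """
    karma_score = math.log1p(max(karma, 0)) / math.log1p(max_karma)
    karma_score = min(karma_score, 1.0)
    return weight * similarity + (1 - weight) * karma_score


def rerank(results, weight=0.7):
    """Return results sorted from highest to lowest combined score."""
    return sorted(
        results,
        key=lambda r: combined_score(r["similarity"], r["karma"], weight),
        reverse=True,
    )


# Hypothetical search results, not real posts.
posts = [
    {"title": "A", "similarity": 0.9, "karma": 5},
    {"title": "B", "similarity": 0.6, "karma": 400},
]
by_similarity = [p["title"] for p in rerank(posts, weight=1.0)]
by_karma = [p["title"] for p in rerank(posts, weight=0.0)]
```

Setting `weight=1.0` falls back to pure semantic similarity and `weight=0.0` to pure (scaled) karma, so a slider between the two is one plausible way to expose this.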

Final feature to note is that there's an option to have gpt-4o-mini "manually" read through the summary of each article on the current screen of results, which will give better evaluations of relevance to some query (e.g. "sources I can use for a project on X") than semantic similarity alone.

Still kinda janky - as I said, minimum viable product right now. Enjoy, and feedback is welcome!

Thanks to @Nathan Young for commissioning this!

Christ, why isn’t OpenPhil taking any action, even making a comment or filing an amicus curiae brief?

I certainly hope there’s some legitimate process going on behind the scenes; this seems like an awfully good time to spend whatever social/political/economic/human capital OP leadership wants to say is the binding constraint.

And OP is an independent entity. If the main constraint is “our main funder doesn’t want to pick a fight,” well, so be it. I guess Good Ventures won’t sue as a proper donor the way Musk is; OP can still submit some sort of non-litigant comment. Naively, at least, that could weigh nontrivially on a judge/AG.

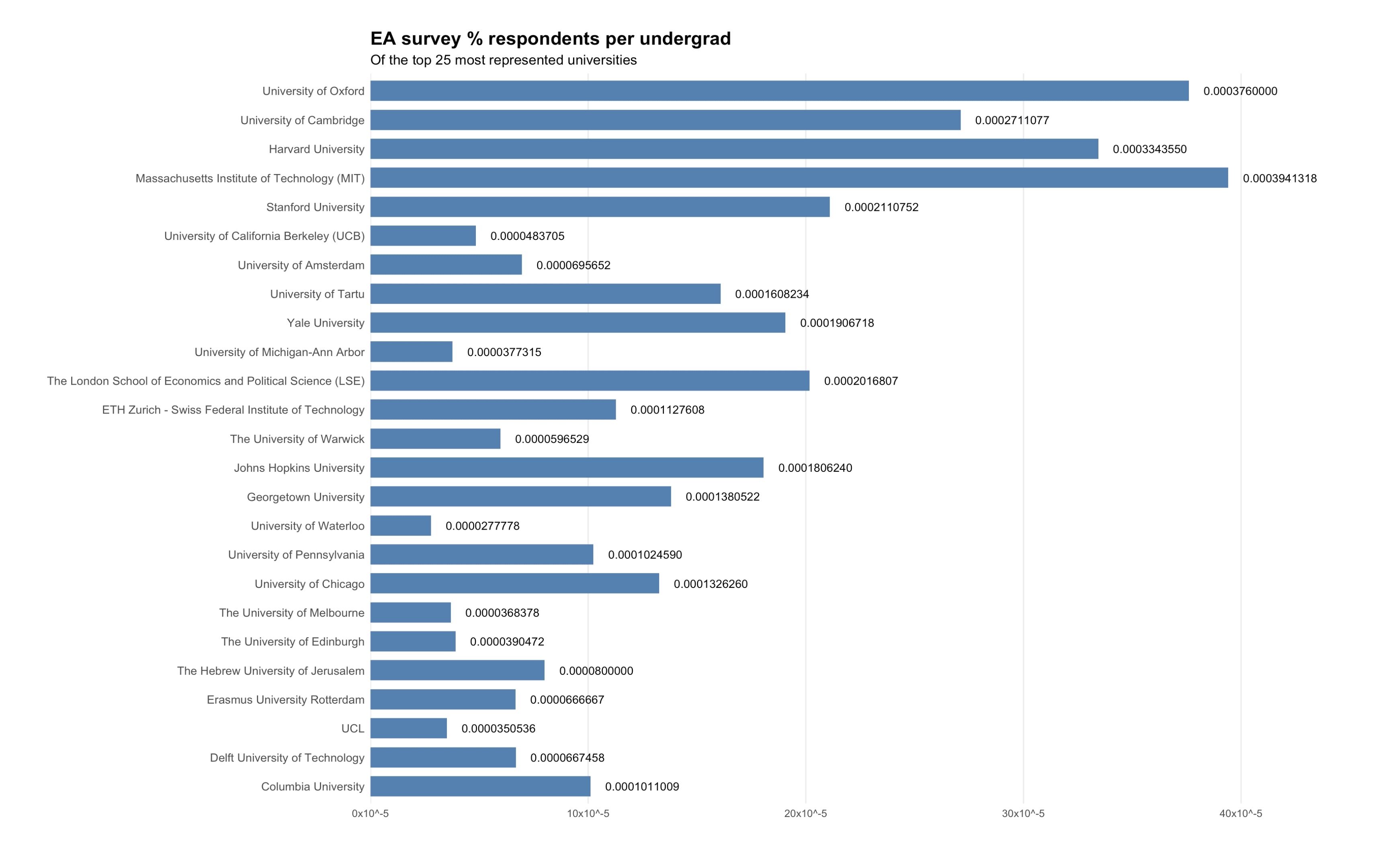

Reranking universities by representation in the EA survey per undergraduate student, which seems relevant to figuring out which community-building (CB) strategies are working (obviously plenty of confounders). Data from 1 minute of googling + LLMs, so take it with a grain of salt.

There does seem to be a moderate positive correlation here so nothing shocking IMO.

Same chart as above but by original order
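The per-student reranking itself is simple arithmetic; a rough sketch is below. The university names and numbers are placeholders invented for the example, not the data behind the charts, and "respondents per 1,000 undergraduates" is just one assumed way to scale it.

```python
def per_capita_ranking(schools, per=1000):
    """Rank schools by survey respondents per `per` undergraduates.

    `schools` is a list of (name, respondents, undergrads) tuples.
    Returns (name, rate) pairs, highest rate first.
    """
    rows = [
        (name, respondents * per / undergrads)
        for name, respondents, undergrads in schools
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Placeholder data for illustration only (not real survey figures).
schools = [
    ("University A", 30, 30000),
    ("University B", 12, 4000),
    ("University C", 20, 10000),
]
ranking = per_capita_ranking(schools)
for name, rate in ranking:
    print(f"{name}: {rate:.1f} respondents per 1,000 undergrads")
```

Note how the placeholder school with the most raw respondents ends up last once enrollment is taken into account, which is the kind of reshuffling the reranked chart is meant to surface.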

Offer subject to arbitrarily stopping at some point (not sure exactly how many I'm willing to do)

Give me ChatGPT Deep Research queries and I'll run them. My asks are that:

- You write out exactly what you want the prompt to be so I can just copy and paste something in

- Feel free to request a specific model (I think the options are o1, o1-pro, o3-mini, and o3-mini-high) but be ok with me downgrading to o3-mini

- Be cool with me very hastily answering the inevitable set of follow-up questions that always get asked (seems unavoidable for whatever reason). I might say something like "all details are specified above; please use your best judgement"

Was sent a resource in response to this quick take on effectively opposing Trump that at a glance seems promising enough to share on its own:

From A short to-do list by the Substack Make Trump Lose Again:

Bolding is mine to highlight the 80k-like opportunity. I'm abusing the block quote a bit by taking out most of the text, so check out the actual post if interested!

There's also a volunteering opportunities page advertising "A short list of high-impact election opportunities, continuously updated" which links to a Notion page that's currently down.