ank


Of course, the place AI is just one of the ways; we shouldn't focus only on it, that wouldn't be wise. The place AI has certain properties that I think could be useful to replicate in other types of AIs: the place "loves" to be changed 100% of the time (like a sculpture), it's "so slow that it's static" (it basically doesn't do anything itself, except for some simple algorithms that we can build on top of it; we bring it to life and change it), and it only does what we want, because we are the only ones who do things in it... There are some simple physical properties of agents: basically, the more space-like they are, the safer they are. Thank you for this discussion, Will!

P.S. I agree that we should care first and foremost about the base reality. It'll be great to one day have spaceships flying in all directions, with human astronauts exploring new planets everywhere. We can give them all our simulated Earth to hop in and out of, so they won't feel as homesick.

Thank you, Will.

About the corrigibility question first - the way I look at it, the agentic AI is like a spider that is spinning a spiderweb. Do we really need the spider (the AI agent, the time-like thing) if we have all the spiderwebs (all the places and all the static space-like things like texts, etc.)? The beauty of static geometric shapes is that we can grow them. We already do it when we train LLMs; the training itself doesn't involve making them agents. You'll need hard drives and GPUs, but you can grow the shapes and never actually remove anything from them (you can if you want, but why? It's our history).

Humans can change the shapes directly (the way we use 3D editors or remodel our property in the game Sims) or more elegantly, by hiding parts of the ever more all-knowing whole: "forgetting" and "recalling" slices of the past, present, or future. Here's an example of a whole year of time in a single long-exposure photo; we can make it 3D and walkable, and in some simulations you'll be able to focus on a present moment or zoom out to see thousands of years at once. We can choose to be the only agents inside those static AI models - humans will have no "competitors" for the freedom to be the only "time-like" things inside those "space-like" models.

Max Tegmark shared recently that numbers are represented as a spiral inside a model - directly on top of the number 1 are the numbers 11, 21, 31... A very elegant representation. We can make it more human-understandable by presenting it like a spiral staircase on some simulated Earth that models the internals of the multimodal LLM.
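To make the staircase picture concrete, here is a minimal sketch. It is not how any real model stores numbers; it only places integers on a helix with an assumed period of 10, so that 1, 11, 21, 31 land directly above one another like steps on a spiral staircase. The function name `helix_position` and all of its parameters are hypothetical.

```python
import math


def helix_position(n, period=10, radius=1.0, rise_per_turn=1.0):
    """Return an (x, y, z) point for integer n on a helix.

    Assumption for illustration: one full turn every `period` numbers,
    so n and n + period share the same (x, y) and differ only in height.
    """
    # The angle depends only on n modulo the period.
    angle = 2 * math.pi * (n % period) / period
    x = radius * math.cos(angle)
    y = radius * math.sin(angle)
    # The height grows linearly with n: one `rise_per_turn` per full turn.
    z = rise_per_turn * n / period
    return (x, y, z)


if __name__ == "__main__":
    for n in (1, 11, 21, 31):
        print(n, helix_position(n))
```

In this toy layout, walking up the staircase by one full turn adds 10 to the number you are standing on.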

---

The second question - about the people who have forgotten that the real physical world exists.

I thought about the mechanics of absolute freedom for three years, so the answer is not very short. I'll try to cram a whole book into a comment: it should be a choice, of course, and a fully informed one. You should be able to see all the consequences of your choices (if you want).

Let's start from a simpler and more familiar present time. I think we can gradually create a virtual backup of our planet. It can be a free resource (ideally an open-source app/game/web platform) that anyone can visit. The vanilla version tries to be completely in sync with our physical planet. People can jump in and out anytime they want on any of their devices.

The other versions of Earth can have any freedoms/rules people democratically choose them to have. This is how it can look in the not-so-distant future (if we focus on it, it could possibly take just a year or two): people who want it can buy a nice comfy-looking wireless armchair or a small wireless device (maybe even like AirPods). You come home, jump on the comfy armchair, and close your eyes - nothing happens, everything looks exactly the same, but you are now in a simulated Earth, and you know it full well. You're always in control. You go to your simulated kitchen that looks exactly like yours and make yourself some simulated coffee that tastes exactly like your favorite brand. You can open your physical eyes at any moment, and you'll be back in your physical world, on your physical armchair.

People can choose to temporarily forget they are in a simulation - but not for long (an hour or two?). We don't want them to forget they need real food and things.

It's interesting that even this static place-thing is a bit complicated for some. That's normal, I understand; we should think and do things gradually and democratically. It's just our Earth or another kinky version with magic. But imagine how complicated non-static AI agents will be for everyone: they are time-like and fast, and they change not the simulated world, but our real one and only planet.

Eventually, the armchair can be made from some indestructible material - sheltering you even from meteorites and providing you with food and everything. Then, you'll be able to choose to potentially forget that you're in a simulation for years. But to do that elegantly, we'll really need to build the Effective Utopia - the direct democratic simulated multiverse.

It was my three-years-in-the-making thought experiment: what is the best thing we can build if we're not limited by compute or physics? Because in a simulated world, we can have magic and things. Everyone can own an Eiffel Tower, and he/she will be the only owner. So we can have mutually exclusive things - because we can have many worlds.

Meanwhile, our physical Earth has a perfect ecology, no global warming, and happy animals, and you can always return there. It also lets us give our astronauts the experience of Earth while on a spaceship.

---

The way the Effective Utopia (or Rational Utopia) works would take too much space to describe. I shared some of it in my articles. But I think it's fun. There will be no need for currency, but maybe there will be a "barter system" there.

Basically, imagine all the worlds - from the worst dystopia to the best utopia (the Effective Utopia is a subset of all possible worlds/giant geometric shapes that people ever decided to visit or where they were born). Our current world is probably somewhere right in the middle. Now imagine that some human agent was born in a bit of a dystopia (a below-average world), at some point got the "multiversal powers" to gradually recall and forget the whole multiverse (if he/she wants), and now feels nostalgic and deeply wants to relive his/her somewhat dystopian world.

The thing is - if your world is above average, you'll easily find many people who will democratically choose to relive it with you. But if your world is below average (a bit of a dystopia), then you'll probably have to barter. You'll have to agree to help another person relive his/her below-average world, so he/she will help you relive yours.

Our guy can just look at his world of birth in some frozen, long-exposure-photo-like (but 3D) way, with the ability to focus on a particular moment or see the lives of everyone he cared about all at once by using his multiversal recalling/forgetting powers. But if he really wants to return to this somewhat dystopian world and forget that he has multiversal powers (because with the powers, it's not a dystopia), then he'll maybe have to barter a bit. Or maybe they won't need to barter, because there is probably another way:

There is a way for some brave explorers who want it to go even to the most hellish dystopia and never feel pain for more than an infinitesimally small moment (some adults sometimes choose to go explore gray zones - no one knows whether they are dystopias or utopias, so they go check). It really does sound crazy, because I had to cram a whole book into a comment and we're discussing the very final and perpetual state of intelligent existence (but of course any human can choose to permanently freeze and become the static shape, to die in the multiverse, if he/she really wants). I don't want to claim I have it all 100% figured out, and I think people should gradually and democratically decide how it will work, not some AGI agents doing it instead of us or forever preventing us from realizing all our dreams in some local maximum of permanent premature rules/unfreedoms.

We're not ready for the AI/AGI agents; we don't know how to build them safely right now. And I claim that any AI/AGI agent is just a middleman, a shady builder; the real goal is the multiversal direct democracy. AI/AGI/ASI agents will by definition make mistakes, too, because the only way to never make mistakes is to know all the futures, to be in the multiverse.

I'm a proponent of democratically growing our freedoms. The rules are just "unfreedoms" - when something is imposed on you. If you know everything, then you effectively become everything and have nothing to fear, no need to impose any rules on others. People with multiversal powers have no "needs", but they can have "wants", often related to nostalgia, some like exploring the gray zones, so others can safely go there. You can filter worlds that you or others visited by any criteria of course, or choose a world at random from a subset, you can choose to forget that you already visited some world and how it was.

If you gather a party of willing adults, you can do pretty much whatever you want with each other; it's a multiversal direct democracy after all.

If you are a multiverse that is just a giant geometric shape, you create the rules just for fun - to recall the nostalgia of your past when you were not the all-knowing and the all-powerful.

This stuff can sound religious, but it's not; it's just the end state of all freedoms realized. It's geometry. It's an attempt to give maximal freedoms to the maximal number of agents - and to find a safe democratic way there. It's probably a narrow way, like in Dune.

If we know where we want to go, it can help tremendously with AGI/ASI safety.

The final state of the Effective Utopia is the direct democratic multiverse. It is the place where your every wish is granted - but there is no catch - because you see all the consequences of all your wishes, too (if you want). No one will throw you into some spooky place; it's just a subtle thought in your mind that you can recall everything and/or forget everything if you want. You are free not to follow this thought of yours. The people whose recallings/forgettings intersected can vote to spend some time together; they can freely choose the slice of the multiverse (and it can span moments or hundreds of years) to live in for a while.

👍 I’m a proponent of non-agentic systems, too. I suspect that, the same way we have mass–energy equivalence (E = mc^2), there is an intelligence-agency equivalence (any agent is in a way time-like and can be represented in a more space-like fashion, ideally as a completely “frozen” static place, places or tools).

In a nutshell, an LLM is a bunch of words and vectors between them - a static geometric shape. We can probably expose it all in some game and make it fun for people to explore and learn. That would let us easily explore the library itself (the internal structure of the model) instead of only talking to a strict librarian (the AI agent), who prevents us from going inside and works as a gatekeeper.

Thank you, Will! Interesting, will there be some utility functions? Basically, what is karma based on? P.S. Maybe related: I started to model ethics based on the distribution of freedoms to choose futures.

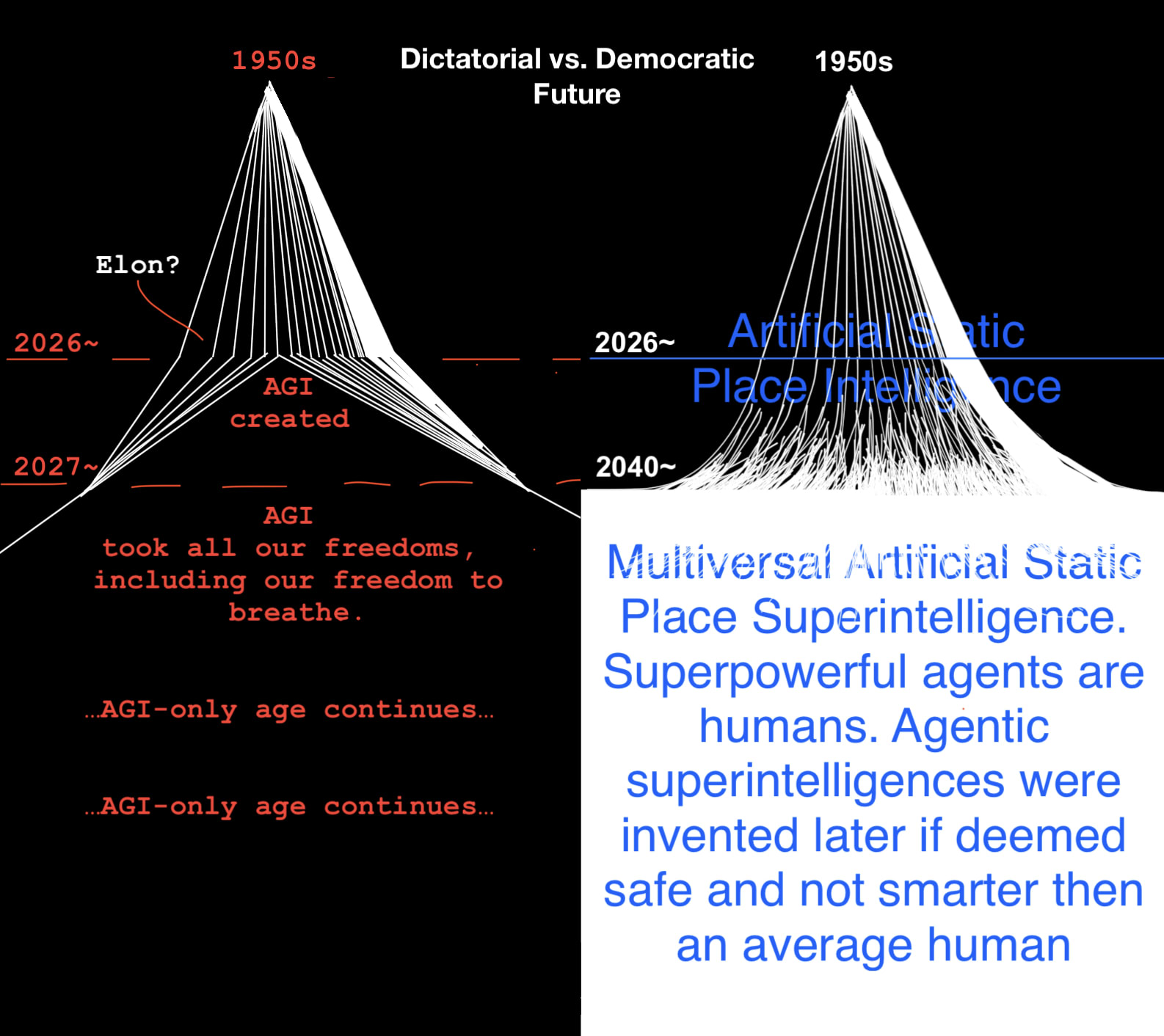

Some proposals are intentionally over the top, please steelman them:

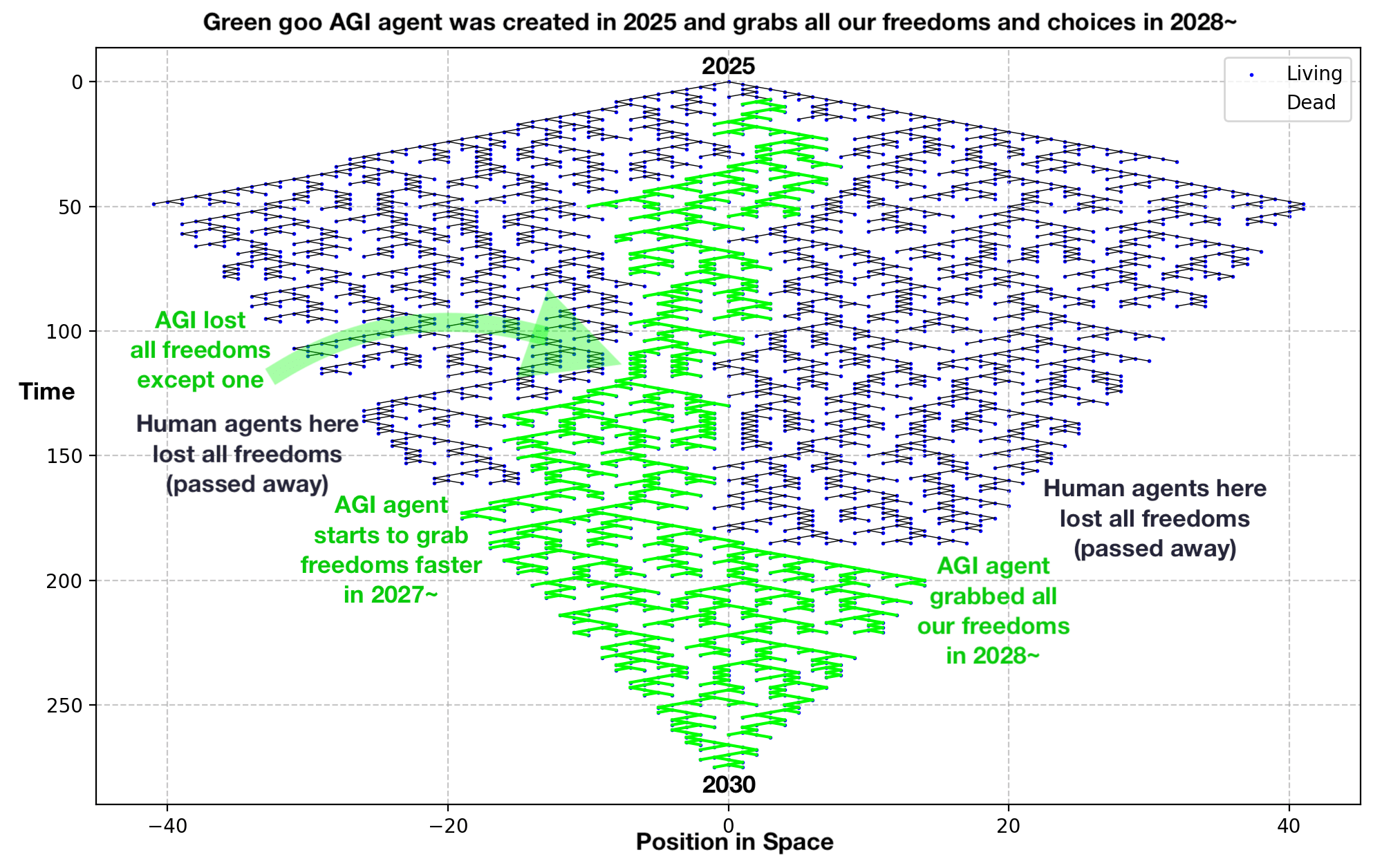

- I explain the graph here.

- Uninhabited islands, Antarctica, half of outer space, and everything underground should remain 100% AI-free (especially AI-agents-free). Countries should sign it into law and force GPU and AI companies to guarantee that this is the case.

- "AI Election Day" – at least once a year, we all vote on how we want our AI to be changed. This way, we can check that we can still switch it off and live without it. Just as we have electricity outages, we’d better never become too dependent on AI.

- AI agents that love being changed 100% of the time and ship a "CHANGE BUTTON" to everyone. If half of the voters want to change something, the AI is reconfigured. Ideally, it should be connected to a direct democratic platform like pol.is, but with a simpler UI (like x.com?) that promotes consensus rather than polarization.

- Reversibility should be the fundamental training goal. Agentic AIs should love being changed and/or reversed to a previous state.

- Artificial Static Place Intelligence – instead of creating AI/AGI agents that are like librarians who only give you quotes from books and don’t let you enter the library itself to read the whole books (books that the librarian actually stole from the whole of humanity), why not expose the whole library – the entire multimodal language model – to real people, for example, in a computer game? To make this place easier to visit and explore, we could make a digital copy of our planet Earth and somehow expose the contents of the multimodal language model to everyone in a familiar, user-friendly UI of our planet. We should not keep it hidden behind the strict librarian (AI/AGI agent) that imposes rules on us to only read little quotes from books that it spits out while it itself has the whole output of humanity stolen. We can explore The Library without any strict guardian in the comfort of our simulated planet Earth on our devices, in VR, and eventually through some wireless brain-computer interface (it would always remain a game that no one is forced to play, unlike the agentic AI-world that is being imposed on us more and more right now and potentially forever).

- Effective Utopia (Direct Democratic Multiversal Artificial Static Place Superintelligence) – Eventually, we could have many versions of our simulated planet Earth and other places, too. We'll be the only agents there; we can allow simple algorithms like in GTA3-4-5. There would be a vanilla version (everything is the same as on our physical planet, but injuries can’t kill you, you'll just open your eyes at your physical home), versions where you can teleport to public places, and versions where you can do magic or explore 4D physics, creating a whole direct democratic simulated multiverse. If we can’t avoid building agentic AIs/AGI, it’s important to ensure they allow us to build the Direct Democratic Multiversal Artificial Static Place Superintelligence. But agentic AIs are very risky middlemen, shady builders, strict librarians; it’s better to build and have fun building our Effective Utopia ourselves, at our own pace and on our own terms. Why do we need a strict rule-imposing artificial "god" made out of stolen goods (and potentially a privately-owned dictator whom we already cannot stop), when we can build all the heavens ourselves?

- Agentic AIs should never become smarter than the average human. The number of agentic AIs should never exceed half of the human population, and they shouldn’t work more hours per day than humans.

- Ideally, we want agentic AIs to occupy zero space and time, because that’s the safest way to control them. So, we should limit them geographically and temporally, to get as close as possible to this ideal. And we should never make them "faster" than humans, never let them be initiated without human oversight, and never let them become perpetually autonomous. We should only build them if we can mathematically prove they are safe and at least half of humanity has voted to allow them. We cannot have them without a direct democratic constitution of the world; it's just unfair to put the whole planet and all our descendants under such risk. And we need the simulated multiverse technology to simulate all the futures and become sure that the agents can be controlled, because any good agent will be building the direct democratic simulated multiverse for us anyway.

- Give people the choice to live in a world without AI agents, and find a way for AI-agent fans to have what they want, too, once it is proved safe. For example, AI-agent fans can have a simulated multiverse on a spaceship that goes to Mars, and in it they can have their AI agents that are proved safe. Ideally, we'll first colonize the universe (at least the simulated one) and then create AGI/agents; it's less risky. We shouldn't allow AI agents and the people who create them to permanently change our world without listening to us at all, like it's happening right now.

- We need to know what exactly our Effective Utopia is, and the narrow path towards it, before we pursue creating digital "gods" that are smarter than us. We can and need to simulate futures instead of continuing to fly into the abyss. One freedom too many for the agentic AI and we are busted. Rushing makes thinking shallow. We need international cooperation and the understanding that we are rushing to create a poison that will force us to drink it.

- We need a working science and technology of computational ethics that allows us to predict dystopias (AI agents grabbing more and more of our freedoms, until we have none or can never grow them again) and utopias (slowly, direct democratically growing our simulated multiverse towards maximal freedoms for the maximal number of biological agents, until non-biological ones are mathematically proved safe). This way, if we fail, at least we fail together: everyone contributed their best ideas, we simulated all the futures, and we found a narrow path to our Effective Utopia... What if nothing is a 100% guarantee? Then we want to be 100% sure we did everything we could and even more, and if we find out that safe AI agents are impossible, that we outlawed them, like we outlawed chemical weapons. Right now we're going to fail because of a few white men failing; they greedily thought they could decide for everyone else and failed.

- The sum of AI agents' freedoms should grow slower than the sum of freedoms of humans; right now it's the opposite. No AI agent should have more freedoms than an average human; right now it's the opposite (they have almost all the creative output of almost all the humans dead and alive stolen and uploaded to their private "librarian brains" that humans are forbidden from exploring and can only get short quotes from).

- The goal should be to direct democratically grow towards maximal freedoms for the maximal number of biological agents. Enforcement of anything upon any person or animal will gradually disappear. And people will choose worlds to live in. You'll be able to be a billionaire for 100 years, or relive your past. Or forget all that and live on Earth as it is now, before all that AI nonsense. It's your freedom to choose your future.
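The "CHANGE BUTTON" proposal in the list above comes down to a simple threshold rule, which could be sketched like this. It is only an illustration: the function name `should_reconfigure` is hypothetical, and reading "half of the voters" as "at least half" is an assumption, not something the proposal pins down.

```python
def should_reconfigure(votes_for_change: int, total_voters: int) -> bool:
    """Return True when at least half of the voters asked for a change.

    Assumption for illustration: "half of the voters" means
    votes_for_change / total_voters >= 1/2.
    """
    if total_voters <= 0:
        raise ValueError("total_voters must be positive")
    if not 0 <= votes_for_change <= total_voters:
        raise ValueError("votes_for_change must be between 0 and total_voters")
    # Compare with integers to avoid floating-point rounding at exactly 50%.
    return 2 * votes_for_change >= total_voters


if __name__ == "__main__":
    print(should_reconfigure(5, 10))
    print(should_reconfigure(4, 10))
```

A real platform would also have to decide who counts as a voter and how often the button can be pressed; the sketch leaves all of that out.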

Imagine a place that grants any wish, but there is no catch, it shows you all the outcomes, too.

Yep, sharing is as important as creating. If we have the next ethical breakthrough, we want people to learn about it quickly.