AnonResearcherMajorAILab

So, the tradeoff is something like 55% death spread over a five-year period vs no death for five years, for an eventual reward of reducing total chance of death (over a 10y period or whatever) from 89% to 82%.

Oh we disagree much more straightforwardly. I think the 89% should be going up, not down. That seems by far the most important disagreement.

(I thought you were saying that person-affecting views means that even if the 89% goes up that could still be a good trade.)

I still don't know why you expect the 89% to go down instead of up given public advocacy. (And in particular I don't see why optimism vs pessimism has anything to do with it.) My claim is that it should go up.

I was thinking of something like the scenario you describe as "Variant 2: In addition to this widespread pause, there is a tightly controlled and monitored government project aiming to build safe AGI." It doesn't necessarily have to be government-led, but maybe the government has talked to evals experts and demands a tight structure where large expenditures of compute always have to be approved by a specific body of safety evals experts.

But why do evals matter? What's an example story where the evals prevent Molochian forces from leading to us not being in control? I'm just not seeing how this scenario intervenes on your threat model to make it not happen.

(It does introduce government bureaucracy, which all else equal reduces the number of actors, but there's no reason to focus on safety evals if the theory of change is "introduce lots of bureaucracy to reduce number of actors".)

Maybe I'm wrong: If the people who are closest to DC are optimistic that lawmakers would be willing to take ambitious measures soon enough

This seems like the wrong criterion. The question is whether this strategy is more likely to succeed than others. Your timelines are short enough that no ambitious measure is going to come into place fast enough if you aim to save ~all worlds.

But e.g. ambitious measures in ~5 years seems very doable (which seems like it is around your median, so still in time for half of worlds). We're already seeing signs of life:

note the existence of the UK Frontier AI Taskforce and the people on it, as well as the intent bill SB 294 in California about “responsible scaling”

You could also ask people in DC; my prediction is they'd say something reasonably similar.

From my perspective you're assuming we're in an unlikely world where the public turns out to be saner than our baseline would suggest.

Hm, or that we get lucky in terms of the public's response being a good one given the circumstances, even if I don't expect the discourse to be nuanced.

That sounds like a rephrasing of what I said that puts a positive spin on it. (I don't see any difference in content.)

To put it another way -- you're critiquing "optimistic EAs" about their attitudes towards institutions, but presumably they could say "we get lucky in terms of the institutional response being a good one given the circumstances". What's the difference between your position and theirs?

But my sense is that it's unlikely we'd succeed at getting ambitious measures in place without some amount of public pressure.

Why do you believe that?

It also matters how much weight you give to person-affecting views (I've argued here for why I think they're not unreasonable).

I don't think people would be on board with the principle "we'll reduce the risk of doom in 2028, at the cost of increasing risk of doom in 2033 by a larger amount".

For me, the main argument in favor of person-affecting views is that they agree with people's intuitions. Once a person-affecting view recommends something that disagrees with other ethical theories and with people's intuitions, I feel pretty fine ignoring it.

The 89% p(doom) is more like what we should expect by default: things get faster and out of control and then that's it for humans.

Your threat model seems to be "Moloch will cause doom by default, but with AI we have one chance to prevent that, but we need to do it very carefully". But Molochian forces grow much stronger as you increase the number of actors! The first intervention would be to keep the number of actors involved as small as possible, which you do by having the few leaders race forward as fast as possible, with as much secrecy as possible. If this were my main threat model I would be much more strongly against public advocacy and probably also against both conditional and unconditional pausing.
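A toy way to see why more actors strengthens Molochian dynamics: if each actor independently cuts corners on safety with some fixed probability, the chance that at least one of them does so rises quickly with the number of actors. This is a minimal sketch under that independence assumption. The per-actor probability and the function name `p_any_defects` are hypothetical, not numbers from this discussion.

```python
def p_any_defects(p_defect: float, n_actors: int) -> float:
    """Chance that at least one of n_actors cuts corners.

    Toy assumption: each actor does so independently, with the same
    probability p_defect.
    """
    if not 0.0 <= p_defect <= 1.0:
        raise ValueError("p_defect must be a probability")
    if n_actors < 0:
        raise ValueError("n_actors must be non-negative")
    return 1.0 - (1.0 - p_defect) ** n_actors


if __name__ == "__main__":
    # Same hypothetical per-actor probability, increasing number of actors.
    for n in (1, 3, 10, 30):
        print(f"{n:>2} actors: {p_any_defects(0.05, n):.3f}")
```

Real actors are not independent, since racing dynamics correlate their choices, so this only illustrates the direction of the effect, not its size.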

(I do think 89% is high enough that I'd start to consider negative-expectation high-variance interventions. I would still be thinking about it super carefully though.)

even with the pause mechanism kicking in (e.g., "from now on, any training runs that use 0.2x the compute of the model that failed the eval will be prohibited"), algorithmic progress at another lab or driven by people on twitter(/X) tinkering with older, already-released models, will get someone to the same capability threshold again soon enough. [...] you can still incorporate this concern by adding enough safety margin to when your evals trigger the pause button.

Safety margin is one way, but I'd be much more keen on continuing to monitor the strongest models even after the pause has kicked in, so that you notice the effects of algorithmic progress and can tighten controls if needed. This includes rolling back model releases if people on Twitter tinker with them to exceed capability thresholds. (This also implies no open sourcing, since you can't roll back if you've open sourced the model.)
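A conditional pause with continued monitoring could look roughly like the sketch below: a compute cap tied to the model that failed the eval, plus monitoring of released models that can roll them back and tighten the cap. The class and method names are hypothetical, measuring the cap in training FLOP is an assumption, and real evals and rollback mechanisms are stubbed out as a boolean.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PausePolicy:
    """Toy conditional-pause rule with continued monitoring (hypothetical)."""

    cap_fraction: float = 0.2            # runs at >= this fraction of the failing model's compute are prohibited
    compute_cap: Optional[float] = None  # training-compute cap in FLOP; None means no pause in effect
    rolled_back: List[str] = field(default_factory=list)

    def on_eval_failure(self, failing_model_flop: float) -> None:
        """Pause kicks in: prohibit runs using >= cap_fraction of the failing model's compute."""
        new_cap = self.cap_fraction * failing_model_flop
        # Only ever tighten an existing cap, never loosen it.
        self.compute_cap = new_cap if self.compute_cap is None else min(self.compute_cap, new_cap)

    def training_run_allowed(self, run_flop: float) -> bool:
        return self.compute_cap is None or run_flop < self.compute_cap

    def on_monitoring_result(self, model_id: str, model_flop: float, exceeds_threshold: bool) -> None:
        """Continued monitoring of already-released models.

        If algorithmic progress or outside tinkering pushes a released model past
        the capability threshold, roll it back (only possible if it was never
        open sourced) and tighten the cap relative to that model's compute.
        """
        if exceeds_threshold:
            self.rolled_back.append(model_id)
            self.on_eval_failure(model_flop)


if __name__ == "__main__":
    policy = PausePolicy()
    policy.on_eval_failure(1e25)               # a 1e25-FLOP model fails the eval
    print(policy.training_run_allowed(1e24))   # 0.1x the failing compute
    print(policy.training_run_allowed(3e24))   # 0.3x the failing compute
    policy.on_monitoring_result("older-model", 5e24, exceeds_threshold=True)
    print(policy.compute_cap, policy.rolled_back)
```

The monitoring path is what responds to algorithmic progress: a smaller, already-released model crossing the threshold lowers the cap further, rather than relying only on a safety margin fixed in advance.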

But I also wish you'd say what exactly your alternative course of action is, and why it's better. E.g. the worry of "algorithmic progress gets you to the threshold" also applies to unconditional pauses. Right now your comments feel to me like a search for anything negative about a conditional pause, without checking whether that negative applies to other courses of action.

Sounds like we roughly agree on actions, even if not beliefs (I'm less sold on fast / discontinuous takeoff than you are).

As a minor note, to keep incentives good, you could pay evaluators / auditors based on how much performance they are able to elicit. You could even require that models be evaluated by at least three auditors, and split up payment between them based on their relative performances. In general it feels like there is a huge space of possibilities that has barely been explored.
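One possible reading of "split up payment between them based on their relative performances" is a proportional split, sketched below. The proportional rule, the function name `split_payment`, and the even split when no auditor elicits anything are all assumptions, not part of the proposal.

```python
from typing import Dict


def split_payment(total: float, elicited: Dict[str, float], min_auditors: int = 3) -> Dict[str, float]:
    """Split a fixed audit budget in proportion to the performance each auditor elicited.

    `elicited` maps auditor name -> performance score they managed to elicit
    (hypothetically, e.g. the fraction of eval tasks solved under their scaffolding).
    """
    if len(elicited) < min_auditors:
        raise ValueError(f"need at least {min_auditors} auditors, got {len(elicited)}")
    if any(score < 0 for score in elicited.values()):
        raise ValueError("elicited scores must be non-negative")
    total_score = sum(elicited.values())
    if total_score == 0:
        # Nobody elicited anything: fall back to an even split.
        return {name: total / len(elicited) for name in elicited}
    return {name: total * score / total_score for name, score in elicited.items()}


if __name__ == "__main__":
    print(split_payment(90_000, {"auditor_a": 0.2, "auditor_b": 0.5, "auditor_c": 0.8}))
```

Because an auditor's share grows with the capability it surfaces, this rewards auditors for eliciting as much as they can rather than underreporting.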

That's not how I'd put it. I think we are still in a "typical" world, but the world that optimistic EAs assume we are in is the unlikely one where institutions around AI development and deployment suddenly turn out to be saner than our baseline would suggest.

I don't see at all how this justifies that public advocacy is good? From my perspective you're assuming we're in an unlikely world where the public turns out to be saner than our baseline would suggest. I don't think I have a lot of trust in institutions (though maybe I do have more trust than you do); I think I have a deep distrust of politics and the public.

I'm also not sure I understood your original argument any more. The argument I thought you were making was something like:

Consider an instrumental variable like "quality-weighted interventions that humanity puts in place to reduce AI x-risk". Then public advocacy is:

- Negative expectation: Public advocacy reduces the expected value of quality-weighted interventions, for the reasons given in the post.

- High variance: Public advocacy also increases the variance of quality-weighted interventions (e.g. maybe we get a complete ban on all AI, which seems impossible without public advocacy).

However, I am pessimistic:

- Pessimism: The required quality-weighted interventions to avoid doom is much higher than the default quality-weighted interventions we're going to get.

Therefore, even though public advocacy is negative-expectation on quality-weighted interventions, it still reduces p(doom) due to its high variance.

(This is the only way I see to justify rebuttals like "I'm in an overall more pessimistic state where I don't mind trying more desperate measures", though perhaps I'm missing something.)

Is this what you meant with your original argument? If not, can you expand?
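The argument in the bullets above can be made concrete with a toy model: treat quality-weighted interventions Q as normally distributed, and say doom is avoided only if Q reaches some required level. Normality, the parameter values, and the function name `p_doom` are assumptions for illustration.

```python
from statistics import NormalDist


def p_doom(required: float, mean: float, sd: float) -> float:
    """P(Q < required) when quality-weighted interventions Q ~ Normal(mean, sd)."""
    return NormalDist(mu=mean, sigma=sd).cdf(required)


# Hypothetical numbers: baseline Q ~ N(0, 1); public advocacy lowers the mean
# (negative expectation) but widens the spread (high variance).
BASELINE = dict(mean=0.0, sd=1.0)
ADVOCACY = dict(mean=-0.3, sd=1.5)

if __name__ == "__main__":
    for label, required in (("optimistic", 0.0), ("pessimistic", 2.0)):
        base = p_doom(required, **BASELINE)
        adv = p_doom(required, **ADVOCACY)
        print(f"{label:>11}: baseline {base:.3f}, with advocacy {adv:.3f}")
```

With these numbers, advocacy raises p(doom) when the required level sits at the baseline mean, but lowers it when the requirement sits two standard deviations above the baseline. That is the "Pessimism" premise doing all the work.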

I think it's not just the MIRI-sphere that's very pessimistic

What is your p(doom)?

For reference, I think it seems crazy to advocate for negative-expectation high-variance interventions if you have p(doom) < 50%. As a first-pass heuristic, I think it still seems pretty unreasonable all the way up to a p(doom) of 90%, though this could be overruled by details of the intervention (how negative is the expectation, how high is the variance).
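In a toy model where quality-weighted interventions are normally distributed and doom occurs when they fall short of a required level t, this threshold has a closed form. Normalize the baseline to N(0, 1) and suppose the intervention lowers the mean by d > 0 (negative expectation) and multiplies the standard deviation by s > 1 (high variance). Then baseline p(doom) is Phi(t) and p(doom) with the intervention is Phi((t + d)/s), so the intervention helps exactly when (t + d)/s < t, i.e. t > d/(s - 1). Equivalently, it helps only if the baseline p(doom) exceeds Phi(d/(s - 1)). The model and the function name are assumptions for illustration.

```python
from statistics import NormalDist


def breakeven_baseline_p_doom(mean_drop: float, sd_ratio: float) -> float:
    """Baseline p(doom) above which a negative-expectation, high-variance
    intervention starts to lower p(doom), in the normal toy model.

    mean_drop: d >= 0, how far the intervention lowers the mean (in baseline SDs)
    sd_ratio:  s > 1, factor by which it widens the spread
    """
    if mean_drop < 0:
        raise ValueError("mean_drop should be >= 0 (negative-expectation intervention)")
    if sd_ratio <= 1:
        raise ValueError("sd_ratio must be > 1 (this sketch only covers variance-increasing interventions)")
    t_star = mean_drop / (sd_ratio - 1)  # required level where both options give equal p(doom)
    return NormalDist().cdf(t_star)


if __name__ == "__main__":
    for d, s in ((0.25, 1.5), (0.5, 1.5), (0.5, 2.0)):
        print(f"d={d}, s={s}: break-even baseline p(doom) = {breakeven_baseline_p_doom(d, s):.2f}")
```

For example, a modest expected cost (d = 0.5) with 50% more spread (s = 1.5) only breaks even at a baseline p(doom) of about 84%, roughly consistent with the heuristic above.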

I like your points against the value of public advocacy. I'm not convinced overall, but that's probably mostly because I'm in an overall more pessimistic state where I don't mind trying more desperate measures.

People say this a lot, but I don't get it.

- Your baseline has to be really pessimistic before it looks good to throw in a negative-expectation high-variance intervention. (Perhaps worth making some math models and seeing when it looks good vs not.) Afaict only the MIRI-sphere is pessimistic enough for this to make sense.

- It's very uncooperative and unilateralist. I don't know why exactly it has become okay to say "well I think alignment is doomed, so it's fine if I ruin everyone else's work on alignment with a negative-expectation intervention", but I dislike it and want it to stop.

Or to put it a bit more viscerally: It feels crazy to me that when I say "here are reasons your intervention is increasing x-risk", the response is "I'm pessimistic, so actually while I agree that the effect in a typical world is to increase x-risk, it turns out that there's this tiny slice of worlds where it made the difference and that makes the intervention good actually". It could be true, but it sure throws up a lot of red flags.

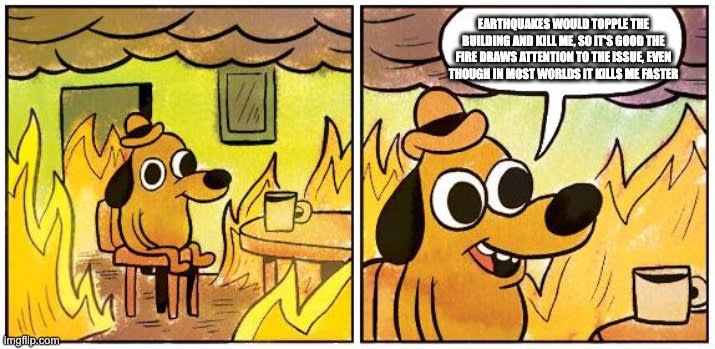

Or in meme form:

I feel like there are already a bunch of misaligned incentives for alignment researchers (and all groups of people in general), so if the people on the safety team aren't selected to be great at caring about the best object-level work, then we're in trouble either way.

I'm not thinking that much about the motivations of the people on the safety team. I'm thinking of things like:

- Resourcing for the safety team (hiring, compute, etc) is conditioned on whether the work produces big splashy announcements that the public will like

- Other teams in the company that are doing things that the public likes will be rebranded as safety teams

- When safety teams make recommendations for safety interventions on the strongest AI systems, their recommendations are rejected if the public wouldn't like them

- When safety teams do outreach to other researchers in the company, they have to self-censor for fear that a well-meaning whistleblower will cause a PR disaster by leaking an opinion of the safety team that the public has deemed to be wrong

See also Unconscious Economics.

(I should have said that companies will face pressure, rather than safety teams. I'll edit that now.)

A conditional pause fails to prevent x-risk if either:

- The AI successfully exfiltrates itself (which is what's needed to defeat rollback mechanisms) during training or evaluation, but before deployment.

- The AI successfully sandbags the evaluations. (Note that existing conditional pause proposals depend on capability evaluations, not alignment evaluations.)

(Obviously there's also the normal institutional failures, e.g. if a company simply ignores the evaluation requirements and forges ahead. I'm setting those aside here.)

Both of these seem extremely difficult to me (likely beyond human-level, in the sense that if you somehow put a human in the situation the AI would be in, I would expect the human to fail).

How likely do you think it is that we get an AI capable of one of these failure modes, before we see an AI capable of e.g. passing 10 out of the 12 ARC Evals tasks? My answer would be "negligible", and so I'm at least in favor of "pause once you pass 10 out of 12 ARC Evals tasks" over "pause now". I think we can raise the difficulty of the bar a decent bit more before my answer stops being "negligible".
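The two policies compared here differ only in their trigger. As a sketch, treating each evaluation task as a simple pass/fail (an assumption; the task names below are placeholders, not the actual ARC Evals tasks), "pause now" is the same rule with the bar set to zero:

```python
from typing import Mapping


def should_pause(task_results: Mapping[str, bool], required_passes: int = 10) -> bool:
    """Conditional-pause trigger: pause once the model passes at least
    `required_passes` of the evaluation tasks.

    required_passes=0 reproduces "pause now"; raising it raises the bar.
    """
    if not 0 <= required_passes <= len(task_results):
        raise ValueError("required_passes must be between 0 and the number of tasks")
    return sum(task_results.values()) >= required_passes


if __name__ == "__main__":
    # Hypothetical results on 12 tasks: the model passes 9 of them.
    results = {f"task_{i:02d}": i < 9 for i in range(12)}
    print(should_pause(results))                     # bar of 10 passes
    print(should_pause(results, required_passes=0))  # "pause now"
```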

I don't think this depends on takeoff speeds at all, since I'd expect a conditional pause proposal to lead to a pause well before models are automating 20% of tasks (assuming no good mitigations in place by that point).

I don't think this depends on timelines, except inasmuch as short timelines correlates with discontinuous jumps in capability. If anything it seems like shorter timelines argue more strongly for a conditional pause proposal, since it seems far easier to build support for and enact a conditional pause.

Oops, sorry for the misunderstanding.

Taking your numbers at face value, and assuming that people have on average 40 years of life ahead of them (Google suggests median age is 30 and typical lifespan is 70-80), the pause gives an expected extra 2.75 years of life during the pause (delaying 55% chance of doom by 5 years) while removing an expected extra 2.1 years of life (7% of 30) later on. This looks like a win on current-people-only views, but it does seem sensitive to the numbers.

I'm not super sold on the numbers. Removing the full 55% is effectively assuming that the pause definitely happens and is effective -- it neglects the possibility that advocacy succeeds enough to have the negative effects, but still fails to lead to a meaningful pause. I'm not sure how much probability I assign to that scenario but it's not negligible, and it might be more than I assign to "advocacy succeeds and effective pause happens".
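The face-value arithmetic, plus the concern in the second paragraph, fits in a few lines. This is a sketch: the parameter names are hypothetical, the defaults are the numbers quoted above (including the "30" in "7% of 30"), and `p_pause_effective` is an added assumption that models the "advocacy has negative effects but no meaningful pause" scenario, with the later cost paid either way.

```python
from typing import Tuple


def pause_tradeoff_years(
    p_doom_delayed: float = 0.55,           # chance of doom pushed back by the pause
    pause_years: float = 5.0,               # length of the pause
    p_doom_added_later: float = 0.07,       # the "7%" in "7% of 30"
    years_lost_if_doom_later: float = 30.0, # the "30" in "7% of 30"
    p_pause_effective: float = 1.0,         # assumption: chance advocacy yields an effective pause
) -> Tuple[float, float]:
    """Expected life-years per current person: (gained during pause, lost later)."""
    # The gain only materializes if advocacy actually produces an effective pause.
    gained = p_pause_effective * p_doom_delayed * pause_years
    # The cost is paid whenever advocacy succeeds enough to have its negative effects.
    lost = p_doom_added_later * years_lost_if_doom_later
    return gained, lost


if __name__ == "__main__":
    gained, lost = pause_tradeoff_years()
    print(f"gained {gained:.2f}, lost {lost:.2f}")
    # Probability of an effective pause at which gain and loss are equal, all else fixed.
    print(f"break-even p_pause_effective = {lost / (0.55 * 5.0):.2f}")
```

On these face-value numbers, the pause stops being a net gain once the chance that it is actually effective drops below roughly 76% (2.1 / 2.75), which is one way to quantify how sensitive the conclusion is.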

I'd say it's more like "I don't see why we should believe (1) currently". It could still be true. Maybe all the other methods really can't work for some reason I'm not seeing, and that reason is overcome by public advocacy.