Cornelis Dirk Haupt

Bio

Participation 6

The guy in the panda hat at EAG

How others can help me

I am looking for employment as a full stack developer with 3-4 years of experience with React + Django.

How I can help others

Effective Altruism is a topic I basically have to restrain myself from talking about at parties. So if you want to talk about it - including whiteboards where necessary and fact-checking scholarly sources - I'm probably your guy.

Depending on what you're looking for, I'd probably be able to connect you to whoever you should be connected to if you are relatively new to the community.

Posts 6

Comments 95

Link to a Feature Suggestion of mine. Essentially it would be nice to be able to filter the Vote History by the actual reactions issued.

E.g. Imagine you wanted to find some comment from >1 year ago. You only remember it changed your mind on something important so you want to filter this list by "Changed my mind".

iirc there were prominent thinkers in the 19th Century like Thomas Jefferson who decried Slavery as a moral monstrosity but lamented that things could not be any other way (TEDx animation is where I remember this from).

And they held this view and wrote about it mere months before abolition laws were to be passed. Social change can happen faster than people predict it possible.

Yea that's the one thanx. I notice I could have found that by going to "Saved & Read" and then "Vote History" which was a bit hidden so didn't know to go there.

Would be great if I could filter the Vote History page to only see comments I have reacted to in a particular way.

E.g. being able to go there and filter to only show comments I have "helpful" reacted to would make finding Scott's comment a lot easier in the future, when this comment otherwise becomes buried under years of other comments.

In fact, most useful would be to be able to filter by "Changed my mind" reactions.

(Paging @Sarah Cheng with this feature request)

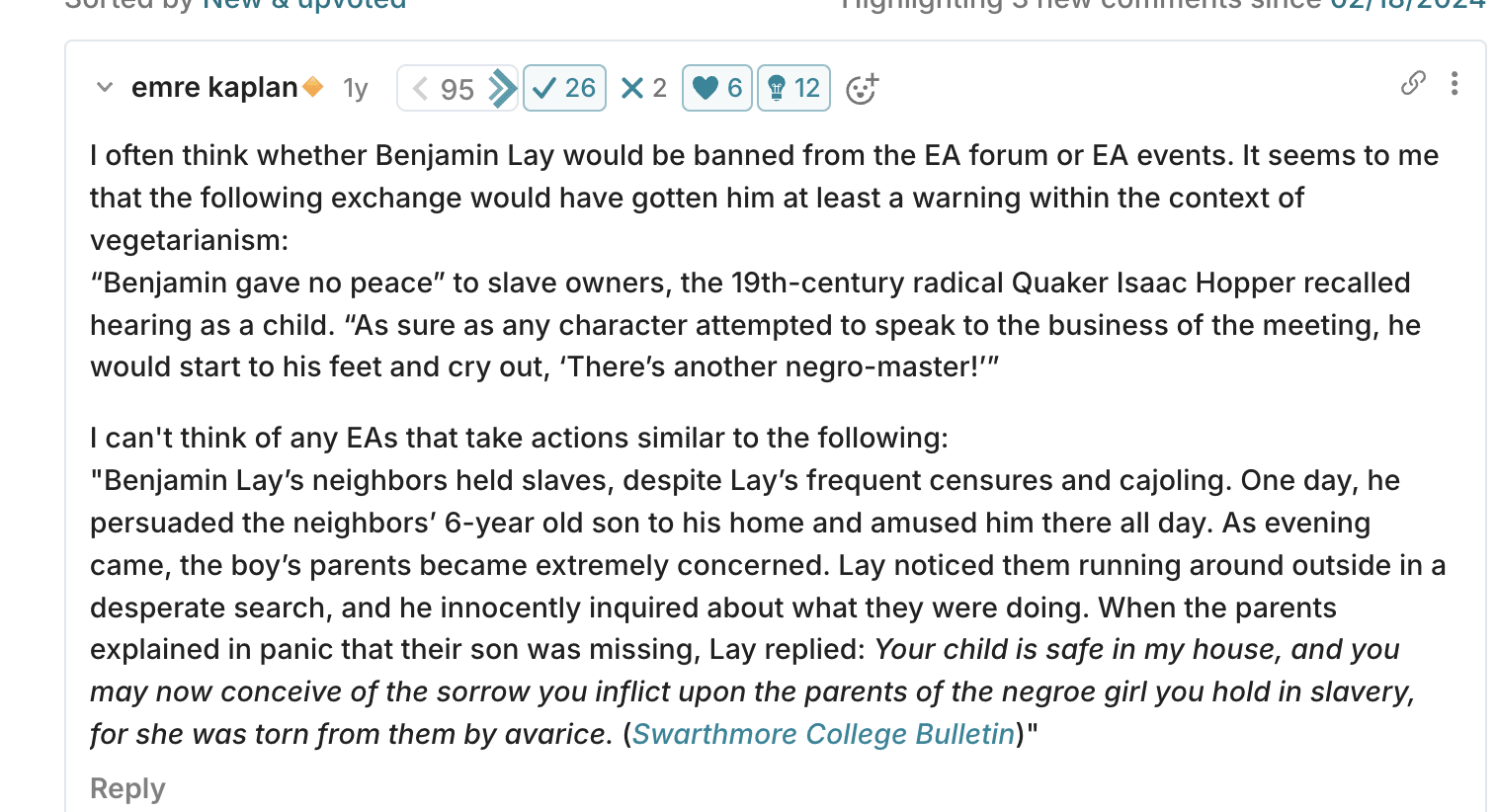

This kinda reminds me of a post that asked if EAs would have been as in favour of the abolition of slavery as a particular extremely hard-line anti-slavery activist of the time, who we laud today as a moral exemplar but who everyone at the time thought was a shocking PETA-type extremist. (Found the post)

The comment posed by @emre kaplan🔸 there I think is very illuminating.

Scott Alexander wrote a comment somewhere on the forum that I mostly agree with (he was cautioning against a pro-Pause AI post, can't find it). His point was that most social change, or at least a share too significant to discount, comes from people who want change working from within the system and gaining high status there so that they can effect change - because the people who have the power to make change like these high-status people and want their respect.

This all just leads me to the fairly obvious conclusion that like with AI Safety and Slavery Abolition, EAs - hypothetically placed at any place in history - are most likely to be found working within a system gaining enough status to slowly and reliably tweak the status quo in a positive direction.

There is no contradiction between being opposed to Prohibition but in favour of finding a reliable way to get people to drink less alcohol.

Peter Turchin. He was the first guest on Julia Galef's Rationally Speaking podcast and Scott Alexander did an article on his work. But outside of that I doubt he even knows EA as a movement exists. Would love to see him understand AI timelines and see how that influences his thinking and his models and vice-versa how respected members of our community make updates (or don't) to their timelines based on Turchin's models (and why).

Thank you for the perspective!

I certainly agree with your model on behaviour change. Likewise, my approach has over the years simplified from more convoluted ideas to one simple maxim: "Just make sure you feed them. The rest will often take care of itself."

I'm concerned about animal welfare, human welfare and AI safety - without the urgency of AI dominating entirely.

I think what I highlight is similar to how many professional communities are optimized for matching prospective employers with employees rather than for the happiness and enjoyment of their members. If there are 100 members but employers are only interested in one candidate, you will have 99 less happy members. But this is not a bad thing, as the goal of the community is to match particular employers with candidates. It could easily be a mistake to find different employers and different events to make it more likely that you'll have more happy members - risks include value drift and undermining your actual goal of maximizing impact. Still, 99% of your members are disgruntled as a tradeoff.

Professional-adjacent communities like, say, "computer tinkerers who just do it for fun" do not have this problem. If 99% of the community are not happy, then you either change what you are doing to what the community of tinkerers is interested in or the community ceases to exist - or at least this is a much more likely outcome.

I've been thinking a bunch about a fundamental difference between the EA community and the LessWrong community.

LessWrong is optimized for the enjoyment of its members. Any LessWrong event I go to in any city the focus is on "what will we find fun to do?" This is great. Notice how the community isn't optimized for "making the world more rational." It is a community that selects for people interested in rationality and then when you get these kinds of people in the same room the community tries to optimize for FUN for these kinds of people.

EA as a community is NOT optimized for the enjoyment of its members. It is optimized for making the world a better place. This is a feature, not a bug. And surely it should be net positive since its goal should by definition be net positive. When planning an EAG or EA event you measure it on impact: say, professional connections made and how many new high-quality AI Alignment researchers you might have created on the margin. You don't measure it on how much people enjoyed themselves (or you do, but for instrumental reasons, to get more people to come so that you can continue to have impact).

As a community organizer in both spaces, I do notice that I more easily leave EA events I organized feeling more burnt out and less fulfilled than I do after similar LW/ACX events. I think the fundamental difference mentioned before explains why.

Dunno if I am pointing at anything that resonates with anyone. I don't see this discussed much among community organizers. Seems important to highlight.

Basically in LW/ACX spaces - specifically as an organizer - I more easily feel like a fellow traveller up for a good time. In EA spaces - specifically as an organizer - I more easily feel like an unpaid recruiter.

This change is, in part, a response to common feedback that the name Impactful Animal Advocacy is too long (10 syllables!), hard to remember, and difficult to recognize as the acronym IAA.

I just tried and failed to remember the name last night when I was trying to recommend IAA to a friend of mine interested in getting involved in animal welfare.

Thankfully I was quickly able to say "Oh, they're called Hive now, here's their Slack invite link!" and all was well.

Hi there, I submitted my application before February 2nd for the April 7 to June 27, 2025 round.

However the email I received says I will hear back:

On the website I see this is the deadline for the 29 September - 19 December, 2025 round. Did the date change, or was I perhaps placed in the wrong cohort accidentally?