Nathan Young

Bio

Participation 4

Builds web apps (e.g. viewpoints.xyz) and makes forecasts. Currently I have spare capacity.

How others can help me

Talking to those in forecasting to improve my forecasting question generation tool.

Writing forecasting questions on EA topics.

Meeting EAs I become lifelong friends with.

How I can help others

Connecting them to other EAs.

Writing forecasting questions on Metaculus.

Talking to them about forecasting.

Posts 141

Comments 2522

Topic contributions 20

I think it would allow many very online, slightly anxious people to note how the situation is changing, rather than plug their minds into Twitter each day.

I think the bird flu site helped a little in my part of Twitter to tell people to chill out a bit and not work themselves into a frenzy earlier than was necessary. At least one powerful person said it was cool.

I think that civil servants might use it but I'm not sure they know what they want here and it's good to have something concrete to show.

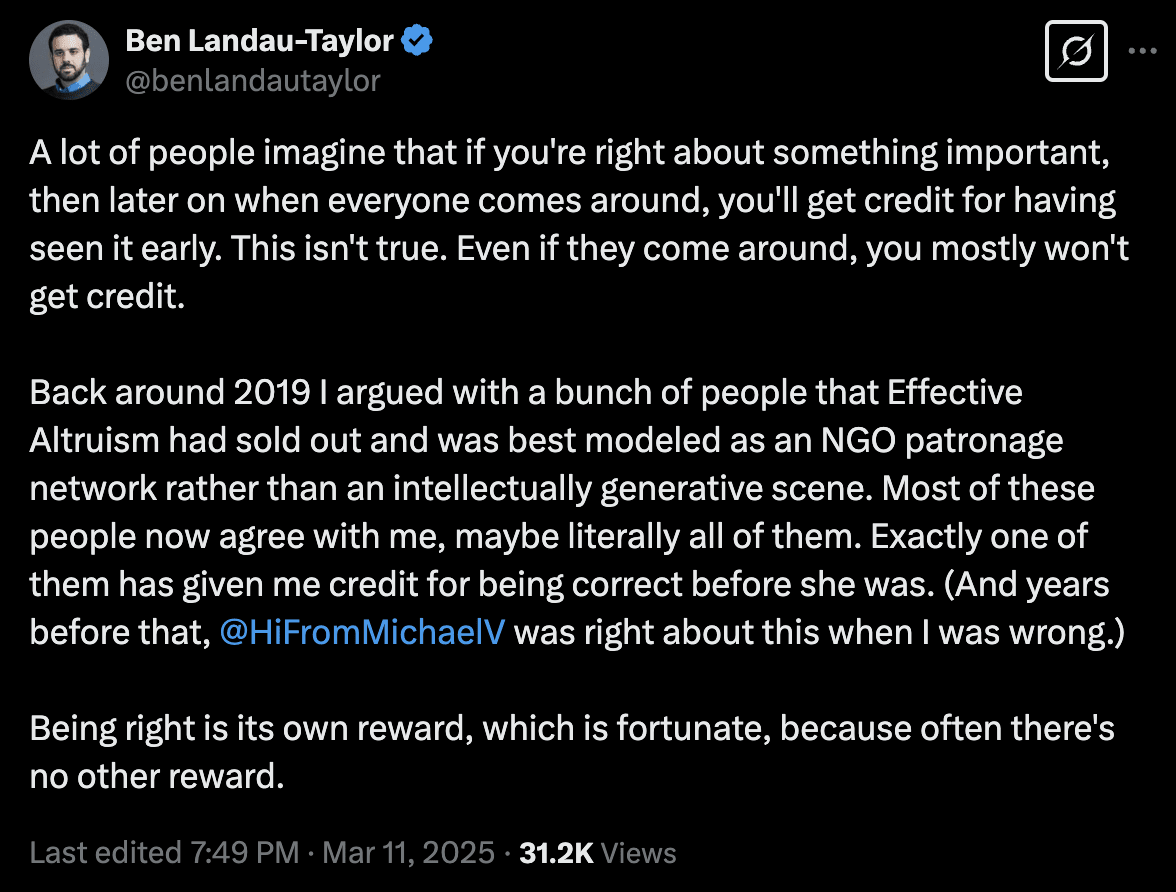

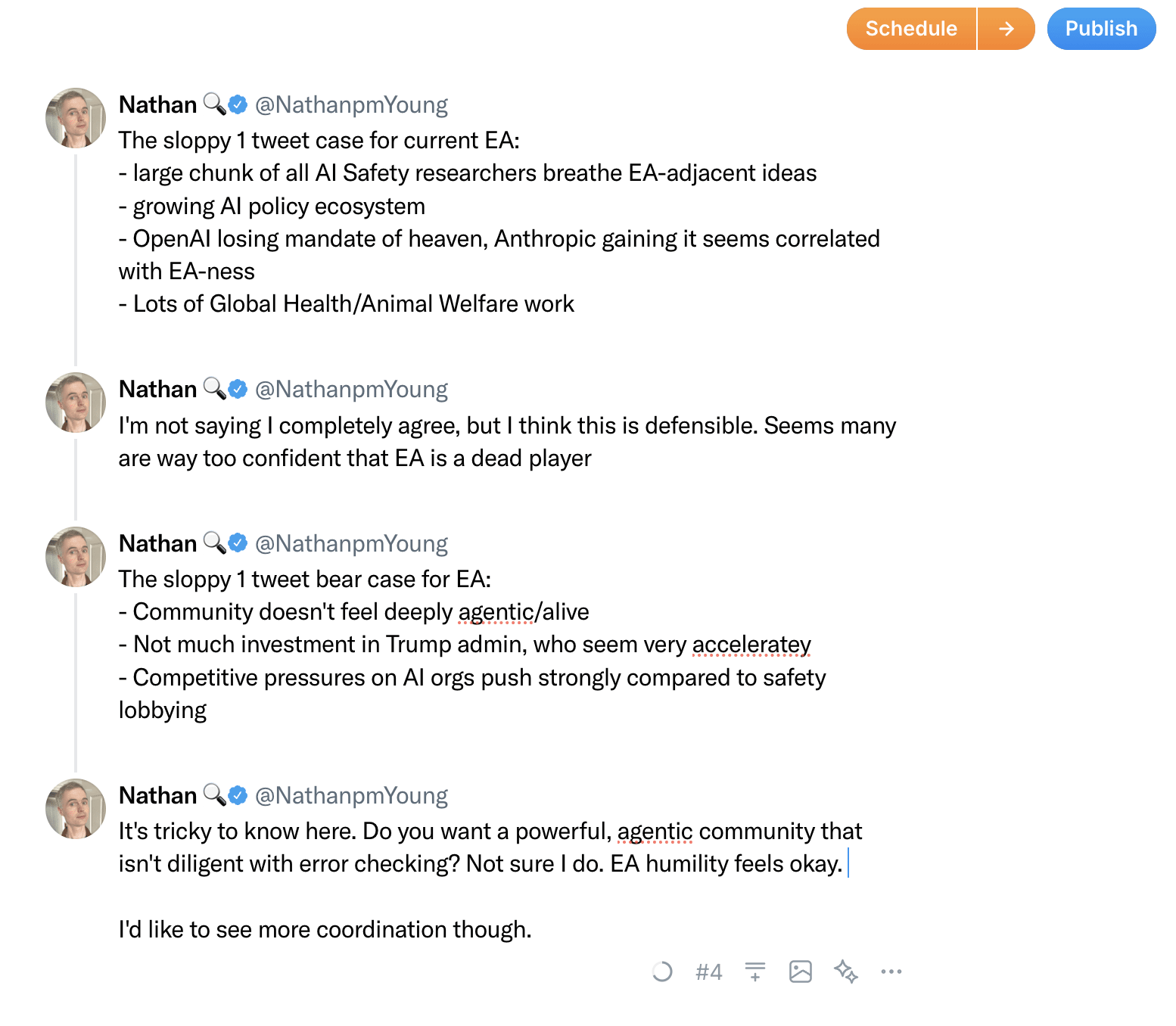

Seems notable that my model is that OpenPhil has stopped funding some right-wing or even centrist projects, so it has less power in this world than it could have had.

Ben Todd writes:

Most philanthropic (vs. government or industry) AI safety funding (>50%) comes from one source: Good Ventures, via Open Philanthropy. But they’ve recently stopped funding several categories of work (my own categories, not theirs):

- Many Republican-leaning think tanks, such as the Foundation for American Innovation

...

Likewise, almost all the people I know second-hand who have power in the new admin, I know via either Twitter or rationality, both spaces from which EA has, to my eye, sought to distance itself in recent years.

I have been tempted to make a US democracy dashboard, tracking the likelihood, according to prediction markets, of different democracy outcomes.

Similar to https://birdflurisk.com

Should anyone wish to fund this to make it happen faster, they are welcome to DM me.

Interesting take. I don't like it.

Perhaps because I like saying overrated/underrated.

But also because overrated/underrated is a quick way to provide information. "Forecasting is underrated by the population at large" is much easier to think of than "forecasting is probably rated 4/10 by the population at large and should be rated 6/10".

Over/underrated requires about 3 mental queries: "Is it better or worse than my ingroup thinks?", "Is it better or worse than my ingroup thinks?", "Am I gonna have to be clear about what I mean?"

Scoring the current and desired status of something requires about 20 queries: "Is 4 fair?", "Is 5 fair?", "What axis am I rating on?", "Popularity?", "If I score it a 4 will people think I'm crazy?"...

Like, in some sense you're right that % forecasts are more useful than "more likely/less likely" and sizes are better than "bigger/smaller", but when dealing with intangibles like status I think it's pretty costly to calculate some status number, so I do the cheaper thing.

Also, would you prefer people used over/underrated less, or would you prefer the people who use over/underrated spoke less? Because I would guess that some chunk of those 50ish karma are from people who don't like the vibe rather than some epistemic thing. And if that's the case, I think we should have a different discussion.

I guess I think that might come from a frustration around jargon or rationalists in general. And I'm pretty happy to try and broaden my answer from over/underrated, just as I would if someone asked me how big a star was and I said "bigger than an elephant". But it's worth noting it's a bandwidth thing, often used because giving exact sizes in status is hard. Perhaps we should have numbers and words for it, but we don't.