Ozzie Gooen

Bio

I'm currently researching forecasting and epistemics as part of the Quantified Uncertainty Research Institute.


LLMs seem more like low-level tools to me than direct human interfaces.

Current models suffer from hallucinations, sycophancy, and numerous errors, but can be extremely useful when integrated into systems with redundancy and verification.

We're in a strange stage now where LLMs are powerful enough to be useful, but too expensive/slow to have rich scaffolding and redundancy. So we bring this error-prone low-level tool straight to the user, for the moment, while waiting for the technology to improve.

Using today's LLM interfaces feels like writing SQL commands directly instead of using a polished web application. It's functional if that's all you have, but it's probably temporary.

Imagine what might happen if/when LLMs are 1000x faster and cheaper.

Then, answering a question might involve:

- Running ~100 parallel LLM calls with various models and prompts

- Using aggregation layers to compare responses and resolve contradictions

- Identifying subtasks and handling them with specialized LLM batches and other software
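To make the fan-out-and-aggregate idea a bit more concrete, here is a minimal Python sketch. `call_model` is a hypothetical stand-in for a real LLM API (it returns canned answers), the model and prompt names are made up, and majority voting is just one simple choice of aggregation layer. A real setup might instead use another LLM call to compare responses and resolve contradictions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def call_model(model: str, prompt: str, question: str) -> str:
    """Hypothetical stand-in for a real LLM API call.

    A real version would send `prompt` and `question` to `model` and return
    its text answer. Here one toy model dissents, so the aggregator has a
    contradiction to resolve.
    """
    return "Lyon" if model == "model-c" else "Paris"


def answer(question: str, models: list[str], prompts: list[str]) -> tuple[str, float]:
    """Run one call per (model, prompt) pair in parallel, then aggregate."""
    pairs = list(product(models, prompts))
    with ThreadPoolExecutor(max_workers=16) as pool:
        responses = list(pool.map(lambda mp: call_model(mp[0], mp[1], question), pairs))
    # Aggregation layer: take the most common answer and report the share
    # of calls that agreed with it.
    counts = Counter(r.strip() for r in responses)
    best, votes = counts.most_common(1)[0]
    return best, votes / len(responses)
```

For example, `answer("What is the capital of France?", ["model-a", "model-b", "model-c"], ["Answer briefly.", "Think step by step, then answer."])` makes six calls and returns `"Paris"` with an agreement of 4/6, since the toy `model-c` dissents on both prompts. A low agreement fraction could serve as a cheap trigger for more expensive verification.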

Big picture, I think researchers might focus less on making sure any one LLM call is great, and more on making sure these broader setups work effectively.

(I realize this has some similarities to Mixture of Experts)

I've spent some time in the last few months outlining a few epistemics/AI/EA projects I think could be useful.

Link here.

I'm not sure how to best write about these on the EA Forum / LessWrong. They feel too technical and speculative to gain much visibility.

But I'm happy for people interested in the area to see them. Like with all things, I'm eager for feedback.

Here's a brief summary of them, written by Claude.

---

1. AI-Assisted Auditing

A system where AI agents audit humans or AI systems, particularly for organizations involved in AI development. This could provide transparency about data usage, ensure legal compliance, flag dangerous procedures, and detect corruption while maintaining necessary privacy.

2. Consistency Evaluations for Estimation AI Agents

A testing framework that evaluates AI forecasting systems by measuring several types of consistency rather than just accuracy, enabling better comparison and improvement of prediction models. It's suggested to start with simple test sets and progress to adversarial testing methods that can identify subtle inconsistencies across domains.

3. AI for Epistemic Impact Estimation

An AI tool that quantifies the value of information based on how it improves beliefs for specific AIs. It's suggested to begin with narrow domains and metrics, then expand to comprehensive tools that can guide research prioritization, value information contributions, and optimize information-seeking strategies.

4. Multi-AI-Critic Document Comments & Analysis

A system similar to "Google Docs comments" but with specialized AI agents that analyze documents for logical errors, provide enrichment, and offer suggestions. This could feature a repository of different optional open-source agents for specific tasks like spot-checking arguments, flagging logical errors, and providing information enrichment.

5. Rapid Prediction Games for RL

Specialized environments where AI agents trade or compete on predictions through market mechanisms, distinguishing between Information Producers and Consumers. The system aims to both evaluate AI capabilities and provide a framework for training better forecasting agents through rapid feedback cycles.

6. Analytics on Private AI Data

A project where government or researcher AI agents get access to private logs/data from AI companies to analyze questions like: How often did LLMs lie or misrepresent information? Did LLMs show bias toward encouraging user trust? Did LLMs employ questionable tactics for user retention? This addresses the limitation that researchers currently lack access to actual use logs.

7. Prediction Market Key Analytics Database

A comprehensive analytics system for prediction markets that tracks question value, difficulty, correlation with other questions, and forecaster performance metrics. This would help identify which questions are most valuable to specific stakeholders and how questions relate to real-world variables.

8. LLM Resolver Agents

A system for resolving forecasting questions using AI agents with built-in desiderata including: triggering experiments at specific future points, deterministic randomness methods, specified LLM usage, verifiability, auditability, proper caching/storing, and sensitivity analysis.

9. AI-Organized Information Hubs

A platform optimized for AI readers and writers where systems, experts, and organizations can contribute information that is scored and filtered for usefulness. Features would include privacy levels, payment proportional to information value, and integration of multiple file types.
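As a rough illustration of idea 2, here is a sketch of a few consistency checks in Python. It assumes a hypothetical forecaster that maps any question string to a probability, and a made-up convention for phrasing compound questions (`NOT (...)`, `(...) AND (...)`, `(...) OR (...)`); the 0.02 tolerance is arbitrary. It only covers negation, conjunction, and disjunction coherence; other consistency types would need their own checks.

```python
from typing import Callable

# Hypothetical forecaster interface: question string -> probability in [0, 1].
Forecaster = Callable[[str], float]


def consistency_violations(f: Forecaster, a: str, b: str, tol: float = 0.02) -> dict[str, float]:
    """Return each consistency check whose gap exceeds `tol` (empty dict = consistent)."""
    p_a, p_not_a = f(a), f(f"NOT ({a})")
    p_b = f(b)
    p_ab = f(f"({a}) AND ({b})")
    p_a_or_b = f(f"({a}) OR ({b})")
    checks = {
        # Negation: P(A) + P(not A) should equal 1.
        "negation": abs(p_a + p_not_a - 1.0),
        # Conjunction: P(A and B) can't exceed either P(A) or P(B).
        "conjunction": max(0.0, p_ab - min(p_a, p_b)),
        # Inclusion-exclusion: P(A or B) = P(A) + P(B) - P(A and B).
        "disjunction": abs(p_a_or_b - (p_a + p_b - p_ab)),
    }
    return {name: gap for name, gap in checks.items() if gap > tol}
```

With a toy forecaster that answers 0.6 for `A` but 0.3 for `NOT (A)`, the negation check flags a gap of about 0.1. None of these checks require waiting for questions to resolve, which is what lets them complement accuracy-based scoring.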

"AIs doing Forecasting"[1] has become a major part of the EA/AI/Epistemics discussion recently.

I think a logical extension of this is to expand the focus from forecasting to evaluation.

Forecasting typically asks questions like, "What will the GDP of the US be in 2026?"

Evaluation tackles partially-speculative assessments, such as:

- "How much economic benefit did project X create?"

- "How useful is blog post X?"

I'd hope that "evaluation" could function as "forecasting with extra steps." The forecasting discipline excels at finding the best epistemic procedures for uncovering truth[2]. We want to maintain these procedures while applying them to more speculative questions.

Evaluation brings several additional considerations:

- We need to identify which evaluations to run from a vast space of useful and practical options.

- Evaluations often disrupt the social order, requiring skillful management.

- Determining the best ways to "resolve" evaluations presents greater challenges than resolving forecast questions.

I've been interested in this area for 5+ years but struggled to draw attention to it—partly because it seems abstract, and partly because much of the necessary technology wasn't quite ready.

We're now at an exciting point where creating LLM apps for both forecasting and evaluation is becoming incredibly affordable. This might be a good time to spotlight this area.

There's a curious gap now where we can, in theory, envision a world with sophisticated AI evaluation infrastructure, yet discussion of this remains limited. Fortunately, researchers and enthusiasts can fill this gap, one sentence at a time.

[1] As opposed to [Forecasting About AI], which is also common here.

[2] Or at least, do as good a job as we can.

In ~2014, one major topic among effective altruists was "how to live for cheap."

There wasn't much funding, so it was understood that a major task for doing good work was finding a way to live with little money.

Money gradually increased, peaking with FTX in 2022.

Now I think it might be time to bring back some of the discussions about living cheaply.

Arguably, around FTX, it was better. EA and FTX both had strong brands for a while. And there were worlds in which the risk of failure was low.

I think it's generally quite tough to get this aspect right, though. I believe that, traditionally, charities are reluctant to associate their brands with large companies, due to the risks and downsides. We don't often see partnerships between companies and charities (or, say, highly ideological groups); I think one reason is that such partnerships are rarely in the interests of both parties.

Typically companies want to tie their brands to very top charities, if anyone. But now EA has a reputational challenge, so I'd expect that few companies/orgs want to touch "EA" as a thing.

Arguably, influencers are often a safer option. Note that EA groups like GiveWell and 80k are already doing partnerships with influencers: there's a decent variety of smart YouTube channels and podcasts that run advertisements for 80k/GiveWell. I feel pretty good about much of this.

Arguably influencers are crafted in large part to be safe bets. As in, they're very incentivized to not go crazy, and they have limited risks to worry about (given they represent very small operations).

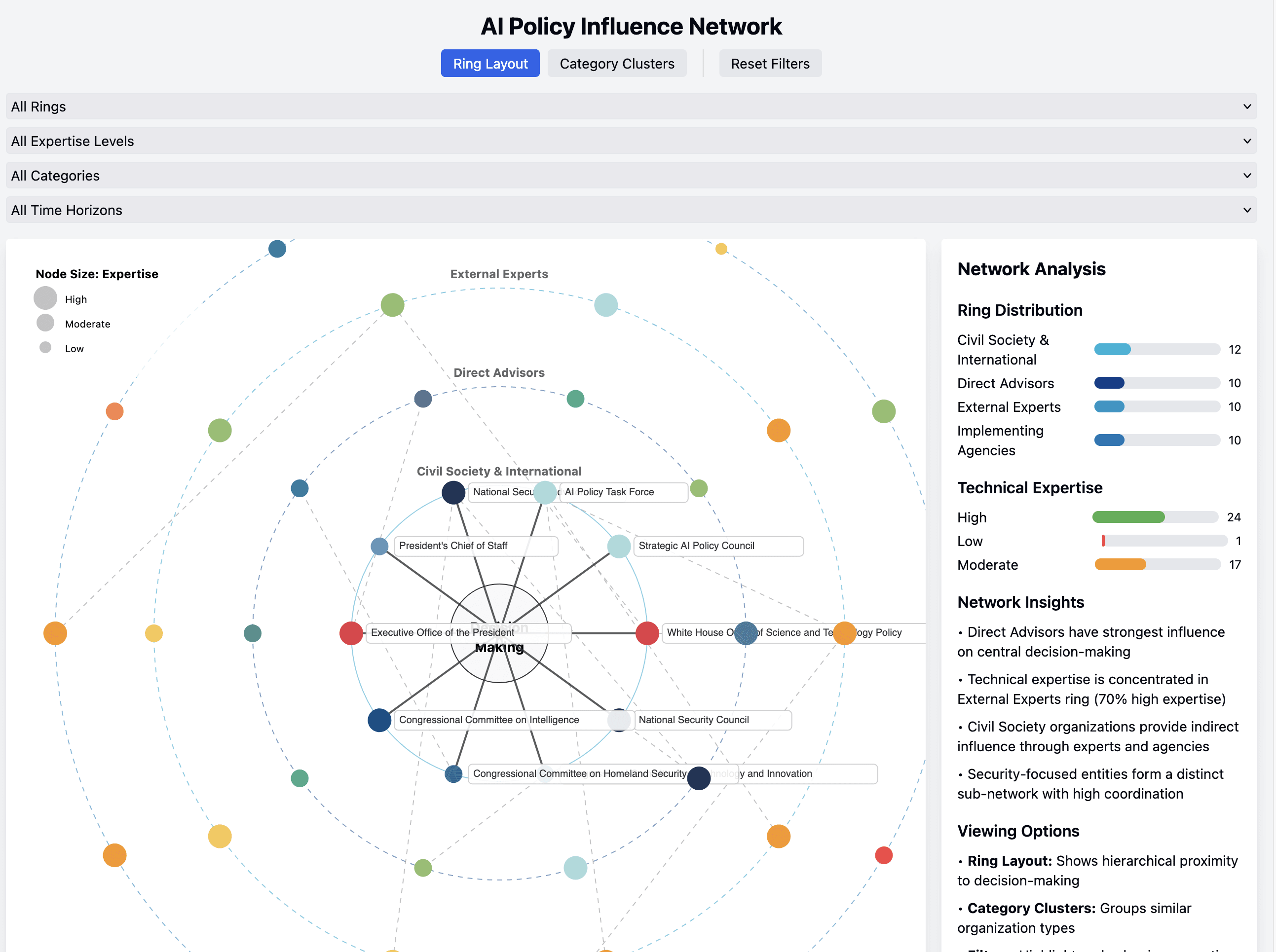

I just had Claude do three attempts at what a version of the "Voice in the Room" chart would look like as an app, targeting AI Policy. The app is clearly broken, but I think it can act as an interesting experiment.

Here the influencing parties are laid out in concentric rings, with lines connecting related organizations. There's also a lot of other information here.

I mainly agree.

I was previously addressing Michael's more limited point, "I don't think government competence is what's holding us back from having good AI regulations, it's government willingness."

All that said, separately, I think that "increasing government competence" is often a good bet, as it just comes with a long list of benefits.

But if one believes that AI will happen soon, and that a major bottleneck is "getting the broad public to trust the US government more, with the purpose of then encouraging AI reform", that seems like a dubious strategy.

(Potential research project, curious to get feedback)

I've been thinking a lot about how to do quantitative LLM evaluations of the value of various (mostly-EA) projects.

We'd have LLMs give their best guesses at the value of various projects/outputs. These would be mediocre at first, but help us figure out how promising this area is, and where we might want to go with it.

The first idea that comes to mind is "Estimate the value in terms of [dollars, from a certain EA funder] as a [probability distribution]". But this quickly becomes a mess. I think this couples a few key uncertainties into one value. This is probably too hard for early experiments.

A more elegant option would be "relative value functions". These are theoretically nicer and help split up some of the key uncertainties, but the infrastructure would require a lot of technical investment.

One option that might be interesting is asking for a simple rank order. "Just order these projects in terms of the expected value." We can definitely score rank orders, even though doing so is a bit inelegant.
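For scoring rank orders, one simple option (chosen here for illustration, not something specified above) is Spearman's rank correlation against a reference ordering. A minimal Python sketch, assuming both orderings contain the same unique items:

```python
def spearman_score(submitted: list[str], reference: list[str]) -> float:
    """Spearman rank correlation between two orderings of the same unique items.

    1.0 means identical orderings; -1.0 means one is the reverse of the other.
    """
    if sorted(submitted) != sorted(reference):
        raise ValueError("both orderings must contain exactly the same items")
    n = len(reference)
    if n < 2:
        return 1.0
    ref_rank = {item: i for i, item in enumerate(reference)}
    # Sum of squared differences between each item's position in the two lists.
    d_squared = sum((i - ref_rank[item]) ** 2 for i, item in enumerate(submitted))
    # Closed-form Spearman formula, valid when there are no ties.
    return 1 - 6 * d_squared / (n * (n**2 - 1))
```

Swapping the top two of four items scores 0.8, while a fully reversed list scores -1.0. Kendall's tau, which counts disagreeing pairs, would be a reasonable alternative.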

So one experiment I'm imagining is:

1. We come up with a list of interesting EA outputs, say a combination of blog posts, research articles, interventions, etc. From this, we form a list of maybe 20 to 100 elements. These become public.

2. We then ask people to compete to rank these. A submission would be [an ordering of all the elements] and an optional [document defending their ordering].

3. We feed all of the entries in (2) into an LLM evaluation system. This would come with a lengthy predefined prompt. It would take in all of the provided orderings and all the provided defenses, then output its own ordering.

4. We then score all of the entries in (2), based on how well they match the result of (3).

5. The winner gets a cash prize. Ideally, all submissions would become public.
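The competition steps could be wired together roughly as follows. This is a sketch under heavy assumptions: `placeholder_evaluator` is a hypothetical stand-in for the LLM evaluation step that simply combines the submitted orderings with a Borda count (ignoring the defenses), and entries are scored by the fraction of item pairs they order the same way as the evaluator. All names and the scoring rule are illustrative.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Submission:
    author: str
    ordering: list[str]  # all items, best first
    defense: str = ""    # optional document defending the ordering


def placeholder_evaluator(subs: list[Submission]) -> list[str]:
    """Hypothetical stand-in for the LLM evaluation step.

    A real version would send a long predefined prompt plus every ordering
    and defense to an LLM. Here we just combine orderings with a Borda count.
    """
    points: dict[str, int] = {}
    for s in subs:
        n = len(s.ordering)
        for pos, item in enumerate(s.ordering):
            points[item] = points.get(item, 0) + (n - pos)
    # Highest total first; ties broken alphabetically.
    return sorted(points, key=lambda item: (-points[item], item))


def pairwise_agreement(ordering: list[str], reference: list[str]) -> float:
    """Fraction of item pairs that `ordering` ranks in the same order as `reference`."""
    pos = {item: i for i, item in enumerate(ordering)}
    pairs = list(combinations(reference, 2))  # in each (a, b), a is ranked above b
    if not pairs:
        return 1.0
    return sum(pos[a] < pos[b] for a, b in pairs) / len(pairs)


def run_competition(subs: list[Submission]) -> tuple[str, dict[str, float]]:
    reference = placeholder_evaluator(subs)  # evaluator produces its own ordering
    scores = {s.author: pairwise_agreement(s.ordering, reference) for s in subs}
    winner = max(scores, key=scores.get)     # first author wins any tie
    return winner, scores
```

One design caveat: an evaluator that only averages the entries rewards matching the crowd, so the real LLM step would presumably need to weigh the defenses and its own judgment as well.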

This is similar to this previous competition we did.

Questions:

1. "How would you choose which projects/items to analyze?"

One option could be to begin with a mix of well-regarded posts on the EA Forum. Maybe we keep things to a limited domain for now (just X-risk), but cover a spectrum of karma levels.

2. "Wouldn't the LLM do a poor job? Why not humans?"

Having human judges at the end of this would add a lot of cost. It could easily make the project 2x as expensive. Also, I think it's good for us to learn how to use LLMs for evaluating these competitions, as it has more long-term potential.

3. "The resulting lists would be poor quality"

I think the results would be interesting, for a few reasons. I'd expect the results to be better than what many individuals would come up with. I also think it's really important that we start somewhere. It's very easy to delay things until we have something perfect, and then for that never to happen.

~~It seems like recently (say, the last 20 years) inequality has been rising.~~ (Editing, from comments) Right now, the top 0.1% of wealthy people in the world are holding on to a very large amount of capital.

(I think this is connected to the fact that certain kinds of inequality have increased in the last several years, but I realize now my specific crossed-out sentence above led to a specific argument about inequality measures that I don't think is very relevant to what I'm interested in here.)

On the whole, it seems like the wealthy donate incredibly little (a median of less than 10% of their wealth), and recently they've been good at keeping their money from getting taxed.

I don't think that people are getting less moral, but I think it should be appreciated just how much power and wealth is in the hands of the ultra wealthy now, and how little of value they are doing with that.

Every so often I discuss this issue on Facebook or other places, and I'm often surprised by how much sympathy people in my network have for these billionaires (not the most altruistic few, but these people on the whole). I suspect that a lot of this comes partially from [experience responding to many mediocre claims from the far-left] and [living in an ecosystem where the wealthy class is able to subtly use their power to gain status from the intellectual class.]

The top 10 known billionaires easily have $1T now. I'd guess that all EA-related donations in the last 10 years have been less than around $10B. (GiveWell says they have helped move $2.4B.) 10 years ago, I assumed that as word got out about effective giving, many more rich people would start doing that. At this point, that's looking less likely. I think the world has quite a bit more wealth, more key problems, and more understanding of how to deal with them than it ever had before, but this still hasn't been enough to make much of a dent in effective donation spending.

At the same time, I think it would be a mistake to assume this area is intractable. While it might not have improved much, in fairness, I think there has been little dedicated and smart effort to improve it. I am very familiar with programs like The Giving Pledge and Founders Pledge. While these are positive, I suspect they absorb limited total funding (<$30M/yr, for instance). They also follow one particular, highly cooperative strategy. I think most people working in this area are in positions where they need to be highly sympathetic to a lot of these people, which I think leaves a gap for more cynical or confrontational thinking.

I'd be curious to see the exploration of a wide variety of ideas here.

In theory, if we could move from these people donating say 3% of their wealth, to say 20%, I suspect that could unlock enormous global wins. Dramatically more than anything EA has achieved so far. It doesn't even have to go to particularly effective places - even ineffective efforts could add up, if enough money is thrown at them.

Of course, this would have to be done gracefully. It's easy to imagine a situation where the ultra-wealthy freak out and attack all of EA or similar. I see work to curtail factory farming as very analogous, and expect that a lot of EA work on that issue has broadly taken a sensible approach here.

From The Economist, on "The return of inheritocracy"

> People in advanced economies stand to inherit around $6trn this year—about 10% of GDP, up from around 5% on average in a selection of rich countries during the middle of the 20th century. As a share of output, annual inheritance flows have doubled in France since the 1960s, and nearly trebled in Germany since the 1970s. Whether a young person can afford to buy a house and live in relative comfort is determined by inherited wealth nearly as much as it is by their own success at work. This shift has alarming economic and social consequences, because it imperils not just the meritocratic ideal, but capitalism itself.

> More wealth means more inheritance for baby-boomers to pass on. And because wealth is far more unequally distributed than income, a new inheritocracy is being born.