Introduction:

The (highly interrelated) effective altruist and Rationalist communities are very small on a global scale. Therefore, in general, most intelligence, skill and expertise is outside of the community, not within it.

I don’t think many people will disagree with this statement. But sometimes it’s worth reminding people of the obvious, and also it is worth quantifying and visualizing the obvious, to get a proper feel for the scale of the difference. I think some people are acting like they have absorbed this point, and some people definitely are not.

In this post, I will try and estimate the size of these communities. I will compare how many smart people are in the community vs outside the community. I will do the same for people in a few professions, and then I will go into controversial mode and try and give some advice that I think might follow from fully absorbing these results.

How big is EA and rationalism

To compare EA/rats against the general population, we need to get a taste for the size of each community. Note that EA and Rationalism are different, but highly related and overlapping communities, with EA often turning to rationalism for its intellectual base and beliefs.

It is impossible to give an exact answer to the size of each community, because nobody has a set definition of who counts as “in” the community. This gets even more complicated if you factor in the frequently used “rationalist adjacent” and “EA adjacent” self labels. For the sake of this article, I’m going to grab a pile of different estimates from a number of sources, and then just arbitrarily pick a number.

Recently JWS placed an estimate of the EA size as the number of “giving what we can” pledgees, at 8983. Not everyone who is in EA signs the pledge. But on the flipside, not everyone who signs the pledge is actively involved in EA.

A survey series from 2019 tried to estimate this same question. They estimated a total of 2315 engaged EAs and 6500 active EAs in the community, with 1500 active on the EA forum.

Interestingly, there has also been an estimate of how many people had even heard of effective altruism, finding 6.7% of the US adult population, and 7.9% of top university students, had heard of EA. I would assume this has gone up since then due to various scandals.

We can also look at the subscriber counts to various subreddits, although clicking a “subscribe” button once is not the same thing as being a community member. It also doesn’t account for people that leave. On the other hand, not everyone in a community joins their subreddit.

r/effectivealtruism: 27k

r/slatestarcodex: 64k

r/lesswrong: 7.5k

The highest estimate here would be r/slatestarcodex. However, this is probably inflated by the several-year period when the subreddit hosted a “culture war” thread for debate on heated topics, which attracted plenty of non-rationalists. Interestingly, the anti-rationalist subreddit r/sneerclub had 19k subscribers, making it one of the most active communities related to rationalism before the mods shut it down.

The (unofficial?) EA discord server has around 2000 members.

We can also turn to surveys:

The lesswrong survey in 2023 only had 588 respondents, of which 218 had attended a meetup.

The EA survey in 2022 had 3567 respondents.

The Astralcodexten survey for 2024 had 5,981 respondents, of which 1174 were confirmed paid subscribers and 1392 had been to an SSC meetup.

When asked about what they identified as, 2536 people identified as “sort of” Lesswrong identified, while only 736 definitively stated they were. Similarly, 1908 people said they were “sort of” EA identified, while 716 identified as such without qualification. I would expect large overlap between the two groups.

These surveys provide good lower bounds for community size. They tell us less about what the total number is. These latter two surveys were generally well publicised on their respective communities, so I would assume they caught a decent chunk of each community, especially the more dedicated members. One article estimated a 40% survey response rate when looking at EA orgs.

The highest estimate I could find would be the number of twitter followers of Lesswrong founder Eliezer Yudkowsky, at 178 thousand. I think it would be a mistake to claim this as a large fraction of the number of rationalists: a lot of people follow a lot of people, and not every follow is actually a real person. Steven Pinker, for example, has 850 thousand followers, with no large scale social movement at his back.

I think this makes the community quite small. For like to like comparisons, the largest subreddit (r/slatestarcodex on 64k) is much smaller than r/fuckcars, a community for people who hate cars and car-centric design with 443k users. Eliezer has half as many twitter followers as this random leftist with a hippo avatar. The EA discord has the same number of members as the discord for this lowbrow Australian comedy podcast.

Overall, going through these numbers, I would put the number at about 10,000 people who are sorta in the EA or rationalist community, but I’m going to round this all the way up to 20,000 to be generous and allow for people who dip in and out. If you only look at dedicated members, the number is much lower; if you include anybody that has interacted with EA ever, the number would be much higher.

Using this generous number of 20000, we can look at the relative size of the community.

Looking at the whole planet, with roughly 8 billion people, for every 1 person in this community, there are about four hundred thousand people out of it. If we restrict this to the developed world (which almost everybody in EA comes from), this becomes 1 in 60000 people.

If we go to just America, we first have to estimate what percentage are in the US. The surveys have yielded 35% in the EA survey, 50% in the lesswrong survey, 57% in the astralcodex survey. For the purpose of this post, I’ll just put it at 50% (it will not significantly affect the results). So, in the US, the community represents 1 in 33,000 people.
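As a quick check on the ratios above, here is a minimal sketch of the arithmetic. The world, developed-world and community figures are the round numbers used in this post; the US population of about 330 million is my assumption, since the post does not state it directly.

```python
# Rough head-count ratios. All inputs are the post's round numbers except
# US_POPULATION, which is an assumed figure of about 330 million.
WORLD_POPULATION = 8_000_000_000
DEVELOPED_WORLD_POPULATION = 1_200_000_000
US_POPULATION = 330_000_000  # assumption, not stated in the post

COMMUNITY_SIZE = 20_000      # the post's deliberately generous estimate
US_SHARE = 0.50              # rounded from the 35% / 50% / 57% survey figures


def one_in(population: int, members: float) -> float:
    """Return N such that the community is roughly 1 in N of the population."""
    return population / members


world_ratio = one_in(WORLD_POPULATION, COMMUNITY_SIZE)
developed_ratio = one_in(DEVELOPED_WORLD_POPULATION, COMMUNITY_SIZE)
us_ratio = one_in(US_POPULATION, COMMUNITY_SIZE * US_SHARE)

print(f"World:           1 in {world_ratio:,.0f}")
print(f"Developed world: 1 in {developed_ratio:,.0f}")
print(f"United States:   1 in {us_ratio:,.0f}")
```

Changing `US_SHARE` to 0.35 or 0.57 moves the US ratio somewhat, but it stays in the tens of thousands either way.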

What about smart people?

Okay, that’s everyone in general. But what if we’re only interested in smart people?

First off, I want to caveat that “IQ” and “intelligence” are not the same thing. Equating the two is a classic example of the streetlight effect: IQ scores are relatively stable and so you can use them for studies, whereas intelligence is a more nebulous, poorly defined concept. If you score low on IQ tests, you are not doomed, and if you score highly on IQ tests, that does not make you smart in the colloquial sense: over the years I have met many people with high test scores that I would colloquially call idiots. See my mensa post for further discussion on this front.

However, IQ is easier to work with for statistics, so for the sake of this section, let’s pretend to be IQ chauvinists who only care about people with high IQs.

This seems like it would benefit the rationalist community a lot. The rationalist community is known for, when asked on surveys, giving very high IQ scores, with medians more than two SDs above average. This has been the source of some debate. Scott Alexander weighed in and gave his guess as an average of 128, which would put the median rationalist at roughly MENSA level. This still feels wrong to me: if they’re so smart, where are the Nobel laureates? The famous physicists? And why does arguing on Lesswrong make me feel like banging my head against the wall? However, these exact same objections apply to MENSA itself, with an even larger IQ average. So perhaps they really are that smart, it’s just that high IQ scores, on their own, are not all that useful.

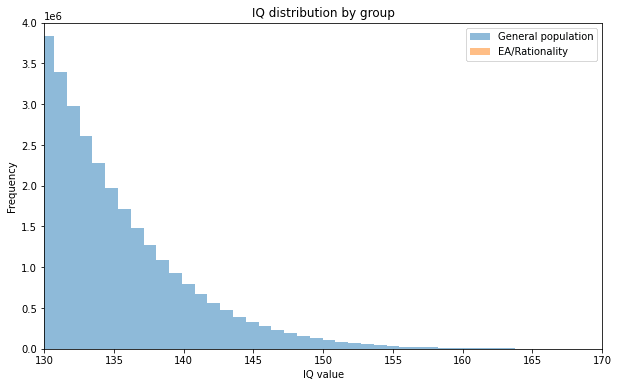

Let’s take the 20000 or so rat/EAers, take the 128 median IQ estimate at face value, and compare that to the general population of the developed world (roughly 1.2 billion people), assuming an average IQ of 100. We will assume a normal distribution and an SD of 15 for both groups. Obviously, this is a rough approximation. Now we can put together a histogram of how many people are at each IQ level, restricting our view to only very smart people with more than 130 IQ:

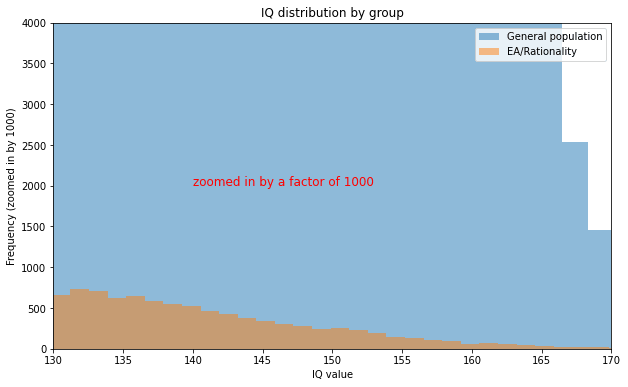

I promise you I didn’t forget the rationalists! To find them, let’s zoom in by a factor of one thousand:

In numbers, the community has roughly 1 in 10000 of the MENSA level geniuses in the developed world. Not bad, but greatly drowned out by the greater public.
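For anyone who wants to poke at this comparison, here is a minimal sketch of the tail calculation under the stated assumptions (normal distributions, SD of 15, means of 100 and 128, a cut-off of 130). The function name `tail_fraction` is just illustrative. The result is sensitive to the cut-off and to the community-size estimate, so it should be read as an order-of-magnitude check rather than a reproduction of the figure quoted above.

```python
import math


def tail_fraction(threshold: float, mean: float, sd: float = 15.0) -> float:
    """Fraction of a normal(mean, sd) population scoring above threshold."""
    z = (threshold - mean) / sd
    # Upper-tail probability of a standard normal, via the complementary
    # error function from the standard library.
    return 0.5 * math.erfc(z / math.sqrt(2.0))


THRESHOLD = 130                                   # cut-off used in the histogram
GENERAL_POP, GENERAL_MEAN = 1_200_000_000, 100    # developed world
COMMUNITY_POP, COMMUNITY_MEAN = 20_000, 128       # generous community estimate

general_high = GENERAL_POP * tail_fraction(THRESHOLD, GENERAL_MEAN)
community_high = COMMUNITY_POP * tail_fraction(THRESHOLD, COMMUNITY_MEAN)

print(f"General population above {THRESHOLD}: {general_high:,.0f}")
print(f"Community above {THRESHOLD}:          {community_high:,.0f}")
print(f"Community share: about 1 in {general_high / community_high:,.0f}")
```

Raising `THRESHOLD` makes the community's share larger, since a group centred on 128 has a fatter tail relative to its size, but the general population still dominates by orders of magnitude.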

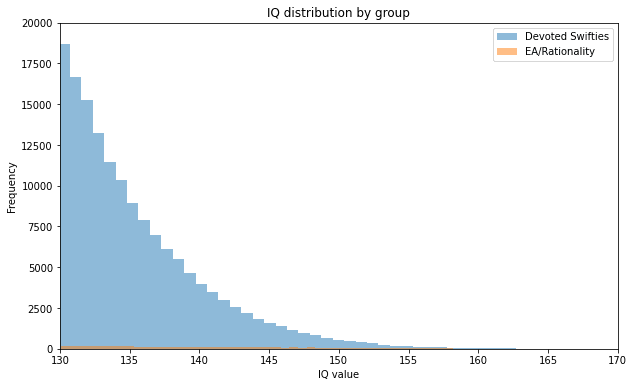

Just for fun, let’s compare this to another group of devoted fanatics: Swifties.

A poll estimated that 22% of the US population identify as Taylor Swift fans, which is 66 million people. Let’s classify the top 10% most devoted fans as the “swifties”, which would be 6.6 million in the US alone. The Eras tour was attended by 4.35 million people, and that was famously hard to get tickets for, so this seems reasonable. Let’s say they have the same IQ distribution as the general population, and compare them to the US Rationalist/EA community:

The Swifties still win on sheer numbers. According to this (very rough) analysis, we would expect roughly 30 times as many genius swifties as there are genius rationalists. If only this significantly greater intellectual heft could be harnessed for good! Taylor, if you’re reading this, it’s time to start dropping unsolved mathematical problems as album easter eggs.
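The Swiftie comparison can be sketched the same way. This assumes a cut-off of 130 for “genius”, a US community of 10,000 (half of the generous 20,000), and the same normal-distribution assumptions as before; `fraction_above` is an illustrative helper, not anything from the original analysis.

```python
import math


def fraction_above(threshold: float, mean: float, sd: float = 15.0) -> float:
    """Upper-tail fraction of a normal(mean, sd) distribution."""
    z = (threshold - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2.0))


SWIFTIES_US = 6_600_000    # top 10% of the 66 million fans, per the post
COMMUNITY_US = 10_000      # half of the generous 20,000 estimate
THRESHOLD = 130            # assumed cut-off, matching the earlier histogram

swiftie_high = SWIFTIES_US * fraction_above(THRESHOLD, mean=100)
community_high = COMMUNITY_US * fraction_above(THRESHOLD, mean=128)
ratio = swiftie_high / community_high

print(f"Swifties above {THRESHOLD}:  {swiftie_high:,.0f}")
print(f"Community above {THRESHOLD}: {community_high:,.0f}")
print(f"Ratio: roughly {ratio:.0f} to 1")
```

Under these assumptions the ratio lands in the same ballpark as the “roughly 30 times” figure above.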

Note that I restricted this to the developed world for easier comparison, but there are significantly more smart people in the world at large.

Share of skilled professionals

Ok, let’s drop the IQ stuff now, and turn to skilled professionals.

The lesswrong survey conveniently has a breakdown of respondents by profession:

Computers (Practical): 183, 34.8%

Computers (AI): 82, 15.6%

Computers (Other academic): 32, 6.1%

Engineering: 29, 5.5%

Mathematics: 29, 5.5%

Finance/Economics: 22, 4.2%

Physics: 17, 3.2%

Business: 14, 2.7%

Other “Social Science”: 11, 2.1%

Biology: 10, 1.9%

Medicine: 10, 1.9%

Philosophy: 10, 1.9%

Law: 9, 1.7%

Art: 7, 1.3%

Psychology: 6, 1.1%

Statistics: 6, 1.1%

Other: 49, 9.3%

I’ll note that this was from a relatively small sample, but it was similar enough to the ACX survey that it seems reasonable. We will use the earlier figure of 10000 rat/EAs in the US, and assume the percentages in the survey are the same for the entire community.

I’ll look at three different professions: law, physics, and software.

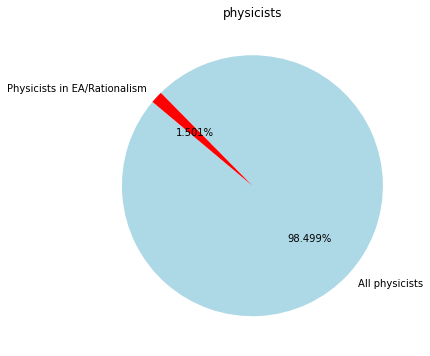

Physicists:

The total number of physicists in the US is 21000. It’s quite a small community. The percentage of physicists in the survey (3.2%), while low, is much higher than it is in the general population (0.006%), perhaps as a result of having lots of nerds and having a pop-science focus.

We will use the estimate of 10000 Rationalist/EAers in the US. 3.2% of this is 320 physicists. This seems kinda high to me, but remember that I’m being generous with my definition here: the number that are actively engaged on a regular basis is likely to be much lower than that (for example, only 17 physicists answered the lesswrong survey). It also may be that people are studying physics, but then going on to do other work, which is pretty common.

Despite all of that, this is still a small slice of the physicist population.

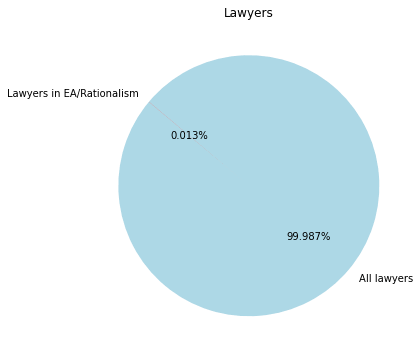

Lawyers:

The number of lawyers in the US is roughly 1.3 million, or 0.4% of the population. In rationalism/EA, this number is higher, at 1.7%. I think basically all white collar professions are overrepresented due to the nerdy nature of the community.

Going by our estimate, the number of lawyers in the US rationalist/EA community is about 170. Graphing this against the 1.3 million lawyers:

The lawyers in the community make up a minuscule 0.01% of the total lawyers in the US.

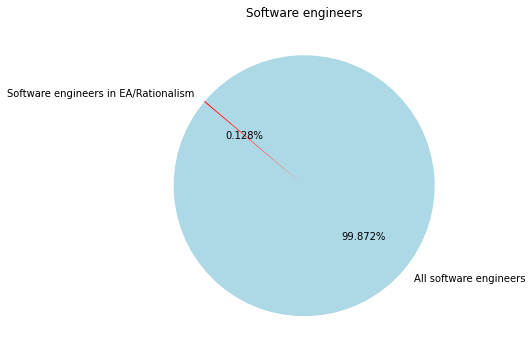

Software engineers:

There are 4.4 million software engineers in the US, or 1.3% of the population. In contrast, 56.5% of the lesswrong survey respondents worked with computers, another dramatic overrepresentation, reflecting the particular appeal of the community to tech nerds. This represents an estimated 5650 software engineers in the US.

This means that despite the heavy focus on software and AI, the community still only makes up 0.13% of the population of software engineers in the US.

When you’re in an active community, where half the people are software engineers discussing AI and software constantly, it can certainly feel like you have a handle on what software engineers in general think about things. But beware: You are still living in that tiny slice up there, a slice that was not randomly selected. What is true for your bubble may not be true for the rest of the world.
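The three profession estimates above all use the same two-step arithmetic, which can be sketched as follows. The survey shares and US totals are the figures quoted in this post; the function name `community_share` is just illustrative.

```python
US_COMMUNITY = 10_000  # the post's generous US estimate

# (share of LessWrong survey respondents, total US practitioners), per the post
PROFESSIONS = {
    "physicists": (0.032, 21_000),
    "lawyers": (0.017, 1_300_000),
    "software engineers": (0.565, 4_400_000),
}


def community_share(survey_share: float, us_total: int) -> tuple[float, float]:
    """Return (estimated community members, their share of the US profession)."""
    members = US_COMMUNITY * survey_share
    return members, members / us_total


for name, (survey_share, us_total) in PROFESSIONS.items():
    members, share = community_share(survey_share, us_total)
    print(f"{name}: ~{members:,.0f} in community, {share:.3%} of US total")
```

The key assumption is that the survey's profession mix holds for the whole community, which is a stretch given the small sample.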

Effective altruism:

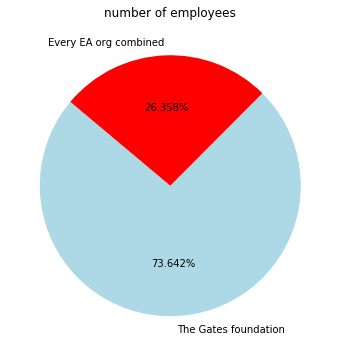

Okay, these are general categories. How about if we just looked at the entire point of EA: effective altruism, or evidence based philanthropy?

Well, why don’t we look at a different “effective altruist” organisation: the Gates foundation. You can disagree about the decisions and methods of the Gates foundation, but I think it’s clear that they think they are doing altruism that is evidence based and effective, and trying to find the best things to do with the money. They are doing a lot of similar actions to EA, such as large donation pledges. Both groups have been credited for saving lots of lives with targeted third world interventions, but have also been subject to significant scrutiny and criticism.

A post from 2021 (before the FTX crash) estimated there were about 650 people employed in EA orgs. The Gates foundation has 1818 employees:

This one organisation still has more people than all the EA organisations combined. Add to that all the other similar orgs, and the rise of the randomista movement as the most influential force in development aid, and it seems that the Effective Altruism movement only makes up a small fraction of “effective altruists”.

Are there any areas where EA captures the majority of the talent share? I would say the only areas are in fields that EA itself invented, or is close to the only group working on, such as longtermism or wild animal suffering.

Some advice for small communities:

Up till now, we’ve been in the “graphing the obvious” phase, where I establish that there is much more talent and expertise outside the community than in it. This applies to any small group; it does not mean the group is doomed and can’t accomplish anything.

But I think there are quite a few takeaways for a community that actually internalizes the idea of being a small fish in a big pond. I’m not saying people aren’t doing any of these already. For example, I’ve seen a few EA projects working in partnership with leading university labs, which I think is a great way to utilise outside expertise.

Check the literature

Have you ever had a great idea for an invention, only to look it up and find out it was already invented decades ago?

There are so many people around that chances are any new idea you have will already have been thought up. Academia embraces this fact thoroughly with its encouragement of citations. You are meant to engage with what has already been said or done, and catch yourself up. Only then can you identify the gaps in research that you can jump into. You want to be standing on the shoulders of giants, not reinventing the wheel.

This saves everybody a whole lot of time. But unfortunately a lot of articles in the ea/rat community seem to only cite or look at other blog posts in the same community. It has a severe case of “not invented here” syndrome. This is not to say they don’t ever cite outside sources, but when they do the dive is often shallow or incomplete. Part of the problem is that rationalists tend to valorise coming up with new ideas more than they do finding old ones.

Before doing any project or entering any field, you need to catch up on existing intellectual discussion on the subject. This is easiest to find in academia as it’s formally structured, but there is plenty to be found in books, blogs, podcasts and whatever that are not within your bubble.

The easiest way to break ground is to find underexplored areas and explore them. The amount of knowledge that is known is vast, but the amount that is unknown is far, far vaster.

Outside hiring

There have been a few posts on the EA forum talking about “outside hiring” as a somewhat controversial thing to encourage. I think the graphs above are a good case for outside hiring.

If you don’t consider outside hires, you are cutting out 99% or more of your applicant pool. This is very likely to exclude the best and most qualified people for the job, especially if the job is something like a lawyer for the reasons outlined above.

Of course, this is job dependent, and there are plenty of other factors involved, such as culture fit, level of trust, etc, that could favour the in-community members. But just be aware that a decision to only hire or advertise to ingroup members comes at a cost.

Consult outside experts

A while back I did an in-depth dive into Drexlerian nanotech. As a computational physicist, I had a handle on the theoretical side, but was sketchy on the experimental side. So I just… emailed the experimentalist. He was very friendly, answered all my questions, and was all around very helpful.

I think you’d be surprised at how many academics and experts are willing to discuss their subject matter. Most scientists are delighted when outsiders show interest in their work, and love to talk about it.

I won’t name names, but I have seen EA projects that failed for reasons that are not obvious immediately, but in retrospect would have been obvious to a subject matter expert. In these cases a mere one or two hour chat with an existing outside expert could save months of work and thousands of dollars.

The amount of knowledge outside your bubble outweighs the knowledge within it by a truly gargantuan margin. If you are entering a field that you are not already an expert in, it is foolish to not consult with the existing experts in that field. This includes paying them for consulting time, if necessary. This doesn’t mean every expert is automatically right, but if you are disagreeing with the expert about their field, you should be able to come up with a very good explanation as to why.

Insularity will make you dumber

It’s worth stating outright: Only talking within your ingroup will shut you off from a huge majority of the smart people of the world.

Rationalism loves jargon, including jargon that is just completely unnecessary. For example, the phrase “epistemic status” is a fun technique where you say how confident you are in a post you make. But it could be entirely replaced with the phrase “confidence level”, which means pretty much the exact same thing. This use of “shibboleths” is a fun way to promote community bonding. It also makes a lot of your work unreadable to people who haven’t been steeped in the movement for a very long time, thus cleaving off the rationalist movement from the vast majority of smart and skilled people in society. It gets insulated from criticism by the number of qualified people who can’t be arsed learning a new nerd language in order to tear apart your ideas.

If you primarily engage with stuff written in rationalist jargon, you are cutting yourself off from almost all the smart people in the world. You might object that you can still access the information in the outside world, from rationalists that look through it and communicate it in your language. But be clear that this is playing a game of Chinese whispers with knowledge: whenever information is filtered through a pop science lens, it gets distorted, with greater distortion occurring if the pop science communicator is not themselves a subject matter expert. And the information you do see will be selected: the community as a whole will have massive blindspots of information.

Read widely, read outside your bubble. And when I say “read outside your bubble”, I don’t mean just reading people in your bubble interpreting work outside your bubble. And in any subject where all or most of your information comes from your bubble, reduce your confidence about that subject by a significant degree.

In group “consensus” could just be selection effects

Physicists are a notoriously atheistic bunch. According to one survey, 79% of physicists do not believe in god. But conversely, that means that 21% of them do. If we go by the earlier figure of roughly 21000 physicists in the US, that means there are about 4400 professional physicists who believe in god. This is still a lot of people!

Imagine I started a forum aimed at high-IQ religious people. Typical activities involve reading bible passages, preaching faith, etc, which attracts a large population of religious physicists. I then poll the forum, and we find that we have hundreds of professional physicists, 95% of whom believe in God, all making convincing physics based arguments for that position. What can I conclude from this “consensus”? Nothing!

The point here is that the consensus of experts within your group will not necessarily tell you anything about the consensus of experts outside your group. This is easy to see in questions where we have polls and stuff, but on other questions the same effect may be secretly reinforcing false beliefs that just so happen to be selected for in the group.
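To make the selection effect concrete, here is a toy calculation for the hypothetical forum. The 21000 physicists and the 21% believer rate are the figures used in this post; the two join rates are numbers I made up purely for illustration, as is the function name `in_group_share`.

```python
def in_group_share(population: int, base_rate: float,
                   join_rate_yes: float, join_rate_no: float):
    """Return (joined believers, joined non-believers, believer share in forum)."""
    believers = population * base_rate
    others = population - believers
    joined_yes = believers * join_rate_yes
    joined_no = others * join_rate_no
    return joined_yes, joined_no, joined_yes / (joined_yes + joined_no)


PHYSICISTS = 21_000            # US physicists, per the post
BELIEVER_RATE = 0.21           # per the survey cited in the post
JOIN_RATE_BELIEVERS = 0.10     # hypothetical: 10% of believing physicists join
JOIN_RATE_OTHERS = 0.0014      # hypothetical: 0.14% of the rest join

joined_yes, joined_no, share = in_group_share(
    PHYSICISTS, BELIEVER_RATE, JOIN_RATE_BELIEVERS, JOIN_RATE_OTHERS
)
print(f"Believing physicists on the forum:     {joined_yes:.0f}")
print(f"Non-believing physicists on the forum: {joined_no:.0f}")
print(f"Share of forum physicists who believe: {share:.0%}")
```

With these made-up join rates the forum ends up with hundreds of physicists, about 95% of them believers, even though believers are only 21% of the profession. The in-group figure reflects who chose to join, not what physicists in general think.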

Within Rationalism, the obvious filter is the reverence for “the sequences”, an extremely large series of pop science blogposts. In its initial form, Lesswrong.com was basically a fanforum for these blogs. So obviously people that like the sequences are far more likely to be in the community than those that don’t. As a result, there is a consensus within rationalism that the core ideas of the sequences are largely true. But you can’t point to this consensus as good evidence that they actually are true, no matter how many smart ingroup members you produce to say this, because there could be hundreds of times as many smart people outside the community who think they are full of shit, and bounced off the community after a few articles. (Obviously, this doesn’t prove that they’re false either.)

You should be skeptical of claims only made in these spaces

In any situation where the beliefs of a small community are in conflict with the beliefs of outside subject matter experts, your prior (initial guess) should be high that your community is wrong.

It’s a simple matter: the total amount of expertise, intelligence, and resources outside of your community almost always eclipses the amount within it by a large factor. You need an explanation of why and how your community is overcoming this massive gap.

I’m not saying you can never beat the experts. For an example of a case where contrarianism was justified, we can take the replication crisis in the social sciences. Here, statisticians can justify their skepticism of the field: statistics is hard and social scientists are often inadequately trained in it, and we can look at the use of statistics and find that it is bad and could lead to erroneous conclusions. Many people were pointing out these problems before the crisis became publicized.

It’s not enough to identify a possible path for experts to be wrong, you also have to have decent evidence that this explanation is true. Like, you can identify that because scientists are more left wing, they might be more inclined to think that climate change is real and caused by humans. And it is probably true that this leads to a nonzero amount of bias in the literature. But is it enough bias to generate such an overwhelming consensus of evidence? Almost certainly not.

The default stance towards any contrarian stance should be skepticism. That doesn’t mean you shouldn’t try things: sometimes long shots actually do work out, and can pay off massively. But you should be realistic about the odds.

Conclusion

I’m not trying to be fatalist here, and say you shouldn’t try to discuss or figure things out, just because you are in a small community. Small communities can get a lot of impressive stuff done. But you should remember, and internalize, that you are small, and be appropriately humble in response. Your little bubble will not solve all the secrets of the universe. But if you are humble and clever, and look outwards rather than inwards, you can still make a small dent in the vast mine of unknown knowledge.

Related to this, Julius Bauman has given an excellent talk about inward facing vs outward facing communities. In general, you don't find inward facing communities among the large social movements who succeed at their goals.

I'm still very worried about the fact that the community hasn't seemingly caught up on this and is not promoting outward facing practices more than in the past. I myself often get advised to orient my work to the EA community when I actually want to be outward facing.

do you have a link to that talk? couldn't find anything online

Just wanted to bump this comment, I'm interested

Conclusion is probably valid, argument probably invalid? The argument proves too much — “Most smart and skilled people are outside of the Vienna Circle”

What are you saying it proves that is demonstrably untrue?

Just because a community is small and most smart people are outside of it doesn't mean that the community cannot have a disproportionately large impact. The Vienna Circle is one example (analytic philosophy and everything downstream of it would look very different without them) and they were somewhat insular. Gertrude Stein's Salons and the Chelsea Hotel might have had a similar impact on art and literature in the 20th century.

Importantly, I'm not saying EA is the same (arguably it's already too large to be included in that list), but the argument that statistically most smart people are outside of a small community is not a good argument for whether that community will be impactful or for whether that community should engage more with the rest of the world.

This seems like a straw man. Where does the OP claim EA is not impactful? Given his strong engagement with the movement, I assume he believes that it is.

Cherry picking a couple of small groups that achieved moderate success - one of whom entirely abandoned their research project after several decades, following sustained and well-argued attack from people outside the circle - doesn't seem like a good counterargument to the claim that being a small group should encourage humility.

I think there might be a misunderstanding here? My entire point is that OP's conclusions are valid (they're saying a lot of true things like "Small communities can get a lot of impressive stuff done" and "Your little bubble will not solve the all the secrets of the universe") but the argument for those conclusions is invalid.

The argument goes[1]: if you are a small community and you don't follow my advice then your impact is strongly limited.

My counter is a demonstration by counterexample. I argue that the argument can't be valid, since there exist small communities that don't follow OP's advice and that made a lot more than, in OP's words, "a small dent in the vast mine of unknown knowledge".

I hope the previous paragraph makes it clear why 'cherry picking' is appropriate here? To disprove an implication it's sufficient to produce an instance where the implication doesn't hold.

I guess here we have more of an object-level disagreement? Just because a philosophy eventually became canonised doesn't mean it was moderately successful. The fact that most of analytic philosophy was developed either in support or in opposition of logical positivism is pretty much the highest achievement possible in philosophy? And, according to the logic outlined above, it's not actually sufficient to explain away one of my counter-examples, to defend the original argument you actually have to explain away all of my counter-examples.

my paraphrase, would be curious if you disagree

My handwavey argument around this would be something like "it's very hard to stay caring about the main thing".

I would like more non-EAs who care about the main thing - maximising doing good - but not just more really talented people. If this is just a cluster of really talented people, I am not sure that we are different to universities or top companies - many of which don't achieve our aims.

I think expecting Nobel laureates is a bit much, especially given the demographics (these people are relatively young). But if you're looking for people who are publicly-legible intellectual powerhouses, I think you can find a reasonable number:

(Many more not listed, including non-central examples like Robin Hanson, Vitalik Buterin, Shane Legg, and Yoshua Bengio[2].)

And, like, idk, man. 130 is pretty smart but not "famous for their public intellectual output" level smart. There are a bunch of STEM PhDs, a bunch of software engineers, some successful entrepreneurs, and about the number of "really very smart" people you'd expect in a community of this size.

He might disclaim any current affiliation, but for this purpose I think he obviously counts.

Who sure is working on AI x-risk and collaborating with much more central rats/EAs, but only came into it relatively recently, which is both evidence in favor of one of the core claims of the post but also evidence against what I read as the broader vibes.

This list includes many people that, despite being well-known as very smart people in the EA/rationalist community, are not really “publicly-legible intellectual powerhouses.”

Taylor, Garrabrant and Demski publish articles on topics related to AI primarily, but as far as I could tell, none of them have NeurIPS or ICML papers. In comparison, the number of unique authors with papers featured on NeurIPS is more than 10,000 each year. Taylor and Demski have fewer than 4,000 Twitter followers, and Garrabrant does not seem to use Twitter. The three researchers have a few hundred academic citations each.

I find it hard to justify describing them as “publicly-legible intellectual powerhouses” when they seem, at a glance, not much more professionally impressive than great graduate students at top math or CS graduate programs (though such students are very impressive).

A better example of an EA-adjacent publicly-legible intellectual powerhouse in the ML area is Dan Hendrycks, who had a NeurIPS paper published in college and has at least 60 times more citations than those MIRI or former MIRI researchers you mentioned.

My claim is something closer to "experts in the field will correctly recognize them as obviously much smarter than +2 SD", rather than "they have impressive credentials" (which is missing the critically important part where the person is actually much smarter than +2 SD).

I don't think reputation has anything to do with titotal's original claim and wasn't trying to make any arguments in that direction.

Also... putting that aside, that is one bullet point from my list, and everyone else except Qiaochu has a Wikipedia entry, which is not a criterion I was tracking when I wrote the list but which I think decisively refutes the claim that the list includes many people who are not publicly-legible intellectual powerhouses. (And, sure, I could list Dan Hendrycks. I could probably come up with another twenty such names, even though I think they'd be worse at supporting the point I was trying to make.)

I strongly endorse the overall vibe/message of titotal's post here, but I'd add, as a philosopher, that EA philosophers are also a fairly professionally impressive bunch.

Peter Singer is a leading academic ethicist by any standards. The GPI in Oxford's broadly EA-aligned work is regularly published in leading journals. I think it is fair to say Derek Parfit was broadly aligned with EA, and a key influence on the actually EA philosophers, and many philosophers would tell you he was a genuinely great philosopher. Many of the most controversial EA ideas like longtermism have roots in his work. Longtermism is less like a view believed only by a few marginalised scientists, and more like say, a controversial new interpretation of quantum mechanics that most physicists reject, but some young people at top departments like and which you can publish work defending in leading journals.

Yeah "2 sds just isn't that big a deal" seems like an obvious hypothesis here ("People might over-estimate how smart they are" is, of course, another likely hypothesis).

Also of course OP was being overly generous by assuming that it's a normal distribution centered around 128. If you take a bunch of random samples of a normal distribution, and only look at subsamples with median 2 sds out, in approximately 0 subsamples will you find it equally likely to see +0 sds and +4 sds.

Wait, are you claiming +0 SD is significantly more likely than +4 SD in a subsample with median +2 SD, or are you claiming that +4 SD is more likely than +0 SD? And what makes you think so?

The former. I think it should be fairly intuitive if you think about the shape of the distribution you're drawing from. Here's the code, courtesy of Claude 3.5. [edit: deleted the quote block with the code because of aesthetics, link should still work].
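The code linked in the comment above is not reproduced in this thread. As a stand-in, here is a minimal Python sketch of the kind of simulation being described, not the original code. It assumes NumPy is available; the function name `compare_tails` and its parameters (subsample size, tolerance around the target median, band width around +0 and +4 SD) are illustrative choices rather than anything from the original. It draws many small subsamples from a standard normal, keeps only those whose median lands near +2 SD, and counts how many members fall near +0 SD versus near +4 SD.

```python
import numpy as np


def compare_tails(n_subsamples=500_000, subsample_size=5,
                  target_median=2.0, tolerance=0.25, band=0.5, seed=0):
    """Count members near +0 SD vs +4 SD in subsamples with median near +2 SD.

    All parameter values are illustrative assumptions, not taken from the thread.
    Returns (number of kept subsamples, count near +0 SD, count near +4 SD).
    """
    rng = np.random.default_rng(seed)

    # Each row is one random subsample from a standard normal (units are SDs).
    draws = rng.standard_normal((n_subsamples, subsample_size))

    # Keep only the subsamples whose median is within `tolerance` of the target.
    medians = np.median(draws, axis=1)
    kept = draws[np.abs(medians - target_median) < tolerance]

    # Count individual members within `band` of +0 SD and of +4 SD.
    near_zero = int(np.sum(np.abs(kept - 0.0) < band))
    near_four = int(np.sum(np.abs(kept - 4.0) < band))
    return kept.shape[0], near_zero, near_four


if __name__ == "__main__":
    kept, near_zero, near_four = compare_tails()
    print(f"subsamples kept: {kept}")
    print(f"members near +0 SD: {near_zero}")
    print(f"members near +4 SD: {near_four}")
```

Under these assumptions, the kept subsamples should contain far more members near +0 SD than near +4 SD: values below the median come from the bulk of the distribution, while values above it come from the thin far tail.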

I worry that this is an incredibly important factor in much EA longtermist/AI safety/X-risk thinking. In conversation, EAs don't seem to appreciate how qualitative the arguments for these concerns are (even if you assume a totalising population axiology) and how dangerous to epistemics that is in conjunction with selection effects.

Could you give some examples?

Do you mean examples of such conversations, of qualitative arguments for those positions, or of danger to epistemics from the combination?

Examples of arguments you see as having this issue

Off the top of my head:

* Any moral philosophy arguments that imply the long term future dwarfs the present in significance (though fwiw I agree with these arguments - everything below I have at least mixed feelings on)

* The classic arguments for 'orthogonality' of intelligence and goals (which I criticised here)

* The classic arguments for instrumental convergence towards certain goals

* Claims about the practical capabilities of future AI agents

* Many claims about the capabilities of current AI agents, e.g. those comparing them to intelligent high schoolers/university students (when you can quickly see trivial ways in which they're nowhere near the reflexivity of an average toddler)

* Claims that working on longtermist-focused research is likely to be better for the long term than working on nearer term problems

* Claims that, within longtermist-focused research, focusing on existential risks (in the original sense, not the very vague 'loss of potential' sense) is better than working on ways to make the long term better conditional on it existing (or perhaps looking for ways to do both)

* Metaclaims about who should be doing such research, e.g. on the basis that they published other qualitative arguments that we agree with

* Almost everything on the list linked in the above bullet

[edit: I forgot * The ITN framework]

Executive summary: The effective altruist and rationalist communities are very small compared to the global population, containing only a tiny fraction of smart and skilled people across various professions, which has important implications for how these communities should operate and view their capabilities.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

This seems like a variation on "If you’re so smart, why aren’t you rich?" The responses to these two prompts seem quite similar. Being smarter tends to lead toward more money (and more Nobel prizes), all else held equal, and there are many factors aside from intelligence that influence these outcomes.

There does seem to be some research supporting the idea that non-intelligence factors play the predominant role in success, but I have to confess that I have not studied this area, and I only have vague impressions as to how reality functions here.

Thanks for writing this :)

I haven’t had a chance to read it all yet, so there is a chance this point is covered in the post, but I think more EA influence vs non-EA influence (let’s call them “normies”) within AI Safety could actually be bad.

For example, a year ago the majority of normies would have seen Lab jobs being promoted in EA spaces and gone “nah that’s off”, but most of EA needed more nuance and time to think about it.

This is a combo of style of thinking / level of conscientiousness that I think we need less of, and it leads me back to my initial point (there are other dimensions around which kind of normies I think we should target within AI Safety, but that’s its own thing altogether).

I think this post serves as a very good reminder. Thank you for writing this.

I like the EA community a lot, but it is helpful to have a reminder that we aren't so special, we don't have all the answers, and we should be willing to seek 'outside' help when we lack the experience or expertise. It is easy to get too wrapped up in a simplistic narrative.

@Lukeprog posted this a few decades ago: "neglected rationalist virtue of scholarship"

I think it's mostly just cognitive science, which is Daniel Kahneman and others (which is well known), a good bunch of linguistics (which I have heard is well known), and anti-philosophy (because we dislike philosophy as it is done); the rest is just ethics and objective Bayesianism, with a Quinean twist.

I think there is a difference between epistemic status and confidence level; I could be overtly confident and still buy the lottery ticket while knowing it won't work. I think there is a difference between social and epistemic confidence, so it's better to specify.