Summary

I describe and review four broad potential types of subjective welfare: 1) hedonic states, i.e. pleasure and unpleasantness, 2) felt desires, both appetitive and aversive, 3) belief-like preferences, i.e. preferences as judgements or beliefs about value, and 4) choice-based preferences, i.e. what we choose or would choose. My key takeaways are the following:

- Belief-like preferences and choice-based preferences seem unlikely to be generally comparable, and even comparisons between humans can be problematic.

- Hedonic states, felt desires and belief-like preferences all plausibly matter intrinsically, possibly together in a morally pluralistic theory, or unified as subjective appearances of value or reasons or even under belief-like preferences.

- Choice-based preferences seem not to matter much or at all intrinsically.

- The types of welfare are dissociable, so measuring one in terms of another is likely to misweigh it relative to more intuitive direct standards and risks discounting plausible moral patients altogether.

- There are multiple potentially important variations on the types of welfare.

Acknowledgements

Thanks to Brian Tomasik, Derek Shiller and Bob Fischer for feedback. All errors are my own.

The four types

It appears to me that subjective welfare, i.e. welfare whose value depends only or primarily[1] on the perspectives or mental states of those who have it, can be roughly categorized into the following four types based on how it is realized: hedonic states, felt desires, belief-like preferences and choice-based preferences. To summarize, there’s welfare as feelings (hedonic states and felt desires), welfare as beliefs about value (belief-like preferences) and welfare as choices (choice-based preferences). I will define, illustrate and elaborate on these types below.

For some discussion of my choices of terminology, see the following footnote.[2]

Hedonic states

Hedonic states: feeling good and feeling bad, or (conscious) pleasure and unpleasantness/unpleasure/displeasure,[3] or (conscious) positive and negative affect. Their causes can be physical, like sensory pleasures and physical pain, or psychological, like achievement, failure, loss, shame, humour and threats, to name a few.

It’s unclear whether interpersonal comparisons of hedonic states can be grounded in general, whether or not they can be grounded between beings who realize them in sufficiently similar ways. In my view, the most promising approaches would be on the basis of the magnitudes of immediate and necessary cognitive or mental effects, causes or components of hedonic states. Other measures seem logically and intuitively dissociable (e.g. see the section on dissociation below) or incompatible with functionalism at the right level of abstraction (e.g. against using the absolute number of active neurons, see Mathers, 2022 and Shriver, 2022).

Felt desires

Felt desires: desires we feel. They are often classified as one of two types, either a) appetitive — or incentive and typically conducive to approach or consummatory behaviour and towards things — like in attraction, hunger, cravings and anger, or b) aversive — and typically conducive to avoidance and away from things — like in pain, fear, disgust and again anger (Hayes et al., 2014, Berridge, 2018, and on anger as aversive and appetitive, Carver & Harmon-Jones, 2009, Watson, 2009 and Lee & Lang, 2009). However, the actual approach/consummatory or avoidance behaviour is not necessary to experience a felt desire, and we can overcome our felt desires or be constrained from satisfying them.

Potentially defining functions of felt desires could be their effects on attention and its control, as motivational salience, or incentive salience and aversive/threat salience, which draw attention towards stimuli (Berridge, 2018, Kim et al., 2021). Motivational salience is also recruited for our everyday goals, drawing attention to relevant stimuli (Brown, 2022, Berridge, 2018, Anselme & Robinson, 2016). It could even be that the only features common to all felt desires worthy of moral concern are just motivational salience and whatever it might depend on, and possibly consciousness in general. For example, hunger may just be the hunger-related perceptions and sensations, the motivational salience of food-related stimuli and/or of the hunger sensations themselves, and it's sometimes unpleasant.

Effects on attention and its control seem to be a promising basis for comparisons of the strengths of desires, intrapersonally and interpersonally: the more a desire pulls an individual’s attention or resists top-down attention control, the stronger it is. Or, generally, the more attention paid to something — in particular what the individual believes is practically at stake — the more important it is. However, even these could turn out to be essentially individual-relative, e.g. relative to their maximum or nonzero minimum attention or awareness, which may have no basis for general comparison across individuals. Or, it may be meaningful to speak of the size of access consciousness, like the number or amount of sensations, with interpersonally comparable units. The space available in access consciousness is a limited and essential resource, and attention determines how it’s used. On the other hand, it’s not clear that or why desires would feel more intense because of more or richer sensory experience.

Like for hedonic states, the most promising approaches seem to be on the basis of the magnitudes of immediate and necessary cognitive or mental effects, causes or components of felt desires.

Belief-like preferences

Belief-like preferences: judgements or beliefs about subjective value, worth, need, desirability, good, bad, betterness or worseness. If I judge it to be better to have a 10% chance of dying tomorrow than to live the rest of my life with severe chronic pain (in line with the standard gamble approach to estimating quality-adjusted life years, or QALYs), then I prefer it on this account. Or, I might judge my life to be going well and be satisfied with my life as a whole, a kind of global preference (Parfit, 1984, Plant, 2020), because it’s going well in work, family and other aspects important to me. Other examples include:

- our everyday explicitly conscious goals, like finishing a task or going to the gym today,

- more important explicit goals or projects, like getting married, raising children, helping people,

- moral beliefs, like that pain is bad, knowledge is good, utilitarianism, against harming others, about fair treatment, etc.,

- general beliefs about how important things are to us, other honestly reported preferences, overall judgements about possible states of the world.

Howe (1994), Berridge (2009), and Berridge and Robinson (2016) use the term cognitive desires for a similar concept. Howe (1994) also uses desiderative beliefs, and Berridge (2009), ordinary wanting.

For an overview of various accounts of beliefs, see Schwitzgebel, 2023, section 1.[4]

I suspect we should distinguish believing, judging or thinking that from merely entertaining or thinking of a possibility. Entertaining the possibility that something could be better or worth doing doesn’t mean actually believing it. People themselves can tell when they are doing one or the other, and there are some plausible directions for how this could work, but I don’t commit to any specific approach here.[5]

I’m skeptical that we can ground belief-like preference comparisons that are both:

- intuitive interpersonally, e.g. without some humans having far larger stakes than other humans, and

- intuitive intrapersonally, e.g. agreeing with each person’s global belief-like preferences, i.e. what they would endorse after reflection.

These are largely the same problems that face intertheoretic comparisons of moral theories (e.g. Tomasik, 2018 and MacAskill, Bykvist & Ord, 2020, Chapters 4 and 6). Global belief-like preferences will face similar challenges because they include or reflect moral beliefs, and otherwise for similar reasons. Hausman (1995), Greaves and Lederman (2018) and Barrett (2019) discuss similar challenges directly for preference views. See the following footnote for some examples.[6]

One response may be to look for mental, cognitive or physical features of belief-like preferences to compare. I’m skeptical that we’ll find any that can be used to satisfy the two criteria above while grounding the vast majority of interpersonal comparisons between humans.[7]

Another response may be to normalize in some way, e.g. based on the range or variance (MacAskill, Bykvist and Ord, 2020, Chapter 4) or by eliciting responses on a fixed scale, like the Satisfaction with Life Scale (Diener et al., 1985, Novopsych, Fetzer Institute) or quality-adjusted life years (QALYs) questionnaires. You can do this, but it doesn’t actually ground interpersonal comparisons. It doesn’t mean that respondents actually assign the same or even similar absolute subjective value to similar points or between pairs of points along the scale. And there are reasons to believe they don’t, even in common cases.[8]
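To make the limitation concrete, here is a minimal Python sketch of range and variance normalization. It rests on stated assumptions: the two individuals, their raw numbers and the function names are all hypothetical illustrations, not taken from MacAskill, Bykvist and Ord (2020) or from any survey instrument. Bob's raw values are just Alice's multiplied by ten, as if everything mattered ten times more to him, or as if he merely reported bigger numbers.

```python
import math
from statistics import mean, pstdev


def normalize_by_range(utilities):
    """Rescale so the worst outcome maps to 0 and the best to 1."""
    lo, hi = min(utilities), max(utilities)
    if hi == lo:
        raise ValueError("constant utilities cannot be range-normalized")
    return [(u - lo) / (hi - lo) for u in utilities]


def normalize_by_variance(utilities):
    """Rescale to mean 0 and (population) standard deviation 1."""
    m = mean(utilities)
    s = pstdev(utilities)
    if s == 0:
        raise ValueError("constant utilities cannot be variance-normalized")
    return [(u - m) / s for u in utilities]


def agree(xs, ys, tol=1e-9):
    """True if two lists match element-wise up to floating-point rounding."""
    return len(xs) == len(ys) and all(
        math.isclose(x, y, abs_tol=tol) for x, y in zip(xs, ys)
    )


# Hypothetical raw values over the same three outcomes (assumed data).
alice = [0.0, 1.0, 4.0]
bob = [10.0 * u for u in alice]  # same ordering and ratios, ten times the scale

# After either normalization, Alice and Bob are indistinguishable.
print(agree(normalize_by_range(alice), normalize_by_range(bob)))
print(agree(normalize_by_variance(alice), normalize_by_variance(bob)))
```

Both checks print `True`: the normalized values coincide whatever the raw scale was. Equal stakes across individuals are therefore imposed by construction rather than measured, which is why normalization alone does not ground interpersonal comparisons.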

Choice-based preferences

Choice-based preferences: our actual choices, as in revealed preferences, or what we would choose.[9] If I choose an apple over an orange when both are available in a given situation, then, on this account and in that situation, I prefer the apple to the orange.[10] In order to exclude reflexive and unconscious responses (even if learned), I’d consider only choices under voluntary/top-down/cognitive control, driven (at least in part) by reasons of which the individual is conscious, (conscious) intentions[11] and/or choices following (conscious) plans (any nonempty subset of these requirements). Conscious reasons could include hedonic states, felt desires or belief-like preferences. For example, the normal conscious experience of pain drives behaviour in part through executive functions (cognitive control), not just through (apparently) unconscious pain responses like the withdrawal reflex.

Heathwood (2019) calls these behavioral desires and argues against their moral importance, because they don’t require any genuine attraction to the chosen options (or aversion to the alternatives).

It also seems unlikely that choice-based preferences are interpersonally comparable in a way that matches how people would endorse their preferences being weighed intrapersonally, directly for the same reasons as belief-like preferences as just discussed, and indirectly because choice-based preferences can reflect belief-like preferences.

Welfare should be conscious

To count morally in an individual, I'd assume some of the relevant states must be consciously experienced, or could be in the right circumstances. Therefore, systems incapable of consciousness wouldn’t have morally relevant welfare. Affect and motivational salience can apparently both be unconscious, so there are apparently unconscious versions of hedonic states and felt desires.[12] Beliefs also often seem to be unconscious. They don’t count unless they are or could be conscious.

Furthermore, it’s not enough to just be aware or conscious of negative affect, say; it has to consciously feel negative. For example, I can be conscious of someone else’s negative affect without it making me experience negative affect (although it may often do so, because of emotional empathy). Likewise, a system just being a conscious observer of its own negative affect doesn’t make it feel bad. The same may go for motivational salience, too.

However, on some views, e.g. some highly graded illusionist accounts of consciousness or some panpsychist views, just having such states at all could be enough to establish at least some minimal consciousness and the states could necessarily be conscious in the right way.

Moral plurality or unification

Hedonic states, felt desires and belief-like preferences all plausibly ground some kind of moral value, perhaps even simultaneously within a pluralistic theory of (subjective) wellbeing. (Maybe also choice-based preferences, but I’m more skeptical of their intrinsic value.) This is, however, probably a controversial position, and comes with substantial costs and challenges. I expect tradeoffs between the types of welfare to be impossible to ground intrapersonally in a satisfactory way, i.e. non-arbitrarily and in a way that respects my intuitions about how each type of welfare scales in itself. That seems pretty unsatisfying. However, each type of welfare seems to independently intrinsically matter to me, and discounting plausible moral patients — individuals with some types of welfare but not necessarily all — just because they’re missing one or multiple other types seems far worse.

For example, animals that experience suffering but have no belief-like preferences would matter to me. We can also imagine belief-like preferences (and choice-based preferences) without hedonic states or felt desires, as in the thought experiments of Phenumb (Carruthers, 1999, also discussed in Muehlhauser, 2017, section 6.1.3.2) and philosophical Vulcans (Chalmers et al., 2019, Chalmers, 2022, also discussed in Roelofs, 2022 and Birch, 2022). Such beings could still believe things matter, even if they didn’t feel like anything mattered. And they seem to matter to me, too. Not all of what seems to matter to us matters to us directly on the basis of feelings. Some things matter deductively, e.g. the logical implications of the reasons and principles we recognize. Some atypical humans (e.g. due to brain lesions or genetics) or future artificial intelligence could be like this.

Roelofs (2022, ungated) simultaneously captures hedonic states, felt desires and belief-like preferences together in motivating consciousness and grounds their value with Motivational Sentientism:

The basic argument for Motivational Sentientism is that if a being has conscious states that motivate its actions, then it voluntarily acts for reasons provided by those states. This means it has reasons to act: subjective reasons, reasons as they appear from its perspective, and reasons which we as moral agents can take on vicariously as reasons for altruistic actions. Indeed, any being that is motivated to act could, it seems, sincerely appeal to us to help it: whatever it is motivated to do or bring about, it seems to make sense for us to empathise with that motivating conscious state, and for the being to ask us to do so if it understands this.

Roelofs (2022, ungated) further elaborates on motivation itself:

Finally, motivating consciousness should be suitable to motivate, but need not actually motivate. A desire that I never get the chance to act on is still a desire, and likewise a pain I cannot do anything about. In the extreme, this means that a being who felt pleasure and displeasure but had no capacity to act in any way (like the ‘Weather Watchers’ described by Strawson, 1994, p. 251 ff ) would count as having motivating consciousness, and thus moral status.

and

As I am using it, ‘motivation’ here does not mean anything merely causal or functional: motivation is a distinctively subjective, mental, process whereby some prospect seems ‘attractive’, some response ‘makes sense’, some action seems ‘called for’ from a subject’s perspective. The point is not whether a given sort of conscious state does or does not cause some bodily movement, but whether it presents the subject with a subjective reason for acting.[13]

In other words, it’s the subjective appearance of value or reasons — or according to Roelofs, the “subjective sense of better or worse” or “subjective goodness and badness” — that matters, and this could come in the form of hedonic states, felt desires or belief-like preferences. Choice-based preferences are just voluntary acts guided by these reasons (and other processes).

Framed this way, it could also turn out to not be pluralistic at all: there could be just one broad type of subjective welfare, namely the subjective appearance of value or reasons, if there’s a general enough account of it. Hedonic states, felt desires and belief-like preferences would just be special cases.

We might also take an individual with access to multiple of the types to be made up of distinct (but possibly interacting) moral patients, (at least) one for each type of welfare available to them, corresponding to the subsystems that realize them. Whether or not we actually do this, it can be instructive to think of them this way to highlight the separateness of the types of welfare or systems realizing them, potential disagreement between them and cases where we might otherwise inadvertently privilege one type of welfare or system over another.

Whether or not they turn out to be one type of welfare (but realized by different systems, say), I will continue to generally refer to them as different types of welfare.

More on unification

Belief-like preferences may already be general enough to capture hedonic states and felt desires, using a broad enough account of belief or something similar or more general, like appearance. For example, if believing something is bad means representing it in some kind of negative light, being drawn away from it, motivated to stop or prevent it, or otherwise acting as if it’s bad (see Schwitzgebel, 2023, section 1 for various accounts of belief), then unpleasantness and aversive felt desires seem to do these[14] and should qualify. Hedonic states and felt desires could also qualify as the recognition and application of concepts of good and bad.

It’s also plausible to me that hedonic states just are hardwired or cognitively impenetrable belief-like preferences, as attitudes in attitudinal accounts (e.g. discussed in Lin, 2020 and Pallies, 2021) and representations of value in representationalist/evaluativist accounts (Bain, 2017).[15] This seems especially plausible under some moral interpretations of illusionist accounts of consciousness for which actual illusions (themselves beliefs or appearances) are what ground moral value, to which I’m sympathetic.[16]

Felt desires and perhaps desires in general seem to in fact be at least partially attention-based (Berridge, 2018, Kim et al., 2021), so may not (just) be attitudes or representations, and whether or not they are attitudes or representations, they could matter (in part) because of how they affect attention. On the other hand, the way felt desires affect attention could just be a different way of being belief-like preferences, under a dispositionalist or interpretationist account of belief (described in Schwitzgebel, 2023, section 1). This could also match the more abstract attention-based theories of desire as described by Schroeder, 2015, section 1.4, according to which, say:

For an organism to desire p is for it to be disposed to keep having its attention drawn to reasons to have p, or to reasons to avoid not-p.

Others have defended desire-as-belief, desire-as-perception and generally desire-as-guise or desire-as-appearance of normative reasons, the good or what one ought to do. See Schroeder, 2015, section 1.3 for a short overview of different accounts of desire-as-guise of good, and Part I of Deonna (ed.) & Lauria (ed.), 2017 for more recent work on and discussion of such accounts and alternatives. See also Archer, 2016 and Archer, 2020 for some critiques, and Milona & Schroeder, 2019 for support for desire-as-guise (or desire-as-appearance) of reasons. A literal interpretation of Roelofs (2022, ungated)’s “subjective reasons, reasons as they appear from its perspective” would be as desire-as-appearance of reasons.

The right account may not specifically be desire-as-belief, and in that case, “belief-like preferences” either wouldn’t capture all of subjective welfare if belief-like preferences are understood as literal beliefs, or would need to not necessarily specifically be beliefs.

Unfortunately, unification wouldn’t help with comparisons between hedonic states, felt desires and other belief-like preferences, if and because belief-like preferences are already too hard to compare in general. In fact, if and because of the potential barriers to interpersonal comparisons of belief-like preferences, this unification could also end up counting against interpersonal comparisons of hedonic states and felt desires, at least across sufficiently different beings, if what matters about them is just what characterizes belief-like preferences or is common to all types of welfare in general.

Do choice-based preferences matter?

There’s a question here whether choice-based preferences should be considered a type of welfare at all and matter intrinsically, especially if we want to consider what choices a being practically incapable of choice would make if they were capable of choice, like Roelofs (2022, ungated) does. Or, we could consider people with locked-in syndrome, who are almost entirely incapable of motor control and movement. My intuition is that their limited physical capacities have no bearing on how much things can matter to them if their other welfare ranges aren’t limited (e.g. they can suffer just as intensely and have similar beliefs about what matters). So, either choice-based preferences add little or they shouldn’t depend on the physical capacity to effect change in the world, e.g. they should be idealized in some way.

Choice-based preferences could just be consequences of the other types of welfare and other non-welfare processes.

However, if choice-based preferences don't matter, do we exclude plausible moral patients?

In my initial characterization of choice-based preferences, their most potentially distinctive properties seem to be voluntary choice, intentions or plans, or specifically conscious versions of these. It's not clear what else the reasons on the basis of which the choices are made could be, other than hedonic states, felt desires or belief-like preferences.

So, imagine a conscious being that has no hedonic states, felt desires or belief-like preferences. To them, nothing feels good, bad, desirable or aversive, and they don’t believe anything matters. Still, they imagine alternatives, make plans consciously, have conscious intentions, choose and follow through. They’re conscious of their choices and how and why those choices are made as they are made, i.e. the cause of their choices, but these causes are not any kind of feeling or belief that makes that plan appear better or better justified to them.

Would there be something wrong with thwarting their plans? Do they actually care? Do they act like something matters, and is that enough for this being to matter? What exactly matters to them?

- The causes of their choices of which they’re conscious? It seems not. If I were conscious of more of the details of how my brain worked as it did, those wouldn’t necessarily feel important, and I don’t think I’d be inclined to believe they mattered intrinsically.

- The choices, intentions or plans themselves? It’s not clear why they would matter.

- The objectives of their plans? What if there are no objectives? Maybe the mattering doesn’t have to be about anything in particular,[17] just like it seems that we can directly stimulate pleasure or unpleasantness in brains.

It’s hard to see what exactly would matter to this being, if anything. So, I’m inclined to say nothing does, or at least not much.

Heathwood (2019) considers a similar thought experiment, but further allowing goals and choices on their basis:

So what we need to imagine instead is a being who has goals, and can bring them about, but is in no way “invested” in them. The achievement of their goals doesn’t genuinely appeal to this being, or excite them to any degree. The being is simply disposed, like a machine, to coolly and detachedly bring them about. When the being wins its game of chess, it does so joylessly, with no enthusiasm or real interest. Nor is the being genuinely averse to losing the game of chess. The being will try to win, of course, but won’t mind if it loses. It wins chess games the way Deep Blue, IBM’s chess-playing computer, does, or the way Quinn’s Radio Man turns on radios.

Once we see that this is how this being would have to be, it is no longer intuitive to say that the being benefits when it satisfies its merely behavioral desire to win the game. Just as winning holds no appeal for the being, losing doesn’t bother it one bit. It seems to get nothing out of it either way. Thus I believe that on closer examination, this case—similar as it is to the cases of Quinn’s Radio Man and Parfit’s drug addict—in fact provides confirmation for the restriction to desires in the genuine-attraction sense rather than evidence against it.

What separates this from Phenumb and philosophical Vulcans (or will, by assumption here) is that this being doesn’t actually believe its goals matter. On the other hand, is having a goal and acting to achieve it a kind of functionalist way of believing the goal matters, anyway? If so, then Heathwood's being could have belief-like preferences. Perhaps the distinction is just that Phenumb and philosophical Vulcans believe things matter in a more robust sense, but Heathwood’s being still, at least in some minimal sense, also believes its goals matter. If that’s the case, then Heathwood’s being could still matter minimally.

Welfare types can influence each other

All of these types of welfare can influence each other in humans. Hedonic states act as reinforcers and so give us felt desires, while continuous felt desire frustration can become unpleasant. Our feelings (hedonic states and felt desires) affect and inform our beliefs, e.g. we endorse what feels good and things for which we have appetitive desires, and we disapprove of what feels bad or things to which we have aversive desires. Our beliefs affect how we feel. Our beliefs and feelings affect and inform our choices. Our choices affect what we feel and believe, sometimes regardless of their effects on the world outside us, e.g. our feelings and beliefs about ourselves, like our identities or character, pride or shame.

However, there can still be substantial disagreement between the types of welfare.

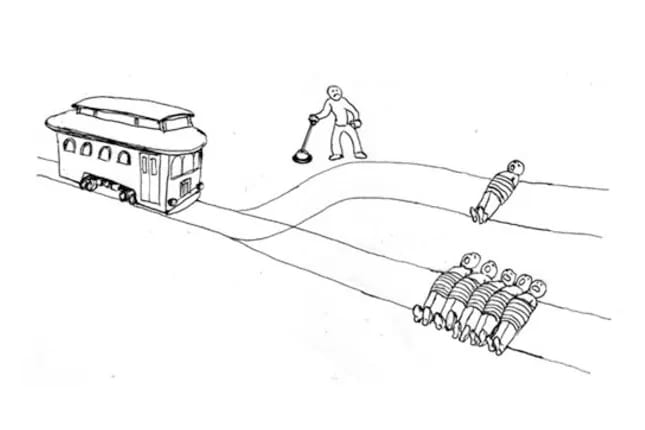

Dissociation between welfare types

These types of welfare are generally pairwise dissociable in humans and other animals, at least experimentally. In other words, there could be one type of welfare without the other matching in sign or direction. For example, an addict may have strong felt desires for the thing to which they are addicted, but believe it would be better to stop, and they may no longer experience much pleasure from it. Someone with motivational anhedonia may no longer be motivated (whether as felt desire or belief-like preference) by what they enjoy, even if they would still enjoy it. Felt desire (or ‘wanting’) is dissociable from pleasure (or ‘liking’) and belief-like preferences (or ‘cognitive desires’), according to Berridge, 2018, Baumgartner et al., 2021 and Berridge, 2023. On the other hand, Shriver (2014, 2021) argues that unpleasantness and aversive desire are not dissociable in physical pain in humans and rats. However, even if this turns out to be the case, it wouldn’t follow that they are inseparable in every possible moral patient or in other unpleasant or aversive experiences.

These dissociations imply that measuring one type of welfare in terms of another (e.g. the strengths of desires or preferences on the basis of how much pleasure is derived from their satisfaction, or pleasure intensity on the basis of how much it is desired or preferred) can badly misweigh that type of welfare by its own lights. Another risk is entirely failing to count some moral patients who have one type but not the type used to measure, e.g. using belief-like preferences to measure if some beings with hedonic states have none (e.g. perhaps some nonhuman animals), or using hedonic states or felt desires to measure if some beings with belief-like preferences have none (e.g. some conceivable artificial consciousness).

Variations

There are multiple variations or further classifications that can be applied across the types of welfare (not necessarily each variation to each type of welfare). I describe some potentially important distinctions here, on which our theories may depend.

Occurrent — actively playing a role in our mental states or behaviour, and possibly but not necessarily conscious at the time — vs standing — implicit or dispositions, how we would respond (Schroeder, 2015 and separately for beliefs, Schwitzgebel, 2023, sections 2.1 and 2.2). For example, if when you see a specific person, you would often feel attraction to them, then this could be a standing felt desire. It becomes occurrent when their appearance actually acts on your attention. Similarly, if you would often feel joy when you see someone, this could be like a standing version of a hedonic state.[18] Moods could be unconscious but occurrent versions of hedonic states, as active dispositions to feel more or less pleasure and unpleasantness generally, e.g. anhedonia in depression. In contrast, hedonism is standardly only concerned with conscious hedonic states. Conscious states are always occurrent, but not all occurrent states are conscious.

If you were asked about your life satisfaction or about quality-adjusted life year (QALY) tradeoffs, how you would (honestly) respond would be standing belief-like preferences. Beliefs and desires are occurrent when we act on them. For example, opening the fridge to get food for yourself indicates both a desire for food and a belief that there is food in the fridge. In general, you know there’s food in the fridge, even when it doesn’t affect your behaviour, so that belief is typically standing. Beliefs are at least (indirectly) conscious when we represent them explicitly and consciously experience those representations, whether in thought, speech, writing or illustration.

I’d expect standing versions of beliefs and desires to often be dispositional without actually being stored explicitly anywhere in the brain. For example, a person won’t store any kind of representation of their life satisfaction until they judge it, and they won’t revise those representations until they judge it again, even if it would be reasonable to say they would judge it differently now.[19] Still, these dispositions have to be realized in some way in the brain, e.g. based on their other beliefs that are in fact stored, the neural connections, various factors that influence the likelihood of a neuron firing, and so on.

Intrinsic/terminal vs instrumental. For example, someone may want to succeed in school to become a doctor and help others, so this preference to succeed in school is (at least partially) instrumental, i.e. we satisfy it in order to satisfy other preferences from which it derives, like helping others. Intrinsic/terminal versions are not derived from others in this way. Generally, it seems people intrinsically prefer not to suffer severely, although they may also often have instrumental reasons to avoid suffering or avoid things that coincide with suffering, e.g. failure or the loss of loved ones.

Various hypothetical idealizations, like preferences under full or more information (about what options are available, about their consequences, about the state of the world, about reasons, etc.), after training or practice, or satisfying certain norms of (epistemic or instrumental) rationality.

For choice-based preferences in particular, if it’s the decisions or intentions themselves that matter and we want to attribute such preferences to individuals with limited physical capacities to pursue options, like in locked-in syndrome, without severely limiting the welfare ranges of such individuals relative to typically abled adult humans, we may need to idealize to hypothetical choices under situations in which the individual has greater capacities for actually choosing. For example, a machine that reads brain signals or eye movements under their conscious control, or what they would do if they had typical motor control. And even typically abled adult humans are limited in their capacities and care (or would care) about more than they can control in practice.

Personal vs non-personal or other-regarding, although the distinction may be somewhat artificial, given indirectly other-regarding personal desires, like being a successful parent (Parfit, 1984).

Local — about a specific thing, like just about your job in general, your current project, that a meeting goes well, or scoring that goal in a soccer match — vs global — about their life as a whole (Parfit, 1984) like life satisfaction (Plant, 2020), quality-adjusted life years (QALYs), and generalizations of QALYs to include extrinsic goals (Hazen, 2007), or other preferences about the state of the world as a whole or all-things-considered, like an individual's moral beliefs, their utility function or preferences over outcomes, prospects or actions. Life satisfaction and QALYs are most naturally interpreted as global belief-like preferences, but we can also consider global versions of other types of welfare, like your emotional reactions — hedonic states or felt desires — to imagery or descriptions of your life so far or possible futures, or the choices you’ve made or would make between futures on choice-based preferences.

Parfit (1984) motivated global preferences with the following example:

I shall inject you with an addictive drug. From now on, you will wake each morning with an extremely strong desire to have another injection of this drug. Having this desire will be in itself neither pleasant nor painful, but if the desire is not fulfilled within an hour it will then become very painful. This is no cause for concern, since I shall give you ample supplies of this drug. Every morning, you will be able at once to fulfil this desire. The injection, and its aftereffects, would also be neither pleasant nor painful. You will spend the rest of your days as you do now.

If desire satisfaction is good and its value sums within an individual (or we might assume it’s also or instead pleasurable), then this seems very good and could even outweigh your life being much worse in other ways. However, I’d guess many people would judge such a life as worse overall, and I’m inclined to let people decide for themselves and respect their judgements either way. The alternative seems alienating and paternalistic. Those judgements — how people decide for themselves — are global preferences.

Even if we use global preferences to avoid alienation, it also matters how exactly we use them, in case they can be changed or replaced.[20]

- ^

Including possibly the relationships between those perspectives or mental states and the world, e.g. that things actually go as someone prefers, not just that they believe things are going as they prefer, as in the experience machine thought experiment (Wikipedia, Buscicchi, 2014, Carlsmith, 2021).

- ^

I use ‘preference’ over ‘desire’ for belief-like and choice-based preferences, because desire typically has connotations of being directed towards (or away from, e.g. aversive) specific objects or features (or states of affairs), but preferences can also just be comparative or pairwise (Schroeder, 2015, sections 2.1 and 3.3), so are more general. Felt desires, on the other hand, do seem to be directed.

The ‘felt’ in ‘felt desire’ is based on Helm’s (2002) concept of “felt evaluations”. I don’t want to commit to “felt desires” being types of “felt evaluations” in case Helm’s account turns out to be wrong, but it’s clear that there are desires that feel like something, and if Helm’s account is correct, then felt desires are probably types of felt evaluations, along with hedonic states.

For belief-like preferences, ‘belief-like’ is more specific than ‘cognitive’, which has also come to be used fairly broadly to include the study of consciousness and emotion. There’s also no sharp line between the cognitive and affective (Pessoa, 2008, Shackman et al., 2011): felt desires, understood as motivational salience, are characterized by their effects on attention, a cognitive/executive function, and so felt desires are a form of cognitive control. On the other hand, there are various accounts of what beliefs are and require, and I don’t want to commit to these types of preferences necessarily coinciding with whatever account of belief we eventually settle on, so I use ‘belief-like’, not, say, ‘belief-based’.

I use 'choice-based' over 'behavioral' to emphasize voluntary choice.

- ^

I avoid the term suffering as definitional for negative hedonic states, because it often has other connotations. Brady (2022) defines suffering as “negative affect that we mind, where minding is cashed out in terms of an occurrent desire that the negative affect not be occurring”. Corns (2021) and McClelland (2019, preprint) define suffering at least partially in terms of disruption of agency. Dennett (1995) also distinguished suffering from negatively-signed experience:

While the distinction between pain and suffering is, like most everyday, nonscientific distinctions, somewhat blurred at the edges, it is, nevertheless, a valuable and intuitively satisfying mark or measure of moral importance. When I step on your toe, causing a brief but definite (and definitely conscious) pain, I do you scant harm -- typically none at all. The pain, though intense, is too brief to matter, and I have done no long-term damage to your foot. The idea that you "suffer" for a second or two is a risible misapplication of that important notion, and even when we grant that my causing you a few seconds pain may irritate you a few more seconds or even minutes -- especially if you think I did it deliberately -- the pain itself, as a brief, negatively-signed experience, is of vanishing moral significance. (If in stepping on your toe I have interrupted your singing of the aria, thereby ruining your operatic career, that is quite another matter.)

- ^

To highlight some important possibilities, it could turn out to be the case:

1. that the correct account of belief is pluralistic, i.e. it suffices to meet one of multiple sets of requirements,

2. that some accounts should be combined and to have beliefs means meeting the combined requirements, or

3. that an individual has beliefs in a more robust sense the more such requirements they meet.

- ^

Some have argued that there’s no way to distinguish them and in fact entertaining is a kind of believing (e.g. Mandelbaum, 2014). However, believing could be a predisposition to judge, and judging could just mean entertaining + affirming (Kriegel, 2013), so we would just need to characterize affirming. Believing and entertaining might feel different (see Dietrich et al., 2020 on epistemic feelings) or otherwise have different effects on us, our motivations or actions, e.g. we only act as if the things we actually believe are true (see Schwitzgebel, 2023 on accounts of belief). Or, believing might require greater consistency with other beliefs and entertainments than mere entertaining can achieve (see Dennett, 2013 on holism). Or, we actively apply built-up concepts for belief or truth vs entertaining/hypothetical or false (or just one of the two concepts).

These concepts may be built up in different ways related to capacities like surprise and reality monitoring (i.e. distinguishing between externally derived and internally generated information or perceptions), among others.

Beings unable to entertain may have no need to distinguish, and may just believe all of their perceptions.

One way might be via recruiting motivational salience, intentions and/or action towards satisfying the preference, but sometimes we are not motivated this way by what we genuinely believe matters. So, an account more general to belief seems necessary, or one that allows multiple ways of judging.

- ^

A fairly successful utilitarian could judge their own life as far better than the typical person’s, judging based on their expected impact on total welfare. A dutiful absolutist deontologist could also judge their own life as far better than the typical person’s, because the typical person violates some of their duties, and such violations are absolutely impermissible. The utilitarian and deontologist could each judge their own life as far better than the other’s, if the utilitarian violates duties according to the deontologist, and the deontologist has only a small impact on the welfare of others. Each may see the stakes as far larger on their own views than on the other’s (MacAskill, Bykvist & Ord, 2020, 6.I). Different utilitarians can similarly disagree with one another, and different deontologists can similarly disagree with one another.

Barrett (2019) illustrates similarly:

Suppose that, in [the outcome] x, i is a political activist in jail and k is a corrupt politician at a fancy resort. i might personally prefer to be at the resort than in jail, but loathe k and everything k stands for so much that i would rather be herself in jail than k at the resort.

There were also huge discrepancies in preferences between community cash transfers and saving the life of a child in the community according to a survey of respondents from Ghana and Kenya by IDinsight, with 38% preferring to save the life up to or past the maximum surveyed ($10 million), and of those preferring community cash transfers at any point below the maximum, 54% preferred community cash transfers at values below $30,000 (Redfern et al., 2019, p.18). IDinsight (2019) also wrote:

For example, a number of respondents placed extremely high value on averting death relative to increasing income using deontological arguments arguing that the two just cannot morally be compared, which is in direct contrast to GiveWell’s utilitarian approach.

Counting only “personal” preferences doesn’t address the problem, because people’s personal preferences can be indirectly other-regarding with hugely varying stakes between them and between them and other personal preferences, e.g. being a good parent, fulfilling their moral duties, maximizing their net impact on total welfare. Discounting even the indirectly other-regarding preferences seems alienating. And even if we did discount them, we can still imagine hugely varying entirely personal stakes, e.g. someone could recognize absolutist moral duties to themself.

- ^

It isn’t often necessary to know how much better one option is than another to make choices, so we don’t bother to represent that information, nor would our functions, dispositions or other consequences covary with it reliably across contexts. Schroeder (2015) wrote:

I can tell that I prefer securing my father's health to doing the laundry, and I know which I would choose if it came to a choice. But can I introspect the degree to which I prefer my father's health over clean laundry? Perhaps not. And if I cannot, perhaps this is because I have introspective access to the most basic psychological facts only, and these are facts about simple pairwise preferences.

See also Brandstätter et al., 2006 for the priority heuristic in decision-making.

There’s substantial neurological and behavioural evidence against representations of value in a “common currency” even within each person’s brain across tasks (e.g. Hayden and Niv, 2021, Garcia et al., 2023, Kellen et al., 2016), as well as evidence of intransitivity or dependence on irrelevant alternatives, including even in honeybees (Shafir, 1994).

We can off-load representations of value externally, e.g. to paper or computers, and even do so with computer programs without ever actually seeing the final representations of value, and instead just the recommendations, like an algorithm filtering and selecting stocks to buy or movies to watch. Or, we can defer to the judgements of other people.

The contents of the belief-like preferences can differ dramatically in type or scales to which they refer, like pleasure intensities and durations, QALYs saved, tonnes of CO2 emissions averted, thousands of tonnes of CO2 emissions averted, or RAM in a laptop. Only sufficiently similar features can be used to ground comparisons.

Global preferences can dissociate from other measures, like hedonic states, felt desires and choice-based preferences (as discussed in a later section), and probably attention.

- ^

For example, people probably don’t equally value a year of life at full health (as would follow from treating each QALY equally), because they probably tend to come to value their own lives more if they have young children or partners, for the sake of those children or partners or because they value their time with them. People with young children or partners tend to be less willing to sacrifice or risk years of their own life to live in better health (van der Pol & Shiell, 2007, Matza et al., 2014, Hansen et al., 2021, van Nooten et al., 2015).

- ^

We could consider behaviours and behavioural dispositions in general, but this seems too trivial to me to warrant any significant concern. For example, electrons tend to avoid one another, so we might interpret them as having preferences to be away from each other. Choice-based preferences (and perhaps other kinds of welfare here) could be understood as far more sophisticated versions of such basic behavioural preferences, but choice seems like a morally significant line to draw. For more on how simple behaviours in fundamental physics might indicate welfare, see Tomasik, 2014-2020.

- ^

Or, I prefer choosing the apple to choosing the orange for more indirect reasons related to my choice.

- ^

Haggard (2005) summarized the neuroscience of intentions:

Instead, recent findings suggest that the conscious experience of intending to act arises from preparation for action in frontal and parietal brain areas. Intentional actions also involve a strong sense of agency, a sense of controlling events in the external world. Both intention and agency result from the brain processes for predictive motor control, not merely from retrospective inference.

- ^

On the possibilities of unconscious emotions and unconscious affect, see, for example, Berridge & Winkielman, 2003 and Winkielman & Hofree, 2012 or Smith & Lane, 2016.

Incentive salience, the apparent basis for appetitive felt desires, can also be unconscious. Berridge and Aldridge (2009, ungated) write:

Importantly, incentive salience attributions are encapsulated and modular in the sense that people may not have direct conscious access to them and find them difficult to cognitively control (Robinson & Berridge, 1993, 2003; Winkielman & Berridge, 2004). Cue-triggered “wanting” belongs to the class of automatic reactions that operate by their own rules under the surface of direct awareness (see Chapters 5 and 23; Bargh & Ferguson, 2000; Bargh, Gollwitzer, Lee-Chai, Barndollar, & Trötschel, 2001; Dijksterhuis, Bos, Nordgren, & van Baaren, 2006; Gilbert & Wilson, 2000; Wilson, Lindsey, & Schooler, 2000; Zajonc, 2000). People are sometimes aware of incentive salience as a product but never of the underlying process. And without an extra cognitive monitoring step, they may not even be always aware of the product. Sometimes incentive salience can be triggered and can control behavior with very little awareness of what has happened. For example, subliminal exposures to happy or angry facial expressions, too brief to see consciously, can cause people later to consume more or less of a beverage—without being at all aware their “wanting” has been manipulated (Winkielman & Berridge, 2004). Additional monitoring by brain systems of conscious awareness, likely cortical structures, is required to bring a basic “want” into a subjective feeling of wanting.

- ^

However, in response to this last quoted paragraph, if we are functionalists, then motivation should be definable in purely functional terms. For example, the conscious states could have important effects on mental states worthy of identifying as motivation even without externally-directed behaviour, e.g. effects on attention in particular — like felt desires — or cognitive control in general.

- ^

Or when a hedonic state and an aversive felt desire occur together, e.g. pain is often simultaneously unpleasant and aversive.

- ^

Other effects could be unnecessary for and dissociable from the pleasure and unpleasantness themselves, e.g. optimism and pessimism bias, learning/reinforcement.

- ^

Kammerer (2019), considering the normative implications of illusionism in general, not just the particular interpretation that assigns value to illusions, also writes:

The best option here for the illusionist would probably be to draw inspiration from desire-satisfaction views of well-being (Brandt 1979; Heathwood 2006) or from attitudinal theories of valenced states (Feldman 2002), and to say that pain is bad (even if it is not phenomenal) because it constitutively includes the frustration of a desire, or the having of a certain negative attitude of dislike. After all, when I am in pain, there is something awful which is that I want it to stop (and that my desire is frustrated); alternatively, one could insist on the fact that what is bad is that I dislike my pain. This frustration or this dislike are what makes pain a harm, which in turn grounds its negative value. This might be the most promising lead to an account of what makes pain bad.

- ^

The word ‘intentionality’ is used to describe mental states being about something (Jacob, 2023). This is of course more general than an intention to do something, as a kind of plan or commitment, which is how I’ve used the word in the rest of this piece.

- ^

We might think of hedonic states as necessarily occurrent by definition, but there are still relevant dispositions.

- ^

Someone could report their previous judgement if nothing of importance has changed or use it as a baseline from which to judge again.

- ^

We could in principle just manipulate or replace a person’s global preferences to be more satisfied after the fact, by directly changing how they evaluate their life. We need to count your original global preference(s) as less satisfied or further frustrated, and this shouldn’t be offset or outweighed by your new global preference(s). As far as I can tell, the only robust subjective welfare solutions to this are preference-affecting views, similar to a person-affecting view but applied to individual preferences (e.g. Bykvist, 2008), and antifrustrationism (Fehige, 1998), the axiology of negative preference utilitarianism. These could be applied to global preferences only.

People are often okay with some kinds of preference change or reasons for preference change, e.g. further reflection or experience, and this could already be reflected in their global preferences. In those cases, it wouldn’t be worse for their global preferences to change. To accommodate this, people’s global preferences could be understood as made up in part by placeholders:

I prefer whatever I prefer now or what I would prefer with further reflection or experience in typical ways, with my consent and on the basis of accurate beliefs. This excludes things like coercion in general, drugging, brain stimulation and brain reorganization without my informed consent, as well as deception and misleading. There could be other ways of preference change of which I’d disapprove that I haven’t thought of yet, but I could recognize them with full information ahead of time.