Each year, Manifund partners with regrantors: experts in the field of AI safety, each given an independent budget of $100k+. Regrantors can initiate fast, small grants, seeding early-stage projects with $5k-$50k.

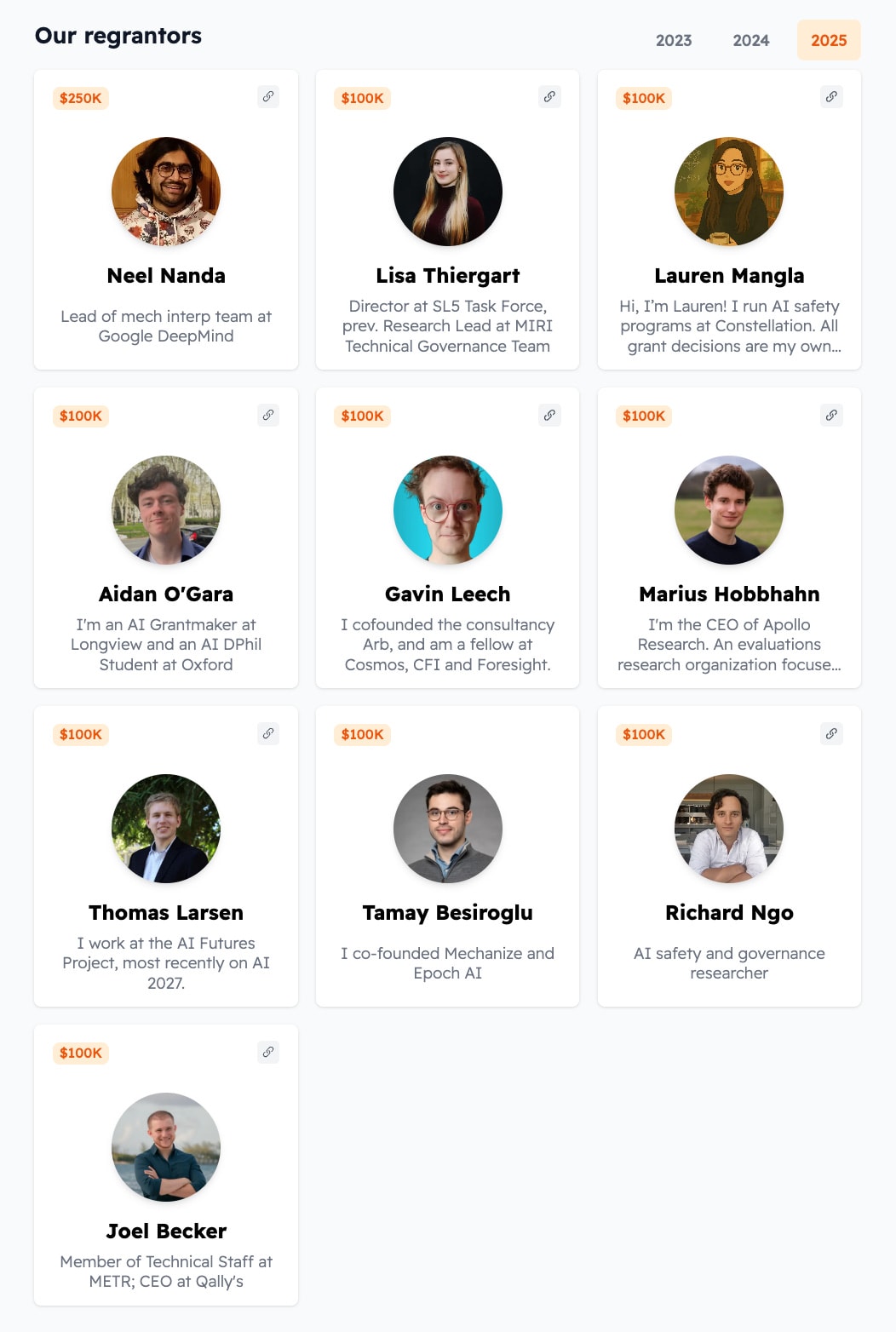

For 2025, we’ve raised $2.25M so far and are excited to announce our first 10 regrantors:

- Neel Nanda — DeepMind

- Lisa Thiergart — SL5 Task Force

- Lauren Mangla — Constellation

- Aidan O'Gara — Longview

- Gavin Leech — Arb

- Marius Hobbhahn — Apollo

- Thomas Larsen — AI Futures Project

- Tamay Besiroglu — Mechanize

- Richard Ngo — Independent

- Joel Becker — METR

We deeply respect what our regrantors have each accomplished in their fields, and are excited to see what they choose to fund!

Why does regranting matter?

Regranting fills a critical role in the AI safety funding ecosystem:

- Fast, low-friction grants: when needed, we can go from “grant recommended” to “money in grantee’s bank account” in <1 week.

- Hits-based giving: regrantors can make grants solo, and are directly responsible for the quality of the grant. This encourages more speculative grants, and avoids the problems of review-by-committee.

- Proactive funding, not reactive: regrantors can offer funding, knowing that they have the money to back their promises. Most other grant processes (OpenPhil, LTFF, SFF) are application-based.

- Support beyond money: regrantors form closer relationships with grantees, and can provide feedback, intros, mentorship, and publicity.

  - Startups will often accept small angel checks for the prestige, connections & advice; regrantors can provide similar support to grantees

  - In contrast, OpenPhil program officers or LTFF fund managers are responsible for hundreds of grants and thus are often too busy to help

- Efficient markets in grantmaking: regranting provides more influence (dollars as “votes”) to AI safety experts with good track records

  - Similar to how tech startup exits create angel investors, who “vote” on the next batch of startups by funding them

  - Similar to how prediction markets provide more “votes” to good forecasters, becoming more accurate over time

  - Regranting budgets themselves are similar to retroactive funding for past good work!

- Regranting is a decentralizing force, seeding new independent orgs rather than concentrating talent within AI labs or large charities with strong fundraising operations

- Our regrants are transparent, with project descriptions and grant writeups in public

  - This provides a public track record and social proof for grantees, which helps them with future fundraising and hiring. For example, early public comments from regrantors Evan Hubinger and Ryan Kidd likely helped Timaeus raise more from SFF.

  - Transparency also helps the community understand which kinds of work are respected and funded. Manifund regrants provide more detail per grant than any other AI safety grantmaker.

- Regranting builds up the Manifund network, helping us meet great grantees

  - This gives us a picture of the AI safety landscape

  - We can follow up with intros, referrals, and funding down the line

  - Many of the 2025 regrantors started out as Manifund grantees from past regrants (e.g. Tamay Besiroglu from Epoch, Lisa Thiergart from MIRI, Marius Hobbhahn from Apollo)

What makes a good regrantor?

With regranting, the most important choice Manifund & our donors face is: “who gets these $100k+ budgets?” Here are some of the criteria we look for when deciding who to invite:

- Good taste in projects and people

  - Regranting is a similar skillset to angel investing. Good regrantors have a track record of being early and right; of finding undervalued opportunities.

  - Regranting is also similar to hiring: both require identifying great talent. Standard advice for investing in startups is to focus on the founders, not the ideas — we think this holds true for regrants, too. “Fund people, not projects”.

- Has capacity to regrant proactively

  - That is, regrantors should not be too busy to actually find, consider, and make grants

  - Willing to research potential grantees, reach out and form relationships, and follow up with mentorship and support

  - Regrantors would ideally spend 5h+/month talking to grantees & writing up grants

  - It helps if a regrantor’s day-to-day work already provides them with good leads and ideas, e.g. they are more of a manager than a researcher

- Extends coverage of AI safety funding. One goal of regranting is to spot new opportunities — a wide net helps with this. Some kinds of coverage we think about:

  - Geographic coverage: Bay Area vs DC vs London vs China

  - Subject matter coverage: evals vs mech interp vs AI policy vs fieldbuilding

  - Different kinds of orgs: labs vs thinktanks vs startups vs charities

  - Different competing orgs: Anthropic vs GDM vs OpenAI

- Doesn’t already have easy access to funding

  - People who are already grantmakers, or are well connected to and can recommend grants to philanthropists, might use regranting budgets less counterfactually. We mostly don’t invite existing grantmakers to regrant.

  - There are exceptions though! It depends on specific circumstances. For example, Aidan O’Gara already makes grants at Longview, but we were still excited to have him regrant.

    - A Manifund regranting budget provides Aidan with more flexibility and risk appetite (can make regrants that Longview may be unwilling to publicly endorse), and is more lightweight (regrants are fast and can go out in small amounts, whereas Longview rarely considers grants of <$200k).

  - Grantmaking may be a skill that improves with practice, so budgets may go farther in the hands of experienced grantmakers

We’re still looking for more regrantors; consider applying here!

For regrantors: what makes a good regrant?

Good regrants are often local, small & fast:

- A local grant comes from within a regrantor’s unique network, funding opportunities that OpenPhil and others don’t already know about; our donors aren’t excited to funge against OpenPhil dollars (neither are we!). At the same time, it’s important not to overthink this criterion. There are plenty of cases where OpenPhil sees an opportunity but can’t fund it due to e.g. reputational risk, and also cases where they may lack information or simply be wrong about the value of a proposal.

- If the org receiving the grant isn’t small (i.e., it has already raised $1M+), it probably has a public track record and a fundraising team, and should therefore already be on the radar of OpenPhil and others. For example, Evan Hubinger’s grant to MATS itself is less exciting to us than grants to his own MATS mentees, who have less funding and less visibility.

- Regranting can also be fast, which suits time-sensitive grants: Manifund can move dollars to grantees within days of a recommendation. Types of funding that might fit this category include compute, travel expenses, or bringing on world-class talent ASAP. On the other hand, yearly planning for orgs is better served by OpenPhil and SFF, which move more slowly but have much more money available.

Some examples of regrants that we particularly liked (full review coming soon!):

- Scoping Developmental Interpretability by Jesse Hoogland - the first funding for Timaeus, accelerating its research by months

- Support for deep coverage of China and AI to ChinaTalk - reporting on DeepSeek, ahead of the curve

- Shallow review of AI safety 2024 to Gavin Leech - quick regrants inducing further funding from OpenPhil & others

Ultimately, regrantors have a lot of discretion in making grants, and we encourage you to use it! We expect many great regrants to come not just from new proposals as they appear on the Manifund website, but from proactively seeking out opportunities. This could look like asking friends or colleagues for leads, launching a request for proposals, or running a prize contest.

See also “Some fun lessons I learned as a junior regrantor” from Joel Becker.

For donors: why fund regrantors?

If you’re looking for a turnkey way to seed great AI safety projects, consider funding our regranting program! We think it’s a good fit for donors who are:

- Earning to give, and donating $50k+ a year

- Concerned about AI safety, but less familiar with the landscape and not interested in spending a lot of time getting up to speed

- Excited to back new, undiscovered opportunities

For such donors, regranting provides a “part-time program officer, as a service”. It’s often hard to find great AI safety program officers because their opportunity costs are high; those with the appropriate context could just do direct work. But regranting doesn’t take an expert away from their day job. Instead, they can keep an eye out for good opportunities, while continuing to work in the field.

So rather than spinning up your own foundation, with the overhead that imposes, you can tap into the expertise of someone you trust and have Manifund handle the logistics of sending out grants. We do ask large donors to contribute an extra 5% towards Manifund’s operational budget; in exchange, Manifund works closely with each donor to understand their giving objectives.

We’re quite flexible with how to distribute budgets:

- You can fund the regrantor program as a whole, allowing Manifund to allocate the budget

- You can increase the budgets for specific regrantors you trust

- You can nominate your own regrantors to join the program

If you’re interested in donating via regranting, please reach out to austin@manifund.org!

Thanks to Jesse Richardson and Leila Clark for input~