One of the weaker parts of the Situational Awareness essay is Leopold's discussion of international AI governance.

He calls the notion of an international treaty on AI "fanciful", claiming that:

It would be easy to "break out" of treaty restrictions

There would be strong incentives to do so

So the equilibrium is unstable

That's basically it - international cooperation gets about 140 words of analysis in the 160 page document.

I think this is seriously underargued. Right now it seems harmful to propagate a meme like "International AI cooperation is fanciful".

This is just a quick take, but I think it's the case that:

It might not be easy to break out of treaty restrictions. Of course it will be hard to monitor and enforce a treaty. But there's potential to make it possible through hardware mechanisms, cloud governance, inspections, and other mechanisms that we haven't even thought of yet. Lots of people are paying attention to this challenge and working on it.

There might not be strong incentives to do so. Decisionmakers may take the risks seriously and calculate that the downsides of an all-out race exceed the potential benefits of winning. Credible benefit-sharing and shared decision-making institutions may convince states they're better off cooperating than trying to win a race.

International cooperation might not be all-or-nothing. Even if we can't (or shouldn't!) institute something like a global pause, cooperation on more narrow issues to mitigate threats from AI misuse and loss of control could be possible. Even in the midst of the Cold War, the US and USSR managed to agree on issues like arms control, non-proliferation, and technologies like anti-ballistic missile tech.

(I critiqued a critique of Aschenbrenner's take on international AI governance here, so I wanted to clarify that I actually do think his model is probably wrong here.)

Many organizations I respect are very risk-averse when hiring, and for good reasons. Making a bad hiring decision is extremely costly, as it means running another hiring round, paying for work that isn't useful, and diverting organisational time and resources towards trouble-shooting and away from other projects. This leads many organisations to scale very slowly.

However, there may be an imbalance between false positives (bad hires) and false negatives (passing over great candidates). In hiring as in many other fields, reducing false positives often means raising false negatives. Many successful people have stories of being passed over early in their careers. The costs of a bad hire are obvious, while the costs of passing over a great hire are counterfactual and never observed.

I wonder whether, in my past hiring decisions, I've properly balanced the risk of rejecting a potentially great hire against the risk of making a bad hire. One reason to think we may be too risk-averse, in addition to the salience of the costs, is that the benefits of a great hire could grow to be very large, while the costs of a bad hire are somewhat bounded, as they can eventually be let go.

"the costs of a bad hire are somewhat bounded, as they can eventually be let go."

This depends a lot on what "eventually" means, specifically. If a bad hire means they stick around for years—or even decades, as happened in the organization of one of my close relatives—then the downside risk is huge.

OTOH my employer is able to fire underperforming people after two or three months, which means we can take chances on people who show potential even if there are some yellow flags. This has paid off enormously, e.g. one of our best people had a history of getting into disruptive arguments in nonprofessional contexts, but we had reason to think this wouldn't be an issue at our place... and we were right, as it turned out, but if we lacked the ability to fire relatively quickly, then I wouldn't have rolled those dice.

The best advice I've heard for threading this needle is "Hire fast, fire fast". But firing people is the most unpleasant thing a leader will ever have to do, so a lot of people do it less than they should.

One way of thinking about the role is how varying degrees of competence correspond with outcomes.

You could imagine a lot of roles have more of a satisficer quality: if a sufficient degree of competence is met, the vast majority of the value possible from that role is met. Higher degrees of excellence would have only marginal value increases; insufficient competence could reduce value dramatically. In such a situation, risk-aversion makes a ton of sense: the potential benefit of getting grand slam placements is very small in relation to the harm caused by an incompetent hire.

On the other hand, you might have roles where the value scales very well with incredible placements. In these situations, finding ways to test possible fit may be very worth it even if there is a risk of wasting resources on bad hires.

It looks like there are two people who voted disagree with this. I'm curious as to what they disagree with. Do they disagree with the claim that some organizations are "very risk-averse when hiring"? Do they disagree with the claim that "reducing false positives often means raising false negatives"? That this causes organisations to scale slowly? Or perhaps that "the costs of a bad hire are somewhat bounded"? I would love for the people who disagree-voted to share what it is they disagree with.

Forgive my rambling. I don't have much to contribute here, but I generally want to say A) I am glad to see other people thinking about this, and B) I sympathize with the difficulty.

The "reducing false positives often means raising false negatives" trade-off is one of the core challenges in hiring. Even the researchers who investigate the validity of various methods and criteria in hiring don't have a great way to deal with this problem. Theoretically we could randomly hire 50% of the applicants and reject 50% of them, and then look at how the new hires perform compared to the rejects one year later. But this is (of course) infeasible. And of course, so much of what we care about is situationally specific: If John Doe thrives in Organizational Culture A performing Role X, that doesn't necessarily mean he will thrive in Organizational Culture B performing Role Y.

I do have one suggestion, although it isn't as good of a suggestion as I would like. Ways to "try out" new staff (such as 6-month contracts, 12-month contracts, internships, part-time engagements, and so on) can let you assess how the person will perform in your organization in that particular role with much greater confidence than a structured interview, a 2-hour work trial test, or a carefully filled out application form. But if you want to have a conversation with some people who are more expert in this stuff, I could probably put you in touch with some Industrial Organizational Psychologists who specialize in selection methods. Maybe a 1-hour consultation session would provide some good directions to explore?

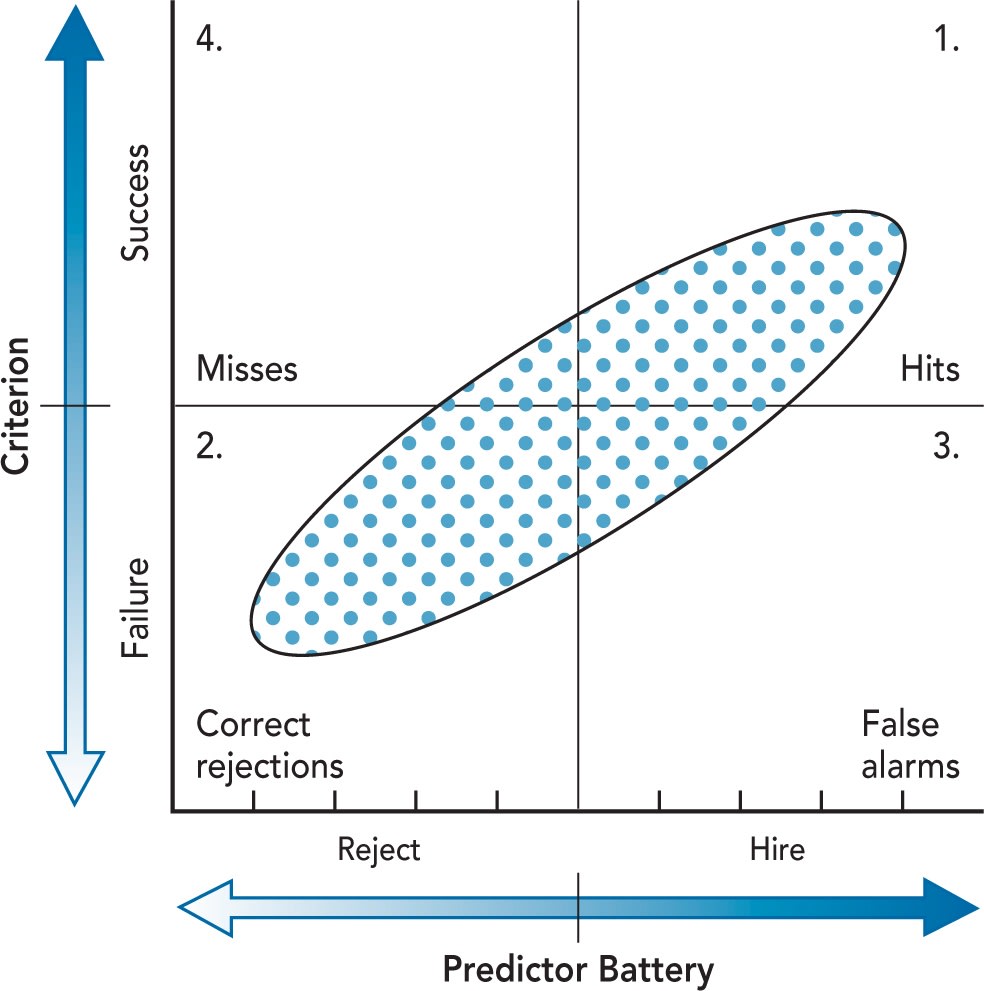

I've shared this image[1] with many people, as I think it is a fairly good description of the issue. I generally think of one of the goals of hiring to be "squeezing" this shape to get as much of the area as possible in the upper right and lower left, and to have as little as possible in the upper left and lower right. We can't squeeze it infinitely thin, and there is a cost to any squeezing, but that is the general idea.
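To make the trade-off concrete, here is a toy simulation. It is only a sketch under made-up assumptions: ability is normally distributed, a candidate counts as "good" if their true ability is above 0, and the hiring process sees ability plus random noise. The function `simulate` and its parameters `threshold` and `noise` are hypothetical names chosen for illustration, not anything from the textbook.

```python
import random


def simulate(threshold: float, n: int = 10_000, noise: float = 1.0, seed: int = 0):
    """Count hiring errors when hiring everyone whose noisy score clears the threshold.

    Returns (false_positives, false_negatives). A candidate counts as "good"
    if their true ability is above 0; all numbers are made up for illustration.
    """
    rng = random.Random(seed)  # fixed seed so every threshold sees the same candidates
    false_pos = false_neg = 0
    for _ in range(n):
        ability = rng.gauss(0.0, 1.0)            # true (unobserved) quality
        score = ability + rng.gauss(0.0, noise)  # what the hiring process measures
        hired = score >= threshold
        good = ability > 0.0
        if hired and not good:
            false_pos += 1   # bad hire
        elif good and not hired:
            false_neg += 1   # great candidate passed over
    return false_pos, false_neg


if __name__ == "__main__":
    for t in (-1.0, 0.0, 1.0, 2.0):
        fp, fn = simulate(t)
        print(f"threshold={t:+.1f}  bad hires={fp}  missed good hires={fn}")
```

Raising `threshold` moves errors from one kind to the other: bad hires can only go down and missed good hires can only go up. Lowering `noise` is the analogue of "squeezing" the shape, since a more accurate selection method reduces both kinds of error at a given threshold.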

I left this as a comment on a post which has since been deleted, so wanted to repost it here (with a few edits).

Some, though not all, men in EA subscribe to an ask culture philosophy which endorses asking for things, including dates, even when the answer is probably no. On several occasions this has caused problems in EA organizations and at EA events. A small number of guys endorsing this philosophy can make multiple women feel uncomfortable.

I think this is pretty bad. Some people point out that the alternative, guess culture, is more difficult for people who struggle to read social cues. But if there's a trade-off between making this community safe and comfortable for women and making it easier for guys to find dates, then, sorry, the former is the only reasonable choice.

Probably there are formal solutions, such as clearer rules and codes of conduct, which could help a bit. But I also think there's a cultural element, created and enforced through social norms, that will only change when EAs (especially men) choose to notice and push back on questionable behaviour from our acquaintances, colleagues, and friends.

As a woman in EA, I personally feel more uncomfortable with guess culture at times. Being asked out directly is a more comfortable experience for me than having to navigate plausible deniability, because in the latter case it feels like the other person has more power over the narrative and there isn't a clear way for me to turn them down quickly if I suspect they are subtly trying to make a romantic advance.

There are other norms that can make people more comfortable, but "don't explicitly ask out women you are interested in" would be bad for me because it causes people who would otherwise do that to behave in ways that are more difficult to scrutinise, e.g. saying "I was never interested in her" when called out on their behaviour or persistent flirting.



The Against Malaria Foundation is described as about 5-23x more cost-effective than cash transfers in GiveWell's calculations, while Founders Pledge thinks StrongMinds is about 6x more cost-effective.

But this seems kind of weird. What are people buying with their cash transfers? If a bednet would be 20x more valuable, then why don't they just buy that instead? How can in-kind donations (goods like bednets or vitamins) be so much better than just giving poor people cash?

I can think of four factors that might help explain this.

Lack of information. Perhaps beneficiaries aren't aware of the level of malaria risk they face, or aren't aware of the later-life income benefits GiveWell models. Or perhaps they are aware but irrationally discount these benefits for whatever reason.

Lack of markets. Cash transfer recipients usually live in poor, rural areas. Their communities don't have a lot of buying power. So perhaps it's not worth it for, e.g., bednet suppliers to reach their area. In other words, they would purchase the highly-valuable goods with their cash transfers, but they can't, so they have to buy less valuable goods instead.

Economies of scale. Perhaps top-rated charities get a big discount on the goods they buy because they buy so many. This discount could be sufficiently large that, e.g., bednets are the most cost-effective option when they're bought at scale, but not the most cost-effective option for individual consumers.

Externalities. Perhaps some of the benefits of in-kind goods flow to people who don't receive cash transfers, such as children. These could account for their increased cost-effectiveness if cash transfer recipients don't account for externalities.

I think each of these probably plays a role. However, a 20x gap in cost-effectiveness is really big. I'm not that convinced that these factors are strong enough to fully explain the differential. And that makes me a little bit suspicious of the result.

I'd be curious to hear what others think. If others have written about this, I'd love to read it. I didn't see a relevant question in GiveWell's FAQs.

Meta-level: Great comment- I think we should be starting more of a discussion around theoretical high-level mechanisms of why charities would be effective in the first place - I think there's too much emphasis on evidence of 'do they work'.

I think the main driver of the effectiveness of infectious disease prevention charities like AMF and deworming might be that they solve coordination/ public goods problems, because if everyone in a certain region uses a health intervention it is much more effective in driving down overall disease incidence. Because of the tragedy of the commons, people are less likely to buy bed nets themselves.

For micronutrient charities it is lack of information and education - most people don't know about and don't understand micronutrients.

Lack of information / markets

Flagging that there were charities - DMI and Living Goods - which address these issues, and so, if these turn out to explain most of the variance in the cost-effectiveness differences you highlight, then these need to be scaled up. I never quite understood why a DMI-like charity with ~zero marginal cost-per-user couldn't be scaled up more until it's much more cost-effective than all other charities.

That first factor isn't just "lack of information," it is also the presence of cognitive biases in risk assessment and response. Many people -- in both higher- and lower-income countries -- do not accurately "price" unlikely but catastrophic-to-them/their-family risks. This is also true on a societal level. Witness, for instance, how much the US has spent on directly and indirectly reducing the risk of airplane hijackings over the last 20 years vs. how much we spent on pandemic preparedness.

One of the most common lessons people said they learned from the FTX collapse is to pay more attention to the character of people with whom they're working or associating (e.g. Spencer Greenberg, Ben Todd, Leopold Aschenbrenner, etc.). I agree that some update in this direction makes sense. But it's easier to do this retrospectively than it is to think about how specifically it should affect your decisions going forward.

If you think this is an important update, too, then you might want to think more about how you're going to change your future behaviour (rather than how you would have changed your past behaviour). Who, exactly, are you now distancing yourself from going forward?

Remember that the challenge is knowing when to stay away from someone basically because they seem suss, not because you have strong evidence of wrongdoing.


Can I tweet this? I think it's a good take

Tweet away! 🫡


Some interesting stuff to read on the topic of when helpful things probably hurt people:

Helping is helpful

Helping is hurtful


[1] The image is from a textbook: Industrial/Organizational Psychology: Understanding the Workplace, by Paul E. Levy.


Death by feedback

It's not unusual to see a small army of people thanked in the "Acknowledgements" section of a typical EA Forum post. But one should be careful not to get too much feedback. For one, the benefits of more feedback diminish quickly, while the community costs scale linearly. (You gain fewer additional insights from the fifth person who reads your draft than you do from the first, but it takes the fifth person just as long to read and comment.)

My biggest worry, though, is killing my own vision by trying to incorporate comments from too many other people. This is death by feedback. If you try to please everyone, you probably won't please anyone.

There are lots of different ways one could write about a given topic. Imagine I'm writing an essay to convince EA Forum readers that the resplendent quetzal is really cool. There's lots I could talk about: I could talk about its brilliant green plumage and long pretty tail; I could talk about how it's the national animal of Guatemala, so beloved that the country's currency is called the quetzal; or I could talk about its role in Mesoamerican mythology. Different people will have different ideas about which tack I should take. Some framings will be more effective than others. But any given framing can be killed by writing a scattered, unfocused, inconsistent essay that tries to talk about everything at once.

Sure, go ahead and get feedback from a few people to catch blunders and oversights. It's pretty awesome that so many clever, busy people will read your Forum posts if you ask them to. But don't Frankenstein your essay by stitching together different visions to address all concerns. It's important to recognize that there's not a single, ideal form a piece can approach if the author keeps gathering feedback. "Design by committee" is a pejorative phrase for a reason.

Thanks to absolutely nobody for giving feedback on this post.

I have some feedback on this post that you should feel free to ignore.

In my experience, when you ask someone for feedback, there's about a 10% chance that they will bring up something really important that you missed. And you don't know who's going to notice the thing. So even if you've asked 9 people for feedback and none of them said anything too impactful, maybe the 10th will say something critically important.

Hm, maybe. I still think there are diminishing returns - the first person I ask is more likely to provide that insight than the 10th.

Under your model, the questions I'd have are (1) whether one person's insight is worth the time-cost to all 10 people, and (2) how do you know when to stop getting feedback, if each person you ask has a 10% chance of providing a critical insight?
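The two views above can be put into rough numbers. This is only a sketch: it assumes every reviewer independently has the same p = 0.10 chance of raising something critical, which neither comment actually claims, and the function names are made up for illustration. Under that assumption, the chance that at least one of n reviewers catches something is 1 - (1 - p)^n.

```python
def p_any_insight(n: int, p: float = 0.10) -> float:
    """Chance that at least one of n independent reviewers raises a critical point."""
    return 1.0 - (1.0 - p) ** n


def marginal_gain(n: int, p: float = 0.10) -> float:
    """Extra chance contributed by the n-th reviewer, given n - 1 were already asked."""
    return p_any_insight(n, p) - p_any_insight(n - 1, p)


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(n, round(p_any_insight(n), 3), round(marginal_gain(n), 3))
```

With these assumptions, ten reviewers give roughly a 65% chance of catching a critical issue, and the tenth reviewer adds about 4 percentage points compared with 10 for the first. That is the diminishing-returns point. At the same time, if the first nine found nothing, the tenth still has the full 10% chance, so the two comments are compatible; what is left open is whether that chance is worth the extra reader's time.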

I think it's possible there's too much promotion on the EA Forum these days. There are lots of posts announcing new organizations, hiring rounds, events, or opportunities. These are useful but not that informative, and they take up space on the frontpage. I'd rather see more posts about research, cause prioritization, critiques and redteams, and analysis. Perhaps promotional posts should be collected into a megathread, the way we do with hiring.

In general it feels like the signal-to-noise ratio on the frontpage is lower now than it was a year ago, though I could be wrong. One metric might be number of comments - right now, 5/12 posts I see on the frontpage have 0 comments, and 11/12 have 10 comments or fewer.

Agreed- people should look at https://forum.effectivealtruism.org/allPosts and sort by newest and then vote more as a public good to improve the signal to noise ratio.

We might also want to praise users who have a high ratio of highly upvoted comments to posts - here's a ranking:

1. khorton
2. larks
3. linch
4. max_daniel
5. michaela
6. michaelstjules
7. pablo_stafforini
8. habryka
9. peter_wildeford
10. maxra
11. jonas-vollmer
12. stefan_schubert
13. john_maxwell
14. aaron-gertler
15. carlshulman
16. john-g-halstead
17. benjamin_todd
18. greg_colbourn
19. michaelplant
20. willbradshaw
21. wei_dai
22. rohinmshah
23. buck
24. owen_cotton-barratt
25. jackm

https://docs.google.com/spreadsheets/d/1vew8Wa5MpTYdUYfyGVacNWgNx2Eyp0yhzITMFWgVkGU/edit#gid=0

needs to grant access.

One thing that confuses me is that the karma metric probably already massively overemphasizes rather than underemphasizes the value of comments relative to posts. Writing 4 comments that have ~25 karma each probably provides much less value (and certainly takes me much less effort) than writing a post that gets ~100 karma.

There is a population of highly informed people on the forum.

The activity of these people on the forum is much less "share new insights or introduce content", but instead they guide discussion and balance things out (or if you take a less sanguine view, "intervene").

This population of people, who are very informed, persuasive and respected, is sort of a key part of how the forum works and why it functions well. Like sort of quasi-moderators.

This comment isn't super directly relevant, but I guess awareness of this population of people, as opposed to some technology or maybe "culture" thing, is good to have and is related to the purpose of this thread.

Also many of those people are on that list and it's good to be explicit about their intentional role, and not double count them in some way.

It seems like there should be a way to get a graph of comments on front page posts over time going? :)
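A rough sketch of how the comment-count metric from the original take could be tracked over time. Everything here is an assumption for illustration: the `Snapshot` type, the idea of saving daily frontpage snapshots, the date, and the comment counts are all made up (the counts are chosen only to reproduce the 5/12 and 11/12 figures above). It doesn't touch the Forum's actual API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Snapshot:
    """Hypothetical record: comment counts of the frontpage posts on one day."""
    day: date
    comment_counts: list[int]


def comment_shares(snapshot: Snapshot, threshold: int = 10) -> tuple[float, float]:
    """Return (share of posts with 0 comments, share with <= threshold comments)."""
    n = len(snapshot.comment_counts)
    if n == 0:
        return (0.0, 0.0)
    zero = sum(1 for c in snapshot.comment_counts if c == 0)
    low = sum(1 for c in snapshot.comment_counts if c <= threshold)
    return (zero / n, low / n)


# Made-up data: 12 frontpage posts, 5 with no comments, 11 with 10 or fewer.
example = Snapshot(date(2024, 1, 1), [0, 0, 0, 0, 0, 1, 2, 3, 5, 8, 10, 25])

if __name__ == "__main__":
    zero_share, low_share = comment_shares(example)
    print(f"{example.day}: {zero_share:.0%} with 0 comments, "
          f"{low_share:.0%} with <= 10 comments")
```

Running `comment_shares` over one snapshot per day would give the series to graph. Whether comment counts are a good proxy for signal-to-noise is a separate question.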

The Against Malaria Foundation is described as about 5-23x more cost-effective than cash transfers in GiveWell's calculations, while Founders Pledge thinks StrongMinds is about 6x more cost-effective.

But this seems kind of weird. What are people buying with their cash transfers? If a bednet would be 20x more valuable, then why don't they just buy that instead? How can in-kind donations (goods like bednets or vitamins) be so much better than just giving poor people cash?

I can think of four factors that might help explain this.

I think each of these probably plays a role. However, a 20x gap in cost-effectiveness is really big. I'm not that convinced that these factors are strong enough to fully explain the differential. And that makes me a little bit suspicious of the result.

I'd be curious to hear what others think. If others have written about this, I'd love to read it. I didn't see a relevant question in GiveWell's FAQs.

Meta-level: Great comment. I think we should be starting more of a discussion around theoretical high-level mechanisms of why charities would be effective in the first place - I think there's too much emphasis on evidence of 'do they work'.

I think the main driver of the effectiveness of infectious disease prevention charities like AMF and deworming might be that they solve coordination/public goods problems: if everyone in a certain region uses a health intervention, it is much more effective in driving down overall disease incidence. Because of the tragedy of the commons, people are less likely to buy bed nets themselves.

For micronutrient charities it is lack of information and education - most people don't know about and don't understand micronutrients.

Flagging that there were charities - DMI and Living Goods - which address these issues, and so, if these turn out to explain most of the variance in the cost-effectiveness differences you highlight, then these need to be scaled up. I never quite understood why a DMI-like charity with ~zero marginal cost-per-user couldn't be scaled up more until it's much more cost-effective than all other charities.

That first factor isn't just "lack of information," it is also the presence of cognitive biases in risk assessment and response. Many people -- in both higher- and lower-income countries -- do not accurately "price" unlikely but catastrophic-to-them/their-family risks. This is also true on a societal level. Witness, for instance, how much the US has spent on directly and indirectly reducing the risk of airplane hijackings over the last 20 years vs. how much we spent on pandemic preparedness.

One of the most common lessons people said they learned from the FTX collapse is to pay more attention to the character of people with whom they're working or associating (e.g. Spencer Greenberg, Ben Todd, Leopold Aschenbrenner, etc.). I agree that some update in this direction makes sense. But it's easier to do this retrospectively than it is to think about how specifically it should affect your decisions going forward.

If you think this is an important update, too, then you might want to think more about how you're going to change your future behaviour (rather than how you would have changed your past behaviour). Who, exactly, are you now distancing yourself from going forward?

Remember that the challenge is knowing when to stay away from someone basically because they seem suss, not because you have strong evidence of wrongdoing.