TLDR

This is a report on the Machine Learning Safety Scholars program, organized over the summer by the Center for AI Safety. 63 students graduated from MLSS: the list of graduates and final projects is here. The program was intense, time-consuming, and at times very difficult, so graduation from the program is a significant accomplishment.

Overall, I think the program went quite well and that many students have noticeably accelerated their AI safety careers. There are certainly many areas for improvement in a future iteration, and many are detailed here. We plan to conduct follow-up surveys to determine the longer-run effects of the program.

This post contains three main sections:

- This TLDR, which is meant for people who just want to know what this document is and see our graduates list.

- The executive summary, which includes a high-level overview of MLSS. This might be of interest to students considering doing MLSS in the future or anyone else interested in MLSS.

- The full report, which was mainly written for future MLSS organizers, but which I’m publishing here because it might be useful to others running similar programs.

The report was written by Thomas Woodside, the project manager for MLSS. “I” refers to Thomas, and the views expressed do not necessarily represent those of the Center for AI Safety, its CEO Dan Hendrycks, or any of our funders.

Visual Executive Summary

MLSS Overview

MLSS was a summer program, mostly for undergraduate students, that aimed to teach the foundations of machine learning, deep learning, and ML safety. The program ended up being ten weeks long and included an optional final project. It incorporated office hours, discussion sections, speaker events, conceptual readings, paper readings, written assignments, and programming assignments. You can see our full curriculum here.

Survey Results

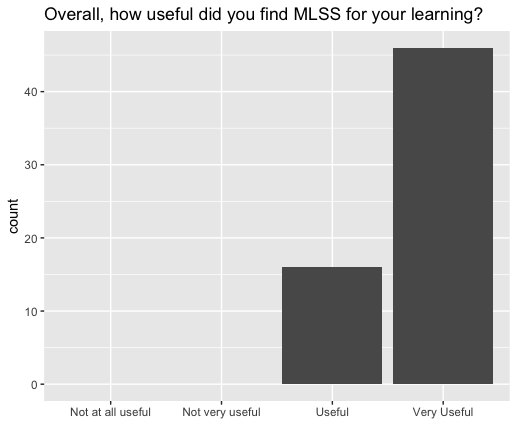

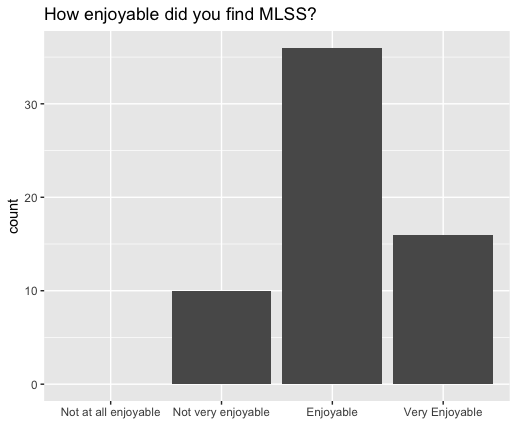

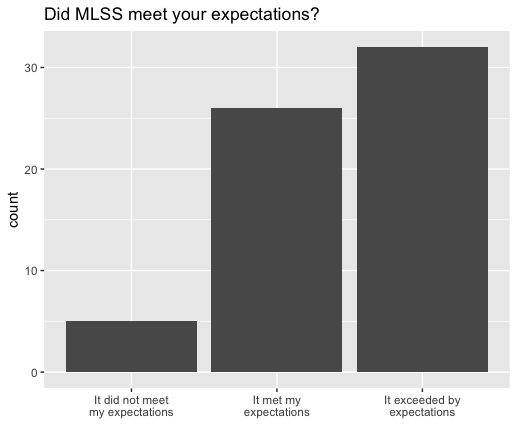

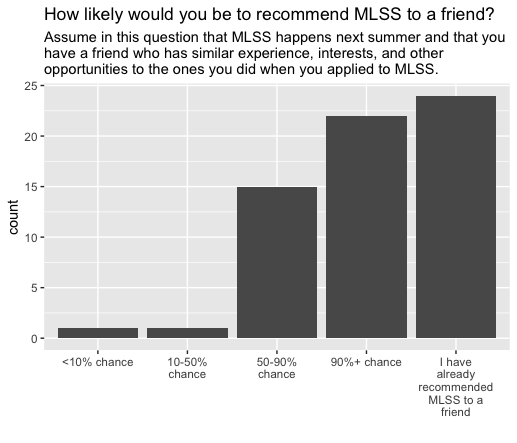

All MLSS graduates filled out an anonymous survey. What follows is a mostly visual depiction of the program through the lens of these survey results.

Overall Experience in MLSS

Of course, this sample is biased towards graduates of MLSS, since we required them to complete the survey to receive their final stipends (and non-graduates didn’t get final stipends). However, it seems clear from the way that people responded to this survey that the majority had quite positive opinions of our program.

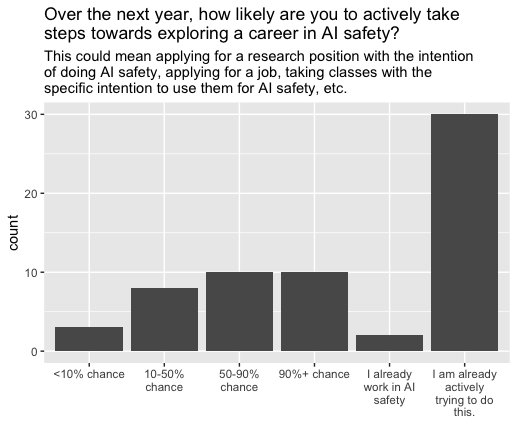

We also asked students about their future plans:

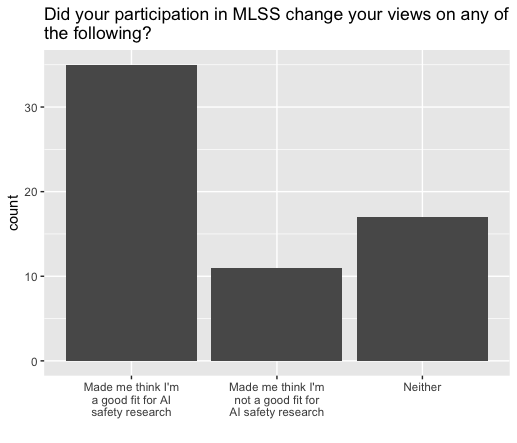

The results suggest that many students are actively trying to work in AI safety and that their participation in MLSS helped them become more confident in that choice. MLSS did decrease some students’ desire to research AI safety. We do not think this is necessarily a bad thing, as many students were using MLSS to test fit; presumably, some are not great fits for AI safety research but might be able to contribute in some other way.

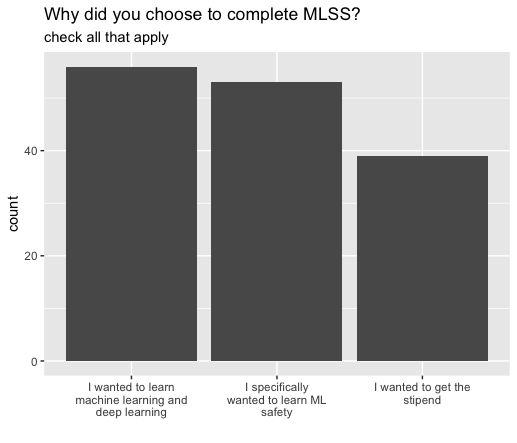

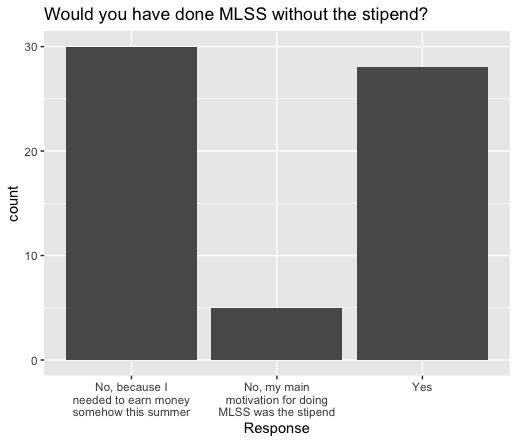

We asked students about why they chose to do the program:

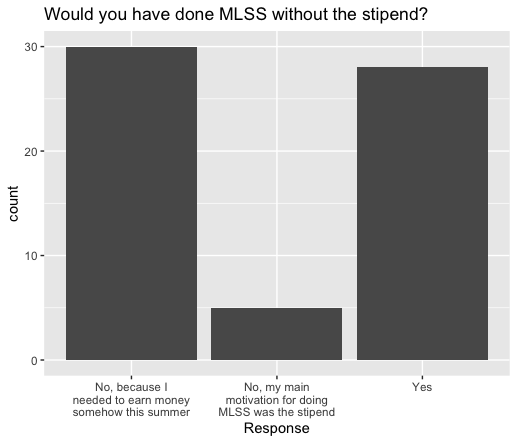

We conclude that the stipend was extremely useful to students, and allowed many students to complete the program who wouldn’t have otherwise been able to. Nearly all graduates said they were interested in learning about ML safety in particular.

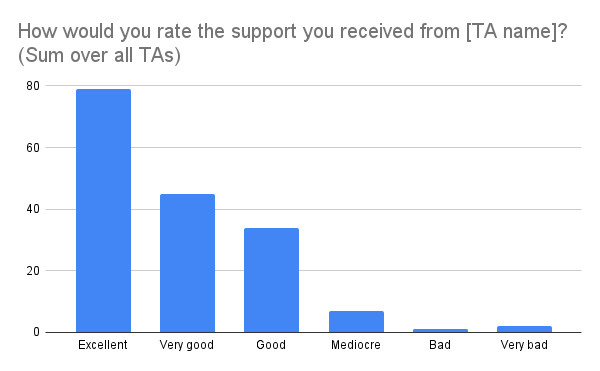

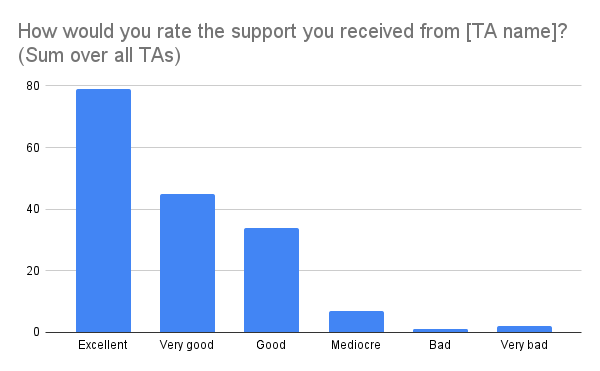

We asked students about the quality of the support from their TAs:

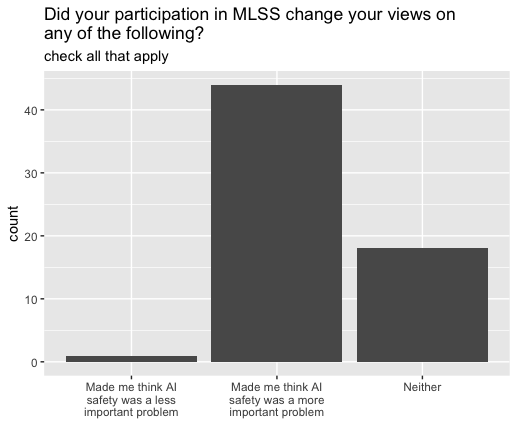

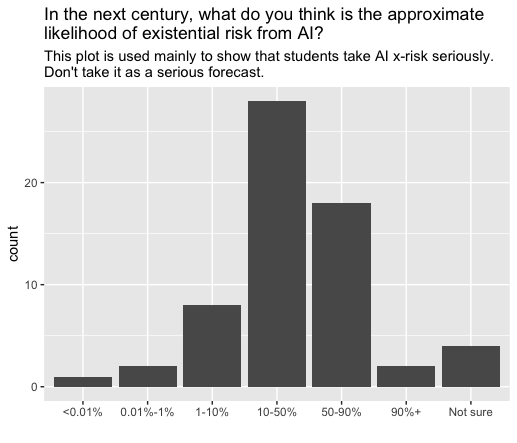

We asked students a few questions about what they thought about AI x-risk:

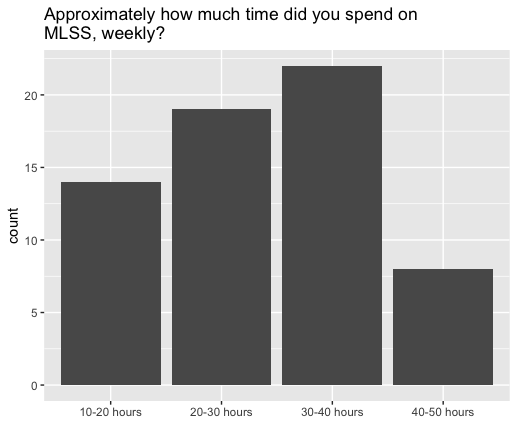

Lastly, we asked how many hours students spent in the course:

Concluding Thoughts

To a large extent, we think these results speak for themselves. Students said they got a lot out of MLSS, and I believe many of them are very interested in pursuing AI safety careers that have been accelerated by the program. The real test of our program, of course, will come when we survey students in the future to see what they are doing and how the course helped them. We plan to do this in the spring.

Full Report

This section contains my full analysis of MLSS, including strengths and areas for improvement.

About our students

Here is some information on our graduates:

- Many different schools: Georgia Institute of Technology (6), UC Berkeley (5), Stanford (3), Yale (3), Australian National University (2), MIT (2), NYU Abu Dhabi (2), UCLA (2), UCL (2), University of Toronto (2), Vrije Universiteit Amsterdam (2), Brown (1), Columbia (1), Dublin City University (1), IIT Delhi (1), Institut Teknologi Bandung (1), London School of Economics (1), Louisiana State University (1), McGill (1), McMaster (1), NUS (1), Oxford Brookes (1), Pitzer (1), Reed (1), Trinity College Dublin (1), TU Delft (1), UC Davis (1), UC Irvine (1), UCSD (1), University of Bristol (1), Cambridge (1), University of Copenhagen (1), University of Michigan (1), Oxford (1), University of Queensland (1), University of Rochester (1), UT-Austin (1), University of Waterloo (1), University of Zagreb (1), UW-Madison (1)

- Many different countries: USA (32), UK (8), Canada (5), Australia (3), Netherlands (2), Ireland (2), India (2), Singapore (2), Croatia (1), Denmark (1), Estonia (1), Indonesia (1), Turkey (1), UAE (1)

- Class year breakdown (the class year the students are currently in as of Fall 2022)

  - First year undergraduate: 18%

  - Second year undergraduate: 32%

  - Third year undergraduate: 21%

  - Fourth year undergraduate: 15%

  - Already completed undergraduate: 15%

- Gender breakdown

  - Man: 78%

  - Woman: 19%

  - Nonbinary/other: 3%

- 66% had previously read a book on AI safety.

- 29% had previously completed AGI Safety Fundamentals or a similar reading group.

- 48% had previously taken an online ML course.

- 34% had previously taken an ML/DL course in their university.

- 26% had previously done ML in a job.

- 90% had previously had some interaction with EA or AI safety.

Anonymous graduation survey

At the end of the program, we administered an anonymous survey to all graduates. We also made them say in another (non-anonymous) survey that they had completed the anonymous survey. Completion of the non-anonymous survey was required in order for students to receive their stipend. As a result, we successfully achieved a 100% response rate for our anonymous survey. All survey data in this report is based on this anonymous survey.

Overview of the Curriculum

You can also view the final curriculum we used here.

MLSS was split into four main sections:

- Machine learning (weeks 1-2)

- Deep learning (weeks 3-5)

- Introduction to ML Safety (weeks 6-8)

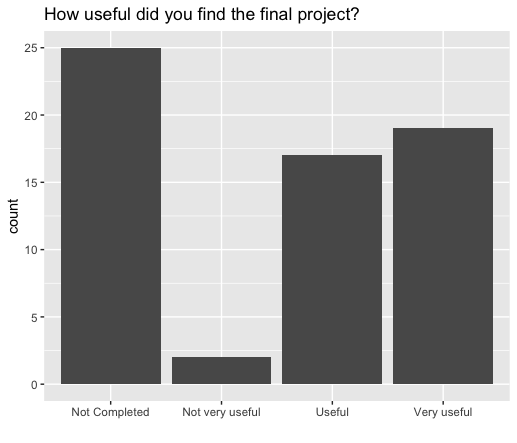

- Final Project (originally week 9, ended up being weeks 9-10)

The final project was optional, but students who chose not to complete it received a stipend that was $500 lower.

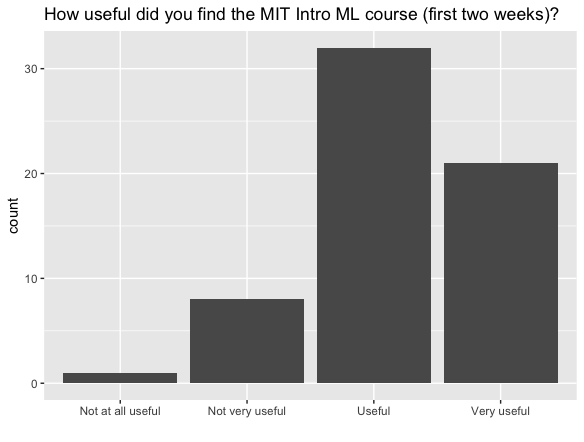

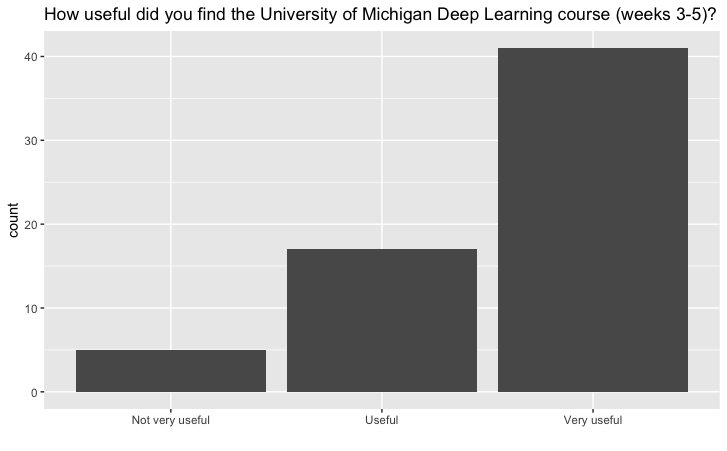

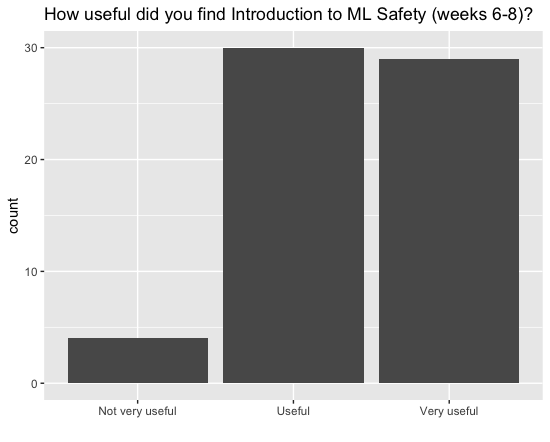

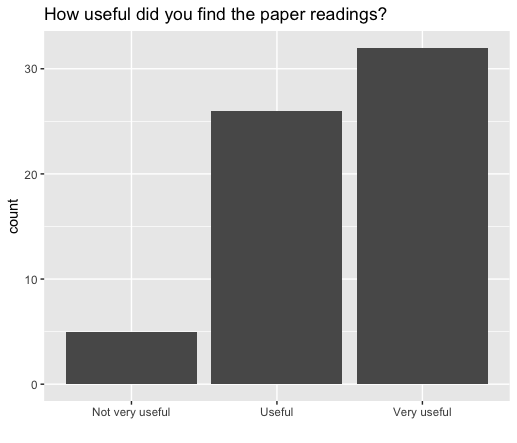

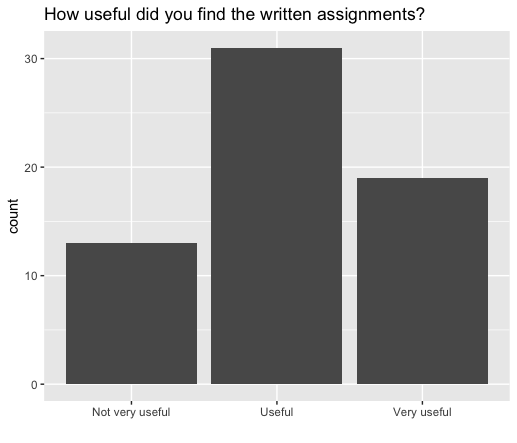

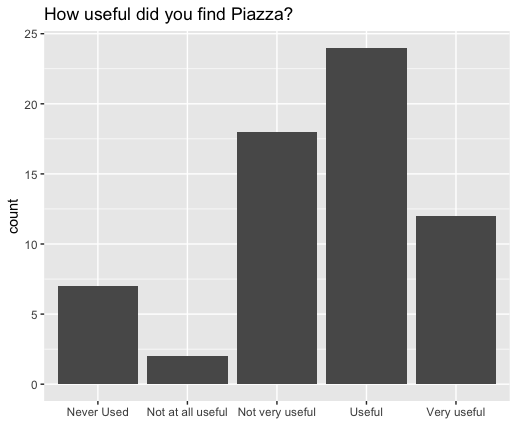

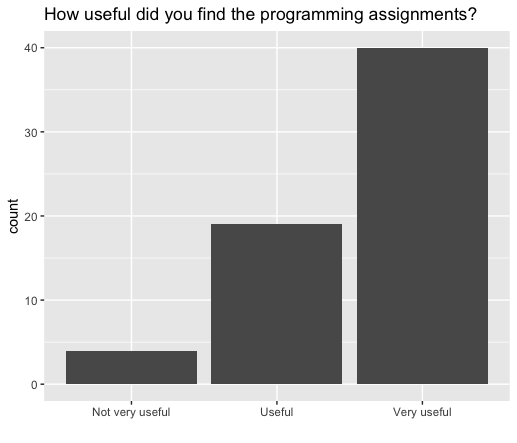

The following graphs show survey data on student opinions of each component:

Components of MLSS

MLSS included many moving parts, which I’ll detail here.

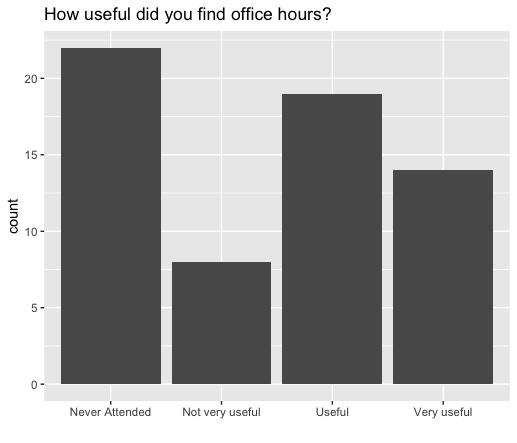

TA office hours

Each TA had two office hours every week, for a total of 16 office hours per week. Students could attend any office hours they wanted. We spaced out office hours so there were roughly a proportionate number of office hours in each timezone region. That meant that we had office hours at all times of day, but they were concentrated in the daytime for the Americas.

Most students attended office hours, and found them useful:

Most office hours were well-attended, although sometimes office hours had too many students, or not very many, depending on the time of week and day.

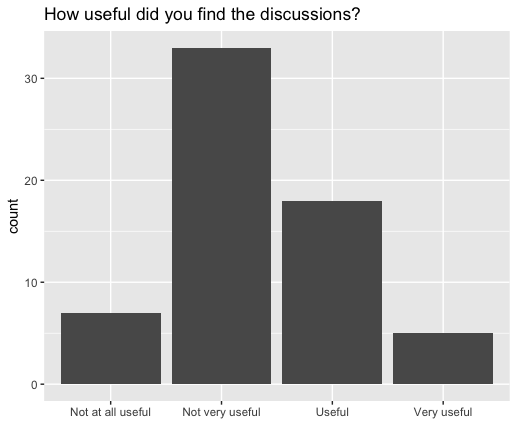

Discussion Sections

Each week, we had three one-hour discussion sections. Students were required to attend at least one. We didn’t have enough TAs to facilitate small group discussions, so we had TAs run the overall meeting, put students into breakout rooms, and then move between the breakout rooms. We don’t think the discussion sections went that well; they really needed dedicated facilitators. Though some students said they got a lot from discussion sections, others reported that other students often had their cameras and videos off in the breakout sessions, so they couldn’t talk to anyone. In the future, we will only do discussion sections if we have a designated facilitator for them.

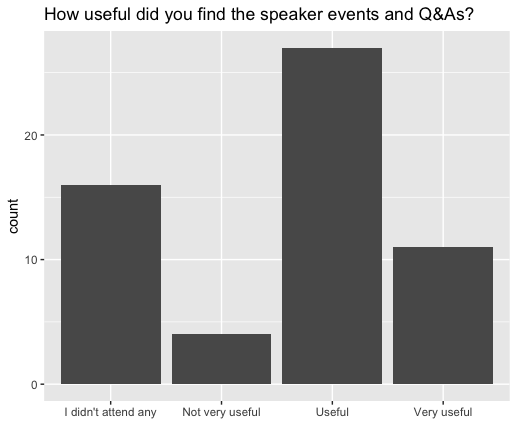

Speaker Events

We held speaker events and Q&As throughout the program. Specifically, we had events with Joseph Carlsmith, Victoria Krakovna, David Krueger, Dan Hendrycks, Sam Bowman, Alex Lawsen, and Holden Karnofsky. Most events were attended by 15-20 students. Overall, most students attended at least one speaker event, and found them fairly helpful. In general, I thought that students asked very good questions.

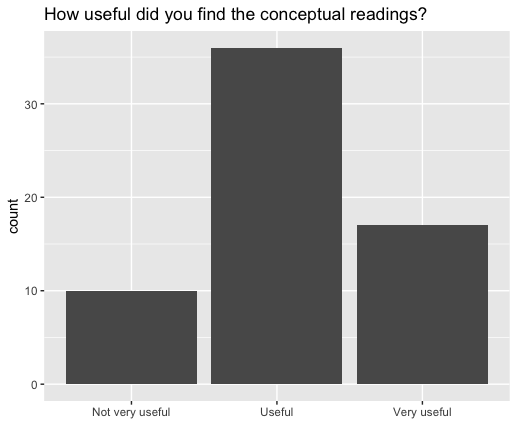

Conceptual readings

We gave students conceptual readings every week, to be discussed in their discussions. By “conceptual,” we don’t mean particular conceptual research agendas, but rather broader readings like Joseph Carlsmith’s Is Power-Seeking AI an Existential Risk? I thought the readings were quite important, since the online courses, focused as they are on ML, do not give the same foundation. Students mostly liked them.

Paper readings

Paper readings were introduced partway through the course at the suggestion of Dan Hendrycks. Students read papers (initially ML papers, later safety-specific papers) and had to summarize each paper and give a non-trivial strength and weakness of it. Students overwhelmingly liked paper readings, with many mentioning them specifically in freeform responses.

Written Assignments

We assigned students a number of written assignments throughout the course. First, we required students to respond to paper readings, and we also had designated written assignments for the ML safety portion of the course. We think these assignments were important for accountability.

Piazza

We used the Piazza question-answering platform to allow students to ask written questions outside of office hours. I assigned one TA each week to be in charge of answering these questions. I think this was useful, but it is certainly no substitute for office hours, since more involved questions are difficult to answer in writing.

Programming assignments

All three portions of the course included programming assignments. These were meant to ensure students had a solid foundation in the concepts (it is one thing to think you understand something, and another to know how to implement it). Students found these assignments quite challenging, but overwhelmingly found them useful. There were some bugs in some of the programming assignments, which led to some people not liking them, but we hope to fix these in a future iteration.

Goals of MLSS

The MLSS courses are all online (Introduction to ML Safety was not made for MLSS, and would have been released anyway). That means that anyone who wants to can self-study the courses. In addition, people can apply to the Long-Term Future Fund or other funding sources to support their self-study. As such, MLSS needed to provide a value add over these existing options.

Help students understand the importance of AI safety

Description

In my view, it’s easy for students to be told of the importance of AI safety but not be given dedicated time to really think through various arguments that have been made. By requiring students to engage with AI safety conceptual and technical materials, and respond to them critically, we hoped to provide students with a more grounded understanding.

What went well

Students were very engaged with the AI safety content, and rated the Introduction to ML Safety course highly. In addition, 44 out of 63 graduates said MLSS made them think AI safety was a more important problem, while only 1 said it made them think it was less important.

Areas for improvement

I think we could have included more counterarguments to AI risk arguments. For instance, I would now include Katja Grace’s counterarguments if I were running this today.

Give students technical fluency in machine learning and deep learning

Description

Knowledge of deep learning is important for all kinds of AI safety research (in my view, this includes conceptual research). By giving students time to understand deep learning concepts, we hoped to provide them with this knowledge.

What went well

Students rated the deep learning portion of the course highly, with 62% rating it “very useful.” They by and large completed the assignments, and from anecdotes it seems like students learned a lot in these areas. Students mostly also did well on a final exam we administered. Lastly, students especially liked that they were assigned published papers to read, with many noting that it made them feel much more confident and less intimidated to read papers.

Areas for improvement

The deep learning content was too fast paced, whereas the machine learning content was less useful for people. In the future, I would recommend cutting out the machine learning portion and lengthening the amount of time allotted for the deep learning portion.

Help students understand the latest topics in ML-focused AI Safety research

Description

There is a lot of research that goes on in machine learning safety. We hoped to give students an overview of this research and equip them with the tools to stay up to date with it on their own.

What went well

Students liked the ML safety portion of the course and rated it as useful. In addition, their exam results and reading responses suggest that they had some grasp of the material.

Areas for improvement

The difficulty of the deep learning content meant that people spent less time on the ML safety component than we had hoped (we estimate that students spent about 2 weeks, rather than the 3 we intended). We would dedicate more time to ML safety in the future.

Provide students accountability to do what they set out to do

Description

It’s very difficult to stay accountable while self-studying. By giving students structure and checking whether they completed their work, we hoped to make it much easier for them to stay on track and accomplish what they set out to accomplish.

What went well

47% of students reported spending more than 30 hours per week on MLSS, and 78% spent more than 20 hours per week. This indicates that students were sufficiently motivated to spend a significant portion of their time doing the necessary work for the program. We also anecdotally had several students tell us that MLSS provided accountability for them.

Areas for improvement

Our deadline process could have been improved (see below). In addition, we had a significant number of students (40%) not complete the course (more on this below).

Streamline access to funding

Description

Many students can’t spend their summer self-studying ML without any kind of income source. It’s possible to apply for funding, but many people are intimidated by this. In addition, many funders don’t have the same level of monitoring of grant recipients as we do in MLSS, so they might be more wary of giving grants to people who don’t have a track record. During MLSS, we ensured students completed all assignments before dispensing grants.

What went well

We thought this went quite well. We had aimed for a program that could support people who didn’t have the financial means to go without any income source, while avoiding having many students who were mainly motivated by money. When we asked, most of our graduates said that they wouldn’t have done MLSS without the stipend, indicating that the stipend made MLSS significantly more accessible to people.

Areas for improvement

There were five students who said they were mainly motivated by the stipend in their decision to do MLSS, but we think this is a small price to pay for being able to graduate 30+ students who were motivated but otherwise couldn’t have done the program. In addition, it appeared that at least two students enrolled in the program with the intention of plagiarizing on all of the assignments and no interest in the content. Presumably these students wouldn’t have done this if we hadn’t included a stipend. More on plagiarism is below; needless to say, these particular students were expelled from the program and did not receive stipends.

Support from TAs

Description

If you get stuck while self-studying, it’s possible to get unstuck by consulting other online resources. But this can be a slow and demotivating process. Having designated TA office hours can help speed up the learning process.

What went well

I thought our TAs were very good. Students for the most part did as well. I think it was very positive that we had the number of office hours that we did, because they were pretty well utilized, and I would advise anyone doing a similar program to make sure office hours are plentiful and run well.

Areas for improvement

Based on feedback, there are a few ways we could improve office hours. First, some students were not familiar with the concept of “office hours” and thus were not sure what they were. Having a more specific description of office hours would be great. Second, sometimes students were struggling and found it difficult to get all their questions answered at office hours. In the future, I would probably have TAs offer schedulable office hours in addition to open office hours, so students can directly book a block of time to talk to a TA.

Providing a more legible credential

“Self-studying” is very hard to put on your resume. MLSS might be a much more legible credential, particularly among those who know about it. Even outside the community, having a formal program with a stipend and an association with the Center for AI Safety is better than no credential at all. It’s relatively unclear how legible MLSS graduation will be, but maybe this post can help with that!

A community of students

Description

Having other students available to cowork and ask questions can be pretty useful. Having other students in the program also provides a sense of community you can’t get while self-studying.

What went well

Some students made heavy use of coworking, and the Slack was pretty active. Several groups of students who hadn’t known each other beforehand also worked together, indicating there was some sense of community.

Areas for improvement

I think we could have done more to build community; I generally prioritized other aspects of the program, and though I don’t really regret that, I would like to spend more time on this in the future. We had one social at the beginning of the program that was pretty well attended, but nothing after that. It’s very hard to build a virtual community!

Statistical Analyses

Grades

We performed a linear regression that attempted to predict student grades from other factors. There were no significant correlations with any of the following variables (considering only graduates):

- Whether the student had taken an online ML course

- Whether the student had taken multivariable calculus

- Whether the student had taken advanced college level math courses

- Whether the student had completed AGI Safety Fundamentals or a similar reading group

- The gender of the student (based on subjective evaluation, as we did not have self-reported data for all students until the completion survey)

- Whether or not the student was from the USA

- Various factors related to our analysis of student applications (these won’t be noted here as we don’t want future applicants to be able to use this to game our application)

Attrition

We had attrition from the program for several reasons:

- Some students turned out to not have time for the program. Some of these students had thought we were exaggerating when we said the program was 40 hours per week.

- Some students had family/health issues that prevented them from finishing the program.

- Some students dropped out for plagiarism-related reasons.

- Some students dropped out of the final project because they had school or had fallen behind.

In total we had 41 students drop out of the program. Below are the reasons students gave (these reasons are not mutually exclusive):

- Personal/family/health reasons (13)

- Wanted to spend time on other things (12)

- Content more difficult or time consuming than expected (8)

- Student had plagiarized (8)

- Didn’t submit the first assignment and never communicated with us (4)

- Work/school that took up time (3)

- Confused due to miscommunications from staff around expectations (2)

- Unspecified (6)
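As a rough cross-check of the numbers above, the sketch below recomputes the overall attrition rate and the share of dropouts citing each reason. It assumes that every enrolled student either graduated (63) or dropped out (41), which this report does not state explicitly; the variable names are illustrative.

```python
# Counts are taken from this report. Treating graduates + dropouts as the
# total enrollment is an assumption made for this sketch.
graduates = 63
dropouts = 41
enrolled = graduates + dropouts

reasons = {
    "Personal/family/health reasons": 13,
    "Wanted to spend time on other things": 12,
    "Content more difficult or time consuming than expected": 8,
    "Student had plagiarized": 8,
    "Didn't submit the first assignment and never communicated": 4,
    "Work/school that took up time": 3,
    "Confused due to miscommunications from staff": 2,
    "Unspecified": 6,
}

attrition_rate = dropouts / enrolled
print(f"Enrolled (assumed): {enrolled}")
print(f"Attrition rate: {attrition_rate:.0%}")

# Reasons are not mutually exclusive, so the mentions can exceed the
# number of students who dropped out.
total_mentions = sum(reasons.values())
print(f"Total reasons given: {total_mentions} across {dropouts} dropouts")

for reason, count in reasons.items():
    print(f"{reason}: {count} ({count / dropouts:.0%} of dropouts)")
```

Under that assumption, the enrollment comes to 104 students and the attrition rate to roughly 39%.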

We think much of our attrition could be solved by being more explicit about the time commitment for the program. For this iteration, we asked people “Are you available 30-40 hours per week for the duration of this program?” and they needed to check yes. However, in conversations many students had thought that this must be an exaggeration, and had enrolled expecting it to be less than that. For future programs, we would probably add a further disclaimer that says something like “In the last iteration, many students read this and assumed that it must be an exaggeration. But then it really did take them 30-40 hours a week. Remember that all students in this program are very strong, so you should expect it to be a challenging and time consuming program.”

We ran an analysis on drop out rates from the program using the same factors we used to analyze grades. We found that women were significantly more likely to drop out of the program (odds ratio: 5.5 (80% CI: 2.6-12)). None of the other factors were significantly related to dropout rates.
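For readers unfamiliar with odds ratios, here is a minimal sketch of how one can be computed from a simple 2x2 table, together with a Wald confidence interval on the log-odds scale. The counts are hypothetical placeholders, not the MLSS data, and the function name `odds_ratio_with_ci` is illustrative. Our figure came from an analysis that included several other factors, so this simpler calculation should not be expected to reproduce it.

```python
import math
from statistics import NormalDist


def odds_ratio_with_ci(a, b, c, d, confidence=0.80):
    """Odds ratio and Wald confidence interval for a 2x2 table.

    a: group 1 with the outcome,  b: group 1 without the outcome
    c: group 2 with the outcome,  d: group 2 without the outcome
    """
    odds_ratio = (a / b) / (c / d)
    # Large-sample standard error of log(odds ratio)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    # Two-sided critical value (about 1.28 for an 80% interval)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    log_or = math.log(odds_ratio)
    lower = math.exp(log_or - z * se_log_or)
    upper = math.exp(log_or + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts for illustration only (NOT the MLSS data)
or_, lo, hi = odds_ratio_with_ci(a=10, b=10, c=10, d=40)
print(f"odds ratio: {or_:.1f} (80% CI: {lo:.1f}-{hi:.1f})")
```

An interval that excludes 1 suggests a difference in odds at the chosen confidence level. Note that an 80% interval is narrower than the more commonly reported 95% interval.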

To try to see why women dropped out at higher rates, we analyzed the gender breakdown of the reasons above:

- Personal/family/health reasons (20% of women/9% of men)

- Wanted to spend time on other things (13%/8%)

- Content more difficult or time consuming than expected (13%/11%)

- Student had plagiarized (17%/5%)

- Didn’t submit the first assignment and never communicated with us (3%/4%)

- Work/school that took up time (3%/3%)

- Confused due to miscommunications from staff around expectations (3%/1%)

- Unspecified (7%/5%)

It appeared that women more frequently dropped out due to other commitments and personal, family, or health reasons. There was also a higher incidence of (detected) plagiarism among women. I asked for feedback from students who dropped out of the program, and it was fairly similar to feedback overall. In particular, many students wished the program had a lower workload, and some suggested having a lower-workload track for students with more outside commitments. While we were fairly accommodating of people who needed extensions for personal reasons, I think I could have done much better at communicating that and at actively offering extensions to students who needed them. I would also be more upfront about policies around assignments. For example, we ended up allowing students to not submit two assignments, but it would have been beneficial to make this known from the start. Lastly, more individualized support, especially in the early part of the program, would go a long way to make sure everyone felt supported. In retrospect, I should have spent more time actively following up with students who were falling behind, especially women.

Additional user interviews and qualitative analysis of attrition are ongoing.

Survey Results

We also tested the following survey variables in logistic regressions on gender (this time self-reported), class year, and regional location (conditional on graduation):

- Completion of the final project

- Said that MLSS was overall “very useful”

- Said that MLSS was overall “very enjoyable”

- Has already recommended MLSS to a friend, or 90%+ chance of doing this

- Already actively taking steps towards an ML safety career, or reported 90%+ chance of doing this in the next year

- Spent 30+ hours on MLSS per week

- Thinks AI x-risk probability is greater than 50%

- Says MLSS made them think AI safety was a more important problem

- Says MLSS made them think they were a good fit for working in AI safety

We only found a few significant correlations:

- People based in Asia were least likely to say they spent 30+ hours a week on the course, with people in Europe most likely to say this.

- 1st and 2nd year undergrads were significantly more likely to say MLSS made them think they were a good fit for ML safety research. Meanwhile, students based in the US were significantly less likely to say this compared with other regions.

Policies

Assignments

Students were originally told they needed to pass all of the assignments in the course. Passing typically meant 70%+, but the bar was occasionally lower for particularly difficult assignments. Ultimately, students were required to pass all but two assignments. I made this change because there were about 6 students who probably wouldn’t have graduated in time without it. In the future, I would probably announce a similar policy upfront, so students could choose for themselves which assignments not to submit.

In cases where students didn’t pass an assignment, they were given an extension and time to resubmit the assignment for a passing score. The vast majority of the time, when students resubmitted they passed.

Plagiarism

In total, we suspected 19 students of plagiarizing on assignments. To protect the integrity of our plagiarism process, I won’t publicly state how we checked for and detected plagiarism, but if you are running a similar program feel free to contact me.

I was surprised by the amount of plagiarism. I suppose I had naively assumed that people involved in EA would not be the type to plagiarize. While some of the students who plagiarized were part of the small group we admitted who didn’t have a background in EA, there wasn’t any significant relationship. However, it was certainly the case that people struggling more with assignments were plagiarizing more. Lastly, it is of course the case that the existence of stipends likely increased plagiarism, as there was money on the line. In my view, this is easily outweighed by the number of very high quality students who wouldn’t have done MLSS without a stipend (see above).

There are a number of ways we could potentially reduce plagiarism in the future:

- Being clearer about our plagiarism policy upfront. At the beginning of the course, I didn’t have an explicit, well-documented plagiarism policy with many examples. This wasn’t good. For example, I spoke with one student who genuinely didn’t seem to know what plagiarism rules are for programming assignments. As such, I would highly recommend making a policy as early as possible.

- A more liberal extension policy. Especially at the beginning, we were quite hesitant to give extensions except for health reasons or similar. Having explicit late days (or something of the sort) might reduce plagiarism, which usually occurs when people are submitting assignments at the last minute.

- Being “scarier” about warning people about plagiarism upfront. Maybe if students read this report, they will think twice before plagiarizing.

Deadlines

Assignments were typically due on Monday a week after their designated week ended. For example, an assignment for week 5 would be due on Monday, the first day of week 7. I did this because it’s a pretty typical schedule for a class.

I had forgotten that students really, really procrastinate, especially with difficult material. Because of this, having too many things due on one day (readings, programming assignments, etc.) was very difficult for students. In university classes, even if students procrastinate on the assignment, they still have to show up to class. For an online course, giving students “a week to finish the week 5 assignment” means that students don’t actually start week 5 at all (including lectures) until week 6, which is not what you want. I would recommend having very frequent, spaced-out assignment deadlines that fall immediately after you want students to complete the work.

Several times during the course I extended deadlines for all students because it was clear they were mostly behind. In one case, I canceled an assignment entirely.

Extensions

MLSS is very fast paced, and there was limited time in the summer for us to extend it after the original end date. As such, I was initially hesitant about giving people extensions if they didn’t have good reasons (e.g. a health reason). I think this was a mistake.

Prior to the course, I didn’t really consider what I would do if a student really tried but wasn’t able to complete an assignment on time. In practice students ended up doing one of the following:

- Asking for an extension, even though our extension policy technically didn’t cover these cases. We would nearly always give an extension in these cases.

- Dropping out of the program. It’s really hard for me to know how many students dropped out of the program because they thought they wouldn’t be able to get an extension, but I suspect this number is nonzero.

- Plagiarizing. When students feel backed into a corner, they will cheat. And students did.

In the future, I would probably do something like the following:

- Having some amount of designated late days that students can use as they see fit, for any reason. This would dramatically reduce the stress on students, and would make it less stressful for students to ask for extensions (since they wouldn’t have to).

- Making it very clear that we can offer additional extensions for health, personal, or family reasons.

I suspect these changes would have helped more students stay in the program, particularly those more hesitant to ask for extensions. It could also have helped improve our retention of women.

Day to Day Operations

Prior to MLSS

We advertised MLSS on the EA Forum, LessWrong, EA Virtual Programs, and various EA groups. Our sense is that word of mouth significantly contributed to knowledge of MLSS, as we got some applicants who we don’t think frequently read any of these sources.

We also needed to hire teaching assistants. I reached out to people in my network for this, and also on the AGI Safety Fundamentals Slack. I evaluated TAs based on their experience with machine learning and ML safety, as well as their track record in teaching (e.g. being a TA for another course). Overall I was expecting hiring good TAs to be much harder than it was; when one TA got sick, it was relatively hard to

We also needed to set stipends for TAs and students. We paid students stipends of $4500 for the 9 weeks of the program ($500/week), which is comparable to what was previously paid by CHAI (this amount is now higher for next year) and what is often paid to undergrads who get summer research grants. We think that in most cases, students who already have the ability to do research themselves should not be doing MLSS, so stipends were relatively low so as to not create any perverse incentives. We asked students afterwards what they thought about the size of the stipends, and 78% said the stipends were around the right size (of course, this is biased, because this is only students who chose to do the program knowing the size of the stipend). I think in the future, we might set the stipend slightly higher, but probably not much higher.

I won’t go into details of our application process in this public post, as I don’t want it to create any distortive effects for future MLSS cohorts. However, you can see the application we used here. We received over 450 applications and admitted just over 100 students.

During MLSS

Every week, TAs needed to grade all student assignments. I would assign each TA to a particular assignment and have them grade it within a week. Many students also asked for extensions; I assigned a TA to handle this specifically and grant extensions as appropriate. It wasn’t uncommon for students to fail to submit assignments, and in this case we had a TA follow up with them to see what kind of extension they would need to complete the assignment. We also needed to follow up in the rare cases that students failed assignments so that they could resubmit.

There was also a very high amount of administrative work, most of which I can’t easily describe here. Managing a program with 100 students involves many edge cases that need to be dealt with all the time. I probably spent at least 30 hours a week on MLSS when it was running.

Miscellaneous other improvement suggestions

This report includes a number of areas of improvement. Here are a few others that don’t really fit in any section:

- Assign students a dedicated TA who checks in with them weekly and asks them how they are doing.

- Some students clearly did not have the programming background required for the course. In the future, the application should more clearly require evidence that the student is comfortable writing a relatively large amount of code.

- Release the MLSS application earlier. Many students had already made other summer plans when we released the application.

Anecdotal comments

Some students also shared anecdotal comments. Here are some that stood out to me (lightly edited for spelling and grammar only):

“When there is so much AI-related content out there and little accountability or reward for learning it, it's hard to stay motivated. Having an authority like MLSS filter out the most important content and give incentives for doing it made learning much easier and more effective. Especially since there were incentives for doing useful work which one might otherwise find tedious, like summarising papers.”

“It was a great course, I reckon if I knew about it sooner I could have set aside the right amount of time this course deserves”

“Obviously, this course was something of an experiment, but I think in the future changing more between runs and less within them would be for the best, even if some people are dissatisfied in the first couple weeks.”

[When asked about their favorite part of MLSS] “paper summaries! I felt like I was roleplaying as a researcher. It was very cool to demystify the idea of a paper and know that they're totally readable and approachable if I give them time. Plus I can now say that I've read a paper”

“I'm still fairly unsure how good a fit I am for technical ML Safety research, though that was not an explicit goal of the program, and I'm not sure there's time to incorporate it.”

“When I participated in the AI Seminar at [school] I felt like some stuff was going over my head and wondered if I would be able to pursue AI Safety as a career. I felt that, with MLSS, I got down to the "source" of what I did not know. I still don't feel I am there 100%, but I feel like I have a great first exposure and more knowledge of where to go to solidify the ideas.”

“I saw a lot of students complain about difficulty, however I don’t think that it should be made easier in future iterations. It is a very positive thing it is challenging!!”

“it probably accelerated my knowledge of AI safety by at least 1 year, maybe more”

“I am currently in the planning process for an ML safety reading group at my Uni, which would not be the case if not for this amazing opportunity”

“I feel as though MLSS has not only accelerated my knowledge in AI by years, but it has also instilled in me several new characteristics. I feel far more open to rigorously considering ideas that make me uncomfortable. I have a newfound confidence in going out and reading papers, brainstorming research, and following through with ideas I generate. I also have more confidence in myself and my own capabilities to learn and participate in the field. I feel more motivated than ever to keep learning and start contributing and for that, I am immensely grateful.”

“I came into the program not really knowing what to expect, and I can honestly say that I am more than impressed.”

“You've produced a sizable batch of people excited about working on saving the universe and doing it pragmatically - so I'd say this summer was a huge success.”

“A big thank-you to all the wonderful TAs! Your patience, understanding and helpfulness have meant the world to me. From the course, I’ve learned more than I have in years. MLSS has encouraged me to pursue a future in AI. I’m beyond grateful to MLSS for giving me so many opportunities.”

“I would have paid to have a group do what MLSS has done for me!”

“This program has definitely altered the course of my career.”

Conclusion

I would congratulate you for reaching the end of the report, but statistically, you probably just scrolled directly to the bottom to read this conclusion.

Overall, I’m really happy with how MLSS turned out. There were times when I was really worried it wasn’t going to go well, but I think that it really did. I’m extremely excited about all of the students in the program, and I think that some of them are going to make great AI safety researchers. There are certainly many areas for improvement, and I think that the next MLSS can be even better than this one.

I think it’s right for me to end this report in the way I started it: by again linking the list of all the graduates. Every one of them completed a very rigorous and challenging program and is potentially a great candidate for an entry-level AI safety role.

Acknowledgements

I would like to thank all the students in MLSS for providing helpful feedback throughout the course and bearing through some hiccups. You all really made this program worth it for me.

I would like to thank the fantastic TAs: Abra Ganz, Ansh Radhakrishnan, Arush Tagade, Daniel Braun, JJ Balisanyuka-Smith, Joe Kwon, Kaivu Hariharan, Nikiforos Pittaras, and Mohammad Taufeeque. The course materials and policies were designed in close collaboration with Dan Hendrycks, who was an invaluable resource throughout the program. Thank you also to Oliver Zhang for occasionally providing operational support for the program.

While working on this program, I was personally supported by a grant from the Long-Term Future Fund in the first half and as an employee of the Center for AI Safety in the second half.

Lastly, I would like to thank the FTX Future Fund regranting program for their financial support of MLSS.

Thanks so much for writing and sharing this! I think postmortems like this can be really useful.

Also, thanks for this: "I would congratulate you for reaching the end of the report, but statistically, you probably just scrolled directly to the bottom to read this conclusion." :))

Out of interest, were you considering students working together and thus submitting similar work as being plagiarism? Or was it more just a lot of cases of some students fully copy/pasting another's work against their wishes?

Sounds like it was a very successful program!