Summary

- There may be some blindspots in EA research limiting its potential for impact.

- These may be connected to reliance on quantitative methods, demographics and limited engagement with stakeholders, both inside and outside of EA.

- Stakeholder-engaged research with qualitative components offers a pathway for EA research organisations to compensate for this, and to conduct more useable and impactful research.

- Good Growth’s past work using stakeholder-engaged methods in community building (with EA Singapore) and operations in EA (with EA Norway) illustrates how this might be done.

- Techniques that might be particularly useful include user research methods (user profiling, experience mapping), better use of qualitative methods (interviews, focus groups), and stakeholder-engaged processes for designing research.

- If you’re part of a local EA group or organisation and are interested in applying stakeholder-engaged methods in your research, we encourage you to reach out to us at team@goodgrowth.io

Series Introduction

This will be a series of blog posts that explores the state of research in Effective Altruism and looks at the potential integration and expansion of some alternative research methods into the movement. The focus will be on methods that engage stakeholders (especially qualitative methods used in user, design and ethnographic research), and we’ll explain how Good Growth have been using these methods over the last few years.

- The first post will give an overview of research in EA as we see it: the strengths and potential weaknesses, how general epistemics and worldview formation interact with EA research, and how we’re hoping to approach this. Then it will discuss the use of stakeholder-engaged research methods in diagnosing issues in the EA-meta and community-building space.

- The second post will discuss the application of user and qualitative research to a specific EA cause area: the farmed animal welfare and alternative protein space in Asia.

- The final post will look at how we can integrate these methods into EA research in the future and how we can test the role of user and qualitative research methods in the research-to-action process. We’ll also look at our current thinking on how to co-create useful and high-impact research with other stakeholders.

Who are we?

Good Growth has been working in the EA space since 2019. Our team have a mix of academic, user, design and market research experience, applied across several sectors in China and Southeast Asia. The career of Jah Ying, our founder, has spanned a variety of entrepreneurial settings, from movement building to social enterprises, venture philanthropy and incubators/accelerators. Most of her early research therefore focused on understanding users, customers and stakeholders, and applying insights to design new products and programs. After selling her company, Jah Ying consulted for several impact-driven startups, social enterprises and non-profits, and mentored at government and corporate incubator/accelerator programs. She found that many EA organisations trying to scale faced a challenge of product-market fit, and helped to fill this gap through user research, leading to the founding of Good Growth in 2019. Since then, Good Growth has gained particular traction within the farmed animal welfare and alternative protein space, and in 2022 shifted to focusing primarily on projects that reduce the suffering of animals in Asian food systems.

I (Jack) joined Good Growth earlier this year as a writer and researcher. After studying Chinese Studies (undergrad) and Anthropology (MA), I worked on development and sustainability research focusing on the Hmong/ Miao ethnic group in China for a few years. After discovering EA, I took a second MA in International Development in Paris, and my work since has involved an internship at IPA Ghana, a China-focused mixed-methods COVID research project in France, working with the Future Matters Project, and a research assistant role in 2022.

Joining Good Growth has given me the opportunity to reconnect with my China research background, and combine this with my interests in animal welfare, sustainability and authentic Chinese food.

At Good Growth, we believe that the methods we’ve been using in our work can be relevant to other organisations seeking to use research insights to do the most good. These posts will focus on how we can bring perspectives from what we will call stakeholder-engaged research to current practices in EA. Drawing on Good Growth’s notes over the last three years, I (Jack) will be the main author of these blog posts, with Jah Ying as a co-writer.

Rapid Overview of Effective Altruism Research

At Good Growth, we're generally believers in EA methodologies and epistemologies. Put simply, people in EA care about developing an accurate model of the world and optimising their own actions to do the most good according to this model. This means prioritising impact-focused research that can either improve this model or guide effective actions within the model. This could be conceptual or philosophical research that lets us reassess the values behind our desired impact, strategic research to guide or prioritise certain actions, empirical economic research to judge the cost-effectiveness or utility of a given cause area or intervention, or technical research to solve a concrete problem.

Although these areas of research can take different forms, one common factor is that EA research emphasises reasoning transparency and epistemic legibility (making it clear how we know what we know). The Bayesian approach to reasoning allows EAs to update on multiple kinds of evidence and add qualified uncertainty into our models. EA research addresses uncertainties head-on by using guesstimates, weighted factor models and community forecasting. And underlying this, we never lose sight of the question: “How do we do the most good?”.

At its best, EA research allows us to identify important, neglected and tractable research questions, answer them accurately and efficiently, and thus make our actions more effective. Transparent research processes in EA organisations, such as Charity Entrepreneurship, illustrate nicely how to do this. Their process includes multiple stages and levels of research depth, combines different forms of evidence (“RCTs, recommendations from effectiveness-focused organisations, macro/country-level data on effects, consensus among experts, existing effective implementations and expert interviews”), and uses weighted-factor models to make decisions accounting for uncertainty in a robust and bias-mitigating way.

Potential Blindspots in EA research

But, according to our experience, EA research tends to have some blindspots, which may limit its usefulness, relevance and impact. These blindspots can stem from the demographics and academic backgrounds of EAs, or distinctive characteristics of the EA ecosystem.

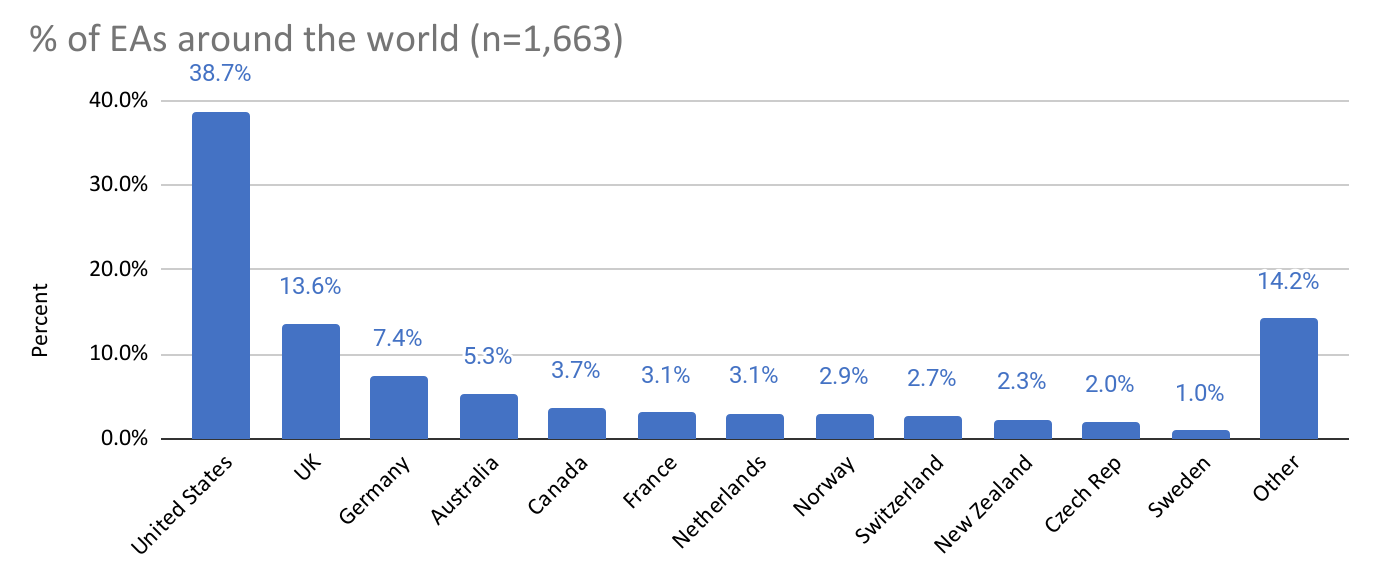

Demographic imbalances within the EA community are the first culprit. The predominantly western and anglophone EA community/ perspective is likely to create under-familiarity with certain geographical areas and non-WEIRD (Western, Educated, Industrialised, Rich and Democratic) ways of thinking.

Source: EA Survey 2019

In general epistemology and worldview development, this manifests itself in the way that people in the EA and rationalist community talk about our ways of thinking and our values. As Joseph Henrich popularised in his (fairly) recent book, ‘The WEIRDest People in the World’, western behavioural science research focuses almost exclusively on WEIRD populations (Western, Educated, Industrialised, Rich and Democratic), who only make up about 1/7 of the global population. Even among non-western “EIRD” cultures, we see fairly substantial differences in values, worldviews and decision-making linked to cultural differences. In theoretical terms, this leads to unclear estimates about the universality of psychological traits and moral intuitions; in practical decision-making, this ‘WEIRD bias’ affects how EA groups function outside of familiar geographical regions, in either community building or direct work. A couple of concrete examples might be:

- EA community-building models don’t address issues of linguistic or cultural translation, or struggles of movement growth in non-western contexts (as Jah Ying has noted in a previous post), resulting in a movement whose global expansion correlates very highly with English-speaking proficiency.

- Considering our preferences over those of people in poor countries may affect how resources are distributed to the (culturally distinct) beneficiaries of effective donations

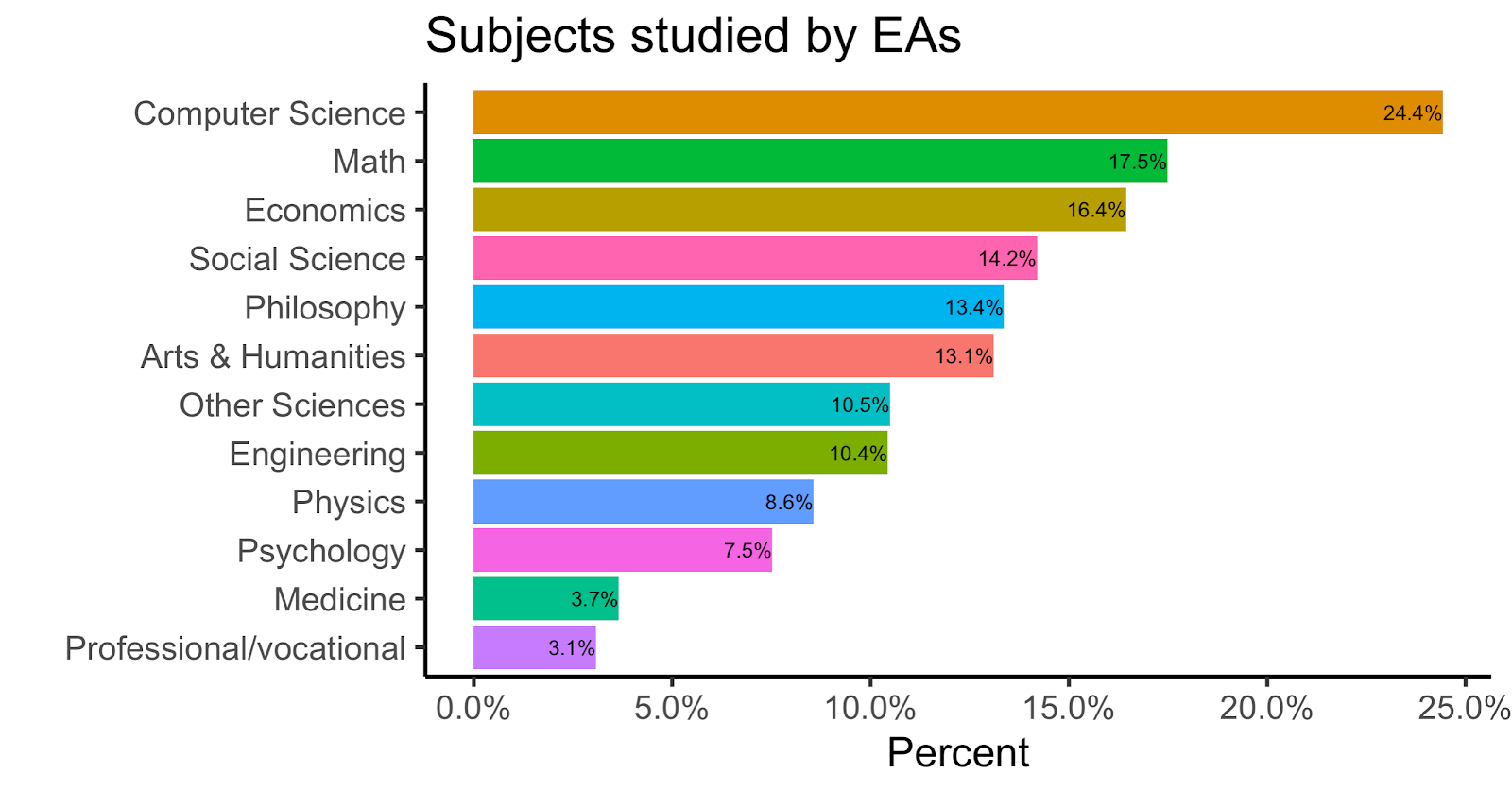

The second potential blindspot comes from academic and professional tendencies. Academic backgrounds in computer science, maths, economics and philosophy are overrepresented in the EA research space. This has led to people at the CEA theorising that non-STEM people are seen as less promising, and their ideas discounted, due to their academic or professional backgrounds.

Source: EA Survey 2019

This has probably led positivism (the idea that only that which can be scientifically verified or which is capable of logical or mathematical proof is valid), methodological individualism (a focus on individualistic explanations for social phenomena) and perhaps economic determinism or economism (the idea that all human interactions can be simplified to fit economic theories or models) to have a particularly strong role in the movement and the belief-formation systems of EAs. This has had a few consequences:

- A comparative neglect of subjective perceptions of experience, especially in questions about effectively reducing global suffering and increasing global happiness (work by the Happier Lives Institute and Qualia Research Institute is an interesting attempt to remedy this)

- Social phenomena are framed as individual psychological phenomena, with consequences for developing effective social systems, community building and general worldview development

- Quantitative methodologies are typically favoured over qualitative

- Less engagement with stakeholders who don’t have quantitative backgrounds, or who reject common EA epistemologies

We’ll focus on the last couple of points here. The trend towards STEM subjects and quantitative economic thinking in EA creates a preference for the quantitative toolkit (surveys and economic data, RCTs, experiments, multivariate models and big data) over the qualitative toolkit (interviews, focus groups, ethnography and observation). The problems with overreliance on quantitative methods are covered in any basic textbook (see the validity and reliability section of this textbook) or review article on research methods. Some salient examples are:

- Quantitative research can generally only test characteristics that have already been identified, but whose value we don’t yet know (known unknowns). This may limit the scope of potential research questions and lead to an undersupply of exploratory research.

- Using a limited set of metrics may lead to identifying the wrong metrics and/or Goodharting (seeking to maximise a metric rather than the underlying phenomenon). For example, focusing on wealth or economic development as a proxy for well-being.

- Reliance on quantitative methods can neglect the wider context of a research question.

- For survey research in particular, there is a distinct set of biases associated with questionnaires, many of which are made worse by online surveys. Also, what people actually do in the real world can be very different from what they do in experiments, or what they say they’re going to do in a survey (example here).

A recurring Sentience Institute survey illustrates a couple of these issues. The purpose of this survey is to keep track of various attitudes and behaviours related to animal welfare in the US. Respondents are asked if they approve of various animal farming practices, whether they are vegetarian, and whether they oppose slaughterhouses. This led to a pretty weird finding: a very high percentage of meat-eaters stated that they want to ban slaughterhouses, seemingly totally unaware that this would make them unable to continue consuming meat products.

Noting this paradoxical finding and the fact that this may lead people to believe that the public is more opposed to slaughterhouses than they actually are, Rethink Priorities chose to redo this study with another survey question to check that the audience actually knew what they were agreeing to. Unsurprisingly, far fewer respondents wanted to ban slaughterhouses after acknowledging that this would make animal agriculture and meat consumption impossible.

While Rethink Priorities’ approach is a valuable corrective here, questions about our paradoxical views on meat consumption may be better addressed with a qualitative approach. The qualitative researcher would want to communicate with participants in greater depth, observe how they think about the issue beyond the survey context, observe how they discuss it with their peers, and analyse real-world behaviour.[1] Some recent examples of qualitative research in academia look at how questions around the meat paradox can be explored using alternative methods, including focus groups and interviews. It seems plausible that greater use of qualitative methods would augment the survey-based EA approaches to this issue.

Engaging Stakeholders

Another potential blindspot within EA research is neglecting the role of various stakeholders within the research process. This includes both those within the broader EA community and partner organisations, and those outside of EA.

The tendency of EAs to undervalue outside experiences and interact exclusively within EA circles has been criticised by many core EAs, as well as outsiders. This is a problem in the research space as well: too much EA research is created by and for EAs, so there’s a relatively high chance that only people within or closely adjacent to EA will contribute to and read it. [2]

EA’s historical failure to engage stakeholders has led to suboptimal interactions between EA actors and the broader communities with which we interact (global health + development, animal welfare, nuclear and bio experts, political think-tanks). This may have limited both the impact of individual interventions and the EA community’s ability to have a greater influence.

Engaging stakeholders is not just a challenge in EA; it has also been identified as a problem in many academic research disciplines. In medicine, for example, prior to the 1990s it was more common to use discipline-based research methods to produce knowledge within a narrower research field (“Mode 1 Knowledge Production”). A neurologist would focus on solving neurology problems, hoping the knowledge would trickle down to the practitioners, decision-makers and patients. In the 1990s, however, there was a shift towards “Mode 2 Knowledge Production”: producing research in transdisciplinary teams, and interacting with practitioners to make sure the research was relevant, useful, and could be directly applied to a real-world setting. Through this process, the discourse and application of stakeholder-engaged research became more popular.

What is stakeholder-engaged research?

Stakeholder-engaged research has been defined as “an umbrella term for the types of research that have community … stakeholder engagement, feedback, and bidirectional communication as approaches used in the research process” (Goodman et al, 2020).

This might be minimal engagement, for example, holding a few interviews with the people working on the ground to work out what questions they might want answered. But it could extend to fully collaborative research partnerships with multiple teams working together towards the same research goals. Often, stakeholder-engaged research involves a decent chunk of qualitative research, such as ethnography, participant observation, in-depth interviews (structured, semi-structured or unstructured), and focus groups, where engagement is “built-in” to the methodology. These qualitative methods can be conducted with participants in, or recipients of, a program, practitioners/implementers, or other stakeholders.

Jah Ying is currently conducting her MPhil research on this topic, where she’s looking at how these methods are used in different disciplines, such as development studies, public policy, science and technology, education, business, design and computer science, and health systems research. The third forum post in this series will look more closely at our model of stakeholder-engaged research, how it has worked in these other disciplines, and how this can lead to improved research-to-impact outcomes in the EA space.

Do EAs do stakeholder-engaged research?

You could draw a straw-man of the EA movement as filled with a bunch of STEM nerds who rely exclusively on RCTs and meta-analyses before they believe anything, and who are so arrogant about their own ultra-rational epistemics that other stakeholders aren’t even worth consulting. But this isn’t really the case. We’ve found that attitudes to stakeholder-engaged research methods in EA are actually mixed and kind of hard to pin down.

Parts of EA thinking are clearly compatible with the value of stakeholder-engaged research. Expert interviews and 1-on-1s are flexible, difficult-to-quantify methods of information sharing that fit well into the stakeholder-engaged framework. The use of qualitative methods with various stakeholders is mentioned in the international relations, animal welfare and AI cause areas. Also, despite all of the STEM associations of the rationality movement, rationalists warn against a worldview that focuses solely on quantitative studies, and argue in favour of skilful, flexible Bayesian updating from various forms of evidence. The CFAR handbook, for example, starts each section with an overview of the informal, mixed-methods research used to reach an epistemic status on a given issue, citing sources such as ‘informal feedback’, ‘after-workshop follow-ups’ and ‘pair-debugging’ as justification.

But we’ve observed from our experience and a quick overview of the research currently produced in EA organisations that there is little explicit or systematic use of stakeholder-engaged methods in the research agendas of EA global health and well-being, animal welfare, longtermist or EA-meta cause areas[3]. We think that there are opportunities to integrate these methods throughout the EA space, and we will discuss a range of potential use cases in a later post. We’ll start, however, with a couple of case studies of research conducted by Good Growth in the EA meta and community building space. We think that these illustrate how we can use the stakeholder-engaged framework to make a local EA chapter, or EA generally, grow and become more effective.

Stakeholder-Engaged Research in Meta-EA

The need for stakeholder-engaged research with qualitative elements is particularly salient in the meta-EA/community-building field. Small populations from which to draw samples within a given field, country or region mean that quantitative and survey research is likely to be underpowered or misleading.[4] You’re also looking at ecosystem-level challenges, or questions about complex individual motivations and social trends; this is likely to require a pretty significant depth of understanding, and surveys are unlikely to cut it. Working outside of the Western/Anglophone hubs, you also need to understand how things work in different cultural contexts. Perhaps the biggest factor here is the unknown unknowns: factors affecting EA communities in a new context that you wouldn’t even have considered. Stakeholder-engaged research may also be simpler and more intuitive within the EA community, as you don’t have to try to engage government actors, partner NGOs, academics and other potentially less-aligned actors. This makes stakeholder-engaged research in meta-EA a useful starting point to introduce the value of these approaches.
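To make the small-sample point a little more concrete, here is a minimal sketch of how wide the uncertainty around a survey result can be in a small community. It uses the standard margin-of-error formula for a proportion with a finite population correction. The group sizes and response counts are hypothetical and are not taken from any of our projects.

```python
import math


def margin_of_error(sample_size, population_size, proportion=0.5, z=1.96):
    """Approximate 95% margin of error for a surveyed proportion.

    Applies the finite population correction, which matters when the
    sample is a large share of a small population.
    """
    if not 0 < sample_size <= population_size:
        raise ValueError("sample_size must be between 1 and population_size")
    standard_error = math.sqrt(proportion * (1 - proportion) / sample_size)
    if population_size > 1:
        fpc = math.sqrt((population_size - sample_size) / (population_size - 1))
    else:
        fpc = 0.0  # a census of one person has no sampling error
    return z * standard_error * fpc


# Hypothetical local group: 60 members, 20 of whom answer a survey.
small_group = margin_of_error(sample_size=20, population_size=60)

# Hypothetical large survey for comparison: 1,000 respondents out of 100,000.
large_survey = margin_of_error(sample_size=1000, population_size=100_000)

print(f"Small group:  +/- {small_group:.1%}")
print(f"Large survey: +/- {large_survey:.1%}")
```

Under these assumed numbers, the small group’s margin of error is roughly 18 percentage points, against roughly 3 for the large survey. A result like “55% of members want more social events” would therefore be hard to act on from the survey alone, and this ignores non-response bias, which would make things worse.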

We’ll now introduce a couple of our studies that illustrate how we’ve managed to assist people working in the meta-EA space.

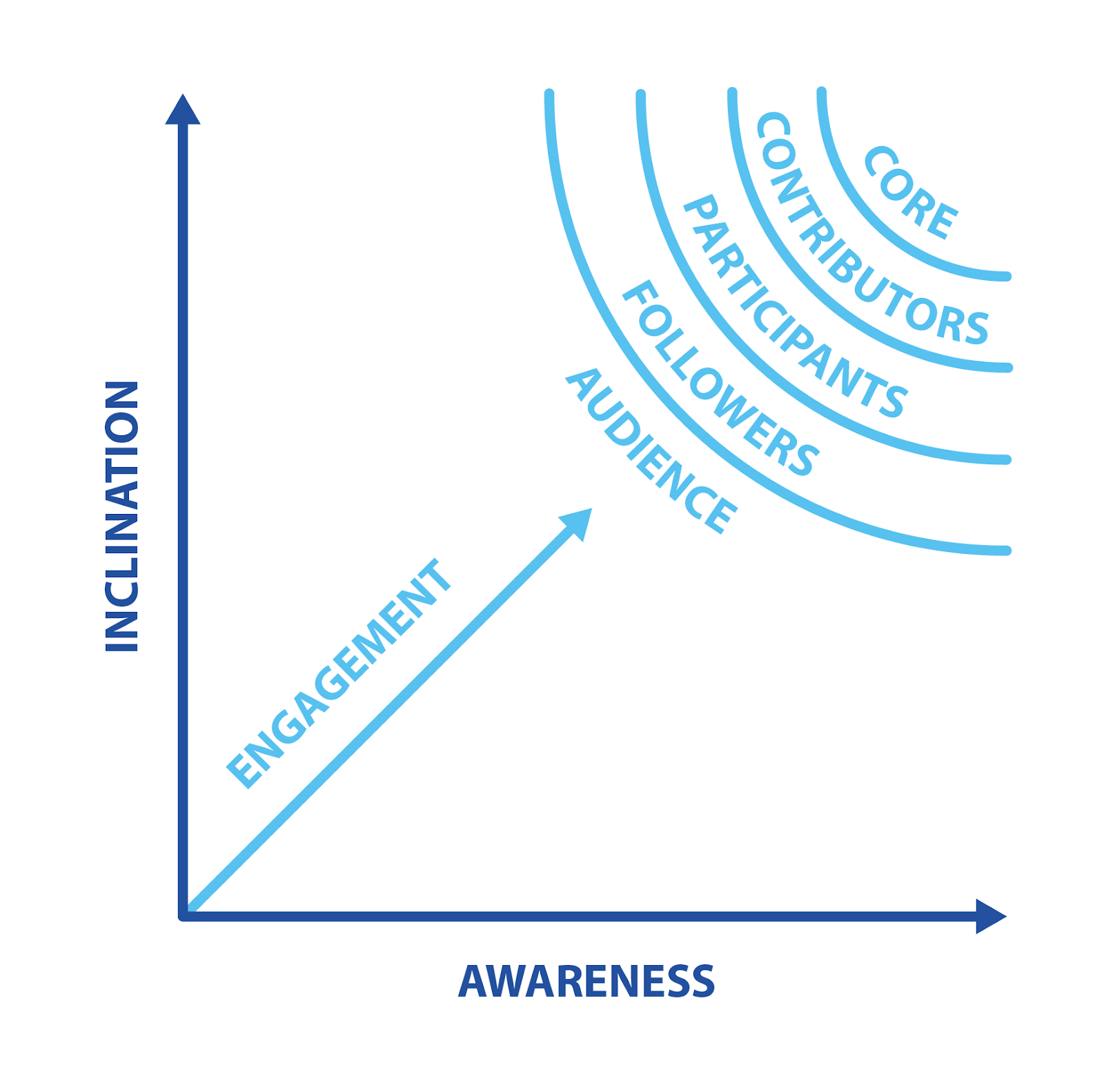

EA Singapore- Community Building Research

EA Singapore reached out to us in 2020 to help them to solve their challenges with member engagement. The team there was facing issues in getting more peripheral members to move deeper towards the core of the EA space (illustrated by the CEA’s concentric circle model). Local organisers estimated that most prospective members only attended 1-2 events and then either dropped out or remained at the ‘follower’ level. There was some interaction with online content, but this wasn’t translating to an active in-person community. For those who did become participants or contributors to EA Singapore, it was rare for them to engage seriously with the community and become committed EAs.

The concentric circle model of movement growth, CEA

As EA largely hasn’t taken off in Asia, we thought it was especially important to identify what the bottlenecks to growth might be. Being relatively rich, very international, and predominantly anglophone, with a large community of enterprising, socially conscious people, Singapore should be a natural hub for EA in Asia. So what was the problem?

We came to EA Singapore with the concrete goal of helping the team prioritise and improve their strategy, but quickly found a few information gaps: we didn’t really know the kind of people who were attracted to EA Singapore, or what factors were driving them towards, and away from, the movement.

Specifically, we needed more detail on:

- Who EA Singapore thought their target audience was, and how they were targeting them.

- What the paths of “successful” members were, and what obstacles they faced

- What members’ subjective experiences of different activities were

- What impact activities had on the actions of members

So how did we do this?

Methods

In the spirit of stakeholder-engaged research, we started the research process by holding multiple meetings with the team at EA Singapore to establish what they wanted to find out, and how they would be able to act on our findings.

After establishing the desired outcomes of the research, we set up a process of data collection and analysis over a 2-month period, followed by a period of communicating these findings with the EA Singapore team through a written report, a presentation and multiple conversations.

To understand the broader target audience (the kind of people who clicked on EA Singapore’s online content), we started by setting up social media analytics, which involved Facebook and LinkedIn pixel tracking, social media follower analysis, and user behaviour tracking. Then, to get some deeper information about members, we conducted 9 semi-structured interviews with community members at various levels of involvement. This allowed us to triangulate, incorporating quantitative and qualitative data across different user groups, to get more robust findings.

We analysed the interviews thematically, combined the qualitative data with the analytics data, then used a couple of tools commonly used in user research in business/ industry to clarify and communicate the data- user personas and experience maps.

User personas are semi-fictionalised characters that allow us to distinguish different users of a product or service, or members of a community, based on shared characteristics, experiences or use-profiles. Personas aren’t meant to be an exhaustive, scientific taxonomy of every possible user type, but they are supposed to be memorable, actionable, and distinct from one another. We use them to sum up the main needs of different member groups so that we know who we’re targeting.

Experience mapping (or journey mapping), which means developing a visual representation of a customer’s actual experience with a tool, product or service, is also part of the UX toolkit. Just as you want to empathise with who your users are, you also want to identify with their experiences.

Both of these tools should be based on evidence. They can be developed in a ‘lightweight’ way, using simple data collection, or built on more extensive qualitative interviews or statistical data.

Findings

It soon became clear that different community members were using EA Singapore in distinctive ways. We identified three fairly simple user personas with different needs, levels of engagement, motivations and community contributions. These personas are likely to be relevant to other EA groups, but probably also have characteristics specific to Singapore:

- EA practitioners work at EA orgs or are otherwise engaged with EA research. They want to interact more with people who understand EA more deeply, and be around other EAs to get support for their ideas and projects.

- EA-guided professionals are working in EA-related/-adjacent cause areas, or may be earning to give. They tend to be more focused on developing their own career, and improving effectiveness within their own given cause area.

- EA students and fresh graduates are a broad range of people who are just getting into EA, and are in a more exploratory stage of understanding the movement.

These personas gave the EA Singapore team specific categories of people to target, creating a heuristic for asking questions like: “Does this event target EA-guided professionals? Does it put off students and fresh graduates?” As EA practitioners were more likely to be active members, there was a risk that their suggestions and preferences would engage the core but put off EA-guided professionals who might not be on board with longtermism and x-risk concerns.

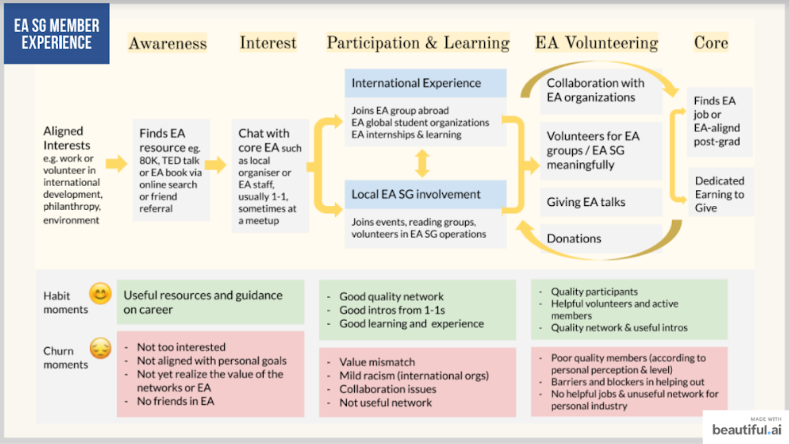

We also used the data to develop an experience map that identified common experiences, and how positive or negative these experiences were, on average. Using a mix of both data types, we also developed simplified categories for the more specific stages of a member's experience, including ‘habit moments’ and ‘churn moments’ at each stage of engagement.

Overall, some things were going well in EA Singapore: it was a welcoming, intellectual and helpful community, 1-on-1s with core EAs and practitioners were really valuable, and the movement was attractive to university students. But we also saw why they were having trouble retaining members:

- There was some audience mismatch, leading to disengagement. For example, younger members had trouble interacting with busy EA practitioners who didn’t want to waste their time with newbies

- The movement was reliant on one central figure for organisation, so subgroups struggled to function without leadership

- Younger people were pessimistic about finding EA-related careers within Singapore, so university students felt that they didn’t have a future in EA

- Some international interactions were more negative, so some people at EA Singapore didn’t feel part of the global EA community

This experience map laid out where things were likely to go wrong, so that the team could intervene and try to help people maintain their connections with the movement.

As well as giving them these two tools to guide their action, we also recommended that the team:

- Create an open directory of members to support continued participation

- Target events to personas, careers, industries or cause areas to unlock engagement

- Spark friendships to create commitment

- Organise social events based on popular interest

We also gave EA Singapore a set of next steps so that they could continue iterating on the community-building recommendations:

- Develop a prioritisation framework with other community members to increase engagement

- Validate some of our claims and outstanding questions

- Generate and test solutions to the specific problems we highlighted

This process should illustrate how stakeholder-engaged qualitative research may be conducive to community building. We think it would be valuable for other national groups to conduct similar studies, especially those experiencing issues related to community building or retaining members.

Operations Camp (EA Norway)

During a similar time period, Good Growth was also hired to deal with an EA community issue in the operations field. The global EA community had identified an internal operations bottleneck, and it seemed that there weren’t enough suitable people applying for available positions, and there was significant friction in the hiring process.

The executive director at EA Norway (Eirin) had done some preliminary research (here and here) identifying causes and possible solutions to developing operations talent in EA. She identified challenges in helping new people to test their fit in operations roles, get practical experience and acquire the relevant skills needed for these roles. Based on this research, the EA Norway team planned on organising an operations camp aimed at tackling these three challenges to improve recruitment of operations talent for EA organisations.

The EA Norway team were hoping to narrow the operations talent gap through three main mechanisms:

- Providing the camp participants a chance to test their fit and get to know the EA ops field.

- Giving participants an introduction to relevant subfields, signposting valuable resources and helping them grow as operations experts.

- Creating a strong and reliable signal for participants to send to potential employers of their skill level, high fidelity EA understanding and adherence to EA values.

However, there were still some key uncertainties about the theory of change behind the planned camp, so the team approached Good Growth to help better identify the characteristics of the operations bottleneck, and to improve the theory of change.

Methods

As there was a relatively small number of operations professionals in EA, each holding a disproportionate share of the relevant knowledge, diagnosing the operations bottleneck would require a high-fidelity understanding of their perceptions. To do this, we combined a more formal interview process (nine in-depth, 1-on-1 interviews with people across eight EA organisations in Europe) with informal conversations with various people in operations roles, leaders, and other longtime EAs who we felt might have particular insight into this topic.

Based on data from these interviews, we identified twelve challenges in EA operations. To identify which of the challenges were the most pressing, we surveyed people involved in EA operations at EA Global San Francisco (in-person) and through an online survey. We then integrated this data into multiple differently weighted models, in order to identify the key challenges that stood out as most pressing.
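As a rough illustration of what “multiple differently weighted models” can look like, the sketch below ranks challenges under several weighting schemes and keeps only those that rank highly under all of them. This is not the model we actually used. The respondent groups, the weights and every rating are hypothetical; only the challenge names come from this post.

```python
# Hypothetical mean importance ratings (1-5) from two respondent groups.
# All numbers are invented purely for illustration.
ratings = {
    "Standardisation and documentation": {"ops_staff": 4.4, "leaders": 4.1},
    "Talent retention": {"ops_staff": 4.0, "leaders": 4.3},
    "Sharing best practices": {"ops_staff": 3.8, "leaders": 3.5},
    "Hiring": {"ops_staff": 3.1, "leaders": 3.4},
}

# Assumed alternative weightings of the two groups' views.
weightings = {
    "equal": {"ops_staff": 0.5, "leaders": 0.5},
    "ops_heavy": {"ops_staff": 0.8, "leaders": 0.2},
    "leader_heavy": {"ops_staff": 0.2, "leaders": 0.8},
}


def rank_challenges(ratings, weights):
    """Return challenge names sorted from most to least pressing."""
    scores = {
        challenge: sum(weights[group] * value for group, value in by_group.items())
        for challenge, by_group in ratings.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


def robust_top(ratings, weightings, k):
    """Challenges that appear in the top k under every weighting scheme."""
    top_sets = [
        set(rank_challenges(ratings, weights)[:k])
        for weights in weightings.values()
    ]
    return set.intersection(*top_sets)


for name, weights in weightings.items():
    print(name, rank_challenges(ratings, weights))

print("Robust top 2:", robust_top(ratings, weightings, k=2))
```

The design choice here is to treat a challenge as “most pressing” only if that conclusion survives different reasonable weightings, so the result does not hinge on one contestable assumption about whose views count most.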

Findings

The results were surprising, and led the EA Norway team to change their plans. We identified five significant challenges, and, counter to our prior assumptions, these did not include hiring. They were (in order of importance):

- Standardisation and documentation of routines and processes

- Talent retention

- Making time for strategic thinking

- Sharing best practices between organisations

- Onboarding and integrating new staff into the organisation and community

Although hiring was mentioned as one challenge among many, this research determined that it was not among the most pressing challenges. As talent retention and onboarding were more of an issue than actually acquiring the talent in the first place, hiring seemed unlikely to actually be the bottleneck to the ops issues faced by EA. On top of this, senior operations managers mentioned their preference for experienced ops professionals, rather than the kind of people who would be likely to attend the planned operations camp.

Diagnostic qualitative research, engaging many of the relevant stakeholders in the EA operations space, revealed that the initial project had misidentified the bottleneck, and that an operations camp was probably not the most effective way of dealing with the core problem. This encouraged the team at EA Norway to take a step back and question what they were fundamentally trying to achieve.

Since the higher-level goal was to increase the total operations capacity of EA organisations, rather than to run a camp for its own sake, they cancelled their plans to hold it.

Conclusions

These two examples of qualitative methods in the meta-EA space show how these methods can be useful for decision-making. The EA Norway team actively changed their prioritisation, and were able to dedicate more time to a project with higher expected value, while the EA Singapore team was given a framework for more targeted community building. This demonstrates how qualitative research can be used to more effectively diagnose issues, test assumptions and identify opportunities within the EA movement. The methods used were relatively low-cost, simple and scalable, meaning that they could likely be implemented by smaller EA organisations or teams hoping to more accurately diagnose meta-EA-related issues.

The impact and cost-effectiveness of these interventions can be estimated fairly simply. Our research enabled some high-impact organisations and individuals to allocate their resources more effectively, potentially altering months of expected work. You can estimate the value of Good Growth’s contribution in these areas by taking the value of the existing intervention, multiplying it by how much more effective the projects or organisations were after our involvement, and dividing by the resources needed for our diagnostic research project. As with the argument for prioritising operations, improving a high-expected-value intervention by even a small amount can be enough to be cost-effective, so the bar for this kind of research is fairly low. This is especially true if the research changes the trajectory of larger-scale projects or organisations, and if interventions like these are neglected. The specific impact of marginally increasing stakeholder engagement in these kinds of processes, or of moving from a survey model to a mixed, qualitative or user research model, is more difficult to test, and we will explore the thinking behind this in more detail in the third post.
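As a rough sketch, the estimate described above can be written as a single ratio. The symbols are shorthand introduced here for illustration only: V is the value of the existing intervention, Δ is the proportional improvement in effectiveness after the diagnostic research, and C is the resources spent on that research.

```latex
\[
\text{cost-effectiveness of the research} \;\approx\; \frac{V \times \Delta}{C}
\]
```

Written this way, it is easier to see why a small Δ can still be worthwhile when V is large and C is modest.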

After doing these projects in 2019 and 2020, the Good Growth team took the decision to double down on the fields of animal welfare and alternative protein development in Asia, where most of our work has focused since then. The next post will introduce how we have used a different set of stakeholder-engaged and qualitative methods to understand the alternative protein market and the animal welfare ecosystem in China and East Asia.

If you’re interested in applying stakeholder-engaged methods in your research, feel free to reach out to us at team@goodgrowth.io or DM me on the forum. Local EA groups and EA organisations are especially encouraged to reach out!

Thanks to Gidi Kadosh, Ella Wong (our colleague at Good Growth), and Yi-Yang Chua for feedback on this post!

Edited to clarify some details about the value of research in both cases.

- ^

Qualitative research would also benefit the next stage of the research: testing how the public would react to different framings of the issue.

- ^

Admittedly, this might be better than academia, where there’s a decent chance that no one will read your work except your supervisor.

- ^

The main exception seems to be the use of expert interviews when exploring a new cause area, although we suspect that there is a bias towards only interviewing people perceived as experts, as opposed to recipients and people on the ground. Effective Thesis also contains a surprising amount of qualitative research in its finished theses, and EA-aligned organisations such as IPA, where I (Jack) previously worked, are trying to increase the level of stakeholder engagement in their work.

- ^

“The median number of people engaging with a group over the last year was 50, with 10 people regularly attending a group’s events and 6 members who were highly engaged with the EA community.” EA Group Organisers Survey, 2019